The Social Web is a set of relationships that link together people over the Web. The Web is an universal and open space of information where every item of interest can be identified with a URI. While the best known current social networking sites on the Web limit themselves to relationships between people with accounts on a single site, the Social Web should extend across the entire Web. Just as people can call each other no matter which telephone provider they belong to, just as email allows people to send messages to each other irrespective of their e-mail provider, and just as the Web allows links to any website, so the Social Web should allow people to create networks of relationships across the entire Web, while giving people the ability to control their own privacy and data. The standards that enable this should be open and royalty-free. We present a framework for understanding the Social Web and the relevant standards (from both within and outside the W3C) in this report, and conclude by proposing a strategy for making the Social Web a "first-class citizen" of the Web.

1. Overview

The Social Web is a set of relationships that link together people over the Web. The Web is an universal and open space of information where every item of interest can be identified with a Uniform Resource Identifier (URI) [URI]. While the best known current social networking sites on the Web limit themselves to relationships between people with accounts on a single site, the Social Web should extend across the entire Web. Just as people can call each other no matter which telephone provider they belong to, just as email allows people to send messages to each other irrespective of their e-mail provider, and just as the Web allows links to any website, so the Social Web should allow people to create networks of relationships across the entire Web, while giving people the ability to control their own privacy and data.

The Social Web is not just about relationships, but about the applications and innovations that can be built on top of these relationships. Social-networking sites and other user-generated-content services on the Web have a potential to be enablers of innovation, but cannot achieve this potential without open and royalty-free standards for data portability, identity, social networking, and privacy.

The Social Web Incubator Group (SWXG) was founded as an outcome of the W3C Workshop on the Future of Social Networking [MSNWS] to uncover and document existing technologies, software, and standards (both proposed and adopted) needed to enable a universal and decentralized Social Web. The group also sought to identify gaps, conflicts, and other areas for future standardization and research to increase adoption of the Social Web.

Over the course of the SWXG’s activity the, approximately thirty, participants on the conference calls discussed a wide variety of topics and heard from over thirty invited guests from within and outside the W3C. We conclude that while the Social Web is a space of innovation, it is still not a "first-class" citizen of the Web: Social applications currently largely evolved as silos and thus implementations and integration are inconsistent, with little guarantees of privacy and enforcement of terms-of-service.

Further, the members of the XG conclude:

- The Social Web does not suffer from a lack of potential standards. A large number of diverse groups have evolved data models, communication protocols, and data formats at tangents to one another, addressing a large number of communities, each of which has its own terminology and viewpoint.

- While there has been a large amount of work done in this area, in terms of both current potential and standards, these tend to address basic issues around identity and portability, but do not address more complex and vital issues such as privacy, policy enforcement, and provenance. All of these issues are present scope for further research and the development of future standards.

- The creation of a decentralized and federated Social Web, as part of Web architecture, is a revolutionary opportunity to provide both increased social data portability and enhanced end-user privacy.

- One key to make ordinary users take advantage of a decentralized Social Web is to build identity and portability into the browser and other devices.

We respectfully recommend to the W3C areas of future work in which the W3C should play a pivotal role:

- Investigate how identity can become a central part of the Web by supporting solutions that would allow for a high-level of security, multiple identities, and that are decentralized in nature. The W3C can focus on the topic of identity in the browser initially and its work should be coordinated with existing identity work and communities.

- Define mappings between existing data-formats for profiles on a semantic level, making sure that a common core is available in a consistent manner across various syntactic serializations.

- Start work combining the Social Web with the Semantic Web to enable people to describe social media, with a focus on describing terms-of-service agreements, licensing, and micropayment information using standardized vocabularies, which should be easy to find and use.

- Begin an over-arching privacy activity for the W3C, exploring the combination of technical and social approaches. All W3C Recommendations should be inspected for privacy concerns.

- Support the Federated Social Web effort by providing resources to the test-case driven development of a decentralized social web.

- Create a more "light-weight" and open process so that groups working on the Social Web are able to work with the W3C and liaison more easily.

This work could form the basis of new Working Groups, improved liaising with non-W3C efforts and standardization bodies, and increased co-ordination and focus on the Social Web among existing W3C working groups.

2. State of the Social Web in 2010

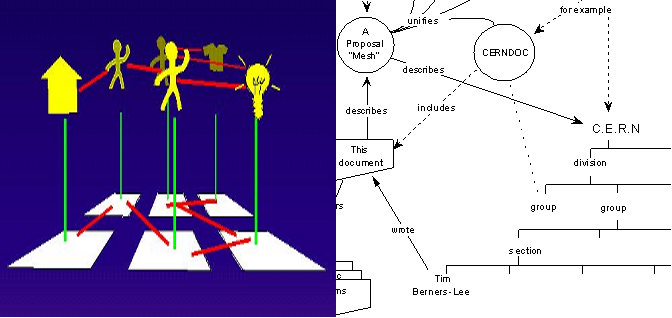

2010 has been a tumultuous year for the Social Web. However, the Social Web is not a new phenomenon that has no precedent, but the result of a popularization of existing technologies. Many social features were available over the Internet before the Web, ranging from the blog-like features of Engelbart's "Journal" system in NLS (oN-Line System, the second node of the Internet), messaging via e-mail and IRC, the Well (1984), and the "Member Profiles" of AOL. The "list of friends" that is ubiquitous on the Social Web existed in the hand-authored links on the earliest webpages. The Web has always been social. As shown by this diagram below by Tim Berners-Lee in his original 1989 proposal to create the World Wide Web, the Web from its inception was meant to include not only connections between hypertext documents, but the relationships between people [TBL1989]. This was clarified as one of the original reasons for adding "machine-readable semantics" to the Web [TBL1994].

What was missing was an easy-to-use interface to make finding people you know and sharing data with them easily accessible. A number of websites, ranging from Classmates.com (1995) to SixDegrees (1997), pioneered these features for ordinary users of the Web. Since the early days of the Web people that maintained their own homepages have been posting activity updates to their sites, and this has been pushed into the mainstream with the development of user friendly blogging software (the word "blog" coming from "web log") such as LiveJournal. Innovations in this space allowed the general public to become more and more apt at blogging, and independent news sites such as Indymedia (1999) pioneered the notion of user-generated content management. However, these services remained fairly experimental up until after the collapse of the initial "dot-com" bubble. After this a rash of social networking sites like Friendster (2002), LinkedIn (2003), MySpace (2003), Orkut (2004), and Facebook (2004) took off, and eventually became the most popular sites on the Web. Starting with Flickr (2004) and Youtube (2005), user-generated content took over this newly re-invigorated Social Web. The launch of Twitter (2007), a micro-blogging site, which propagated updates to users' social networks, via desktop and mobile devices, showed another dominant trend in the Social Web. It was around this time that the concept of the Social Web became associated both with the aforementioned companies and with the wider "Web 2.0" paradigm. Today, the Social Web is becoming part of corporate communication portfolios and Web 2.0 companies start commercializing data from and about their users.

While the social networking site usage remains geographically disparate, with many countries developing their own most popular social networking sites such as Hi5 in Japan and QQ in China, there has been an overall tendency towards users moving their profiles between services, such as users moving their profiles from Friendster to MySpace for example. This, in turn, led to a dismissive attitude by some that the most "popular" social networking sites would simply turn over every year or two. In a similar manner to how competition amongst search engines eventually led to the dominance of Google, Facebook rapidly rose to become a global leader in social networking. A number of major vendors began either purchasing social networking sites (such as the purchase of Blogger (2003) and Orkut (2007) by Google) and other companies like Yahoo! trying to roll their-own social networking sites like Yahoo! 360 (2005). Social Web features, such as comments and user-generated content, became intertwined with such phenomenon as Flickr for sharing photos and YouTube for sharing video. Today, it is a de-facto requirement for websites to have social features and for individuals and organizations to have a presence on popular social networking sites. Yet the ways for web-sites to do so are currently fractured and have yet to be standardized.

While empowered by the compelling user experience of these social networking sites, the real victim of these data-silos has been the end-users. Social networking sites encourage users to put their data into the given proprietary platform, and have tended to make the portability of the user's own data to another site or even their home computer difficult if not impossible. Architects of new Social Web services and user-advocacy groups began to ask for the ability of users to move their data from platform to platform. The first technology created specifically for a portable social graph was the Friend-of-a-Friend project (FOAF) for the Semantic Web in 2001 [FOAF], and in 2005, a biannual gathering of developers started the Internet Identity Workshop from which work like OpenID emerged [OPENID]. Momentum took off after Brad Fitzpatrick (formerly of Livejournal) posted his "Thoughts on the Social Graph" with David Recordon in 2007 [OPENGRAPH]. There quickly followed a number of initiatives like the DataPortability initiative [DATAPORT], the Data Liberation Front at Google [DLF], and lately, the Federated Social Web initiative [FSW]. As most of this activity was outside the W3C, many developers involved in the Social Web created the Open Web Foundation in 2008 to create light-weight patent and copyright agreements to cover their draft specifications [OWF]. This momentum has continued to attract interest from developers. However, an open and decentralized Social Web still seems distant and few users have actually left these data-silos.

Many social networking sites considered privacy and portability to be contradictory. At its inception, Facebook denied users the ability to let data be portable outside its system due to concerns over user privacy, as their terms of service in 2006 stated that "We understand you may not want everyone in the world to have the information you share on Facebook; that is why we give you control of your information" [FBTOS]. In one particularly infamous incident, in 2008 blogger Robert Scoble wanted to make his information portable by copying his contacts from Facebook, but had his account disabled by Facebook [SCOBLE]. However, in 2009 there seemed to be little concern about issues of privacy and portability except amongst those deeply immersed in designing social networking sites, with 20 percent of users listing privacy as a primary concern motivating their choice in using a social networking site [JUNGLE]. Today, privacy is a secondary argument to stimulate new sign-ups. Widespread usability problems impede users to exercise effective control over their personal information on social networking sites, where permissive defaults are another threat to privacy. Although Scott McNealy of SUN infamously remarked that "You have zero privacy anyway," recent studies show that youth have "an aspiration for increased privacy" and are equally concerned about privacy as adults [PEW].

As more people are adopting Web-enabled smartphones, with mobile users spending more minutes per day on social networking sites than the average PC user, in 2010 30% of smartphone users accessed social networks via mobile browsers, the mobile Social Web must not be ignored. Users seem attracted to mobile device access because they can consult with friends and quickly make decisions while remaining mobile, allowing users to use applications in a context such as the live-tracking of buses. Many popular social networks at the time of writing this report tend to offer both a Web-based version, and a dedicated application which can be downloaded for the given smartphone platform. These dedicated applications tend to be able to make much greater use of built in sensors, and applications found on these smartphones. As several mobile social networking sites allow users to both upload their location and see the location of their friends, a number of small groups have joined together to form the OSLO alliance (Open Sharing of Location-based Objects) [OSLO]. OSLO includes many players in mobile social networking and location-based social software which have signed an agreement to enable their approximately 30 million users to share location information between mobile social networks, in essence supporting the portability of location information between services. However, this activity seems to have stalled and the W3C Device API WG is quickly filling the gap by standardizing a set of APIs to be implemented by mobile browsers to cater for access to device functionality, such as a user's address book, calendar, location, within a Web Application running inside a standard mobile browser. As more and more of Web usage goes mobile and data access speeds increase, one can expect the difference in capabilities between the Social Web and the Mobile Web to diminish.

The end of 2009 was when the issues of privacy on the Social Web grew beyond a niche concern and entered the popular consciousness. Facebook's membership began increasing globally, overtaking many local social networking site [MAP]. In December 2009, Facebook changed its privacy settings by defaulting certain privacy settings which in turn made part of a user's profile information public. Users were encouraged to use "privacy controls" to provide access control to their data, but many users found these controls to be confusing and the default settings led to revealing lists of friends. This sparked widespread outrage, even amongst governments [GERMANLAW]. Recently, in 2010 the United States's Federal Trade Commission even put forward a proposal for a "Do-Not-Track" mechanism [FTC].

In response to these privacy concerns, there was increased interest in decentralizing the Social Web. Tim Berners-Lee proposed "Socially Aware Cloud Computing" [TBL2009], where he illustrated that the technologies required to have a decentralized Social Web were available and how it is but a matter of engineering to realize this vision. The announcement of the open-source Diaspora Project in the New York Times to create privacy-preserving and decentralized social network [NYT] led to even more media attention. Overall interest is high amongst vendors, as witnessed by the launch in 2010 of products like Vodafone's OneSocialWeb [OSW].The first attempt at developing a common test-suite across differing standards-based social networking sites happened at the Federated Social Web Summit [FSW]. At this point in history, the Social Web has became the dominant platform for communication, rapidly beginning to even eclipse the use of e-mail amongst youth. The next steps taken by the companies and communities around the Social Web will have real consequences on the future of the Web and communication itself.

3. Social Web Frameworks

3.1 The Problem of Walled Gardens

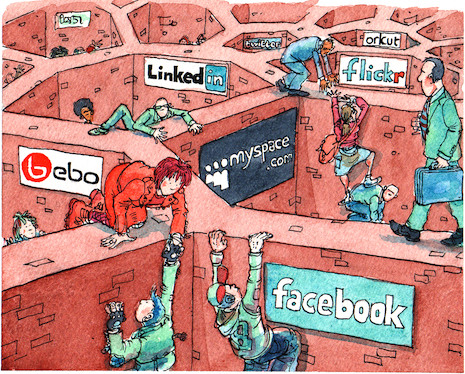

The importance of the Web has always been its open and distributed nature as a universal space of information. Until recently this space of information has been limited to hypertext web-pages without attention being paid to social interactions and relationships. This was not a particular fault of the Web, in fact but a result of a certain focus of the early Web on documents. However, these kinds of activities are currently restricted to particular social networking sites, where the identity of a user and their data can easily be entered, but only accessed and manipulated via proprietary interfaces, so creating a "wall" around connections and personal data, as illustrated in the picture below. This current dismal situation is analogous to the early days of hypertext before the World Wide Web, where various systems stored hypertext in proprietary and incompatible formats without the ability to use, globally link and access hypertext data across systems, a situation solved by the creation of URIs and HTML. A truly universal, open, and distributed Social Web architecture is needed.

The lack of such an architecture deeply impacts the everyday experience of the Web of many users. There are four major problems experienced by the end user:

- Portability: An ordinary user can not download their own data and share it as they like. Information stored on social networks could be useful for any number of applications, but the lack of portability of tediously entered social networking information causes users to continually re-enter and update their personal information, wasting their time.

- Identity: Not having a easy way to manage digital identity across digital networks leads to unsafe re-usage of passwords. Every time a user goes to a new site, they must not only create a new username and password, but re-find their friends and entice friends to move sites with them. Porting personal data from one network to another does not solve the problem of loosing one's friends if one moves.

- Linkability: Users have no way of being notified if they are being mentioned on a social networking site which they are not a member of. For example, if someone takes a photo of some friends at a party and wishes to publish it on the Web to share with those friends, but does not wish to make that publicly available, he must find a social network where each one of them is already a member, or simply not tell people that the photo has been uploaded.

- Privacy: A user cannot control how their information is viewed by others in different contexts by different social applications even on the same social networking site, which raises privacy concerns. Privacy means giving people control over their data, empowering people so they can communicate the way they want. This control is lacking if configuring data sharing is effectively impossible or data disclosure by others about oneself cannot be prevented or undone.

Participation is the life blood of social networks. If no one (or if too few people) participates, a social networking application dies. If social applications are to thrive and provide engaging and valuable services to users, they must be easy-to-use, and must support ways for people to connect with and manage their social interactions and connections across multiple sites. While we take a "user-centric" approach in this report, having a common set of Social Web standards is a "win-win" proposition for both industry and users. As portability issues prevent new and small companies from building innovative applications, as these applications often need access to social data held on third-party sites. In turn, large social networking sites themselves lack standards to easily share and monetize their data with other companies. Lastly, the lack of standards forces developers to create multiple versions of the same social application for different closed platforms.

3.2 The Social Web Vision

People express different aspects depending on context, thus giving themselves multiple profiles that enable them to maintain various relationships within and across different contexts: the family, the sporting team, the business environment, and so on. Equally so, in every context certain information is usually desired to be kept private. In the 'pre-Web world' people can usually sustain this multiplicity of profiles as they are physically constrained to a relatively small set of social contexts and interaction opportunities. In some ways, social dynamics on the Web resemble those outside the Web, but social interactions on the Web differ in a number of important ways:

- the kinds of profile exhibited by a single person are not controlled by the same constraints and so are less limited in scope, and so may include profiles for fictional personae.

- the set (number) of people with whom interactions are possible is not limited by distance or time. The Web allows for users to user connect with a vast number of people, which was inconceivable only a few years ago.

- a person can explicitly "manage" the relationships and access to information they wish to have with others and with the increasing convergence of the Web and the world outside the Web is also leading to increasing concerns about privacy as these worlds collide.

Anyone should be able to create and to organize one or more different profiles using a trusted social networking site of choice, including hosting their own site that they themselves run either on a server or locally in their browser. For example, a user might want to manage their personal information such as home address, telephone number, and best friends on their own personal "node" in a federated social network while their work-related information such as office address, office telephone number, and work colleagues is kept on a private social network on their corporate intra-net. Current aggregator-based approaches, exemplified by FriendFeed, are but a short-term solution akin to "screen scraping", that work over a limited number of social networking sites, are fragile to changes in the sites' HTML, and which are legally dubious.

The approach we endorse allows the user to own their own data and associate specific parts of their personal data directly to different social networking sites, as well as the ability to link to data and friends across different sites. For example, your Friends Profile can be exposed to MySpace and Twitter, whereas your Work Profile to Plaxo and LinkedIn, and links between data and friends should be possible across all these sites. Traditional services can utilize these features, so that your "health" profile can be exposed to health care providers and your "citizen" profile exposed to online government sites and services. In this world of portable social data, both large and small new players can then also interface to profiles and offer seamless personalized social applications.

Privacy is a complex topic, and we understand privacy as control over accessibility of social information in general, including security as an enabler (the authentication of digital identity and ownership of data). Privacy controls are often not well-understood by users and they do not stop data "leaking" from the social networking site itself, which may give user data to other companies or even governments for some kind of gain without alerting the user. In this regard, public key encryption is one solid technical basis of keeping data private, whether on the server-side or in terms of encrypting client to server communication using SSL [TLS]. Privacy should be controlled by the users themselves in an explicit contract with social networking sites and applications that makes privacy controls easy-to-use and understandable. As custodian of their own profiles, users can then decide which social applications can access which profile details via explicitly exposing personal data to that application provider, and retracting it as well, at an appropriate level of granularity. This in itself is one of the biggest challenges for the entire Web community, not just social networks, and needs a new policy-oriented Web architecture to support trust and privacy on the Web in the longer term, while building on the technical strengths of encryption. Whilst technical security is a mandatory enabler, users' effective ability to control the processing of their data is largely influenced by helpful user interface design with strong visual metaphors, and privacy-enhancing default settings regarding data sharing.

This Social Web architecture articulated here is not the invention of the Social Web Incubator Group, but of a long-standing community-based effort that has been running for multiple years, of which only a small fraction of the contributors have been explicitly interviewed and acknowledged by the Social Web Incubator Group. This report is dedicated to all the developers out there working to make this vision a reality.

3.3 The Terminology

As the Social Web is a large and innovative space, the creation of new terms can not be avoided, but to be too loose with terminology may serve to cause confusion rather than build consensus. Building on existing work like the lexicon of Identity Commons [IDLEXICON], we propose definitions for the following concepts in order to clarify our presentation:

| Concept

| Definition

| Graphical Representation

|

| User

| The user is a person, organization, or other agent that participates in online social interactions on the Web.

|

|

| Identity

| A single digital representation of a user. These are potentially unlimited and may coincide with different personae of the user such as a personal profile and work profile. This is a "personae" in the Identity Commons Lexicon.

|

|

| Profile Attribute

| Information about a user that is a component of the profile such as name, e-mail, status, photo, work phone, home phone, blog address, etc. This is an "identity attribute" in the Identity Commons Lexicon.

|  . .

|

| Social Connection

| Social connections are associations between a profile and a resource (or group of resources) and may include the type of the relationship (e.g. friend, colleague, spouse, likes etc) and may be either reciprocal ('friend') or uni-directional (following). The connection may be between different users or between a user and some social media (a video or an item the user likes). The collection of all connections of a profile is called the Social Graph of that profile.

|

|

| Social Group

| Social Groups are explicit named sets of social connections between resources. For example, My Football Team, Wine Club, My Favorite Movies, etc.

|

|

| Social Platforms

| Social platforms refer to a collection of features in which the user can interact with their social connections and social media, publish social media, and use social applications. The social platform is often centered on a single website,a social networking site analogous to Facebook or LinkedIn, but may also be owned and controlled by the user.

|

|

| Distributed Social Graph

| A set of profiles and social connections between agents which may be hosted across different social platforms.

|

|

| Social Applications

| Social Applications are functions of a social platform such as real-time messaging and social games. Social applications may be bound to a particular social platform (Facebook and FBML, Twitter API) or capable of running across multiple social platforms (OpenSocial). Note that the difference between a social platform and a social application is often fuzzy, as some platforms do not allow third-party applications, and some platforms are indistinguishable from their applications.

|

|

| Profile Association

| A kind of social connection. A profile associations are used to indicate the link between a specific profile and a social platform. The social platform can then provide profile attributes for use to social applications.

|

|

| Social Interaction

| A social interaction links a Social Web user and a social platform by providing all the necessary applications and profile information.

|

|

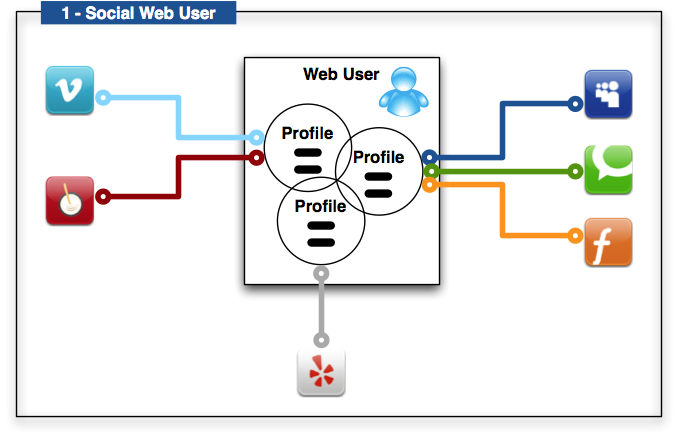

3.3.1 Social Web User and Profiles

Figure 1 belows shows how a single user (one person) can have multiple profiles that share common attributes. A user can then associate her profile at the profile level with particular social applications, perhaps controlling them in some sort of aggregated view in an application. The profiles are exposed to and synchronized with different social networking sites and platforms. In some cases, the social networking site will update a profile property and this modified property will be reflected across all profile instances. The attributes included in a profile will depend greatly on the needs and desires of the user and context of each social application, including dynamic attributes that capture the evolving changes of a person’s context, such as geolocation attributes. In Figure 1, one profile is associated with the "light blue" and "red" social applications, one profile to the "grey" social application, and one profile to the "blue", "green", and "orange" social applications.

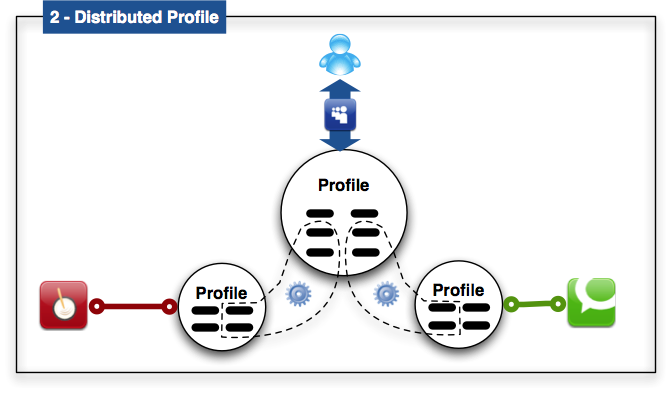

3.3.2 Single Distributed Social Graph

Attributes within a profile, including information about social connections, may be distributed. This means that the relevant attributes and social connections could be stored with a social application for use in the context of that application. For example, a work phone attribute is stored by my current employer's social platform, but another social networking site (e.g., LinkedIn) may store my previous employer's information. Together, these two (distributed) attributes can be considered a distributed single "work" profile whose information I may want to combine in context of a social application (such as a job-hunting social application). Figure 2 below shows a profile that has two sets of two attributes at distributed sites each with two local attributes. The user is interacting with the profile through the "blue" social platform, which could be a node in a decentralized Social Web. For example, a profile management service that could be run in the browser or via a third-party web-site would keep track of the distributed attributes and multiple profiles and allow the user to edit the attributes across multiple platforms and sites.

3.3.3 Multiple Distributed Social Graphs

A profile is associated with one or more social platforms in which the user's social graph is formed and nurtured. The social platform is the context for how a user is connected to the profiles of others and will support the specific connection types (e.g. friend, colleague, likes, etc) that will typically serve the purpose of some social application. A core feature or service of a social application is to make, maintain, and expand these connections.

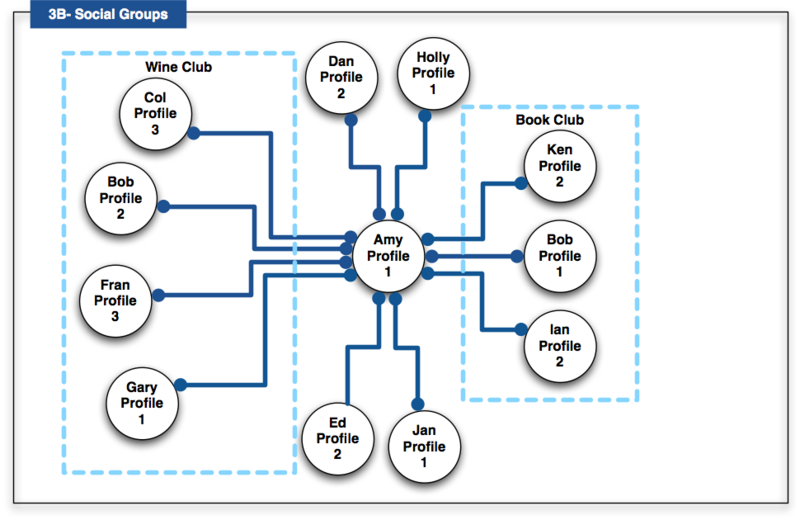

A user’s connections in a particular social networking site or platform should be portable. The user should be able to take them to another social networking site, so it is not necessary to re-establish all connections again for another (new) social application. Note that Amy (Profile 1) in the "blue" social platform is connected twice to Bob via his Profile 1 and 2. This demonstrates that the same user can connect via different social networking sites. The social networking sites do not necessarily have to be controlled by the same entity, but could be links through the open Web. The lines between profiles are either uni-directional (such as Twitter) or bi-directional (such as Facebook) to capture where the connection is one-way (following) or mutual (friendship). Two dots means that the connection is bi-directional. One dot means the connection or association it is not reciprocal.

Figure 3A shows an example of multiple distributed social graphs with a number of different users, profiles, and social networking sites or platforms. For example:

- The blue social platform connects Amy (Profile#1) to Bob (Profile#2), Col (Profile#3), Dan (Profile#2), Bob (Profile#1).

- The green social platform connects Amy (Profile#1) to Fran (Profile#3), Gary (Profile#1), Ed (Profile#2).

- The orange platform connects Bob (Profile#1) to Amy (Profile#2), Fran (Profile#1), Ed (Profile#7).

- The red social platform connects Ed (Profile#7) to Dan (Profile#2).

Figure 3B shows an example of explicit groups. In this example, Amy has designated a number of her connections into two groups. These named groups then enable Amy to refer to the collection of connections in a single instance. For example, "allow my book reviews to be read by my Book Club members only", and with global digital identities and profile information, these groups could encompass users across many social networking sites and platforms.

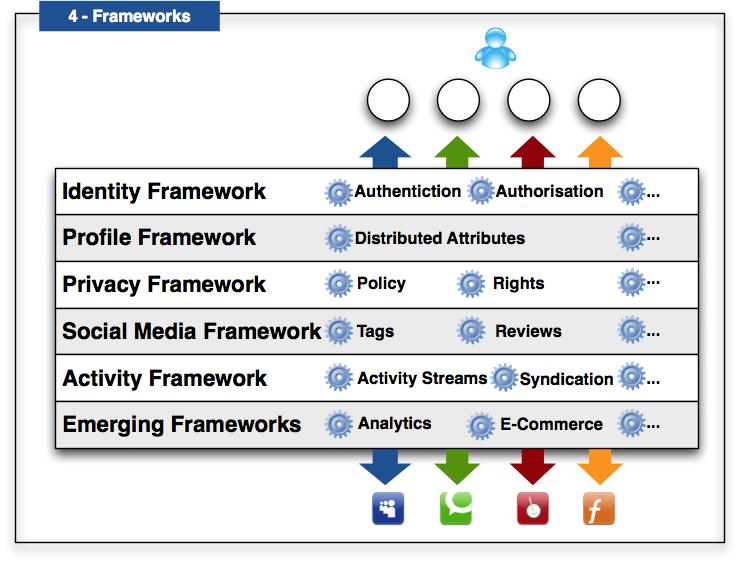

So far, an emphasis has been placed on the creation and management of profiles with their associated interwoven multiple social graphs. To be successful, the Social Web must include far more than distributed profile and social graph management. We propose an open conceptual system in which there are multiple interoperable frameworks (see Figure 4) covering different levels of complexity and use-cases.

3.4 The Case for Open Social Web Standards

In effect we depict a "meta-framework" within which there currently appears:

- Identity Framework,

- Profile Framework,

- Social Media Framework,

- Privacy Framework,

- Activity Framework, and

- Emerging Frameworks

At this point, we will assume the frameworks will be able to work together seamlessly via a combination and harmonization of standards in order to enable a wide variety of innovation across social networking sites, platforms and applications. An evolving combination of interoperable frameworks will move the Social Web towards this overall objective without constraining developers to a single monolithic architecture.

Of utmost importance is the fact that any framework should lead to a core set of functionality that allows developers to easily interrelate their existing technologies while encouraging new uses and hence leading innovation rather than holding it back by premature optimization. The framework we are also proposing is modular, so that new emerging social applications and frameworks can be added. For example, it is possible to envisage an e-Commerce framework encompassing an assortment of billing, product tracking, fulfillment protocols which are already in use in e-commerce applications and that can build on top of the social media and policy frameworks. Shockingly enough, users are already sharing this kind of information through sites such as Blippy. Another possible framework is an analytics framework that enables users to benefit from active social application participation by providing the dynamic analysis of the users behavior's and feeding this back into the user's profile via automatically creating and updating a user's profile information based on an analysis of their activity. This could enable the formation of communities-of-interest as the profiles of individuals reach a threshold of similarity if the privacy settings of a person's profile allow such connections to be made. Lastly, one could imagine a trust framework that is highly dependent on identity, context, and the provenance of social media. The level of trust necessary between a merchant and a user, for the purpose of fulfilling a transaction is on a different scale than that of sharing a blog post and trust will likely vary wildly across individuals and contexts, and so not easily be reducible to overly-simplistic metrics.

A critical problem in realizing this vision of Social Web is the fact that any "distributed" social networking platform will become yet another walled garden unless it is based on open and royalty-free standards. Simply creating yet another decentralized social networking codebase will likely not be the solution by itself, as that would require everyone to use the same code and limit innovation. Instead, a number of social networking sites and applications based on different code-bases can band together and share their data using standards, which allows them to maintain their autonomy and development while still gaining the "network effect" of having large numbers of users. Via open standards, multiple social networking platforms ranging from large vendors to simple personal websites should be able to demonstrate interoperability. For example, one codebase could use Atom, a format based on serializing updates in tree-structured XML [ATOM], while another could use RDF, a graph-based data model where all the data is identified with URIs. However, by using open standards that can communicate to each other, these different architectures and codebases should be able to work together to share status updates with each other. While the technology and work already exists to create such an decentralized social web, the standards needed by developers are currently scattered across various communities and are at times even incompatible, so that producing a single overview of what technologies and standards exist is a difficult if not impossible task. Lastly, without strong royalty-free patent policies, it is also impossible to guarantee that implementers can develop a distributed social networking codebase without being hit by a patent lawsuit.

4. Identity

Identity is the connection between a profile, a set of attributes, and a user. Some credentials or "proof" of identity may be required from the user to access or create a profile, which is the step of authentication. In particular, these credentials may take many forms such as a password, a signed digital certificate, or some other log-in credentials. Identity providers make claims (at least one) by providing attributes and may or

may not authenticate the identity of a user. One of the most important parts of any profile claim is the identifier (a URI, including an

e-mail address) for a user, although making a claim does not always reveal an identifier. An identity may be de-coupled from all but the most minimal of profiles (a simple identifier) and make claims without revealing any identifier, and may be anonymized so as to not include a user's true identity (i.e. legal name or other identifying characteristics).

Using an identity selector, a user may want to select from amongst multiple profiles (each of which could be a personae) and their attendant set

of attributes. Each of these sets of claims could be hosted by different providers. A user should be able to have multiple identities as well as multiple profiles. A user should be able to revoke an identity if it becomes compromised or for any other reason.

4.1 Problem: Usernames and Passwords are Insecure

Username and password combinations are currently the most prevalent identification technology on the Web. They are easy to understand, but suffer from a number of technical and economic drawbacks, including phishing threats. Web users are excessively requested to create password-backed accounts across various websites, leading to password-reuse with growing insecurity of each account. Passwords that are manually generated are often insecure, and automatically generated ones are difficult to remember. Widespread technical negligence in implementing password systems securely further undermines the security of password systems on the Web, and can be partially attributed to lacking practical advice or standards on how to implement good password schemes [PASSWORD]. Approaches like Facebook Connect and Google FriendConnect at this point rely on user-name and password-based authentication for sharing personal social data.

4.2 Use Case: No more passwords (or only one)

Social Web user Alice wants to access her social networking sites Twitbook for her friends and BizLink for job contacts. She wants to keep the two identities separate, and access these sites from multiple devices. Unfortunately, Alice uses so many social networking sites and associated applications that she currently just repeats the same password and username combination over and over again, which is insecure and may lead to identity theft. Luckily, using a distributed and secure identity framework, she can verify her identity by associating herself to a profile using some proof like self-signed certificates on her favorite devices like her laptop and mobile phone. Furthermore, as sometimes she may want to access her social networking site using an Internet cafe while traveling, she finds a trusted third-party passphrase-based identity provider called SocialAggregator. It should be noted that if SocialAggregator is developed on top of open standards, people would be able to implement their own version of such a service, allowing them to host it where they wished. As both Twitbook and Bizlink support her standardized identity authentication mechanism, whether it is used via her browser on her mobile phone and laptop or via a third-party identity provider, Alice no longer has to remember passwords when she uses any social networking site or application on her trusted everyday devices, and has to use a passphrase only when not using a trusted device.

4.3 Identity Standards

This section will list a number of online identity providers which are currently deployed or in development on the Web. We will include both identity standards, as well as authentication and discovery standards that rely on a notion of digital identity.

4.3.1 Browser-based Password Management

Browsers now make it easier for users to create different passwords for each website by remembering them for the user, as is currently implemented by Mozilla. The Weave Project of Mozilla aims to make password based authentication more integrated in the browser by allowing the browser to create and update passwords automatically across the Web, and its first release is the Sync project [SYNC]. Instead of trapping the user within the browser, Mozilla's Sync plugin allows the user to copy passwords, browser preferences and bookmarks from one browser and device to another in a secure manner by storing these preferences cryptographically encrypted on a server [SYNC]. The end user then only needs to remember this URL and the one password for its contents, to be able to retrieve it in any other device that knows how to decrypt and read the content. While currently browser based approaches do not track social connections, these could be addressed in future work, and one could imagine cross-browser generalizations of Sync functionality. However, even then it would not address the ability to make and use connections across different social networking sites.

4.3.2 OAuth

OAuth (Open Authorization) is an IETF standard that lets users share their private resources on a resource-hosting site with another third-party site without having to give the third-party their credentials for the site and so access to all their personal data on the social site [OAUTH1]. OAuth is a standard for granting data authorization to third parties, allowing people to grant access to private resources after authenticating themselves via their online identity. This standard essentially defeats the dangerous practice of many early social networking sites of accessing the username and password of an e-mail account in order to populate a list of friends. Instead, OAuth allows an authorized handshake to happen between a resource-hosting site and a third-party, which then lets the third-party redirect the user to authorize the transaction explicitly on the original site. If the transaction is explicitly authorized, then OAuth generates a duration-limited token for the third party that grants access to the resource-hosting site for specific resource. OAuth's tokens establish a unique ID and shared secret for the client making the request, the authorization request, and the access grant. To its huge advantage, this approach works securely over ordinary HTTP requests, as the client generates a signature on every API call by encrypting unique information using the token secret, and the token secrets never leave the sites. However, a session-fixation attack was discovered in the original specification that allowed a malicious party to save the authorization request and then convince a victim to authorize it, giving the malicious party access to the victim's resources. This attack was fixed by having the third-party register with the resource-hosting site, as given in an update to OAuth. Recently there has also been a timing attacks (using the difference of time in "bad" and correct digital signature verification to figure out tokens), but this has been addressed by having digital signature verification use a constant time.

While OAuth 1.0 is highly successful, the creation of the cryptographic work needed to produce correct signatures and the managing of various tokens was considered too difficult by many developers, so the IETF draft standard OAuth 2.0 simplifies the process [OAUTH2]. OAuth 2.0 does this by relying on Transport Layer Security (TLS), another IETF standard for securing traffic over the Internet using encryption, which is usually known by the name of its predecessor from Netscape, Secure Sockets Layer (SSL) [TLS]. OAuth 2.0 also breaks apart the various use-cases around getting tokens such that each is simpler. OAuth 2.0 requires that the resource-hosting site use HTTPS rather than HTTP and is therefore backwards incompatible with OAuth 1.0, i.e. as OAuth 2.0 requires SSL and OAuth 1.0 does not. SSL is required to generate tokens in OAuth 2.0, so signatures are no longer required for both token generation and API calls. Decreasing complexity, OAuth 2.0 has just a single security token and no signature is required. This has led to wider adoption across social networking sites like Twitter and Facebook.

4.3.3 OpenID

OpenID centralizes the authentication step at an identity provider, so that a user can identify themselves with one site (an OpenID identity provider) and share their profile data with another site, the relying party [OPENID]. A user need only remember one globally unique identity, which in OpenID 1.0 was a URI. In the initial OpenID 1.0 specification, the identity provider was discovered by following links of a HTML page accessed by the OpenID 1.0 URI, and OpenID 2.0 also allowed the use of the XRD format [XRD]. One of the primary findings of the OpenID effort was that many non-technical users were unable to use URIs to identify themselves, and so approaches like directed identity and Webfinger, or even just an e-mail address enabled by the Webfinger, were developed to facilitate adoption [WEBFINGER]. With directed identity, the user only needs to click on a graphical icon of their preferred ID provider to execute the login process. These enhancements dramatically improved the usability and adoption of OpenID.

Once the OpenID provider is discovered, a shared secret is established in between the provider and the relying party, allowing them to share data.Initially data was exchanged via one of two OpenID extensions, Simple Registration or Attribute Exchange [OPENID]. These extensions allow the user to specify what personal data should be sent to the relying party. Note that the attribute exchange protocol is constrained by the information that can be placed as attribute-value pairs inside a URI, which is practically limited to a maximum of 2000 characters. However, many identity providers have implemented an OpenID/OAuth hybrid approach which allows for more robust data sharing. Additionally, the OpenID Foundation is working on the Artifact Binding protocol which will also allow for more extensive data sharing. Large international OpenID identity providers include AOL, Blogger, Flickr, France Telecom, Google, GMX/Web.DE, Hyves, Janrain, Livedoor, LiveJournal, Mixi, MySpace, NEC Biglobe, Netlog, Rakuten, Telecom Italia, Verisign, WordPress, and Yahoo. In total these represent over one billion user accounts. Not all OpenID providers are also OpenID relying parties, but over the past year a number including Yahoo, Google, and AOL have also become relying parties.

As a server-side solution, OpenID and successor technologies have the advantage of only relying on server-side HTTP redirects, and so in general works independent of browsers. OpenID does not specify the credentials needed by the authentication mechanism, and very few OpenID providers provide authentication based on certificates or other kinds of credentials today, generally utilizing username-password authentication. As with all traditional username-password authentication processes, which represent the majority of all web-based authentication processes today, a possibility for phishing using redirection to "fake" identity providers exists [LAURIE]. Thus far there have been no significant reported OpenID related incidents and secondary forms of authentication (i.e. certificates, challenge questions, biometrics, one time passwords, etc.) can be utilized to minimize the threat, as with traditional username/password authentication processes. Many OpenID providers including Google, Verisign, and Janrain offer various kinds of secondary authentication. Additionally, most major OpenID providers have implemented sophisticated backend policies and analytic tools to ensure the security of their users and services, in much the same way credit card issuers utilize analytics to detect and prevent unusual or fraudulent behavior. In this way, OpenID-based authentication can be more secure and reliable than traditional username/password authentication since OpenID identity providers have dedicated teams and capabilities well beyond what most independent website operators provide.

Some developers view the technology as complex, requiring up to 7 HTTPS connections in the workflow. OpenID supporters feel that past and future enhancements continue to drive ease of deployment and adoption of the technology. There are also a number of third party solutions and plug-ins that facilitate deployment. Additionally, given the similarities between the workflow of OpenID and the success of OAuth with developers, the OpenID Foundation is pursuing a new version of OpenID, known as OpenID Connect, being built on top of OAuth [OIDCONNECT]. Due to the existence of OAuth 2.0, OpenID Connect is designed to be a thin layer on top of OAuth. One of the major things this brings to OAuth is true decentralization in terms of not needing to pre-register consumer keys and secrets with a given service. It will also standardize some basic profile attributes that are commonly available across providers. OpenID-Connect will offer an alternative to the OpenID 2.0 with Attribute Exchange and Artifact Binding approach also in development. As each of these initiatives progresses, the market will determine the appropriate applications and use cases for each approach.

4.3.4 WebID

WebID uses TLS and client-side certificates for identification and authentication [WEBID]. To authenticate a user requesting an access-controlled resource over HTTPS, the "verifying agent" controlling the resource needs to request an X.509 certificate from the client. Inside this certificate, in addition to the public-key there is a "Subject Alternative Name" field which contains a URI identifying the user (the "WebID"). Using standard TLS mutual-authentication, the user agent confirms they know the private key matching the public-key in the certificate. A single HTTPS cachable lookup on the WebID should retrieve a profile. If the semantics of the profile specifies that the user named by that URI is whoever knows the private key of the public-key sent in the X.509 certificate, this will confirm that the user is indeed named by the WebID, allowing the authenticating agent to make an access control decision based on the position of the WebID in a web of trust. WebID was originally known as FOAF+SSL [FOAFSSL].

The user does not need to remember any identifier or even password and the protocol uses exactly the same TLS stack as is used for global commercial transactions and is not vulnerable to phishing. As it is widely known that certificate authorities can be impersonated (although with a lot of work) [ROGUE], instead of relying on widely known certificate authorities, the client side certificates may be self-signed. Such certificates can be generated in the browser in a one click operation. Disabling a certificate is as simple as removing the public keys from the personal profile.

However, there are a number of problems with this approach. First, certificate management and selection in browsers still has a lot of room for improvement on desktop browsers, and is a lot less widely supported on mobile devices, although there exists WebID implementations that are written in Javascript as to be completely de-coupled from the browser. Furthermore, it is often thought that by tying identity to a certificate in a browser, users are tied to the device on which their certificate was created. In fact a user profile can publish a number of keys for each browser, and certificates are cheap to create. Some people see that this can be enhanced by uses of protocol such as the Nigori Protocol that requires only a single password to access "secrets" like certificates on a server [NIGORI]. Combined with Nigori, WebID could be integrated into a Mozilla Sync-style identity management system [SYNC].

4.3.5 Infocard

Infocard (Information Cards) is a user-centered identity technology based on three interrelated concepts: the card metaphor, active client software, and a protocol for identity authentication [INFOCARD]. As such, it is a multi-layered integrated approach and infrastructure in and of itself. "Active client" software integrated with the local browser acts as a local digital wallet for the user. Each card in this wallet supports a set of profile attributes called "claims." Personal cards can be created directly by the user and hold self-asserted claims and values. "Managed cards," on the other hand, are issued by identity provider websites that act as the authority for the claims supported by that card. The interactions between the active client and external services are defined by the OASIS IMI protocol [IMI].

Under IMI, an Infocard-compatible relying party website, usually via HTML extensions passively expresses its policy: the set of claim URIs that it requires, the card issuer it trusts, etc [IMI]. When the user clicks on an HTML button, extensions with the browser trigger the invocation of the active client which displays a set of cards that support the claims required. If a managed card is selected by the user, the user authenticates and the client fetches a security token from the card issuer site using IMI protocols, and POSTs it to the relying website where it can be validated and the claim values extracted. Thus Infocards eliminates the need for per-site passwords, allows minimum disclosure, and provider stronger levels of assurance if the verification is done locally. Microsoft's Cardspace, is built into Vista and Windows 7 and open-source projects include Novell's Digital Me, OpenInfocard, and Eclipse Higgins [HIGGINS].

However, its main disadvantage is the perceived complexity of interlocking standards and technology needed to support the architecture, so current work is on driving adoption via focus on applications in the government sector, as it does offer a higher level of assurance than browser-redirect-based identity technologies. Also, cards are too tied to a single device, so work is underway to incorporate Web services to at least provide "card roaming" across browsers and devices as well as making Infocards more compatible with other technology stacks.

4.3.6 XAuth

XAuth allows multiple identity providers to update an "XAuth provider" (currently only xauth.org) so that third parties can authenticate a given user's identity [XAUTH]. When a user signs-on to an account on an XAuth-enabled identity provider, the identity provider notifies xauth.org. When a site is encountered that needs authentication, the site can use some simple embedded Javascript to ask xauth.org which identity providers the user is logged in on, and then uses the cookies stored locally on the browser to help the user authenticate with the third-party site. This approach easily allows logging out (as XAuth-enabled identity providers can tell xauth.org that the user's session has ended) and lets users enable or block identity providers. However, this approach has been heavily criticized. First, xauth.org is controlled by a single entity (currently the company Meebo), and as a result XAuth is heavily centralized [XAUTHC]. Although this could be fixed (i.e. letting xauth.org redirect to a local host [XAUTHD], it still reveals to third-parties the identity providers a user employs without their authentication, which can be enough information to identify them for malicious purposes [MALI]. Google and Meebo deploy XAuth.

4.3.7 SAML

SAML (Security Assertion Markup Language) is an OASIS standard for the exchange of authentication and privacy between identity providers and service providers using an XML-based data format, tackling the single-sign on problem amongst many others [SAML]. SAML allows one to make assertions that include the subject making the assertion, the time of the assertion, any conditions to the assertion, and the resource to be accessed. An identity provider that can verify these assertions using a number of means by the identity provider, such as SSL, and make an authorization decision. SAML is often embedded in SOAP messages. In addition to the SAML protocol itself, SAML metadata supports the communication of identity provider and relying party information across multiple federations and so can be leveraged by federation providers and the majority of higher education institutions around the world as well as by other protocols such as Infocard and OpenID.

Examples of SAML deployment include universities, Google, SalesForce.com, Cisco, and WebEx. Unlike many other identity technologies, SAML is able to provide security solutions for banking and government web portals. In 2010 SAML was certified by the US Government for use by external identity providers at Identity Level of Assurance 1 through 3 for accessing specified government resources. SAML is, however, often viewed as being more complex than is necessary to support implementations requiring low levels of assurance. This has driven many developers to deploy simpler technologies like OpenID in low assurance scenarios.

4.3.8 Kantara Trust Framework

The Kantara Initiative fosters the emerging Trust Framework model which enables interoperability and trust in identity authentication systems through certified credentials [KANTARA]. This framework is composed of policy, privacy, and protocol deployment criteria to enable trust across all actors in a transaction, from end-users to identity providers and federation operators.

Kantara Initiative has developed an Identity Assurance Framework (IAF) as the criteria for interoperability amongst identity providers [IAF]. The IAF is certified when a combination of privacy and protocol profiles provides the Trust Framework. The Kantara Initiative IAF is technology agnostic and available to be profiled (specific to jurisdictions and verticals). Kantara Initiative also currently operates an Accreditation and Certification Program to accredit auditors to perform assessments and certify identity providers. The US Government has fostered some of the first deployments of this model. Kantara is continuing to refine the model with other stakeholders across the globe.

5. Profiles

The Profile framework contains those applications which can be used to access attributes and the distributed access to such information. Users in this stage should also be able to find, discover, add and delete connections in order to update their profile. A user may want to select amongst multiple profiles (each of which could be a personae) and their attendant set of attributes. Each of these set of claims could be hosted by different providers. It should be possible for a user to control multiple profiles across multiple social networking sites, and synchronize the updates to their identity providers. In this manner, social applications should be able to share profile information, but on an as needed basis, so that only the information needed in a particular context is revealed. Users can then be able to import their connections to new social networking sites and applications so they do not have to find and confirm all contacts from scratch over and over again. Furthermore, a user should be able to export all their profile information and delete all profile information from an identity provider.

5.1 Problem: Can Not Describe Yourself

Today, when users create profiles they are often constrained in how they describe themselves and have to manually re-find their friends. Worse, some social networking sites constrain preferences, such as gender and religion preferences, that can be very sensitive. Also, many users may wish to have different names and profiles on different kinds of sites, and on some sites anonymity is a must. Furthermore, a near fatal problem with the uptake of new social networking sites and applications is that not only do users have to re-enter all their information to conform to what the new site wants, but then they have to re-locate all their friends on the new site or re-invite them.

5.2 Use-Case: Keep Your Profile and Friends Across Networks

Alice has gotten bored of her social networking sites, and wants to move to the new and increasingly popular augmented social reality gaming platform Fazer. However, she does not want to re-enter her old information and find her friends again. She authenticates herself using her browser-based ID and then accesses Fazer, and selects her "personal" identity as to not let her work colleagues know about her game-playing identity. Since Fazer is a "real-world" augmented reality social game, she does not create a completely fictional profile (although she could) but instead opts to use an existing profile. In the account creation process, she is not required to complete all the profile attributes, but has them auto-completed, and she even creates a few new (custom) fields in a profile, and this new updated personal profile information is automatically synchronized between Twitbook and Fazer. She also explicitly agrees to share her geolocation with Fazer, which she has never done with Twitbook. Her various settings, such as avatars, presence, mood indicators, time-of-day and geolocation context are also automatically synchronized. Then using her set of social connections, her existing friends are automatically discovered on Fazer and she is given the option to add each of them or invite them if they are not on Fazer. A few months later she quickly gets tired of Fazer after having made some new friends in the process of playing various augmented reality games, and she decides to completely remove her profile from Fazer. However, as Fazer supports portability, Alice is able to download her own data to her profile manager at SocialAggregator and not lose touch with her friends, including downloading their numbers automatically to her mobile phone and backing her valuable data up locally.

5.3 Profile Standards

A number of standards exist for profile and relationship information on the Web. One distinction among them is what data format (plaintext, XML, RDFa) the profile is in and whether or not they are easily extensible. Even more importantly, there are differences in how, given a digital identity, any particular application can then try to discover and access the profile data and other capabilities that the digital identity may implement. While some profiles mention this discovery and use techniques explicitly and others do not, these common or standardized discovery techniques will be mentioned in context with each profile data format.

5.3.1 XRD

XRD (Extensible Resource Description), formerly YADIS and XRD-Simple (XRD-S), is an XML file format for discovering what capabilities a particular identity provider may have [XRD]. For example, is this provider also an OpenID identity provider or does it provide Portable Contacts information? The XRD format provides this for arbitrary resources via the use of types and typed links describing URIs (URI templates) given in the XML format that can then be queried by a user-agent. The work around XRD has led to a number of innovations for locating XRD besides the W3C-style use of content negotiation, including the use of the IETF draft standards like ".host-meta" [HOSTMETA]and more generic ".well-known" subdirectories from any URI [WELLKNOWN]. Furthermore, the XRD file (or other metadata format) can be discovered via possibly a combination of markup directly in the document (such as a Link element in HTML), HTTP Link Headers in response codes, and then generic directories like .host-meta. The priority can be determined by the IETF LRDD (Link-based Resource Descriptor Discovery) informational document [LRDD], which has now been subsumed by the host-meta [HOSTMETA]. The Web Linking standard specifies an IETF standard for Link Registries [WEBLINKING].

Despite the fact that XRD was originally developed in 2004 by the OASIS XRI (Extensible Resource Identifier) Technical Committee as the resolution format for XRIs (an alternative to URIs for personal identifiers), it no longer mandates the use of XRIs, which are custom URI-like identifiers for people and organizations that have in the past not been used in W3C Recommendations due to technical concerns [CONC] and the use of at least previously patented technology [PT]. Also, Web developers want a JSON specification of XRD, tentatively called JRD (although there is no RDF serialization of XRD)[JRDF]. The general discovery management also needs to be integrated with content negotiation, but Web Linking and related specifications provide a much needed clarification of how to retrieve metadata about resources on the Web.

5.3.2 VCard

VCard is the oldest and most widespread IETF standard format for personal address-book data, which is the kind of information typically found on a business card, such as names, phone number, and address [VCARD3]. Therefore, this format serves in general as the common core of most data-formats, except for FOAF (leading to a the definition of vCard 3.0 in RDF [VCARDRDF]). However, vCard 3.0 in general lacked the ability to describe social relationships and was serialized in a ASCII text format, so the VCard 4.0 activity at IETF has provided improved semantics for properties about people and organizations (such as the ability to express groups of users, e.g. "Wine Club members") and direct relationships between users ("friendship") and mechanisms to extend these terms [VCARD4]. Syntactically, vCard can be expressed in its native format similar to VCard 3.0 and in a new XML format similar to the PortableContacts XML format [VCARD4XML]. VCard import and export is supported by most mail programs like Thunderbird, Microsoft Exchange, and Apple Mail.

Based on vCard 3.0, profiles can also be embedded in HTML pages using the hCard microformat [HCARD]. Another microformat often used for relationship data is XFN (XHTML Friends Network), which embeds its own idiosyncratic social contact relationships directly into HTML links using the "rel" attribute, and provides a set of finite attributes to define which kind of relationships exist between individuals (friend, co-worker, met) [XFN]. This kind of contact information based on hCard is currently deployed by sites such as Slideshare, dopplr, and Twitter to express social networks and can be converted to formats like RDF via GRDDL [GRDDL]. Despite debates on alignment vCard 4.0 promises to be a stable core set of terms for the Social Web.

5.3.3 FOAF

The first project that used standards to describe decentralized social networks was the FOAF project (Friend-of-a-Friend)[FOAF]. FOAF however only attempts to address descriptive challenges, rather than the entire problem space. FOAF provides an extensible and open-ended approach to modeling information about people, groups, organizations and associated entities, and is designed to be used alongside other descriptive vocabularies. Despite these innovations, FOAF itself does not provide for social networking functionality by itself. It assumes other tools and techniques will be used alongside it, and does not itself specify authentication, syndication or update mechanisms. Today the vast majority of data expressed in FOAF is exported from large "social network" sites. However when FOAF began, most social networking sites (except Livejournal) did not yet exist, and the conceptual model for FOAF was the personal homepage.

FOAF profiles can be used to describe both attributes of a user as well as their social network. The discovery of FOAF information currently supports that information being simply accessed via RDFa or Linked Data over HTTP, and for private profile data, authentication using an identity provider before access. Current applications natively export FOAF profiles of their users, including Hi5, StatusNet, Drupal, and Semantic Micro-blogging [SMOB]. Various exporters have been created by the community to enable FOAF export of sites like Twitter, Facebook, and Last.fm.

FOAF is well-suited to enable a decentralized Social Web due to its use of URIs and web-scale linking. Like other RDF vocabularies, FOAF can be easily extended in a decentralized manner, as done by the SIOC vocabulary regards user-profiles and user-generated content [SIOC] and the Online Presence Ontology does for presence [OPO]. However, while FOAF was created to demonstrate the decentralized nature of distributed vocabularies, it's historic divergence from vCard and PortableContacts makes it difficult to use with current Social Web applications [PORT], along with the general perceived complexity of RDF and lack of adequate RDF tooling. The FOAF project does not propose FOAF as the only format that should be adopted for decentralized social networking; rather it is offered as a representational model that can find middle-ground between the semantics from diverse initiatives ranging from digital libraries and cultural heritage to those used in the Social Web. Recent changes to the FOAF specification have brought parts of it into closer alignment with the PortableContacts and VCard 4.0, and further such convergence is needed if FOAF is to be seen as a modern component of the technology landscape.

An increasingly popular profile standard is PortableContacts, which is derived from vCard and is serialized as XML and JSON [PORT]. It contains a vast amount of profile attributes, such as the relationshipStatus property, that map easily to common profiles on the Web like the Facebook Profile. More than a profile standard, the PortableContacts profile scheme is designed to give users a secure way to permit applications to access their contacts, depending on XRD for discovery of PortableContact end-points and OAuth for delegated authorization. It provides a common access pattern and contact scheme as well as authentication and authorization requirements for access to private contact information. It has support from Google, Hi5, Plaxo and others, and is a subset of the contact schema used by OpenSocial, so every valid OpenSocial provider is also a PortableContacts profile provider.

Originally as VCard 3.0 did not have an XML format, PortableContacts was the first realistic contact schema with an XML format. It is also a proper super-set of vCard 3.0 and is very close to mapping on to vCard 4.0, as co-ordination work in the DAP group shows [CFC]. Ideally, PortableContacts and vCard 4.0 could converge or gain an easy-to-understand super-set or subset relationship with each other to reduce the friction between various profile data formats.

5.3.5 OpenSocial

OpenSocial is a collection of Javascript APIs, controlled by the OpenSocial Foundation, that allow Google Gadgets (a proprietary portable Javascript application) to access profile data, as well as other necessary tasks such as persistence and data exchange [OPENSOCIAL]. It allows developers to easily embed social data into their Gadgets. The profile data it uses is a superset of the PortableContacts and vCard 3.0 data formats. It does not require access to Google servers to run, but instead can run-off the open-source Shindig implementation and so positions itself as an "open" alternative to the Facebook Platform, and has been supported by a number of vendors such as Google, MySpace, Yahoo!, IBM, and Ning.

There is a rather unfortunate mismatch between OpenSocial Gadgets and W3C Widgets from the Web Applications WG [WIDGETS], given that both are primarily based on top of HTML and Javascript. Currently there is work being undertaken by Apache Wookie (incubating) to provide interoperability between W3C Widgets and OpenSocial Gadgets, although ideally in the future the W3C Widgets would either be adopted or work more closely with major vendors in the next iteration [WOOKIE]. Also, W3C Working Groups like the DAP (Device Access Policy) Working Group are also producing APIs that involve contact information and so should ideally maintain some baseline compatibility with OpenSocial and PortableContacts.

The Social Web is not only the connections between people, but the connections between people and arbitrary resources, including messages like blog posts, audio, photos, videos, and other resources. So social media is any resource that is used in a social relationship with a user. A user should also be capable of having connections to "non-Web" resources like locations and items. For example, a user may "like" a particular musical style or "review" a particular album. The Social Web should offer a way to avoid having identical user content stored in different social networking sites and platforms. Users should be able to create, link to, and annotate social media with multiple social applications to aggregate their social media together in designated social platforms, as well as being given the option to save the data to local storage (e.g. in their browser). This is an extension of what is called by Berners-Lee "Linked Data" where links (connections) should be possible between arbitrary resources (anything identified with a URI), not just hypertext web-pages [LINKED]. One of the most important features that will support the generation of media on the Social Web is provenance. Provenance information should support the tracking of social media, identifying when and how it came to be posted on a given social networking site or application on the Web. Any such provenance information should be capable of answering questions such as "When was it originally posted?", "Where does it originate from?", and "Who posted it?"

6.1 Problem: Fined for Consuming Social Media

Increasingly users find themselves consuming social media, but not knowing if it is trustworthy or whether or not they can consume such social media without a monetary fine, i.e. whether their usage breaks the content's copyright! Not knowing this information can lead to disaster. People who are often downloading and re-using social media can now be fined for huge amounts of money, but many of them are unaware that the data was under copyright in the first place. So many users would like to have mechanisms to automatically determine whether a Web document or resource can be used, based on the original source of the content, the licensing information associated with the resource, and any usage restrictions on that content. Without any provenance (the information about who created the data and how has it changed over time), users can not trust social media. This applies to social applications themselves, whose reputation can be dependent on verifying sources, such as verifying the person or organization who created a news story in order to credit the original source in its site, which most real-world social applications would like to do automatically for thousands of sites a day. With the increase in fines related to social media consumption, users will want to be exceptionally well-informed about the social media they consume.

6.2 Use-Case: Safely Drag-and-Drop Social Media Across Multiple Platforms

Alice enjoys taking photographs about penguins and would like to share them as widely as possible with her friends. Using an image processor on her laptop, she fine-tunes her photos and publishes these to her personal blog using a graphical drag-and-drop interface that lets her just drop the photo into her blog and automatically update social networking sites she uses. Since she controls not only her profile but her social media, she can easily attach a Creative Commons with attribution non-Commercial license and ask for a small fee of 10 cents for commercial use. As Alice explores social media, she even finds herself paying for some social media she finds useful, and she uses a simple micropayment policy that allows her to consume up to five dollars in social media a week without having to worry about fines. She finds herself automatically paying tiny amounts of money for some social media to help support her friends and creators she likes and she finds herself collecting micropayments for her penguin photos, allowing her to turn her hobbies into a way to help sustain herself. Also, not only can she drag-and-drop social media safely, she can remove social media. When she discovers she has accidentally sent a message on Twitbook that spread a false rumor about an oil spill threatening penguins, she retracts it immediately so she does not cause a panic. Not only is the message removed from Twitbook, but it's removed from other sites as that aggregated it as well!

6.3.1 Tagging