
Copyright © 2009 W3C ® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark, document use rules apply.

This document is an introductory guide to the Evaluation and Report Language (EARL) 1.0 and is intended to accompany the normative document Evaluation and Report Language 1.0 Schema [EARL-Schema]. The Evaluation and Report Language is a framework for expressing test results. Although the term "test" can be understood in its broadest sense, EARL is primarily intended for reporting and exchanging the results of tests of Web applications and resources. EARL is a vendor-neutral and platform-independent format.

EARL is expressed in the form of an RDF vocabulary. The Resource Description Framework (RDF) is a language for representing information about resources in the World Wide Web in a semantic, machine-processable way. However, EARL is not conceptually restricted to these resources and may be applied in other contexts outside the Web.

This section describes the status of this document at the time of its publication. Other documents may supersede this document. A list of current W3C publications and the latest revision of this technical report can be found in the W3C technical reports index at http://www.w3.org/TR/.

Please send comments about this document to the mailing list of the ERT WG. The archives for this list are publicly available.

This is a W3C Working Draft of the Evaluation and Report Language (EARL) 1.0 Guide. This document will be published and maintained as a W3C Recommendation after review and refinement. Publication as a Working Draft does not imply endorsement by the W3C Membership. This is a draft document and may be updated, replaced or obsoleted by other documents at any time. It is inappropriate to cite this document as other than work in progress.

This document was produced under the 5 February 2004 W3C Patent Policy. The Working Group maintains a public list of patent disclosures relevant to this document; that page also includes instructions for disclosing a patent. An individual who has actual knowledge of a patent which the individual believes contains Essential Claim(s) with respect to this specification should disclose the information in accordance with section 6 of the W3C Patent Policy.

This document has been produced as part of the W3C Web Accessibility Initiative (WAI). The goals of the Evaluation and Repair Tools Working Group (ERT WG) are discussed in the Working Group charter. The ERT WG is part of the WAI Technical Activity.

This document is an introductory guide to the Evaluation and Report Language (EARL) 1.0 and is intended to accompany the normative document Evaluation and Report Language 1.0 Schema [EARL-Schema] and its associated vocabularies: HTTP Vocabulary in RDF [HTTP-RDF], Representing Content in RDF [Content-RDF] and Pointer Methods in RDF [Pointers-RDF]. The objectives of this document are:

The primary audience of this document is developers of quality assurance and testing tools, such as accessibility checkers and markup validators. Additionally, we expect that EARL can support accessibility and usability advocates, metadata experts and Semantic Web practitioners, among others. We do not assume any previous knowledge of EARL, but introducing the reader to the intricacies of RDF is beyond the scope of this document; therefore, the following background knowledge is required:

Although the concepts of the Semantic Web are simple, their abstraction in RDF may be difficult for beginners. Readers are encouraged to study the aforementioned references carefully, along with other tutorials available on the Web. It should also be borne in mind that RDF is primarily intended to be machine-processable; therefore, some of its expressions are not very intuitive for developers who are used to working only with XML.

This document is organized as follows. Section 2 introduces the basic concepts of EARL, its target audiences and some use cases. Section 3 presents a step-by-step introduction to writing an EARL report. Section 4 presents some advanced uses of the vocabulary and explains how to extend it. Finally, Section 5 presents some conclusions and possible future work.

The Evaluation and Report Language (EARL) is a framework designed to express and compare test results. EARL builds on top of the Resource Description Framework [RDF], which is the basis for the Semantic Web. Like any RDF vocabulary, EARL is a collection of statements about resources, each with a subject, a predicate (or verb) and an object. These statements can be serialized in many ways (e.g., RDF/XML or Notation 3, also known as N3). A typical EARL report could contain the following statements (simplifying the notation and omitting namespaces):

```n3
<#someone> <#checks> <#resource> .
<#resource> <#fails> <#test> .
```
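Each statement above is a single subject-predicate-object triple. As a minimal Python sketch (not part of EARL itself), the simplified statements can be split into triples with a regular expression and mapped onto the four assertion properties that EARL defines (earl:assertedBy, earl:subject, earl:test and earl:result). The helper name parse_statements and the dictionary layout are hypothetical; a real tool would use a proper N3 or RDF parser.

```python
import re

# Matches simplified statements of the form "<#subject> <#predicate> <#object> ."
# (a hypothetical, much-reduced subset of N3 used only for illustration).
TRIPLE = re.compile(r"<([^>]*)>\s+<([^>]*)>\s+<([^>]*)>\s*\.")


def parse_statements(text):
    """Return the (subject, predicate, object) triples found in text."""
    return [match.groups() for match in TRIPLE.finditer(text)]


statements = "<#someone> <#checks> <#resource> . <#resource> <#fails> <#test> ."
triples = parse_statements(statements)

# Map the two statements onto the components of an EARL assertion.
checks = next(t for t in triples if t[1] == "#checks")
fails = next(t for t in triples if t[1] == "#fails")
assertion = {
    "assertedBy": checks[0],  # who performed the test
    "subject": checks[2],     # what was tested
    "test": fails[2],         # the criterion it was tested against
    "result": fails[1],       # the outcome of the test
}
print(assertion)
```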

From these two simple statements, the main components of an EARL report (wrapped up in an assertion) can already be inferred:

This structure shows the universal applicability of EARL and its ability to refer to any type of test: bug reports, software unit tests, test suite evaluations, conformance claims or even tests outside the world of software and the World Wide Web (although in such cases there might be open issues regarding its full applicability). The semantic nature of EARL must be stressed again: its purpose is to facilitate the extraction and comparison of test results by humans and especially by tools (the Semantic Web paradigm); it is not an application optimized for information storage, for which other XML applications might be more suitable.

Initially, EARL was conceived as a way to create, merge and compare Web accessibility reports from different sources (tools, experts, etc.). However, this original aim has since been expanded to cover wider testing scenarios. In summary, EARL enables:

The extensibility of RDF allows tool vendors and developers to add new functionality to the vocabulary without losing any of the aforementioned characteristics. Other testers can simply ignore the extensions that they do not understand when processing third-party results.
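The following Python sketch illustrates the consumer side of this idea under simplifying assumptions: it reads a single earl:TestResult written in the typed-node RDF/XML form used in this guide, keeps the properties from the RDF and EARL namespaces, and sets aside a property from a hypothetical extension namespace (http://example.org/ext#, with an invented ext:severity property) instead of failing. It uses only Python's standard xml.etree.ElementTree module, which does not understand RDF as such; a production tool would use a full RDF parser.

```python
import xml.etree.ElementTree as ET

RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
EARL = "http://www.w3.org/ns/earl#"
KNOWN_NAMESPACES = {RDF, EARL}

# A test result carrying one property from a hypothetical extension vocabulary.
REPORT = """<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:earl="http://www.w3.org/ns/earl#"
         xmlns:ext="http://example.org/ext#">
  <earl:TestResult rdf:ID="result">
    <earl:outcome rdf:resource="http://www.w3.org/ns/earl#Fail"/>
    <ext:severity>high</ext:severity>
  </earl:TestResult>
</rdf:RDF>"""


def namespace_of(tag):
    """ElementTree writes qualified names as "{namespace}localname"."""
    return tag[1:].split("}", 1)[0] if tag.startswith("{") else ""


def read_result(xml_text):
    """Split the properties of the first earl:TestResult into understood and ignored ones."""
    root = ET.fromstring(xml_text)
    result = root.find(f"{{{EARL}}}TestResult")
    understood, ignored = {}, []
    for prop in result:
        if namespace_of(prop.tag) in KNOWN_NAMESPACES:
            # Use the referenced resource if there is one, otherwise the literal text.
            understood[prop.tag] = prop.get(f"{{{RDF}}}resource") or prop.text
        else:
            ignored.append(prop.tag)
    return understood, ignored


understood, ignored = read_result(REPORT)
print(understood)
print(ignored)
```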

It is also important to consider potential security and privacy issues when using EARL. For instance, test results expressed in EARL could contain sensitive information such as the internal directory structure of a Web server, user name and password information, parts of restricted Web pages, or testing modalities. The scope of this document is limited to the use of the EARL vocabulary: security and privacy considerations need to be addressed at the application level. For example, access to certain parts of the data may be restricted to users with appropriate permissions, or the data may be encrypted or obfuscated.
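As one hypothetical application-level measure, a tool could strip user names and passwords from URLs before writing them into a report. The Python sketch below does this with the standard urllib.parse module; the function name redact_credentials is illustrative only, and other kinds of sensitive data (directory structures, fragments of restricted pages) would need their own policies.

```python
from urllib.parse import urlsplit, urlunsplit


def redact_credentials(url):
    """Return url without any user name or password in its authority part.

    Hypothetical helper: scheme, host, port, path, query and fragment are kept.
    Note that urlsplit lower-cases the host name.
    """
    parts = urlsplit(url)
    if parts.username is None and parts.password is None:
        return url  # nothing to redact
    host = parts.hostname or ""
    if ":" in host:
        host = f"[{host}]"  # IPv6 literals need their brackets back
    if parts.port is not None:
        host = f"{host}:{parts.port}"
    return urlunsplit((parts.scheme, host, parts.path, parts.query, parts.fragment))


print(redact_credentials("http://john:secret@example.org/private/index.html"))
```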

The keywords must, required, recommended, should, may, and optional in this document are used in accordance with RFC 2119 [RFC2119].

The applicability of EARL to different scenarios can be seen in use cases such as the following:

The list of use cases is not limited to the Web. EARL could also be applied to generic software quality assurance processes, where the only requirement is to map real objects, actors and processes to URIs. EARL is also flexible enough to meet the needs of the variety of audiences involved in testing or quality assurance processes. Typical profiles include:

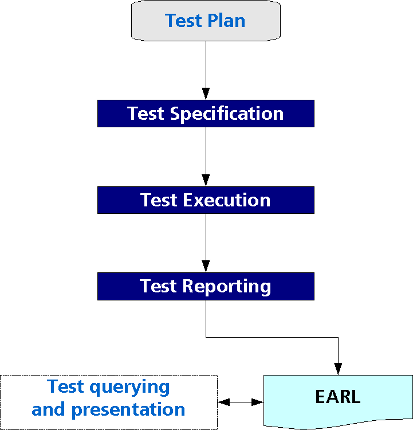

In software testing and quality assurance environments there are several typical steps that are followed. These are:

Figure 1 displays graphically the aforementioned elements:

Figure 1. Steps in software testing processes.

The previous steps can be mapped to existing standards such as IEEE 829 [IEEE-829], which defines a set of basic software test documents.

EARL is not a standalone vocabulary: it builds on top of several existing vocabularies that cover some of its metadata needs. This approach avoids re-creating well-established and tested vocabularies such as the Dublin Core elements. The referenced specifications are:

RDF can be serialized in different ways, but its XML representation [RDF/XML] is the preferred method and will be used throughout this document. However, even within this serialization there are many equivalent ways to express the same RDF model. The vocabularies that EARL reuses are referenced via namespaces in the corresponding RDF serialization. The list of normative namespaces can be found in the EARL 1.0 Schema.
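To illustrate what "equivalent" means here, the following Python sketch (standard library only) extracts rdf:type statements from two RDF/XML fragments: one uses the abbreviated typed-node form, the other the non-abbreviated rdf:Description form used later in Example 3.8. Both yield the same triple. The helper type_triples is hypothetical and understands only these two patterns, not RDF/XML in general.

```python
import xml.etree.ElementTree as ET

RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
EARL = "http://www.w3.org/ns/earl#"
PAGE = "http://example.org/resource/index.html"

ABBREVIATED = f"""<rdf:RDF xmlns:rdf="{RDF}" xmlns:earl="{EARL}">
  <earl:TestSubject rdf:about="{PAGE}"/>
</rdf:RDF>"""

NON_ABBREVIATED = f"""<rdf:RDF xmlns:rdf="{RDF}">
  <rdf:Description rdf:about="{PAGE}">
    <rdf:type rdf:resource="{EARL}TestSubject"/>
  </rdf:Description>
</rdf:RDF>"""


def type_triples(xml_text):
    """Collect (node, rdf:type, class) triples from the top-level node elements."""
    triples = set()
    for node in ET.fromstring(xml_text):
        about = node.get(f"{{{RDF}}}about")
        if node.tag != f"{{{RDF}}}Description":
            # Typed node element: namespace URI + local name gives the class URI.
            namespace, local_name = node.tag[1:].split("}", 1)
            triples.add((about, RDF + "type", namespace + local_name))
        for type_element in node.findall(f"{{{RDF}}}type"):
            triples.add((about, RDF + "type", type_element.get(f"{{{RDF}}}resource")))
    return triples


print(type_triples(ABBREVIATED) == type_triples(NON_ABBREVIATED))  # True
```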

In the following sections, we present a step-by-step introduction to EARL with several examples. As with any RDF vocabulary serialized in RDF/XML, the root element of an EARL report is an rdf:RDF element, on which we declare the namespaces used to define additional classes and/or properties.
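Tools often generate this root element programmatically rather than by hand. A minimal Python sketch with the standard xml.etree.ElementTree module follows. The earl, rdf and rdfs namespace URIs are those of Example 3.1 and dct is the one used in Example 3.4, while foaf is assumed to be the usual FOAF namespace (http://xmlns.com/foaf/0.1/); the helpers qname and new_report are hypothetical.

```python
import xml.etree.ElementTree as ET

# Prefixes and namespace URIs used in the examples of this guide.
NAMESPACES = {
    "earl": "http://www.w3.org/ns/earl#",
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "rdfs": "http://www.w3.org/2000/01/rdf-schema#",
    "dct": "http://purl.org/dc/terms/",
    "foaf": "http://xmlns.com/foaf/0.1/",  # assumed FOAF namespace
}

# Make ElementTree serialize these namespaces with the usual prefixes.
for prefix, uri in NAMESPACES.items():
    ET.register_namespace(prefix, uri)


def qname(prefix, local_name):
    """Build ElementTree's "{namespace}localname" form from a prefix."""
    return f"{{{NAMESPACES[prefix]}}}{local_name}"


def new_report():
    """Create the empty rdf:RDF root element of an EARL report."""
    return ET.Element(qname("rdf", "RDF"))


root = new_report()
ET.SubElement(root, qname("earl", "Assertion"), {qname("rdf", "ID"): "ass1"})
xml_text = ET.tostring(root, encoding="unicode")
print(xml_text)
```

Note that ElementTree only declares the namespaces actually used in the serialized tree, so unused prefixes such as rdfs do not appear in the output, unlike in Example 3.1.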

Example 3.1. The root element of an EARL report.

```xml
<rdf:RDF xmlns:earl="http://www.w3.org/ns/earl#"
         xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#">
  <!-- ... -->
</rdf:RDF>
```

Next, let us assume that we want to express in EARL the results of validating a given document as XHTML with the W3C HTML Validator. The tested document can be found at the fictitious URL http://example.org/resource/index.html and has the following HTML code:

Example 3.2. An XHTML document to be validated.

```html
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Strict//EN"
  "http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd">
<html lang="en" xmlns="http://www.w3.org/1999/xhtml" xml:lang="en">
  <head>
    <title>Example of project pages</title>
  </head>
  <body>
    <h1>Project description</h1>
    <h2>My project name</h2>
    <!-- ... -->
  </body>
</html>
```

This document has three errors that will constitute the basis of our EARL report:

- Line 14 column 7: document type does not allow element "li" here; missing one of "ul", "ol" start-tag.
- Line 15 column 6: end tag for "li" omitted, but OMITTAG NO was specified.
- Line 16 column 9: there is no attribute "alt".

The first step is to define who performed the test, either a human being or a software tool. In the EARL framework, this is called an Assertor. Let us consider different use cases. First, let us assume that only the W3C HTML Validator performed the test. As an Assertor, this could be expressed as follows:

Example 3.3. A generic tool as an Assertor.

```xml
<earl:Assertor rdf:about="http://validator.w3.org/about.html#">
  <dct:title xml:lang="en">W3C HTML Validator</dct:title>
  <dct:description xml:lang="en">W3C Markup Validation Service, a
    free service that checks Web documents in formats like HTML
    and XHTML for conformance to W3C Recommendations and other standards.
  </dct:description>
</earl:Assertor>
```

Notice how, in the Assertor class, EARL makes it possible to specify additional information by using standard Dublin Core properties such as dct:title and dct:description. This is not the only possible serialization of this report. An alternative, expressed in N3, could be:

Example 3.4. An Assertor expressed in N3 notation.

```n3
@prefix earl: <http://www.w3.org/ns/earl#> .
@prefix dct: <http://purl.org/dc/terms/> .

<http://validator.w3.org/about.html#>
    a earl:Assertor ;
    dct:description """W3C Markup Validation Service, a free service that checks
Web documents in formats like HTML and XHTML for conformance
to W3C Recommendations and other standards."""@en ;
    dct:title "W3C HTML Validator"@en .
```

An Assertor is a generic type. EARL allows the use of certain FOAF classes, such as Agent, Organisation or Person, to provide more semantic information about the type of assertor. Additionally, EARL defines the Software class to declare tool assertors. Thus, our W3C Validator could be described more adequately in the following way:

Example 3.5. A Software assertor.

```xml
<earl:Software rdf:about="http://validator.w3.org/about.html#">
  <dct:title xml:lang="en">W3C HTML Validator</dct:title>
  <dct:hasVersion>0.7.1</dct:hasVersion>
  <dct:description xml:lang="en">W3C Markup Validation
    Service, a free service that checks
    Web documents in formats like HTML and XHTML for conformance
    to W3C Recommendations and other standards.</dct:description>
</earl:Software>
```

Notice how we inserted a new property, dct:hasVersion, indicating the version of the software. Let us now consider the case where the assertor is a person. This can be expressed as in the following example:

Example 3.6. A Person as an EARL assertor.

```xml
<foaf:Person rdf:ID="john">
  <foaf:mbox rdf:resource="mailto:john@example.org"/>
  <foaf:name>John Doe</foaf:name>
</foaf:Person>
```

EARL also makes it possible to define combinations of assertors. The typical example is an expert evaluator and a software tool that perform the analysis together. Such a set of assertors can be expressed under the umbrella of a foaf:Group. The main assertor within a foaf:Group should be identified through the earl:mainAssertor property (notice in the example how the person is defined as a blank node):

Example 3.7. A foaf:Group (software tool and person) as an assertor.

```xml
<foaf:Group rdf:ID="assertor01">
  <dct:title>John Doe and the W3C HTML Validator</dct:title>
  <earl:mainAssertor rdf:resource="http://validator.w3.org/about.html#"/>
  <foaf:member>
    <foaf:Person>
      <foaf:mbox rdf:resource="mailto:john@example.org"/>
      <foaf:name>John Doe</foaf:name>
    </foaf:Person>
  </foaf:member>
</foaf:Group>
```

The second step is to define what was analyzed: the tested resource. For that, EARL defines the TestSubject class. This class is a generic wrapper for things to be tested, such as Web resources (cnt:Content) or software (earl:Software). In this case, the document of Example 3.2 could be represented as:

Example 3.8. A TestSubject with some Dublin Core properties (non-abbreviated RDF/XML serialization).

```xml
<rdf:Description rdf:about="http://example.org/resource/index.html">
  <dct:title xml:lang="en">Project Description</dct:title>
  <dct:date rdf:datatype="http://www.w3.org/2001/XMLSchema#date">2006-02-14</dct:date>
  <rdf:type rdf:resource="http://www.w3.org/ns/earl#TestSubject"/>
</rdf:Description>
```

Using the Representing Content in RDF vocabulary [Content-RDF] (via the cnt:TextContent class), we could insert the content of the tested XHTML file into the report:

Example 3.9. A test subject expressed as cnt:TextContent (notice that the special XML characters have been escaped, because the document is not well-formed and therefore cannot be expressed as an XML Literal).

```xml
<cnt:TextContent rdf:about="http://example.org/resource/index.html">
  <dct:title xml:lang="en">Project Description</dct:title>
  <dct:date rdf:datatype="http://www.w3.org/2001/XMLSchema#date"
    >2006-02-14</dct:date>
  <cnt:characterEncoding>UTF-8</cnt:characterEncoding>
  <cnt:chars>&lt;?xml version="1.0" encoding="UTF-8"?&gt;
&lt;!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Strict//EN"
  "http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd"&gt;
&lt;html lang="en" xmlns="http://www.w3.org/1999/xhtml" xml:lang="en"&gt;
  &lt;head&gt;
    &lt;title&gt;Example of project pages&lt;/title&gt;
  &lt;/head&gt;
  &lt;body&gt;
    &lt;h1&gt;Project description&lt;/h1&gt;
    &lt;h2&gt;My project name&lt;/h2&gt;
    &lt;p&gt;The strategic goal of this project is to make you understand EARL.&lt;/p&gt;
    &lt;ul&gt;
      &lt;li&gt;Here comes objective 1.
      &lt;li&gt;Here comes objective 2.&lt;/li&gt;
    &lt;/ul&gt;
    &lt;p alt="what?"&gt;And goodbye ...&lt;/p&gt;
  &lt;/body&gt;
&lt;/html&gt;
</cnt:chars>
</cnt:TextContent>
```

The third step is to define the criterion used for testing the resource. EARL defines test criteria under the umbrella of the TestCriterion class. This class has two subclasses, TestRequirement and TestCase, depending on whether the criterion is a high-level requirement composed of many tests or an atomic test case. In our example, we are testing validity against the XHTML 1.0 Strict specification, which could be expressed in the following way via the TestRequirement class:

Example 3.10. A TestRequirement with some Dublin Core properties.

```xml
<earl:TestRequirement rdf:about="http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd">
  <dct:title xml:lang="en">XHTML 1.0 Strict Document Type Definition</dct:title>
  <dct:description xml:lang="en">DTD for XHTML 1.0 Strict.</dct:description>
</earl:TestRequirement>
```

The fourth step is to specify the results of the test. The W3C Validator discovered three errors that need to be presented as TestResults. In this case, we present only the errors, but EARL can also express positive results, as we will see later. In the example below, we present the error messages as text within XHTML snippets. We will see later how to improve the machine-readability of such results.
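A tool would typically generate such results from its own messages. The Python sketch below builds one earl:TestResult with outcome earl:Fail per validator message, using only the standard library. For simplicity, it stores each message as a plain language-tagged dct:description literal rather than the XHTML XML Literal used in the next example, and the helper name add_test_result is hypothetical.

```python
import xml.etree.ElementTree as ET

RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
EARL = "http://www.w3.org/ns/earl#"
DCT = "http://purl.org/dc/terms/"
XML = "http://www.w3.org/XML/1998/namespace"  # namespace of xml:lang

for prefix, uri in {"rdf": RDF, "earl": EARL, "dct": DCT}.items():
    ET.register_namespace(prefix, uri)

# The three messages reported by the validator for the example document.
MESSAGES = [
    ("error1", 'Line 14 column 7: document type does not allow element "li" here; '
               'missing one of "ul", "ol" start-tag.'),
    ("error2", 'Line 15 column 6: end tag for "li" omitted, but OMITTAG NO was specified.'),
    ("error3", 'Line 16 column 9: there is no attribute "alt".'),
]


def add_test_result(parent, result_id, message, outcome="Fail"):
    """Append an earl:TestResult with a description and an outcome such as Pass or Fail."""
    result = ET.SubElement(parent, f"{{{EARL}}}TestResult", {f"{{{RDF}}}ID": result_id})
    description = ET.SubElement(result, f"{{{DCT}}}description", {f"{{{XML}}}lang": "en"})
    description.text = message
    ET.SubElement(result, f"{{{EARL}}}outcome", {f"{{{RDF}}}resource": EARL + outcome})
    return result


root = ET.Element(f"{{{RDF}}}RDF")
for result_id, message in MESSAGES:
    add_test_result(root, result_id, message)
xml_text = ET.tostring(root, encoding="unicode")
print(xml_text)
```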

Example 3.11. Results of the tests with the validator.

```xml
<earl:TestResult rdf:ID="error1">
  <dct:description rdf:parseType="Literal">
    <div xml:lang="en" xmlns="http://www.w3.org/1999/xhtml">
      <p>Error - Line 14 column 7: document type does not allow element
        <code>li</code> here; missing one of
        <code>ul</code>, <code>ol</code> start-tag.</p>
    </div>
  </dct:description>
  <earl:outcome rdf:resource="http://www.w3.org/ns/earl#Fail" />
</earl:TestResult>

<earl:TestResult rdf:ID="error2">
  <dct:description rdf:parseType="Literal">
    <div xml:lang="en" xmlns="http://www.w3.org/1999/xhtml">
      <p>Error - Line 15 column 6: end tag for
        <code>li</code> omitted, but OMITTAG NO was specified.</p>
    </div>
  </dct:description>
  <earl:outcome rdf:resource="http://www.w3.org/ns/earl#Fail" />
</earl:TestResult>

<earl:TestResult rdf:ID="error3">
  <dct:description rdf:parseType="Literal">
    <div xml:lang="en" xmlns="http://www.w3.org/1999/xhtml">
      <p>Error - Line 16 column 9: there is no attribute
        <code>alt</code>.</p>
    </div>
  </dct:description>
  <earl:outcome rdf:resource="http://www.w3.org/ns/earl#Fail" />
</earl:TestResult>
```

The final step is to bring the created components together. The EARL statements for this purpose are called Assertions, and they have four key properties: earl:assertedBy, earl:subject, earl:test and earl:result, which point to the corresponding assertor, test subject, test criterion and result, respectively. From our previous examples, we can build our first complete report with three assertions:

Example 3.12. Assertions combining the results of the tests with the validator.

```xml
<earl:Assertion rdf:ID="ass1">
  <earl:result>
    <earl:TestResult rdf:about="#error1" />
  </earl:result>
  <earl:test>
    <earl:TestRequirement
      rdf:about="http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd" />
  </earl:test>
  <earl:subject>
    <cnt:TextContent rdf:about="http://example.org/resource/index.html" />
  </earl:subject>
  <earl:assertedBy>
    <foaf:Group rdf:about="#assertor01" />
  </earl:assertedBy>
</earl:Assertion>

<earl:Assertion rdf:ID="ass2">
  <earl:result>
    <earl:TestResult rdf:about="#error2" />
  </earl:result>
  <earl:test>
    <earl:TestRequirement
      rdf:about="http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd" />
  </earl:test>
  <earl:subject>
    <cnt:TextContent rdf:about="http://example.org/resource/index.html" />
  </earl:subject>
  <earl:assertedBy>
    <foaf:Group rdf:about="#assertor01" />
  </earl:assertedBy>
</earl:Assertion>

<earl:Assertion rdf:ID="ass3">
  <earl:result>
    <earl:TestResult rdf:about="#error3" />
  </earl:result>
  <earl:test>
    <earl:TestRequirement
      rdf:about="http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd" />
  </earl:test>
  <earl:subject>
    <cnt:TextContent rdf:about="http://example.org/resource/index.html" />
  </earl:subject>
  <earl:assertedBy>
    <foaf:Group rdf:about="#assertor01" />
  </earl:assertedBy>
</earl:Assertion>
```

Our next example presents the results of an accessibility test on a given Web resource. Let us consider a simple XHTML page that presents the image of a cat:

Example 3.13. An XHTML document to be tested for accessibility.

```html
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Strict//EN"
  "http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd">
<html lang="en" xmlns="http://www.w3.org/1999/xhtml" xml:lang="en">
  <head>
    <title>A cat's photography</title>
  </head>
  <body>
    <h1>A cat's photography</h1>
    <p>Image of a cat who likes
      <acronym title="Evaluation and Report Language">EARL</acronym>, although it
      seems quite tired.
      <img src="../images/cat.jpg" alt="Image of a white cat with black spots."/>
    </p>
  </body>
</html>
```

In this case, we have a software tool called «Cool Tool» that performs a test against Common Failure F65 from the (X)HTML techniques for WCAG 2.0 [WCAG20]. This failure checks for the presence of the alt attribute on (X)HTML elements such as img. The software can be represented as:

Example 3.14. A Software assertor.

```xml
<earl:Software rdf:about="http://example.org/cooltool/">
  <dct:title xml:lang="en">Cool Tool accessibility checker</dct:title>
  <dct:hasVersion>1.0.c</dct:hasVersion>
  <dct:description xml:lang="en">A reliable compliance checker for
    Web Accessibility</dct:description>
</earl:Software>
```

The test criterion, in this case an atomic TestCase, can be represented as:

Example 3.15. A TestCase for a WCAG 2.0 technique.

```xml
<earl:TestCase rdf:about="http://www.w3.org/TR/2008/NOTE-WCAG20-TECHS-20081211/F65">
  <dct:title xml:lang="en">Failure of Success Criterion 1.1.1 from
    WCAG 2.0</dct:title>
  <dct:description xml:lang="en">Failure due to omitting the
    alt attribute on img elements, area elements, and input elements of type
    image.</dct:description>
</earl:TestCase>
```

We now make the test result more detailed and more amenable to machine processing by using the Pointer Methods in RDF vocabulary [Pointers-RDF]. In this case, we identify the line number where the test passed:

Example 3.16. A TestResult with a pointer.

```xml
<ptr:LineCharPointer rdf:ID="pointer">
  <ptr:lineNumber>15</ptr:lineNumber>
  <ptr:reference rdf:resource="http://example.org/resource/index.html" />
</ptr:LineCharPointer>

<earl:TestResult rdf:ID="result">
  <earl:pointer rdf:resource="#pointer" />
  <earl:outcome rdf:resource="http://www.w3.org/ns/earl#Pass" />
</earl:TestResult>
```

This leads to the following assertion:

Example 3.17. Accessibility Assertion.

```xml
<earl:Assertion rdf:ID="assert">
  <earl:result rdf:resource="#result" />
  <earl:test rdf:resource="http://www.w3.org/TR/2008/NOTE-WCAG20-TECHS-20081211/F65" />
  <earl:subject rdf:resource="http://example.org/resource/index.html" />
  <earl:assertedBy rdf:resource="http://example.org/cooltool/" />
</earl:Assertion>
```

[Editor's note: Here comes an example with content negotiation.]

[Editor's note: To be added: EARL extension; aggregation of reports; ...]
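The following Python sketch shows how a checker such as the hypothetical Cool Tool might emit the pointer, test result and assertion of Examples 3.16 and 3.17 together, using the standard xml.etree.ElementTree module. The ptr namespace URI is an assumption based on the Pointer Methods in RDF drafts and should be checked against [Pointers-RDF]; the functions add_reference and accessibility_report and their parameters are illustrative.

```python
import xml.etree.ElementTree as ET

RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
EARL = "http://www.w3.org/ns/earl#"
PTR = "http://www.w3.org/2009/pointers#"  # assumed namespace; check [Pointers-RDF]

for prefix, uri in {"rdf": RDF, "earl": EARL, "ptr": PTR}.items():
    ET.register_namespace(prefix, uri)


def add_reference(parent, tag, uri):
    """Add a property element that refers to another resource via rdf:resource."""
    return ET.SubElement(parent, tag, {f"{{{RDF}}}resource": uri})


def accessibility_report(page, line, tool, test_case, outcome):
    """Build a pointer, a test result and an assertion like Examples 3.16 and 3.17."""
    root = ET.Element(f"{{{RDF}}}RDF")

    pointer = ET.SubElement(root, f"{{{PTR}}}LineCharPointer", {f"{{{RDF}}}ID": "pointer"})
    ET.SubElement(pointer, f"{{{PTR}}}lineNumber").text = str(line)
    add_reference(pointer, f"{{{PTR}}}reference", page)

    result = ET.SubElement(root, f"{{{EARL}}}TestResult", {f"{{{RDF}}}ID": "result"})
    add_reference(result, f"{{{EARL}}}pointer", "#pointer")
    add_reference(result, f"{{{EARL}}}outcome", EARL + outcome)

    assertion = ET.SubElement(root, f"{{{EARL}}}Assertion", {f"{{{RDF}}}ID": "assert"})
    add_reference(assertion, f"{{{EARL}}}result", "#result")
    add_reference(assertion, f"{{{EARL}}}test", test_case)
    add_reference(assertion, f"{{{EARL}}}subject", page)
    add_reference(assertion, f"{{{EARL}}}assertedBy", tool)
    return root


report = accessibility_report(
    page="http://example.org/resource/index.html",
    line=15,
    tool="http://example.org/cooltool/",
    test_case="http://www.w3.org/TR/2008/NOTE-WCAG20-TECHS-20081211/F65",
    outcome="Pass",
)
xml_text = ET.tostring(report, encoding="unicode")
print(xml_text)
```

Referencing the result and pointer by fragment identifiers (#result, #pointer), as in Example 3.17, keeps each node defined once in the report.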

This guide has presented an overview of the Evaluation and Report Language (EARL). As mentioned in the introduction, EARL must be seen as a generic framework that can facilitate the creation and exchange of test reports. Its strength lies in this generality: it can be applied to multiple scenarios and use cases, which may even lie outside the world of software development and compliance testing.

The EARL framework also allows results to be merged and aggregated in a semantic manner, thus enabling different testing actors to share and improve results.
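Because EARL reports are RDF graphs, merging reports that identify assertions, results, tests and subjects by URI amounts to taking the union of their statements (reports that use blank nodes would additionally need them renamed apart). The Python sketch below models two hypothetical reports from different assertors as sets of (subject, predicate, object) triples, merges them, and groups the outcomes per test subject and test, which exposes disagreements between assertors. The report and assertor URIs and the helper names are invented for illustration; a real tool would use an RDF library.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
EARL = "http://www.w3.org/ns/earl#"
PAGE = "http://example.org/resource/index.html"
F65 = "http://www.w3.org/TR/2008/NOTE-WCAG20-TECHS-20081211/F65"


def report(base, assertor, outcome):
    """Build a hypothetical one-assertion report as a set of triples."""
    assertion, result = base + "#assertion", base + "#result"
    return {
        (assertion, RDF_TYPE, EARL + "Assertion"),
        (assertion, EARL + "assertedBy", assertor),
        (assertion, EARL + "subject", PAGE),
        (assertion, EARL + "test", F65),
        (assertion, EARL + "result", result),
        (result, EARL + "outcome", EARL + outcome),
    }


def merge(*graphs):
    """Merge graphs that share URIs (and contain no blank nodes) by set union."""
    merged = set()
    for graph in graphs:
        merged |= graph
    return merged


def value(graph, node, predicate):
    """Return the object of (node, predicate, ?); assumes exactly one such triple."""
    (found,) = {o for s, p, o in graph if s == node and p == predicate}
    return found


def outcomes_by_test(graph):
    """Group outcomes by (test subject, test) across all assertions in graph."""
    summary = {}
    for node, predicate, obj in graph:
        if predicate == RDF_TYPE and obj == EARL + "Assertion":
            key = (value(graph, node, EARL + "subject"), value(graph, node, EARL + "test"))
            outcome = value(graph, value(graph, node, EARL + "result"), EARL + "outcome")
            summary.setdefault(key, set()).add(outcome)
    return summary


tool_report = report("http://example.org/reports/cooltool", "http://example.org/cooltool/", "Pass")
expert_report = report("http://example.org/reports/john", "http://example.org/people/john#me", "Fail")
merged = merge(tool_report, expert_report)
print(outcomes_by_test(merged))
```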

There may be scenarios whose underlying complexity EARL cannot fully capture. However, because EARL is based on RDF, it can be extended with proprietary RDF vocabularies without endangering the interoperability of the reports.

The Working Group looks forward to receiving feedback on the current version of the schema, and welcomes issues and suggestions for improvement from implementers of compliance tools.

- http://dublincore.org/schemas/rdfs/
- http://xmlns.com/foaf/spec/
- http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=52142
- http://www.niso.org/standards/z39-85-2007/
- http://www.w3.org/TR/1999/REC-rdf-syntax-19990222/
- http://www.w3.org/TR/rdf-primer/
- http://www.w3.org/TR/rdf-schema/
- http://www.w3.org/TR/rdf-syntax-grammar/
- http://www.w3.org/DesignIssues/RDF-XML
- http://www.ietf.org/rfc/rfc2119.txt
- http://www.ietf.org/rfc/rfc5013.txt
- http://www.w3.org/TR/owl-features/
- http://www.w3.org/TR/WCAG10/
- http://www.w3.org/TR/WCAG20/

The following is a list of changes with respect to the previous internal version:

Shadi Abou-Zahra, Carlos Iglesias, Michael A Squillace, Johannes Koch and Carlos A Velasco.