Registration & Discovery of Multimodal Modality Components in Multimodal Systems: Use Cases and Requirements

W3C Working Group Note 5 July 2012

- This version:

- http://www.w3.org/TR/2012/NOTE-mmi-discovery-20120705/

- Latest published version:

- http://www.w3.org/TR/mmi-discovery/

- Previous version:

- none

- Editor:

- B. Helena Rodriguez, Institut Telecom

- Authors:

- Piotr Wiechno, France Telecom

- Deborah Dahl, W3C Invited Expert

- Kazuyuki Ashimura, W3C

- Raj Tumuluri, Openstream, Inc.

Copyright © 2012 W3C® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark and document use rules apply.

Abstract

This document is addressed to people who want to develop Modality Components for Applications that communicate with the user through different modalities such as voice, gesture, or handwriting, and/or to distribute them through a multimodal system using multi-biometric elements, multimodal interfaces or multi-sensor recognizers over a local network or "in the cloud". With this goal, this document collects a number of use cases, together with their goals and requirements, for describing, publishing, discovering, registering and subscribing to Modality Components in a system implemented according to the Multimodal Architecture Specification. In this way, Modality Components can be used by automated tools to power advanced Services such as more accurate searches based on modality, behavior recognition for better interaction with intelligent software agents, and enhanced knowledge management achieved by capturing and producing emotional data.

Status of This Document

This section describes the status of this document at the time of its publication. Other documents may supersede this document. A list of current W3C publications and the latest revision of this technical report can be found in the W3C technical reports index at http://www.w3.org/TR/.

This is the 5 July 2012 W3C Working Group Note of "Registration & Discovery of Multimodal Modality Components in Multimodal Systems: Use Cases and Requirements".

This W3C Working Group Note has been developed by the Multimodal Interaction Working Group of the W3C Multimodal Interaction Activity.

This document was published by the Multimodal Interaction Working Group as a Working Group Note. If you wish to make comments regarding this document, please send them to www-multimodal@w3.org. All comments are welcome and should have a subject starting with the prefix '[dis]'.

Publication as a Working Group Note does not imply endorsement by the W3C Membership. This is a draft document and may be updated, replaced or obsoleted by other documents at any time. It is inappropriate to cite this document as other than work in progress.

This document was produced by a group operating under the 5 February 2004 W3C Patent Policy. W3C maintains a public list of any patent disclosures made in connection with the deliverables of the group; that page also includes instructions for disclosing a patent. An individual who has actual knowledge of a patent which the individual believes contains Essential Claim(s) must disclose the information in accordance with section 6 of the W3C Patent Policy.


1. Introduction

User interaction with Internet Applications on mobile phones, personal computers, tablets or other electronic Devices is moving towards a multi-mode environment in which important parts of the interaction are supported in multiple ways. This heterogeneity is driven by Applications that compete to enrich the user experience in accessing all kinds of services. Increasingly, Applications need interaction variety, which has been proven to provide numerous concurrent advantages. At the same time, it brings new challenges in multimodal integration, which is often difficult to handle in a context with multiple networks and input/output resources.

Today, users, vendors, operators and broadcasters can produce and use all kinds of Media and Devices capable of supporting multiple modes of input or output. In this context, tools for authoring, editing or distributing Media are well documented for Application developers. Mature proprietary and open-source tools and Services that handle, capture, present, play, annotate or recognize Media in multiple modes are available. Nevertheless, there is a lack of powerful tools or practices for a richer integration and semantic synchronization of all these media.

To the best of our knowledge, there is no standardized way to build a web Application that can dynamically combine and control discovered modalities by querying a registry based on user-experience data and modality states. This document describes design requirements that the Multimodal Architecture and Interfaces specification needs to cover in order to address this problem.

2. Domain Vocabulary

- Interaction Context

- For the purposes of this document, an Interaction Context represents a single exchange between a system and one or multiple users across one or multiple interaction modes, and covers the longest period of communication over which it would make sense for components to keep the information available. It can be as simple as a single period of audiovisual content (e.g. a program), a phone call or a web session. But it can also be a richer interaction combining, for example, voice, gesture and direct interaction with a light pointer, or a shared whiteboard with an associated VoIP call that evolves into a text chat during the interaction. In these cases, a single context persists across various modality configurations. For more details, see the multimodal context in the MMI Architecture.

- Multimodal System

- For the purposes of this document, a Multimodal System is any system communicating with the user through different modalities such as voice, gesture, or handwriting in the same interaction cycle, identified by a unique context. In a Multimodal System, the Application or the end user can dynamically switch modalities in the same context of information exchange. This is a bidirectional system with combined inputs and outputs in multiple sensorial modes (e.g. visual, acoustic, haptic, olfactory, gustatory) and modalities (e.g. voice, gesture, handwriting, biometric capture, temperature sensing). It is also a system in which input and output data can be integrated [See Fusion] or dissociated [See Fission] in order to identify the meaning of the user's behavior, or to compose a more adapted, relevant and pertinent response using multiple media, modes and modalities. [See a Multimodal System Example]

A Multimodal System can be any Infrastructure (or any IaaS), any Platform (or any PaaS) or any Software (or any SaaS) implementing human-centered multimodal communication. For more information: [See the basic component's Description for a Multimodal Interaction Framework]

- Modality Component

- For the purposes of this document, a modality is a term that covers the way an idea could be communicated or the manner in which an action could be performed. In some mobile multimodal systems, for example, the primary modality is speech and an additional modality is typically gesture, gaze, sketch, or any combination thereof. These are forms of representing information in a known and recognizable logical structure. For example, acoustic data can be expressed as a musical sound modality (e.g. a human singing) or as a speech modality (e.g. a human talking). Following this idea, in this document a Modality Component is a logical entity that handles the input and output of different hardware Devices (e.g. microphone, graphic tablet, keyboard) or software Services (e.g. motion detection, biometric changes) associated with the Multimodal System. Modality Components are responsible for specific tasks, including handling inputs and outputs in various ways, such as speech, writing and video. Modality Components are also loosely coupled software modules that may be either co-resident on a device or distributed across a network.

For example, a single Modality Component can be in charge of both speech recognition and sound input management (i.e. an advanced signal processing task such as source separation). Another Modality Component can manage complementary command inputs on two different devices: a graphics tablet and a microphone. Two Modality Components can separately manage two complementary inputs given by a single device: a camcorder. Finally, a Modality Component can use an external recognition web service and be responsible only for controlling the communication exchanges needed for the recognition task. [See: this Modality Component Example]

In all four cases, the system has a generic Modality Component for the detection of a voice command input, despite the differences in implementation. Any Modality Component can potentially wrap multiple features provided by multiple physical Devices, but more than one Modality Component can also be included in a single device. In this sense, the Modality Component is an abstraction of the same kind of input, handled and implemented differently in each case. For more information: [See the Multimodal Interaction Framework input/output Description]
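As a minimal sketch of this abstraction, assuming hypothetical class names (`ModalityComponent`, `LocalSpeechMC`, `RemoteSpeechMC`) that are not defined by the MMI Architecture, the following code hides two very different voice-command implementations behind one interface. The recognizers are stand-in callables rather than real speech engines or web services.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict


class ModalityComponent(ABC):
    """Hypothetical common interface seen by the rest of the system."""

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def recognize(self, audio: bytes) -> str:
        """Turn captured input into a command string."""


class LocalSpeechMC(ModalityComponent):
    """Wraps an on-device recognizer (a stand-in callable here)."""

    def __init__(self, name: str, engine: Callable[[bytes], str]) -> None:
        super().__init__(name)
        self.engine = engine

    def recognize(self, audio: bytes) -> str:
        return self.engine(audio)


class RemoteSpeechMC(ModalityComponent):
    """Only controls the exchange with an external recognition service."""

    def __init__(self, name: str, send_request: Callable[[bytes], Dict[str, str]]) -> None:
        super().__init__(name)
        self.send_request = send_request  # an HTTP client call in a real system

    def recognize(self, audio: bytes) -> str:
        return self.send_request(audio)["text"]


def handle_voice_command(mc: ModalityComponent, audio: bytes) -> str:
    # The caller does not know which implementation sits behind the interface.
    return f"{mc.name}: {mc.recognize(audio)}"


local = LocalSpeechMC("local-asr", lambda audio: "lights on")
remote = RemoteSpeechMC("cloud-asr", lambda audio: {"text": "lights on"})
print(handle_voice_command(local, b"\x00\x01"))   # local-asr: lights on
print(handle_voice_command(remote, b"\x00\x01"))  # cloud-asr: lights on
```

Because the caller depends only on the interface, a recognizer found through discovery can replace another one without changing the calling code.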

- Interaction Manager

- For the purposes of this document, the Interaction Manager is also a logical component, handling multimodal integration and composition. It is responsible for all message exchanges between the components of the Multimodal System and the hosting runtime framework [See: Architecture Components]. It is both a communication bus and an event handler. Each Application can configure at least one Interaction Manager to define the required interaction logic. This controller is at the core of all multimodal interaction:

- It manages the specific behaviors triggered by the events exchanged between the various input and output components.

- It manages the communication between the modules and the client application.

- It ensures consistency between multiple inputs and outputs and provides a general perception of the application's current status.

- It is responsible for data synchronization.

- It is responsible for focus management.

- It manages communication with any other entity outside the system.

For more information: [See the Multimodal Interaction Framework Description of an Interaction Manager]
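The bus-and-event-handler role of the Interaction Manager can be sketched as a small publish/subscribe router that also tracks focus. The event name `DoneNotification` is borrowed from the MMI Architecture life-cycle events, but the dictionary payload, the `InteractionManager` class and its methods are hypothetical simplifications, not the MMI event format.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Optional

Handler = Callable[[dict], None]


class InteractionManager:
    """Hypothetical minimal Interaction Manager: an event bus plus focus tracking."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self.focus: Optional[str] = None  # component currently holding focus
        self.log: List[str] = []          # trace of routed events

    def subscribe(self, event_name: str, handler: Handler) -> None:
        """Register a component callback for one kind of event."""
        self._handlers[event_name].append(handler)

    def dispatch(self, event_name: str, payload: dict) -> int:
        """Route an event to every subscriber; return how many received it."""
        self.log.append(event_name)
        handlers = self._handlers.get(event_name, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)

    def set_focus(self, component: str) -> None:
        self.focus = component


# Example: a display component reacts to a result reported by a speech component.
im = InteractionManager()
shown: List[str] = []
im.subscribe("DoneNotification", lambda p: shown.append(p["result"]))
im.set_focus("speech-mc")
im.dispatch("DoneNotification", {"source": "speech-mc", "result": "play music"})
print(shown)  # ['play music']
```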

- Data Component

- For the purposes of this document, a Data Component is a logical entity that stores the public and private data of any module in a Multimodal System. The Data Component's primary role is to save the public data that may be required by one or several Modality Components or by other modules (e.g. a session component in the hosting framework). The Data Component can be an internal or an external module [See: Example], depending on the implementation chosen by each application. However, the Interaction Manager is the only module that has direct access to the Data Component: only the Interaction Manager can view and edit the data and communicate with external servers if necessary. As a result, Modality Components must use an Interaction Manager as a mediator to access any private or public data in an implementation following the nested-dolls principle of the MMI Architecture Specification [See: MMI Recursion].

For the storage of private data, each Modality Component can implement its own Data Component. This private Data Component accesses external servers and keeps the data that the Modality Component may require, for example, in the speech recognition task. For more information: [See the Multimodal Interaction Framework Description of a Session Component]
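The mediation rule above can be illustrated with a sketch in which only the Interaction Manager holds a reference to the Data Component, and a Modality Component can read public data or its own private data, never another component's private data. All names are hypothetical; for simplicity, private entries are kept in the same store, keyed by owner, rather than in a separate per-component Data Component, and persistence and external servers are left out.

```python
from typing import Any, Dict, Optional, Tuple


class DataComponent:
    """Hypothetical store: public entries plus private entries keyed by owner."""

    def __init__(self) -> None:
        self._public: Dict[str, Any] = {}
        self._private: Dict[Tuple[str, str], Any] = {}

    def read(self, key: str, owner: Optional[str] = None) -> Optional[Any]:
        if owner is None:
            return self._public.get(key)
        return self._private.get((owner, key))

    def write(self, key: str, value: Any, owner: Optional[str] = None) -> None:
        if owner is None:
            self._public[key] = value
        else:
            self._private[(owner, key)] = value


class InteractionManager:
    """The only module holding a reference to the Data Component."""

    def __init__(self, data: DataComponent) -> None:
        self._data = data

    def request_data(self, requester: str, key: str, private: bool = False) -> Optional[Any]:
        # A component may read public data, or only its own private data.
        return self._data.read(key, owner=requester if private else None)


class ModalityComponent:
    def __init__(self, name: str, im: InteractionManager) -> None:
        self.name = name
        self._im = im  # deliberately no reference to the Data Component

    def get(self, key: str, private: bool = False) -> Optional[Any]:
        return self._im.request_data(self.name, key, private)


# Setup: the store is seeded directly here only to keep the example short.
data = DataComponent()
im = InteractionManager(data)
data.write("user.language", "fr-FR")
data.write("asr.profile", "speaker-model-7", owner="speech-mc")
asr = ModalityComponent("speech-mc", im)
gesture = ModalityComponent("gesture-mc", im)
print(asr.get("user.language"))                  # fr-FR
print(asr.get("asr.profile", private=True))      # speaker-model-7
print(gesture.get("asr.profile", private=True))  # None
```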

- Application

- For the purposes of this document, the term Application refers to a collection of events, components and resources that use server-side or client-side processing and the Multimodal Architecture Specification to provide sensorial, cognitive and emotional information [See: Emotion Use Cases] through a rich multimodal user experience. For example, a multimodal Application can be implemented to use, in an integrated way, mobile Devices and cell phones, home appliances, Internet of Things objects, television and home networks, enterprise applications, web applications, "smart" cars or medical devices.

- Service

- For the purposes of this document, a Service is a set of functionalities associated with a process or system that performs a task and is wrapped in a Modality Component abstraction. While most industrial approaches to service discovery treat hardware Devices as networked Services, and most web service discovery approaches consider a Service to be a software component performing a specific functionality, our definition covers both views and focuses on the Multimodal Component abstraction. Thus, a multimodal Service is any functionality wrapped in a Multimodal Component (e.g. a Modality Component or the Interaction Manager) that uses one or multiple devices, device services, Device APIs or web services. For example, in the case of a Modality Component, a Service is a functionality provided to handle input or output interaction in one or more devices, sensors, effectors, players (e.g. for virtual reality Media display), on-demand SaaS Recognizers (e.g. for natural language recognition) and on-demand SaaS User Interface Widgets (e.g. for geolocation display).

For the purposes of this document, we use the term Service Description for a set of attributes (metadata) describing a particular service. The term Service Advertisement refers to the publication of both the metadata and the Service itself, by indexing the Service in some registry and making the Service available to client requests.
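A Service Description and its Advertisement can be sketched as a metadata record indexed in a registry that clients query by attribute. The attribute names (`modality`, `direction`, `location`), the endpoints and the in-memory `Registry` class are hypothetical; this document does not prescribe a particular data model.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ServiceDescription:
    """Hypothetical metadata record describing one Service."""
    service_id: str
    endpoint: str
    attributes: Dict[str, str] = field(default_factory=dict)


class Registry:
    """In-memory index standing in for a real service registry."""

    def __init__(self) -> None:
        self._entries: Dict[str, ServiceDescription] = {}

    def advertise(self, description: ServiceDescription) -> None:
        # Advertisement: index the description so client requests can find it.
        self._entries[description.service_id] = description

    def query(self, **wanted: str) -> List[ServiceDescription]:
        """Return every Service whose attributes match all requested values."""
        return [
            d for d in self._entries.values()
            if all(d.attributes.get(k) == v for k, v in wanted.items())
        ]


registry = Registry()
registry.advertise(ServiceDescription(
    "tv-touch", "http://tv.local/mc",
    {"modality": "touch", "direction": "input", "location": "living-room"}))
registry.advertise(ServiceDescription(
    "kitchen-mic", "http://hub.local/asr",
    {"modality": "speech", "direction": "input", "location": "kitchen"}))
print([d.service_id for d in registry.query(modality="speech")])  # ['kitchen-mic']
```

Matching on every requested attribute keeps the query semantics simple; a real registry would likely also need ranges, wildcards and a shared vocabulary for attribute values.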

- Device

- For the purposes of this document, a Device is any hardware resource wrapped in a Modality Component. The MMI Architecture does not describe how Modality Components are allocated to hardware devices; this depends on the implementation. A Device can act as an input sensor: for example, cameras, haptic devices, microphones, biometric devices, keyboards, mice and writing tablets are devices that provide input Services wrapped in one or many Modality Components. A Device can also act as an output effector: displays, speakers, pressure applicators, projectors, Media players, vibrating Devices and even some sensor Devices (galvanic skin devices, switches, motion platforms) can provide effector Services wrapped in one or many Modality Components implemented in the Multimodal System.

- Media

- For the purposes of this document, multimodal Media are resources that depend on the semantics of the message, the user/author goals and the capabilities of the support itself. Multimodal Media adhere to a certain content mode (e.g. visual) and modality (e.g. animated image) and can be a document, a stream, a set of data or any other conventional logical entity that represents the information communicated through devices, services and networks. In a Multimodal System, Media can be played, displayed, recognized, touched, scented, heard, shaken, pushed, shared, adapted, fused, composed, etc., and annotated to enhance integration, recognition, composition and interpretation [See the use cases for MultiModal Media Annotation with EMMA].

- Fusion

- For the purposes of this document, the multimodal Fusion mechanism is the integration of data from multiple Modality Components to produce specific and comprehensive unified information about the interaction. The goal of multimodal Fusion is to integrate the data coming from a set of input Modality Components, or to integrate data into the actions to be executed by a set of output Modality Components.
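One common way to realize input Fusion is time-window late fusion: a spoken command containing deictic words ("put that there") is combined with pointing events that occur close to it in time. The sketch below illustrates only that technique; the `InputEvent` structure and the 1.5-second window are hypothetical choices, not values taken from the MMI Architecture.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class InputEvent:
    source: str       # Modality Component that produced the event
    kind: str         # "speech" or "pointing" in this sketch
    value: str
    timestamp: float  # seconds


def fuse(events: List[InputEvent], window: float = 1.5) -> Optional[str]:
    """Replace each 'that'/'there' in the spoken command with the pointing
    targets that occurred within `window` seconds of it, in time order."""
    speech = next((e for e in events if e.kind == "speech"), None)
    if speech is None:
        return None
    targets = sorted(
        (e for e in events
         if e.kind == "pointing" and abs(e.timestamp - speech.timestamp) <= window),
        key=lambda e: e.timestamp,
    )
    words = []
    for word in speech.value.split():
        if word in ("that", "there") and targets:
            words.append(targets.pop(0).value)
        else:
            words.append(word)
    return " ".join(words)


events = [
    InputEvent("speech-mc", "speech", "put that there", 10.0),
    InputEvent("tablet-mc", "pointing", "photo-42", 10.4),
    InputEvent("tablet-mc", "pointing", "album-7", 10.9),
    InputEvent("tablet-mc", "pointing", "trash", 15.0),  # outside the window
]
print(fuse(events))  # put photo-42 album-7
```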

- Fission

- For the purposes of this document, the multimodal Fission mechanism refers to the media composition phenomenon: the process of realizing a single message on some combination of the available modalities for more than one sensorial mode. This process occurs before the processes dedicated to information rendering or Media restitution. The goal is to generate an adequate message according to the space (in the car, at home, in a conference room), the current activity (course, conference, brainstorm), or the preferences and profile (chair of the conference, blind user, elderly user). A Fission component determines which modalities are most relevant, selects which media have the best content to return with the Modality Components available in the given conditions, and coordinates this final result [See the MMI Architecture Standard Life-Cycle Events]. In this way, the multimodal Fission mechanism handles the distribution of information among several Modality Components and resolves which part of the content will be generated within each Modality Component once the global multimodal content has been defined.
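The selection and distribution steps of Fission can be sketched as follows: each message part comes with renderings for one or more modalities, the user's profile excludes some outputs, and each part goes to the first suitable output Modality Component available. The profile flags, the preference order and the component names are hypothetical, and a real Fission component would weigh much more context (space, activity, preferences).

```python
from typing import Dict, List, Tuple

# Hypothetical preference order between output modalities.
DEFAULT_ORDER = ["display", "speech", "vibration"]


def plan_fission(
    parts: Dict[str, Dict[str, str]],
    available: List[str],
    profile: Dict[str, bool],
) -> Tuple[Dict[str, List[str]], List[str]]:
    """Split a message across output Modality Components.

    parts maps each message part to its renderings per modality.
    Returns (content per modality, names of parts that could not be rendered).
    """
    excluded = set()
    if profile.get("blind"):
        excluded.add("display")
    if profile.get("private_setting"):
        excluded.add("speech")  # avoid reading content aloud
    usable = [m for m in DEFAULT_ORDER if m in available and m not in excluded]

    plan: Dict[str, List[str]] = {m: [] for m in usable}
    dropped: List[str] = []
    for name, renderings in parts.items():
        # Pick the first usable modality for which this part has content.
        chosen = next((m for m in usable if m in renderings), None)
        if chosen is None:
            dropped.append(name)
        else:
            plan[chosen].append(renderings[chosen])
    return plan, dropped


message = {
    "title": {"display": "Meeting starts in 5 min",
              "speech": "Your meeting starts in five minutes"},
    "room_map": {"display": "<map of room B12>"},
}
plan, dropped = plan_fission(message, ["display", "speech"], {"blind": True})
print(plan)     # {'speech': ['Your meeting starts in five minutes']}
print(dropped)  # ['room_map']
```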

3. Use Cases

This section is a non-exhaustive list of use cases that would be enabled by implementing the Discovery & Registration of Modality Components. Each use case is written according to a template inspired by the Requirements for Home Networking Scenarios Use Cases template. This allows us to situate our proposal in relation to the cases covered by the Web and TV Interest Group. Each use case is structured as a list of:

[Code]

In the form UC X.X (ucSet.ucNumber)

[Description]

A high-level description of the use case.

[Motivation]

Explanation of the need that the use case addresses.

[Requirements]

List of the requirements implied by the use case, in two columns (a low-level requirement and a high-level requirement), both related to the MMI Architecture specification.

3.1 Use Cases SET 1: Smart Homes

Multimodality as an assistive support in intimate, personal and social spaces. Home appliances, entertainment equipment and intelligent home Devices are available as Modality Components for applications. These appliances can also provide their own Interaction Managers that connect to the multimodal interface on a smartphone, enabling control of intelligent home features through the user's mobile device.

3.1.1 Audiovisual Devices Acting as Smartphone Extensions

A home entertainment system providing user interface components to command and extend a mobile application.

| Code | UC 1.1 |

| Description |

A home entertainment system is adopted by a mobile Device as a set of user interface components.

In addition to Media rendering and playback, these Devices also act as input modalities for the smartphone's applications. The mobile Device does not have to be manipulated directly at all: a wall-mounted touch-sensitive TV can be used to navigate applications, and a wide-range microphone can handle speech input. Spatial (Kinect-style) gestures may also be used to control Application behavior.

The smartphone discovers available modalities and arranges them to best serve the user's purpose. One display can be used to show photos and movies, another for navigation. As the user walks into another room, this configuration is adapted dynamically to the new location. User intervention may sometimes be required to decide on the most convenient modality configuration. The state of the interaction is maintained while switching between modality sets. For example, if the user was navigating a GUI menu in the living room, the menu is carried over to another screen when she switches rooms, or replaced with a different modality such as voice if there are no displays in the new location.

| Motivation |

Many of today's home Devices can provide similar functionality (e.g. audio/video playback), differing only in certain aspects of the user interface. This allows continuous interaction with a specific Application as the user moves from room to room, with the user interface switched automatically to the set of Devices available in the user's present location.

On the other hand, some Devices have specific capabilities and user interfaces that can be used to add information to a larger context that can be reused by other Applications and devices. This drives the need to spread an Application across different Devices to achieve a more user-adapted and meaningful interaction according to the context of use. Both aspects provide arguments for exploring use cases where Applications use distributed multimodal interfaces.

| Requirements | Low-Level | High-Level |
|---|---|---|
| Distribution | LAN | Any |
| Advertisement | Device Description | Modality Component's Description |
| Discovery | The Application must handle MMI requests/responses | Fixed, Mediated, Active or Passive |
| Registration | The Application must use the Status Event to provide the Modality Component's Description and the registration lifetime information | Hard-State |
| Querying | The Application must send MMI requests/responses | Queries searching for attributes in the Description of the Modality Component (a predefined MC Data Model is needed) |
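The room-to-room behavior of UC 1.1 can be sketched by keeping the interaction state in the Application and re-running discovery whenever the user changes location: the same menu state is rendered on a screen if one is found, or through voice otherwise. The room table, component names and `discover` function are hypothetical stand-ins for a real discovery query.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MenuState:
    """Interaction state that must survive a change of modality set."""
    path: List[str]
    highlighted: str


# Hypothetical discovery results: Modality Components found in each room.
ROOMS: Dict[str, List[str]] = {
    "living-room": ["tv-display", "wide-range-mic"],
    "kitchen": ["ceiling-speaker", "wide-range-mic"],
}


def discover(room: str) -> List[str]:
    """Stand-in for a real discovery query scoped to the user's location."""
    return ROOMS.get(room, [])


def render(state: MenuState, room: str) -> str:
    """Present the same menu state with whatever output the room offers."""
    components = discover(room)
    location = " > ".join(state.path)
    if any(c.endswith("display") for c in components):
        return f"[screen] {location} (selected: {state.highlighted})"
    if any(c.endswith("speaker") for c in components):
        return f"[voice] You are in {location}. Current item: {state.highlighted}."
    return "[phone] no room output available; staying on the mobile device"


state = MenuState(path=["Music", "Jazz"], highlighted="Album 3")
print(render(state, "living-room"))  # [screen] Music > Jazz (selected: Album 3)
print(render(state, "kitchen"))      # [voice] You are in Music > Jazz. Current item: Album 3.
```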

3.1.2 Intelligent Home Apparatus

Mobile Devices as command mediators to control intelligent home apparatus.

| Code | UC 1.2 |

| Description |

Smart home functionality (window blinds, lights, air conditioning, etc.) is controlled through a multimodal interface composed of modalities built into the house itself (e.g. speech and gesture recognition) and those available on the user's personal Devices (e.g. smartphone touchscreen). The system may automatically adapt to the preferences of a specific user, or enter a more complex interaction if multiple people are present.

Sensors built into various Devices around the house can act as input modalities that feed information to the home and affect its behavior. For example, lights and temperature in the gym room can be adapted dynamically as the workout intensity recorded by the fitness equipment increases. The same data can also increase or decrease the volume and tempo of music tracks played by the user's mobile Device or the home's Media system. In addition, the intelligent home, in tandem with the user's personal Devices, can monitor user behavior for emotional patterns such as 'tired' or 'busy' and adapt further.

| Motivation |

The increasing number of controllable Devices in an intelligent home makes it difficult to control all available services in a coherent and useful manner. A shared context, built from information collected through sensors and direct user input, would improve recognition of user intent and thus simplify interactions.

In addition, the user could select among multiple input mechanisms based on Device type, level of trust and the type of interaction required for a particular task.

| Requirements | Low-Level | High-Level |
|---|---|---|
| Distribution | LAN, WAN | Any |
| Advertisement | Application Manifests, Device Description | Modality Component's Description |
| Discovery | The Application must handle MMI requests/responses | Fixed |
| Registration | The Modality Component's Description and the registration lifetime information may be predefined in the home server | Hard-State |
| Querying | The Application must send MMI requests/responses | MMI Life-Cycle Events using known descriptions |
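The gym-room example of UC 1.2 amounts to mapping the output of one sensor Modality Component to settings for several other components. The sketch below shows only that mapping; the 0-to-1 intensity scale, the output ranges and the setting names are hypothetical, and sending the settings to the lighting, climate and Media components is left out.

```python
from typing import Dict, Union


def adapt_gym_room(intensity: float) -> Dict[str, Union[float, int]]:
    """Map workout intensity reported by the fitness equipment
    (0.0 = resting, 1.0 = maximum, a hypothetical scale) to settings
    for other home Modality Components."""
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0.0 and 1.0")
    return {
        # Brighter light and cooler air as the workout gets harder.
        "light_level": round(0.4 + 0.6 * intensity, 2),
        "temperature_c": round(22.0 - 4.0 * intensity, 1),
        # Music tempo follows effort, from 90 to 150 beats per minute.
        "music_bpm": round(90 + 60 * intensity),
    }


for level in (0.0, 0.5, 1.0):
    print(level, adapt_gym_room(level))
```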

3.2 Use Cases SET 2: Personal Externalized Interfaces

Multimodality as a way to simplify and personalize interaction with complex Devices that are shared by family or close friends, for example cars. The user's personal mobile Device communicates with the external shared equipment in a way that makes the functionality of each available through the interface of the other.

3.2.1 Smart Cars

Multimodality as a nomadic tool to configure user interfaces provided in Smart Cars.

| Code | UC 2.1 |

| Description |

Basic in-car functionality is standardized so that it can be managed by other devices. A user can control seat, radio or AC settings through a personalized multimodal interface shared by the car and her personal mobile device. User preferences are stored on the mobile Device (or in the cloud) and can be transferred across different car models that handle a specific functionality (e.g. all cars with touchscreens should be able to adapt to a "high contrast" preference).

The car can make itself available as a complex Modality Component that wraps all of its functionality and supported modalities, or as a collection of Modality Components such as touchscreen, speech recognition and audio player. In the latter case, certain user preferences may be shared with other environments.

For example, a user may opt to select the "high contrast" scheme at night on all of her displays, in the car or at home. A car that provides a set of modalities can also be adopted by the mobile Device to compose an interface for its functionality, for example to manage playback of music tracks through the car's voice control system. Sensor data provided by the phone can be mixed with data recorded by the car's own sensors to profile user behavior, which can then be used as context in multimodal interaction.

| Motivation |

User interface personalization is a task that usually needs to be repeated for every Device a user wishes to interact with regularly. With complex devices, this task can also be very time-consuming, which is problematic if the user regularly accesses similar but not identical Devices, as in the case of several cars rented over a month.

A standardized set of personal information and preferences that could be used to configure personalizable Devices automatically would be very helpful in all these cases where the interaction becomes a customary practice.

| Requirements | Low-Level | High-Level |
|---|---|---|
| Distribution | LAN, WAN, MAN | Distributed |
| Advertisement | Manufacturer-Dependent | Modality Component's Description |
| Discovery | The Application must handle MMI requests/responses | Fixed, Mediated |
| Registration | Multi-node Registry | Soft-State |
| Querying | The Application must send MMI requests/responses | Requests limited to a federated group of Modality Components |
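The Soft-State registration and Multi-node Registry listed for UC 2.1 can be sketched as follows, assuming the usual meaning of soft state: an entry expires unless its owner refreshes it before a time-to-live elapses, so a car that leaves the network silently disappears from query results. The node names, TTL values and the federation rule (a query only visits nodes in the named group) are hypothetical.

```python
from typing import Dict, List, Set, Tuple


class RegistryNode:
    """One node of a hypothetical multi-node registry with soft-state entries."""

    def __init__(self, name: str) -> None:
        self.name = name
        # component id -> (description, expiry time in seconds)
        self._entries: Dict[str, Tuple[str, float]] = {}

    def register(self, mc_id: str, description: str, ttl: float, now: float) -> None:
        # Registering again before expiry simply refreshes the entry.
        self._entries[mc_id] = (description, now + ttl)

    def live_entries(self, now: float) -> Dict[str, str]:
        # Drop entries whose owner did not refresh them in time.
        self._entries = {k: v for k, v in self._entries.items() if v[1] > now}
        return {k: v[0] for k, v in self._entries.items()}


def federated_query(
    nodes: List[RegistryNode], group: Set[str], keyword: str, now: float
) -> List[str]:
    """Search only the nodes that belong to the federated group."""
    hits = []
    for node in nodes:
        if node.name in group:
            for mc_id, description in node.live_entries(now).items():
                if keyword in description:
                    hits.append(mc_id)
    return sorted(hits)


car, phone, hotspot = RegistryNode("car"), RegistryNode("phone"), RegistryNode("public-hotspot")
car.register("car-touchscreen", "touch display high-contrast", ttl=30, now=0)
car.register("car-asr", "speech recognition", ttl=30, now=0)
phone.register("phone-player", "audio player", ttl=30, now=0)
hotspot.register("kiosk-display", "touch display", ttl=30, now=0)
nodes = [car, phone, hotspot]
print(federated_query(nodes, {"car", "phone"}, "display", now=10))  # ['car-touchscreen']
car.register("car-asr", "speech recognition", ttl=30, now=25)       # refresh
print(federated_query(nodes, {"car", "phone"}, "speech", now=40))   # ['car-asr']
print(federated_query(nodes, {"car", "phone"}, "display", now=40))  # []
```

The kiosk display is never returned because its node is outside the federated group, and the car touchscreen drops out at t=40 because it was not refreshed.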

3.3 Use Cases SET 3: Public Spaces

Multimodality as a way to access public Devices to perform tasks or for fun. The functionality of a user's personal Device is projected onto Modality Components available in the immediate proximity. Public Devices present a multimodal interface tailored to the user's preferences. The user's mobile Device can also advertise itself as a Modality Component to allow direct targeted messaging without privacy abuse.

3.3.1 Interactive Spaces

Multimodality as a support for advertising and accessing information on public user interfaces.

| Code | UC 3.1 |

| Description |

Interactive installations such as touch-sensitive or gesture-tracking billboards are set up in public places. Objects that present public information (e.g. a map of a shopping mall) can use a multimodal interface (built-in or in tandem with the user's mobile devices) to simplify user interaction and provide faster access. Other setups can stimulate social activities, allowing multiple people to enter an interaction simultaneously to work together towards a certain goal (for a prize) or just for fun (e.g. to play a musical instrument or control a lighting exhibition). In a context where privacy is an issue (for example, with targeted/personalized alerts or advertisements), the user's mobile Device acts as a complex Modality Component or a set of Modality Components for an Interaction Manager running on the public network. This allows the user to receive relevant information in the way she sees fit. These alerts can serve as triggers for interaction with public Devices if the user chooses to do so.

| Motivation |

Public spaces provide many opportunities for engaging, social and fun interaction. At the same time, preserving privacy while sharing tasks and activities with other people is a major issue in ambient systems. These systems may also deliver personalized information in tandem with more general services presented publicly.

Trustworthy discovery of the Modality Components available in such environments is necessary to guarantee personalization and privacy in public-space applications.

| Requirements | Low-Level | High-Level |
|---|---|---|
| Distribution | LAN, WAN, MAN | Any |
| Advertisement | Application Manifests, Device Description, User Profile | Modality Component's Description |
| Discovery | The Application must handle MMI requests/responses | Fixed, Mediated, Passive, Active |
| Registration | The Modality Component's Description and the registration lifetime information may be predefined in the space server | Soft-State |
| Querying | The Application must send MMI requests/responses | Requests limited to a federated group of Modality Components |
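The Discovery row for UC 3.1 lists Passive and Active modes. Assuming their usual meaning in service discovery (passive: the client listens to announcements that components broadcast; active: the client sends a query and collects replies), the difference can be sketched with an in-memory stand-in for the network. The `Network` and `PublicComponent` classes and the description format are hypothetical; a real deployment would use an actual discovery protocol.

```python
from typing import Callable, Dict, List, Optional

Description = Dict[str, str]


class Network:
    """In-memory stand-in for a broadcast-capable public network."""

    def __init__(self) -> None:
        self.listeners: List[Callable[[Description], None]] = []
        self.responders: List[Callable[[str], Optional[Description]]] = []

    def announce(self, description: Description) -> None:
        # Passive discovery: components push announcements to every listener.
        for listener in self.listeners:
            listener(description)

    def query(self, modality: str) -> List[Description]:
        # Active discovery: a client sends a query and collects matching replies.
        replies = [respond(modality) for respond in self.responders]
        return [reply for reply in replies if reply is not None]


class PublicComponent:
    """A public Modality Component that can announce itself and answer queries."""

    def __init__(self, net: Network, description: Description) -> None:
        self.net = net
        self.description = description
        net.responders.append(self.on_query)

    def on_query(self, modality: str) -> Optional[Description]:
        return self.description if self.description["modality"] == modality else None

    def advertise(self) -> None:
        self.net.announce(self.description)


net = Network()
heard: List[Description] = []
net.listeners.append(heard.append)  # the user's phone listens without sending anything
billboard = PublicComponent(net, {"id": "mall-map", "modality": "touch"})
speaker = PublicComponent(net, {"id": "hall-speaker", "modality": "audio"})
billboard.advertise()
print([d["id"] for d in heard])               # ['mall-map']
print([d["id"] for d in net.query("audio")])  # ['hall-speaker']
```

In this sketch, the passively listening phone learns about the billboard without revealing itself, which is one way to approach the privacy concern raised in UC 3.1.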

3.3.2 In-Office Events Assistance

Automatic in-office presentation hardware (projectors, speakers, ASR, gesture tracking) controlled through a mobile device.

| Code | UC 3.2 |

| Description |

A conference room where a series of meetings will take place. People can go in and out of the room before, after and during the meeting. The door is "touched" by a badge. The system activates any available display in the room, as well as the room and event default Applications: the outline Modality Component, the notification Modality Component and the guiding Modality Component. The chair of the meeting is notified of the Modality Components' availability, with a shortcut to the default Application instance endpoint, by a dynamically composed graphic animation, an audio notification or a mobile phone notification.

The chair of the meeting selects a setup procedure by text from among the discovered options. These options could be, for example: photo step-by-step instructions (smartphone, HDTV display, Web site), audio instructions (MP3 audio guide, room speaker reproduction, HDTV audio) or RFID-enhanced instructions (mobile SmartTag Reader, RFID Reader for smartphone). The chair of the meeting chooses room speaker reproduction; the guiding Service is activated and he starts to set up the video projector. Then some attendees arrive. The chair of the meeting switches to the slide show option and continues to follow the instructions at the same step where they were paused, but with another, more private modality, for example a smartphone slide show.

| Motivation |

Meeting room environments are increasingly complex. During meeting room setup, users spend a lot of time trying to put up presentations and make remote connections: a task that usually affects their emotional state, producing anxiety and increasing their stress level. Assistance Applications using modality discovery can reduce the user's task load and cognitive load with reassuring, context-aware multimodal interfaces.

| Requirements | Low-Level | High-Level |
|---|---|---|
| Distribution | LAN, WAN | Any |
| Advertisement | Application Manifests, Device Description, Situation Description, User Profile | Modality Component's Description |
| Discovery | The Application must handle MMI requests/responses | Fixed, Passive |
| Registration | Part of the Modality Component's Description and the registration lifetime information may be predefined in the space server | Soft-State, Hard-State |
| Querying | The Application must send MMI requests/responses | Requests limited to a federated group of Modality Components |
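The last step of UC 3.2, where the chair resumes the setup instructions at the paused step on a more private modality, can be sketched by keeping the guiding Service's progress separate from the output Modality Component that renders it. The `GuidingService` class, the step texts and the component names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GuidingService:
    """Hypothetical guiding Service: progress is kept apart from the output."""
    steps: List[str]
    current: int = 0
    output: str = "room-speakers"

    def present(self) -> str:
        """Render the current step on the active output Modality Component."""
        step = self.steps[self.current]
        return f"{self.output}: step {self.current + 1}/{len(self.steps)}: {step}"

    def next_step(self) -> str:
        if self.current < len(self.steps) - 1:
            self.current += 1
        return self.present()

    def switch_output(self, new_output: str) -> str:
        # Only the rendering component changes; the step index is preserved.
        self.output = new_output
        return self.present()


guide = GuidingService(["Power on the projector", "Select the HDMI input", "Open the slides"])
guide.present()
print(guide.next_step())  # room-speakers: step 2/3: Select the HDMI input
# Attendees arrive: continue at the same step on a more private modality.
print(guide.switch_output("smartphone-slideshow"))  # smartphone-slideshow: step 2/3: Select the HDMI input
```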

3.4 Use Cases SET 4 : Health Sensing

Multimodality as sensor and control support for medical reporting.

3.4.1 Health Notifiers

Multimodal Applications that send alerts and report medical data.

| Code |

| [ UC 4.1 ] |

| Description |

| In medical facilities, a complex Modality Component provides multiple options to control sensor operations by voice or gesture (for example, "start reading my blood pressure now"). The complex Modality Component is attached to a smartphone. The Application integrates information from multiple sensors (for example, blood pressure and heart rate), reports medical sensor readings periodically (for example, to a remote medical facility) and sends alerts when unusual readings/events are detected. |

| Motivation |

| In critical health situations, such as medical emergencies, multimodality is the most effective way to communicate alerts. To this end, dynamic and effective Modality Component discovery is needed. This is also the case when the goal is to monitor the evolution of a person's health in all its complexity with rich media. |

| Requirements | Low-Level | High-Level |
|---|---|---|
| Distribution | LAN | Any |
| Advertisement | Application Manifests, Device Description, User Profile | Modality Component's Description |
| Discovery | The Application must handle MMI requests/responses | Fixed, Passive |
| Registration | The Modality Component's Description and the registration lifetime information may be predefined in the space server. | Hard-State |
| Querying | The Application must send MMI requests/responses | Queries to a Centralized Registry |

3.5 Use Cases SET 5 : Synergistic Interaction and Recognition

Automatic discovery of Modality Component resources that provide high-level recognition and analysis features, in order to adapt application Services and user interfaces, track user behavior and assist the user with temporarily difficult tasks.

3.5.1 Multimodal support for recognition

| Code |

| [ UC 5.1 ] |

| Description |

| One Modality Component is an audio recognizer trained with the most common sounds in the house in order to raise an alert in case of emergency. In the same house, a security Application uses a video recognizer to identify people at the front door. These two Modality Components advertise some of their features in order to cooperate with a remote home management Application that uses a discovery mechanism for its Fission feature. The Application validates and completes both recognition results while using them. |

| Motivation |

| Recognizer development has reached a point of maturity at which dramatically enhancing recognition will require complementary multimodal results. To achieve this, an image recognizer can use results coming from other kinds of recognizers (e.g. an audio recognizer) on the network that are engaged in the same interaction cycle. |

| Requirements | Low-Level | High-Level |
|---|---|---|
| Distribution | LAN, WAN, MAN | Any |
| Advertisement | Application Manifests | Modality Component's Description |
| Discovery | The Application must handle MMI requests/responses | Fixed, Passive |
| Registration | The Modality Component's Description and the registration lifetime information may be predefined in the server. | Hard-State |
| Querying | The Application must send MMI requests/responses | Queries to a Centralized Registry |

3.5.2 Discovery to enhance synergic interaction

Multimodal discovery as a tool to avoid difficult

interaction.

| Code |

| [ UC 5.2 ] |

| Description |

| A person who works mostly on a PC has a problem with his right arm and hands, and will be unable to use a mouse or a keyboard for a few months. He can point at things, sketch, clap and make gestures, but he cannot make any precise movements. An Application proposes a generic interface that gives this person access to his most important tasks on his personal devices: calling someone, opening a mailbox, accessing his agenda or navigating some Web pages. It proposes intuitive, child-oriented interfaces such as a clapping-based Modality Component, a TTS component with very clear articulation or simplified gesture input widgets. Other Modality Components can also be used, such as phone Devices with very big numbers, very simple remote controls, screens displaying text at high resolution, or voice command Devices based on a reduced number of commands. |

| Motivation |

| One of the main indicators of an Application's usability is the level of accessibility it provides. Allowing all users to receive and deliver all kinds of information, regardless of the information format or of the user's profile, state or impairment, is a recurrent need in web applications. One means of achieving accessibility is the design of a more synergic interaction based on the discovery of multimodal Modality Components.

Synergy is two or more entities functioning together to produce a result that is not obtainable independently; it means "working together". For example, in nomadic Applications, which are always affected by a changing context, avoiding disruptive interaction is an important issue. In these Applications, user interaction is difficult, distracted and less precise. Multimodal discovery can increase synergic interaction by offering new possibilities better adapted to the current disruptive context. A well-founded discovery mechanism can enhance the Fusion process for target groups of users experiencing permanent or temporary learning difficulties or with sensory, emotional or social impairments. |

| Requirements | Low-Level | High-Level |
|---|---|---|
| Distribution | LAN, WAN, MAN | Any |
| Advertisement | Application Manifests, Device Description, User Profile | Modality Component's Description |
| Discovery | The Application must handle MMI requests/responses | Fixed, Mediated, Passive, Active |
| Registration | Multi-node Registry | Soft-State |
| Querying | The Application must send MMI requests/responses | Distributed Registry, handled by pattern matching |

4. Requirements

4.1 Distribution

Modality Components can be distributed in a centralized, hybrid or fully decentralized way. For discovery and registration purposes, the distribution of the Modality Components influences how many requests the Multimodal System can handle in a given time interval, and how efficiently it can execute these requests. The MMI Architecture Specification is distribution-agnostic: Modality Components can be located anywhere, in a local network or on the Web. The decision about how to distribute the Multimodal Constituents depends on the implementation.

4.2 Advertisement

Advertisement of Modality Components, starting with the description mechanism, is one of the most influential criteria for evaluating how well the system can discover Modality Components. A pertinent and expressive description allows the Multimodal System to achieve correctness in Modality Component retrieval, because it enables the result to match the application's or user's request more closely. Advertisement also affects the completeness of Modality Component retrieval: to return all registered instances that match the user's request, the request criteria must match some basic attributes defined in the Modality Component's Description. A Modality Component's Description that lists some multimodal attributes and actions is therefore required.

For this reason, conforming specifications should provide a means for applications to advertise Modality Components in any of the distributions enumerated in section 4.1.

This Modality Component's Description can be expressed as a data structure (e.g. ASN.1), as a simple list of attribute-value pairs, as a list of attributes with hierarchical tree relationships (e.g. the intentional name schema in INS), as a WSDL document or as an XML manifest document. The language and form of the document are Application dependent.
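To make the difference between two of these forms concrete, here is a minimal Python sketch that takes a Description written as a hierarchical attribute tree (modelled as nested dictionaries, loosely inspired by the INS name schema but not using INS syntax) and flattens it into a simple list of attribute-value pairs. The attribute names, the values and the '/' path separator are hypothetical choices for illustration.

```python
from typing import Any, Dict, List, Tuple


def flatten(tree: Dict[str, Any], prefix: str = "") -> List[Tuple[str, str]]:
    """Flatten a nested attribute tree into (path, value) pairs.

    Child attributes are joined to their parent with '/', so the
    hierarchy survives only as a path in the attribute name.
    """
    pairs: List[Tuple[str, str]] = []
    for name, value in tree.items():
        path = f"{prefix}/{name}" if prefix else name
        if isinstance(value, dict):
            pairs.extend(flatten(value, path))  # recurse into sub-attributes
        else:
            pairs.append((path, str(value)))
    return pairs


# Hypothetical Description of an audio recognizer, as a hierarchical tree.
description = {
    "service": {"type": "recognizer", "modality": "audio"},
    "location": {"building": "home", "room": "kitchen"},
    "version": "1.0",
}

for attribute, value in flatten(description):
    print(f"{attribute}={value}")
```

Flattening lets a registry that only supports attribute-value pairs store a tree-shaped Description; the reverse conversion is not shown.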

The kind of data described by this document also depends on the implementation; some examples of the information that could be advertised can be found in Best Practices for Creating MMI Modality Components.

For instance, the Modality Component's Description data can describe:

- Information about the identity of the Modality Component:

For example, the unique identifier of the Modality Component, its name, address, port number, its embedded Device or service, manufacturer, version, transport protocol or lifetime.

- Information about the behavior of the Modality Component:

The list of content or Media handled by the Modality Component; a list of the commands or actions (e.g. described as a service, functionality, operation or activity) to which the Modality Component responds; parameters or arguments for each action; and information variables that model the state of the Modality Component.

- Information about the context of use of the Modality Component:

This may cover the name of the organization providing the Modality Component, its authorizations, authentication procedures, privacy policies, its business type (e.g. its UDDI businessService), its access point, and complementary information about invocation policies or implementation details.

- Information about the semantics of the Modality Component for a specific domain:

A Modality Component type, which can be application-specific or can follow some specification (e.g. UPnP, ECHONET or IANA), depending on the implementation; the Modality Component's federation group (e.g. the Zone in the AppleTalk Name Binding Protocol, or a DNS subdomain); its scope (e.g. UA scopes in SLP); its category; its intent (e.g. Implicit Intents in Android, or Web Intents); or any other semantic metadata.
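The four kinds of information listed above could be grouped into a single record and serialized as an XML manifest. The following Python sketch does this with the standard `xml.etree.ElementTree` module; the class name, the field names and the XML element names (`manifest`, `identity`, `behavior`, `media`, `action`, `context`, `semantics`) are hypothetical and are not defined by the MMI Architecture Specification.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ModalityComponentDescription:
    # Identity: identifier, name, address, port, version...
    identity: Dict[str, str]
    # Behavior: Media handled and actions the component responds to.
    media: List[str] = field(default_factory=list)
    actions: List[str] = field(default_factory=list)
    # Context of use: provider, policies, access point...
    context: Dict[str, str] = field(default_factory=dict)
    # Domain semantics: type, federation group, scope, intent...
    semantics: Dict[str, str] = field(default_factory=dict)

    def to_manifest(self) -> str:
        """Serialize the Description as a (hypothetical) XML manifest."""
        root = ET.Element("manifest")
        ET.SubElement(root, "identity", self.identity)
        behavior = ET.SubElement(root, "behavior")
        for media_type in self.media:
            ET.SubElement(behavior, "media").text = media_type
        for action in self.actions:
            ET.SubElement(behavior, "action", name=action)
        ET.SubElement(root, "context", self.context)
        ET.SubElement(root, "semantics", self.semantics)
        return ET.tostring(root, encoding="unicode")


mc = ModalityComponentDescription(
    identity={"id": "mc-audio-01", "name": "KitchenAudioRecognizer",
              "address": "192.168.1.20", "port": "8080", "version": "1.0"},
    media=["audio/wav"],
    actions=["startRecognition", "cancelRecognition"],
    context={"provider": "ExampleOrg", "privacy": "local-only"},
    semantics={"type": "recognizer", "group": "home", "intent": "alert"},
)
print(mc.to_manifest())
```

Keeping the categories as separate fields makes it straightforward to emit the same record in another form (for example, attribute-value pairs) if an Application prefers it.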

The MMI Architecture Specification could suggest a data model with some examples of attributes specific to the multimodal domain, to guarantee interoperability, expressiveness and relevance of the description's content from a Multimodal Interaction point of view. This data model could then also serve as information support for annotation, for modality selection, and for the Fusion and Fission mechanisms in the analyzers or synthesizers implemented in the Multimodal System.

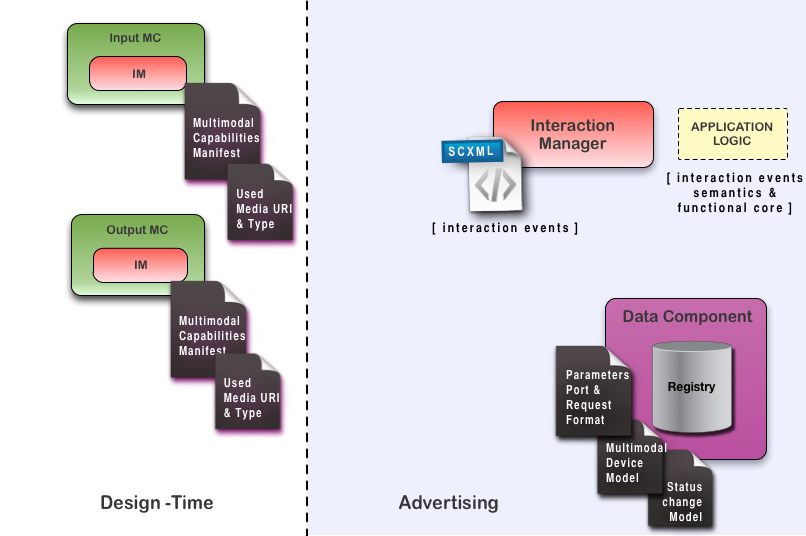

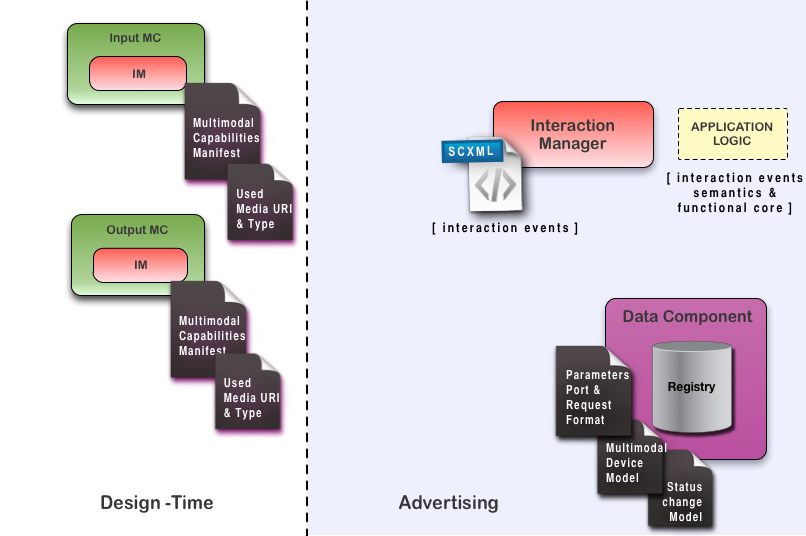

As an example, Figure 1 shows a manifest that can be created at Design Time or at Load Time, and that can be accompanied by a list of the Media handled by the Modality Component. This can be an annotated list of Media (e.g. using EMMA) together with their URIs.

Figure 1: Manifest that can be created either at Design Time or at Load Time

To store these descriptions, the MMI Data Component must be used as directory support, in either a centralized or a distributed manner.

If the problems associated with a centralized registry (such as a single point of failure, bottlenecks, the scalability of data replication, notifying subscribers when performing system upgrades, or handling versioning of Services) are not an issue, a centralized registry hosted by a Data Component should provide a register of Modality Components' states and their descriptions. This decision will depend on the kind of Application being implemented.
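A minimal Python sketch of such a centralized register, assuming Descriptions are stored as flat attribute-value pairs and borrowing the 'alive'/'dead' status vocabulary of the MMI status events for component states. The class and method names are hypothetical, and the sketch deliberately ignores the replication, notification and versioning concerns listed above.

```python
from typing import Dict, List


class CentralRegistry:
    """In-memory register of Modality Component Descriptions and states."""

    def __init__(self) -> None:
        self._descriptions: Dict[str, Dict[str, str]] = {}
        self._states: Dict[str, str] = {}

    def register(self, mc_id: str, description: Dict[str, str],
                 state: str = "alive") -> None:
        # Re-registering an id simply replaces its Description and state.
        self._descriptions[mc_id] = dict(description)
        self._states[mc_id] = state

    def set_state(self, mc_id: str, state: str) -> None:
        if mc_id not in self._descriptions:
            raise KeyError(f"unknown Modality Component: {mc_id}")
        self._states[mc_id] = state

    def find(self, **criteria: str) -> List[str]:
        """Ids of 'alive' components whose Description has all the criteria."""
        return sorted(
            mc_id
            for mc_id, desc in self._descriptions.items()
            if self._states[mc_id] == "alive"
            and all(desc.get(key) == value for key, value in criteria.items())
        )


registry = CentralRegistry()
registry.register("mc-1", {"type": "recognizer", "modality": "audio"})
registry.register("mc-2", {"type": "recognizer", "modality": "video"})
registry.register("mc-3", {"type": "synthesizer", "modality": "audio"})
registry.set_state("mc-2", "dead")
print(registry.find(type="recognizer"))  # ['mc-1']
```

Because every lookup goes through one object, this design is simple and consistent, but it is exactly the single point of failure mentioned in the text.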

Alternatively, if the time-consuming nature of inquiries across a federated environment of Data Components and the risk of some inconsistencies are not an issue, a distributed registry hosted by multiple Data Components should provide multiple public or private registries. Data Components can be federated in groups following a mechanism similar to P2P or METEOR-S registries. This decision will also depend on the kind of Application being implemented.
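The trade-off described above can be sketched in Python: each federated registry is modelled as a plain function that answers a query, the inquiry visits every member in turn, unreachable members are skipped (so the result may be incomplete), and Descriptions that disagree between members are reported as inconsistencies. This is a simplified stand-in for a P2P or METEOR-S style federation, not an implementation of either; all names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Description = Dict[str, str]
# A federation member answers a query with {mc_id: Description}.
Registry = Callable[[Description], Dict[str, Description]]


def make_registry(entries: Dict[str, Description]) -> Registry:
    def query(criteria: Description) -> Dict[str, Description]:
        return {mc_id: desc for mc_id, desc in entries.items()
                if all(desc.get(k) == v for k, v in criteria.items())}
    return query


def federated_query(registries: List[Registry], criteria: Description
                    ) -> Tuple[Dict[str, Description], List[str]]:
    """Ask every member, merge answers, and report conflicting entries."""
    merged: Dict[str, Description] = {}
    conflicts: List[str] = []
    for registry in registries:
        try:
            answer = registry(criteria)
        except ConnectionError:
            continue  # unreachable member: results may be incomplete
        for mc_id, desc in answer.items():
            if mc_id in merged and merged[mc_id] != desc and mc_id not in conflicts:
                conflicts.append(mc_id)  # members disagree on this component
            merged.setdefault(mc_id, desc)  # first answer received wins
    return merged, conflicts


def offline(criteria: Description) -> Dict[str, Description]:
    raise ConnectionError("registry unreachable")


r1 = make_registry({"mc-1": {"modality": "audio"}, "mc-2": {"modality": "video"}})
r2 = make_registry({"mc-1": {"modality": "speech"}, "mc-3": {"modality": "audio"}})

audio, _ = federated_query([r1, offline, r2], {"modality": "audio"})
print(sorted(audio))  # ['mc-1', 'mc-3']
_, conflicts = federated_query([r1, r2], {})
print(conflicts)      # ['mc-1']
```

Conflicts are only detected among the answers actually received, so an inconsistency hidden behind an unreachable member or a non-matching filter goes unnoticed.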

Depending on the parameters given by the Application logic, the distribution of the Modality Components or the context of interaction, the advertisement may or may not persist. It should be either a hard-state advertisement, with an infinite Modality Component lifetime, or a soft-state advertisement, in which a lifetime is associated with the Modality Component (e.g. the timestamps assigned in OGSA); if a soft-state advertisement is not renewed before it expires, the Modality Component is assumed to be no longer available.
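The difference between the two kinds of advertisement can be sketched as a small lease table in Python. A lifetime of `None` stands for a hard-state advertisement; any number is a soft-state lifetime that must be renewed before it runs out. Time is passed in explicitly to keep the sketch deterministic (a real implementation would read a monotonic clock), and the rule that an entry expires once strictly more than its lifetime has elapsed since the last renewal is an assumption, as are all names.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Advertisement:
    mc_id: str
    lifetime: Optional[float]  # None: hard state, infinite lifetime
    last_renewed: float        # time of advertisement or last renewal

    def expired(self, now: float) -> bool:
        if self.lifetime is None:
            return False  # hard-state advertisements never expire
        return now - self.last_renewed > self.lifetime


class LeaseTable:
    def __init__(self) -> None:
        self._ads: Dict[str, Advertisement] = {}

    def advertise(self, mc_id: str, now: float,
                  lifetime: Optional[float] = None) -> None:
        self._ads[mc_id] = Advertisement(mc_id, lifetime, now)

    def renew(self, mc_id: str, now: float) -> bool:
        ad = self._ads.get(mc_id)
        if ad is None or ad.expired(now):
            return False  # too late: the component must advertise again
        ad.last_renewed = now
        return True

    def available(self, now: float) -> List[str]:
        # Drop expired soft-state entries: their components are assumed gone.
        for mc_id in [i for i, ad in self._ads.items() if ad.expired(now)]:
            del self._ads[mc_id]
        return sorted(self._ads)


table = LeaseTable()
table.advertise("projector", now=0.0)             # hard state
table.advertise("phone", now=0.0, lifetime=30.0)  # soft state
table.renew("phone", now=20.0)                    # renewed in time
print(table.available(now=45.0))  # ['phone', 'projector']
print(table.available(now=60.0))  # ['projector']
```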

The communication model used by the registries in the Data Component must be specified by the interaction life-cycle events of the MMI Architecture. The Data Component delivers the descriptions of the Modality Components through an exchange of requests and responses carried by MMI events.

4.3 Discovery

The MMI Architecture specification does not focus on the discovery implementation and is not tied to any programming language. Discovery of Modality Components could be implemented in various ways, e.g. in ECMAScript or any other language, and on top of any infrastructure.

Nevertheless, conforming specifications must use the protocol proposed by the interaction life-cycle events to discover Modality Components. Bootstrapping properties can be specified in a generic way based on Modality Component types defined by a domain taxonomy. These properties play an important role in announcing Modality Components to the discovery mechanism.
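As an illustration of type-based bootstrapping properties, the Python sketch below encodes a small domain taxonomy as a child-to-parent map and selects announced Modality Components whose type is the requested type or one of its descendants. The taxonomy, the type names and the functions are hypothetical; the sketch assumes the taxonomy is a tree (no cycles).

```python
from typing import Dict, List, Optional

# Hypothetical domain taxonomy: each Modality Component type -> parent type.
TAXONOMY: Dict[str, Optional[str]] = {
    "modality-component": None,
    "recognizer": "modality-component",
    "audio-recognizer": "recognizer",
    "video-recognizer": "recognizer",
    "synthesizer": "modality-component",
    "tts": "synthesizer",
}


def is_a(mc_type: str, wanted: str) -> bool:
    """True if mc_type equals wanted or descends from it in the taxonomy."""
    current: Optional[str] = mc_type
    while current is not None:
        if current == wanted:
            return True
        current = TAXONOMY.get(current)  # unknown types stop the walk
    return False


def match_announcements(announced: Dict[str, str], wanted: str) -> List[str]:
    """Select announced components (id -> type) whose type falls under wanted."""
    return sorted(mc_id for mc_id, t in announced.items() if is_a(t, wanted))


announced = {"mc-1": "audio-recognizer", "mc-2": "tts", "mc-3": "video-recognizer"}
print(match_announcements(announced, "recognizer"))  # ['mc-1', 'mc-3']
print(match_announcements(announced, "tts"))         # ['mc-2']
```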

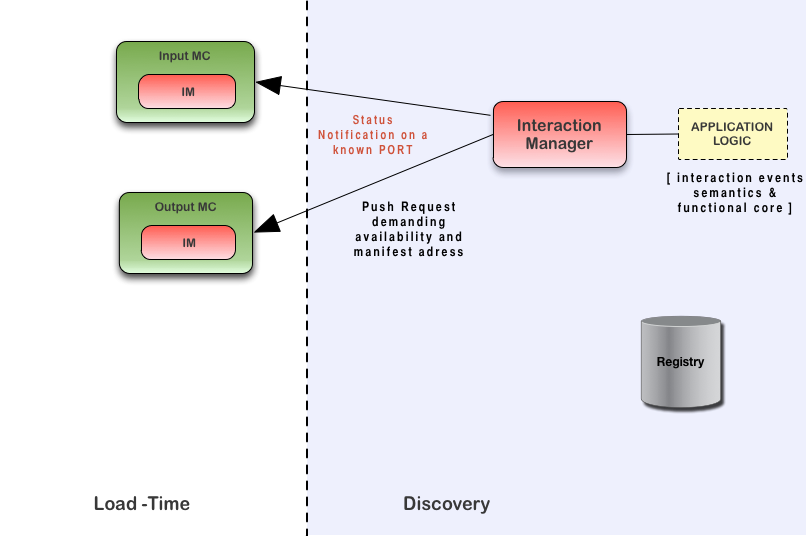

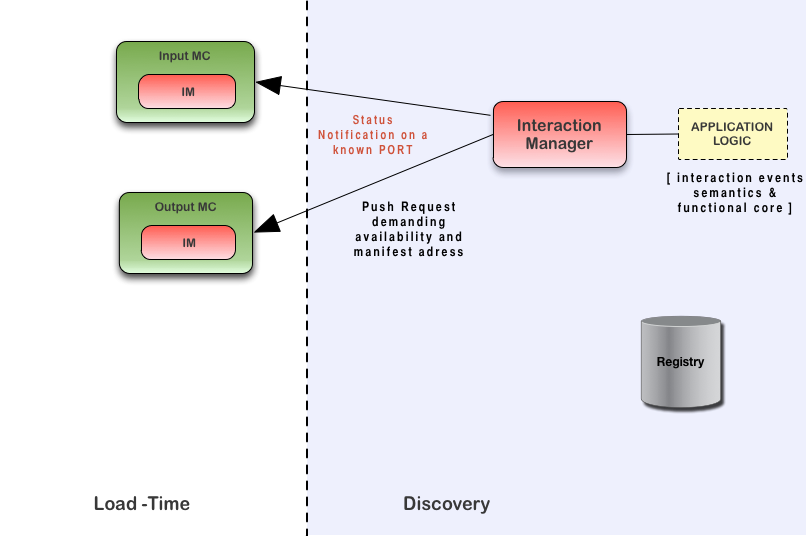

For example, at Load Time, during bootstrapping, when access to the registry is not preconfigured, the Interaction Manager can try to discover Modality Components using one of these four mechanisms: fixed discovery, mediated discovery, active discovery or passive discovery.

In fixed discovery, the Interaction Manager and the Modality Components are assumed to know each other's addresses and listening port numbers. The Interaction Manager can therefore send a request to the Modality Component via the StatusRequest/StatusResponse pair, asking for its availability, manifest address and Media list, as shown in Figure 2. In other implementations, the Modality Component can also announce its availability and description directly to the Interaction Manager using the same event.
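The fixed discovery exchange can be simulated in-process with a short Python sketch: the Interaction Manager holds a fixed table of known Modality Component addresses, sends each one a StatusRequest, correlates the StatusResponse by its request identifier and keeps the components reported as alive. Event fields are modelled as dictionary keys loosely named after attributes of the MMI life-cycle events (Source, Target, RequestID, Status, RequestAutomaticUpdate); the exact attribute names and serialization are defined by the MMI Architecture Specification, not by this sketch. The `data` payload carrying the manifest address and Media list, the addresses and all class names are hypothetical.

```python
import itertools
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


class ModalityComponentStub:
    """Stands in for a Modality Component listening at a known address."""

    def __init__(self, address: str, manifest: str, media: List[str],
                 alive: bool = True) -> None:
        self.address, self.manifest, self.media = address, manifest, media
        self.alive = alive

    def handle(self, request: Event) -> Event:
        if request["name"] != "StatusRequest":
            raise ValueError("this stub only answers StatusRequest")
        return {
            "name": "StatusResponse",
            "RequestID": request["RequestID"],  # echoed for correlation
            "Source": self.address,
            "Target": request["Source"],
            "Status": "alive" if self.alive else "dead",
            # Hypothetical payload: manifest address and Media list.
            "data": {"manifest": self.manifest, "media": list(self.media)},
        }


class InteractionManager:
    def __init__(self, address: str,
                 known: Dict[str, Callable[[Event], Event]]) -> None:
        self.address = address
        self.known = known  # fixed table: address -> send function
        self._ids = itertools.count(1)

    def discover(self) -> Dict[str, Any]:
        found: Dict[str, Any] = {}
        for target, send in self.known.items():
            request = {"name": "StatusRequest",
                       "RequestID": f"req-{next(self._ids)}",
                       "Source": self.address, "Target": target,
                       "RequestAutomaticUpdate": False}
            response = send(request)
            if (response["RequestID"] == request["RequestID"]
                    and response["Status"] == "alive"):
                found[target] = response["data"]
        return found


speakers = ModalityComponentStub("mc://room/speakers",
                                 "http://example.org/speakers.xml", ["audio/mpeg"])
projector = ModalityComponentStub("mc://room/projector",
                                  "http://example.org/projector.xml", ["image/png"],
                                  alive=False)
im = InteractionManager("im://room", {speakers.address: speakers.handle,
                                      projector.address: projector.handle})
print(im.discover())
```

In a networked deployment, each `send` function would wrap a transport to the preconfigured address and port instead of a direct method call.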

Figure 2: Interaction Manager sending a request via the StatusRequest/StatusResponse pair to the Modality Components

In mediated discovery, the Interaction Manager can use the scanning features provided by the underlying network (e.g. DHCP) to look for Modality Components tagged in their descriptions with a specific group label (e.g. in federated distributions). If a Modality Component is not tagged, it can use a generic mechanism, provided solely for this purpose, to subscribe to a generic 'welcome' group (e.g. in JXTA implementations). In this case, the Modality Component should send a request via the Status event to the Interaction Manager to subscribe to the registry. After bootstrapping, the Interaction Manager can ask for the manifest address and the Media list address needed for advertisement. In other implementations, the Modality Component can send them directly to the registry after bootstrapping.
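The grouping step of mediated discovery can be sketched in Python as follows, assuming the network scan (for example via DHCP) has already produced a map from Modality Component identifiers to the group label found in their Descriptions, or `None` when a component is untagged. Untagged components are placed in the generic 'welcome' group, from which they would then subscribe to the registry with a Status event. The group names and functions are hypothetical, and the scan itself is not shown.

```python
from typing import Dict, List, Optional

WELCOME_GROUP = "welcome"  # hypothetical generic bootstrap group


def assign_groups(scanned: Dict[str, Optional[str]]) -> Dict[str, List[str]]:
    """Group scanned components by label; untagged ones join WELCOME_GROUP."""
    groups: Dict[str, List[str]] = {}
    for mc_id, label in sorted(scanned.items()):
        # An empty label is treated like a missing one.
        groups.setdefault(label or WELCOME_GROUP, []).append(mc_id)
    return groups


def to_contact(scanned: Dict[str, Optional[str]], group: str) -> List[str]:
    """Components the Interaction Manager would contact for a given group."""
    return assign_groups(scanned).get(group, [])


scanned = {"mc-tv": "living-room", "mc-lamp": "living-room", "mc-new": None}
print(assign_groups(scanned))
print(to_contact(scanned, "living-room"))  # ['mc-lamp', 'mc-tv']
```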

In active discovery, the Interaction Manager can initiate a multicast, anycast or broadcast request, or use any other push mechanism, depending on the underlying networking mechanism implemented. This should be done via the Status event or the ExtensionNotification event defined by the MMI Architecture.
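Active discovery can be illustrated with an in-process Python stand-in for a multicast group, in which a single send from the Interaction Manager reaches every member and each member decides whether to reply. Here a StatusRequest is used as the probe. A real deployment would use multicast sockets and a reply timeout; the `modality` filter carried in the probe, the addresses and all names are hypothetical and not part of the MMI Architecture.

```python
from typing import Callable, Dict, List, Optional

Event = Dict[str, str]
Handler = Callable[[Event], Optional[Event]]


class SimulatedMulticastGroup:
    """One send() reaches every member, like a multicast request."""

    def __init__(self) -> None:
        self._members: Dict[str, Handler] = {}

    def join(self, address: str, handler: Handler) -> None:
        self._members[address] = handler

    def send(self, event: Event) -> List[Event]:
        replies = []
        for handler in self._members.values():
            reply = handler(event)
            if reply is not None:  # members may ignore the probe
                replies.append(reply)
        return replies


def responder(address: str, modality: str) -> Handler:
    def handle(event: Event) -> Optional[Event]:
        wanted = event.get("modality")  # hypothetical filter field
        if event.get("name") != "StatusRequest" or wanted not in (None, modality):
            return None
        return {"name": "StatusResponse", "Source": address,
                "Status": "alive", "modality": modality}
    return handle


group = SimulatedMulticastGroup()
group.join("mc://mic", responder("mc://mic", "audio"))
group.join("mc://cam", responder("mc://cam", "video"))
replies = group.send({"name": "StatusRequest", "Source": "im://home",
                      "modality": "audio"})
print([r["Source"] for r in replies])  # ['mc://mic']
```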

Finally, in passive discovery, the Interaction Manager can listen on a known port for advertisement messages coming from Modality Components over the network. In this case, the Interaction Manager may provide some directory Service features (e.g. acting as a Jini Lookup Service or a UPnP control point), looking for Modality Component announcements, which should be published with the Status event or the ExtensionNotification event. More precise details of the discovery protocols are out of scope for this document and are implementation dependent.

4.4 Registration

The Interaction Manager is currently used to support Modality Component registration through Context requests. We propose extending the Interaction Manager to handle registration and discovery, which avoids the complexity of an approach that would add a new Component.

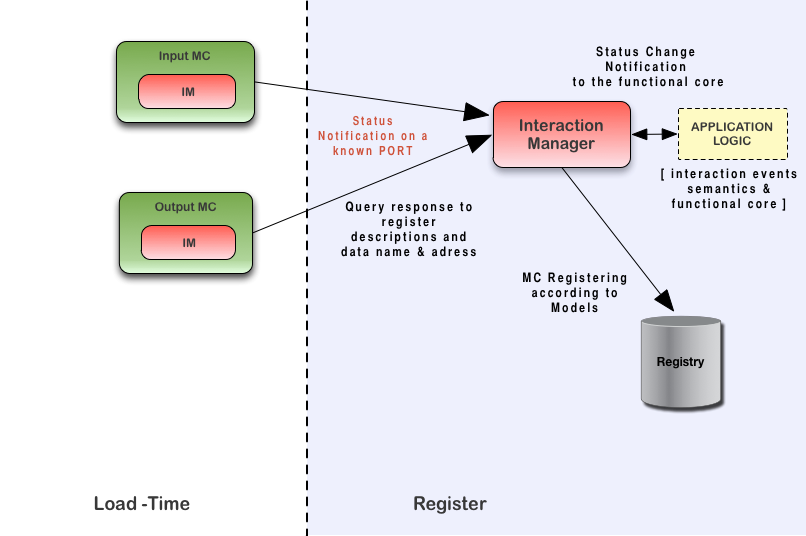

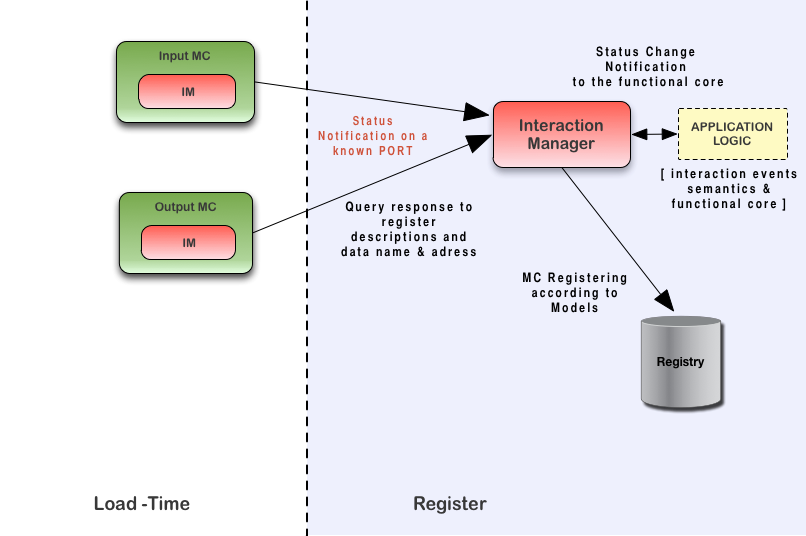

Figure 3 shows a possible example of registration in which a Modality Component uses a StatusRequest/StatusResponse pair to register its description on a known port. This is a case of fixed discovery in which a Modality Component announces its presence to the Interaction Manager, and the Interaction Manager then inserts this information into some registry according to the implemented data model.

Figure 3: Modality Components using a StatusRequest/StatusResponse pair to register their descriptions

Whether registration is soft-state or hard-state depends on the Modality Component's lifetime information. In soft-state advertisement, registration renewal is needed. This renewal mechanism should be implemented with features proposed in the MMI Architecture, such as the RequestAutomaticUpdate attribute or the ExtensionNotification event. Explicit de-registration, on the other hand, should be implemented using the Status event.
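The renewal and de-registration rules above can be sketched as an event handler over a soft-state registry. In this Python sketch, any StatusResponse or ExtensionNotification received from a component (for instance an automatic update requested with RequestAutomaticUpdate) registers it or extends its lease, while a Status of 'dead' removes it immediately. The field names, the fixed lifetime and the treatment of an ExtensionNotification as a renewal are assumptions made for illustration.

```python
from typing import Dict, List


class Registrations:
    """Applies incoming MMI-style events to soft-state registrations."""

    def __init__(self, lifetime: float) -> None:
        self.lifetime = lifetime
        self.expires_at: Dict[str, float] = {}

    def on_event(self, event: Dict[str, str], now: float) -> None:
        if event["name"] not in ("StatusResponse", "ExtensionNotification"):
            return  # other life-cycle events do not affect registration
        source = event["Source"]
        if event.get("Status") == "dead":
            self.expires_at.pop(source, None)  # explicit de-registration
        else:
            self.expires_at[source] = now + self.lifetime  # (re)new the lease

    def registered(self, now: float) -> List[str]:
        return sorted(s for s, t in self.expires_at.items() if t > now)


reg = Registrations(lifetime=10.0)
reg.on_event({"name": "StatusResponse", "Source": "mc://a", "Status": "alive"}, now=0.0)
reg.on_event({"name": "StatusResponse", "Source": "mc://b", "Status": "alive"}, now=0.0)
reg.on_event({"name": "ExtensionNotification", "Source": "mc://a"}, now=8.0)
reg.on_event({"name": "StatusResponse", "Source": "mc://b", "Status": "dead"}, now=9.0)
print(reg.registered(now=12.0))  # ['mc://a']
```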

Because the MMI Architecture follows the 'Russian Doll' model, distributing Data Components in nested Modality Components facilitates the implementation of a multi-node registry, a mechanism also available in UDDI, Jini and SLP. In this way, registry load balancing can be addressed using the nested Data Component structure, although this will also depend on the implementation.
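A multi-node registry that follows this nested structure can be sketched in Python as a tree of registry nodes, where the registry of a complex Modality Component is a child of its parent's registry and a lookup searches a node and then its children. Starting a lookup at an inner node only searches that subtree, which is one way the load could be spread across nodes. The node names, attributes and path format are hypothetical.

```python
from typing import Dict, List


class RegistryNode:
    """A Data Component registry that may contain nested child registries."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.entries: Dict[str, Dict[str, str]] = {}
        self.children: List["RegistryNode"] = []

    def add_child(self, child: "RegistryNode") -> "RegistryNode":
        self.children.append(child)
        return child

    def lookup(self, **criteria: str) -> List[str]:
        """Depth-first search returning 'node/.../mc_id' paths of matches."""
        hits = [f"{self.name}/{mc_id}" for mc_id, desc in self.entries.items()
                if all(desc.get(k) == v for k, v in criteria.items())]
        for child in self.children:
            hits.extend(f"{self.name}/{path}" for path in child.lookup(**criteria))
        return hits


root = RegistryNode("home")
root.entries["tv"] = {"modality": "video"}
kitchen = root.add_child(RegistryNode("kitchen"))
kitchen.entries["mic"] = {"modality": "audio"}
print(root.lookup(modality="audio"))  # ['home/kitchen/mic']
print(kitchen.lookup())               # ['kitchen/mic']
```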

4.5 Querying

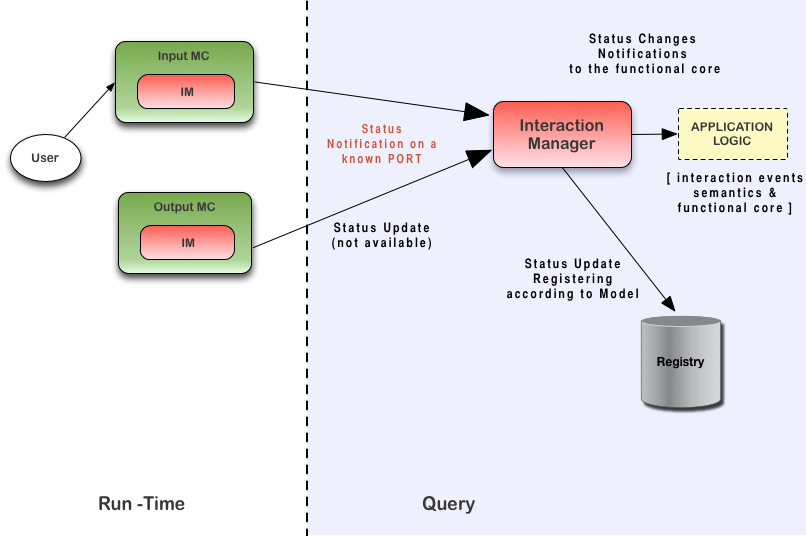

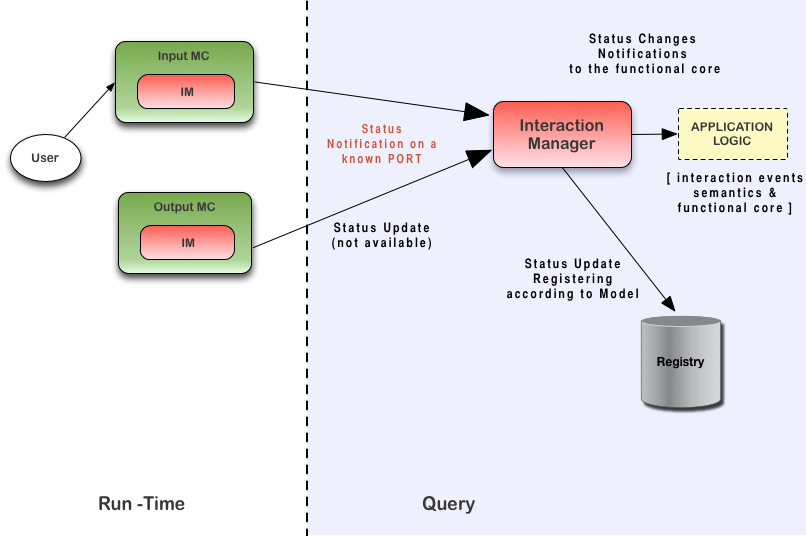

In the MMI Architecture, the query lifecycle must be handled with the Interaction Life-cycle Events protocol. Monitoring the state of a Modality Component is a shared responsibility of the Modality Component and the Interaction Manager. These components should use StatusRequest/StatusResponse or ExtensionNotification events to query updates, as shown in Figure 4. The expressiveness of the discovery query and the format of the query result can be defined by the Interaction Life-cycle Events, while the routing model depends on the implementation and is out of the scope of this document.

Figure 4: Modality Components and Interaction Manager using StatusRequest/StatusResponse or ExtensionNotification events to query updates

In the case of a centralized registry implementation, the correctness of the query result can be ensured by a pattern-matching mechanism that filters and selects Modality Components based on the Modality Component Descriptions stored in the Data Component registry.
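The document does not fix a particular pattern language. As one possible choice, this Python sketch filters stored Descriptions with shell-style wildcards from the standard `fnmatch` module, so that `audio/*` matches any audio Media type. The registry contents and attribute names are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Dict, List


def pattern_query(registry: Dict[str, Dict[str, str]],
                  patterns: Dict[str, str]) -> List[str]:
    """Return ids whose Description matches every attribute pattern.

    '*' matches any run of characters and '?' a single character;
    a component lacking a queried attribute never matches.
    """
    return sorted(
        mc_id
        for mc_id, desc in registry.items()
        if all(attr in desc and fnmatchcase(desc[attr], pat)
               for attr, pat in patterns.items())
    )


registry = {
    "mc-1": {"type": "audio-recognizer", "mime": "audio/wav"},
    "mc-2": {"type": "video-recognizer", "mime": "video/mp4"},
    "mc-3": {"type": "tts", "mime": "audio/mpeg"},
}
print(pattern_query(registry, {"type": "*-recognizer"}))  # ['mc-1', 'mc-2']
print(pattern_query(registry, {"mime": "audio/*"}))       # ['mc-1', 'mc-3']
```

`fnmatchcase` is used rather than `fnmatch` so that matching is case-sensitive on every platform.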

In the case of a distributed implementation in federated groups (e.g. by modality type, capability, scope, zones, DNS subgroups or intent categories), the correctness of the query result is limited to the administrative boundary defined by the underlying networking mechanism (e.g. UPnP or SLP), which is also out of the scope of this document.

4.6 Open Issues

During this work, the MMI Discovery and Registration Subgroup did not have time to explore topics such as fault tolerance in Modality Component nodes or in Modality Component communication during discovery, registration or state updates. Consistency maintenance is a subject of great interest, and the MMI Discovery and Registration Subgroup suggests that the MMI Working Group further investigate this topic from the point of view of the MMI Architecture, possibly using specified features such as the RequestAutomaticUpdate attribute or the ExtensionNotification event, and Modality Component states.

5.1 Web and TV Interest Group

The Web and TV Interest Group's work covers

audio-visual content, e.g., broadcasting programs and Web pages,

and related services delivered by satellite and terrestrial

broadcasting, or via cable services, as well as delivery through

IP. This group is currently working on service discovery for home-networking scenarios from the perspective of content provision.

5.2 Web Intents Task Force

The Web Intents Task Force works on a joint deliverable of the

WebApps and Device APIs WGs that aims to produce a way for web

applications to register themselves as handlers for services, and

for other web applications to interact with such services (which

may have been registered through Intents or in other

implementation-specific manners) through simple requests brokered

by the user agent (a system commonly known as Intents, or

Activities). This Task Force is currently working on registration for a limited set of modalities, from a unimodal perspective oriented toward process announcement and description.

This working group covers the domain of web accessibility. Its

objective is to explore how to make web content usable by persons

with disabilities. The Accessible Rich Internet Applications

Candidate Recommendation is primarily focused on developers of Web

browsers, assistive technologies, and other user agents; developers

of Web technologies (technical specifications); and developers of

accessibility evaluation tools. WAI-ARIA provides a framework for

adding attributes to identify features for user interaction, how

they relate to each other, and their current state. WAI-ARIA

describes new navigation techniques to mark regions and common Web

structures as menus, primary content, secondary content, banner

information, and other types of Web structures. This work is mostly oriented toward the annotation of web content, web UI components and web events.

A. References

A.1 Key References

- [ECMA-262]

- ECMA International.

ECMAScript Language Specification, Third Edition.

December 1999. URL:

http://www.ecma-international.org/publications/standards/Ecma-262.htm

- [EMMA]

- Michael Johnston. EMMA:

Extensible MultiModal Annotation markup language. 10

February 2009. W3C Recommendation. URL: http://www.w3.org/TR/2009/REC-emma-20090210/

- [MMI-ARCH]

- Jim Barnett. Multimodal

Architecture and Interfaces. 12 January 2012. W3C

Candidate Recommendation. URL: http://www.w3.org/TR/2012/CR-mmi-arch-20120112/

- [SLP]

- E. Guttman et al. RFC 2608: Service Location Protocol, Version 2. 1999. URL: http://www.ietf.org/rfc/rfc2608.txt

- [WSDL]

- Roberto Chinnici, Jean-Jacques Moreau, Arthur Ryman, Sanjiva Weerawarana. Web Services Description Language (WSDL) Version 2.0. 26 June 2007. URL: http://www.w3.org/TR/wsdl20/

- [XML]

- Tim Bray, Jean Paoli, C.M. Sperberg-McQueen, Eve Maler, John

Cowan, François Yergeau. XML Extensible

Markup Language, XML 1.1, 4 February 2004. URL: http://www.w3.org/TR/xml11/

A.2 Other References

- [A-INTENT]

- Google.

Android Intent Class Documentation Available at

URL:

http://developer.android.com/reference/android/content/Intent.html

- [ARIA]

- James Craig et al.

Accessible Rich Internet Applications WAI-ARIA

version 1.0. W3C Working Draft, 16 September 2010. Available at

URL: http://www.w3.org/TR/wai-aria/

- [ASN.1]

- John Larmouth, Douglas Steedman, James E. White. ASN.1. International Telecommunication Union. ISO/IEC 8824-1 to 8824-4. Available at URL: http://www.itu.int/ITU-T/asn1/introduction/index.htm

- [ECHONET]

- Echonet Consortium.

Detailed stipulation for Echonet Device Objects

Available at URL:

http://www.echonet.gr.jp/english/spec/pdf/spec_v1e_appendix.pdf

- [EMOTION-USE]

- Marc Schröder, Enrico Zovato, Hannes Pirker,

Christian Peter, Felix Burkhardt.

Emotion Incubator Group Use Cases Available at

URL:

http://www.w3.org/2005/Incubator/emotion/XGR-emotion/#AppendixUseCases

- [IANA]

- IANA. IANA Domain Name Service. ICANN - Internet Assigned Numbers Authority (IANA). Available at URL: http://www.iana.org/

- [INS]

- William Adjie-Winoto, Elliot Schwartz, Hari Balakrishnan,

Jeremy Lilley. INS:

Intentional Naming System Available at URL: http://nms.csail.mit.edu/projects/ins/

- [JINI]

- Sun Microsystems JINI /

RIVER Apache River (formerly JINI). Available at URL: http://river.apache.org/about.html

- [INS/TWINE]

- Magdalena Balazinska, Hari Balakrishnan, David Karger. INS/TWINE. Available at URL: http://nms.csail.mit.edu/projects/twine/

- [JXTA]

- Sun Microsystems. JXTA (Juxtapose) Protocols Specification v2.0. 16 October 2007. Available at URL: http://jxta.kenai.com/Specifications/JXTAProtocols2_0.pdf

- [METEOR-S]

- Kunal Verma, Karthik Gomadam, Amit P. Sheth, John A. Miller,

Zixin Wu. METEOR-S:

Semantic Web Services and Processes Technical Report,

06/24/2005. Available at URL: http://lsdis.cs.uga.edu/projects/meteor-s/

- [OGSA]

- I. Foster, Argonne et al. OGSA

The Open Grid Services Architecture, 24 July 2006 Version 1.5.

Available at URL: http://www.ogf.org/documents/GFD.80.pdf

- [RES-DIS]

- Reaz Ahmed et al. Resource and Service Discovery in Large-Scale Multi-Domain Networks. In IEEE Communications Surveys & Tutorials, 4th Quarter 2007, Vol. 9. Available at URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=5473882&isnumber=5764312

- [UDDI]

- Luc Clement, Andrew Hately, Claus von Riegen, Tony Rogers. UDDI Spec

Technical Committee Draft, Version 3.0.2. Dated 2004/10/19.

Available at URL: http://uddi.org/pubs/uddi_v3.htm

- [UPNP-DEVICE]

- UPnP Forum.

UPnP Device Architecture 1.0 Version 1.0, 15

October 2008. PDF document. URL:

http://www.upnp.org/specs/arch/UPnP-arch-DeviceArchitecture-v1.0-20081015.pdf

- [UPNP-CONTENT]

- UPnP Forum.

ContentDirectory:4 Service Standardized DCP.

Version 1.0, 31 December 2010. PDF document. URL:

http://www.upnp.org/specs/av/UPnP-av-ContentDirectory-v4-Service-20101231.pdf

- [WEB-INTENTS]

- Paul Kinlan. Web

Intents. Available at URL: http://webintents.org/