Status of this Document

This section describes the status of this document at the time of its publication. Other documents may supersede this document. A list of current W3C publications and the latest revision of this technical report can be found in the W3C technical reports index at http://www.w3.org/TR/.

This is the 12 January 2012 W3C Candidate Recommendation of "Multimodal Architecture and Interfaces". W3C publishes a technical report as a Candidate Recommendation to indicate that the document is believed to be stable, and to encourage implementation by the developer community.

This document has been produced as part of the W3C Multimodal Interaction Activity, following the procedures set out for the W3C Process.

The authors of this document are members of the Multimodal Interaction Working Group.

This document was produced by a group operating under the 5 February 2004 W3C Patent Policy. W3C maintains a public list of any patent disclosures made in connection with the deliverables of the group; that page also includes instructions for disclosing a patent. An individual who has actual knowledge of a patent which the individual believes contains Essential Claim(s) must disclose the information in accordance with section 6 of the W3C Patent Policy.

Publication as a Candidate Recommendation does not imply endorsement by the W3C Membership. This is a draft document and may be updated, replaced or obsoleted by other documents at any time. It is inappropriate to cite this document as other than work in progress.

There is no normative change since the second Last Call Working Draft in September 2011, though several clarifications had been added to the second Last Call Working Draft in order to address detailed feedback from the Multimodal public list. Please check the Disposition of Comments received during the first Last Call period. A diff-marked version of this document is also available for comparison purposes.

The entrance criteria to the Proposed Recommendation phase require at least two independently developed interoperable implementations of each feature. Detailed implementation requirements and the invitation for participation in the Implementation Report are provided in the Implementation Report Plan. We expect to meet all requirements of that report within the Candidate Recommendation period closing 29 February 2012. The Multimodal Interaction Working Group will advance Multimodal Architecture and Interfaces to Proposed Recommendation no sooner than 29 February 2012.

The following features in the current draft specification are considered to be at risk of removal due to potential lack of implementations:

- The Confidential field in all life-cycle events.

- The RequestAutomaticUpdate field of the StatusRequest message.

- The AutomaticUpdate field of the StatusResponse message.

Comments for this specification are welcome until 29 February 2012 and should have a subject starting with the prefix '[ARCH]'. Please send them to <www-multimodal@w3.org>, the public email list for issues related to Multimodal. This list is archived and acceptance of this archiving policy is requested automatically upon first post. To subscribe to this list, send an email to <www-multimodal-request@w3.org> with the word subscribe in the subject line.

For more information about the Multimodal Interaction Activity, please see the Multimodal Interaction Activity statement.

1 Conformance Requirements

An implementation is conformant with the MMI Architecture if it consists of one or more software constituents that are conformant with the MMI Life-Cycle Event specification.

A constituent is conformant with the MMI Life-Cycle Event specification if it supports the Life-Cycle Event interface between the Interaction Manager and the Modality Component defined in 6 Interface between the Interaction Manager and the Modality Components. To support the Life-Cycle Event interface, a constituent must be able to handle all Life-Cycle events defined in 6.2 Standard Life Cycle Events, either as an Interaction Manager, as a Modality Component, or as both.

Transport and format of Life-Cycle Event messages may be implemented in any manner, as long as their contents conform to the standard Life-Cycle Event definitions given in 6.2 Standard Life Cycle Events. Any implementation that uses XML format to represent the life-cycle events must comply with the normative MMI XML schemas contained in C Event Schemas.

The key words MUST, MUST NOT, REQUIRED, SHALL, SHALL NOT, SHOULD, SHOULD NOT, RECOMMENDED, MAY, and OPTIONAL in this specification are to be interpreted as described in [IETF RFC 2119].

The terms BASE URI and RELATIVE URI are used in this specification as they are defined in [IETF RFC 2396].

Any section that is not marked as 'informative' is normative.

2 Summary

This section is informative.

This document describes a loosely coupled architecture for multimodal user interfaces, which allows for co-resident and distributed implementations, and focuses on the role of markup and scripting, and the use of well-defined interfaces between its constituents.

3 Overview

This section is informative.

This document describes the architecture of the Multimodal Interaction (MMI) framework [MMIF] and the interfaces between its constituents. The MMI Working Group is aware that multimodal interfaces are an area of active research and that commercial implementations are only beginning to emerge. Therefore we do not view our goal as standardizing a hypothetical existing common practice, but rather as providing a platform to facilitate innovation and technical development. Thus the aim of this design is to provide a general and flexible framework that provides interoperability among modality-specific components from different vendors, for example, speech recognition from one vendor and handwriting recognition from another. This framework places very few restrictions on the individual components, but instead focuses on providing a general means for communication, plus basic infrastructure for application control and platform services.

Our framework is motivated by several basic design goals:

- Encapsulation. The architecture should make no assumptions about the internal implementation of components, which will be treated as black boxes.

- Distribution. The architecture should support both distributed and co-hosted implementations.

- Extensibility. The architecture should facilitate the integration of new modality components. For example, given an existing implementation with voice and graphics components, it should be possible to add a new component (for example, a biometric security component) without modifying the existing components.

- Recursiveness. The architecture should allow for nesting, so that an instance of the framework consisting of several components can be packaged up to appear as a single component to a higher-level instance of the architecture.

- Modularity. The architecture should provide for the separation of data, control, and presentation.

Even though multimodal interfaces are not yet common, the software industry as a whole has considerable experience with architectures that can accomplish these goals. Since the 1980s, for example, distributed message-based systems have been common. They have been used for a wide range of tasks, including in particular high-end telephony systems. In this paradigm, the overall system is divided up into individual components which communicate by sending messages over the network. Since the messages are the only means of communication, the internals of components are hidden and the system may be deployed in a variety of topologies, either distributed or co-located. One specific instance of this type of system is the DARPA Hub Architecture, also known as the Galaxy Communicator Software Infrastructure [Galaxy]. This is a distributed, message-based, hub-and-spoke infrastructure designed for constructing spoken dialogue systems. It was developed in the late 1990s and early 2000s under funding from DARPA. This infrastructure includes a program called the Hub, together with servers which provide functions such as speech recognition, natural language processing, and dialogue management. The servers communicate with the Hub and with each other using key-value structures called frames.

Another architecture that is relevant to our concerns is the model-view-controller (MVC) paradigm. This is a well-known design pattern for user interfaces in object-oriented programming languages, and has been widely used with languages such as Java, Smalltalk, C, and C++. The design pattern proposes three main parts: a Data Model that represents the underlying logical structure of the data and associated integrity constraints, one or more Views which correspond to the objects that the user directly interacts with, and a Controller which sits between the data model and the views. The separation between data and user interface provides considerable flexibility in how the data is presented and how the user interacts with that data. While the MVC paradigm has been traditionally applied to graphical user interfaces, it lends itself to the broader context of multimodal interaction where the user is able to use a combination of visual, aural and tactile modalities.

4 Design versus Run-Time considerations

This section is informative.

In discussing the design of MMI systems, it is important to keep in mind the distinction between the design-time view (i.e., the markup) and the run-time view (the software that executes the markup). At the design level, we assume that multimodal applications will take the form of multiple documents from different namespaces. In many cases, the different namespaces and markup languages will correspond to different modalities, but we do not require this. A single language may cover multiple modalities and there may be multiple languages for a single modality.

At runtime, the MMI architecture features loosely coupled software constituents that may be either co-resident on a device or distributed across a network. In keeping with the loosely-coupled nature of the architecture, the constituents do not share context and communicate only by exchanging events. The nature of these constituents and the APIs between them is discussed in more detail in 5 Overview of Architecture and 6 Interface between the Interaction Manager and the Modality Components, below. Though nothing in the MMI architecture requires that there be any particular correspondence between the design-time and run-time views, in many cases there will be a specific software component responsible for each different markup language (namespace).

4.1 Markup and The Design-Time View

At the markup level, an application consists of multiple documents. A single document may contain markup from different namespaces if the interaction of those namespaces has been defined. By the principle of encapsulation, however, the internal structure of documents is invisible at the MMI level, which defines only how the different documents communicate. One document has a special status, namely the Root or Controller Document, which contains markup defining the interaction between the other documents. Such markup is called Interaction Manager markup. The other documents are called Presentation Documents, since they contain markup to interact directly with the user. The Controller Document may consist solely of Interaction Manager markup (for example a state machine defined in CCXML [CCXML] or SCXML [SCXML]) or it may contain Interaction Manager markup combined with presentation or other markup. As an example of the latter design, consider a multimodal application in which a CCXML document provides call control functionality as well as the flow control for the various Presentation Documents. Similarly, an SCXML flow control document could contain embedded presentation markup in addition to its native Interaction Manager markup.

These relationships are recursive, so that any Presentation Document may serve as the Controller Document for another set of documents. This nested structure is similar to the 'Russian Doll' model of Modality Components, described below in 4.2 Software Constituents and The Run-Time View.

The different documents are loosely coupled and co-exist without interacting directly. Note in particular that there are no shared variables that could be used to pass information between them. Instead, all runtime communication is handled by events, as described below in 6 Interface between the Interaction Manager and the Modality Components. Note, however, that this applies only to non-root documents. The IM, which loads the root document, interacts directly, through life-cycle events, with the Modality Components, which hold the other documents and/or namespaces.

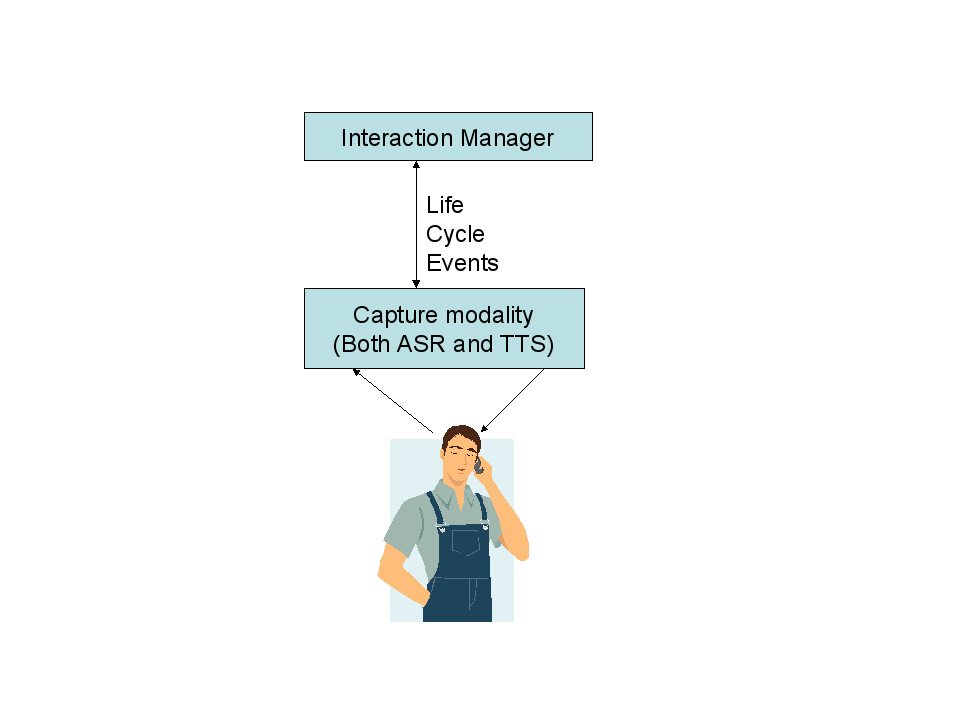

Furthermore, it is important to note that the asynchronicity of the underlying communication mechanism does not impose the requirement that the markup languages present a purely asynchronous programming model to the developer. Given the principle of encapsulation, markup languages are not required to reflect directly the architecture and APIs defined here. As an example, consider an implementation containing a Modality Component providing Text-to-Speech (TTS) functionality. This Component must communicate with the Interaction Manager via asynchronous events (see 4.2 Software Constituents and The Run-Time View). In a typical implementation, there would likely be events to start a TTS play and to report the end of the play, etc. However, the markup and scripts that were used to author this system might well offer only a synchronous "play TTS" call, it being the job of the underlying implementation to convert that synchronous call into the appropriate sequence of asynchronous events. In fact, there is no requirement that the TTS resource be individually accessible at all. It would be quite possible for the markup to present only a single "play TTS and do speech recognition" call, which the underlying implementation would realize as a series of asynchronous events involving multiple Components.
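
The TTS example can be made concrete with a small Python sketch of a synchronous facade over asynchronous events. It is an illustration under stated assumptions, not part of the architecture: the Event class, FakeTTSComponent and play_tts() are hypothetical; the event names StartRequest, StartResponse and DoneNotification are assumed to follow the life-cycle events of 6.2 Standard Life Cycle Events; and an in-process queue stands in for the real, asynchronous Event Transport Layer.

```python
import queue
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Event:
    # Hypothetical minimal event shape, just enough for this sketch.
    name: str
    context: str
    request_id: str
    data: Dict[str, str] = field(default_factory=dict)


class FakeTTSComponent:
    """Hypothetical TTS Modality Component: it answers a StartRequest with a
    StartResponse and then, when playback "ends", a DoneNotification."""

    def __init__(self, outbound: "queue.Queue[Event]") -> None:
        self.outbound = outbound  # events travelling back to the caller

    def send(self, event: Event) -> None:
        if event.name == "StartRequest":
            self.outbound.put(Event("StartResponse", event.context, event.request_id))
            self.outbound.put(Event("DoneNotification", event.context, event.request_id))


def play_tts(text: str, component: FakeTTSComponent,
             inbound: "queue.Queue[Event]", context: str, request_id: str) -> List[str]:
    """Synchronous facade: emit one request, then block until the matching
    DoneNotification arrives. Returns the names of the events observed."""
    component.send(Event("StartRequest", context, request_id, {"text": text}))
    seen: List[str] = []
    while True:
        event = inbound.get(timeout=5)  # raises queue.Empty if nothing arrives
        seen.append(event.name)
        if event.name == "DoneNotification" and event.context == context:
            return seen


inbox: "queue.Queue[Event]" = queue.Queue()
tts = FakeTTSComponent(inbox)
print(play_tts("Hello", tts, inbox, "urn:example:ctx-1", "request-1"))
# ['StartResponse', 'DoneNotification']
```

Because the waiting happens inside play_tts(), markup that calls it sees only a blocking call, while the component still exchanges ordinary asynchronous events.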

Existing languages such as HTML may be used either as Controller Documents or as Presentation Documents. Further examples of potential markup components are given in 5.2.7 Examples.

4.2 Software Constituents and The Run-Time View

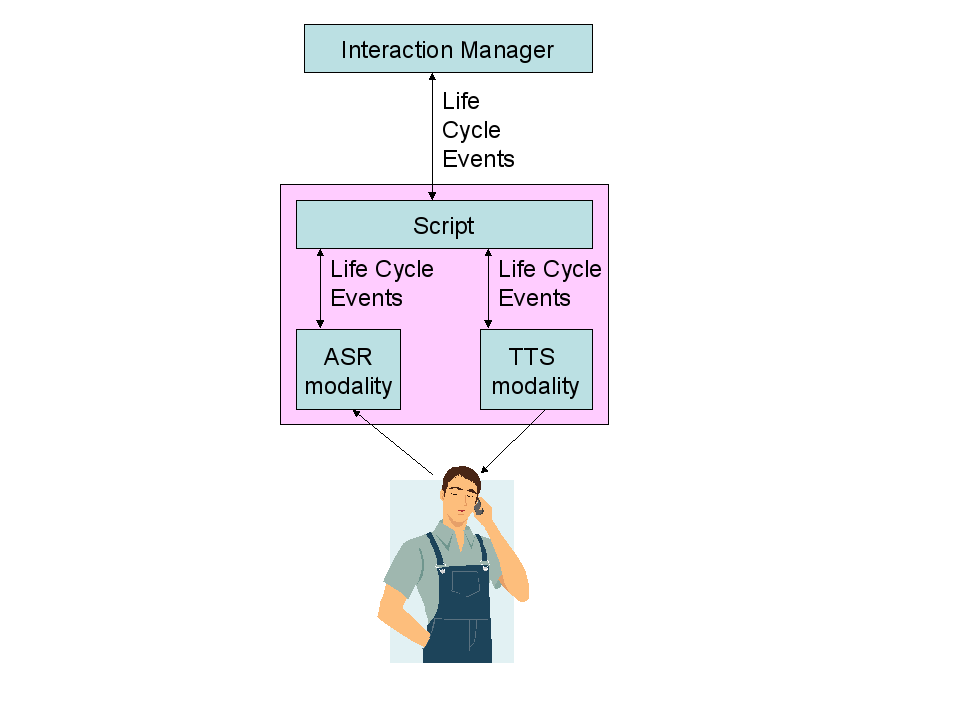

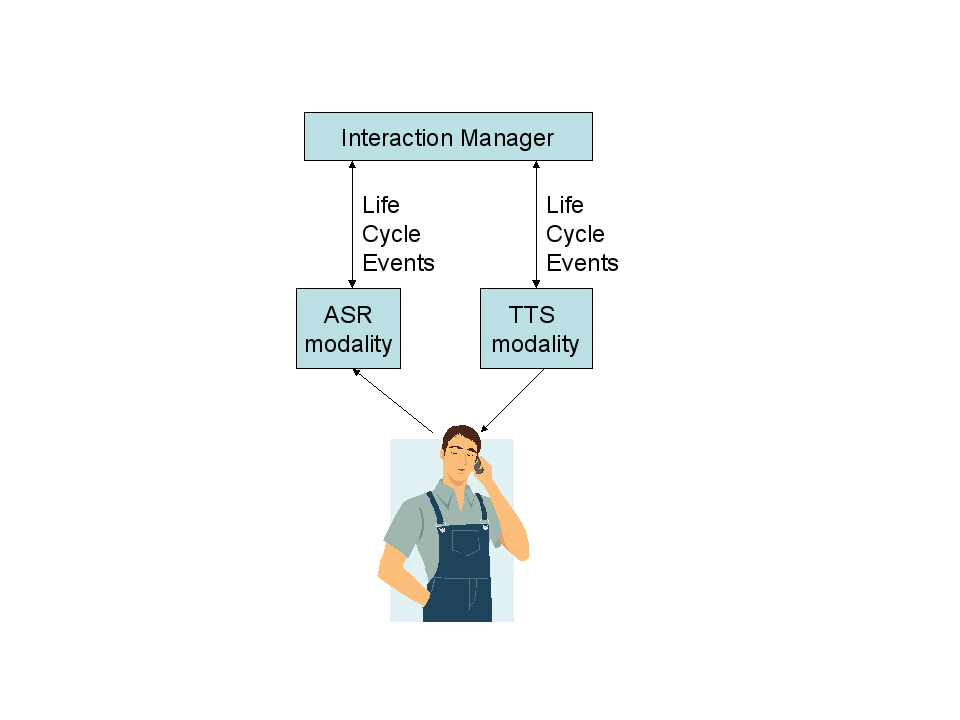

At the core of the MMI runtime architecture is the distinction between the Interaction Manager (IM) and the Modality Components, which is similar to the distinction between the Controller Document and the Presentation Documents. The Interaction Manager interprets the Controller Document while the individual Modality Components are responsible for specific tasks, particularly handling input and output in the various modalities, such as speech, pen, video, etc.

The Interaction Manager receives all the events that the various Modality Components generate. Those events may be commands or replies to commands, and it is up to the Interaction Manager to decide what to do with them, i.e., what events to generate in response to them. In general, the MMI architecture follows a 'targetless' event model. That is, the Component that raises an event does not specify its destination. Rather, it passes it up to the Runtime Framework, which will pass it to the Interaction Manager. The IM, in turn, decides whether to forward the event to other Components, to generate a different event, or to take some other action.
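
A minimal Python sketch of the targetless model follows. The class names, the routing rules and the event names (speechResult, showText, click, speak) are hypothetical; the point is only that raise_event() takes no destination and that every forwarding decision is made inside the Interaction Manager.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Event:
    name: str
    source: str       # who raised it; note there is no destination field
    payload: str = ""


class InteractionManager:
    """Hypothetical IM whose routing rules would normally come from markup."""

    def __init__(self) -> None:
        self.outbox: List[Tuple[str, Event]] = []  # (component, event) to deliver

    def handle(self, event: Event) -> None:
        # Only the IM decides what happens next: forward, transform, or drop.
        if event.name == "speechResult":
            self.outbox.append(("gui", Event("showText", "im", event.payload)))
        elif event.name == "click":
            self.outbox.append(("tts", Event("speak", "im", event.payload)))


class RuntimeFramework:
    def __init__(self, im: InteractionManager) -> None:
        self.im = im

    def raise_event(self, event: Event) -> None:
        # Components never address one another; every event goes to the IM.
        self.im.handle(event)


im = InteractionManager()
framework = RuntimeFramework(im)
framework.raise_event(Event("speechResult", "asr", "flights to Boston"))
framework.raise_event(Event("unknownEvent", "pen"))
target, forwarded = im.outbox[0]
print(target, forwarded.name, forwarded.payload)  # gui showText flights to Boston
```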

Modality Components are black boxes, required only to implement

the Modality Component Interface API which is described below. This

API allows the Modality Components to communicate with the IM and

thus indirectly with each other, since the IM is responsible for

delivering events/messages among the Components. Since the

internals of a Component are hidden, it is possible for an

Interaction Manager and a set of Components to present themselves

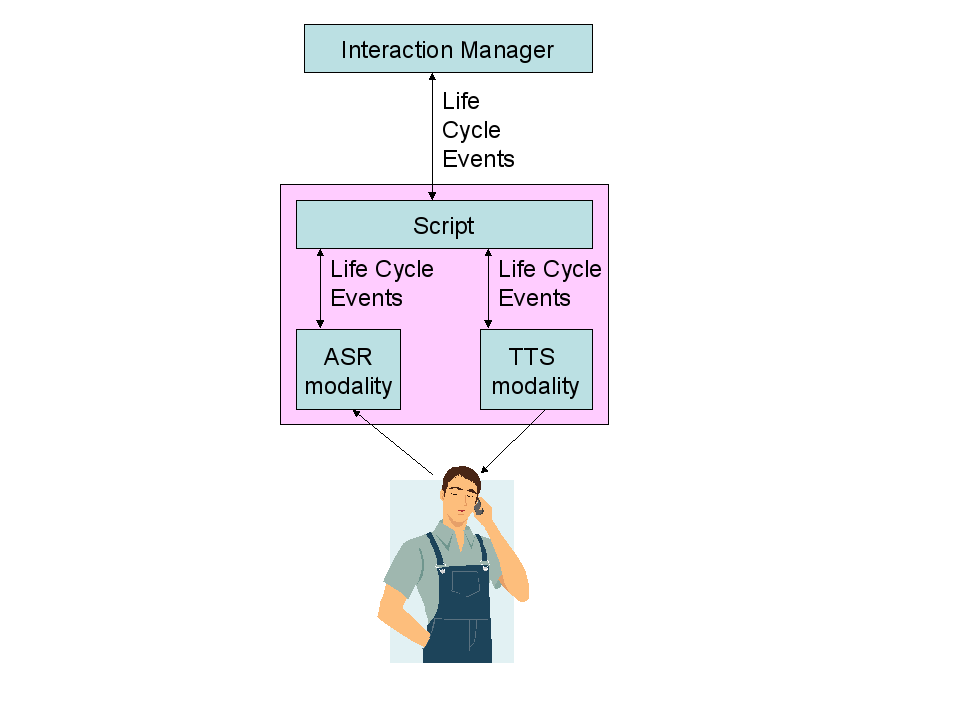

as a Component to a higher-level Interaction Manager. All that is

required is that the IM implement the Component API. The result is

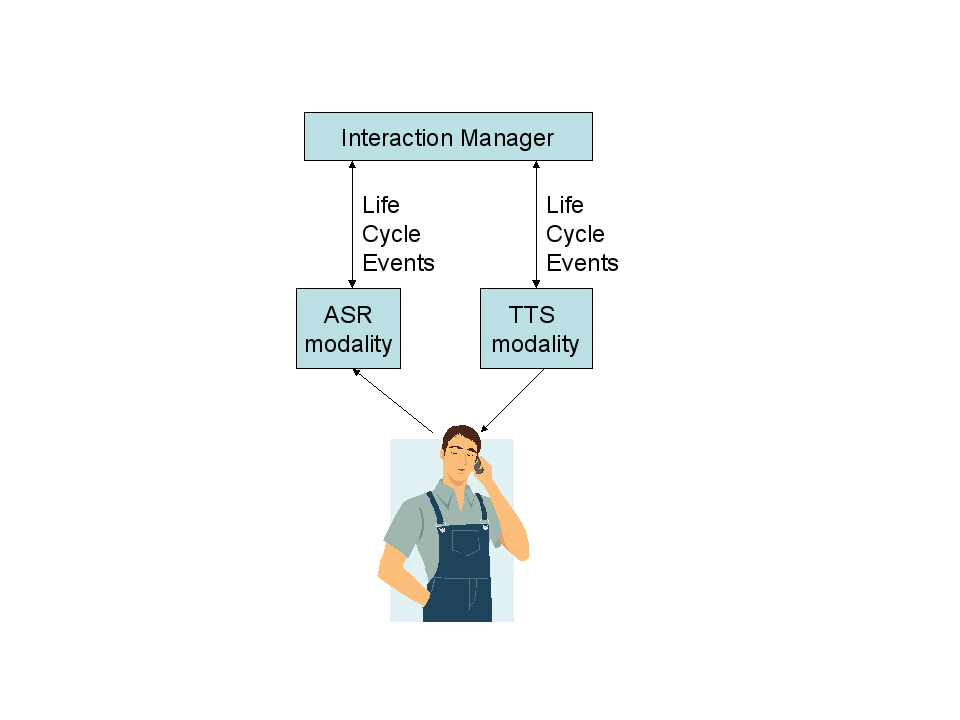

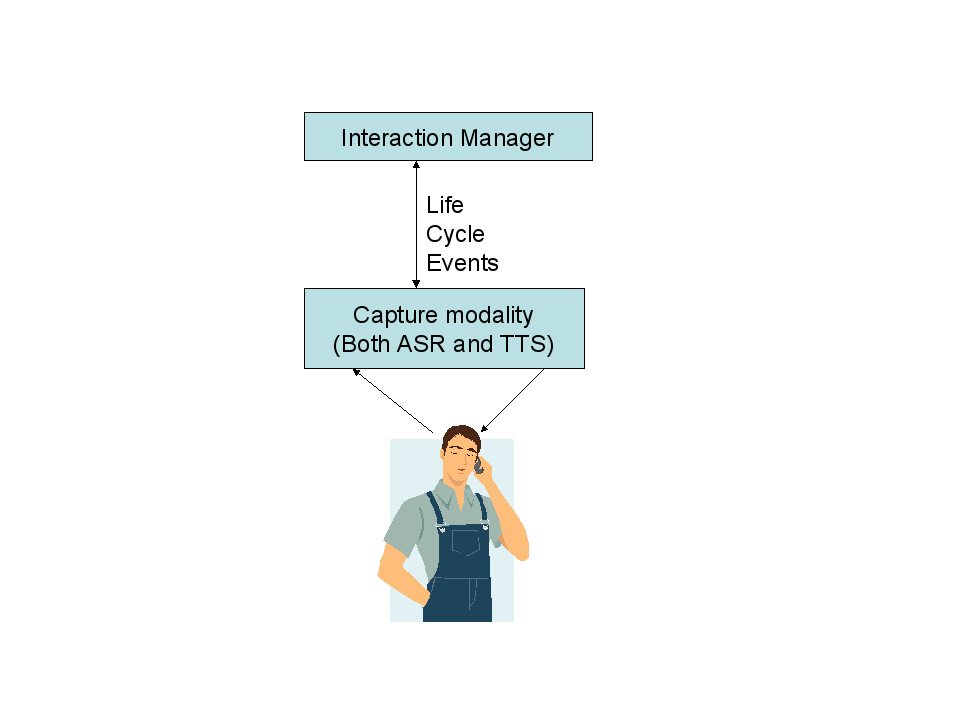

a "Russian Doll" model in which Components may be nested inside

other Components to an arbitrary depth. Nesting components in this

manner is one way to produce a 'complex' Modality Component, namely

one that handles multiple modalities simultaneously. However, it is

also possible to produce complex Modality Components without

nesting, as discussed in 5.2.3 The

Modality Components.
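
The nesting described above can be sketched in Python with a structural type standing in for the Component API. Everything here is hypothetical, and the synchronous return values are a simplification of the asynchronous event exchange the architecture actually uses; the point is that NestedComponent, which wraps an inner IM and its children, exposes the same handle() method as a leaf component, so the outer IM cannot tell the difference.

```python
from typing import List, Protocol


class Component(Protocol):
    """Hypothetical stand-in for the Modality Component Interface API."""

    def handle(self, event: str) -> List[str]: ...


class SpeechComponent:
    def handle(self, event: str) -> List[str]:
        return [f"speech:{event}"]


class PenComponent:
    def handle(self, event: str) -> List[str]:
        return [f"pen:{event}"]


class NestedComponent:
    """An inner Interaction Manager plus its children, packaged so that it
    exposes the same handle() method as any leaf component."""

    def __init__(self, children: List[Component]) -> None:
        self.children = children  # hidden from the outer IM

    def handle(self, event: str) -> List[str]:
        # The inner IM fans the event out; callers only see the combined result.
        results: List[str] = []
        for child in self.children:
            results.extend(child.handle(event))
        return results


class OuterInteractionManager:
    def __init__(self, components: List[Component]) -> None:
        self.components = components

    def broadcast(self, event: str) -> List[str]:
        results: List[str] = []
        for component in self.components:
            results.extend(component.handle(event))
        return results


inner = NestedComponent([SpeechComponent(), PenComponent()])
outer = OuterInteractionManager([inner, SpeechComponent()])
print(outer.broadcast("start"))  # ['speech:start', 'pen:start', 'speech:start']
```

Since NestedComponent itself satisfies Component, it can be placed inside another NestedComponent, which is the arbitrary-depth nesting the text describes.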

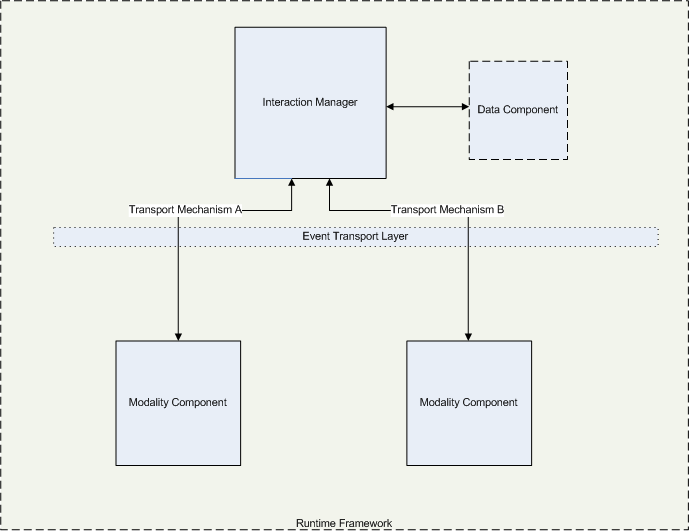

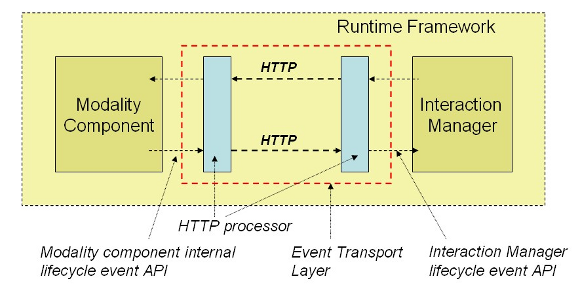

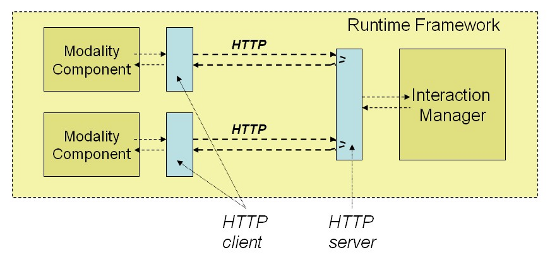

In addition to the Interaction Manager and the modality components, there is a Runtime Framework that provides infrastructure support, in particular a transport layer which delivers events among the components.

Because we are using the term 'Component' to refer to a specific set of entities in our architecture, we will use the term 'Constituent' as a cover term for all the elements in our architecture which might normally be called 'software components'.

4.3 Relationship to EMMA

The Extensible MultiModal Annotation markup language [EMMA] is a specification for multimodal systems that defines an XML markup language for containing and annotating the interpretation of user input. For example, a user of a multimodal application might use both speech to express a command and pen gestures to select or draw command parameters. The Speech Recognition Modality would express the user command using EMMA to indicate the input source (speech). The Pen Gesture Modality would express the command parameters using EMMA to indicate the input source (pen gestures). Both modalities may include timing information in the EMMA notation. Using the timing information, a fusion module combines the speech and pen gesture information into a single EMMA notation representing both the command and its parameters. The use of EMMA enables the separation of the recognition process from the information fusion process, and thus enables reusable recognition modalities and general-purpose information fusion algorithms.

5 Overview of Architecture

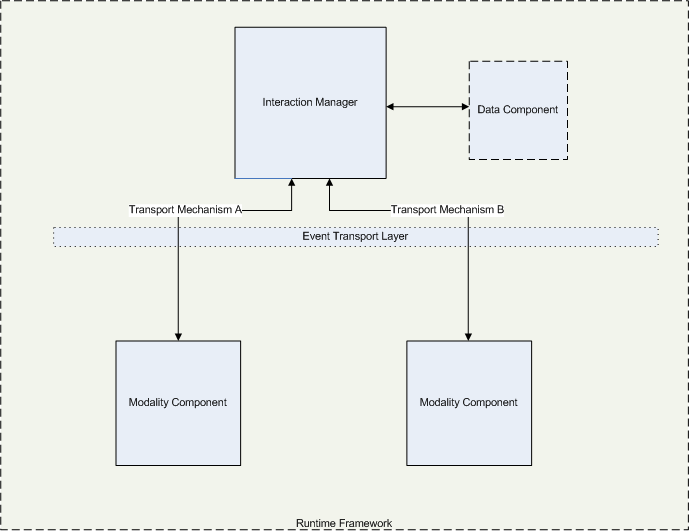

Here is a list of the Constituents of the MMI architecture. They are discussed in more detail below.

- the Interaction Manager, which coordinates the different modalities. It is the Controller in the MVC paradigm.

- the Data Component, which provides the common data model and represents the Model in the MVC paradigm.

- the Modality Components, which provide modality-specific interaction capabilities. They are the Views in the MVC paradigm.

- the Runtime Framework, which provides the basic infrastructure and enables communication among the other Constituents.

5.1 Run-Time

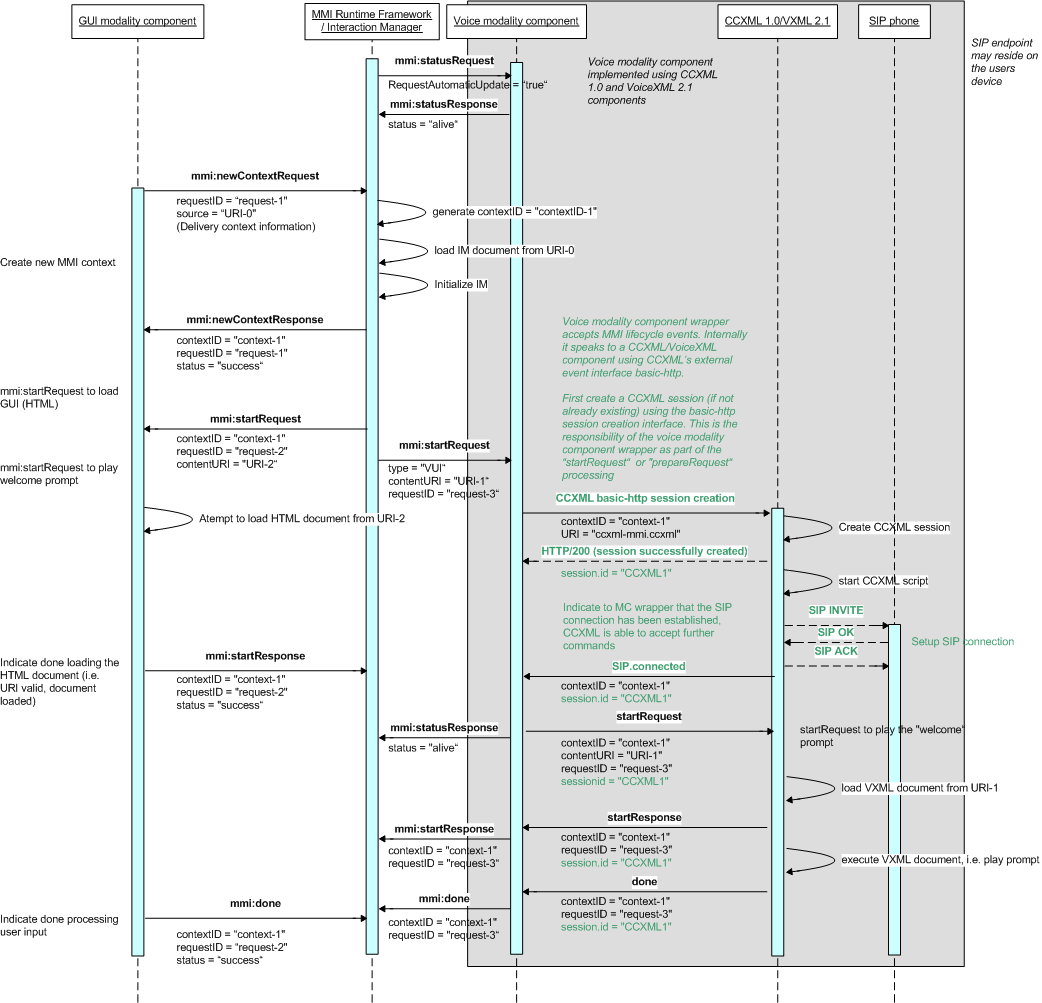

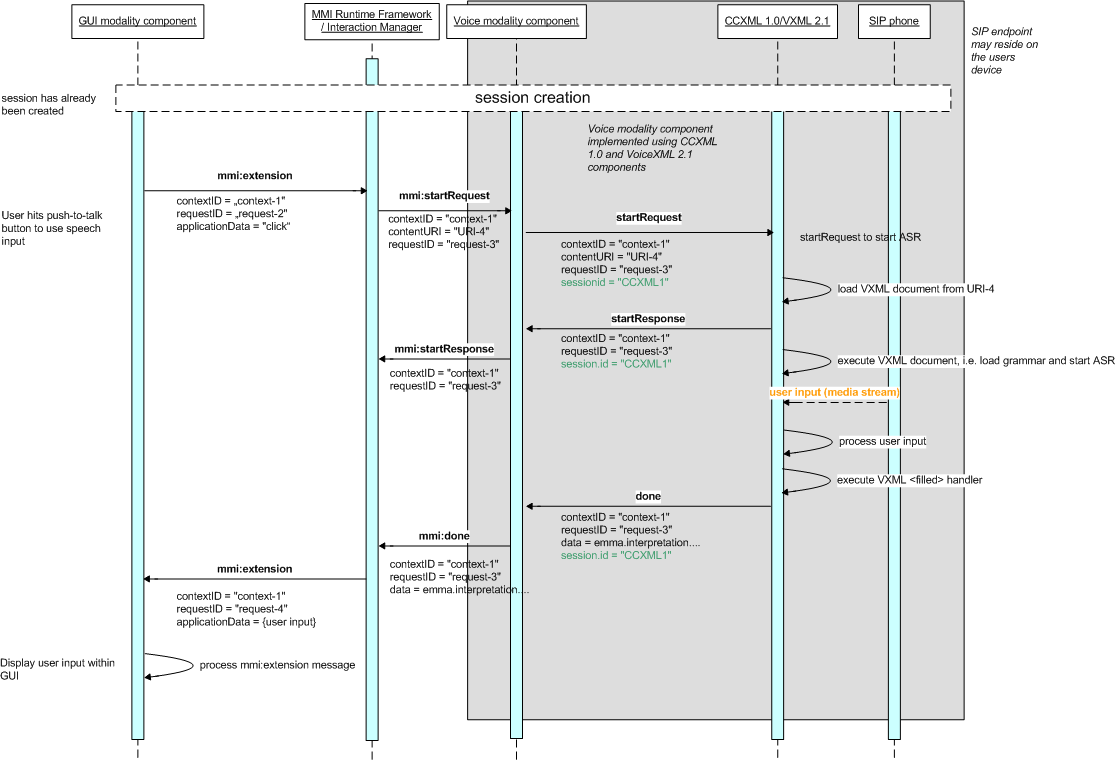

Architecture Diagram

5.2 The Constituents

This section presents the responsibilities of the various constituents of the MMI architecture.

5.2.1 The Interaction Manager

All life-cycle events that the Modality Components generate MUST be delivered to the Interaction Manager. All life-cycle events that are delivered to Modality Components MUST be sent by the Interaction Manager.

Due to the Russian Doll model, Modality Components MAY contain their own Interaction Managers to handle their internal events. However, these Interaction Managers are not visible to the top-level Runtime Framework or Interaction Manager.

If the Interaction Manager does not contain an explicit handler for an event, it MUST respect any default behavior that has been established for the event. If there is no default behavior, the Interaction Manager MUST ignore the event. (In effect, the Interaction Manager's default handler for all events is to ignore them.)
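
The handler lookup order required above can be sketched as follows. How default behavior gets "established" is not specified here, so the two dictionaries (handlers and defaults) and the dispatch() method are hypothetical.

```python
from typing import Callable, Dict, Optional

Handler = Callable[[str], str]


class InteractionManager:
    """Hypothetical IM showing only the handler lookup order."""

    def __init__(self) -> None:
        self.handlers: Dict[str, Handler] = {}  # explicit handlers, e.g. from IM markup
        self.defaults: Dict[str, Handler] = {}  # default behavior established elsewhere

    def dispatch(self, event_name: str, payload: str) -> Optional[str]:
        if event_name in self.handlers:        # 1. an explicit handler wins
            return self.handlers[event_name](payload)
        if event_name in self.defaults:        # 2. otherwise respect the default
            return self.defaults[event_name](payload)
        return None                            # 3. otherwise ignore the event


im = InteractionManager()
im.handlers["greet"] = lambda p: f"explicit {p}"
im.defaults["greet"] = lambda p: f"default {p}"
im.defaults["status"] = lambda p: "default status"
print(im.dispatch("greet", "x"))    # explicit x
print(im.dispatch("status", "x"))   # default status
print(im.dispatch("unknown", "x"))  # None
```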

The following paragraph is informative.

Normally there will be specific markup associated with the IM instructing it how to respond to events. This markup will thus contain much of the most basic interaction logic of an application. Existing languages such as SMIL, CCXML, SCXML, or ECMAScript can be used for IM markup as an alternative to defining special-purpose languages aimed specifically at multimodal applications. The IM fulfills multiple functions. For example, it is responsible for synchronization of data and focus, etc., across different Modality Components as well as the higher-level application flow that is independent of Modality Components. It also maintains the high-level application data model and may handle communication with external entities and back-end systems.

Logically, these functions could be split into separate constituents, and implementations may want to introduce internal structure to the IM. However, for the purposes of this standard, we leave the various functions rolled up in a single monolithic Interaction Manager component. We note that state machine languages such as SCXML are a good choice for authoring such a multi-function component, since state machines can be composed. Thus it is possible to define a high-level state machine representing the overall application flow, with lower-level state machines nested inside it handling the cross-modality synchronization at each phase of the higher-level flow.

5.2.2 The Data Component

This section is informative.

The Data Component is responsible for storing application-level data. The Interaction Manager is a client of the Data Component and is able to access and update it as part of its control flow logic, but Modality Components do not have direct access to it. Since Modality Components are black boxes, they may have their own internal Data Components and may interact directly with back-end servers. However, the only way that Modality Components can share data among themselves and maintain consistency is via the Interaction Manager. It is therefore a good application design practice to divide data into two logical classes: private data, which is of interest only to a given modality component, and public data, which is of interest to the Interaction Manager or to more than one Modality Component. Private data may be managed as the Modality Component sees fit, but all modification of public data, including submission to back-end servers, should be entrusted to the Interaction Manager.

This specification does not define an interface between the Data Component and the Interaction Manager. This amounts to treating the Data Component as part of the Interaction Manager. (Note that this means that the data access language will be whatever language the IM provides.) However, the Data Component is shown with a dotted outline in the diagram above because it is logically distinct and could be placed in a separate component.

5.2.3 The Modality Components

This section is informative.

Modality Components, as their name would indicate, are responsible for controlling the various input and output modalities on the device. They are therefore responsible for handling all interaction with the user(s). The only requirement this architecture places on them is that they implement the interface defined in 6 Interface between the Interaction Manager and the Modality Components. Any further definition of their responsibilities will be highly domain- and application-specific. In particular, we do not define a set of standard modalities or the events that they should generate or handle. Platform providers are allowed to define new Modality Components and are allowed to place into a single Component functionality that might logically seem to belong to two or more different modalities. Thus a platform could provide a handwriting-and-speech Modality Component that would accept simultaneous voice and pen input. Such combined Components permit a much tighter coupling between the two modalities than the loose interface defined here. Furthermore, modality components may be used to perform general processing functions not directly associated with any specific interface modality, for example, dialog flow control or natural language processing.

In most cases, there will be specific markup in the application corresponding to a given modality, specifying how the interaction with the user should be carried out. However, we do not require this and specifically allow for a markup-free modality component whose behavior is hard-coded into its software.

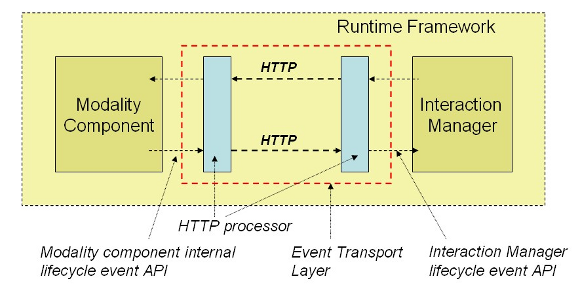

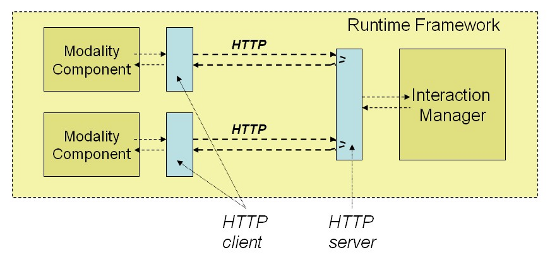

5.2.4 The Runtime Framework

The Runtime Framework is a cover term for all the infrastructure services that are necessary for successful execution of a multimodal application, such as starting the components, handling communication, and logging. For the most part, this version of the specification leaves these functions to be defined in a platform-specific way, but we do specifically define a Transport Layer which handles communications between the components.

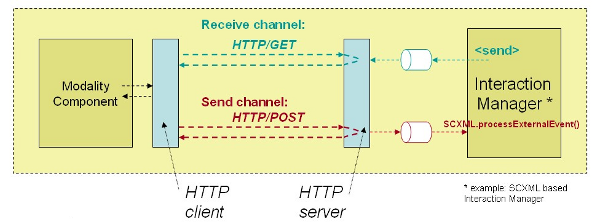

5.2.4.1 The Event Transport Layer

The Event Transport Layer is responsible for delivering events among the IM and the Modality Components. Clearly, there are multiple transport mechanisms (protocols) that can be used to implement a Transport Layer and different mechanisms may be used to communicate with different modality components. Thus the Event Transport Layer consists of one or more transport mechanisms linking the IM to the various Modality Components.

We place the following requirements on all transport mechanisms:

- Events MUST be delivered reliably. In particular, the event delivery mechanism MUST report an error if an event cannot be delivered, for example if the destination endpoint is unavailable.

- Events MUST be delivered to the destination in the order in which the source generated them. There is no guarantee on the delivery order of events generated by different sources. For example, if Modality Component M1 generates events E1 and E2 in that order, while Modality Component M2 generates E3 and then E4, we require that E1 be delivered to the Runtime Framework before E2 and that E3 be delivered before E4, but there is no guarantee on the ordering of E1 or E2 versus E3 or E4.
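
The two transport requirements can be illustrated with a minimal in-process transport in Python. InMemoryTransport, DeliveryError and preserves_per_source_order() are hypothetical names; a single FIFO queue per destination is just one simple way to satisfy per-source ordering, and real transports (for example the HTTP binding in F) would need their own mechanisms. The usage reproduces the M1/M2 example from the text.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class DeliveryError(Exception):
    """Raised when an event cannot be delivered (first requirement)."""


class InMemoryTransport:
    """Hypothetical in-process transport: one FIFO queue per destination."""

    def __init__(self) -> None:
        self.queues: Dict[str, Deque[Tuple[str, str]]] = {}

    def register(self, endpoint: str) -> None:
        self.queues[endpoint] = deque()

    def send(self, source: str, target: str, event: str) -> None:
        if target not in self.queues:
            # Reliable delivery: report the failure instead of dropping silently.
            raise DeliveryError(f"endpoint {target!r} is unavailable")
        self.queues[target].append((source, event))

    def receive(self, endpoint: str) -> Tuple[str, str]:
        return self.queues[endpoint].popleft()


def preserves_per_source_order(delivered: List[Tuple[str, str]],
                               generated: Dict[str, List[str]]) -> bool:
    """True if each source's events arrive in generation order (second
    requirement). Interleaving across different sources is unconstrained."""
    for source, events in generated.items():
        arrived = [event for src, event in delivered if src == source]
        if arrived != events:
            return False
    return True


transport = InMemoryTransport()
transport.register("im")
for src, ev in [("M1", "E1"), ("M2", "E3"), ("M1", "E2"), ("M2", "E4")]:
    transport.send(src, "im", ev)
delivered = [transport.receive("im") for _ in range(4)]
print(preserves_per_source_order(delivered, {"M1": ["E1", "E2"], "M2": ["E3", "E4"]}))  # True

try:
    transport.send("M1", "missing", "E5")
except DeliveryError as err:
    print(err)  # endpoint 'missing' is unavailable
```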

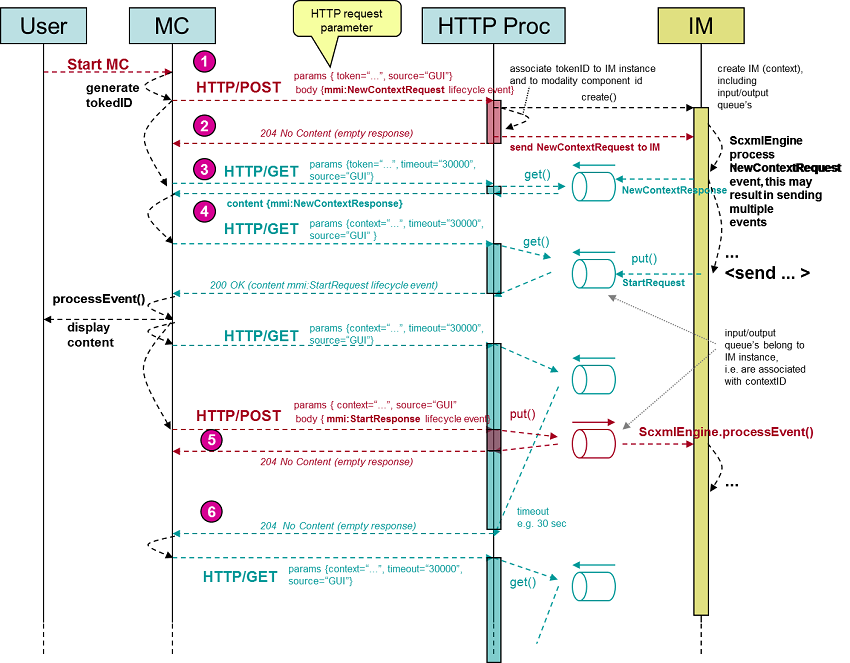

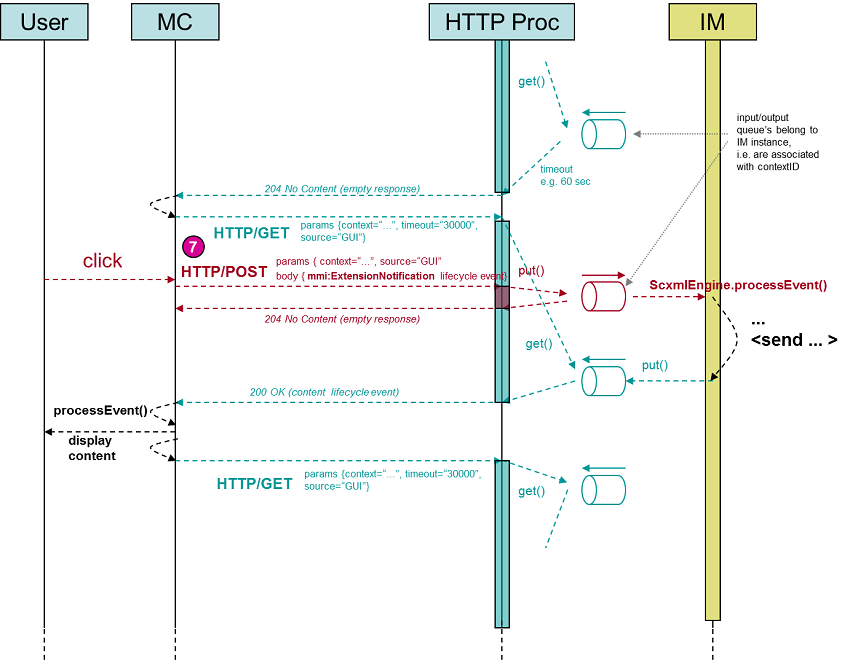

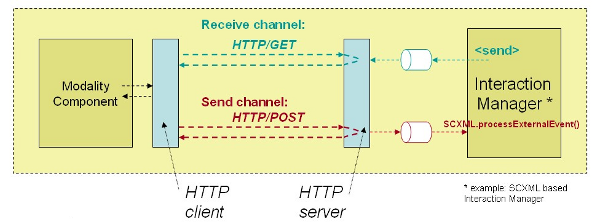

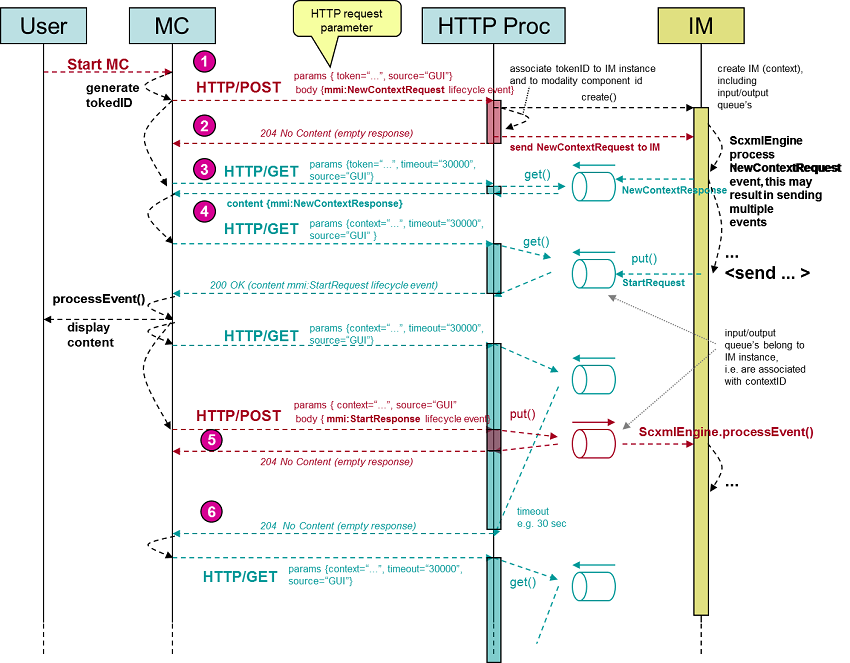

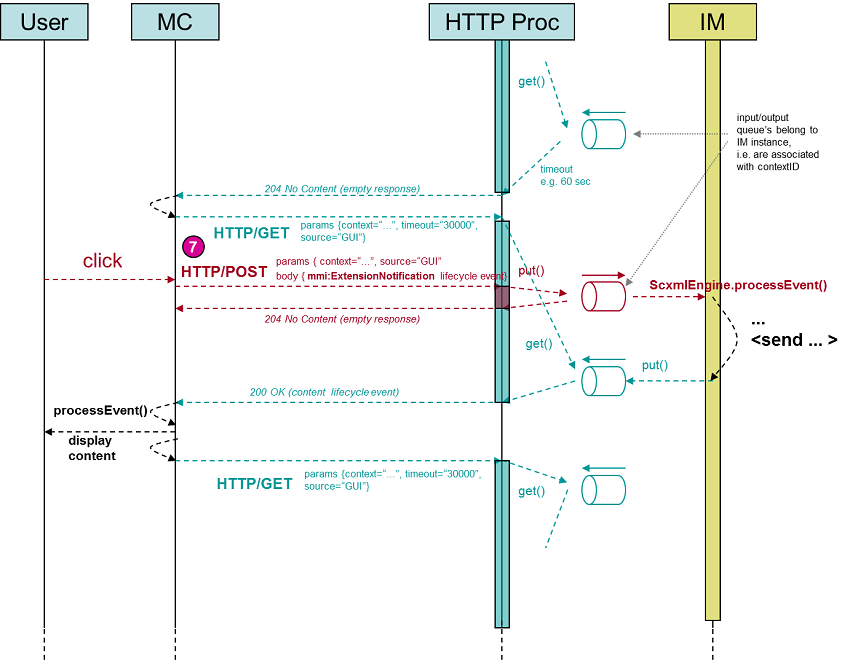

For a sample definition of a Transport Layer relying on HTTP, see F HTTP transport of MMI lifecycle events. This definition is provided as an example only.

5.2.4.1.1 Event and Information Security

This section is informative.

Events will often carry sensitive information, such as bank account numbers or health care information. In addition, events must be reliable for both sides of a transaction: for example, if an event carries an assent to a financial transaction, both sides of the transaction must be able to rely on that assent.

We do not currently specify delivery mechanisms or internal security safeguards to be used by the Modality Components and the Interaction Manager. However, we believe that any secure system will have to meet the following requirements at a minimum:

The following two optional requirements can be met by using the W3C's XML-Signature Syntax and Processing specification [XMLSig].

- Authentication. The event delivery mechanism should be able to ensure that the identities of the components in an interaction are known.

- Integrity. The event delivery mechanism should be able to ensure that the contents of events have not been altered in transit.

The remaining optional requirements for event delivery and information security can be met by following other industry-standard procedures.

- Authorization. A component should provide a method to ensure only authorized components can connect to it.

- Privacy. The event delivery mechanism should provide a method to keep the message contents secure from any unauthorized access while in transit.

- Non-repudiation. The event delivery mechanism, in conjunction with the components, may provide a method to ensure that if a message is sent from one constituent to another, the originating constituent cannot repudiate the message that it sent and that the receiving constituent cannot repudiate that the message was received.

Multiple protocols may be necessary to implement these requirements. For example, TCP/IP and HTTP provide reliable event delivery, but additional protocols such as TLS or HTTPS could be required to meet security requirements.
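
To illustrate the integrity requirement without reproducing XML-Signature, the sketch below uses a shared-key HMAC from the Python standard library as a stand-in technique. This is an assumption for illustration only: neither HMAC nor the key distribution implied by SHARED_KEY is part of this specification, and the message bytes are placeholders rather than real life-cycle event syntax.

```python
import hashlib
import hmac

# Hypothetical key, assumed to be distributed to both parties out of band.
SHARED_KEY = b"example-key-distributed-out-of-band"


def sign(message: bytes, key: bytes = SHARED_KEY) -> str:
    """Return a hex HMAC-SHA256 tag to send alongside the event."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, tag: str, key: bytes = SHARED_KEY) -> bool:
    """Recompute the tag; compare_digest avoids timing side channels."""
    return hmac.compare_digest(sign(message, key), tag)


event = b"StatusRequest context=urn:example:ctx-1"  # placeholder bytes, not MMI syntax
tag = sign(event)
print(verify(event, tag))                              # True
print(verify(event.replace(b"ctx-1", b"ctx-2"), tag))  # False: altered in transit
```

Because both parties hold the same key, an HMAC gives integrity and authentication between key holders but not non-repudiation; that requirement calls for asymmetric signatures such as those supported by XML-Signature, and privacy still needs encryption such as TLS.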

5.2.5 System and OS Security

This section is informative.

This architecture does not and will not specify the internal security requirements of a Modality Component or Runtime Framework.

5.2.6 Media stream handling

Media streams do not typically flow through the Interaction Manager. This specification does not specify how media connections are established, as the main focus of this specification is the flow of control data. However, all control data logically sent between modality components MUST flow through the Interaction Manager.

5.2.7 Examples

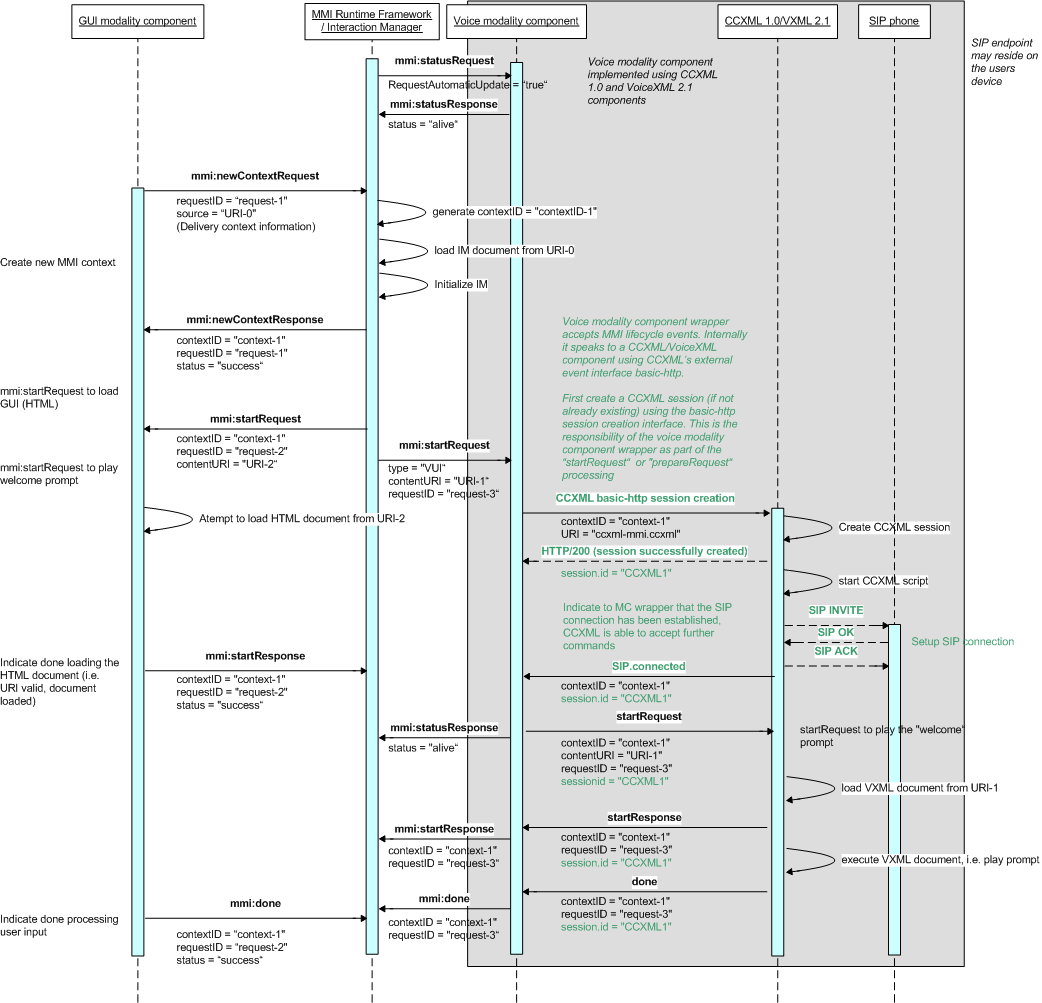

This section is informative.

For the sake of concreteness, here are some examples of components that could be implemented using existing languages. Note that we are mixing the design-time and run-time views here, since it is the implementation of the language (the browser) that serves as the run-time component.

- CCXML [CCXML] could be used as both the Controller Document and the Interaction Manager language, with the CCXML interpreter serving as the Runtime Framework and Interaction Manager.

- SCXML [SCXML] could be used as the Controller Document and Interaction Manager language.

- In an integrated multimodal browser, the markup language that provided the document root tag would define the Controller Document while the associated scripting language could serve as the Interaction Manager.

- HTML [HTML] could be used as the markup for a Modality Component.

- VoiceXML [VoiceXML] could be used as the markup for a Modality Component.

- SVG [SVG] could be used as the markup for a Modality Component.

- SMIL [SMIL] could be used as the markup for a Modality Component.

6 Interface between the Interaction Manager and the Modality Components

The most important interface in this architecture is the one between the Modality Components and the Interaction Manager. Modality Components communicate with the IM via asynchronous events. Constituents MUST be able to send events and to handle events that are delivered to them asynchronously. It is not required that Constituents use these events internally, since the implementation of a given Constituent is a black box to the rest of the system. In general, it is expected that Constituents will send events both automatically (i.e., as part of their implementation) and under markup control.

The majority of the events defined here come in request/response

pairs. That is, one party (either the IM or an MC) sends a request

and the other returns a response. (The exceptions are the

ExtensionNotification, StatusRequest and StatusResponse events,

which can be sent by either party.) In each case it is specified

which party sends the request and which party returns the response.

If the wrong party sends a request or response, or if the request

or response is sent under the wrong conditions (e.g. response

without a previous request) the behavior of the receiving party is

undefined. In the descriptions below, we say that the originating

party "MAY" send the request, because it is up to

the internal logic of the originating party to decide if it wants

to invoke the behavior that the request would trigger. On the other

hand, we say that the receiving party "MUST" send the response, because it is

mandatory to send the response if and when the request is

received.
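The pairing rules above can be summarized in a small lookup table. The following Python sketch is illustrative only: the table `PAIRS` and the function `response_name` are hypothetical names, and raising `ValueError` is just one possible choice for the cases where this specification leaves the receiver's behavior undefined.

```python
# Hypothetical table pairing each life-cycle request with its response and
# recording which party may originate the request ("IM", "MC" or "either").
PAIRS = {
    "NewContextRequest": ("NewContextResponse", "MC"),
    "PrepareRequest": ("PrepareResponse", "IM"),
    "StartRequest": ("StartResponse", "IM"),
    "CancelRequest": ("CancelResponse", "IM"),
    "PauseRequest": ("PauseResponse", "IM"),
    "ResumeRequest": ("ResumeResponse", "IM"),
    "ClearContextRequest": ("ClearContextResponse", "IM"),
    "StatusRequest": ("StatusResponse", "either"),
}


def response_name(request: str, sender: str) -> str:
    """Return the response the receiver must send for a request from `sender`.

    The specification leaves the receiver's behavior undefined when the wrong
    party sends a request; this sketch simply raises ValueError in that case.
    """
    if request not in PAIRS:
        raise ValueError(f"{request} is not a request event")
    response, originator = PAIRS[request]
    if originator != "either" and originator != sender:
        raise ValueError(f"{sender} may not send {request}")
    return response
```

ExtensionNotification and DoneNotification are deliberately absent from the table, since they are not answered with a response.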

6.1 Common Event Fields

The concept of 'context' is basic to the events described below. A context represents a single extended interaction with zero

or more users across one or more modality components. In a simple

unimodal case, a context can be as simple as a phone call or SSL

session. Multimodal cases are more complex, however, since the

various modalities may not all be used at the same time. For

example, in a voice-plus-web interaction, e.g., web sharing with an

associated VoIP call, it would be possible to terminate the web

sharing and continue the voice call, or to drop the voice call and

continue via web chat. In these cases, a single context persists

across various modality configurations. In general, the 'context' SHOULD cover the longest period of interaction over which it would make sense for components to store information.

For examples of the concrete XML syntax for all these events, see B Examples of Life-Cycle Events.

The following common fields are shared by multiple life-cycle events:

6.1.1 Context

A URI that MUST be unique for the lifetime of the system.

It is used to identify this interaction. All events relating to a

given interaction MUST use the same context URI. Events

containing a different context URI MUST be

interpreted as part of other, unrelated, interactions.
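As a minimal sketch, assuming a receiver that keeps events in memory, the following Python code shows one way to mint context URIs and keep events from different contexts apart. Using a random UUID URN (`urn:uuid:...`) is an assumption; the specification only requires a URI that is unique for the lifetime of the system. `new_context_uri` and `ContextRegistry` are hypothetical names.

```python
import uuid
from collections import defaultdict


def new_context_uri() -> str:
    # A random UUID rendered as a URN ("urn:uuid:..."). Any scheme works as
    # long as the URI stays unique for the lifetime of the system.
    return uuid.uuid4().urn


class ContextRegistry:
    """Groups events by context URI so that unrelated interactions never mix."""

    def __init__(self) -> None:
        self._events = defaultdict(list)  # context URI -> list of event names

    def record(self, context: str, event_name: str) -> None:
        self._events[context].append(event_name)

    def events_for(self, context: str) -> list:
        # .get() avoids creating an empty entry for unknown contexts.
        return list(self._events.get(context, []))
```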

6.1.2 Source

A URI representing the address of the sender of the event. The

recipient of the event MUST be able to send an event back to the

sender by using this value as the 'target' of a message.

6.1.3 Target

A URI that MUST represent the address to which the event

will be delivered.

6.1.4 RequestID

A unique identifier for a Request/Response pair. Most life-cycle events come in Request/Response pairs that share a common RequestID. For any such pair, the RequestID in the Response event MUST match the RequestID in the Request event. The RequestID for such a pair MUST be unique within the given context.
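The following Python sketch shows one hypothetical way for a sender to issue RequestIDs and match incoming Response events to outstanding requests. The `request-N` format mirrors the identifiers in the Appendix B examples but is not mandated, and `RequestTracker` is an illustrative name.

```python
import itertools


class RequestTracker:
    """Issues RequestIDs that are unique within a context and matches responses."""

    def __init__(self) -> None:
        # A single counter shared by all contexts, so IDs are trivially
        # unique within each individual context as well.
        self._counter = itertools.count(1)
        self._pending = {}  # (context, request_id) -> request event name

    def issue(self, context: str, request_name: str) -> str:
        request_id = f"request-{next(self._counter)}"
        self._pending[(context, request_id)] = request_name
        return request_id

    def match(self, context: str, request_id: str) -> str:
        """Return the request answered by a response; KeyError if none is pending.

        This sketch assumes exactly one response per request. A StatusRequest
        with RequestAutomaticUpdate='true' would need its entry kept instead.
        """
        return self._pending.pop((context, request_id))
```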

6.1.5 Status

An enumeration of 'Success' and 'Failure'. The Response event of

a Request/Response pair MUST use this field to report whether it

succeeded in carrying out the request.

6.1.6 StatusInfo

The Response event of a Request/Response pair MAY use this

field to provide additional status information.

6.1.7 Data

Any event MAY use this field to contain arbitrary data.

The format and meaning of this data is application-specific.

6.1.8 Confidential

Any event MAY use this field to indicate whether the contents of this event are confidential. The default value is 'false'. If the value is 'true', the Interaction Manager and Modality Component implementations MUST NOT log the information or make it available in any way to third parties unless explicitly instructed to do so by the author of the application.
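One way an implementation might honor the Confidential field is to filter its own logs. This Python sketch uses the standard `logging.Filter` hook. How the parsed event reaches the log record (here, a hypothetical `mmi_event` attribute supplied through `extra`) is an assumption, and `ConfidentialFilter` is an illustrative name.

```python
import logging


class ConfidentialFilter(logging.Filter):
    """Drops log records for events whose Confidential field is 'true'.

    Assumes (hypothetically) that callers attach the parsed event as
    extra={"mmi_event": {...}}; records without it pass through unchanged.
    """

    def filter(self, record: logging.LogRecord) -> bool:
        event = getattr(record, "mmi_event", None)
        if event is None:
            return True
        # The field defaults to 'false' when it is absent.
        return str(event.get("confidential", "false")).lower() != "true"
```

Attached with `logger.addFilter(ConfidentialFilter())`, the filter suppresses a record logged as `logger.info("received", extra={"mmi_event": event})` whenever that event is marked confidential.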

6.2 Standard Life Cycle Events

The Multimodal Architecture defines the following basic

life-cycle events which the Interaction Manager and Modality

Components MUST support. These events allow the

Interaction Manager to invoke modality components and receive

results from them. They thus form the basic interface between the

IM and the Modality components. Note that the ExtensionNotification

event offers extensibility since it contains arbitrary content and

can be raised by either the IM or the Modality Components at any

time once the context has been established. For example, an

application relying on speech recognition could use the ExtensionNotification event to communicate recognition results, the fact that speech had started, and so on.

In the definitions below, all fields are mandatory, unless

explicitly stated to be optional.

6.2.1 NewContextRequest/NewContextResponse

A Modality Component MAY send a NewContextRequest to the IM to

request that a new context be created. If this event is sent, the IM MUST respond with the NewContextResponse event. The NewContextResponse event MUST ONLY be sent in response to the NewContextRequest event. Note

that the IM MAY create a new context without a previous

NewContextRequest by sending a PrepareRequest or StartRequest

containing a new context ID to the Modality Components. Furthermore, the IM may return the same context in response to NewContextRequests from different (multiple) Modality Components, since the interaction can be started by different Modality Components independently.

6.2.1.1 NewContextRequest

Properties

6.2.1.2 NewContextResponse

Properties

6.2.2 PrepareRequest/PrepareResponse

The IM MAY send a PrepareRequest to allow the

Modality Components to pre-load markup and prepare to run. Modality

Components are not required to take any particular action in

response to this event, but they MUST return a

PrepareResponse event. Modality Components that return a

PrepareResponse event with Status of 'Success' SHOULD be

ready to run with close to zero delay upon receipt of the StartRequest.

The Interaction Manager MAY send multiple PrepareRequest events to a

Modality Component for the same Context before sending a

StartRequest. Each request MAY reference a different ContentURL or

contain different in-line Content. When it receives multiple

PrepareRequests, the Modality Component SHOULD

prepare to run any of the specified content.

6.2.2.1 PrepareRequest

Properties

- RequestID. See 6.1.4 RequestID. A newly generated identifier used to identify this request.

- Context. See 6.1.1 Context. Note that the IM MAY use the same context value in multiple PrepareRequest events when it wishes to execute multiple instances of markup in the same context.

- ContentURL. Optional URL of the content that the Modality Component SHOULD prepare to execute.

- Content. Optional inline markup that the Modality Component SHOULD prepare to execute.

- Source. See 6.1.2 Source.

- Target. See 6.1.3 Target.

- Data. Optional. See 6.1.7 Data.

- Confidential. Optional. See 6.1.8 Confidential.

The IM MUST NOT specify both the ContentURL and Content in a single PrepareRequest. The IM MAY leave both ContentURL and Content empty. In such a case, the Modality Component MUST revert to its default behavior. For example, this behavior could consist of returning an error event or of running a preconfigured or hard-coded script.
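A Modality Component might check the ContentURL and Content fields of an incoming PrepareRequest (or StartRequest) as in the following Python sketch. The function name and its return values are hypothetical, and the sketch treats an empty string the same as an absent field.

```python
from typing import Optional


def check_content_fields(content_url: Optional[str], content: Optional[str]) -> str:
    """Classify the content carried by a PrepareRequest or StartRequest.

    Returns "url", "inline" or "none"; raises ValueError if both fields are
    set, which the IM is forbidden to do. Empty strings count as absent.
    """
    if content_url and content:
        raise ValueError("ContentURL and Content must not both be specified")
    if content_url:
        return "url"
    if content:
        return "inline"
    # Neither field is set: the Modality Component reverts to its default.
    return "none"
```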

6.2.2.2 PrepareResponse

Properties

6.2.3 StartRequest/StartResponse

To invoke a modality component, the IM MUST send a

StartRequest. The Modality Component MUST return a

StartResponse event in response. The IM MAY include a

value in the ContentURL or Content field of this event. In this

case, the Modality Component MUST use this value.

If a Modality Component receives a new StartRequest while it is

executing a previous one, it MUST either cease execution of the previous

StartRequest and begin executing the content specified in the most

recent StartRequest, or reject the new StartRequest, returning a

StartResponse with status equal to 'Failure'.

6.2.3.1 StartRequest

Properties

- RequestID. See 6.1.4 RequestID. A newly generated identifier used to identify this request.

- Context. See 6.1.1 Context. Note that the IM MAY use the same context value in multiple StartRequest events when it wishes to execute multiple instances of markup in the same context.

- ContentURL. Optional URL of the content that the Modality Component MUST attempt to execute.

- Content. Optional inline markup that the Modality Component MUST attempt to execute.

- Source. See 6.1.2 Source.

- Target. See 6.1.3 Target.

- Data. Optional. See 6.1.7 Data.

- Confidential. Optional. See 6.1.8 Confidential.

The IM MUST NOT specify both the ContentURL and Content in a single StartRequest. The IM MAY leave both ContentURL and Content empty. In such a case, the Modality Component MUST run the content specified in the most recent PrepareRequest in this context, if there is one. Otherwise, it MUST revert to its default behavior. For example, this behavior could consist of returning an error event or of running a preconfigured or hard-coded script.
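The content-selection rule for StartRequest can be sketched in Python as follows, assuming the Modality Component records the content of each PrepareRequest per context. `ContentResolver` and the `(kind, value)` tuple representation are illustrative assumptions; a return value of `None` stands for reverting to the component's default behavior.

```python
from typing import Dict, Optional, Tuple

# ("url", href) or ("inline", markup); a hypothetical representation.
Content = Tuple[str, str]


class ContentResolver:
    """Tracks prepared content per context and picks what a StartRequest runs."""

    def __init__(self) -> None:
        self._last_prepared: Dict[str, Content] = {}  # context URI -> content

    def on_prepare(self, context: str, content: Content) -> None:
        # Later PrepareRequests in the same context replace the "most recent" one.
        self._last_prepared[context] = content

    def resolve_start(self, context: str, content: Optional[Content]) -> Optional[Content]:
        # Content carried by the StartRequest itself takes precedence.
        if content is not None:
            return content
        # Otherwise use the most recent PrepareRequest in this context, if any;
        # None means "revert to default behavior".
        return self._last_prepared.get(context)
```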

6.2.3.2 StartResponse

Properties

6.2.4 DoneNotification

If the Modality Component reaches the end of its processing, it MUST return a DoneNotification to the IM.

6.2.4.1 DoneNotification

Properties

The DoneNotification event is intended to indicate the completion of the processing that has been initiated by the Interaction Manager with a StartRequest. As an example, a voice modality component might use the DoneNotification event to indicate the completion of a recognition task. In this case, the DoneNotification event might carry the recognition result expressed using EMMA. However, there may be tasks that do not have a specific end. For example, the Interaction Manager might send a StartRequest to a graphical modality component requesting it to display certain information. Such a task does not necessarily have a specific end, and thus the graphical modality component might never send a DoneNotification event to the Interaction Manager. Instead, the graphical modality component would display the screen until it received another StartRequest (or some other lifecycle event) from the Interaction Manager.

6.2.5 CancelRequest/CancelResponse

The IM MAY send a CancelRequest to stop processing in

the Modality Component. In this case, the Modality Component MUST stop

processing and then MUST return a CancelResponse.

6.2.5.1 CancelRequest

Properties

6.2.5.2 CancelResponse

Properties

6.2.6 PauseRequest/PauseResponse

The IM MAY send a PauseRequest to suspend processing

by the Modality Component. Modality Components MUST return a

PauseResponse once they have paused, or once they determine that

they will be unable to pause.

6.2.6.1 PauseRequest

Properties

6.2.6.2 PauseResponse

Properties

6.2.7 ResumeRequest/ResumeResponse

The IM MAY send the ResumeRequest to resume

processing that was paused by a previous PauseRequest. The IM MUST NOT

send the ResumeRequest to a context that is not paused due to a

previous PauseRequest. Implementations that have paused MUST attempt

to resume processing upon receipt of this event and MUST return a

ResumeResponse afterwards. The 'Status' MUST be

'Success' if the implementation has succeeded in resuming

processing and MUST be 'Failure' otherwise.

6.2.7.1 ResumeRequest

Properties

6.2.7.2 ResumeResponse

Properties

6.2.8 ExtensionNotification

This event MAY be generated by the IM and MAY be

generated by the Modality Component. It is used to encapsulate

application-specific events that are extensions to the framework

defined here. For example, if an application containing a voice

modality wanted that modality component to notify the Interaction

Manager when speech was detected, it would cause the voice modality

to generate an ExtensionNotification event (with a 'name' of

something like 'speechDetected') at the appropriate time.
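For illustration, the following Python sketch uses the standard `xml.etree.ElementTree` module to serialize an ExtensionNotification such as the 'speechDetected' example. It mirrors the element and attribute spelling of the Appendix B examples; the function name `extension_notification` is hypothetical and no Data payload is included.

```python
import xml.etree.ElementTree as ET

MMI_NS = "http://www.w3.org/2008/04/mmi-arch"
# Serialize the namespace with the "mmi" prefix used throughout Appendix B.
ET.register_namespace("mmi", MMI_NS)


def extension_notification(name: str, source: str, target: str,
                           context: str, request_id: str) -> str:
    """Build an mmi:extensionNotification wrapped in an mmi:mmi root element."""
    root = ET.Element(f"{{{MMI_NS}}}mmi", {"version": "1.0"})  # version is unqualified
    ET.SubElement(root, f"{{{MMI_NS}}}extensionNotification", {
        f"{{{MMI_NS}}}name": name,
        f"{{{MMI_NS}}}source": source,
        f"{{{MMI_NS}}}target": target,
        f"{{{MMI_NS}}}context": context,
        f"{{{MMI_NS}}}requestID": request_id,
    })
    return ET.tostring(root, encoding="unicode")
```

Called as `extension_notification("speechDetected", "someURI", "someOtherURI", "URI-1", "request-1")`, it returns a single-line string whose root element is `mmi:mmi`.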

6.2.8.1 ExtensionNotification

Properties

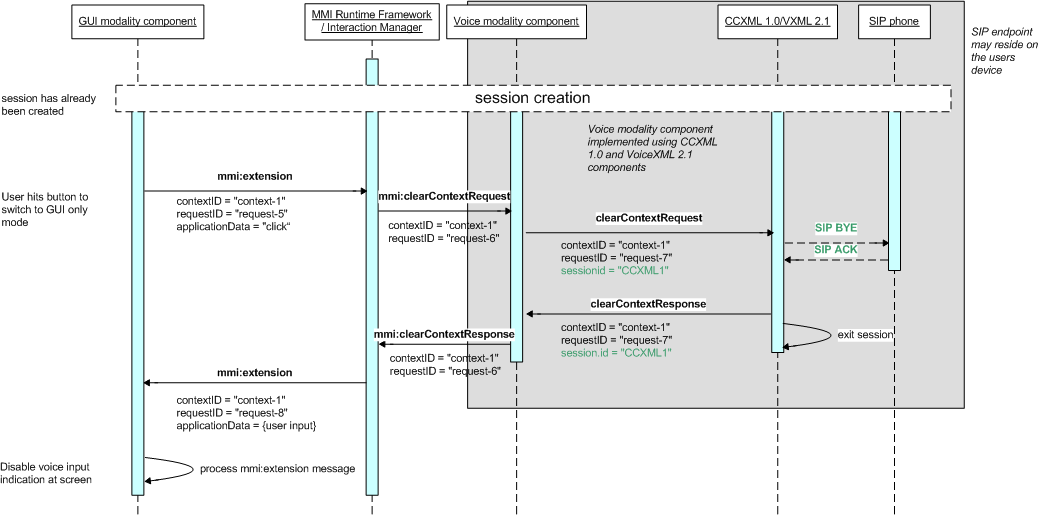

6.2.9 ClearContextRequest/ClearContextResponse

The IM MAY send a ClearContextRequest to indicate

that the specified context is no longer active and that any

resources associated with it may be freed. Modality Components are

not required to take any particular action in response to this

command, but MUST return a ClearContextResponse. Once the

IM has sent a ClearContextRequest to a Modality Component, it MUST NOT

send the Modality Component any more events for that context.
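On the Interaction Manager side, the rule that no further events follow a ClearContextRequest could be enforced by a guard on outgoing events, as in this Python sketch. The class name `OutboundGuard`, the `(mc, context)` keys and raising `RuntimeError` are illustrative assumptions, not part of this specification.

```python
class OutboundGuard:
    """IM-side check: no events to an MC for a context it has been told to clear."""

    def __init__(self) -> None:
        self._cleared = set()  # (mc_address, context) pairs already cleared

    def before_send(self, mc: str, context: str, event_name: str) -> None:
        # Refuse anything sent to this MC for a context that was already cleared.
        if (mc, context) in self._cleared:
            raise RuntimeError(f"context {context} already cleared for {mc}")
        # The ClearContextRequest itself is allowed; everything after it is not.
        if event_name == "ClearContextRequest":
            self._cleared.add((mc, context))
```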

6.2.9.1 ClearContextRequest

Properties

6.2.9.2 ClearContextResponse

Properties

6.2.10 StatusRequest/StatusResponse

The StatusRequest message and the corresponding StatusResponse

are intended to provide keep-alive functionality. Either the IM or

the Modality Component MAY send the StatusRequest message. The

recipient MUST respond with the StatusResponse

message.

6.2.10.1 StatusRequest

Properties

- RequestID. See 6.1.4 RequestID. A newly generated identifier used to identify this request.

- Context. See 6.1.1 Context. Optional specification of the context for which the status is requested. If it is present, the recipient MUST respond with a StatusResponse message indicating the status of the specified context. If it is not present, the recipient MUST send a StatusResponse message indicating the status of the underlying server, namely the software that would host a new context if one were created.

- RequestAutomaticUpdate. A boolean value. If it is 'true', the recipient SHOULD send periodic StatusResponse messages without waiting for an additional StatusRequest message. If it is 'false', the recipient SHOULD send one and only one StatusResponse message in response to this request.

- Source. See 6.1.2 Source.

- Target. See 6.1.3 Target.

- Data. Optional. See 6.1.7 Data.

- Confidential. Optional. See 6.1.8 Confidential.

6.2.10.2 StatusResponse

Properties

- RequestID. See 6.1.4 RequestID. This MUST match the RequestID in the StatusRequest event.

- AutomaticUpdate. A boolean value. If it is 'true', the sender MUST keep sending StatusResponse messages in the future without waiting for another StatusRequest message. If it is 'false', the sender MUST wait for a subsequent StatusRequest message before sending another StatusResponse message.

- Context. See 6.1.1 Context. An optional specification of the context for which the status is being returned. If it is present, the response MUST represent the status of the specified context. If it is not present, the response MUST represent the status of the underlying server.

- Status. An enumeration of 'Alive' or 'Dead'. The meaning of these values depends on whether the 'context' parameter is present. If it is, and the specified context is still active and capable of handling new life cycle events, the sender MUST set this field to 'Alive'. If the 'context' parameter is present and the context has terminated or is otherwise unable to process new life cycle events, the sender MUST set the status to 'Dead'. If the 'context' parameter is not provided, the status refers to the underlying server. If the sender is able to create new contexts, it MUST set the status to 'Alive'; otherwise, it MUST set it to 'Dead'.

- Source. See 6.1.2 Source.

- Target. See 6.1.3 Target.

- Data. Optional. See 6.1.7 Data.

- Confidential. Optional. See 6.1.8 Confidential.
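The rules for the StatusResponse Status field reduce to a small decision, sketched below in Python. How a sender knows which contexts are active and whether it can create new ones is implementation-specific, so both are passed in as hypothetical parameters. The sketch returns the capitalized values used in the prose above; note that the Appendix C schema enumerates the lowercase values 'alive' and 'dead'.

```python
from typing import Optional, Set


def status_value(context: Optional[str], active_contexts: Set[str], can_create: bool) -> str:
    """Compute the Status field of a StatusResponse.

    With a context: 'Alive' if that context can still handle life-cycle events.
    Without one: the status describes the server, i.e. whether it can create
    new contexts.
    """
    if context is not None:
        return "Alive" if context in active_contexts else "Dead"
    return "Alive" if can_create else "Dead"
```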

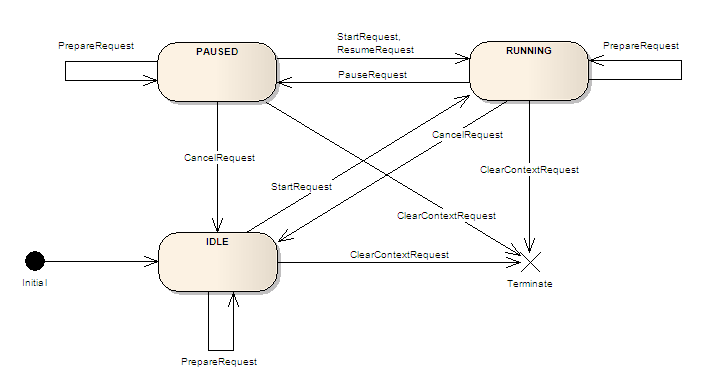

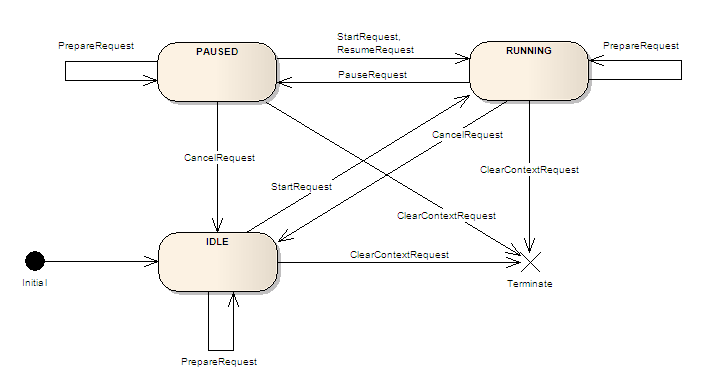

A Modality Component States

[This section is informative]

Within an established context, a Modality Component can be

viewed as functioning in one of three states: Idle, Running or

Paused. Lifecycle events received from the Interaction Manager

imply specific actions and transitions between states. The table

below shows possible MC actions, state transitions and response

contents for each Request event the IM may send to an MC in a

particular state.

A Failure: ErrorMessage annotation indicates that the

specified Request event is either invalid or redundant in the

specified state. In this case, the Modality Component responds by

sending a matching Response event with Status=Failure and

StatusInfo=ErrorMessage. In all other cases, the Modality Component performs

the requested action, possibly transitioning to another state as

indicated.

| event / state | Idle | Running | Paused |
| --- | --- | --- | --- |
| PrepareRequest | preload or update content | preload or update content | preload or update content |
| StartRequest | Transition: Running; use new content if provided, otherwise use last available content | stop processing current content, restart as in Idle | Transition: Running; stop processing current content, restart as in Idle |
| CancelRequest | Ignore | Transition: Idle | Transition: Idle |
| PauseRequest | Failure: ignore | Transition: Paused | Ignore |
| ResumeRequest | Ignore | Failure: AlreadyRunning | Transition: Running |
| StatusRequest | send status | send status | send status |
| ClearContextRequest | close session | close session | close session |

In addition, the following Failure responses apply to the StartRequest and PauseRequest rows: StartRequest returns Failure: NoContent if the MC requires content to run and none has been provided, and PauseRequest returns Failure: CantPause if the MC is unable to pause.
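The state table above can be read as a small state machine. The following Python sketch models one Modality Component within a single context. It is an informal reading, not a normative implementation: the class and method names are hypothetical, an 'Ignore' entry is assumed to be answered with a 'success' response and no state change, responses use the lowercase 'success'/'failure' values from the Appendix C schema, and StatusRequest and ClearContextRequest, which cause no transitions in the table, are omitted.

```python
from typing import Optional, Tuple

Response = Tuple[str, Optional[str]]  # (status, statusInfo)


class ModalityComponent:
    """Per-context MC state machine following the informative state table."""

    def __init__(self, requires_content: bool = True, can_pause: bool = True) -> None:
        self.state = "Idle"
        self.content: Optional[str] = None  # last available content
        self.requires_content = requires_content
        self.can_pause = can_pause

    def prepare(self, content: Optional[str] = None) -> Response:
        # Any state: preload or update content, no transition.
        if content is not None:
            self.content = content
        return ("success", None)

    def start(self, content: Optional[str] = None) -> Response:
        if content is not None:
            self.content = content  # new content wins over last available
        if self.content is None and self.requires_content:
            return ("failure", "NoContent")
        # From Running or Paused: stop current processing and restart as in Idle.
        self.state = "Running"
        return ("success", None)

    def cancel(self) -> Response:
        if self.state in ("Running", "Paused"):
            self.state = "Idle"
        return ("success", None)  # Idle: ignore

    def pause(self) -> Response:
        if self.state == "Idle":
            return ("failure", "ignore")  # table entry "Failure: ignore"
        if self.state == "Running":
            if not self.can_pause:
                return ("failure", "CantPause")
            self.state = "Paused"
        return ("success", None)  # Paused: ignore

    def resume(self) -> Response:
        if self.state == "Running":
            return ("failure", "AlreadyRunning")
        if self.state == "Paused":
            self.state = "Running"
        return ("success", None)  # Idle: ignore
```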

Here is a state chart representation of these transitions:

B Examples of Life-Cycle Events

[This section is informative]

B.1 NewContextRequest (from MC to IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:newContextRequest mmi:source="someURI"

mmi:target="someOtherURI" mmi:requestID="request-1">

</mmi:newContextRequest>

</mmi:mmi>

B.2 NewContextResponse (from IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:newContextResponse mmi:source="someURI" mmi:target="someOtherURI"

mmi:requestID="request-1" mmi:status="success" mmi:context="URI-1">

</mmi:newContextResponse>

</mmi:mmi>

B.3 PrepareRequest (from IM to MC, with external markup)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:prepareRequest mmi:source="someURI" mmi:target="someOtherURI"

mmi:context="URI-1" mmi:requestID="request-1">

<mmi:contentURL mmi:href="someContentURI" mmi:max-age="" mmi:fetchtimeout="1s"/>

</mmi:prepareRequest>

</mmi:mmi>

B.4 PrepareRequest (from IM to MC, inline VoiceXML markup)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0"

xmlns:vxml="http://www.w3.org/2001/vxml">

<mmi:prepareRequest mmi:source="someURI" mmi:target="someOtherURI"

mmi:context="URI-1" mmi:requestID="request-1" >

<mmi:content>

<vxml:vxml version="2.0">

<vxml:form>

<vxml:block>Hello World!</vxml:block>

</vxml:form>

</vxml:vxml>

</mmi:content>

</mmi:prepareRequest>

</mmi:mmi>

B.5 PrepareResponse (from MC to IM, Success)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:prepareResponse mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI"

mmi:requestID="request-1" mmi:status="success"/>

</mmi:mmi>

B.6 PrepareResponse (from MC to IM, Failure)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:prepareResponse mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI"

mmi:requestID="request-1" mmi:status="failure">

<mmi:statusInfo>

NotAuthorized

</mmi:statusInfo>

</mmi:prepareResponse>

</mmi:mmi>

B.7 StartRequest (from IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:startRequest mmi:source="someURI" mmi:target="someOtherURI" mmi:context="URI-1" mmi:requestID="request-1">

<mmi:contentURL mmi:href="someContentURI" mmi:max-age="" mmi:fetchtimeout="1s"/>

</mmi:startRequest>

</mmi:mmi>

B.8 StartResponse (from MC to IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:startResponse mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI" mmi:requestID="request-1"

mmi:status="failure">

<mmi:statusInfo>

NotAuthorized

</mmi:statusInfo>

</mmi:startResponse>

</mmi:mmi>

B.9 DoneNotification (from MC to IM, with EMMA result)

The requestID in this example corresponds to the requestID of the StartRequest event that started the processing.

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0"

xmlns:emma="http://www.w3.org/2003/04/emma">

<mmi:doneNotification mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI"

mmi:status="success" mmi:requestID="request-1" mmi:confidential="true">

<mmi:data>

<emma:emma version="1.0">

<emma:interpretation id="int1" emma:medium="acoustic" emma:confidence=".75"

emma:mode="voice" emma:tokens="flights from boston to denver">

<origin>Boston</origin>

<destination>Denver</destination>

</emma:interpretation>

</emma:emma>

</mmi:data>

</mmi:doneNotification>

</mmi:mmi>
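A receiving Interaction Manager might extract the fields of such a DoneNotification with the standard `xml.etree.ElementTree` module, as in this Python sketch. The function name and the shape of the returned dictionary are hypothetical; the sketch reads only the first EMMA interpretation and assumes the attribute spelling used in the example above.

```python
import xml.etree.ElementTree as ET

NS = {
    "mmi": "http://www.w3.org/2008/04/mmi-arch",
    "emma": "http://www.w3.org/2003/04/emma",
}


def read_done_notification(xml_text: str) -> dict:
    """Extract requestID, status, confidentiality and the first EMMA interpretation."""
    root = ET.fromstring(xml_text)
    done = root.find("mmi:doneNotification", NS)
    if done is None:
        raise ValueError("not a doneNotification")
    mmi = "{" + NS["mmi"] + "}"
    emma = "{" + NS["emma"] + "}"
    interp = done.find("mmi:data/emma:emma/emma:interpretation", NS)
    return {
        "requestID": done.get(mmi + "requestID"),
        "status": done.get(mmi + "status"),
        # Confidential defaults to 'false' when absent.
        "confidential": done.get(mmi + "confidential", "false") == "true",
        "tokens": None if interp is None else interp.get(emma + "tokens"),
        # Application-specific children such as <origin> are unqualified.
        "fields": {} if interp is None else {c.tag: c.text for c in interp},
    }
```

Applied to the B.10 "no-input" example, the same function returns no tokens and an empty `fields` dictionary.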

B.10 DoneNotification (from MC to IM, with EMMA "no-input" result)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0"

xmlns:emma="http://www.w3.org/2003/04/emma">

<mmi:doneNotification mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI"

mmi:status="success" mmi:requestID="request-1" >

<mmi:data>

<emma:emma version="1.0">

<emma:interpretation id="int1" emma:no-input="true"/>

</emma:emma>

</mmi:data>

</mmi:doneNotification>

</mmi:mmi>

B.11 CancelRequest (from IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:cancelRequest mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI"

mmi:requestID="request-1"/>

</mmi:mmi>

B.12 CancelResponse (from MC to IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:cancelResponse mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI" mmi:requestID="request-1"

mmi:status="success"/>

</mmi:mmi>

B.13 PauseRequest (from IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:pauseRequest mmi:context="someURI" mmi:source="someURI" mmi:target="someOtherURI"

mmi:requestID="request-1"/>

</mmi:mmi>

B.14 PauseResponse (from MC to IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:pauseResponse mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI" mmi:requestID="request-1"

mmi:status="success"/>

</mmi:mmi>

B.15 ResumeRequest (from IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:resumeRequest mmi:context="someURI" mmi:source="someURI" mmi:target="someOtherURI"

mmi:requestID="request-1"/>

</mmi:mmi>

B.16 ResumeResponse (from MC to IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:resumeResponse mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI" mmi:requestID="request-1"

mmi:status="success"/>

</mmi:mmi>

B.17 ExtensionNotification (formerly the data event, sent in both directions)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:extensionNotification mmi:name="appEvent" mmi:source="someURI"

mmi:target="someOtherURI" mmi:context="someURI" mmi:requestID="request-1">

</mmi:extensionNotification>

</mmi:mmi>

B.18 ClearContextRequest (from the IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:clearContextRequest mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI"

mmi:requestID="request-2"/>

</mmi:mmi>

B.19 ClearContextResponse (from the MC to IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:clearContextResponse mmi:source="someURI" mmi:target="someOtherURI" mmi:context="someURI"

mmi:requestID="request-2" mmi:status="success"/>

</mmi:mmi>

B.20 StatusRequest (from the IM to the MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:statusRequest mmi:requestAutomaticUpdate="true" mmi:source="someURI"

mmi:target="someOtherURI" mmi:requestID="request-3" mmi:context="aToken"/>

</mmi:mmi>

B.21 StatusResponse (from the MC to the IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:statusResponse mmi:automaticUpdate="true" mmi:status="alive"

mmi:source="someURI" mmi:target="someOtherURI" mmi:requestID="request-3" mmi:context="aToken"/>

</mmi:mmi>

C Event Schemas

This specification does not require any particular transport format for life cycle events; however, in the case where XML is used, the following schemas are normative.

C.1 mmi.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" elementFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

Schema definition for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="NewContextRequest.xsd"/>

<xs:include schemaLocation="NewContextResponse.xsd"/>

<xs:include schemaLocation="ClearContextRequest.xsd"/>

<xs:include schemaLocation="ClearContextResponse.xsd"/>

<xs:include schemaLocation="CancelRequest.xsd"/>

<xs:include schemaLocation="CancelResponse.xsd"/>

<xs:include schemaLocation="DoneNotification.xsd"/>

<xs:include schemaLocation="ExtensionNotification.xsd"/>

<xs:include schemaLocation="PauseRequest.xsd"/>

<xs:include schemaLocation="PauseResponse.xsd"/>

<xs:include schemaLocation="PrepareRequest.xsd"/>

<xs:include schemaLocation="PrepareResponse.xsd"/>

<xs:include schemaLocation="ResumeRequest.xsd"/>

<xs:include schemaLocation="ResumeResponse.xsd"/>

<xs:include schemaLocation="StartRequest.xsd"/>

<xs:include schemaLocation="StartResponse.xsd"/>

<xs:include schemaLocation="StatusRequest.xsd"/>

<xs:include schemaLocation="StatusResponse.xsd"/>

<xs:element name="mmi">

<xs:complexType>

<xs:choice>

<xs:sequence>

<xs:element ref="mmi:NewContextRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:NewContextResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:ClearContextRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:ClearContextResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:CancelRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:CancelResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:DoneNotification"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:ExtensionNotification"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:PauseRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:PauseResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:PrepareRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:PrepareResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:ResumeRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:ResumeResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:StartRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:StartResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:StatusRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:StatusResponse"/>

</xs:sequence>

</xs:choice>

<xs:attribute form="unqualified" name="version" type="xs:decimal" use="required"/>

</xs:complexType>

</xs:element>

</xs:schema>

C.2 mmi-datatypes.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

general Type definition schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:simpleType name="targetType">

<xs:restriction base="xs:string"/>

</xs:simpleType>

<xs:simpleType name="requestIDType">

<xs:restriction base="xs:string"/>

</xs:simpleType>

<xs:simpleType name="contextType">

<xs:restriction base="xs:string"/>

</xs:simpleType>

<xs:simpleType name="statusType">

<xs:restriction base="xs:string">

<xs:enumeration value="success"/>

<xs:enumeration value="failure"/>

</xs:restriction>

</xs:simpleType>

<xs:simpleType name="statusResponseType">

<xs:restriction base="xs:string">

<xs:enumeration value="alive"/>

<xs:enumeration value="dead"/>

</xs:restriction>

</xs:simpleType>

<xs:complexType name="contentURLType">

<xs:attribute name="href" type="xs:anyURI" use="required"/>

<xs:attribute name="max-age" type="xs:string" use="optional"/>

<xs:attribute name="fetchtimeout" type="xs:string" use="optional"/>

</xs:complexType>

<xs:complexType name="contentType">

<xs:sequence>

<xs:any maxOccurs="unbounded" namespace="http://www.w3.org/2001/vxml" processContents="skip"/>

</xs:sequence>

</xs:complexType>

<xs:complexType name="emmaType">

<xs:sequence>

<xs:any maxOccurs="unbounded" namespace="http://www.w3.org/2003/04/emma" processContents="skip"/>

</xs:sequence>

</xs:complexType>

<xs:complexType mixed="true" name="anyComplexType">

<xs:complexContent mixed="true">

<xs:restriction base="xs:anyType">

<xs:sequence>

<xs:any maxOccurs="unbounded" minOccurs="0" processContents="skip"/>

</xs:sequence>

</xs:restriction>

</xs:complexContent>

</xs:complexType>

</xs:schema>

C.3 mmi-attribs.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

general Type definition schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:attributeGroup name="source.attrib">

<xs:attribute name="Source" type="xs:string" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="target.attrib">

<xs:attribute name="Target" type="mmi:targetType" use="optional"/>

</xs:attributeGroup>

<xs:attributeGroup name="requestID.attrib">

<xs:attribute name="RequestID" type="mmi:requestIDType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="context.attrib">

<xs:attribute name="Context" type="mmi:contextType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="confidential.attrib">

<xs:attribute name="Confidential" type="xs:boolean" use="optional"/>

</xs:attributeGroup>

<xs:attributeGroup name="context.optional.attrib">

<xs:attribute name="Context" type="mmi:contextType" use="optional"/>

</xs:attributeGroup>

<xs:attributeGroup name="status.attrib">

<xs:attribute name="Status" type="mmi:statusType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="statusResponse.attrib">

<xs:attribute name="Status" type="mmi:statusResponseType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="extension.name.attrib">

<xs:attribute name="Name" type="xs:string" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="requestAutomaticUpdate.attrib">

<xs:attribute name="RequestAutomaticUpdate" type="xs:boolean" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="automaticUpdate.attrib">

<xs:attribute name="AutomaticUpdate" type="xs:boolean" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="group.allEvents.attrib">

<xs:attributeGroup ref="mmi:source.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

<xs:attributeGroup ref="mmi:requestID.attrib"/>

<xs:attributeGroup ref="mmi:context.attrib"/>

<xs:attributeGroup ref="mmi:confidential.attrib"/>

</xs:attributeGroup>

<xs:attributeGroup name="group.allResponseEvents.attrib">

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<xs:attributeGroup ref="mmi:status.attrib"/>

</xs:attributeGroup>

</xs:schema>

C.4 mmi-elements.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

general elements definition schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<!-- ELEMENTS -->

<xs:element name="statusInfo" type="mmi:anyComplexType"/>

</xs:schema>

C.5 NewContextRequest.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" elementFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

NewContextRequest schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="NewContextRequest">

<xs:complexType>

<xs:sequence>

<xs:element minOccurs="0" name="data" type="mmi:anyComplexType"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:source.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

<xs:attributeGroup ref="mmi:requestID.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>
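Because this schema sets attributeFormDefault="qualified", the attributes of a NewContextRequest must be in the mmi namespace, not unprefixed. The Python sketch below builds such an event with the standard library. The attribute local names "Source", "Target" and "RequestID" are hypothetical. The definitions of source.attrib, target.attrib and requestID.attrib are not shown in this section. The helper names q and new_context_request are also illustrative.

```python
import xml.etree.ElementTree as ET
from typing import Optional

MMI_NS = "http://www.w3.org/2008/04/mmi-arch"
ET.register_namespace("mmi", MMI_NS)  # serialize the namespace with the "mmi" prefix


def q(local: str) -> str:
    """Return `local` qualified with the MMI namespace, in ElementTree's {uri}name form."""
    return f"{{{MMI_NS}}}{local}"


def new_context_request(source: str, target: str, request_id: str,
                        payload: Optional[ET.Element] = None) -> ET.Element:
    """Build a NewContextRequest element shaped like the schema above.

    HYPOTHETICAL: the attribute local names "Source", "Target" and "RequestID" are
    assumed. attributeFormDefault="qualified" puts them in the mmi namespace.
    """
    event = ET.Element(q("NewContextRequest"), {
        q("Source"): source,
        q("Target"): target,
        q("RequestID"): request_id,
    })
    if payload is not None:
        # Optional <mmi:data> wrapper; elementFormDefault="qualified" puts it in the mmi namespace.
        data = ET.SubElement(event, q("data"))
        data.append(payload)
    return event


if __name__ == "__main__":
    print(ET.tostring(new_context_request("mc-1", "rf-1", "request-1"), encoding="unicode"))
```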

C.6 NewContextResponse.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" elementFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

NewContextResponse schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="NewContextResponse">

<xs:complexType>

<xs:sequence>

<xs:element minOccurs="0" ref="mmi:statusInfo"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

C.7 PrepareRequest.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" elementFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

PrepareRequest schema for MMI Life cycle events version 1.0.

The optional PrepareRequest event may be sent by the Runtime Framework to let Modality

Components pre-load markup and prepare to run (e.g., in the case of a VoiceXML VUI-MC).

Modality Components are not required to take any particular action in response to this

event, but they must return a PrepareResponse event.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:element name="PrepareRequest">

<xs:complexType>

<xs:choice>

<xs:sequence>

<xs:element name="ContentURL" type="mmi:contentURLType"/>

</xs:sequence>

<xs:sequence>

<xs:element name="Content" type="mmi:anyComplexType"/>

</xs:sequence>

<xs:sequence>

<xs:element minOccurs="0" name="Data" type="mmi:anyComplexType"/>

</xs:sequence>

</xs:choice>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>
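The xs:choice in PrepareRequest.xsd admits exactly one of three branches. The third branch holds a single optional element, so an empty PrepareRequest also matches. The Python sketch below checks only the child-element structure of an event against those branches and ignores attributes. PREPARE_REQUEST_BRANCHES, build_event and prepare_request_children_ok are illustrative names, not part of the specification.

```python
import xml.etree.ElementTree as ET

MMI_NS = "http://www.w3.org/2008/04/mmi-arch"

# Branches of the <xs:choice> in PrepareRequest.xsd, as tuples of child local names.
# The third branch contains one optional element, so it also matches an empty event.
PREPARE_REQUEST_BRANCHES = {("ContentURL",), ("Content",), ("Data",), ()}


def build_event(event_name: str, *child_names: str) -> ET.Element:
    """Build an event whose children are empty, mmi-qualified elements (test helper)."""
    event = ET.Element(f"{{{MMI_NS}}}{event_name}")
    for name in child_names:
        ET.SubElement(event, f"{{{MMI_NS}}}{name}")
    return event


def prepare_request_children_ok(event: ET.Element) -> bool:
    """Check only the child-element structure of a PrepareRequest (attributes are ignored)."""
    prefix = f"{{{MMI_NS}}}"
    names = []
    for child in event:
        # elementFormDefault="qualified": local children must be in the mmi namespace.
        if not child.tag.startswith(prefix):
            return False
        names.append(child.tag[len(prefix):])
    return tuple(names) in PREPARE_REQUEST_BRANCHES
```

Read literally, this choice rejects a PrepareRequest that carries both ContentURL and Data. By contrast, the StartRequest schema allows an optional data element next to either ContentURL or Content.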

C.8 PrepareResponse.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" elementFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

PrepareResponse schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="PrepareResponse">

<xs:complexType>

<xs:sequence>

<xs:element minOccurs="0" name="data" type="mmi:anyComplexType"/>

<xs:element minOccurs="0" ref="mmi:statusInfo"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

C.9 StartRequest.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" elementFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

StartRequest schema for MMI Life cycle events version 1.0.

The Runtime Framework sends the StartRequest event to invoke a Modality Component

(e.g., to start loading a new GUI resource or to start ASR or TTS). The Modality Component

must reply with a StartResponse event. If the Runtime Framework has previously sent a

PrepareRequest event, it may leave the ContentURL and Content elements empty; the Modality

Component then uses the values from the PrepareRequest event. If the StartRequest event includes

new values for these elements, they override those in the PrepareRequest event.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:element name="StartRequest">

<xs:complexType>

<xs:choice>

<xs:sequence>

<xs:element name="ContentURL" type="mmi:contentURLType"/>

<xs:element minOccurs="0" name="data" type="mmi:anyComplexType"/>

</xs:sequence>

<xs:sequence>

<xs:element name="Content" type="mmi:anyComplexType"/>

<xs:element minOccurs="0" name="data" type="mmi:anyComplexType"/>

</xs:sequence>

</xs:choice>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>
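The override rule in the StartRequest documentation can be sketched as follows. Values present in the StartRequest win. If the StartRequest leaves both content elements empty, the values from the earlier PrepareRequest apply. ContentSpec and effective_content are hypothetical names, and inline Content is simplified to a string. Raising ValueError when neither event supplies content is an assumed policy that stands in for whatever failure StartResponse a real Modality Component would send. This schema does not specify that behavior.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ContentSpec:
    """HYPOTHETICAL holder for the content-related elements of one event."""
    content_url: Optional[str] = None  # value of ContentURL, if present
    content: Optional[str] = None      # inline Content, simplified to a string


def effective_content(prepared: Optional[ContentSpec], start: ContentSpec) -> ContentSpec:
    """Resolve the content a Modality Component should run for a StartRequest."""
    # New values in the StartRequest override the PrepareRequest. An empty string
    # counts as "left empty", matching the documentation above.
    if start.content_url or start.content:
        return start
    if prepared is not None:
        return prepared
    # ASSUMED policy: nothing to run. A real component would report failure instead.
    raise ValueError("StartRequest has no content and no prior PrepareRequest")
```

ContentURL and Content are alternatives in the schema's choice, so the sketch replaces the whole ContentSpec instead of merging individual fields.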

C.10 StartResponse.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

StartResponse schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="StartResponse">

<xs:complexType>

<xs:sequence>

<xs:element name="data" minOccurs="0" type="mmi:anyComplexType"/>

<xs:element ref="mmi:statusInfo" minOccurs="0"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

C.11 DoneNotification.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" elementFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

DoneNotification schema for MMI Life cycle events version 1.0.

The DoneNotification event is used by the Modality Component to indicate that it has reached

the end of its processing. For a VUI-MC, it can carry the ASR recognition result (or status

information such as noinput or nomatch), or report that TTS or audio playback has finished.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="DoneNotification">

<xs:complexType>

<xs:sequence>

<xs:element minOccurs="0" name="data" type="mmi:anyComplexType"/>

<xs:element minOccurs="0" ref="mmi:statusInfo"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>
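The DoneNotification content model is an xs:sequence of two optional elements. Each of data and statusInfo may appear at most once, and data must come first. The Python sketch below enforces only that child structure and returns both elements. It does not check the response attributes or perform full schema validation; an XSD validator would be needed for that. read_done_notification is an illustrative name, and the payload element names in any test document are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Tuple

MMI_NS = "http://www.w3.org/2008/04/mmi-arch"
NS = {"mmi": MMI_NS}

# Position of each allowed child in the schema's xs:sequence.
SEQUENCE_ORDER = {f"{{{MMI_NS}}}data": 0, f"{{{MMI_NS}}}statusInfo": 1}


def read_done_notification(xml_text: str) -> Tuple[Optional[ET.Element], Optional[ET.Element]]:
    """Parse a DoneNotification and return its (data, statusInfo) children, or None for each."""
    root = ET.fromstring(xml_text)
    if root.tag != f"{{{MMI_NS}}}DoneNotification":
        raise ValueError(f"not a DoneNotification: {root.tag}")
    positions = []
    for child in root:
        if child.tag not in SEQUENCE_ORDER:
            raise ValueError(f"unexpected child element: {child.tag}")
        positions.append(SEQUENCE_ORDER[child.tag])
    # Strictly increasing positions mean schema order with no repeats.
    if positions != sorted(set(positions)):
        raise ValueError("children must be data then statusInfo, each at most once")
    return root.find("mmi:data", NS), root.find("mmi:statusInfo", NS)
```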

C.12 CancelRequest.xsd

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" attributeFormDefault="qualified" elementFormDefault="qualified" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

CancelRequest schema for MMI Life cycle events version 1.0.

The Runtime Framework sends the CancelRequest event to stop processing in the Modality

Component (e.g., to cancel ASR or TTS/audio playback). The Modality Component must respond

with a CancelResponse event.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:element name="CancelRequest">

<xs:complexType>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<!-- no elements -->

</xs:complexType>

</xs:element>

</xs:schema>
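The documentation in these schemas states that a Modality Component must answer PrepareRequest, StartRequest and CancelRequest with the matching response event. The Python sketch below shows hypothetical Runtime Framework bookkeeping that tracks which of those requests are still unanswered. It assumes that a response is matched to its request by an identical requestID value. PendingRequests and REQUIRED_RESPONSE are illustrative names, not part of the specification.

```python
# Request events whose documentation says the Modality Component must answer,
# mapped to the response event it must return.
REQUIRED_RESPONSE = {
    "PrepareRequest": "PrepareResponse",
    "StartRequest": "StartResponse",
    "CancelRequest": "CancelResponse",
}


class PendingRequests:
    """HYPOTHETICAL tracker of requests that still await their response.

    ASSUMPTION: a response is matched to its request by an identical requestID value.
    """

    def __init__(self) -> None:
        self._pending: dict[str, str] = {}  # requestID -> expected response event name

    def sent(self, event_name: str, request_id: str) -> None:
        """Record an outgoing request if it requires a response."""
        expected = REQUIRED_RESPONSE.get(event_name)
        if expected is not None:
            self._pending[request_id] = expected

    def received(self, event_name: str, request_id: str) -> bool:
        """Return True if this response settles an outstanding request."""
        if self._pending.get(request_id) == event_name:
            del self._pending[request_id]
            return True
        return False

    def outstanding(self) -> dict[str, str]:
        """Return a copy of the requests still waiting for a response."""
        return dict(self._pending)
```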

C.13 CancelResponse.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

CancelResponse schema for MMI Life cycle events version 1.0.