Workshop Report

See also:

- The minutes of day 1

- The minutes of day 2

- Videos of most talks, linked from the agenda

- Reports from participants: Andrzej Mazur, Matthew Atkinson

- Videos on games tech from the W3C developers meetup co-organized between W3C Developers and SeattleJS on the day before the workshop

- Status update on Web Games technologies published on 26 November 2019 and that details standardization progress since the workshop

Executive summary

W3C held a two-day workshop on Web Games in Redmond on 27-28 June 2019, hosted by Microsoft, bringing together browser vendors, game engine developers, game developers, game distributors, and device manufacturers (100 participants in total) to enrich the Open Web Platform with additional technologies for games.

Workshop participants acknowledged progress in Web technologies over the past few years, which continues today for example with WebAssembly and WebGPU. They highlighted support for real threading on the Web for 3D rendering and advanced audio processing as a core need to address to run AAA content on the Web. They also discussed proposals to improve support for cloud gaming scenarios, including proposals to reduce I/O latency (such as the pointerrawupdate event or the Audio Device Client proposal) and improve communication in real-time between clients and servers (the WebTransport proposal). They also identified technical updates that would benefit the Web games ecosystem as a whole, for instance more efficient bindings between WebAssembly and Web APIs defined using WebIDL, a stable and complete Gamepad specification, or the possibility to specify multiple storage buckets per origin. Broader discussions took place on discoverability, monetization, hosted Web runtimes and accessibility (for instance on efficient mechanisms to expose Web accessibility hooks to games compiled to WebAssembly).

On top of individual topics, one of the suggested next steps is to explore the possible creation of a games activity at W3C to coordinate inputs from the games community, pursue some of the broader discussions mentioned above, and track progress on needs identified during the workshop.

Introduction

W3C holds workshops to discuss a specific space from various perspectives, identify needs that could warrant standardization efforts at W3C or elsewhere, and assess support and priorities among relevant communities. The Workshop on Web Games was held in Redmond on 27-28 June 2019, hosted by Microsoft. The main goal of the workshop was to bring together browser vendors, game engine developers, game developers, game distributors, and device manufacturers to enrich the Open Web Platform with additional technologies for games. The workshop convened a diverse group of participants representing different facets of the industry, with 101 registered participants in total. Discussions covered a wide range of topics as well, from discoverability and monetization issues down to specific technical issues such as threading and latency.

Setting the context

The first session sets the context for the rest of the workshop, raising an initial set of priorities and obstacles for games on the Web. In his keynote, David Catuhe, author of the Babylon.js game engine at Microsoft, shares his love for the Web and his frustration with some of its limitations when compared to native. These limitations are precisely what the workshop needs to tackle: asset management, threading support, code performance in WebAssembly, cloud gaming, gamepad support, etc.

Chris Hawkins reports on Facebook's experience with Instant Games, HTML5 games that run in a WebView within Facebook's app. Christian Rudnick and Gili Zeevi detail recurring issues they encounter with Web games they develop or that get published on Softgames' platform. Andrzej Mazur reviews the history of Web games for indie developers, starting with the first workshop on Web games that W3C organized in 2011. The technical landscape for game developers is much better in 2019: the most pressing technologies have been developed and implemented in the meantime (Workers, Pointer Lock, Gamepad, etc.) and the Web games ecosystem has grown exponentially in the past few years.

“All you had to do [in 2011] was just make a game [...] and it would be everywhere, and it just worked. A few years later we have a situation in which some developers are claiming that they made 500 games in a few years, and we have hundreds, if not thousands of developers like these. It's super easy to create an HTML5 game compared to a few years ago.” - Andrzej Mazur

With so many developers and so many games being developed, discoverability has become a key issue for games on the Web. Coupled with the difficulty of monetizing content on the Web, this makes it difficult to develop games for the Web, all the more so as it is easy to clone an entire Web game or to re-create casual Web games in just a few days, even if code is obfuscated. How can Web games be protected? Yet the potential for business is enormous when one compares with the native world.

“The simplicity and the immediacy of in-app purchases [in native games] is driving 60 billion dollars revenue on a yearly basis. This is a dream for us to achieve the same thing in Web games.” - Gili Zeevi

The importance and difficulty of keeping players engaged in a game is also pointed out. Web games do not need to be installed, which alleviates some of the initial friction, but games need to load a bunch of assets before they can run, which can take time, whereas players expect games to start in a snap. Long starting times are a sure way to lose players, with as much as 12% of players dropping for every second taken to load the game. Push notifications help re-engage players into a game. They are possible on the Web but conversion rates are much higher with native notifications.

“The Web is everywhere and the Web is even in places where network is not that good.” - David Catuhe

The difficulty of inferring device capabilities makes it hard to evaluate what 3D textures can be loaded without running into memory and similar issues that can crash the game. This is obviously problematic from a user experience perspective and, in the case of hosted apps, crashing the game actually means crashing the entire app. The lack of feature parity between regular browsers and WebView also makes it challenging to run games in hosted apps.

“It's difficult to infer device capabilities and what sort of device you're operating on. This is a challenge for a lot of our developers who tend to load up texture memory and then end up crashing the WebView.” - Chris Hawkins

Other issues that complete the landscape for the workshop include the difficulty to run a game completely offline, the fact that the "Add to home screen" feature is sometimes hard to find, the need to transition game sessions from one device to another seamlessly, and the need for better development tools.

Expressing the needs

See also: Report on mapping needs

The “mapping games users and designers needs to technologies” session was designed to get participants to think about specific user needs and how they could be fulfilled with web games technologies. The user groups were touch/haptics researchers, UX designers, gamers, hardware developers and serious games designers (educational and therapeutic applications), all groups who could be using web game technologies. The outputs of the workshop are a matrix and a series of speculative design ideas. The matrix shows the user groups and ideas that could help them. The speculative design looked at how these groups could be using the web in the future, and what would need to be implemented for this to happen.

Themes have been identified from the outputs of the session. One of the biggest themes was around APIs, with suggestions for new APIs or for extending existing ones, for example the Gamepad API. Social/collaborative was another large theme, with suggestions such as creating an open source social graph, permission-based access for friends and a proximity API. Other themes include metadata, standards, latency, documentation and UI.

The speculative design ideas are varied but there were a few that would give the user more agency, for example being able to easily disconnect from touch, and having open source natural language processing. There were also ideas around having 3D model texture and temperature as material properties, native support for 3D in the browser, and expanding the access to instrumentation and tooling for performing UX studies in the browser. The documents produced from this session can be analysed further to find practical solutions that fulfill these user groups' needs.

Topics

Threading support on the Web

Game engines need to maintain complex structures in memory such as the scene graph. Various algorithms need to be applied to the scene graph before a frame can be rendered, for instance frustum culling to evict objects that do not need to be rendered or particles computations. These operations can be run in parallel, but they need access to the scene graph.
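As an illustration of the kind of read-only pass described above, here is a minimal frustum culling sketch. The `SceneNode` and `Plane` shapes, the bounding-sphere test and the box-shaped "frustum" are assumptions made for the example, not something presented at the workshop.

```typescript
// A plane n.p + d = 0 whose normal points towards the inside of the frustum.
interface Plane { nx: number; ny: number; nz: number; d: number; }

// Hypothetical scene node reduced to a named bounding sphere.
interface SceneNode { name: string; x: number; y: number; z: number; radius: number; }

// Keeps the nodes whose bounding sphere is at least partly inside every plane.
// The pass only reads the scene, which is why it could run in parallel.
function cullScene(nodes: readonly SceneNode[], frustum: readonly Plane[]): SceneNode[] {
  return nodes.filter((node) =>
    frustum.every(
      (p) => p.nx * node.x + p.ny * node.y + p.nz * node.z + p.d >= -node.radius
    )
  );
}

// Toy "frustum": an axis-aligned box from -10 to 10 on every axis.
const boxFrustum: Plane[] = [
  { nx: 1, ny: 0, nz: 0, d: 10 },  // x >= -10
  { nx: -1, ny: 0, nz: 0, d: 10 }, // x <= 10
  { nx: 0, ny: 1, nz: 0, d: 10 },  // y >= -10
  { nx: 0, ny: -1, nz: 0, d: 10 }, // y <= 10
  { nx: 0, ny: 0, nz: 1, d: 10 },  // z >= -10
  { nx: 0, ny: 0, nz: -1, d: 10 }, // z <= 10
];
```

A real engine would derive the six planes from the camera's view-projection matrix and walk a hierarchy rather than a flat list.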

Workers provide some support for threads on the Web. However, communication between workers on the Web is only possible through postMessage, which allows one to pass strings across workers, and through unstructured shared memory buffers using SharedArrayBuffer. None of these is satisfactory for the problem at hand:

- Using postMessage means having to serialize/deserialize objects, which is too costly for a scene that needs to be exchanged between workers 60 times per second.

- Web browsers dropped support for SharedArrayBuffer due to Spectre and Meltdown and are only progressively re-introducing it. Luke Wagner reports that browser vendors recently reached consensus on a mechanism that would allow pages to opt in to SharedArrayBuffer, provided relevant HTTP headers are set. This should boost the reappearance of SharedArrayBuffer on the Web, across browsers and devices. However, SharedArrayBuffer is not a good fit for the problem at hand since we want to share a complex graph structure, and not an unstructured buffer.
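To make the "unstructured buffer" limitation concrete, the sketch below flattens scene nodes into a SharedArrayBuffer by hand. The four-floats-per-node layout and the helper names are assumptions chosen for the example; the point is that the application, not the platform, has to define and maintain the layout.

```typescript
// ASSUMED layout: each node occupies four floats (x, y, z, radius).
const FLOATS_PER_NODE = 4;

// Allocates shared memory for `count` nodes. The underlying buffer can be
// handed to a worker with postMessage without being copied.
function createSharedNodes(count: number): Float32Array {
  const bytes = count * FLOATS_PER_NODE * Float32Array.BYTES_PER_ELEMENT;
  return new Float32Array(new SharedArrayBuffer(bytes));
}

// Writes one node at the given index using the manual layout.
function writeNode(
  view: Float32Array, index: number, x: number, y: number, z: number, radius: number
): void {
  view.set([x, y, z, radius], index * FLOATS_PER_NODE);
}

// Reads one node back; every reader has to know the same layout.
function readNode(view: Float32Array, index: number) {
  const o = index * FLOATS_PER_NODE;
  return { x: view[o], y: view[o + 1], z: view[o + 2], radius: view[o + 3] };
}
```

Parent/child links, names or materials do not fit this flat layout without further hand-written encoding, which is the gap a structured sharing mechanism would fill.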

David Catuhe champions the idea of a real threading mechanism on the Web that would allow structured memory to be shared across threads. David also questions the design choice that led to workers taking a file as input, and not a function. Game engines handle the threading logic on behalf of underlying applications. Having to pass a file to a worker means that game engines need to keep track of modules that these applications may import, which requires some help from the application itself. If workers could start from a function, they would gain access to code (even though they wouldn't share memory with the initial thread).
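A common workaround today, not something proposed at the workshop, is to turn a function's source text into a worker script. The helper below is a hypothetical sketch of that approach; it also shows the limitation mentioned above, since only the function's own text travels to the worker, not the modules or variables it depends on.

```typescript
// Builds the source text of a worker script that applies `fn` to each message
// and posts the result back. `fn` must be self-contained: anything it closes
// over or imports is NOT available in the worker.
function workerSourceFromFunction(fn: (input: number) => number): string {
  return (
    `const task = ${fn.toString()};\n` +
    `self.onmessage = (event) => self.postMessage(task(event.data));`
  );
}

// In a browser, the text could then be turned into a worker along these lines:
//   const blob = new Blob([source], { type: "text/javascript" });
//   const worker = new Worker(URL.createObjectURL(blob));
const doublingWorkerSource = workerSourceFromFunction((n) => n * 2);
```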

Game developers in the room are generally supportive of the idea but note the challenge of adding support for real threading in JavaScript. Workshop participants refine the proposal during a breakout session on the topic on the second day. During that session, Luke Wagner notes that the proposed notion of TypedObject in JavaScript, which is surfacing in WebAssembly as well, would make it possible to have immutable objects that could then be shared across workers. Although this solution would not address general threading scenarios, it could provide a good middle-ground solution for game engines: most algorithms that need to run on a scene graph only need read-only access to the structure.

David takes an action to flesh out a concrete proposal. Down the road, support for TypedObject should be discussed within ECMAScript's TC39 and adjustments to workers within WHATWG. Discussions with the WebAssembly community might also help align needs and proposed solutions.

WebAssembly

Luke Wagner describes the history and status of WebAssembly in 2019, and details the WebIDL Bindings proposal (renamed to "Interface Types Proposal" after the workshop) that will make WebAssembly bindings from/to WebIDL APIs more efficient. The initial proposal used WebAssembly tables and index maps to convert between JavaScript and WebAssembly, which was suboptimal. The new proposal refines reference types in WebAssembly.

“The goal [of the Mozilla Games program] at the time was: if we make the web platform better for games, then in addition to allowing games to run, which is really cool, we'll be making it better for all sorts of use cases.” - Luke Wagner

The WebIDL Bindings proposal opens the door for tighter integration of Web APIs into game engines written in C++ et al. Matthew Atkinson notes the potential to use these bindings to map native code to Web accessibility interfaces such as those defined in the Accessibility Object Model, under incubation in the WICG. This would provide a useful mechanism to alleviate the opacity of games developed in native code with regards to accessibility when they are run on the Web.

Debugging C++ code can of course be done using native debuggers, but there is always Web-specific code in applications compiled to WebAssembly. Björn Ritzl pleads for better debugging tools there. Renewed activity on the DWARF for WebAssembly proposal in the WebAssembly Community Group looks promising in that regard.

During the breakout session on WebAssembly on day 2, participants discuss:

- The need to keep WebAssembly in mind when designing new Web APIs

- The fact that WebAssembly does not provide any extra protection for game assets. Web Cryptography might be a better choice for game developers.

- Ways for JavaScript developers not to miss the WebAssembly wagon, e.g. with AssemblyScript

- Performance of module loading and caching, and the cost of calls from JavaScript to WebAssembly

3D rendering

The Khronos Group publishes royalty-free 3D standards to bring silicon acceleration to graphics, such as OpenGL/WebGL, Vulkan, OpenXR and SPIR. WebGL triggered work on glTF, a compact file format for 3D models suitable for transmission across a network. Neil Trevett reviews glTF features, including support for up-to-date 3D, animations and Physically Based Rendering (PBR) materials. glTF is independent from the run time and most environments now render glTF content (game engines, XR engines, Facebook, Microsoft Office, Adobe tools, Blender, etc.).

glTF Extensions are being considered for inclusion in the core specification, starting with mesh compression, based on Google's Draco mesh compression technology, possibly followed by some mechanism to compress textures, which are often the larger part of 3D assets.

“It's the 21st century [and] we're still confounded by this diverse set of GPU native texture formats, and every platform seems to support a different set of GPU-accelerated formats. There's PVRTC, ASTC on the desktop. It's different to mobile [...] In the native space, very often, game developers actually end up shipping multiple versions of their texture assets.” - Neil Trevett

On top of compression, a key issue with textures is that platforms support different GPU native texture formats. The Khronos Group is working with Binomial and Google to integrate Binomial's Basis Universal format into glTF. This feature, known as Compressed Texture Transmission Format (CTTF), would provide a suitable texture format for transmission that can easily be transcoded into any of the GPU formats actually supported by the platform, on the fly and at low processing cost, without having to unpack the entire textures first.
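On the Web, the set of GPU formats a device supports surfaces as WebGL extensions, so an application could pick a transcoding target along the lines of the sketch below. The extension names are existing WebGL extensions; the preference order, the short format labels and the uncompressed fallback are assumptions made for the example.

```typescript
// Candidate GPU formats in an ASSUMED order of preference, each paired with
// the WebGL extension that exposes it.
const TRANSCODE_TARGETS: ReadonlyArray<{ format: string; extension: string }> = [
  { format: "astc", extension: "WEBGL_compressed_texture_astc" },
  { format: "bc7", extension: "EXT_texture_compression_bptc" },
  { format: "s3tc", extension: "WEBGL_compressed_texture_s3tc" },
  { format: "etc2", extension: "WEBGL_compressed_texture_etc" },
  { format: "pvrtc", extension: "WEBGL_compressed_texture_pvrtc" },
];

// Returns the first preferred format the platform supports, or "rgba8"
// (uncompressed) when no compressed format is available.
// In a browser, `supportedExtensions` would come from gl.getSupportedExtensions().
function pickTranscodeTarget(supportedExtensions: readonly string[]): string {
  const match = TRANSCODE_TARGETS.find((t) => supportedExtensions.includes(t.extension));
  return match ? match.format : "rgba8";
}
```

With a universal transmission format, this choice is made once on the client, instead of shipping one copy of each texture per GPU format.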

Another potential new feature for glTF is support for the next generation of Physically Based Rendering (PBR), primarily pushed by 3D commerce folks. Additional features could be integrated down the road but need to be prioritized, for instance facial animation for avatars, body animation or point cloud technologies. Neil notes that vertical activities are starting to build on top of glTF too, such as the VRM format for human avatars developed in Japan.

Discussions suggest that it would be useful to consider metadata definitions for glTF to expose semantics of 3D scenes, notably for accessibility purpose. A liaison between the Khronos Group and the Accessible Platform Architecture Working Group would help converge on suitable solutions in that space.

Switching to actual 3D rendering, Myles C. Maxfield presents the current status of the WebGPU proposal. WebGPU exposes a common abstraction on top of different hardware (DirectX, Metal and Vulkan). This would allow developers to leverage the full capabilities of the hardware, and would also let developers take advantage of computing capabilities of graphic cards, e.g. for artificial intelligence (AI) scenarios.

Core WebGPU features include a security model compatible with the Web, portability across platforms and performance. WebGPU is also designed to allow batching context parameters into an opaque object that can be reused across calls, making WebGPU very good at switching between different sets of configurations.

The definition of a suitable shading language for WebGPU is still under discussion, with two competing proposals on the table: a dialect of SPIR-V and WHLSL, a solution based on HLSL. The languages are similar but the workflow to create code is different: SPIR-V gets compiled, whereas WHLSL can be used out of the box. The goal is to converge on one language.

The WebGPU proposal is being incubated in the GPU for the Web Community Group. The group is considering transitioning to a Working Group.

On the second day, some workshop participants discuss the possibility to create a 3D element for the Web, which could be particularly useful for 3D commerce use cases, leading to the creation of an issue on Native glTF support on the incubation repository of the Immersive Web CG. Here as well, a liaison between the Khronos Group and the Immersive Web groups would help continue the discussion.

Assets loading & storage

As stressed during the context-setting session, long starting times are a sure way to lose players before they even start to play. Kasper Mol shares statistics from the Poki games platform that show how the conversion to actual play time falls with the initial download size of a game: 20% of potential players disappear before the game starts for a 10MB download in countries where users have good connections, up to 40% when connection is poor.

On top of compression techniques, it seems that the problem is mostly not technical but rather educational: substantial gains can be achieved by bundling things differently and only loading core assets when the game starts. However, most game developers are used to the native world, where games are installed and all assets get fetched upfront. A best practices document would help raise awareness of the issue among developers.
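The bundling idea can be sketched as a split of the asset manifest into what must be fetched before the first frame and what can wait. The `Asset` shape and its `core` flag are hypothetical, introduced only for this example.

```typescript
// Hypothetical manifest entry: `core` marks assets needed before play starts.
interface Asset { url: string; bytes: number; core: boolean; }

// Splits a manifest into assets to fetch upfront and assets to load later,
// and reports the size of the initial download.
function planLoading(manifest: readonly Asset[]) {
  const upfront = manifest.filter((asset) => asset.core);
  const deferred = manifest.filter((asset) => !asset.core);
  const upfrontBytes = upfront.reduce((sum, asset) => sum + asset.bytes, 0);
  return { upfront, deferred, upfrontBytes };
}
```

The deferred list could then be fetched in the background while the player is already in the first level.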

Andrew Sutherland reviews the different technologies available for storage of game assets: cookies, localStorage, IndexedDB, and the Cache API. Andrew emphasizes the cost of serialization/deserialization, which had already been pointed out during the discussion on threading. More importantly, he explains quota and bucket restrictions (per TLD+1 or origin) and how browsers evict content, making it hard to rely on persistent storage in practice.

“Buckets are the spec standard for eviction. We'll wipe out all of your site's data if we wipe you out. The interesting stuff going forward spec-wise is we would like to have multiple buckets. For instance the emails the user has composed are something that probably you would want to keep but things that are just cache could possibly be evicted.” - Andrew Sutherland

Feedback from participants shows support for having multiple buckets per origin (e.g. to distinguish between permanent resources and those that can be downloaded again when needed), although the exact semantics of how this can be achieved, so that users can understand what they're committing to, are hard to get right. One possible option could be through the Background Fetch proposal, which connects with the download manager, making it easier for users to grasp what is going on behind the scenes.

Games accessibility

During the session on accessibility, Luis Rodriguez details the landscape for users with motor impairment, reviewing different types of motor impairment conditions such as cerebral palsy, neural tube defects, and trauma incidents, and their implications in terms of accessibility needs and of ability switches (sip and puff headsets, wands, etc.) that users may use to control a game. Matthew Atkinson describes how these needs can be mapped to concrete design choices and assistive technologies. Practical challenges remain, but it is quite positive to note the progression of awareness around accessibility. The question is no longer "What is it?" but "How do I do it?".

“Games are meant to be challenging [...] If it's not challenging, it's not fun! But the challenge varies from game to game, so the way that you make a game accessible varies from game to game.” - Ian Hamilton

There are lots of improvements that can be made to the actual contents of a game to improve its accessibility, such as increasing the size of target areas, using spatialized audio or visual indications to mark the origin of some game event, or providing subtitles, which turn out to be heavily used in games by all users. On top of that, the best approach to make a game accessible is to integrate with assistive technologies through APIs, as these APIs were designed to provide accessibility support for free. In a DOM world, assistive technologies build on top of an accessibility tree, which is associated with the DOM. HTML elements provide base semantics, WAI-ARIA defines additional roles and attributes. However, many games are rendered in a canvas, maybe because they were compiled to WebAssembly or just generally render things pictorially. There is no standard mechanism to provide the required semantic information to assistive technologies in Web browsers when there is no DOM. However, that is precisely the goal of the Accessibility Object Model (AOM) proposal, under incubation in the WICG.

One question that arises is: how can games compiled to WebAssembly take advantage of these new Web APIs? Calling Web APIs from WebAssembly code is slow and convoluted today, but the WebIDL Bindings proposal, discussed in the WebAssembly session, would provide an efficient mechanism for game engines that compile games into WebAssembly to integrate support for the AOM APIs.

Luis champions the idea that games should integrate voice capability, meaning real voice conversation capabilities and not only text-to-speech or voice recognition. There are a lot of new developments in what is known as the voice UX ecosystem. Voice assistants are a good illustration of that trend.

“Will people want to talk to your web game? I venture to say yes because in the case of users with disability there's that escapism, that coping strategy that our games can provide.” - Luis Rodriguez

Ian Hamilton introduces the 21st Century Communications and Video Accessibility Act (CVAA) that video games now need to comply with. The act provides additional incentives to integrate accessibility consideration into the design of any communication functionality present in a game. While compliance is an obvious incentive, accessibility should not stop at compliance; developers should be thinking about how to make their experiences in general as enjoyable as possible. CVAA requires accessibility to be considered from early in development and requires people with disabilities to be consulted as part of it; these considerations and the technical solutions that become available as a result should all contribute to general accessibility improvements, improvements that are not restricted to communications functionality alone.

Audio & Games

Michel Buffa demonstrates the power of the Web Audio API as it exists today, notably showing how it is possible to create an open plugin standard for audio on the Web using JavaScript and/or an AudioWorklet that runs code in WebAssembly, which makes it possible to run audio plugins on the Web written in specialized languages such as FAUST. Michel points out latency issues with audio I/O on some platforms. The outputLatency property of AudioContext is not implemented. This is more an implementation (and driver) issue than an issue with the Web Audio API (support in Firefox was added shortly after the workshop).

Philippe Milot reports on Audiokinetic's journey to have the Wwise sound engine run on the Web, and points out the limits of the AudioWorklet approach for audio engines such as Wwise that need to integrate closer to the hardware and that rely on threading support. Threading needs overlap with those discussed in the sessions on threading.

Hongchan Choi presents the Audio Device Client (ADC) proposal, under incubation in the Web Audio Community Group, which aims at solving the issues encountered by Philippe and other companies in the pro-audio space by providing a truly low-level audio I/O. Key features of the proposal include:

- A dedicated global scope in a separate thread (at the right priority) for audio processing to avoid complex plumbing and workarounds that some libraries need to resort to today

- A low-level audio I/O, just a callback with input and output buffers, which makes it a perfect fit for WebAssembly-powered audio processing

- Configurable render block size (not locked to the 128 sample-frames of the Web Audio API)

- Ability to select audio I/O devices via a MediaTrackConstraints pattern

- Implicit sample rate conversion when needed
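The "just a callback with input and output buffers" model, combined with a configurable block size, can be pictured with the sketch below. The `AudioCallback` signature and the `renderInBlocks` driver are hypothetical and do not reproduce the actual ADC proposal; they only illustrate the processing model.

```typescript
// HYPOTHETICAL callback shape: one block of input samples in, one block out.
type AudioCallback = (input: Float32Array, output: Float32Array) => void;

// A trivial processor: multiplies every sample by a constant gain.
function makeGain(gain: number): AudioCallback {
  return (input, output) => {
    for (let i = 0; i < output.length; i++) {
      output[i] = input[i] * gain;
    }
  };
}

// Feeds a signal to the callback in blocks of `blockSize` frames, the way a
// device client with a configurable render block size might. The last block
// may be shorter than `blockSize`.
function renderInBlocks(
  signal: Float32Array, blockSize: number, callback: AudioCallback
): Float32Array {
  const out = new Float32Array(signal.length);
  for (let start = 0; start < signal.length; start += blockSize) {
    const end = Math.min(start + blockSize, signal.length);
    // subarray creates views on the same memory, so nothing is copied.
    callback(signal.subarray(start, end), out.subarray(start, end));
  }
  return out;
}
```

In a WebAssembly-based engine, the callback body would typically hand both buffers to compiled C/C++ code instead of looping in JavaScript.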

“[ADC] is meant to be the lowest layer in the web platform: no built-in building blocks or infrastructure, no redundant overhead. Just a callback function with input and output buffers, perfect for WASM-heavy approach. Also, it is close to hardware layer, so it allows you to configure a device client in different ways without exposing users' privacy.” - Hongchan Choi

There is general support in the room for the ADC approach presented, which would go a long way toward addressing audio needs in games. There is a lot of audio-processing code written in C/C++ that could be ported to the Web easily if ADC gets adopted.

During the breakout session on audio, workshop participants re-iterate the request for a low-level approach from pro-audio vendors, investigate how the WebCodecs proposal could integrate with ADC, and talk about positional audio for immersive scenarios. The Web Audio Working Group is gathering use cases for the next iteration of the Web Audio API.

Gamepad support

See also: Presentations and Minutes

The Gamepad API has been on the Recommendation track for a number of years already. It provides support for basic gamepad functionality and has been implemented across browsers. However, its development has stalled since 2014 for lack of momentum. And yet Kelvin Yong explains that the current limitations of the Gamepad API make it impossible for game companies to rely on Web technologies for user interfaces. The main changes required are:

- A standardization of gamepad inputs: controller buttons may be exposed differently to applications. This is all the more problematic as the variation exists both across controllers and across browsers for the same controller! There may also be a need for remapping buttons for accessibility purposes.

- Support for modern controller features: touch surfaces, light indicators, haptics, accelerometer, gyroscope, etc.
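Button remapping can already be done at the application level, as in the sketch below. The `GamepadLike` and `ButtonLike` interfaces are structural subsets of the Gamepad API objects; the `Action` names and the bindings table are hypothetical. The default indices follow the "standard" mapping layout of the Gamepad specification (0 and 1 are face buttons, 9 is start), which only holds when the browser reports that mapping for the controller.

```typescript
// Structural subsets of the Gamepad API's GamepadButton and Gamepad objects.
interface ButtonLike { pressed: boolean; value: number; }
interface GamepadLike { id: string; mapping: string; buttons: readonly ButtonLike[]; }

// HYPOTHETICAL game actions and their button bindings.
type Action = "jump" | "fire" | "pause";
type Bindings = Record<Action, number>;

// Defaults assume the "standard" mapping: 0 and 1 are face buttons, 9 is start.
const defaultBindings: Bindings = { jump: 0, fire: 1, pause: 9 };

// Resolves each action to the state of the button it is currently bound to.
// Buttons that the controller does not expose read as not pressed.
function readActions(pad: GamepadLike, bindings: Bindings): Record<Action, boolean> {
  const isPressed = (index: number) => pad.buttons[index]?.pressed ?? false;
  return {
    jump: isPressed(bindings.jump),
    fire: isPressed(bindings.fire),
    pause: isPressed(bindings.pause),
  };
}
```

A player who cannot comfortably reach a face button could, for instance, rebind `jump` to a shoulder button with `{ ...defaultBindings, jump: 5 }`.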

The current plan is to bring the current Gamepad specification to Recommendation, and to start working on a second iteration that would add support for modern gamepad features, starting with touchpad support (which covers various shapes and single/multi-touch) and light indicators, which are both more complex and more important than meets the eye: they are key to guiding users to the active controller. A proposal for haptics is also in the works, from Google.

Some controllers are event-based, others are poll-based. The Gamepad API is poll-based for now. Adding an event-based mechanism is envisioned, but would require active contributions. The user agent may have to align polling and eventing to keep the event loop aligned with the display framerate, especially in WebXR scenarios.
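Until an event-based mechanism exists, applications that want events have to derive them from successive polls, roughly as sketched below. The `ButtonEvent` type and its event names are hypothetical and are not part of the Gamepad API.

```typescript
// HYPOTHETICAL event shape synthesized by the application.
interface ButtonEvent { type: "buttondown" | "buttonup"; index: number; }

// Compares the button states from two successive polls and returns the
// transitions that happened in between. In a browser, `current` would be
// built once per animation frame from navigator.getGamepads().
function diffButtons(
  previous: readonly boolean[], current: readonly boolean[]
): ButtonEvent[] {
  const events: ButtonEvent[] = [];
  for (let i = 0; i < current.length; i++) {
    const wasPressed = previous[i] ?? false;
    if (current[i] && !wasPressed) events.push({ type: "buttondown", index: i });
    if (!current[i] && wasPressed) events.push({ type: "buttonup", index: i });
  }
  return events;
}
```

A press and release that both happen between two polls go unnoticed with this approach, which is one reason why native events would be useful.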

Talking about WebXR, Nell Waliczek provides an update on where it is heading with regards to controllers. WebXR has the notion of input sources, which can be hands, controllers, tracked or untracked. The Gamepad API is exposed as part of XRInputSource. One challenge in VR is that form factors of controllers are much more diverse. Discussions on WebXR have converged toward a shared model with a hierarchy of interaction profiles. The WebXR community is also building a registry of controllers.

There is overwhelming support across game developers in the room for progressing the Gamepad API. More engagement from the games community towards finalizing the current specification and contributing to new proposals would greatly help.

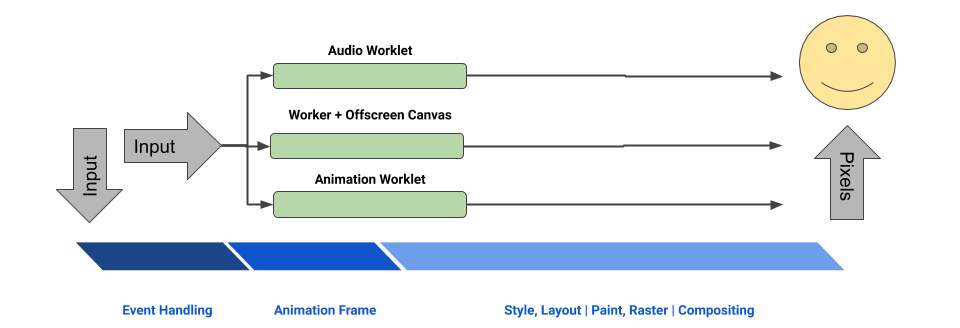

Inputs latency

Navid Zolghadr reviews sources of latency for inputs on the Web, either due to developers running long tasks or to design choices such as the decision to align user inputs with requestAnimationFrame (rAF). A major bottleneck today is that all input events have to go through the main thread, which is suboptimal for some use cases: local gaming in a worker, cloud gaming, low-latency drawing taking advantage of OffscreenCanvas or interactive animations based on user inputs (e.g. scroll-based animations).

Navid presents a proposal for exposing user input events to workers. The API would allow specific user input events on a given target to be delegated to a worker, which would completely avoid having to block the main thread. The API, still at early design stages, would make it possible to push input events to the network at no cost.

Another proposal for non-rAF-aligned events (again useful for cloud gaming) is also being investigated, using new raw events. By definition, these events may impact performance, hence the need to evaluate their suitability in practice.

WebTransport & WebCodecs

Cloud gaming may use different technologies to stream media in and out. None of them are a great fit for cloud gaming though. WebRTC is great in theory but still hard to use in a client/server architecture. Also, coupling between transport and encoding/decoding means that applications cannot fine-tune encoding/decoding parameters for cloud gaming. WebSockets is TCP-based, whereas the cloud gaming scenario would benefit from the flexibility of UDP. Media Source Extensions (MSE) does not give the application any control over the number of frames that the user agent will buffer before it starts playback. Some browsers support a live mode but it is based on heuristics. MSE may be extended to cover the needs but with the risk of ending up with a lower level API in the end.

“People say: "Wow, if a UDP equivalent of WebSockets was around, it would be great for games" [...] Tada! WebTransport would allow you to have something very close to UDP datagrams except secure” - Peter Thatcher

Peter Thatcher presents two proposals to solve these issues:

- WebTransport: An API based on QUIC that could be peer-to-peer or client/server. For client/server, WebTransport would provide the closest thing to a UDP version of WebSockets that can be achieved on the Web without running into security issues. WebTransport also has pluggable congestion control. The WebTransport proposal is under incubation in the WICG.

- WebCodecs: An API to expose media encoding/decoding capabilities of the browser. WebCodecs notably avoids the need to ship WebAssembly code to support particular codecs, which affects performance and battery life. The WebCodecs proposal has been proposed to the WICG but needs support to move on.

The proposals may be useful in a variety of other cases, including multiplayer games, low-latency streaming of game assets, real-time communication in games, client/server machine learning, or transcoding.

The two proposals receive warm support from workshop participants, and several of them review the proposals in more detail during a breakout session. The client/server mode of WebTransport seems to be the preferred option to start with. Polyfills could help with the transition from WebSockets to WebTransport. WebCodecs could perhaps be used to easily synchronize media with other types of content.

Gender-inclusive languages in games

Elina Bytskevich and Gabriel Tutone explain genderization, the act of making distinctions according to gender, which can create an unequal representation of society. Some games manage to avoid gender biases: there is no notion of gender in The Sims 4, Fallen London proposes a gender-less option, Sunless Sea refers to one character using both male and female pronouns, etc. However, this is hard to get right.

People often end up doing genderization without noticing it as part of language. Languages may be non-gendered (like English or Finnish), have common or neuter gender (like Dutch and Danish), masculine and feminine (like Spanish, French, Italian), or masculine, feminine and neuter gender (like German and Russian). Some languages also distinguish between animate and inanimate objects (like Basque).

Inclusive language is a form of language that is not biased toward a particular sex or social representation of gender. Using inclusive language is a challenge in English. It is even more complicated in gendered languages where highly convoluted sentences may be needed to create neutral structures. Languages themselves have started to evolve to provide better support for inclusivity, with new pronouns and forms to blend masculine and feminine. These forms are more and more in use in written articles, as in French, German, Spanish or Italian.

“In French, "Cher.e.s ami.e.s" refers to both male and female friends. In German, [...] an asterisk [may be used] to indicate that there are more variations than just the ones displayed, for example "der*die Priester*in" to indicate that this refers both to male and female” - Elina Bytskevich

Interestingly, these new forms often cannot be pronounced, and screen readers, voice assistants, and other text-to-speech tools may choke on them. The Pronunciation Task Force of the Accessible Platform Architectures (APA) Working Group seems the right recipient for a discussion on the pronunciation of new gender-inclusive forms.

Web games in hosted apps

The Web games in hosted apps breakout discusses the specific (but common) case where games built using Web technologies are run in a native wrapper. Examples include Baidu Smart Games, Facebook Instant Games, and Tencent's WeChat Mini Games. Wu Ping details the Baidu Smart Games platform.

The combination of a native shell with Web content creates an extensible environment where specific APIs can be exposed to ease integration with the hosting application, for instance a social graph API to interact with the user's social network. Running games in such an environment is challenging though: the WebView that native applications may use to render Web content may not be on par with the Web environment that runs in real Web browsers. Some Web APIs are also not available in a WebView for security reasons. In practice, some of these hosted environments re-implement Web APIs and ship with limited or no support for Web APIs that are not seen as critical for games (such as the DOM), providing only a rendering canvas to applications.

Conversely, some of the additional APIs that applications may have access to in hosted environments may be useful in a broader context.

This suggests that further exploration would be useful to clarify the restrictions that running Web applications in a hosted environment triggers, and to study how such environments and the Web at large may converge in the longer term.

Discoverability & Monetization

Discoverability and monetization were mentioned in the context setting session as key issues for Web games: as opposed to hosted game platforms, the Web is an open ecosystem where it's easier for games to disappear among other types of content, and harder to monetize content. Tom Greenaway runs a breakout session on the topic to assess ways to improve the situation.

One idea to improve discoverability is to extend metadata formats, notably the notion of VideoGame in schema.org, to allow developers to describe and declare the capabilities of their games. The current schema makes it possible to detail the game's director, the composer of the soundtrack, and cheat codes, but it does not help with categorizing games. An extended metadata format could also help specify what type(s) of input controller(s) the game supports or requires (e.g. keyboard/mouse, game controller, touch screen), whether the game can be played offline, and the monetization schemes that it proposes.
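As a rough sketch of what such an extension might look like, the function below serializes a game description as JSON-LD. The fields in `ExistingVideoGameFields` are properties that schema.org already accepts on VideoGame. The fields in `ProposedVideoGameFields` (`inputControllers`, `playableOffline`, `monetization`) are hypothetical names invented here to mirror the ideas raised in the breakout; they are not defined by schema.org.

```typescript
// Properties that schema.org already defines for (or inherits into) VideoGame.
interface ExistingVideoGameFields {
  name: string;
  url: string;
  genre?: string;
  gamePlatform?: string;
}

// HYPOTHETICAL extension fields, not part of schema.org. The names are
// illustrative only and mirror the needs discussed during the session.
interface ProposedVideoGameFields {
  inputControllers?: string[]; // e.g. "keyboard", "gamepad", "touch"
  playableOffline?: boolean;
  monetization?: string[]; // e.g. "subscription", "rewarded-ads"
}

// Build a JSON-LD document that could be embedded in a
// <script type="application/ld+json"> element on the game's page.
function toJsonLd(
  game: ExistingVideoGameFields,
  proposed: ProposedVideoGameFields = {}
): string {
  const doc = {
    "@context": "https://schema.org",
    "@type": "VideoGame",
    ...game,
    ...proposed,
  };
  return JSON.stringify(doc, null, 2);
}
```

Keeping existing and proposed fields in separate types makes it clear which part of the description search engines could understand today and which part depends on an extension being agreed upon.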

On a different front, deep linking into games can help leverage the essence of the games: sharing links that immediately get users to the right content.
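A minimal sketch of deep linking, assuming a game whose entry point can be described by a level number and an optional challenge identifier. The `GameEntryPoint` type, the parameter names and the example URL are all hypothetical; a real game would choose whatever state it needs to restore.

```typescript
// Hypothetical state that a shared link should restore.
interface GameEntryPoint {
  level: number;
  challenge: string | null;
}

// Encode the entry point into the query string of a shareable URL.
function toDeepLink(baseUrl: string, entry: GameEntryPoint): string {
  const url = new URL(baseUrl);
  url.searchParams.set("level", String(entry.level));
  if (entry.challenge !== null) {
    url.searchParams.set("challenge", entry.challenge);
  }
  return url.toString();
}

// Decode a shared link, falling back to level 1 when the level parameter
// is missing or is not a positive number.
function fromDeepLink(link: string): GameEntryPoint {
  const params = new URL(link).searchParams;
  const level = parseInt(params.get("level") ?? "", 10);
  return {
    level: level >= 1 ? level : 1,
    challenge: params.get("challenge"),
  };
}
```

On page load, the game would call `fromDeepLink` with the current location and jump straight to the decoded entry point instead of the title screen.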

All in all, improving discoverability would also improve re-discoverability, as some players complain that they have a hard time finding a game again after playing once... which also explains why they may not be ready to invest in a game.

Talking about monetization, games seem to be progressively moving away from a purchasing model and towards subscription or free-to-play models. Rewarded video ads are mentioned as an efficient mechanism to monetize games and increase retention. Microtransactions, more or less transparent and integrated into the browser and Web application, could also help.

Beware of kids playing games though. Parental controls are moderately effective at preventing kids from overspending in games. However, one key difference between the Web and native here is that users often resist logging in on the Web (for good reasons).

Next steps

See also:

- Presentation and Minutes

- Status update on Web Games technologies, published on 26 November 2019

In the final session, participants review topics discussed during the workshop to assess possible next steps that could improve the Web ecosystem for games: threads, performance, 3D rendering, storage, accessibility, audio, latency, network, discoverability, etc. Next steps presented in this section received general support from workshop participants. Please note that, even though these steps are formulated as concise action statements, there is no guarantee that these steps will be enacted. Progress on most of them depends on further discussions among implementers and users to assess willingness to work on them and prioritize work items, and on people actually committing time to do the work.

Update (26 November 2019): Thanks to the efforts of workshop participants and a few others, most of the topics mentioned below have already made significant progress. Please check the Status update on Web Games technologies blog post for details.

On top of individual topics, a quick show of hands suggests that it would be useful to gather continuous feedback on Web technologies from the games community, to track identified needs and to steer standardization efforts to address them. This could be done through the creation of a games activity at W3C, which could take the form of an Interest Group (or of a Task Force within an existing group). Participants also encourage running additional workshop-like events around games to gather inputs from other parts of the world.

Technologies in progress

Some suggested steps touch on existing standardization efforts, at various levels of maturity. From a standardization perspective, they can make progress right away, provided interested parties join relevant groups and contribute to the work:

- Advance the WebAssembly WebIDL Bindings proposal to the WebAssembly Working Group after incubation in the WebAssembly Community Group (note the proposal was renamed to "Interface Types Proposal" after the workshop).

- Advance the DWARF for WebAssembly proposal to the WebAssembly Working Group after incubation in the WebAssembly Community Group.

- Advance the Audio Device Client (ADC) proposal to the Audio Working Group after incubation in the Web Audio Community Group.

- Finalize the first version of the Gamepad specification within the WebApps Working Group.

- Start adding support for advanced gamepad features in a second version of the Gamepad specification within the WebApps Working Group.

- Assess suitability of the pointerrawupdate event to decorrelate input events from requestAnimationFrame and integrate it in the Pointer Events specification in the Pointer Events Working Group.

- Add support for unaccelerated mouse events in Pointer Lock in the WebApps Working Group.

- Integrate support for Compressed Texture Transmission Format (CTTF) in glTF in the Khronos Group.

Exploratory work

Other suggested steps touch on technologies that are either being proposed for incubation or at early stages of incubation. Interested parties are encouraged to provide explicit support for the proposals and/or share feedback based on experience and needs:

- Advance WebGPU, currently in incubation in the GPU for the Web Community Group, to a Working Group.

- Incubate WebTransport in the WICG.

- Support the proposal to expose input events to worker threads in the WICG.

- Support the WebCodecs proposal in the WICG. Note that, per charter, the Media Working Group may adopt the proposal after a period of incubation.

New technical proposals

Workshop discussions also pointed out new technical needs that would be beneficial for the games ecosystem on the Web. Proposed work in this category includes:

- Improve threading support on the Web and notably allow sharing of structured objects across workers. A proposed solution in that space would likely be at the ECMAScript level. Also allow workers to start from a function and inherit their parent's scope (code only, not data). Worker extension proposals should be brought to the WHATWG.

- Raise the idea of a native 3D element for the Web in the Immersive Web Community Group [see the Native glTF issue raised shortly after the workshop].

- Expose multiple buckets per origin to accommodate different permanent/temporary storage scenarios. Investigate suitability of Background Fetch to make such a feature palpable to users.

- Raise the pronunciation of gender-inclusive forms in the Pronunciation Task Force of the Accessible Platform Architectures (APA) Working Group.

- Flesh out a proposal to extend VideoGame in schema.org to improve the discoverability of Web games.

Other proposals

Next steps in this category are possibly more generic or abstract in essence. Further discussion on these topics would help refine scope and/or clarify possible standardization needs:

- Investigate the suitability of the Accessibility Object Model (AOM) API coupled with WebAssembly WebIDL Bindings to expose accessibility hooks in games cross-compiled to WebAssembly, and advance AOM, under incubation in the WICG, to the standardization track. Note that accessibility topics are discussed at W3C within the Accessible Platform Architectures (APA) Working Group.

- Explore metadata layers for glTF to carry semantics needed for accessibility and immersive scenarios, through exchanges between the Khronos Group and relevant groups at W3C (APA and Immersive Web groups).

- Consider developing guidelines for game developers, e.g. to promote efficient solutions for assets loading and accessibility.

- Discuss monetization issues for games on the Web, including support for rewarded video ads and mechanisms to mitigate piracy issues.

- Discuss specificities of Web environments hosted in native applications and commonalities with regular Web runtimes. Work on a convergence plan to expose Web APIs to hosted environments where possible and bring some APIs defined in hosted environments to the Web.

Thank you!

The organizers express deep gratitude to those who helped with the organization and execution of the workshop, starting with the members of the Program Committee who provided initial support and helped shape the workshop. Huge kudos to David Catuhe for his enthusiasm and smooth chairing of the workshop, to Microsoft for hosting the event in such good conditions, and to Facebook Gaming for sponsoring. Many thanks to those who took an active role under the hood, notably scribes, events teams at W3C and Microsoft, Microsoft's A/V team, W3C's BizDev team, Marie-Claire Forgue for editing the videos after the workshop, and all W3C team members who took part in the workshop one way or another. And finally, a big thank you to the speakers and participants without whom the workshop wouldn't have been such a productive and inspiring event. Congratulations to all, level one completed, on to level two!