This section only applies to user agents, data mining tools, and conformance checkers.

The rules for parsing XML documents (and thus XHTML documents) into DOM trees are covered by the XML and Namespaces in XML specifications, and are out of scope of this specification. [XML] [XMLNS]

For HTML documents, user agents must use the parsing rules described in this section to generate the DOM trees. Together, these rules define what is referred to as the HTML parser.

While the HTML form of HTML5 bears a close resemblance to SGML and XML, it is a separate language with its own parsing rules.

Some earlier versions of HTML (in particular from HTML2 to HTML4) were based on SGML and used SGML parsing rules. However, few (if any) web browsers ever implemented true SGML parsing for HTML documents; the only user agents to strictly handle HTML as an SGML application have historically been validators. The resulting confusion — with validators claiming documents to have one representation while widely deployed Web browsers interoperably implemented a different representation — has wasted decades of productivity. This version of HTML thus returns to a non-SGML basis.

Authors interested in using SGML tools in their authoring pipeline are encouraged to use XML tools and the XML serialization of HTML5.

This specification defines the parsing rules for HTML documents, whether they are syntactically correct or not. Certain points in the parsing algorithm are said to be parse errors. The error handling for parse errors is well-defined: user agents must either act as described below when encountering such problems, or must abort processing at the first error that they encounter for which they do not wish to apply the rules described below.

Conformance checkers must report at least one parse error condition to the user if one or more parse error conditions exist in the document and must not report parse error conditions if none exist in the document. Conformance checkers may report more than one parse error condition if more than one parse error condition exists in the document. Conformance checkers are not required to recover from parse errors.

Parse errors are only errors with the syntax of HTML. In addition to checking for parse errors, conformance checkers will also verify that the document obeys all the other conformance requirements described in this specification.

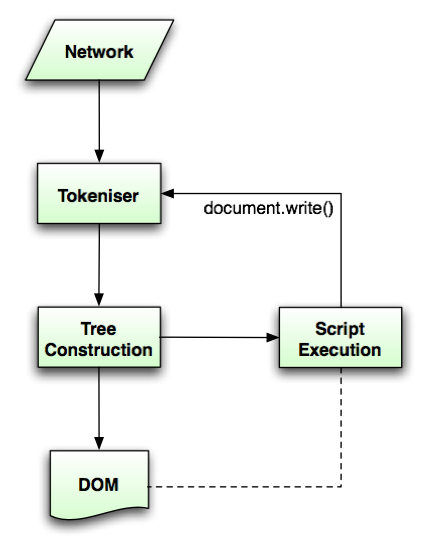

The input to the HTML parsing process consists of a stream of Unicode characters, which is passed through a tokenisation stage (lexical analysis) followed by a tree construction stage (semantic analysis). The output is a Document object.

Implementations that do not support scripting do not have to actually create a DOM Document object, but the DOM tree in such cases is still used as the model for the rest of the specification.

In the common case, the data handled by the tokenisation stage comes from the network, but it can also come from script, e.g. using the document.write() API.

There is only one set of states for the tokeniser stage and the tree construction stage, but the tree construction stage is reentrant, meaning that while the tree construction stage is handling one token, the tokeniser might be resumed, causing further tokens to be emitted and processed before the first token's processing is complete.

In the following example, the tree construction stage will be called upon to handle a "p" start tag token while handling the "script" start tag token:

...

<script>

document.write('<p>');

</script>

...

The stream of Unicode characters that makes up the input to the tokenisation stage will be initially seen by the user agent as a stream of bytes (typically coming over the network or from the local file system). The bytes encode the actual characters according to a particular character encoding, which the user agent must use to decode the bytes into characters.

For XML documents, the algorithm user agents must use to determine the character encoding is given by the XML specification. This section does not apply to XML documents. [XML]

In some cases, it might be impractical to unambiguously determine the encoding before parsing the document. Because of this, this specification provides for a two-pass mechanism with an optional pre-scan. Implementations are allowed, as described below, to apply a simplified parsing algorithm to whatever bytes they have available before beginning to parse the document. Then, the real parser is started, using a tentative encoding derived from this pre-parse and other out-of-band metadata. If, while the document is being loaded, the user agent discovers an encoding declaration that conflicts with this information, then the parser can get reinvoked to perform a parse of the document with the real encoding.

User agents must use the following algorithm (the encoding sniffing algorithm) to determine the character encoding to use when decoding a document in the first pass. This algorithm takes as input any out-of-band metadata available to the user agent (e.g. the Content-Type metadata of the document) and all the bytes available so far, and returns an encoding and a confidence. The confidence is either tentative or certain. The encoding used, and whether the confidence in that encoding is tentative or certain, is used during the parsing to determine whether to change the encoding.

If the transport layer specifies an encoding, return that encoding with the confidence certain, and abort these steps.

The user agent may wait for more bytes of the resource to be available, either in this step or at any later step in this algorithm. For instance, a user agent might wait 500ms or 512 bytes, whichever came first. In general preparsing the source to find the encoding improves performance, as it reduces the need to throw away the data structures used when parsing upon finding the encoding information. However, if the user agent delays too long to obtain data to determine the encoding, then the cost of the delay could outweigh any performance improvements from the preparse.

For each of the rows in the following table, starting with the first one and going down, if there are as many or more bytes available than the number of bytes in the first column, and the first bytes of the file match the bytes given in the first column, then return the encoding given in the cell in the second column of that row, with the confidence certain, and abort these steps:

| Bytes in Hexadecimal | Encoding |

|---|---|

| FE FF | UTF-16BE |

| FF FE | UTF-16LE |

| EF BB BF | UTF-8 |

This step looks for Unicode Byte Order Marks (BOMs).

Otherwise, the user agent will have to search for explicit character encoding information in the file itself. This should proceed as follows:

Let position be a pointer to a byte in the input stream, initially pointing at the first byte. If at any point during these substeps the user agent either runs out of bytes or decides that scanning further bytes would not be efficient, then skip to the next step of the overall character encoding detection algorithm. User agents may decide that scanning any bytes is not efficient, in which case these substeps are entirely skipped.

Now, repeat the following "two" steps until the algorithm aborts (either because user agent aborts, as described above, or because a character encoding is found):

If position points to:

Advance the position pointer so that it points at the first 0x3E byte which is preceded by two 0x2D bytes (i.e. at the end of an ASCII '-->' sequence) and comes after the 0x3C byte that was found. (The two 0x2D bytes can be the same as those in the '<!--' sequence.)

Advance the position pointer so that it points at the next 0x09, 0x0A, 0x0B, 0x0C, 0x0D, 0x20, or 0x2F byte (the one in the sequence of characters matched above).

Get an attribute and its value. If no attribute was sniffed, then skip this inner set of steps, and jump to the second step in the overall "two step" algorithm.

If the attribute's name is neither "charset" nor "content", then return to step 2 in these inner steps.

If the attribute's name is "charset", let charset be the attribute's value, interpreted as a character encoding.

Otherwise, the attribute's name is "content": apply the algorithm for extracting an encoding from a Content-Type, giving the attribute's value as the string to parse. If an encoding is returned, let charset be that encoding. Otherwise, return to step 2 in these inner steps.

If charset is a UTF-16 encoding, change it to UTF-8.

If charset is a supported character encoding, then return the given encoding, with confidence tentative, and abort all these steps.

Otherwise, return to step 2 in these inner steps.

Advance the position pointer so that it points at the next 0x09 (ASCII TAB), 0x0A (ASCII LF), 0x0B (ASCII VT), 0x0C (ASCII FF), 0x0D (ASCII CR), 0x20 (ASCII space), or 0x3E (ASCII '>') byte.

Repeatedly get an attribute until no further attributes can be found, then jump to the second step in the overall "two step" algorithm.

Advance the position pointer so that it points at the first 0x3E byte (ASCII '>') that comes after the 0x3C byte that was found.

Do nothing with that byte.

When the above "two step" algorithm says to get an attribute, it means doing this:

If the byte at position is one of 0x09 (ASCII TAB), 0x0A (ASCII LF), 0x0B (ASCII VT), 0x0C (ASCII FF), 0x0D (ASCII CR), 0x20 (ASCII space), or 0x2F (ASCII '/') then advance position to the next byte and redo this substep.

If the byte at position is 0x3E (ASCII '>'), then abort the "get an attribute" algorithm. There isn't one.

Otherwise, the byte at position is the start of the attribute name. Let attribute name and attribute value be the empty string.

Attribute name: Process the byte at position as follows:

Advance position to the next byte and return to the previous step.

Spaces. If the byte at position is one of 0x09 (ASCII TAB), 0x0A (ASCII LF), 0x0B (ASCII VT), 0x0C (ASCII FF), 0x0D (ASCII CR), or 0x20 (ASCII space), then advance position to the next byte and repeat this step.

If the byte at position is not 0x3D (ASCII '='), abort the "get an attribute" algorithm. The attribute's name is the value of attribute name, its value is the empty string.

Advance position past the 0x3D (ASCII '=') byte.

Value. If the byte at position is one of 0x09 (ASCII TAB), 0x0A (ASCII LF), 0x0B (ASCII VT), 0x0C (ASCII FF), 0x0D (ASCII CR), or 0x20 (ASCII space), then advance position to the next byte and repeat this step.

Process the byte at position as follows:

Process the byte at position as follows:

Advance position to the next byte and return to the previous step.

For the sake of interoperability, user agents should not use a pre-scan algorithm that returns different results than the one described above. (But, if you do, please at least let us know, so that we can improve this algorithm and benefit everyone...)

If the user agent has information on the likely encoding for this page, e.g. based on the encoding of the page when it was last visited, then return that encoding, with the confidence tentative, and abort these steps.

The user agent may attempt to autodetect the character encoding from applying frequency analysis or other algorithms to the data stream. If autodetection succeeds in determining a character encoding, then return that encoding, with the confidence tentative, and abort these steps. [UNIVCHARDET]

Otherwise, return an implementation-defined or user-specified default character encoding, with the confidence tentative. In non-legacy environments, the more comprehensive UTF-8 encoding is recommended. Due to its use in legacy content, windows-1252 is recommended as a default in predominantly Western demographics instead. Since these encodings can in many cases be distinguished by inspection, a user agent may heuristically decide which to use as a default.
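The order of the sniffing steps above can be sketched as follows. This is only an illustration under stated assumptions: the function name `sniff_encoding`, its parameters, and the callables `prescan` and `autodetect` are hypothetical stand-ins for the pre-scan and autodetection steps, and the UTF-8 default is just one of the defaults the text permits.

```python
from typing import Callable, Optional, Tuple

# Byte order marks from the table above, checked in table order.
BOMS = (
    (b"\xFE\xFF", "UTF-16BE"),
    (b"\xFF\xFE", "UTF-16LE"),
    (b"\xEF\xBB\xBF", "UTF-8"),
)

Sniffer = Callable[[bytes], Optional[str]]


def sniff_encoding(
    data: bytes,
    transport_encoding: Optional[str] = None,
    prescan: Optional[Sniffer] = None,          # hypothetical pre-scan hook
    remembered_encoding: Optional[str] = None,  # e.g. from a previous visit
    autodetect: Optional[Sniffer] = None,       # hypothetical heuristic hook
    default_encoding: str = "UTF-8",            # assumed default
) -> Tuple[str, str]:
    """Return (encoding, confidence) for the bytes available so far."""
    # Transport-layer information wins outright.
    if transport_encoding is not None:
        return transport_encoding, "certain"
    # A BOM only matches if enough bytes are available; startswith covers that.
    for bom, encoding in BOMS:
        if data.startswith(bom):
            return encoding, "certain"
    # Everything from here on yields only a tentative result.
    if prescan is not None:
        found = prescan(data)
        if found is not None:
            return found, "tentative"
    if remembered_encoding is not None:
        return remembered_encoding, "tentative"
    if autodetect is not None:
        found = autodetect(data)
        if found is not None:
            return found, "tentative"
    return default_encoding, "tentative"
```

A caller would pass whatever bytes have arrived so far and keep the returned confidence, since a tentative result may later be overridden by an encoding declaration found during parsing.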

The document's character encoding must immediately be set to the value returned from this algorithm, at the same time as the user agent uses the returned value to select the decoder to use for the input stream.

User agents must at a minimum support the UTF-8 and Windows-1252 encodings, but may support more.

It is not unusual for Web browsers to support dozens if not upwards of a hundred distinct character encodings.

User agents must support the preferred MIME name of every character encoding they support that has a preferred MIME name, and should support all the IANA-registered aliases. [IANACHARSET]

When comparing a string specifying a character encoding with the name or alias of a character encoding to determine if they are equal, user agents must ignore all characters in the ranges U+0009 to U+000D, U+0020 to U+002F, U+003A to U+0040, U+005B to U+0060, and U+007B to U+007E (all whitespace and punctuation characters in ASCII) in both names, and then perform the comparison case-insensitively.

For instance, "GB_2312-80" and "g.b.2312(80)" are considered equivalent names.
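The comparison rule above can be sketched in a few lines. The names `IGNORED_RANGES`, `normalize_label`, and `encoding_labels_match` are hypothetical, and using `str.lower()` for the case-insensitive step is an assumption that is adequate for ASCII encoding names.

```python
# Code point ranges that the comparison ignores (ASCII whitespace and punctuation).
IGNORED_RANGES = (
    (0x0009, 0x000D),
    (0x0020, 0x002F),
    (0x003A, 0x0040),
    (0x005B, 0x0060),
    (0x007B, 0x007E),
)


def normalize_label(label: str) -> str:
    """Drop ignored characters, then lowercase what is left."""
    kept = [
        ch for ch in label
        if not any(low <= ord(ch) <= high for low, high in IGNORED_RANGES)
    ]
    return "".join(kept).lower()


def encoding_labels_match(a: str, b: str) -> bool:
    """Compare two encoding names under the rule described above."""
    return normalize_label(a) == normalize_label(b)
```

Normalizing both sides first keeps the comparison symmetric and lets a lookup table of known names be keyed on the normalized form.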

When a user agent would otherwise use an encoding given in the first column of the following table, it must instead use the encoding given in the cell in the second column of the same row. Any bytes that are treated differently due to this encoding aliasing must be considered parse errors.

| Input encoding | Replacement encoding | References |

|---|---|---|

| EUC-KR | Windows-949 | [EUCKR] [WIN949] |

| GB2312 | GBK | [GB2312] [GBK] |

| GB_2312-80 | GBK | [RFC1345] [GBK] |

| ISO-8859-1 | Windows-1252 | [RFC1345] [WIN1252] |

| ISO-8859-9 | Windows-1254 | [RFC1345] [WIN1254] |

| ISO-8859-11 | Windows-874 | [ISO885911] [WIN874] |

| KS_C_5601-1987 | Windows-949 | [RFC1345] [WIN949] |

| TIS-620 | Windows-874 | [TIS620] [WIN874] |

| x-x-big5 | Big5 | [BIG5] |

The requirement to treat certain encodings as other encodings according to the table above is a willful violation of the W3C Character Model specification. [CHARMOD]
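As an illustration, the substitution table above can be expressed as a simple lookup. The names `REPLACEMENT_ENCODINGS` and `effective_encoding` are hypothetical, and matching on the upper-cased name is a simplifying assumption; a fuller implementation would presumably apply the name comparison rule described earlier.

```python
# Input encoding -> replacement encoding, copied from the table above.
REPLACEMENT_ENCODINGS = {
    "EUC-KR": "Windows-949",
    "GB2312": "GBK",
    "GB_2312-80": "GBK",
    "ISO-8859-1": "Windows-1252",
    "ISO-8859-9": "Windows-1254",
    "ISO-8859-11": "Windows-874",
    "KS_C_5601-1987": "Windows-949",
    "TIS-620": "Windows-874",
    "x-x-big5": "Big5",
}

# Upper-cased keys so that lookups ignore case (an assumption of this sketch).
_LOOKUP = {name.upper(): target for name, target in REPLACEMENT_ENCODINGS.items()}


def effective_encoding(name: str) -> str:
    """Return the encoding to actually use for a declared encoding name."""
    return _LOOKUP.get(name.upper(), name)
```

Encodings that are not in the table pass through unchanged, so the function can be applied to every result of the sniffing algorithm.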

User agents must not support the CESU-8, UTF-7, BOCU-1 and SCSU encodings. [CESU8] [UTF7] [BOCU1] [SCSU]

Support for UTF-32 is not recommended. This encoding is rarely used, and frequently misimplemented.

This specification does not make any attempt to support UTF-32 in its algorithms; support and use of UTF-32 can thus lead to unexpected behavior in implementations of this specification.

Given an encoding, the bytes in the input stream must be converted to Unicode characters for the tokeniser, as described by the rules for that encoding, except that the leading U+FEFF BYTE ORDER MARK character, if any, must not be stripped by the encoding layer (it is stripped by the rule below).

Bytes or sequences of bytes in the original byte stream that could not be converted to Unicode characters must be converted to U+FFFD REPLACEMENT CHARACTER code points.

Bytes or sequences of bytes in the original byte stream that did not conform to the encoding specification (e.g. invalid UTF-8 byte sequences in a UTF-8 input stream) are errors that conformance checkers are expected to report.

One leading U+FEFF BYTE ORDER MARK character must be ignored if any are present.

All U+0000 NULL characters in the input must be replaced by U+FFFD REPLACEMENT CHARACTERs. Any occurrence of such a character is a parse error.

Any occurrences of any characters in the ranges U+0001 to U+0008, U+000E to U+001F, U+007F to U+009F, U+D800 to U+DFFF, U+FDD0 to U+FDDF, and characters U+FFFE, U+FFFF, U+1FFFE, U+1FFFF, U+2FFFE, U+2FFFF, U+3FFFE, U+3FFFF, U+4FFFE, U+4FFFF, U+5FFFE, U+5FFFF, U+6FFFE, U+6FFFF, U+7FFFE, U+7FFFF, U+8FFFE, U+8FFFF, U+9FFFE, U+9FFFF, U+AFFFE, U+AFFFF, U+BFFFE, U+BFFFF, U+CFFFE, U+CFFFF, U+DFFFE, U+DFFFF, U+EFFFE, U+EFFFF, U+FFFFE, U+FFFFF, U+10FFFE, and U+10FFFF are parse errors. (These are all control characters or permanently undefined Unicode characters.)
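The long list of code points above follows a regular pattern, which a checker could test as in this sketch. The function name `is_parse_error_character` is hypothetical; the observation that the listed U+xFFFE and U+xFFFF characters are exactly those whose low 16 bits are FFFE or FFFF is read off the list itself.

```python
def is_parse_error_character(ch: str) -> bool:
    """True if the single character ch falls in one of the ranges listed above."""
    cp = ord(ch)
    if 0x0001 <= cp <= 0x0008 or 0x000E <= cp <= 0x001F:
        return True  # control characters other than TAB, LF, VT, FF, CR
    if 0x007F <= cp <= 0x009F:
        return True  # DELETE and the C1 controls
    if 0xD800 <= cp <= 0xDFFF:
        return True  # surrogates
    if 0xFDD0 <= cp <= 0xFDDF:
        return True  # the range given in the text above
    # U+FFFE, U+FFFF, U+1FFFE, U+1FFFF, ... U+10FFFE, U+10FFFF
    return (cp & 0xFFFF) in (0xFFFE, 0xFFFF)
```

U+0000 is deliberately not covered here, since the text handles it separately by replacement.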

U+000D CARRIAGE RETURN (CR) characters, and U+000A LINE FEED (LF) characters, are treated specially. Any CR characters that are followed by LF characters must be removed, and any CR characters not followed by LF characters must be converted to LF characters. Thus, newlines in HTML DOMs are represented by LF characters, and there are never any CR characters in the input to the tokenisation stage.
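The preprocessing rules above (one leading BOM ignored, NULL replaced, newlines normalized) can be sketched on an already decoded string. The function name `preprocess_input` is hypothetical, and the sketch works on a complete string rather than a stream and does not record the parse errors that a conformance checker would report.

```python
def preprocess_input(text: str) -> str:
    """Apply the input stream preprocessing rules to decoded text."""
    # Ignore exactly one leading U+FEFF BYTE ORDER MARK, if present.
    if text.startswith("\ufeff"):
        text = text[1:]
    # Replace every U+0000 NULL with U+FFFD REPLACEMENT CHARACTER.
    text = text.replace("\x00", "\ufffd")
    # Remove CRs that are followed by LF, then turn the remaining CRs into LFs.
    text = text.replace("\r\n", "\n").replace("\r", "\n")
    return text
```

After this step the tokeniser never sees a CR character, which is why the rest of the specification can treat LF as the only newline.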

The next input character is the first character in the input stream that has not yet been consumed. Initially, the next input character is the first character in the input.

The insertion point is the position (just before a character or just before the end of the input stream) where content inserted using document.write() is actually inserted. The insertion point is relative to the position of the character immediately after it; it is not an absolute offset into the input stream.

Initially, the insertion point is uninitialized.

The "EOF" character in the tables below is a conceptual character representing the end of the input stream. If the parser is a script-created parser, then the end of the input stream is reached when an explicit "EOF" character (inserted by the document.close() method) is consumed. Otherwise, the "EOF" character is not a real character in the stream, but rather the lack of any further characters.

When the parser requires the user agent to change the encoding, it must run the following steps. This might happen if the encoding sniffing algorithm described above failed to find an encoding, or if it found an encoding that was not the actual encoding of the file.

Initially the insertion mode is "initial". It can change to "before html", "before head", "in head", "in head noscript", "after head", "in body", "in table", "in caption", "in column group", "in table body", "in row", "in cell", "in select", "in select in table", "in foreign content", "after body", "in frameset", "after frameset", "after after body", and "after after frameset" during the course of the parsing, as described in the tree construction stage. The insertion mode affects how tokens are processed and whether CDATA blocks are supported.

Seven of these modes, namely "in head", "in body", "in table", "in table body", "in row", "in cell", and "in select", are special, in that the other modes defer to them at various times. When the algorithm below says that the user agent is to do something "using the rules for the m insertion mode", where m is one of these modes, the user agent must use the rules described under that insertion mode's section, but must leave the insertion mode unchanged (unless the rules in that section themselves switch the insertion mode).

When the insertion mode is switched to "in foreign content", the secondary insertion mode is also set. This secondary mode is used within the rules for the "in foreign content" mode to handle HTML (i.e. not foreign) content.

When the steps below require the UA to reset the insertion mode appropriately, it means the UA must follow these steps:

select element, then switch the insertion mode to "in select" and abort these steps. (fragment case)

td or th element and last is false, then switch the insertion mode to "in cell" and abort these steps.

tr element, then switch the insertion mode to "in row" and abort these steps.

tbody, thead, or tfoot element, then switch the insertion mode to "in table body" and abort these steps.

caption element, then switch the insertion mode to "in caption" and abort these steps.

colgroup element, then switch the insertion mode to "in column group" and abort these steps. (fragment case)

table element, then switch the insertion mode to "in table" and abort these steps.

head element, then switch the insertion mode to "in body" ("in body"! not "in head"!) and abort these steps. (fragment case)

body element, then switch the insertion mode to "in body" and abort these steps.

frameset element, then switch the insertion mode to "in frameset" and abort these steps. (fragment case)

html element, then: if the head element pointer is null, switch the insertion mode to "before head", otherwise, switch the insertion mode to "after head". In either case, abort these steps. (fragment case)

Initially the stack of open elements is empty. The stack grows downwards; the topmost node on the stack is the first one added to the stack, and the bottommost node of the stack is the most recently added node in the stack (notwithstanding when the stack is manipulated in a random access fashion as part of the handling for misnested tags).

The "before html" insertion mode creates the html root element node, which is then added to the stack.

In the fragment case, the stack of open elements is initialized to contain an html element that is created as part of that algorithm. (The fragment case skips the "before html" insertion mode.)

The html node, however it is created, is the topmost node of the stack. It never gets popped off the stack.

The current node is the bottommost node in this stack.

The current table is the last table element in the stack of open elements, if there is one. If there is no table element in the stack of open elements (fragment case), then the current table is the first element in the stack of open elements (the html element).
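The definitions of the current node and the current table can be illustrated with a list-based stack. This is a minimal sketch: the `Element` class and the function names are hypothetical, and the list is assumed to hold the topmost node (the html element) at index 0 and the bottommost node at the end.

```python
from dataclasses import dataclass
from typing import List


@dataclass(eq=False)  # compare elements by identity, not by name
class Element:
    name: str


def current_node(stack: List[Element]) -> Element:
    """The bottommost node, i.e. the most recently added one."""
    return stack[-1]


def current_table(stack: List[Element]) -> Element:
    """The last table element in the stack, or the html element if none."""
    for element in reversed(stack):
        if element.name == "table":
            return element
    # Fragment case: no table is open, so fall back to the first element.
    return stack[0]
```

Keeping the html element at index 0 means that pushing and popping open elements are plain `append` and `pop` calls on the list.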

Elements in the stack fall into the following categories:

The following HTML elements have varying levels of special parsing rules: address, area, base, basefont, bgsound, blockquote, body, br, center, col, colgroup, dd, dir, div, dl, dt, embed, fieldset, form, frame, frameset, h1, h2, h3, h4, h5, h6, head, hr, iframe, img, input, isindex, li, link, listing, menu, meta, noembed, noframes, noscript, ol, optgroup, option, p, param, plaintext, pre, script, select, spacer, style, tbody, textarea, tfoot, thead, title, tr, ul, and wbr.

The following HTML elements introduce new scopes for various parts of the parsing: applet, button, caption, html, marquee, object, table, td and th.

The following HTML elements are those that end up in the list of active formatting elements: a, b, big, em, font, i, nobr, s, small, strike, strong, tt, and u.

All other elements found while parsing an HTML document.

Still need to add these new elements to the lists: event-source, section, nav, article, aside, header, footer, datagrid, command

The stack of open elements is said to have an element in scope or have an element in table scope when the following algorithm terminates in a match state:

Initialise node to be the current node (the bottommost node of the stack).

If node is the target node, terminate in a match state.

Otherwise, if node is a table element, terminate in a failure state.

Otherwise, if the algorithm is the "has an element in scope" variant (rather than the "has an element in table scope" variant), and node is one of the following, terminate in a failure state:

Otherwise, if node is an html element, terminate in a failure state. (This can only happen if the node is the topmost node of the stack of open elements, and prevents the next step from being invoked if there are no more elements in the stack.)

Otherwise, set node to the previous entry in the stack of open elements and return to step 2. (This will never fail, since the loop will always terminate in the previous step if the top of the stack is reached.)
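The scope algorithm above can be sketched as a walk from the bottom of the stack upwards. Several things here are assumptions: the stack is modelled as a list of element names with the html element first, the target is given by name, and `SCOPE_BARRIERS` is taken to be the scope-introducing elements named earlier other than html and table (which the algorithm handles in their own steps), since the list for that step is not reproduced in the text above.

```python
from typing import Sequence

# Assumed list for the "in scope" variant; see the lead-in for the caveat.
SCOPE_BARRIERS = frozenset(
    {"applet", "button", "caption", "marquee", "object", "td", "th"}
)


def has_element_in_scope(
    stack: Sequence[str], target: str, table_scope: bool = False
) -> bool:
    """Walk from the current node towards html, looking for target."""
    for name in reversed(stack):
        if name == target:
            return True   # match state
        if name == "table":
            return False  # a table always ends the search
        if not table_scope and name in SCOPE_BARRIERS:
            return False  # only the plain "in scope" variant stops here
        if name == "html":
            return False  # reached the top of the stack
    return False  # empty stack; not covered by the text, treated as failure
```

Passing `table_scope=True` selects the "has an element in table scope" variant, which looks past buttons, cells, and similar elements and stops only at a table or at the html element.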

Nothing happens if at any time any of the elements in the stack of open elements are moved to a new location in, or removed from, the Document tree. In particular, the stack is not changed in this situation. This can cause, amongst other strange effects, content to be appended to nodes that are no longer in the DOM.

In some cases (namely, when closing misnested formatting elements), the stack is manipulated in a random-access fashion.

Initially the list of active formatting elements is empty. It is used to handle mis-nested formatting element tags.

The list contains elements in the formatting category, and scope markers. The scope markers are inserted when entering applet elements, buttons, object elements, marquees, table cells, and table captions, and are used to prevent formatting from "leaking" into applet elements, buttons, object elements, marquees, and tables.

When the steps below require the UA to reconstruct the active formatting elements, the UA must perform the following steps:

This has the effect of reopening all the formatting elements that were opened in the current body, cell, or caption (whichever is youngest) that haven't been explicitly closed.

The way this specification is written, the list of active formatting elements always consists of elements in chronological order with the least recently added element first and the most recently added element last (except for while steps 8 to 11 of the above algorithm are being executed, of course).

When the steps below require the UA to clear the list of active formatting elements up to the last marker, the UA must perform the following steps:

Initially the head element pointer and the form element pointer are both null.

Once a head element has been parsed (whether implicitly or explicitly) the head element pointer gets set to point to this node.

The form element pointer points to the last form element that was opened and whose end tag has not yet been seen. It is used to make form controls associate with forms in the face of dramatically bad markup, for historical reasons.

The scripting flag is set to "enabled" if the Document with which the parser is associated was with script when the parser was created, and "disabled" otherwise.