
Implementation Techniques for Authoring Tool Accessibility Guidelines 2.0:

Part B: Support the production of accessible content

Editor's Draft 6 March 2006

- Editors of this chapter:

- Jutta Treviranus - ATRC, University of Toronto

- Jan Richards - ATRC, University of Toronto

- Tim Boland - NIST

Copyright © 1994-2003 W3C® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark, document use, and software licensing rules apply. Your interactions with this site are in accordance with our public and Member privacy statements.

Notes:

- These techniques are informative (i.e. non-normative).

- The list of techniques for each success criterion is not exhaustive. Rather, these techniques represent an illustrative sampling of approaches. There may be many other ways a tool might be designed and still meet the normative criteria contained in the success criteria.

- Some techniques are labeled as "[Sufficient]". These techniques are judged by the AUWG to meet the success criteria to which they apply. Conditional wording may limit the applicability of any given sufficient technique to a particular type of content or authoring tool. Inclusion does not imply that the description will be verified or is verifiable.

- Some techniques are labeled as "[Advisory]". These techniques are included as additional information.

Guideline B.1: Enable the production of accessible content

ATAG Checkpoint B.1.1: Support content types that enable the creation of Web content that conforms to WCAG. [Priority 1] (Rationale)

Techniques for Success Criterion 1 (Any authoring tool that chooses the content type used for publication on the Web for the author must always choose content types for which a published content type-specific WCAG benchmark exists.):

Applicability: This success criterion is not applicable to authoring tools that always let the author choose the content type for authoring.

Technique B.1.1-1.1 [Sufficient]: Providing a content type-specific WCAG benchmark (in the ATAG 2.0 conformance profile) for all content types that are automatically chosen, either because a content type is the only one that is supported for a given task or because a shortcut is provided that allows new content to be created with a minimum of steps.

Techniques for Success Criterion 2 (Any authoring tool that allows authors to choose the content type used for publication on the Web must always support at least one content type for which a published content type-specific WCAG benchmark exists and always give prominence to those content types.):

Applicability: This success criterion is not applicable to authoring tools that do not let the author choose the content type for authoring.

Technique B.1.1-2.1 [Sufficient]: Whenever the author is given the option to choose a content type, ensuring that at least one of the content types has a content type-specific WCAG benchmark (in the ATAG 2.0 conformance profile). Also, giving prominence in the user interface (e.g. listing them ahead of other content types, listing them on the first page of choices, etc.) to content types with benchmark documents.

Technique B.1.1-2.2 [Advisory]: Displaying a warning when the author chooses to create Web content with a content type that lacks a content type-specific WCAG benchmark (in the ATAG 2.0 conformance profile).

General Techniques for Checkpoint B.1.1:

Applicability: Techniques in this section are not necessarily applicable to any one success criterion, and may apply more generally to the checkpoint as a whole.

Technique B.1.1-0.1 [Advisory]: Consulting the content type-specific WCAG benchmark guidance in ATAG 2.0 on how to create a benchmark document for a content type that does not already have one.

Technique B.1.1-0.2 [Advisory]: Supporting W3C Recommendations, which are reviewed for accessibility, wherever appropriate. References include:

Technique B.1.1-0.3 [Advisory]: For tools that dynamically generate Web content, using HTTP content negotiation to deliver content in the preferred content type of the end-user.
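As a rough illustration of Technique B.1.1-0.3, the Python sketch below picks a content type from an HTTP `Accept` header. It assumes the tool lists its available types in its own order of preference (for example, types with a WCAG benchmark first, in the spirit of B.1.1-2.1) and follows the HTTP rule that the most specific matching media range supplies the quality value. It ignores media type parameters other than `q`, and the function names are hypothetical.

```python
from __future__ import annotations


def parse_accept(header: str) -> list[tuple[str, float]]:
    """Split an Accept header into (media_range, q) pairs."""
    ranges = []
    for part in header.split(","):
        fields = [f.strip() for f in part.split(";")]
        if not fields[0]:
            continue
        q = 1.0
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        ranges.append((fields[0].lower(), q))
    return ranges


def quality(ranges: list[tuple[str, float]], content_type: str) -> float:
    """q value of the most specific range matching content_type (0 if none)."""
    main_type = content_type.split("/")[0]
    best_specificity, q = -1, 0.0
    for media_range, range_q in ranges:
        if media_range == content_type:
            specificity = 2
        elif media_range == main_type + "/*":
            specificity = 1
        elif media_range == "*/*":
            specificity = 0
        else:
            continue
        if specificity > best_specificity:
            best_specificity, q = specificity, range_q
    return q


def negotiate(accept: str, available: list[str]) -> str | None:
    """Return the acceptable type with the highest q; ties keep the tool's order."""
    ranges = parse_accept(accept)
    best, best_q = None, 0.0
    for content_type in available:
        q = quality(ranges, content_type)
        if q > best_q:
            best, best_q = content_type, q
    return best
```

For example, `negotiate("application/xhtml+xml,text/html;q=0.9", ["text/html", "application/xhtml+xml"])` selects `application/xhtml+xml`, and `None` signals that no available type is acceptable, in which case the server would typically answer with 406 Not Acceptable or fall back to a default.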

ATAG Checkpoint B.1.2: Ensure that the tool preserves all unrecognized markup and accessibility information during transformations and conversions. [Priority 2] (Rationale)

Techniques for Success Criterion 1 (During all transformations and conversions supported by the authoring tool, the author must be notified before any unrecognized markup is permanently removed.):

Applicability: This success criterion is not applicable to authoring tools that do not perform any transformations or conversions that are unable to preserve unrecognized markup.

Technique B.1.2-1.1 [Sufficient]: When a transformation or conversion operation (e.g. opening a document) that cannot be performed on unrecognized markup is about to be performed and unrecognized markup is detected, giving the author the option either to allow the tool to remove the markup and proceed with the operation, or to preserve the markup by canceling the operation.

Technique B.1.2-1.2 [Advisory]: Providing the author the option to confirm or override removal of markup during transformation or conversion operations either on a change-by-change basis or as a batch process.
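A minimal Python sketch of Techniques B.1.2-1.1 and B.1.2-1.2 for XML-based content: before running a conversion, the tool lists element names its converter does not recognize and asks the author, as a batch, whether to proceed (removing them) or cancel. `KNOWN_ELEMENTS`, `convert`, and `ask_author` are hypothetical placeholders for the tool's own vocabulary, converter, and dialog.

```python
import xml.etree.ElementTree as ET

# Hypothetical vocabulary the tool's converter understands.
KNOWN_ELEMENTS = {"html", "head", "title", "body", "p", "h1", "h2", "img", "a", "ul", "li"}


def find_unrecognized(xml_text, known=KNOWN_ELEMENTS):
    """Names of unrecognized elements, in document order, without duplicates."""
    names = []
    for element in ET.fromstring(xml_text).iter():
        local_name = element.tag.split("}")[-1]  # drop any {namespace} prefix
        if local_name not in known and local_name not in names:
            names.append(local_name)
    return names


def convert_with_consent(xml_text, convert, ask_author):
    """Run convert() only if nothing would be lost or the author agrees.

    Returns None when the author cancels, leaving the original untouched.
    """
    unrecognized = find_unrecognized(xml_text)
    if unrecognized and not ask_author(unrecognized):
        return None
    return convert(xml_text)
```

Passing the whole list to `ask_author` corresponds to the batch option in B.1.2-1.2; a change-by-change variant would call it once per element instead.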

Techniques for Success Criterion 2 (During all transformations and conversions supported by the authoring tool, accessibility information must always be handled according to at least one of the following: (a) preserve it in the target format such that the information can be "round-tripped" (i.e. converted or transformed back into its original form) by the same authoring tool, or (b) preserve it in some other way in the target format, or (c) remove it only after the author has been notified and the content has been preserved in its original format.):

Applicability: This success criterion is not applicable to authoring tools that do not perform transformations or conversions.

Technique B.1.2-2.1 [Sufficient]: Ensuring that after transformations and conversions, any accessibility information that was stored in the original content is present in the resulting content, in such a way that the resulting content can be transformed or converted back to the original form with the accessibility information preserved. This is usually only possible in markup structures or content types that are equally rich in their ability to represent information.

Technique B.1.2-2.2 [Sufficient]: Ensuring that after transformations and conversions, any accessibility information that was stored in the original content is present in the resulting content, but in a form that does not allow the resulting content to be transformed or converted back to the original format with the accessibility information intact.

Technique B.1.2-2.3 [Sufficient]: Ensuring that when a transformation or conversion is to be performed that cannot be performed while preserving accessibility information, the author is warned that accessibility information (if any exists) will be lost and is given the option of allowing the tool to proceed to create the resulting content while saving a backup copy of the original content, or of preserving the original content by canceling the transformation or conversion.
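Techniques B.1.2-2.1 to B.1.2-2.3 imply some check of whether accessibility information survived a conversion. The Python sketch below handles only one kind of information, `alt` text on HTML images, and uses a naive test (does each alt string still appear somewhere in the output?). The `convert` and `confirm_loss` callables are hypothetical stand-ins for the tool's converter and warning dialog.

```python
from html.parser import HTMLParser


class AltCollector(HTMLParser):
    """Collects non-empty alt attribute values from <img> elements."""

    def __init__(self):
        super().__init__()
        self.alts = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            alt = dict(attrs).get("alt")
            if alt:
                self.alts.append(alt)


def lost_alt_texts(source_html, converted):
    """Alt texts from the source that no longer appear in the converted output."""
    collector = AltCollector()
    collector.feed(source_html)
    collector.close()
    return [alt for alt in collector.alts if alt not in converted]


def convert_preserving_alts(source_html, convert, confirm_loss):
    """Return (converted, backup).

    backup is a copy of the original when alt text would be lost (B.1.2-2.3);
    (None, None) means the author canceled and the original is left as is.
    """
    converted = convert(source_html)
    lost = lost_alt_texts(source_html, converted)
    if not lost:
        return converted, None
    if not confirm_loss(lost):
        return None, None
    return converted, source_html
```

A production check would compare structured accessibility information (e.g. element-to-alternative mappings) rather than substrings, but the control flow (convert, compare, warn, back up or cancel) stays the same.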

General Techniques for Checkpoint B.1.2:

Applicability: Techniques in this section are not necessarily applicable to any one success criterion, and may apply more generally to the checkpoint as a whole.

Technique B.1.2-0.1 [Advisory]: Implementing best practices for transformation and conversion, such as:

- Allow authors to edit transformation or conversion templates to specify the way presentation conventions should be converted into structural markup.

- Ensure that changes to graphical layouts do not reduce readability when the document is rendered serially. For example, confirm the linearized reading order with the author.

- Avoid transforming text into images. Use style sheets for presentation control, or use an XML application (e.g. SVG [SVG]) that keeps the text as text. If this is not possible, ensure that the text is available as equivalent text for the image.

- When importing images with associated descriptions into a markup document, make the descriptions available through appropriate markup.

- When transforming a table to a list or list of lists, ensure that table headings are transformed into headings and that summary or caption information is retained as rendered content.

- When converting linked elements (e.g. footnotes, endnotes, call-outs, annotations, references, etc.), provide them as inline content or maintain two-way linking.

- When converting from an unstructured word-processor format to markup, ensure that headings and list items are transformed into appropriate structural markup (appropriate level of heading or type of list, etc.).

- When developing automatic text translation functions, strive to make the resulting text as clear and simple as possible.
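For the practice of converting linked elements with two-way linking, a converter might emit footnotes as follows. The Python sketch assumes, hypothetically, that the source paragraph marks footnote references as `[1]`, `[2]`, and so on, and that the notes arrive as a separate list; the `ref`/`note` id scheme is likewise an illustrative choice.

```python
from html import escape


def footnotes_to_html(paragraph, notes):
    """Turn [n] markers into links to an ordered list of notes that link back."""
    body = escape(paragraph)
    for n in range(1, len(notes) + 1):
        # Link the first occurrence of each marker forward to its note.
        body = body.replace(f"[{n}]", f'<a id="ref{n}" href="#note{n}">[{n}]</a>', 1)
    items = "".join(
        f'<li id="note{n}">{escape(text)} <a href="#ref{n}">Back to text</a></li>'
        for n, text in enumerate(notes, 1)
    )
    return f"<p>{body}</p>\n<ol>{items}</ol>"
```

The forward link lets a reader reach the note, and the "Back to text" link returns them to the reference point, which is what keeps the footnote usable when it is no longer on the same printed page.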

Technique B.1.2-0.2 [Advisory]: Notifying the author before changing the content type (including the DTD).

Technique B.1.2-0.3 [Advisory]: Providing the author with explanations of automatic changes made during transformations and conversions.

ATAG Checkpoint B.1.3: Ensure that when the tool automatically generates content it conforms to WCAG. [Relative Priority] (Rationale | Note)

Techniques for Success Criterion 1 (All markup and content that is automatically generated by the authoring tool (i.e. not authored "by hand") must always conform to WCAG.):

Applicability: This success criterion is not applicable to authoring tools that do not automatically generate markup and content (e.g. some simple text editors).

Technique B.1.3-1.1 [Sufficient]: Ensuring that any action the authoring tool takes on its own that causes Web content to be added or modified does not introduce new contraventions of the content type-specific WCAG benchmark. Contravening actions are allowed when they are specifically requested by the author (e.g. the author chooses to insert a specific element by name from a list) or when the author has failed to properly comply with an information request that would have prevented the contravention (e.g. ignored a short text label prompt for an image).

Technique B.1.3-1.2 [Advisory]: Using prompting to elicit information from the author (see Checkpoint B.2.1).

Technique B.1.3-1.3 [Advisory]: Ensuring that any templates used by automatic generation processes meet the content type-specific WCAG benchmark (see Checkpoint B.1.4).

ATAG Checkpoint B.1.4: Ensure that all pre-authored content for the tool conforms to WCAG. [Relative Priority] (Rationale | Note)

Techniques for Success Criterion 1 (Any Web content (e.g., templates, clip art, example pages, etc.) that is bundled with the authoring tool or preferentially licensed to the users of the authoring tool (i.e. provided for free or sold at a discount), must conform to WCAG when used by the author.):

Applicability: This success criterion is not applicable to authoring tools that do not bundle or preferentially license pre-authored content.

Technique B.1.4-1.1 [Sufficient]: Ensuring that any Web content that is bundled with the authoring tool (e.g. on the installation CD-ROM) or preferentially licensed to the users of the authoring tool (i.e. provided for free or sold at a discount) meets the content type-specific WCAG benchmark.

Technique B.1.4-1.2 [Advisory]: Authoring Web content to be bundled using ATAG 2.0 conformant authoring tools and ensuring it passes accessibility checks.

Technique B.1.4-1.3 [Advisory]: Providing pre-authored graphical content in content types that allow for accessible annotation to be included in the files, such as SMIL [SMIL], PNG [PNG], and SVG [SVG].

Technique B.1.4-1.4 [Advisory]: Ensuring interoperability between any equivalent alternatives provided for pre-authored content and any authoring tool features for managing, editing, and reusing equivalent alternatives (see Checkpoint B.2.5).

Guideline B.2: Support the author in the production of accessible content

@@BF: prompting for accessibility localization@@

@@we must ensure accessibility of examples (i.e. that they meet GL1)@@

@@more help designing well - e.g. alternatives to image maps@@

ATAG Checkpoint B.2.1: Prompt and assist the author to create content that conforms to WCAG. [Relative Priority] (Rationale)

Implementation Notes:

- Prompting in the ATAG 2.0 context is not to be interpreted as necessarily implying intrusive prompts, such as pop-up dialog boxes. Instead, ATAG 2.0 uses "prompt" in a wider sense, to mean any tool-initiated process of eliciting author input that is triggered by author actions (e.g. adding or editing content that requires accessibility information from the author in order to prevent the introduction of accessibility problems). The reason for this is that it is crucial that accessibility information be correct and complete. This is more likely to occur if the author has been convinced to provide the information voluntarily. Therefore, overly restrictive mechanisms are not recommended for meeting this checkpoint.

- Assisting refers to helping the author in potentially many different ways (although the assistance required for strictly meeting the success criteria is quite limited) including making use of heuristics, database retrieval, markup support etc.

- In many cases, prompting and assisting will be provided simultaneously (e.g. a prompt for information that includes assistance in the form of some default answers).

- The author experience of prompting will be very similar to that of checking for some implementations. For example, in a tool that checks continuously for accessibility problems, the markings used to highlight found problems can be considered to be a form of prompting. Similarly, the author experience of assisting will be closely related to that of repairing for certain implementations. For example, a repair that offers previously utilized text labels for an image is assisting the author.

- When possible, prompting should be incorporated early in the authoring workflow to prevent accessibility problems from accumulating to such an extent that, when checking (see Checkpoint B.2.2) and repairing (see Checkpoint B.2.3) are attempted, the list of problems is impractically large.

- Consideration should be given to the promotion and integration of the accessibility solutions involved, as required by Guideline B.3. In particular, accessibility prompting:

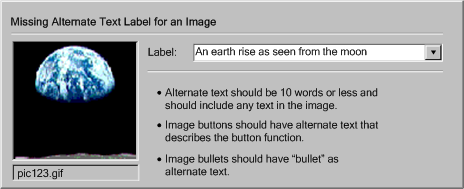

Techniques for Success Criterion 1 (Every time that content that requires accessibility information from the author in order to conform to WCAG is added or updated, then the authoring tool must inform the author that this additional information is required (e.g. via input dialogs, interactive feedback, etc.).):

Applicability: This success criterion is applicable to all authoring tools.

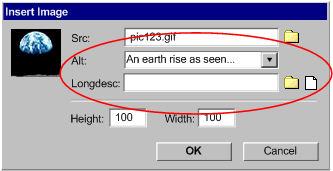

Technique B.2.1-1.1 [Sufficient]: Ensuring that, whenever content that requires accessibility information from the author in order to agree with the content type-specific WCAG benchmark is added/updated, the author is informed of the need for the additional information by the presentation of the input controls for that information within the adding/editing interface.

Technique B.2.1-1.2 [Sufficient]: Ensuring that, whenever content exists that disagrees with the content type-specific WCAG benchmark and requires accessibility information to repair (including during adding/editing of the content in question), the tool displays indicators to the author that identify the location of the problem.

Technique B.2.1-1.3 [Advisory]: Using prompting mechanisms and assisting mechanisms that are appropriate for the type of information in question (see Prompting for Various Types of Accessibility Information).
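One way to produce the location indicators described in Technique B.2.1-1.2 is to record where each problem occurs so that the editor can mark it. This Python sketch covers a single check (images without any `alt` attribute; an empty `alt=""` is treated as a deliberate choice for decorative images) and uses `HTMLParser.getpos()` for the line and column of each offending tag. How the indicators are drawn is left to the tool.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Records where <img> elements lack an alt attribute."""

    def __init__(self):
        super().__init__()
        self.problems = []  # (line, column, src) for each offending image

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img" and "alt" not in attributes:
            line, column = self.getpos()  # position of the start of the tag
            self.problems.append((line, column, attributes.get("src", "")))


def find_missing_alt(html):
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.problems
```

A tool that checks continuously could rerun this after each edit and highlight the returned positions, which is the kind of marking the implementation notes above count as prompting.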

Technique B.2.1-1.4 [Advisory]: Checking all textual entries for spelling, grammar, and reading level (where applicable).

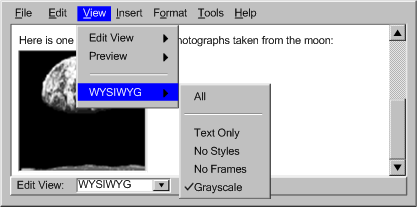

Technique B.2.1-1.5 [Advisory]: Providing multiple preview modes and a warning to authors that there are many other less predictable ways in which a page may be presented (aurally, text-only, text with pictures separately, on a small screen, on a large screen, etc.). Some possible document views include:

- an alternative content view (with images and other multimedia replaced by any alternative content)

- a monochrome view (to test contrast)

- a collapsible structure-only view

- no scripts view

- no frames view

- no style sheet view
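As an example of the alternative content view listed above, the Python sketch below builds a rough text-only preview: images are replaced by their `alt` text, images with an empty `alt` are dropped as decorative, images with no `alt` are flagged, and script and style contents are omitted. It is a simplification that ignores other multimedia elements and all layout.

```python
from html.parser import HTMLParser


class TextOnlyView(HTMLParser):
    """Collects the text a text-only preview would show."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._hidden = 0  # depth inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._hidden += 1
        elif tag == "img":
            alt = dict(attrs).get("alt")
            if alt is None:
                self.parts.append("[image without alt text]")
            elif alt.strip():
                self.parts.append(alt)
            # alt="" marks a decorative image, so nothing is shown

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._hidden:
            self._hidden -= 1

    def handle_data(self, data):
        if not self._hidden:
            self.parts.append(data)


def text_only_view(html):
    view = TextOnlyView()
    view.feed(html)
    view.close()
    return " ".join(" ".join(view.parts).split())
```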

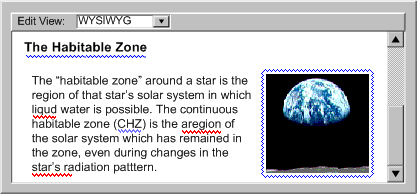

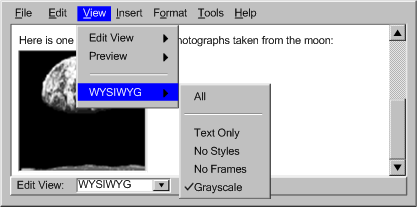

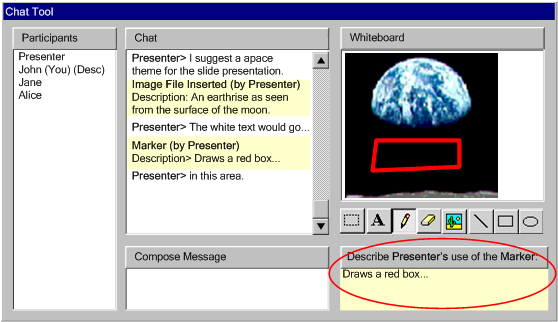

Example B.2.1-1.5: This illustration shows a WYSIWYG authoring interface with a list of rendering options displayed. The options include "All" (i.e. render as in a generic browser), "text-only" (i.e. non-text items replaced by textual equivalents), "no styles", "no frames", and "grayscale" (used to check for sufficient contrast). (Source: mockup by AUWG)


Prompting for Various Types of Accessibility Information:

This list is meant to cover techniques of prompting and assisting for many, but not all, of the most common accessible authoring practices:

(1):

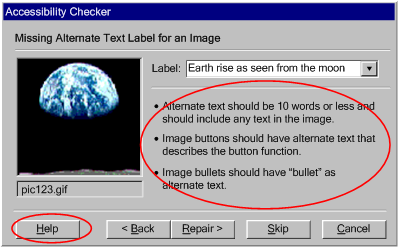

Prompting and assisting for short text labels (e.g. alternate text, titles,

short text metadata fields, rubies for ideograms):

- (a) Prompts for short text strings may be afforded relatively little

screen real estate because they are usually intended to elicit entries

of ten words or less.

- (b) Provide a rendered view of the object for the author to examine

while composing the label.

- (c) Implement automated routines to detect and offer labels for objects

serving special functions (e.g. "decorative", "button",

"spacer", "horizontal rule", etc.).

- (d) Use editable text entry boxes with drop-down lists that let the author add

new text and also assist the author by providing the option to select from reusable or special

function label text, as describe in (c) (see Example

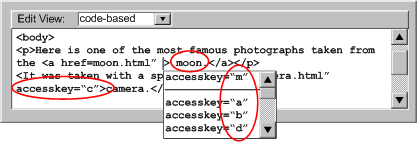

(1a)).

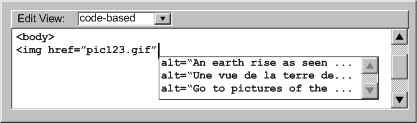

- (e) In code-based tools, prompt the author with short text labels that are already marked up appropriately (see

Example (1b)).

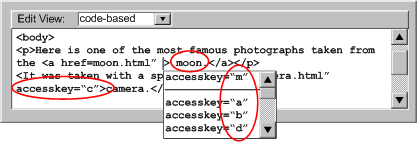

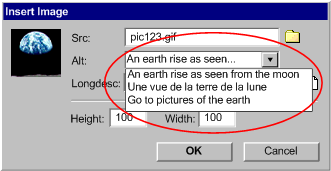

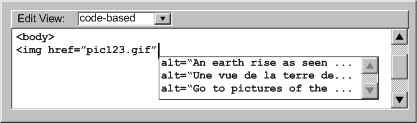


Example (1b): This illustration shows a code-based authoring interface for short text label prompting. The drop-down menu was triggered when the author typed quotation marks (") to close the href attribute. (Source: mockup by AUWG)


(2):

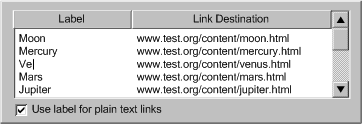

Prompting and assisting for multiple text labels (e.g. image map area labels):

- (a) Prompts for image map text labels may be similar to those for short text labels, with allowance made for rapidly adding several labels for one image map (see Example (2)).

- (b) Provide a preview of the image map areas.

- (c) Provide the URI of image map areas as a hint for the labels.

- (d) Offer to automatically generate a set of plain text links from the labels that the author completes (see Example (2)).
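Items (2)(c) and (2)(d) can be illustrated together: the sketch derives a label hint from each area's URI and, once the author has supplied labels, generates a matching list of plain text links. The Python function names and the hint heuristic (file name without extension) are assumptions for illustration.

```python
from html import escape
from posixpath import basename, splitext


def suggest_label(href):
    """Derive a hint from an area's URI, e.g. 'maps/north-campus.html' -> 'North campus'."""
    stem = splitext(basename(href.rstrip("/")))[0]
    return stem.replace("-", " ").replace("_", " ").capitalize()


def text_links_for_map(areas):
    """Build a plain text link list from (href, label) pairs the author completed."""
    items = [
        f'<li><a href="{escape(href)}">{escape(label.strip())}</a></li>'
        for href, label in areas
        if label.strip()
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"
```

Areas the author left blank are skipped here rather than given the URI hint, on the assumption that an unreviewed hint should not be published as a label.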

(3):

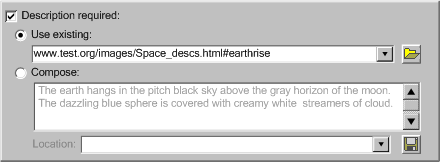

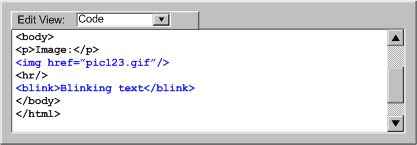

Prompting and assisting for long text descriptions (e.g. longdesc text, table summaries, site information, long text metadata fields):

- (a) Begin by prompting the author about whether the inserted object is adequately described with an existing short text label. Providing a view of the page with images turned off may help the author decide (see Example (3)).

- (b) If the short description is inadequate, prompt the author for the location of a pre-existing description.

- (c) If the author needs to create a description, provide a special writing utility that includes a rendered view of the object and description-writing advice.

- (d) Implement automated routines that detect and ignore some objects that do not require long text descriptions (e.g. bullets, spacers, horizontal rules).

(4):

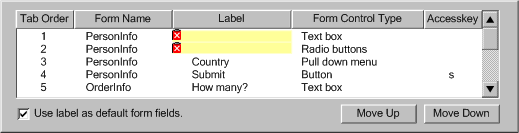

Prompting and assisting for form field labels:

- (a) Present the form fields and ask the author to select text that is serving as a label or to enter a new label (see Example (4)).

- (b) Alert authors to form fields that are missing labels.
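For item (4)(b), a checker might flag form fields that have neither an explicit `<label for=...>` nor an enclosing `<label>`. The Python sketch below is a simplification under stated assumptions: it treats `input`, `select`, and `textarea` as labelable, skips hidden and button-like input types, and reports each unlabeled field by its `id` or `name`.

```python
from html.parser import HTMLParser

LABELABLE = {"input", "select", "textarea"}
# Input types that do not need a separate label (assumption for this sketch).
NO_LABEL_NEEDED = {"hidden", "submit", "reset", "button", "image"}


class FieldLabelChecker(HTMLParser):
    def __init__(self):
        super().__init__()
        self.fields = []            # (id, name, inside_label) per labelable field
        self.label_targets = set()  # ids referenced by <label for=...>
        self._label_depth = 0

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "label":
            self._label_depth += 1
            if attributes.get("for"):
                self.label_targets.add(attributes["for"])
        elif tag in LABELABLE:
            field_type = (attributes.get("type") or "text").lower()
            if field_type not in NO_LABEL_NEEDED:
                self.fields.append(
                    (attributes.get("id"), attributes.get("name"), self._label_depth > 0)
                )

    def handle_endtag(self, tag):
        if tag == "label" and self._label_depth:
            self._label_depth -= 1


def unlabeled_fields(html):
    checker = FieldLabelChecker()
    checker.feed(html)
    checker.close()
    return [
        field_id or name
        for field_id, name, inside_label in checker.fields
        if not inside_label and field_id not in checker.label_targets
    ]
```

Because `label_targets` is consulted only after the whole document is parsed, a `<label for=...>` placed after its field still counts.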

(5):

Prompting and assisting for form field place-holders:

- (a) Prompts for form field place-holders may be similar to those for short text labels.

- (b) Provide authors with the option of directly selecting nearby text strings that are serving implicitly as labels.

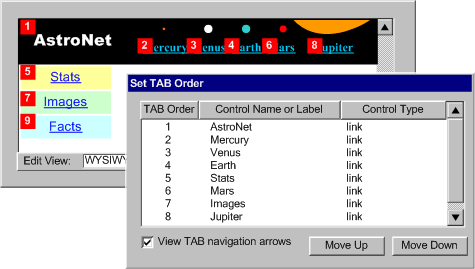

(6): Prompting

and assisting for TAB order sequence:

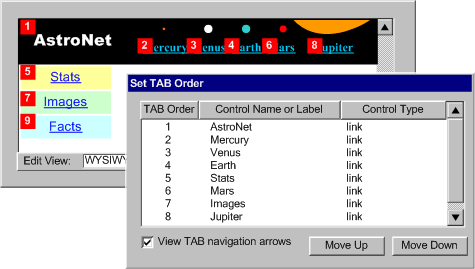

- (a) Provide the author with a numbering tool that they can use to select

controls to create a TAB preferred sequence. (see Example

(6))

- (b) Where there are only a few links that change in each page of a collection,

ask the author to confirm whether these links receive focus first. If

so, then the tool can appropriately update the tabindex order.

- (c) Provide a list of links and controls to check the TAB order.
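For item (6)(c), the list of links and controls in TAB order can be computed from the markup. This Python sketch follows the HTML sequential navigation rule as we understand it: elements with a positive `tabindex` come first in ascending order (ties in document order), followed by other focusable elements in document order. Negative values are excluded, as browsers commonly do, and the set of focusable elements is a simplified assumption.

```python
from html.parser import HTMLParser

# Simplified assumption about which elements can receive focus.
FOCUSABLE = {"a", "area", "button", "input", "select", "textarea"}


class TabOrderCollector(HTMLParser):
    def __init__(self):
        super().__init__()
        self.items = []  # (tabindex, label) in document order

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        tabindex = None
        if attributes.get("tabindex") is not None:
            try:
                tabindex = int(attributes["tabindex"])
            except ValueError:
                tabindex = None
        focusable = tag in FOCUSABLE or tabindex is not None
        if tag in ("a", "area") and "href" not in attributes and tabindex is None:
            focusable = False  # anchors without href are not in the sequence
        if tag == "input" and (attributes.get("type") or "").lower() == "hidden":
            focusable = False
        if not focusable or (tabindex is not None and tabindex < 0):
            return
        label = attributes.get("id") or attributes.get("name") or attributes.get("href") or tag
        self.items.append((tabindex or 0, label))


def tab_sequence(html):
    collector = TabOrderCollector()
    collector.feed(html)
    collector.close()
    positive = [item for item in collector.items if item[0] > 0]
    rest = [item for item in collector.items if item[0] == 0]
    # list.sort() is stable, so equal tabindex values keep document order.
    positive.sort(key=lambda item: item[0])
    return [label for _, label in positive + rest]
```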

Example (6): This illustration shows two views of a "Set TAB Order" utility that lets the author visualize and adjust the TAB order of a document: as a mouse-driven graphical overlay on the screen and as a keyboard accessible list.


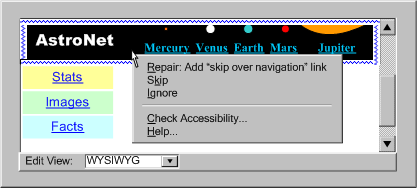

(7):

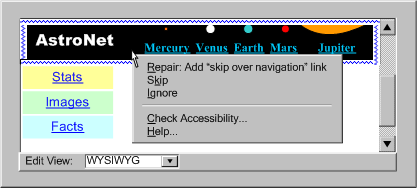

Prompting and assisting for navigational shortcuts (e.g. keyboard shortcuts, skip links, voice commands, etc.):

- (a) Prompt authors to add "skip navigation" links for sets of common navigation links (see Example (7a)).

- (b) Prompt authors with a list of links that are candidates for accesskeys because they are common to a number of pages in a site.

- (c) Manage accesskey lists to ensure consistency across sites and to prevent conflicts within pages (see Example (7b)).
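The behavior shown in Example (7b) can be approximated as follows: candidate keys are the characters of the link text, starting with its first letter, minus any keys already used elsewhere in the document; a second helper reports keys assigned more than once on a page, for item (7)(c). This is a Python sketch with hypothetical function names, and it treats keys case-insensitively as an assumption.

```python
from collections import Counter


def suggest_accesskeys(link_text, used_keys):
    """Candidate keys from the link text, first letter first, skipping used keys."""
    used = {key.lower() for key in used_keys}
    candidates = []
    for ch in link_text.lower():
        if ch.isalnum() and ch not in used and ch not in candidates:
            candidates.append(ch)
    return candidates


def accesskey_conflicts(assignments):
    """Keys assigned to more than one link on the same page (case-insensitive)."""
    counts = Counter(key.lower() for key in assignments.values())
    return sorted(key for key, n in counts.items() if n > 1)
```

With the link text "moon" and "c" already taken by "camera", the first suggestion is "m", matching the mockup.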

Example (7a): This illustration shows a mechanism that detects repeating navigation elements and asks the author whether they want to add a skip navigation link. (Source: mockup by AUWG)


Example (7b): This illustration shows a code-based authoring interface suggesting accesskey values. Notice that the system suggests "m" because it is the first letter of the link text ("moon"). The letter "c" does not appear in the list because it is already used as an accesskey later in the document (for the link "camera"). (Source: mockup by AUWG)


(8):

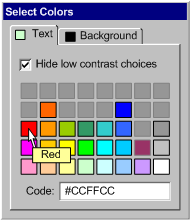

Prompting and assisting for contrasting colors:

- (a) Assemble color palettes with insufficiently contrasting colors excluded or identified (see Example (8)).

- (b) Provide gray scale and black and white views, or suggest that the author activate the operating system's high contrast mode.

- (c) Emphasize "Web-safe" colors.
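For item (8)(a), a palette can be filtered by computing a contrast ratio against the intended background. The Python sketch uses the relative-luminance contrast ratio that was later standardized in WCAG 2.0, with its 4.5:1 level for normal text as the default threshold. This draft predates that formula, so treat the choice of formula and threshold as an assumption. Colors are 6-digit hex strings.

```python
def relative_luminance(hex_color):
    """Relative luminance of an sRGB color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    linear = []
    for i in (0, 2, 4):
        c = int(h[i:i + 2], 16) / 255
        linear.append(c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4)
    r, g, b = linear
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(color_a, color_b):
    la, lb = relative_luminance(color_a), relative_luminance(color_b)
    return (max(la, lb) + 0.05) / (min(la, lb) + 0.05)


def usable_text_colors(palette, background, minimum=4.5):
    """Keep only palette entries with enough contrast against the background."""
    return [c for c in palette if contrast_ratio(c, background) >= minimum]
```

To identify rather than exclude colors, a tool could instead annotate each palette entry with its ratio and let the author decide.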

(9):

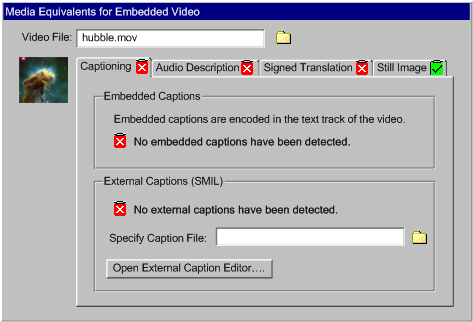

Prompting and assisting for alternative resources for multimedia (transcripts, captions, video transcripts, audio descriptions, signed translations, still images, etc.):

- (a) Prompt the author for the location of pre-existing alternative resources for multimedia (see Example (9)).

- (b) Although producing alternative resources for multimedia can be a complex process for long media files, production suites do exist, and authoring tools can include simple utilities, with built-in media players, for producing simple alternative resources.

- (c) The tool should be able to access alternative resources for multimedia, which may be incorporated into existing media or exist separately.

(10):

Prompting and assisting for Metadata:

- (a) For metadata information fields requiring information similar to that discussed in the other sections of this technique, see the relevant section. For example: short text labels ((1)), long text descriptions ((3)), and alternative resources for multimedia ((9)).

- (b) When prompting for terms in a controlled vocabulary, allow the author to choose from lists to prevent spelling errors.

- (c) Provide the option of automating the insertion of information that is easily stored and reused (e.g. author name, author organization, date, etc.).

- (d) Automate metadata discovery where possible.

- (e) Provide the option of storing licensing conditions within metadata (see, for example, the Creative Commons licenses [CREATIVE-COMMONS], or the GPL [GPL], LGPL [LGPL], BSD [BSD], and other software licenses).

- (f) Consider characterizing accessibility of content using the IMS AccessForAll Meta-data Specification [IMS-ACCMD] <http://www.imsproject.org/accessibility/index.cfm#version1pd> to facilitate personalized content delivery.

(11):

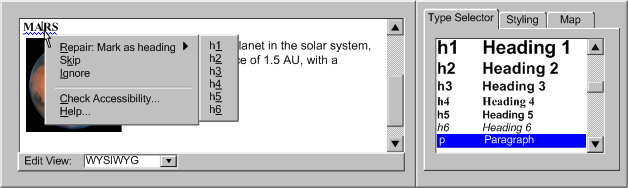

Prompting and assisting for document structure:

- Alert the author to the occurrence of unstructured content in a way that is appropriate to the workflow of the tool.

- Provide the author with options for creating new content that is structured, such as:

- templates (with pre-defined structure),

- wizards (that introduce structure to content through a series of system-generated queries), or

- real-time validators (that may be set by the author to prevent the creation of improperly structured content).

- Provide the author with options for imposing structure on existing unstructured content.

- For tools that support explicit structural mechanisms, offer authors the opportunity to use those mechanisms. For example, for DTD- or schema-based structures, provide validation in accordance with the applicable DTD or schema.

- For tools that do not support explicit structural mechanisms, offer authors the option of deriving structure from format styles, for instance by providing a mechanism that maps presentation markup following formatting conventions into structural elements. For example, patterns of text formatting may be interpreted as headings (see Example (11)), and consecutive lines of text beginning with certain typographical symbols, such as "*" or "-", may be interpreted as list items.

- Provide structure-based editing features, such as:

- hide/show content blocks according to structure,

- shift content blocks up, down, and sideways through the document structure, or

- hierarchical representation or network diagram view of the document structure, as appropriate.

- Provide fully automated checking (validation) for structure.

- Provide fully automated or semi-automated repair for structure.

- It is not necessary to prohibit editing in an unstructured mode. However, the tool should alert authors to the fact that they are working in an unstructured mode.
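Deriving structure from formatting conventions might look like the following Python sketch. It assumes a hypothetical input of word-processor paragraphs as `(text, font_size_pt, bold)` tuples, treats bold text larger than the body size as a heading (18 pt or more as level 1, otherwise level 2), and treats paragraphs beginning with `* ` or `- ` as list items. These thresholds are illustrative, and a real tool would present the result to the author for confirmation rather than apply it silently.

```python
from html import escape


def derive_structure(paragraphs, body_size=12.0):
    """Map formatting conventions to structural HTML (heuristic)."""
    out, in_list = [], False
    for text, size, bold in paragraphs:
        stripped = text.strip()
        is_item = stripped[:2] in ("* ", "- ")
        if is_item and not in_list:
            out.append("<ul>")
            in_list = True
        elif not is_item and in_list:
            out.append("</ul>")
            in_list = False
        if is_item:
            out.append(f"<li>{escape(stripped[2:].strip())}</li>")
        elif bold and size > body_size:
            level = 1 if size >= 18 else 2
            out.append(f"<h{level}>{escape(stripped)}</h{level}>")
        else:
            out.append(f"<p>{escape(stripped)}</p>")
    if in_list:
        out.append("</ul>")
    return "\n".join(out)
```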

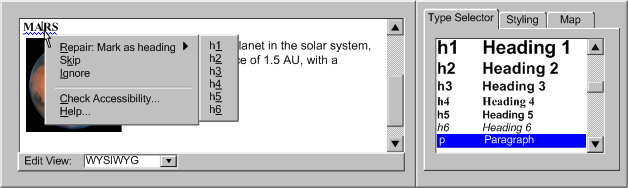

Example (11): This illustration shows a tool that detects opportunities for enhancing structure and alerts the author. (Source: mockup by AUWG)


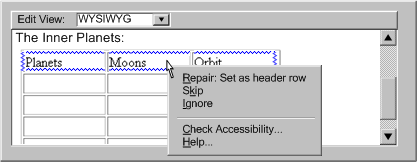

(12):

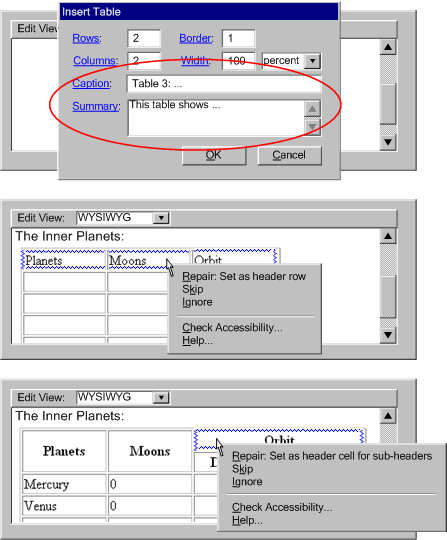

Prompting and assisting for tabular structure:

- (a) Prompt the author to identify tables as used for layout or data, or implement automated detection mechanisms.

- (b) Differentiate utilities for table structure from utilities for document layout, and use the layout utilities when tables are identified as being for layout.

- (c) Prompt the author to provide header information (see Example (12)).

- (d) Prompt the author to group and split columns, rows, or blocks of cells that are related.

- (e) Provide the author with a linearized view of tables (as tablin does).
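A linearized view such as the one described in (12)(e) can be as simple as repeating the column header before each cell. The Python sketch below assumes the table has already been parsed into a caption, a header row, and data rows; its output format is illustrative and is not the format produced by tablin.

```python
def linearize_table(caption, headers, rows):
    """Render each row as 'header: cell' lines, keeping the caption as text."""
    lines = [caption] if caption else []
    for number, row in enumerate(rows, 1):
        lines.append(f"Row {number}:")
        for header, cell in zip(headers, row):
            lines.append(f"  {header}: {cell}")
    return "\n".join(lines)
```

Seeing the table read this way tends to expose missing or misleading headers, which is why the linearized view pairs naturally with prompt (12)(c).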

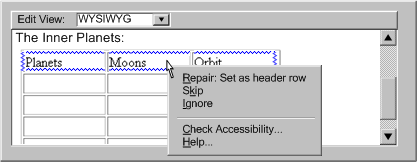

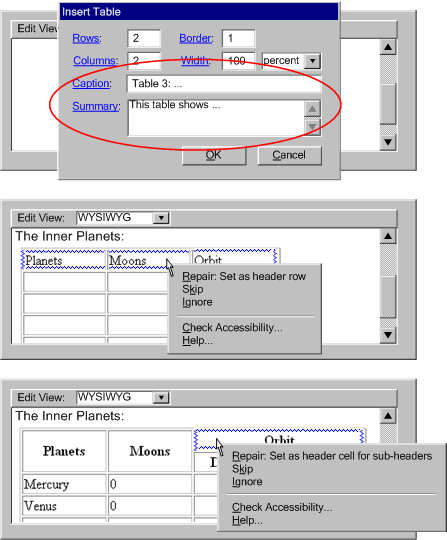

Example (12): This illustration shows a tool that prompts the author about whether the top row of a table is a row of table headers. (Source: mockup by AUWG)


(13):

Prompting and assisting for style sheets:

- (a) Use style sheets, according to specification, as the default mechanism for presentation formatting and layout.

- (b) If content is created with a style sheet format, along with a content format, the use of that style format must also meet the requirements of WCAG.

- (c) Conceal the technical details of style sheet usage to a similar degree as for usage of other markup formats supported by the tool.

- (d) Assist the author by detecting structural markup (e.g. heading elements) that has been misused to achieve presentation formatting and, with author permission, transforming it to use style sheets (see Example (13a)).

- (e) Prompt the author to create style classes and rules within and across documents, rather than using more limited in-line styling.

- (f) Assist the author by recognizing patterns in style usage and converting them into style classes and rules.

- (g) Provide the option of editing text content independently of style sheet layout and presentation formatting (see Example (13b)).

- (h) Assist the author with the issue of style sheet browser compatibility by guiding them towards standard practices and detecting the existence of non-standard practices.

- (i) Assist the author by providing a style sheet validation function.

- (j) Maintain a registry of styles for ease of re-use.

- (k) For prompting and assisting with specific types of information required by style sheets, see the other sections in this technique. For example: font/background colors (see (8)) and document structure (see (11)).

- (l) Consult the following references: Accessibility Features of CSS [CSS Access], CSS2.1 specification [CSS21], and XML Accessibility Guidelines [XML Access].
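Item (13)(f) could be approached by normalizing inline `style` attributes and counting repeats. The Python sketch uses a deliberately simple regular expression that only sees double-quoted `style` attributes, and the generated class names (`style1`, ...) are placeholders; a tool following (13)(e) would ask the author for meaningful names.

```python
import re
from collections import Counter

STYLE_ATTR = re.compile(r'style="([^"]*)"')


def normalize(declarations):
    """Canonical form of a declaration list: lowercase property names, sorted."""
    pairs = []
    for declaration in declarations.split(";"):
        if ":" in declaration:
            prop, _, value = declaration.partition(":")
            pairs.append(f"{prop.strip().lower()}: {value.strip()}")
    return "; ".join(sorted(pairs))


def propose_classes(html, min_uses=2):
    """Suggest a class for each inline style used at least min_uses times."""
    counts = Counter(normalize(m.group(1)) for m in STYLE_ATTR.finditer(html))
    common = sorted(d for d, uses in counts.items() if d and uses >= min_uses)
    return {f"style{n}": d for n, d in enumerate(common, 1)}


def to_css(proposals):
    return "\n".join(f".{name} {{ {decls}; }}" for name, decls in proposals.items())
```

Normalizing before counting means that `color: red;font-weight:bold` and `font-weight: bold; color:red` are recognized as the same pattern.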

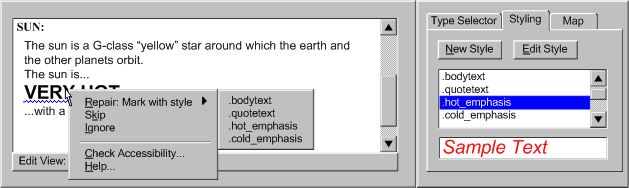

Example (13): This illustration shows a prompt that indicates that a heading has been misused to indicate emphasis. Use of style sheets is suggested instead and a list of styles already used in the document is provided. (Source: mockup by AUWG)


(14): Prompting and assisting for clearly written text:

- (a) Prompt the author to specify a default language of a document.

- (b) Provide a thesaurus function.

- (c) Provide a dictionary lookup system that can recognize changes of language and terms outside a controlled vocabulary, as well as known abbreviation or acronym expansions.

- (d) Provide an automated reading level status (see Example (14a)).

- (e) Prompt the author for expansions of unknown acronyms, recognizable in some languages as collections of uppercase letters (see Example (14b)).
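Item (14)(e) can be sketched in Python with a regular expression for runs of two or more uppercase letters. The pattern only covers unaccented Latin capitals, an assumption that fits English-like text; `known` stands in for the tool's dictionary of acronyms whose expansions are already recorded.

```python
import re

ACRONYM = re.compile(r"\b[A-Z]{2,}\b")


def unknown_acronyms(text, known):
    """Uppercase runs not yet in `known`, in order of first appearance."""
    found = []
    for match in ACRONYM.finditer(text):
        word = match.group()
        if word not in known and word not in found:
            found.append(word)
    return found
```

Each returned string would then trigger a prompt like the one in Example (14b), and the author's answer would be added to `known` so that the same acronym is not queried twice.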

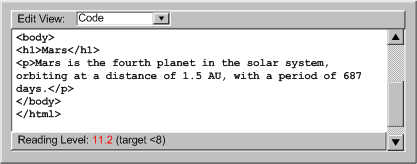

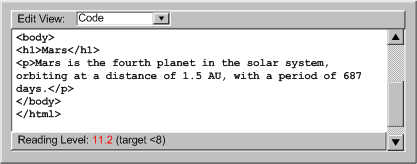

Example (14a): This illustration shows an authoring interface that indicates the reading level of a page and whether it exceeds a limit determined by the author's preference settings. (Source: mockup by AUWG)


Example (14b): This illustration shows an authoring interface that prompts the author to enter an acronym expansion. (Source: mockup by AUWG)


(15): Prompting and assisting for device-independent handlers:

- (a) During code development, prompt the author to include device-independent means of activation.

- (b) Prompt authors to use device-independent events (e.g., DOM 2 onactivate [DOM]) instead of device-specific events (e.g., onclick), or to route multiple events (onclick and onkeypress) through the same functions.

- (c) Prompt authors to add a DOM onfocus event to elements that are targeted with the onmouseover event.

- (d) Prompt authors about using events for which no common device-independent analogue exists (e.g. ondblclick), and avoid offering these events as default options.
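Items (b) to (d) can be backed by a scan of the markup that lists each element whose handlers depend on a single device. A minimal sketch using Python's `html.parser`; the pairing table is an assumption that covers only the handlers named in the list above.

```python
from html.parser import HTMLParser

# Device-specific handler -> handler that offers keyboard users the same behaviour.
PAIRED = {"onmouseover": "onfocus", "onclick": "onkeypress"}
# Handlers with no common device-independent analogue.
NO_ANALOGUE = {"ondblclick"}


class HandlerChecker(HTMLParser):
    def __init__(self):
        super().__init__()
        self.prompts = []  # (line, tag, message) entries to show the author

    def handle_starttag(self, tag, attrs):
        names = {name for name, _ in attrs}  # html.parser lowercases attribute names
        line = self.getpos()[0]
        for handler, partner in PAIRED.items():
            if handler in names and partner not in names:
                self.prompts.append((line, tag, f"add {partner} alongside {handler}"))
        for handler in sorted(names & NO_ANALOGUE):
            self.prompts.append((line, tag, f"{handler} has no common device-independent analogue"))


def check_handlers(markup: str):
    checker = HandlerChecker()
    checker.feed(markup)
    checker.close()
    return checker.prompts


sample = ('<a href="#" onclick="go()">Go</a>\n'
          '<img src="m.png" onmouseover="hi()" onfocus="hi()">\n'
          '<div ondblclick="x()"></div>')
for line, tag, message in check_handlers(sample):
    print(f"line {line} <{tag}>: {message}")
```

The `img` on line 2 is not reported because its mouse handler already has a focus counterpart.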

(16): Prompting and assisting for non-text supplements to text:

- (a) Prompt the author to provide icons for buttons, illustrations for text, graphs for numeric comparisons, etc. (see Example (16)).

- (b) Where subject metadata is available, look up appropriate illustrations.

- (c) If the author has identified content as instructions, provide templates or automated utilities for extracting flow charts, etc.
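Example (16) depends on deciding when a paragraph "contains many numbers". One possible heuristic is sketched below. Both thresholds (at least four numbers, and numbers making up at least a quarter of the tokens) are invented for illustration and would need tuning against real content.

```python
import re

# Integers, thousands separators, decimals and percentages, e.g. 120, 1,200, -3.5, 25%.
NUMBER = re.compile(r"^[-+]?\d[\d,]*(\.\d+)?%?$")


def suggests_chart(paragraph: str, min_numbers: int = 4, min_ratio: float = 0.25) -> bool:
    """True when a paragraph is numeric enough to prompt for a chart or graph."""
    tokens = [t.strip(".,;:()") for t in paragraph.split()]
    tokens = [t for t in tokens if t]
    numbers = [t for t in tokens if NUMBER.match(t)]
    # The count test comes first, so an empty paragraph never divides by zero.
    return len(numbers) >= min_numbers and len(numbers) / len(tokens) >= min_ratio


print(suggests_chart("Sales rose from 120 in 2003 to 180 in 2004 and 240 in 2005."))  # True
print(suggests_chart("The report was finished in 2005."))  # False
```

Because the result only triggers a prompt, a false positive costs the author one dismissal, so erring slightly towards prompting is reasonable.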

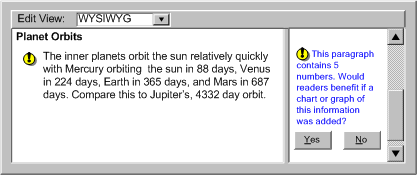

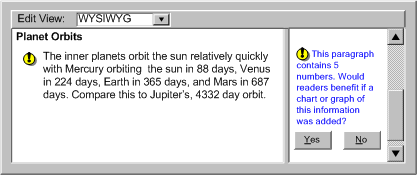

Example (16): This illustration shows an authoring interface that asks the author whether a paragraph containing many numbers might be made clearer by adding a chart or graph. (Source: mockup by AUWG)


(17): Prompting and assisting the author to make use of up-to-date Web content types:

- (a) Default to the most up-to-date formats available whenever the author has not specified a content type.

- (b) Give up-to-date formats higher prominence during content type selection.

- (c) Provide tools that convert content in older formats into more up-to-date formats.

- (d) Fully or partially automate some of the more complex aspects of more up-to-date formats, including document structure (see (11)) and use of style sheets (see (13)).

Techniques for Success Criteria 2 (Whenever the tool provides instructions to the author, either the instructions (if followed) must lead to the creation of Web content that conforms to WCAG, or the author must be informed that following the instructions would lead to Web content accessibility problems.):

Applicability: This success criteria is applicable to all authoring tools.

Technique B.2.1-2.1 [Sufficient]: Consistently labeling help documents or other documentation that, if followed exactly by the author, would lead to content being created or modified in a way that does not meet the content type-specific WCAG benchmark.

Technique B.2.1-2.2 [Sufficient]: Removing any instruction text that, if followed exactly by the author, would lead to content being created or modified in a way that does not meet the content type-specific WCAG benchmark.

ATAG Checkpoint B.2.2: Check for and inform the author of accessibility problems. [Relative Priority] (Rationale | Note)

Executive Summary:

Despite prompting assistance from the tool (see Checkpoint B.2.1), accessibility problems may still be introduced. For example, the author may cause accessibility problems by hand coding or by opening content with existing accessibility problems for editing. In these cases, the prompting and assistance mechanisms that operate when markup is added or edited (i.e. insertion dialogs and property windows) must be backed up by a more general checking system that can detect and alert the author to problems anywhere within the content (e.g. attribute, element, programmatic object, etc.). It is preferable that these checking mechanisms be well integrated with correction mechanisms (see Checkpoint 3.3), so that when the checking system detects a problem and informs the author, the tool can immediately offer assistance to the author.

Implementation Notes: During implementation of this checkpoint, consideration should be given to the promotion and integration of the accessibility solutions involved as required by Guideline B.3 of the guidelines. In particular, accessibility prompting:

Techniques for Success Criteria 1 (An individual check must be associated with each requirement in the content type benchmark document (i.e. not blanket statements such as "does the content meet all the requirements").):

Applicability: This success criteria is not applicable to authoring tools that are not capable of introducing accessibility problems (e.g. tools that highly constrain author actions).

Technique B.2.2-1.1 [Sufficient]: Providing at least one check for each requirement in the content type benchmark document (see Note, Levels of Checking Automation).
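One way to confirm that every benchmark requirement has at least one check is to register checks against requirement identifiers and report any requirement that is still uncovered. In this sketch the requirement IDs are hypothetical placeholders, not taken from any real benchmark, and `img_alt_present` is a deliberately crude stand-in check.

```python
from typing import Callable, Dict, List

Check = Callable[[str], List[str]]  # takes content, returns problem messages
CHECKS: Dict[str, List[Check]] = {}


def check_for(requirement_id: str):
    """Decorator registering a check against one benchmark requirement."""
    def register(func: Check) -> Check:
        CHECKS.setdefault(requirement_id, []).append(func)
        return func
    return register


@check_for("req-text-alternatives")
def img_alt_present(content: str) -> List[str]:
    # Crude placeholder: a real check would parse the markup.
    return ["img without alt"] if "<img" in content and "alt=" not in content else []


def uncovered(benchmark: List[str]) -> List[str]:
    """Requirements in the benchmark that have no registered check yet."""
    return [req for req in benchmark if not CHECKS.get(req)]


print(uncovered(["req-text-alternatives", "req-color-contrast"]))  # ['req-color-contrast']
```

Running `uncovered` as part of a tool's build or test suite turns the "one check per requirement" criterion into something that fails visibly when a new requirement is added without a check.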

Techniques for Success Criteria 2 (For checks that are associated with a type of element (e.g. img), each element instance must be individually identified as potential accessibility problems. For checks that are relevant across multiple elements (e.g. consistent navigation, etc.) or apply to most or all elements (e.g. background color contrast, reading level, etc.), the entire span of elements must be identified as potential accessibility problems, up to the entire content if applicable.):

Applicability: This success criteria is not applicable to authoring tools that are not capable of introducing accessibility problems (e.g. tools that highly constrain author actions).

Technique B.2.2-2.1 [Advisory]: Identifying elements (e.g. by line number in code view or by highlighting their renderings in a WYSIWYG view).

Technique B.2.2-2.2 [Sufficient]: Displaying manual checks in a way that balances the need for the author to make specific changes to some kinds of content (e.g. non-text objects, acronyms, table cells, etc.) while not overwhelming the author with numerous manual checks for other kinds of content that can be checked more generally (e.g. background color contrast, reading level, etc.).
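Identifying each element instance (Technique B.2.2-2.1) can be sketched for one element-level check. Here every `img` lacking an `alt` attribute is reported with its own line and 0-based column, which a code view could use for highlighting. The sketch uses only Python's `html.parser`; the report format is an assumption.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    def __init__(self):
        super().__init__()
        self.instances = []  # one (line, column) entry per offending element

    def handle_starttag(self, tag, attrs):
        if tag == "img" and "alt" not in dict(attrs):
            # getpos() gives the position of the tag currently being handled.
            self.instances.append(self.getpos())


def find_missing_alt(markup: str):
    finder = MissingAltFinder()
    finder.feed(markup)
    finder.close()
    return finder.instances


page = '<p><img src="a.png"></p>\n<img src="b.png" alt="Logo">\n  <img src="c.png">'
for line, col in find_missing_alt(page):
    print(f"line {line}, column {col}: img has no alt attribute")
```

Reporting positions rather than a single "images lack alt" message lets the tool highlight each rendering separately in a WYSIWYG view as well.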

Techniques for Success Criteria 3 (If the authoring tool relies on author judgment to determine if a potential accessibility problem is correctly identified, then the message to the author must be tailored to that potential accessibility problem (i.e. to that requirement in the context of that element or span of elements).):

Applicability: This success criteria is not applicable to authoring tools that are not capable of introducing accessibility problems (e.g. tools that highly constrain author actions).

Technique B.2.2-3.1 [Sufficient]: Wording the prompts shown to the author when checking for a potential problem so that they support author judgment by answering the following questions: "What part of the content should be examined?" and "What should be present or absent if the problem exists?".
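A prompt template that answers both questions for one element might look like the following sketch. It assumes the checker already knows the element, its location, and the requirement being tested; the `PotentialProblem` record and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PotentialProblem:
    element: str       # the element as shown to the author, e.g. 'img src="chart.png"'
    line: int          # where the element is, so it can be highlighted
    examine: str       # answers "What part of the content should be examined?"
    problem_sign: str  # answers "What should be present or absent if the problem exists?"


def judgment_prompt(p: PotentialProblem) -> str:
    """Build a message tailored to one requirement and one element."""
    return (
        f"Line {p.line}: examine {p.examine} of <{p.element}>. "
        f"There is a problem if {p.problem_sign}."
    )


print(judgment_prompt(PotentialProblem(
    element='img src="sales-2005.png"',
    line=12,
    examine="the alt text",
    problem_sign="it does not convey the trend shown in the chart",
)))
```

Keeping the two answers as separate fields makes it hard for a check author to fall back on a blanket message such as "does the content meet all the requirements".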

Techniques for Success Criteria 4 (The authoring tool must present checking as an option to the author at or before the completion of authoring.):

Applicability: This success criteria is not applicable to authoring tools that are not capable of introducing accessibility problems (e.g. tools that highly constrain author actions).

Technique B.2.2-4.1 [Sufficient]: Providing accessibility checking as an action (e.g. as a menu item, etc.) at all times.

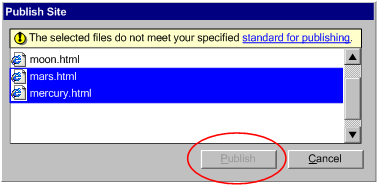

Technique B.2.2-4.2 [Sufficient]: If accessibility checking is not always available as an action, prompting the author to perform an accessibility check if the author chooses to close or publish the content.

General Techniques for Checkpoint B.2.2:

Applicability: Techniques in this section are not necessarily applicable to any one success criteria, and may apply more generally to the checkpoint as a whole.

Technique B.2.2-0.1 [Advisory]: Automating as much checking as possible. Where necessary, providing semi-automated checking. Where neither of these options is reliable, providing manual checking (see Levels of Checking Automation).

Technique B.2.2-0.2 [Advisory]: Consulting the Techniques For Accessibility Evaluation and Repair Tools [WAI-ER @@change to AERT@@] Public Working Draft for evaluation and repair algorithms related to WCAG 1.0.

Technique B.2.2-0.3 [Advisory]: For certain types of programmatic content, enabling checking by rendering or executing the content as part of the checking process. This may apply to manual as well as automated or semi-automated checking processes.

Technique B.2.2-0.4 [Advisory]: For tools that allow authors to create their own templates, advising the author that templates should be held to a high accessibility standard, since they will be repeatedly reused. Enhancing this by making accessibility checks mandatory before saving templates (see Checkpoint B.2.2).

Levels of Checking Automation:

This list is representative but not complete.

(a) Automated Checking:

In automated checking, the tool is able to check for accessibility problems automatically, with no human intervention required. This type of check is usually appropriate for checks of a syntactic nature, such as the use of deprecated elements or a missing attribute, in which the meaning of text or images does not play a role.
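A purely syntactic check of this kind needs no author involvement. The sketch below flags presentational elements that HTML 4.01 deprecates; the set is an illustrative subset of that specification's deprecated elements, not the full list.

```python
from html.parser import HTMLParser

# Illustrative subset of the elements deprecated in HTML 4.01.
DEPRECATED = {"basefont", "center", "font", "strike", "u"}


class DeprecatedElementCheck(HTMLParser):
    def __init__(self):
        super().__init__()
        self.found = []  # (line, tag) for every deprecated element

    def handle_starttag(self, tag, attrs):
        if tag in DEPRECATED:
            self.found.append((self.getpos()[0], tag))


def check_deprecated(markup: str):
    checker = DeprecatedElementCheck()
    checker.feed(markup)
    checker.close()
    return checker.found


print(check_deprecated('<center><font color="red">Hi</font></center>\n<p>ok</p>'))
```

No judgment about the meaning of the content is involved: the presence of the element alone decides the result, which is what makes full automation reliable here.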

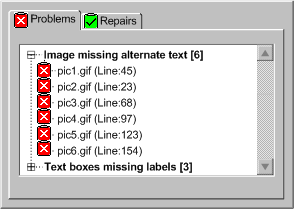

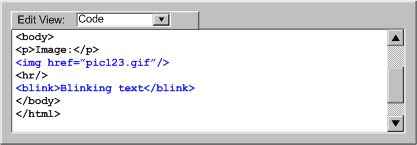

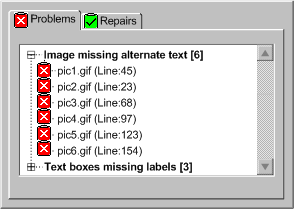

Example (a1): This illustration shows a summary interface for a code-based authoring tool that displays the results of an automated check. (Source: mockup by AUWG)


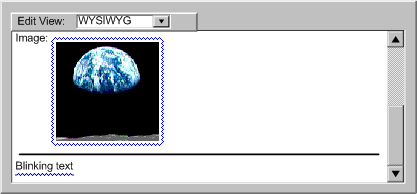

Example (a2): This illustration shows an interface that displays the results of an automated check in a WYSIWYG authoring view using blue squiggly highlighting around or under rendered elements, identifying accessibility problems for the author to correct. (Source: mockup by AUWG)


Example (a3): This illustration shows the results of an automated check in a code-level authoring view. In this view, the text of elements with accessibility problems is shown in a blue font instead of the default black font. (Source: mockup by AUWG)


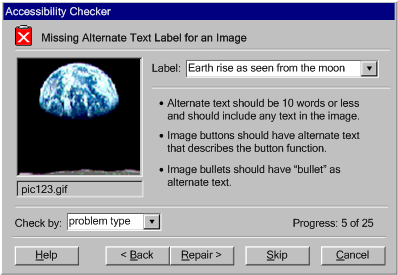

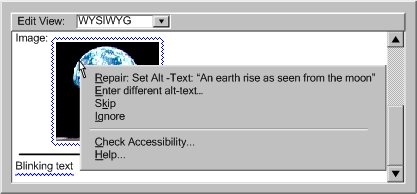

(b) Semi-Automated Checking:

In semi-automated checking, the tool is able to identify potential problems, but still requires human judgment by the author to make a final decision on whether an actual problem exists. Semi-automated checks are usually most appropriate for problems that are semantic in nature, such as descriptions of non-text objects, as opposed to purely syntactic problems, such as missing attributes, that lend themselves more readily to full automation.
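A semi-automated check narrows the field without making the decision. In this sketch, alt text that looks like a file name or a generic placeholder word is queued for the author to confirm rather than marked as an error. The placeholder word list and the file-name pattern are assumptions for illustration.

```python
import re
from html.parser import HTMLParser

PLACEHOLDERS = {"image", "picture", "graphic", "photo", "spacer"}
FILE_NAME = re.compile(r"\.(gif|jpe?g|png|svg)$", re.IGNORECASE)


class SuspiciousAltCheck(HTMLParser):
    def __init__(self):
        super().__init__()
        self.to_confirm = []  # (line, src, alt): the author decides if each is a real problem

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = dict(attrs)
        alt = (a.get("alt") or "").strip()
        # Missing or empty alt is left to the automated check; only doubtful text is queued.
        if alt.lower() in PLACEHOLDERS or FILE_NAME.search(alt):
            self.to_confirm.append((self.getpos()[0], a.get("src"), alt))


def alt_text_to_confirm(markup: str):
    checker = SuspiciousAltCheck()
    checker.feed(markup)
    checker.close()
    return checker.to_confirm


sample = ('<img src="dog.jpg" alt="dog.jpg">\n'
          '<img src="a.png" alt="Image">\n'
          '<img src="b.png" alt="A brown dog">')
print(alt_text_to_confirm(sample))
```

The third image is not queued, but that does not prove its text is adequate; the check only directs the author's attention to the most likely problems.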

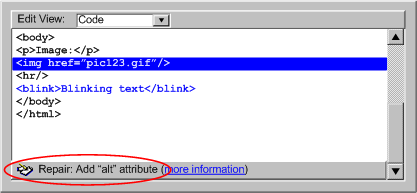

(c) Manual Checking:

In manual checking, the tool provides the author with instructions for detecting a problem, but does not automate the task of detecting the problem in any meaningful way. As a result, the author must decide on their own whether or not a problem exists. Manual checks are discouraged because they are prone to human error, especially when the type of problem in question may be easily detected by a more automated utility, such as an element missing a particular attribute.

ATAG Checkpoint B.2.3: Assist authors in repairing accessibility problems. [Relative Priority] (Rationale | Note)

Executive Summary of Techniques:

Once a problem has been detected by the author or, preferably, by the tool (see Checkpoint B.2.2), the tool may assist the author to correct the problem. As with accessibility checking, the extent to which accessibility correction can be automated depends on the nature of the particular problems. Some repairs are easily automated, whereas others that require human judgment may be semi-automated at best.

Implementation Notes: During implementation of this checkpoint, consideration should be given to the promotion and integration of the accessibility solutions involved as required by Guideline B.3 of the guidelines. In particular, accessibility prompting:

Techniques for Success Criteria 1 (For each potential accessibility problem identified by the checking function (required in checkpoint B.2.2) provide at least one of the following: repair instructions, for the author to follow, that are specific to the accessibility problem; or an automated repair or semi-automated repair mechanism.):

Applicability: This success criteria is not applicable to authoring tools that are not capable of introducing accessibility problems (e.g. tools that highly constrain author actions).

Technique B.2.3-1.1 [Advisory]: Automate as much repairing as possible. Where necessary provide semi-automated repairing. Where neither of these options is reliable, provide manual repairing.

Technique B.2.3-1.2 [Advisory]: Using a degree of automation suited to each problem: automating as much repairing as possible, providing semi-automated repairing where necessary, and providing manual repairing where neither of these options is reliable.

Technique B.2.3-1.3 [Advisory]: Implement a special-purpose correcting interface where appropriate. When problems require some human judgment, the simplest solution is often to display the property editing mechanism for the offending element. This has the advantage that the author is already somewhat familiar with the interface. However, this practice suffers from the drawback that it does not necessarily focus the author's attention on the dialog control(s) that are relevant to the required correction. Another option is to display a special-purpose correction utility that includes only the input field(s) for the information currently required. A further advantage of this approach is that additional information and tips that the author may require in order to properly provide the requested information can be easily added. Notice that in the figure, a drop-down edit box has been used for the short text label field. This technique might be used to allow the author to select from text strings used previously for the alt-text of this image (see ATAG Checkpoint 3.5 for more).

Technique B.2.3-1.4 [Advisory]: Checks can be automatically sequenced. In cases where there are likely to be many accessibility problems, it may be useful to implement a checking utility that presents accessibility problems and repair options in a sequential manner. This may take a form similar to a configuration wizard or a spell checker (see Figure 3.3.5). In the case of a wizard, a complex interaction is broken down into a series of simple sequential steps that the author can complete one at a time. The later steps can then be updated "on-the-fly" to take into account the information provided by the author in earlier steps. A checker is a special case of a wizard in which the number of detected errors determines the number of steps. For example, word processors have checkers that display all the spelling problems one at a time in a standard template with places for the misspelled word, a list of suggested words, and a "change to" word. The author also has correcting options, some of which can store responses to affect how the same situation is handled later. In an accessibility problem checker, sequential prompting is an efficient way of correcting problems. However, because of the wide range of problems the checker needs to handle (e.g. missing text, missing structural information, improper use of color, etc.), the interface template will need to be even more flexible than that of a spell checker. Nevertheless, the template is still likely to include areas for identifying the problem (WYSIWYG or code-based according to the tool), suggesting multiple solutions, and choosing between or creating new solutions. In addition, the dialog may include context-sensitive instructive text to help the author with the current correction.

@@TB: Author should know what is the sequencing criteria@@
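The spell-checker style interaction in Technique B.2.3-1.4 can be sketched as a loop that presents one problem per step, applies the author's choice, and remembers "apply to all" answers for later instances of the same problem type. The `Problem` fields, the answer format, and the `ask` callback standing in for the dialog are hypothetical. In line with Technique B.2.3-1.5, a tool would not offer "apply to all" for equivalent alternatives whose function is not known with certainty.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Problem:
    kind: str               # e.g. "deprecated-font"
    location: int           # line number, used to show the problem in context
    suggestions: List[str]  # candidate repairs offered to the author


Answer = Tuple[str, bool]   # (chosen repair or "skip", apply to all of this kind?)


def run_checker(problems: List[Problem], ask: Callable[[Problem], Answer]) -> Dict[int, str]:
    remembered: Dict[str, str] = {}
    repairs: Dict[int, str] = {}
    for problem in problems:  # one step per detected problem
        if problem.kind in remembered:
            choice = remembered[problem.kind]  # stored response, no dialog shown
        else:
            choice, apply_to_all = ask(problem)
            if apply_to_all:
                remembered[problem.kind] = choice
        if choice != "skip":
            repairs[problem.location] = choice
    return repairs
```

With a real interface, `ask` would display the problem in context together with its suggestions; here it can be any function returning an `Answer`.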

Technique B.2.3-1.5 [Advisory]: Where a tool is able to detect site-wide errors, allow the author to make site-wide corrections. This should not be used for equivalent alternatives when the function is not known with certainty (see ATAG Checkpoint B.2.4).

Technique B.2.3-1.6 [Advisory]: Provide a mechanism for authors to navigate sequentially among uncorrected accessibility errors. This allows the author to quickly scan accessibility problems in context.

Technique B.2.3-1.7 [Advisory]: Consult the Techniques For Accessibility Evaluation and Repair Tools [WAI-ER @@change to AERT@@] Public Working Draft document for evaluation and repair algorithms related to WCAG 1.0.

Levels of Repair Automation:

This list is representative, but not complete.

(a) Repair Instructions: In manual repairing, the tool provides the author with instructions for making the necessary correction, but does not automate the task in any substantial way. For example, the tool may move the cursor to the start of the problem, but since this is not a substantial automation, the repair would still be considered "manual". Manual correction tools leave it up to the author to follow the instructions and make the repair by themselves. This is the most time-consuming option for authors and leaves the most room for human error.

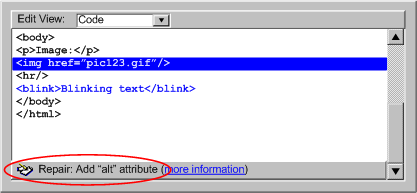

Example B.2.3.1(a): This illustration shows a sample manual repair. The problems have already been detected in the checking step and the selected offending elements in a code view have been highlighted. However, when it comes to repairing the problem, the only assistance that the tool provides is a context-sensitive hint. The author is left to make sense of the hint and perform the repair without any automated assistance. (Source: mockup by AUWG)


(b) Semi-Automated: In semi-automated repairing, the tool can provide some automated assistance to the author in performing corrections, but the author's input is still required before the repair is complete. For example, the tool may prompt the author for a plain text string, but then be capable of handling all the markup required to add the text string to the content. In other cases, the tool may be able to narrow the choice of repair options, but still rely on the author to make the final selection. This type of repair is usually appropriate for corrections of a semantic nature.
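The division of labour described above, where the author supplies plain text and the tool writes the markup, might look like this for a missing `alt` attribute. The sketch operates on a single `img` start tag held as a string, which is adequate only for simple, well-formed tags; a real tool would edit its own document tree. The function name is hypothetical.

```python
import html
import re


def add_alt(img_tag: str, author_text: str) -> str:
    """Insert author-supplied text as an escaped alt attribute on one <img> start tag."""
    if not re.match(r"<img\b", img_tag, re.IGNORECASE):
        raise ValueError("expected a single <img> start tag")
    if re.search(r"(?<![\w-])alt\s*=", img_tag, re.IGNORECASE):
        raise ValueError("tag already has an alt attribute")
    # The author never has to think about quoting: the tool escapes the text.
    escaped = html.escape(author_text, quote=True)
    return f'{img_tag[:4]} alt="{escaped}"{img_tag[4:]}'


print(add_alt('<img src="chart.png">', 'Sales "up" 10%'))
```

Escaping on the tool side matters because plain text from the author may contain quotes or ampersands that would otherwise break the attribute.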

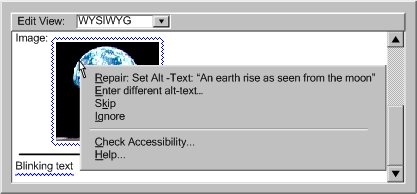

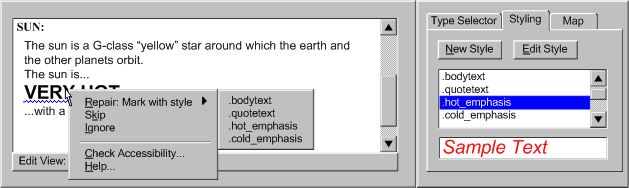

Example B.2.3.1(b): This illustration shows a sample of a semi-automated repair in a WYSIWYG editor. The author has right-clicked on an image highlighted by the automated checker system. The author must then decide whether the label text that the tool suggests is appropriate. Whichever option the author chooses, the tool will handle the details of updating the content. (Source: mockup by AUWG)


(c) Automated: In automated repairing, the tool is able to make repairs automatically, with no author input required. For example, a tool may be capable of automatically adding a document type declaration to the top of a file that lacks this information. In these cases, very little, if any, author notification is required. This type of repair is usually appropriate for corrections of a syntactic or repetitive nature.
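The document type example can be sketched as a fully automated repair that also returns a short notice, so that the tool can show an announcement with an "undo" option as in Example B.2.3.1(c). Which declaration to insert is an assumption here (HTML 4.01 Strict); a real tool would use the declaration for the content type being authored.

```python
HTML401_STRICT = (
    '<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01//EN" '
    '"http://www.w3.org/TR/html4/strict.dtd">'
)


def ensure_doctype(source: str, doctype: str = HTML401_STRICT):
    """Return (repaired source, notice); the notice is None when nothing changed."""
    if source.lstrip().lower().startswith("<!doctype"):
        return source, None
    # The original `source` is still available to the caller for an undo.
    return doctype + "\n" + source, "Added a document type declaration."
```

Because the repair is idempotent (running it twice changes nothing the second time), it is safe to apply silently on every save.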

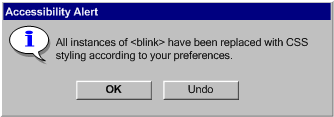

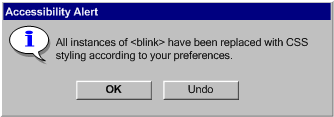

Example B.2.3.1(c): This illustration shows a sample of an announcement that an automated repair has been completed. An "undo" button is provided in case the author wishes to reverse the operation. In some cases, automated repairs might be completed with no author notification at all. (Source: mockup by AUWG)


ATAG Checkpoint B.2.4: Do not automatically generate equivalent alternatives or reuse previously authored alternatives without author confirmation, except when the function is known with certainty. [Priority 1] (Rationale)

Techniques for Success Criteria 1 (The tool must never use automatically generated equivalent alternatives for a non-text object (e.g. a short text label generated from the file name of the object).):

Applicability: This success criteria is not applicable to authoring tools that do not support non-text objects.

Technique B.2.4-1.1 [Sufficient]: Leaving out the relevant markup for the equivalent alternative (e.g. attribute, element, etc.), rather than including the markup with no value or with automatically generated content, if the author has not specified an equivalent alternative. Leaving out the markup increases the probability that the problem will be detected by checking algorithms.
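In code, Technique B.2.4-1.1 means that the generated tag simply has no `alt` attribute when the author has supplied nothing, so a later missing-alt check still finds it. In this sketch `None` means "not specified", which is kept distinct from an empty string the author chose deliberately (for example, for a decorative image). The function name is hypothetical.

```python
import html
from typing import Optional


def img_markup(src: str, alt: Optional[str] = None) -> str:
    attrs = [f'src="{html.escape(src, quote=True)}"']
    if alt is not None:
        # Only emit alt when the author actually supplied a value,
        # including an intentionally empty one.
        attrs.append(f'alt="{html.escape(alt, quote=True)}"')
    # Never fall back to the file name or an empty placeholder.
    return "<img " + " ".join(attrs) + ">"


print(img_markup("logo.png"))          # <img src="logo.png">
print(img_markup("logo.png", "Acme"))  # <img src="logo.png" alt="Acme">
```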

Techniques for Success Criteria 2 (When a previously authored equivalent alternative is available (i.e. created by the author, pre-authored content developer, etc.), the tool must prompt the author to confirm insertion of the equivalent unless the function of the non-text object is known with certainty (e.g. "home button" on a navigation bar, etc.).):

Applicability: This success criteria is not applicable to authoring tools that do not automatically insert any equivalent alternatives (including empty values).

Technique B.2.4-2.1 [Sufficient]: If human-authored equivalent alternatives are available for an object that is being inserted (through a management functionality (see Checkpoint 3.5) or equivalent alternatives bundled with pre-authored content (see Checkpoint 2.6)), then offering the alternatives to the author as default text, requiring confirmation. Confirmation is not required (i.e. the alternatives may be inserted directly) when the function of the object is known with certainty, as in the following situations: (a) the tool totally controls its use (e.g. a button graphic on a generated tool bar); (b) the author has linked the current object instance to the same URI(s) as the object was linked to when the equivalent alternative was stored; or (c) there is semantic role information stored for the object that matches that for the stored alternative.
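The decision in Technique B.2.4-2.1 can be expressed as a small function in which each certainty condition, (a) to (c), maps to one test. The `StoredAlternative` and `ObjectUse` records and their fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class StoredAlternative:
    text: str
    linked_uris: Set[str] = field(default_factory=set)  # links at the time it was stored
    role: Optional[str] = None                           # stored semantic role, if any


@dataclass
class ObjectUse:
    tool_controlled: bool = False
    linked_uris: Set[str] = field(default_factory=set)
    role: Optional[str] = None


def needs_confirmation(use: ObjectUse, stored: StoredAlternative) -> bool:
    """True unless the object's function is known with certainty."""
    known = (
        use.tool_controlled                                                   # (a)
        or (bool(use.linked_uris) and use.linked_uris == stored.linked_uris)  # (b)
        or (use.role is not None and use.role == stored.role)                 # (c)
    )
    return not known
```

When `needs_confirmation` returns `True`, the stored text is still offered, but only as editable default text that the author must accept.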

Technique B.2.4-2.2 [Advisory]: If a non-text object has already been used in the same document, offering the alternative information that was supplied for the first or most recent use as a default.

Technique B.2.4-2.3 [Advisory]: If the author changes the alternative equivalent for a non-text object, asking the author whether all instances of the object with the same known function should also be updated.

ATAG Checkpoint B.2.5: Provide functionality for managing, editing, and reusing alternative equivalents. [Priority 3] (Rationale)

Techniques for Success Criteria 1 (The authoring tool must always keep a record of alternative equivalents that the author inserts for particular non-text objects in a way that allows the text equivalent to be offered back to the author for modification and re-use if the same non-text object is reused.):

Applicability: This success criteria is not applicable to authoring tools that do not support non-text objects.

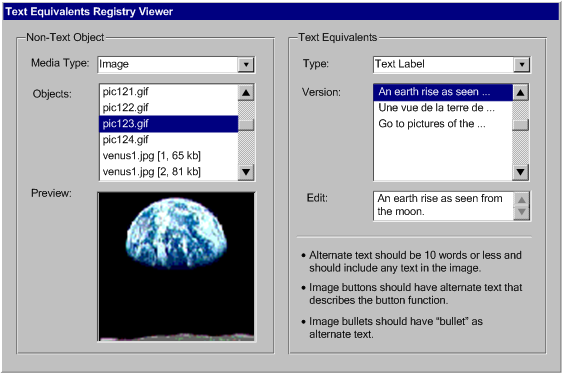

Technique B.2.5-1.1 [Sufficient]: Maintaining a registry that associates object identity information with alternative information (e.g. making use of the Resource Description Framework (RDF) [RDF10]). Whenever an object is used and an equivalent alternative is collected, via prompting (see Checkpoint B.2.1) or repair (see Checkpoint B.2.3), the object's identifying information and the alternative information are added to the registry. The stored alternative information is presented back to the author as default text in the appropriate field whenever the associated object is inserted (see also Checkpoint B.2.4).
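A minimal in-memory version of such a registry is sketched below. It assumes object identity is a SHA-256 hash of the object's bytes, so the same image is recognized even under a different file name, and it stands in for the RDF-based storage mentioned above. Persistence and sharing between authors are out of scope, and the class name is hypothetical.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Optional


class AlternativesRegistry:
    """Hypothetical store mapping object identity to alternatives the author wrote."""

    def __init__(self) -> None:
        self._entries: Dict[str, List[str]] = defaultdict(list)

    @staticmethod
    def identity(data: bytes) -> str:
        return hashlib.sha256(data).hexdigest()

    def record(self, data: bytes, alternative: str) -> None:
        # Called whenever prompting or repair collects an alternative.
        entries = self._entries[self.identity(data)]
        if alternative in entries:
            entries.remove(alternative)  # move it to the end as the most recent
        entries.append(alternative)

    def default_for(self, data: bytes) -> Optional[str]:
        # Most recent alternative, to be offered as editable default text.
        entries = self._entries.get(self.identity(data))
        return entries[-1] if entries else None
```

Keeping a list per object, rather than a single value, also leaves room for several versions of alternative information per object.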

Technique B.2.5-1.2 [Advisory]: Providing an entry editing capability (see Example B.2.5-1.2).

Technique B.2.5-1.3 [Advisory]: Extending the registry for equivalent alternatives to store synchronized alternatives for multimedia (e.g. captions or audio descriptions for video).

Technique B.2.5-1.4 [Advisory]: Allowing several different versions of alternative information to be associated with a single object.

Technique B.2.5-1.5 [Advisory]: Ensuring that the stored alternative information required for pre-authored content (see Checkpoint 2.6) is made interoperable with the management system to allow the alternative equivalents to be retrieved whenever the pre-authored content is inserted.

Technique B.2.5-1.6 [Advisory]: Using the stored alternatives to support keyword searches of the object database (to simplify the task of finding relevant images, sound files, etc.). A paper describing a method to create searchable databases for video and audio files is available (refer to [SEARCHABLE]).

Technique B.2.5-1.7 [Advisory]: Allowing the equivalent alternatives registry to be made shareable between authors.

ATAG Checkpoint B.2.6: Provide the author with a summary of accessibility status. [Priority 3] (Rationale)

Techniques for Success Criteria 1 (The authoring tool must provide an option to view a list of all known accessibility problems (i.e. detected by automated checking or identified by the author) prior to completion of authoring.):

Applicability: This success criteria is not applicable to authoring tools that are not capable of producing Web content with accessibility problems (e.g. a tool that uses limited high-level author input to automatically produce dependably accessible content).

Technique B.2.6-1.1 [Sufficient]: Providing, at all times, the option to view a single consolidated list of all of the accessibility problems that are detected by the checking function (see Checkpoint B.2.2), organized by problem type and number of instances.
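A consolidated list organized by problem type and number of instances can be produced by grouping the checker's findings. In this sketch the `(problem type, line)` finding format is an assumption; types with the most instances are listed first.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def accessibility_summary(findings: Iterable[Tuple[str, int]]) -> List[str]:
    """Group (problem type, line) findings into one summary row per type."""
    findings = list(findings)
    counts = Counter(kind for kind, _ in findings)
    rows = []
    for kind, count in counts.most_common():
        lines = sorted(line for k, line in findings if k == kind)
        rows.append(f"{kind}: {count} instance(s) at line(s) {', '.join(map(str, lines))}")
    return rows


for row in accessibility_summary([("missing alt", 4), ("deprecated element", 2), ("missing alt", 1)]):
    print(row)
```

Each row could then carry the direct links to help and repair assistance suggested in Technique B.2.6-1.3.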

Technique B.2.6-1.2 [Advisory]: Storing accessibility status information in an interoperable form (e.g. using Evaluation and Repair Language [EARL]).

Technique B.2.6-1.3 [Advisory]: Providing direct links to additional help and repair assistance from the list of accessibility problems.

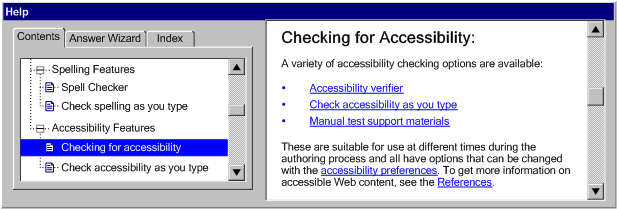

ATAG Checkpoint B.2.7: Document in the help system all features of the tool that support the production of accessible content. [Priority 2] (Rationale)

Implementation Notes: During implementation of this checkpoint, consideration should be given to the promotion and integration of the accessibility solutions involved as required by Guideline B.3 of the guidelines. In particular, accessibility prompting:

Techniques for Success Criteria 1 (All features that play a role in creating accessible content must be documented in the help system.):

Applicability: This success criteria is not applicable to authoring tools that are not capable of producing Web content with accessibility problems (e.g. a tool that uses limited high-level author input to automatically produce dependably accessible content).

Technique B.2.7-1.1 [Sufficient]: Ensuring that the help system answers the question "What features of the tool encourage the production of accessible content?" with reference to features that meet Checkpoints B.2.1, B.2.2, and B.2.3. Also ensuring that, for each feature identified, the help system answers the question "How are these features operated?".

Technique B.2.7-1.2 [Advisory]: Providing direct links from the features that meet Checkpoints B.2.1, B.2.2, and B.2.3 to context-sensitive help on how to operate the features.

Technique B.2.7-1.3 [Advisory]: Providing direct links from within the accessibility related documentation that launch the relevant accessibility features.

ATAG Checkpoint B.2.8: Ensure that accessibility is demonstrated in all documentation and help, including examples. [Priority 3] (Rationale)

Techniques for Success Criteria 1 (All examples of markup and screenshots of the authoring tool user interface that appear in the documentation and help must demonstrate accessible Web content.):

Applicability: This success criteria is not applicable to authoring tools that are not capable of producing Web content with accessibility problems (e.g. a tool that uses limited high-level author input to automatically produce dependably accessible content).

Technique B.2.8-1.1 [Sufficient]: Ensuring that all examples of code pass the tool's own accessibility checking mechanism (see Checkpoint B.2.2) (see Example B.2.8-1.1). Also ensuring that all screenshots of the authoring interface show the authoring interface in a state that corresponds to full and proper use of the accessibility features of the tool (e.g. prompts filled in, optional accessibility features turned on, etc.).

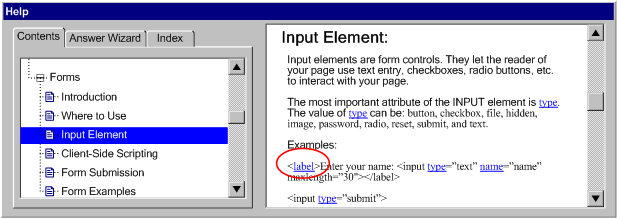

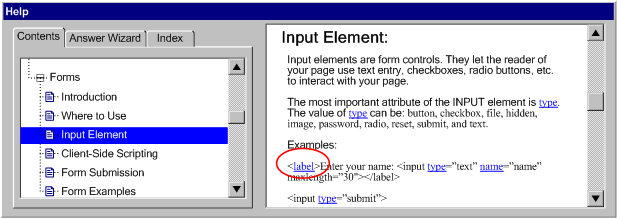


Example B.2.8-1.1: This illustration shows how the documentation for the input element in this code-level authoring tool makes use of the label element in order to reinforce the routine nature of the pairing. (Source: mockup by AUWG)


Technique B.2.8-1.2 [Advisory]: Providing, in the documentation, at least one model of each accessibility practice in the relevant WCAG techniques document for each language supported by the tool, including all levels of accessibility practices.

Technique B.2.8-1.3 [Advisory]: Ensuring that plug-ins that update the accessibility features of a tool also update the documentation examples.

ATAG Checkpoint B.2.9: Provide a tutorial on the process of accessible authoring. [Priority 3] (Rationale)

Techniques for Success Criteria 1 (A tutorial on the accessible authoring process for the specific authoring tool must be provided.):

Applicability: This success criteria is not applicable to authoring tools that are not capable of producing Web content with accessibility problems (e.g. a tool that uses limited high-level author input to automatically produce dependably accessible content).

Technique B.2.9-1.1 [Sufficient]: Providing a tutorial that contains a method for using the authoring tool to increase the accessibility of Web content. The tutorial begins at the typical starting point for the tool (e.g. empty document) and describes how accessibility prompting and assistance can be used as content is being added or modified. The tutorial also covers when and how checking and repair should be performed.

Technique B.2.9-1.2 [Advisory]: Ensuring that wherever rationales appear, they avoid referring to accessibility features as being exclusively for particular groups (e.g. "for blind authors"). Instead, they emphasize the importance of accessibility for a wide range of content consumers, from those with disabilities to those with alternative viewers (see "Auxiliary Benefits of Accessibility Features", a W3C-WAI resource).

Technique B.2.9-1.3 [Advisory]: Providing a dedicated accessibility section.

Technique B.2.9-1.4 [Advisory]: Providing context-sensitive help definitions for accessibility-related terms.

Technique B.2.9-1.5 [Advisory]: Including pointers to more information on accessible Web authoring, such as WCAG and other accessibility-related resources.

Technique B.2.9-1.6 [Advisory]: Including pointers to relevant content type specifications. This is particularly relevant for languages that are easily hand-edited, such as most XML languages.

Technique B.2.9-1.7 [Advisory]: Calling the author's attention to accessibility-related idiosyncrasies of the tool compared to other authoring tools that create the same kind of content (e.g. that content must be saved before an automatic accessibility check becomes active).

Guideline B.3: Promote and integrate accessibility solutions

This guideline requires that authoring tools promote accessible authoring practices within the tool and smoothly integrate any functions added to meet the other requirements in this document.

ATAG Checkpoint B.3.1: Ensure that the most accessible option for an authoring task is given priority. [Priority 2] (Rationale)

Techniques for Success Criteria 1 (When the author has more than one authoring option for a given task (e.g. the color of text can be changed with presentation markup or style sheets), any options that conform to WCAG must have equal or higher prominence than any options that do not.):

Applicability: This success criteria is not applicable to authoring tools that have only one method for performing each task.

Technique B.3.1-1.1 [Sufficient]: Ensuring that, if there are two or more authoring options for performing the same authoring task (e.g. setting color, inserting multimedia, etc.) and one option results in content that agrees with the relevant content type-specific WCAG benchmark while the other does not, the more accessible option is given greater prominence in the authoring interface (see Example B.3.1-1.1).

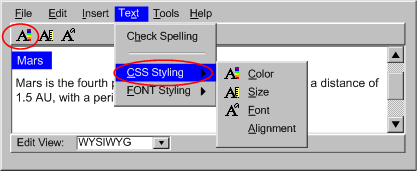

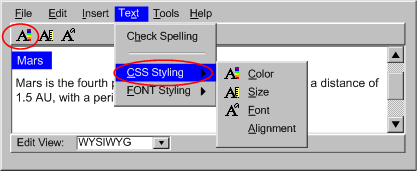

Example B.3.1-1.1: This illustration shows an authoring tool that supports two methods for setting text color: using CSS and using the FONT element. Since using CSS is the more accessible option, it is given higher prominence within the authoring interface by: (1) the "CSS Styling" option appearing above the "FONT Styling" option in the drop-down Text menu, and (2) the CSS styling option being used to implement the one-click text color formatting button in the toolbar. (Source: mockup by AUWG)

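The ordering in Example B.3.1-1.1 can be sketched as a stable sort that moves options meeting the benchmark ahead of those that do not, with the toolbar's one-click button bound to whichever option ends up first. `AuthoringOption`, its `meets_benchmark` flag and both functions are hypothetical; how a tool decides that an option agrees with the content type-specific WCAG benchmark is outside this sketch.

```python
from dataclasses import dataclass


@dataclass
class AuthoringOption:
    label: str
    meets_benchmark: bool  # hypothetical flag: output agrees with the WCAG benchmark


def order_by_prominence(options):
    """Place options that meet the benchmark first (e.g. higher in a menu).

    sorted() is stable, so options keep their original relative order
    within each group.
    """
    return sorted(options, key=lambda option: not option.meets_benchmark)


def toolbar_default(options):
    """The one-click toolbar button is bound to the most prominent option."""
    return order_by_prominence(options)[0]
```

Sorting on the boolean keeps the rule simple: no accessible option can ever appear below a less accessible one, which is exactly what Success Criteria 1 asks for.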

Technique B.3.1-1.2 [Sufficient]: Completely removing less accessible options simplifies the task of meeting this success criteria.

Techniques for Success Criteria 2 (Any choices of content types or authoring practices presented to the author (e.g., in menus, toolbars or dialogs) that will lead to the creation of content that does not conform to WCAG must be marked or labeled so that the author is aware of the consequences prior to making the choice.):

Applicability: This success criteria is not applicable to authoring tools that do not provide choices that will lead directly to accessibility problems.

Technique B.3.1-2.1 [Sufficient]: Ensuring that, when an option will lead directly to content being created using a content type for which there is not a content type-specific WCAG benchmark or content that does not agree with the relevant content type-specific WCAG benchmark, the author is warned (e.g. by a warning message, by an informational icon, etc.).
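A minimal sketch of Technique B.3.1-2.1, assuming each menu choice knows its output content type and whether its output would agree with the benchmark. The warning covers both cases named in the technique and is attached to the menu text itself, so the author sees it before choosing. `BENCHMARKED_TYPES`, `Choice` and the message wording are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical: content types for which a content type-specific WCAG benchmark exists.
BENCHMARKED_TYPES = {"text/html", "image/svg+xml"}


@dataclass
class Choice:
    label: str
    content_type: str
    meets_benchmark: bool  # hypothetical: would the output agree with the benchmark?


def warning_for(choice: Choice) -> Optional[str]:
    """Return the warning to show before the choice is made, or None if none is needed."""
    if choice.content_type not in BENCHMARKED_TYPES:
        return "No WCAG benchmark exists for this content type."
    if not choice.meets_benchmark:
        return "This choice creates content that does not meet the WCAG benchmark."
    return None


def display_label(choice: Choice) -> str:
    """Menu text, with any warning attached so it is visible prior to selection."""
    warning = warning_for(choice)
    return f"{choice.label} (Warning: {warning})" if warning else choice.label
```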

Technique B.3.1-2.2 [Sufficient]: Completely removing choices that will lead directly to content being created using a content type for which there is not a content type-specific WCAG benchmark or content that does not agree with the relevant content type-specific WCAG benchmark simplifies the task of meeting this success criteria.

ATAG Checkpoint B.3.2: Ensure that accessibility prompting, checking, repair functions, and documentation are always clearly available to the author. [Priority 2] (Rationale)

Rationale: If the features that support accessible authoring are difficult to find and activate, they are less likely to be used. Ideally, these features should be turned on by default.

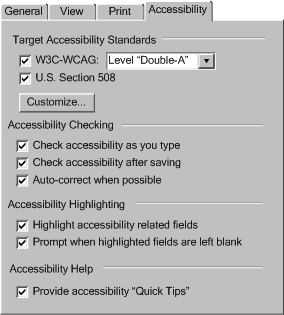

Techniques for Success Criteria 1 (All accessibility prompting, checking, repair functions and documentation that are continuously active must always be enabled by default, and if the author disables them (e.g. from a preferences screen), then the tool must inform the author that this may increase the risk of accessibility problems.):

Applicability: This success criteria is not applicable to authoring tools that do not provide continuously active accessibility prompting, checking, repair functions, and documentation.

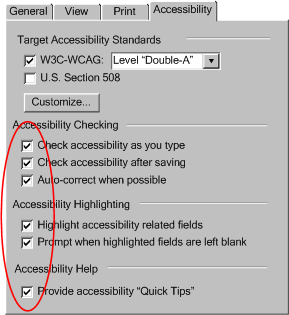

Technique B.3.2-1.1 [Sufficient]: Ensuring that continuously active processes related to accessibility that can be turned on or off (e.g. "as you type"-style checkers) are active by default (see Example B.3.2-1.1(a)) and informing the author that disabling any continuously active process may cause accessibility problems that might not occur otherwise (see Example B.3.2-1.1(b)).
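Technique B.3.2-1.1 combines two behaviors: defaults that start enabled, and a notice when the author switches one off. The sketch below models both in a preferences object. The process names, the `notify` callback (standing in for a dialog or status message) and the warning text are assumptions for illustration only.

```python
# Hypothetical names for continuously active accessibility processes.
DEFAULT_PROCESSES = {"as_you_type_checking": True, "inline_prompting": True}

DISABLE_WARNING = ("Turning off {name} may allow accessibility problems "
                   "that would otherwise have been caught.")


class Preferences:
    def __init__(self, notify=print):
        # Every continuously active process starts enabled.
        self.processes = dict(DEFAULT_PROCESSES)
        self.notify = notify  # how the tool informs the author (dialog, status bar, etc.)

    def set_enabled(self, name, enabled):
        if name not in self.processes:
            raise KeyError(name)
        # Warn only when an enabled process is being switched off.
        if self.processes[name] and not enabled:
            self.notify(DISABLE_WARNING.format(name=name))
        self.processes[name] = enabled
```

Warning only on the enabled-to-disabled transition avoids repeating the message when the author saves an unchanged preferences screen.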

Techniques for Success Criteria 2 (All user interface controls for accessibility prompting, checking, repair functions and documentation must have at least the same prominence as the controls for prompting, checking, repair and documentation for other types of Web content problems (e.g. markup validity, program code syntax, spelling and grammar).):

Applicability: This success criteria is not applicable to authoring tools that do not provide accessibility prompting, checking, repair functions, and documentation.

Sufficiency: Technique B.3.2-2.1, Technique B.3.2-2.2, and Technique B.3.2-2.3, in combination, are sufficient to meet this checkpoint.

Technique B.3.2-2.1 [Sufficient in combination]: Ensuring that prompting for accessibility information has the same prominence as prompting for information critical to content correctness (e.g. a tool that prompts the author for a required multimedia file name attribute also prompts, with the same prominence, for short text labels and long descriptions for that object) (see Example B.3.2-2.1).

Technique B.3.2-2.2 [Sufficient in combination]: Ensuring that utilities for checking and repairing accessibility problems have the same prominence as utilities for checking information critical to content correctness (e.g. a tool that checks for spelling, grammar, or code syntax will check for accessibility problems with the same prominence) (see Example B.3.2-2.2).

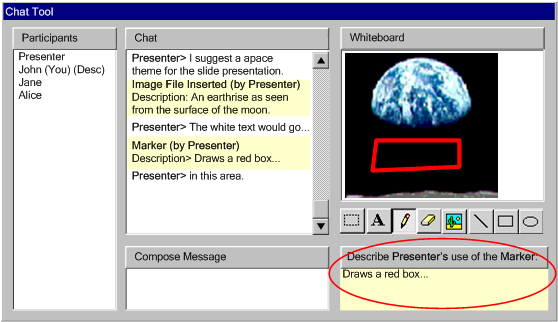

Example B.3.2-2.2: This illustration shows an authoring interface that checks for and displays spelling and accessibility errors with the same prominence. In this case, the author has activated a right-click pop-up menu that includes spelling and accessibility repair options. (Source: mockup by AUWG)

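One way to obtain the equal prominence shown in Example B.3.2-2.2 is to route every checker's findings through the same issue type, the same on-screen marker and the same context menu, so there is no separate, quieter path for accessibility. This is a sketch under that assumption; `Issue`, `ISSUE_STYLE`, `markers` and `context_menu` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List

# One hypothetical marker style shared by every checker.
ISSUE_STYLE = "wavy-underline"


@dataclass
class Issue:
    kind: str    # e.g. "spelling" or "accessibility"
    start: int   # character offsets of the flagged text
    end: int
    fixes: List[str] = field(default_factory=list)


def markers(issues):
    """Every issue gets the same marker, whatever checker produced it."""
    return [(issue.start, issue.end, ISSUE_STYLE) for issue in issues]


def context_menu(issues, offset):
    """Repair options from all checkers at the cursor position share one pop-up menu."""
    items = []
    for issue in issues:
        if issue.start <= offset < issue.end:
            items.extend(f"{issue.kind}: {fix}" for fix in issue.fixes)
    return items
```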

Technique B.3.2-2.3 [Sufficient in combination]: Ensuring that documentation for accessibility has the same prominence as documentation for information critical to content correctness (e.g. a tool that documents any aspect of its operation will document accessibility with the same prominence).

General Techniques for Checkpoint B.3.2:

Applicability: Techniques in this section are not necessarily applicable to any one success criteria, and may apply more generally to the checkpoint as a whole.

Technique B.3.2-0.1 [Advisory]: Grouping input controls when several pieces of information are all required from the author as part of an accessible authoring practice.

ATAG Checkpoint B.3.3:

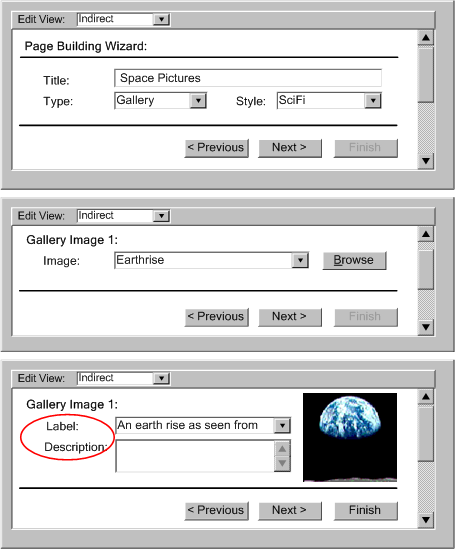

Ensure that sequential authoring processes integrate accessible authoring practices.[Priority 2] (Rationale)

Techniques for Success Criteria 1 (Interactive features that sequence author actions (e.g. object insertion dialogs, templates, wizards) must provide any accessibility prompts relevant to the content being authored at or before the first opportunity to complete the interactive feature (without canceling).):

Applicability: This success criteria is not applicable to authoring tools that do not provide interactive features that help sequence author actions (e.g. many basic text editors).

Technique B.3.3-1.1 [Sufficient]: Ensuring that any feature that sequences authoring actions (by allowing different defined sets of actions at different points in a process) integrates accessibility prompting in such a way that there is no opportunity to finalize an authoring decision before the author has been prompted about the accessibility implications of that decision.

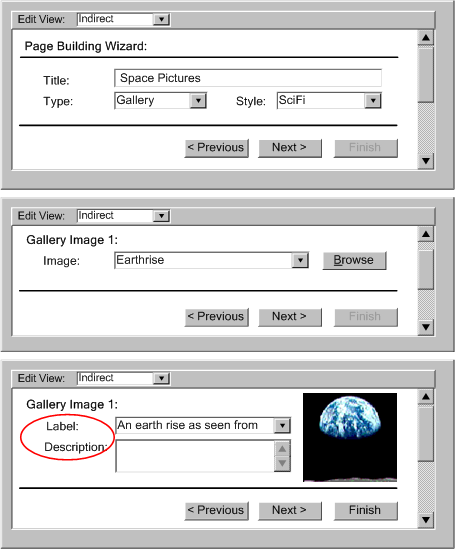

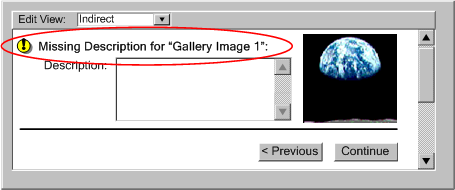

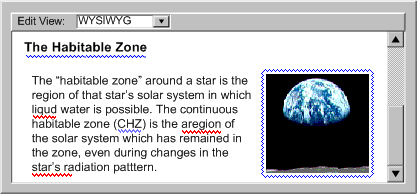

Example B.3.3-1.1: This illustration shows three screens of a page building wizard (indirect authoring). The wizard prompts the author for a few key pieces of information that are used to tailor the process, in this case to create a gallery page. Since the accessibility-related prompts ("label" and "description") occur on the third step of the wizard, the "Finish" button is unavailable until then to prevent the author from bypassing this step. (Source: mockup by AUWG)

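The gating in Example B.3.3-1.1 reduces to one rule: "Finish" is unavailable until the author has reached the step that holds the accessibility prompts. The minimal wizard model below shows only that rule; the class, its step list and `prompt_step` index are hypothetical, and a real wizard would also carry the collected field values.

```python
class Wizard:
    """Minimal wizard model: 'Finish' stays unavailable until the prompts are reached."""

    def __init__(self, steps, prompt_step):
        self.steps = steps              # ordered step names (hypothetical)
        self.prompt_step = prompt_step  # index of the step holding accessibility prompts
        self.current = 0

    def advance(self):
        if self.current < len(self.steps) - 1:
            self.current += 1

    def finish_enabled(self):
        # The author cannot complete the wizard before seeing the prompts.
        return self.current >= self.prompt_step
```

For the gallery wizard in the example, `Wizard(["Page type", "Layout", "Images"], prompt_step=2)` keeps "Finish" disabled on the first two screens.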

Technique B.3.3-1.2 [Advisory]: Integrating checking and repair into sequencing mechanisms (e.g. design aids, wizards, templates).

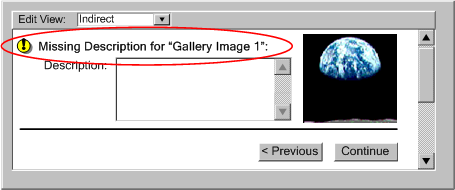

Example B.3.3-1.2: This illustration shows the wizard screen that immediately follows the sequence in Example B.3.3-1.1. Since no text was entered in the description field, checking and repair occur immediately within the sequence of the wizard interface. (Source: mockup by AUWG)

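Example B.3.3-1.2 can be sketched as a check that runs when the author leaves the prompting step: if an accessibility field is empty, the next screen is a repair screen rather than the rest of the wizard. The field names "label" and "description" follow the example, but the functions, return values and message wording are hypothetical.

```python
def check_gallery_step(fields):
    """Return repair messages for the step's accessibility fields (hypothetical names)."""
    problems = []
    if not fields.get("label", "").strip():
        problems.append("Enter a short label for the image.")
    if not fields.get("description", "").strip():
        problems.append("No description was entered; please provide one.")
    return problems


def next_screen(fields):
    """Show a repair screen immediately when the check fails; otherwise continue."""
    problems = check_gallery_step(fields)
    if problems:
        return "repair", problems
    return "continue", []
```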

Technique B.3.3-1.3 [Advisory]: Choosing timing options that prompt early in the workflow. This averts the need for more disruptive checking and repair later in the workflow. Timing options include: Negotiated Interruption, Scheduled Interruption, and Immediate Interruption.

(a) Negotiated Interruption:

A negotiated interruption is caused by interface mechanisms (e.g. icons or highlighting of the element, audio feedback) that alert the author to a problem but remain flexible enough to allow the author to decide whether to take immediate action or address the issue at a later time. Since negotiated interruptions are less intrusive than immediate or scheduled interruptions, they can often be better integrated into the design workflow and have the added benefit of informing the author about the distribution of problems within the document. Although some authors may choose to ignore the alerts completely, it is not recommended that authors be forced to fix problems as they occur. Instead, it is recommended that negotiated interruption be supplemented by scheduled interruptions at major editing events (e.g. when publishing), when the tool should alert the author to outstanding accessibility problems.
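The recommended pairing (negotiated interruption while editing, supplemented by a scheduled interruption at publishing) might be modeled as below. Edits are never blocked, only flagged; publishing presents the outstanding problems and lets the author decide. The `check` function and the `confirm` callback are hypothetical stand-ins for the tool's checker and its publish-time dialog.

```python
class Document:
    """Negotiated interruption while editing, scheduled interruption on publish."""

    def __init__(self, check):
        self.check = check  # hypothetical checker: text -> list of problem descriptions
        self.text = ""
        self.flags = []     # problems currently highlighted in the editor

    def edit(self, text):
        # Negotiated: flag problems, but never block the edit or force a fix.
        self.text = text
        self.flags = self.check(text)

    def publish(self, confirm):
        # Scheduled: at a major editing event, present any outstanding problems.
        # `confirm` receives the list and returns True to publish anyway.
        if self.flags and not confirm(self.flags):
            return False
        return True
```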

Example B.3.3-1.3 (a): This illustration shows an example of a negotiated interruption.