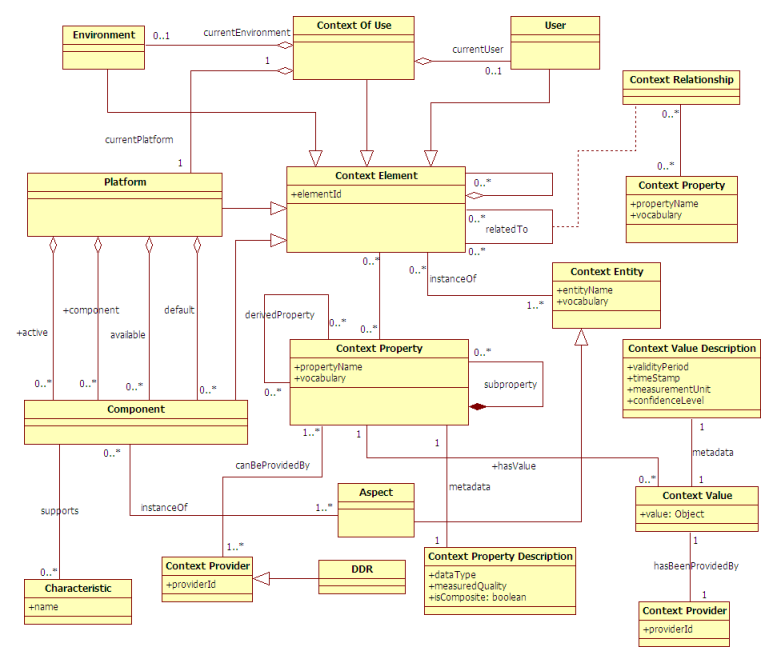

2.2.1 The Context of Use

Context is an all-embracing term. Composed of “con” (with) and “text”, context refers to the meaning that must be inferred from the adjacent text. As a result, to be operational, context can only be defined in relation to a purpose, or finality [CRO02]. In the field of context-aware computing, a widely used definition of context is provided by [DEY00]: Context is any information that can be used to characterize the situation of entities (i.e. a person, place or object) that are considered relevant to the interaction between a user and an application, including the user and the application themselves. Context is typically the location, identity and state of people, groups and computational and physical objects.

While the above definition is rather general, and thus encompasses many aspects, it is not directly operational. Hence, we define the Context of Use of an interactive system as a dynamic, structured information space that includes the following entities:

- a model of the User, U (who is intended to use or is actually using the system);
- the hardware-software Platform, P (which includes the set of computing, sensing, communication, and interaction resources that bind the physical environment to the digital world);
- the social and physical Environment, E (where the interaction is actually taking place).

Thus, a context of use is a triple (U, P, E).
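As a minimal sketch of the triple, the three entities can be represented as immutable Python records. The attribute names below (`age`, `screen_width_px`, `ambient_noise_db`, and so on) are hypothetical choices for illustration, not a normative vocabulary. Because the context of use is dynamic, this sketch models a change by deriving a new triple with `dataclasses.replace` rather than mutating the old one; that is one design choice among several.

```python
from dataclasses import dataclass, replace

# Hypothetical attributes for each entity of the context of use (illustration only).


@dataclass(frozen=True)
class User:
    age: int
    experience: str                          # e.g. "novice" or "expert"
    disabilities: frozenset = frozenset()


@dataclass(frozen=True)
class Platform:
    screen_width_px: int
    bandwidth_kbps: int
    input_devices: frozenset = frozenset()


@dataclass(frozen=True)
class Environment:
    location: str
    ambient_noise_db: float
    lighting: str


@dataclass(frozen=True)
class ContextOfUse:
    """The context of use as the triple (U, P, E)."""
    user: User
    platform: Platform
    environment: Environment


# Example: a user on a mobile phone in a train.
ctx = ContextOfUse(
    User(age=34, experience="novice"),
    Platform(screen_width_px=360, bandwidth_kbps=2000, input_devices=frozenset({"touch"})),
    Environment(location="train", ambient_noise_db=75.0, lighting="daylight"),
)
# The environment changes: a new triple shares U and P but carries an updated E.
quieter = replace(ctx, environment=replace(ctx.environment, ambient_noise_db=40.0))
```

Keeping each snapshot immutable makes it easy to compare the previous and the current context when deciding whether a UI needs to react.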

The User represents the human being (or a human stereotype) who is interacting with the system. Which characteristics are modelled, or relevant, depends heavily on the application domain. Examples include age, level of experience, permissions, preferences, tastes, abilities and disabilities, and short-term and long-term interests. In particular, perceptual, cognitive and action disabilities may be expressed in order to choose the most suitable modalities for rendering and manipulating the interactive system.
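Using expressed disabilities to choose modalities can be sketched as a simple filter-and-rank step. The disability labels, the modality names and the `EXCLUDES` table below are hypothetical assumptions made for illustration; a real user model would need a richer, validated mapping.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Set

# Hypothetical modality names, for illustration only.
OUTPUT_MODALITIES = ("visual", "auditory", "haptic")
INPUT_MODALITIES = ("keyboard", "pointer", "touch", "speech")

# Hypothetical table: modalities that each disability makes unsuitable.
EXCLUDES = {
    "blind": {"visual", "pointer"},
    "deaf": {"auditory"},
    "speech_impaired": {"speech"},
    "limited_dexterity": {"keyboard", "pointer"},
}


@dataclass
class UserModel:
    disabilities: Set[str] = field(default_factory=set)
    preferred: List[str] = field(default_factory=list)  # most preferred first


def choose_modalities(user: UserModel, available: Iterable[str]) -> List[str]:
    """Drop modalities excluded by the user's disabilities, then rank preferred ones first.

    Disabilities missing from EXCLUDES are ignored.
    """
    excluded = set().union(*(EXCLUDES.get(d, set()) for d in user.disabilities))
    usable = [m for m in available if m not in excluded]
    preferred = [m for m in user.preferred if m in usable]
    return preferred + [m for m in usable if m not in preferred]
```

For a blind user who prefers speech, `choose_modalities` returns `["auditory", "haptic"]` for output and `["speech", "keyboard", "touch"]` for input.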

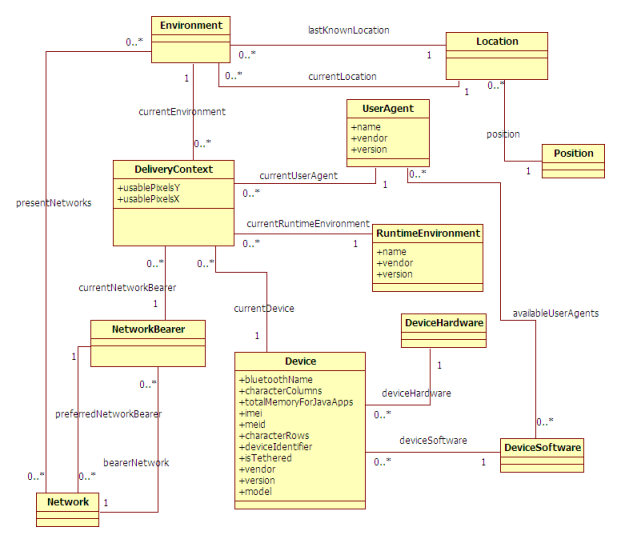

The Platform is modeled in terms of resources, which, in turn, determine the way information is computed, transmitted, rendered, and manipulated by users. Examples of resources include memory size, network bandwidth, and input and output interaction devices. [CCB02] distinguishes between elementary platforms (e.g. laptop, PDA, mobile phone), which are built from core resources (e.g. memory, display, processor) and extension resources (e.g. external displays, sensors, mice), and clusters, which are built from elementary platforms. Resources motivate the choice of a set of input and output modalities and, for each modality, the amount of information made available. W3C's Delivery Context Ontology [DCONTOLOGY] is intended to define a concrete Platform Model.
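The distinction drawn in [CCB02] between elementary platforms and clusters maps naturally onto a composite structure. The sketch below is an illustration under assumptions, not the W3C Delivery Context Ontology: the `Resource`, `ElementaryPlatform` and `Cluster` classes and the modality labels are hypothetical. It shows how the modalities a platform can offer might be derived from its resources.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Resource:
    name: str                        # e.g. "display", "microphone"
    kind: str                        # "core" or "extension"
    modality: Optional[str] = None   # interaction modality it enables, if any


@dataclass
class ElementaryPlatform:
    name: str
    core: List[Resource]
    extensions: List[Resource] = field(default_factory=list)

    def resources(self) -> List[Resource]:
        return self.core + self.extensions


@dataclass
class Cluster:
    """A cluster is built from elementary platforms."""
    name: str
    members: List[ElementaryPlatform]

    def resources(self) -> List[Resource]:
        return [r for p in self.members for r in p.resources()]


def available_modalities(platform) -> List[str]:
    """Modalities offered by a platform or cluster, in order of first appearance."""
    seen: List[str] = []
    for r in platform.resources():
        if r.modality and r.modality not in seen:
            seen.append(r.modality)
    return seen


phone = ElementaryPlatform("mobile phone", core=[
    Resource("processor", "core"),
    Resource("display", "core", "graphical output"),
    Resource("microphone", "core", "speech input"),
])
laptop = ElementaryPlatform(
    "laptop",
    core=[Resource("display", "core", "graphical output"),
          Resource("keyboard", "core", "text input")],
    extensions=[Resource("mouse", "extension", "pointing")],
)
desk = Cluster("desk", [phone, laptop])
```

Here `available_modalities(phone)` yields `["graphical output", "speech input"]`, while the cluster adds `"text input"` and `"pointing"` contributed by the laptop and its extension mouse.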

The Environment denotes the set of objects, persons and events that are peripheral to the current activity but that may have an impact on the system and/or the users' behavior, either now or in the future (Coutaz and Rey, 2002). According to our definition, an environment may encompass the entire world. In practice, the boundary is set by domain analysts, whose role is to elicit the entities that are relevant to the case at hand. Examples include the user's location, ambient sound, lighting or weather conditions, available networks, nearby objects, the user's social networks, level of stress, etc.

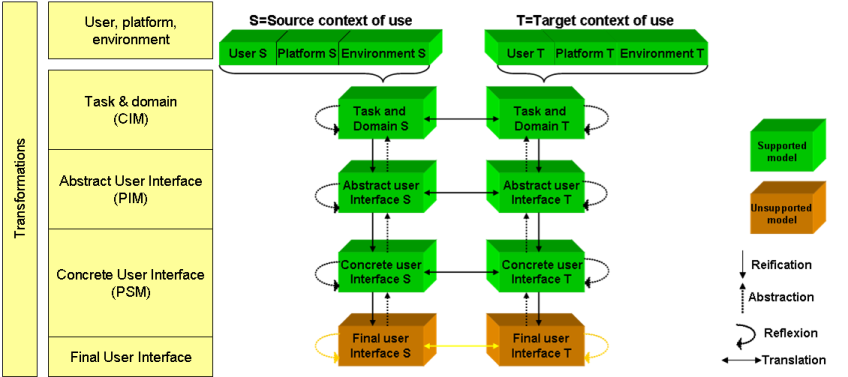

The relationship between a UI and its contexts of use leads to the following definitions:

- Multi-target (or multi-context) UI: A multi-target (or multi-context) UI supports multiple types of users, platforms and environments. Multi-user, multi-platform and multi-environment UIs are specific classes of multi-target UIs that are sensitive, respectively, to user, platform and environment variations [CCTLBV03].

- Adaptive UI: An Adaptive UI is aware of its context of use and (automatically) reacts to changes in this context in a continuous way (for instance, by changing the UI presentation, contents, navigation or even behaviour).

- Adaptable UI: An Adaptable UI can be tailored according to a set of predefined options. Adaptability normally requires explicit human intervention. Examples of UI adaptability can be found in word processors whose toolbar buttons can be customized by end users.

- Plastic UI: A Plastic UI is a multi-target UI that preserves usability across multiple targets. Usability is not intrinsic to a system: it can only be validated against a set of properties established in the early phases of the development process [CCTLBV03].
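The difference between an Adaptive and an Adaptable UI lies in who triggers the change. The sketch below contrasts the two under stated assumptions: `ContextMonitor`, the context keys `noise_db` and `screen_width`, the 70 dB and 600 px thresholds, and the toolbar options are all hypothetical. The adaptive UI is notified of every context change and recomputes its presentation itself; the adaptable UI changes only when the user explicitly selects one of its predefined options.

```python
from typing import Callable, Dict, List

Context = Dict[str, float]


class ContextMonitor:
    """Holds the current (hypothetical) context of use and notifies listeners of changes."""

    def __init__(self, **initial: float) -> None:
        self.state: Context = dict(initial)
        self._listeners: List[Callable[[Context], None]] = []

    def subscribe(self, listener: Callable[[Context], None]) -> None:
        self._listeners.append(listener)
        listener(dict(self.state))  # deliver the current context immediately

    def update(self, **changes: float) -> None:
        self.state.update(changes)
        for listener in self._listeners:
            listener(dict(self.state))


class AdaptiveUI:
    """Reacts automatically and continuously to context changes."""

    def __init__(self, monitor: ContextMonitor) -> None:
        self.presentation: Dict[str, object] = {}
        monitor.subscribe(self.on_context_change)

    def on_context_change(self, ctx: Context) -> None:
        # Assumed rules: no audio feedback in noisy places; single column on narrow screens.
        self.presentation = {
            "audio_feedback": ctx.get("noise_db", 0) < 70,
            "layout": "single-column" if ctx.get("screen_width", 1024) < 600 else "multi-column",
        }


class AdaptableUI:
    """Changes only on explicit user request, choosing among predefined options."""

    TOOLBAR_OPTIONS = {
        "basic": ["bold", "italic"],
        "full": ["bold", "italic", "underline", "insert_table"],
    }

    def __init__(self) -> None:
        self.toolbar = list(self.TOOLBAR_OPTIONS["basic"])

    def customize(self, option: str) -> None:
        if option not in self.TOOLBAR_OPTIONS:
            raise ValueError(f"unknown toolbar option: {option!r}")
        self.toolbar = list(self.TOOLBAR_OPTIONS[option])
```

With a monitor created as `ContextMonitor(noise_db=40, screen_width=1280)`, the adaptive UI starts with audio feedback and a multi-column layout. After `monitor.update(noise_db=85, screen_width=360)` it switches on its own to no audio feedback and a single column. The adaptable UI's toolbar stays as it is until `customize("full")` is called. A plastic UI would additionally need the usability properties mentioned above to be checked after each such change, which this sketch does not attempt.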