To start the component and have it recognize the handwriting data,
the IM sends a startRequest event to the ink recognition component.
The data field of the event contains the InkML representation of
the ink data.

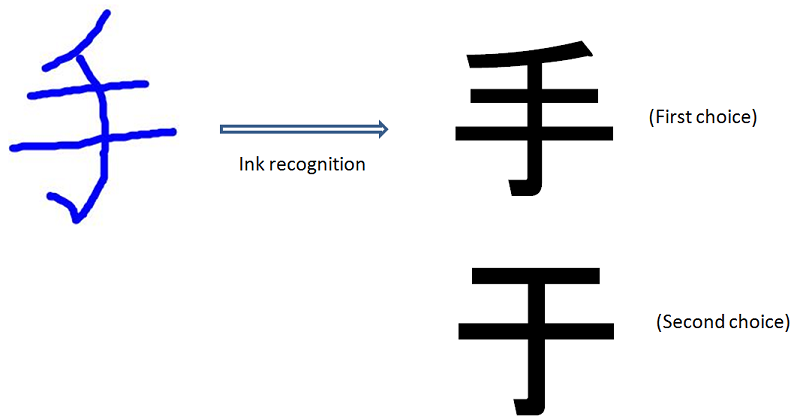

Along with the ink, the InkML data may also carry additional
information such as the reference coordinate system and the capture
device's resolution. In the example below, the ink strokes have X
and Y channels, and the ink was captured at a resolution of 1000
DPI. The example ink data contains the strokes of the Japanese
character "手" (te), which means "hand".

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">

<mmi:startRequest source="uri:inkRecognizerURI" context="URI-1" requestID="request-1">

<mmi:data>

<ink:ink version="1.0" xmlns:ink="http://www.w3.org/2003/InkML">

<ink:definitions>

<ink:context id="device1Context">

<ink:traceFormat id="strokeFormat">

<ink:channel name="X" type="decimal">

<ink:channelProperty name="resolution" value="1000" units="1/in"/>

</ink:channel>

<ink:channel name="Y" type="decimal">

<ink:channelProperty name="resolution" value="1000" units="1/in"/>

</ink:channel>

</ink:traceFormat>

</ink:context>

</ink:definitions>

<ink:traceGroup contextRef="#device1Context">

<ink:trace>

106 81, 105 82, 104 84, 103 85, 101 88, 100 90, 99 91, 97 97,

89 105, 88 107, 87 109, 86 110, 84 111, 84 112, 82 113, 78 117,

74 121, 72 122, 70 123, 68 125, 67 125, 66 126, 65 126, 63 127,

57 129, 53 133, 47 135, 46 136, 45 136, 44 137, 43 137, 43 137

</ink:trace>

<ink:trace>

28 165, 29 165, 31 165, 33 165, 35 164, 37 164, 38 164, 40 163,

42 163, 45 163, 49 162, 51 162, 53 162, 56 162, 58 162, 64 160,

69 160, 71 159, 74 159, 76 159, 78 159, 86 157, 91 157, 95 157,

96 157, 99 157, 101 157, 103 157, 109 155, 111 155, 114 155,

116 155, 119 155, 121 154, 124 154, 126 154, 127 154, 129 154,

131 154, 134 153, 135 153, 136 153, 137 153, 138 153, 139 153,

140 153, 141 153, 142 153, 143 153, 144 153, 145 153, 145 153

</ink:trace>

<ink:trace>

10 218, 12 218, 14 218, 20 216, 25 216, 28 216, 31 216, 34 216,

37 216, 45 216, 53 216, 58 215, 60 215, 63 215, 68 215, 72 215,

74 215, 77 215, 85 212, 88 212, 94 210, 100 208, 105 208, 107 208,

109 208, 110 208, 111 207, 114 207, 115 207, 119 207, 121 207,

123 207, 124 207, 128 206, 130 205, 131 205, 134 205, 136 205,

137 205, 138 205, 139 204, 140 204, 141 204, 142 204, 143 204,

144 204, 145 204, 146 204, 147 204, 148 204, 149 204, 150 204,

151 203, 152 203, 153 203, 154 203, 155 203, 156 203, 158 203,

159 202, 160 202, 161 202, 162 202, 163 202, 164 202, 165 202,

166 202, 167 202, 168 202, 169 202, 170 202, 171 202, 172 202,

173 202, 173 201, 173 201

</ink:trace>

<ink:trace>

78 128, 78 127, 79 127, 79 128, 80 129, 80 130, 81 132, 82 133,

82 134, 83 135, 84 137, 85 139, 86 141, 87 142, 88 144, 89 146,

94 152, 95 153, 96 155, 98 160, 99 162, 100 165, 101 167, 101 169,

102 173, 102 176, 102 181, 102 183, 102 185, 102 186, 104 192,

104 195, 104 197, 104 199, 104 201, 104 203, 104 205, 104 206,

104 207, 104 208, 104 209, 104 210, 104 211, 104 213, 104 214,

104 215, 104 216, 104 217, 104 218, 104 220, 103 222, 102 223,

102 224, 102 223, 102 224, 103 225, 103 228, 103 229, 103 230,

103 231, 103 232, 103 233, 103 236, 103 239, 103 242, 103 243,

103 247, 103 248, 102 249, 102 250, 102 251, 101 251, 100 253,

99 255, 99 256, 98 257, 97 258, 97 259, 96 260, 96 261, 95 262,

95 263, 94 264, 94 265, 93 266, 93 267, 92 268, 91 269, 91 270,

90 271, 90 272, 89 273, 89 274, 88 275, 88 276, 87 276, 87 277,

86 277, 86 278, 85 279, 85 280, 84 281, 83 282, 82 284, 82 285,

81 285, 80 286, 79 287, 78 288, 77 288, 77 289, 76 290, 75 290,

75 291, 74 291, 74 290, 74 289, 74 288, 74 287, 73 287, 73 286,

73 285, 72 284, 72 281, 71 280, 70 279, 70 278, 69 277, 68 276,

67 275, 65 274, 62 272, 60 271, 59 271, 58 270, 57 270, 56 269,

55 268, 54 268, 53 267, 52 267, 51 267, 49 267, 48 267, 48 266,

48 266

</ink:trace>

</ink:traceGroup>

</ink:ink>

</mmi:data>

</mmi:startRequest>

</mmi:mmi>

As part of its support for the life cycle events, a modality
component is required to respond to a startRequest event with a
startResponse event. When it starts successfully, the ink
recognition component sends the IM a startResponse event reporting
that success.
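A success response of this kind might look like the following sketch. The source, context and requestID values simply mirror the startRequest shown earlier, and the `status` attribute is assumed to follow the MMI Architecture life cycle event definitions; treat the exact attribute set as illustrative rather than normative.

```xml
<!-- Illustrative sketch: URIs and IDs mirror the startRequest example -->
<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:startResponse source="uri:inkRecognizerURI" context="URI-1"
                     requestID="request-1" status="success"/>
</mmi:mmi>
```

Reusing the same context and requestID lets the IM pair the response with the request it sent.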

In the case of failure, the startResponse event from the ink
recognition component to the IM carries a failure message. For
example, the failure message may indicate that recognition failed
because the handwriting data has an invalid format.
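A failure response could be sketched as follows. As before, the attribute values mirror the startRequest shown earlier; the `status` attribute and `statusInfo` element are assumed from the MMI Architecture life cycle event definitions, and the message text itself is hypothetical.

```xml
<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:startResponse source="uri:inkRecognizerURI" context="URI-1"
                     requestID="request-1" status="failure">
    <!-- Hypothetical message text; the wording is up to the component -->
    <mmi:statusInfo>Invalid InkML data format</mmi:statusInfo>
  </mmi:startResponse>
</mmi:mmi>
```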