| Performance |

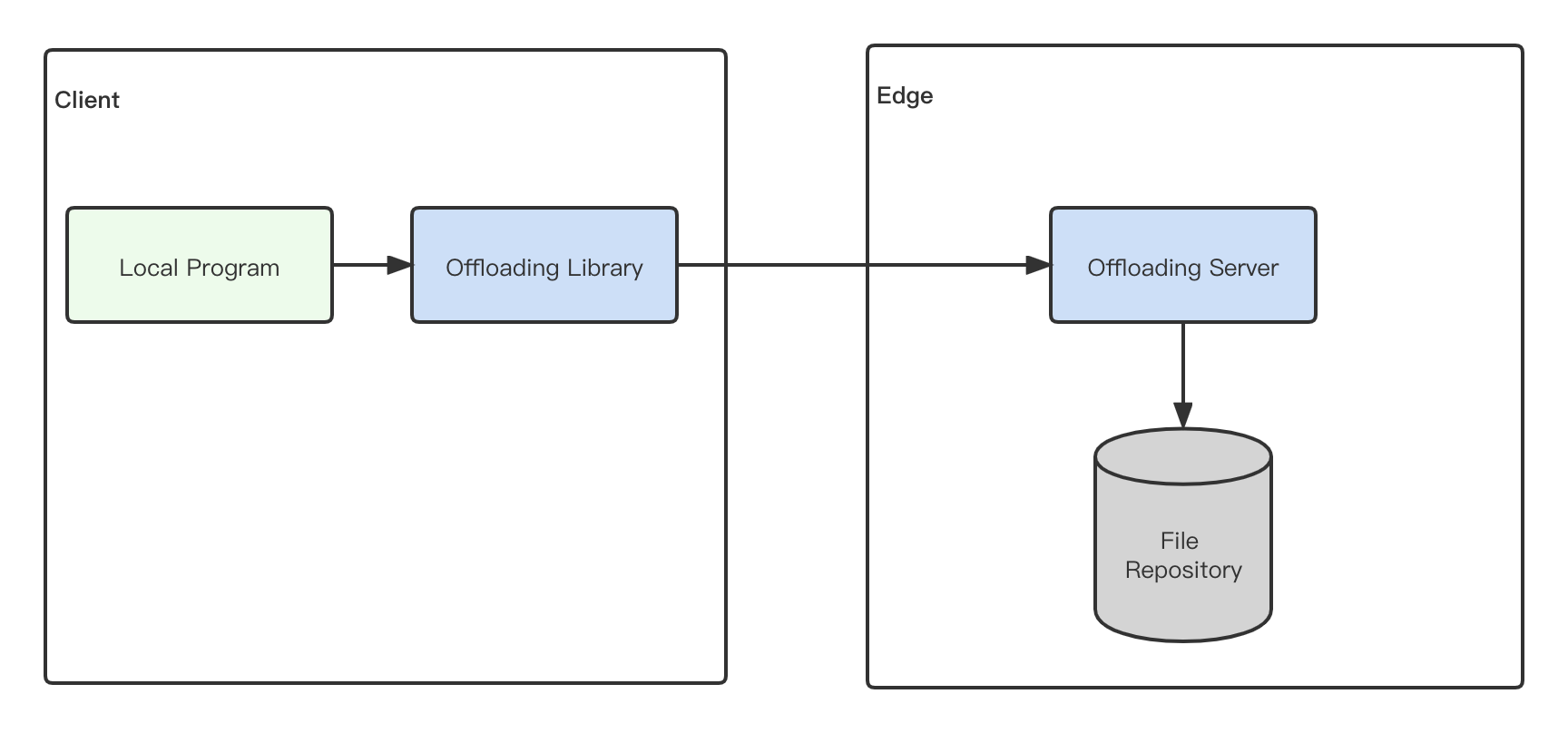

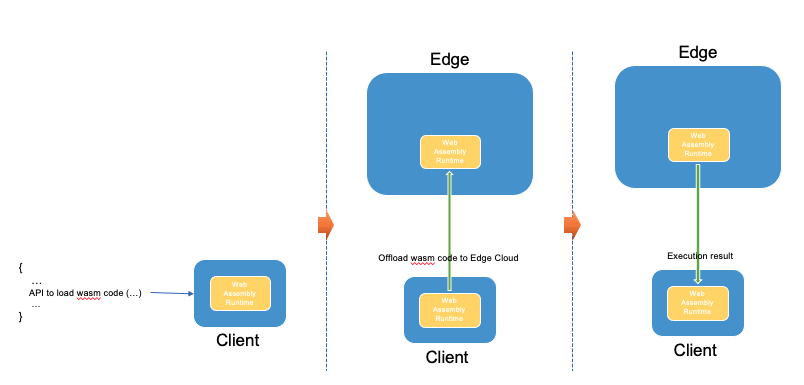

R1: Client Offload |

Clients should be able to offload compute-intensive work to an Edge Resource. |

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|

| Resiliency |

R2a: Application-Directed Migration |

The application should be able to explicitly manage migration

of work between Computing Resources.

This may include temporarily running a workload on

multiple Computing Resources to hide transfer latency.

|

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|

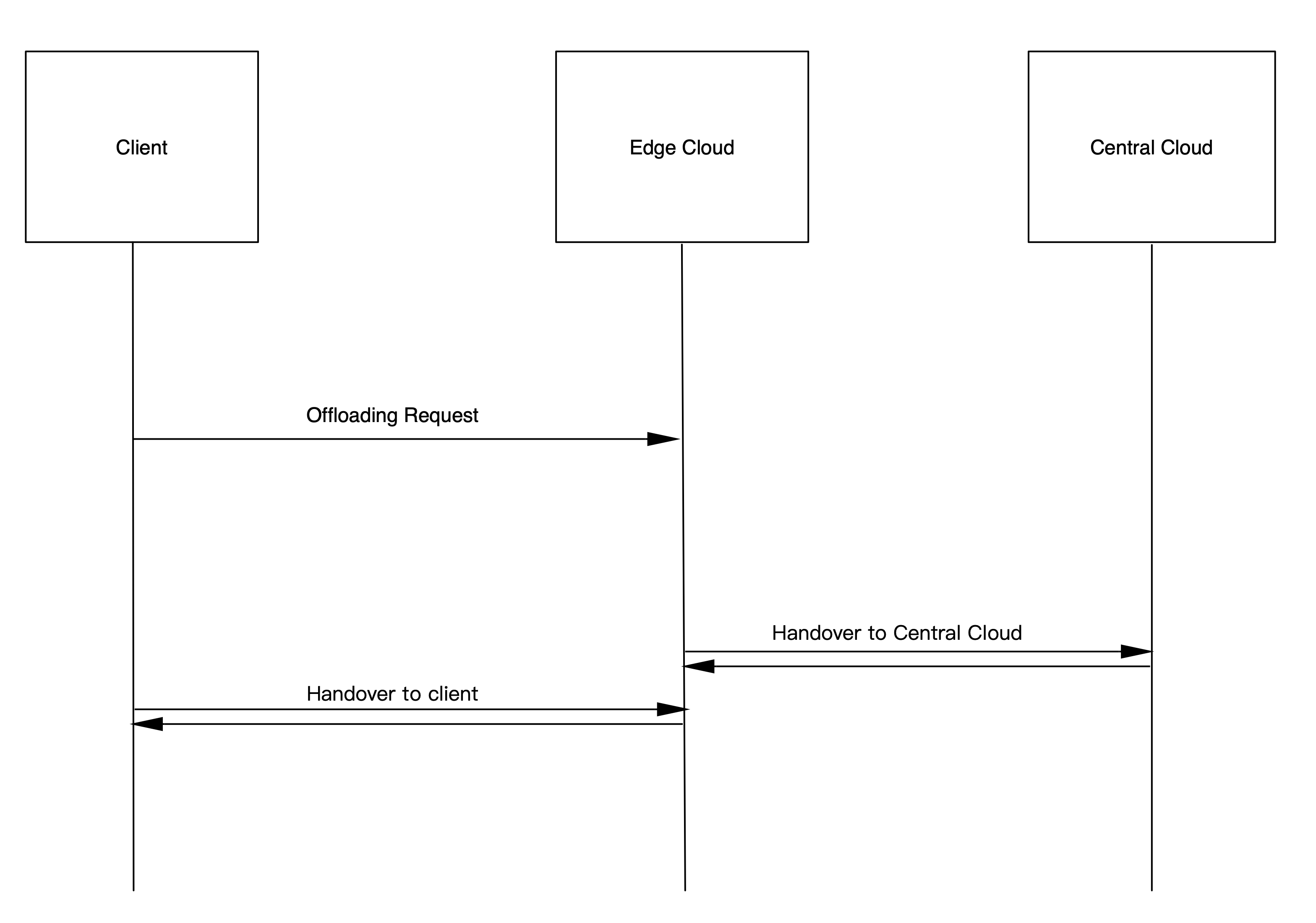

| Resiliency |

R2b: Live Migration |

The Edge Cloud should be able to transparently migrate

live (running) work between Computing Resources.

This includes migration between Edge Resources and Cloud Resources, as well as back to the client, as necessary.

If the workload is stateful,

this includes state capture and transfer. |

UC-CA

UC-VR

UC-VC

|

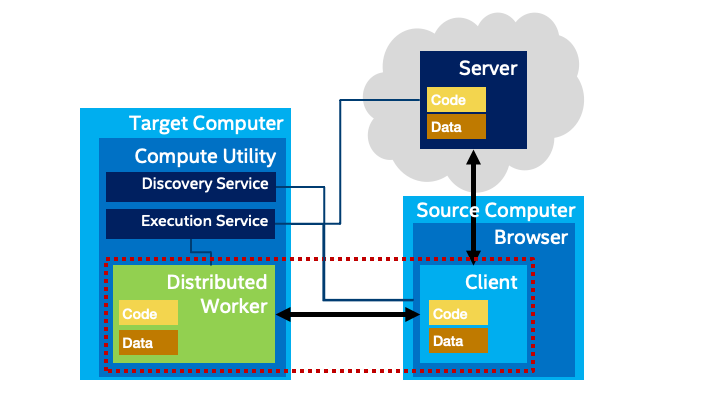

| Flexibility |

R3: Discovery |

A client should be able to dynamically enumerate available

Edge Resources. |

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|

| Flexibility |

R4: Selection |

A client should be able to select between available resources,

including making a decision about whether offload is appropriate

(e.g., running on the client may be the best choice).

This selection may be automatic or application-directed, and static or dynamic.

It may also require metadata about, or measurements of, the performance and latency of Edge Resources.

To do: consider breaking these variants down into separate sub-requirements.

This requirement also needs to state clearly how it differs from the out-of-scope issue "Offload policy".

|

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|
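As an illustration of R4, the sketch below treats the client itself as one more candidate, so that "do not offload" falls out of the same comparison as the choice between Edge Resources. The `ResourceEstimate` shape, its field names, and the cost model (round trip plus compute time) are assumptions made for this example and are not part of any defined API.

```typescript
// Hypothetical description of a candidate Computing Resource.
// None of these field names come from a published API.
interface ResourceEstimate {
  id: string;
  roundTripMs: number; // measured network round trip (0 for the client itself)
  computeMs: number;   // estimated time to run the workload on this resource
}

// Pick the candidate with the lowest estimated completion time.
// Assumed cost model: network round trip plus compute time.
function selectResource(candidates: ResourceEstimate[]): ResourceEstimate {
  if (candidates.length === 0) {
    throw new Error("at least one candidate (the client itself) is required");
  }
  return candidates.reduce((best, c) =>
    c.roundTripMs + c.computeMs < best.roundTripMs + best.computeMs ? c : best
  );
}

const localClient: ResourceEstimate = { id: "client", roundTripMs: 0, computeMs: 120 };
const nearEdge: ResourceEstimate = { id: "edge-a", roundTripMs: 20, computeMs: 30 };
const farEdge: ResourceEstimate = { id: "edge-b", roundTripMs: 150, computeMs: 10 };

// With all three candidates, the nearby Edge Resource wins (50 ms total).
const chosen = selectResource([localClient, nearEdge, farEdge]);
// With only the distant Edge Resource available, staying on the client wins.
const fallback = selectResource([localClient, farEdge]);
```

A dynamic variant would re-run the same comparison whenever new measurements arrive; a static variant would run it once when the workload starts.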

| Flexibility |

R5: Packaging |

A workload should be packaged so it can be executed on

a variety of Edge Resources.

This means either platform independence or a means to negotiate which workloads can run where. |

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|
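The negotiation alternative in R5 could be sketched as a simple format match between a workload package and a Computing Resource. The `WorkloadPackage` shape and the format labels below are hypothetical and only illustrate the idea; a real negotiation would probably also cover versions and capabilities.

```typescript
// Hypothetical manifest: the formats a workload is packaged in, listed in
// order of preference. The format strings are illustrative labels only.
interface WorkloadPackage {
  id: string;
  formats: string[];
}

// Return the first packaged format the resource can execute, or undefined
// when the workload cannot run on that resource at all.
function negotiateFormat(
  pkg: WorkloadPackage,
  resourceSupports: string[]
): string | undefined {
  for (const format of pkg.formats) {
    if (resourceSupports.indexOf(format) !== -1) {
      return format;
    }
  }
  return undefined;
}

const imageFilter: WorkloadPackage = {
  id: "image-filter",
  formats: ["wasm-simd", "wasm", "js"],
};

// This resource lacks the preferred format, so the second choice is used.
const agreed = negotiateFormat(imageFilter, ["wasm", "js"]);
// This resource shares no format with the package, so offload is not possible.
const noMatch = negotiateFormat(imageFilter, ["native-arm64"]);
```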

| Flexibility |

R6: Persistence |

It should be possible to run a workload "in the background", possibly event-driven, even if the client is not active.

This also implies lifetime management: cleaning up workloads under some conditions, for example when the client has not connected for a certain amount of time. |

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|
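The lifetime management implied by R6 might, for example, use an idle timeout. The sketch below assumes a single timeout policy and a hypothetical `BackgroundWorkload` record. Neither is prescribed by the requirement, which leaves the cleanup conditions open.

```typescript
// Hypothetical bookkeeping record for a workload running in the background.
interface BackgroundWorkload {
  id: string;
  lastClientContactMs: number; // timestamp of the client's last connection
}

// Return the ids of workloads whose client has been silent for longer than
// maxIdleMs. A single idle timeout is an assumed policy for this sketch.
function findExpired(
  workloads: BackgroundWorkload[],
  nowMs: number,
  maxIdleMs: number
): string[] {
  return workloads
    .filter((w) => nowMs - w.lastClientContactMs > maxIdleMs)
    .map((w) => w.id);
}

const running: BackgroundWorkload[] = [
  { id: "sync-job", lastClientContactMs: 0 },
  { id: "alerts", lastClientContactMs: 900 },
];

// At time 1000 with a 500 ms timeout, only "sync-job" has been idle too long.
const expired = findExpired(running, 1000, 500);
```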

| Security, Privacy |

R7: Confidentiality and Integrity |

The client should be able to control and protect the data used

by an offloaded workload.

Note: This may constrain the selection of offload targets, and it also means that data needs to be protected wherever it resides, including in transit and at rest.

|

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|

| Control |

R8: Resource Management |

The client should be able to control

the use of resources by an

offloaded workload on a per-application basis.

Note: If an Edge Resource has a usage charge, for example,

a client may want to set quotas on offload,

and some applications may need more resources than others.

This may also require a negotiation,

e.g., a workload may have minimum requirements,

making offload mandatory on limited clients.

This is partially about QoS as it relates to performance

(making sure a minimum amount of resources is available)

but is also about controlling charges (so a web app does

not abuse the edge resources paid for by a client).

|

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|
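The per-application quota mentioned in the note for R8 could be tracked along the following lines. The `OffloadQuota` class, the application ids, and the abstract "credits" unit are all hypothetical. The sketch only shows the accounting, not how charges would actually be reported by an Edge Resource.

```typescript
// Hypothetical per-application budget tracker. Units are abstract "credits".
class OffloadQuota {
  private readonly limits: Map<string, number>;
  private readonly used: Map<string, number> = new Map<string, number>();

  constructor(limits: Map<string, number>) {
    this.limits = limits;
  }

  // Record the charge and return true if the application stays within its
  // quota; otherwise return false and record nothing.
  tryCharge(appId: string, credits: number): boolean {
    const limit = this.limits.get(appId) ?? 0; // unknown apps get no budget
    const spent = this.used.get(appId) ?? 0;
    if (credits < 0 || spent + credits > limit) {
      return false;
    }
    this.used.set(appId, spent + credits);
    return true;
  }

  // Credits the application may still spend.
  remaining(appId: string): number {
    return (this.limits.get(appId) ?? 0) - (this.used.get(appId) ?? 0);
  }
}

const quota = new OffloadQuota(
  new Map<string, number>([["video-app", 100], ["notes-app", 10]])
);
const firstCharge = quota.tryCharge("video-app", 60);  // within budget
const secondCharge = quota.tryCharge("video-app", 60); // would exceed 100, rejected
```

Keeping the limits per application reflects the point that some applications may need more resources than others, while still preventing any one web app from exhausting what the client pays for.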

| Scalability |

R9: Statelessness |

It should be possible to identify workloads that

are stateless so they can be run in a more scalable

manner, using FaaS cloud mechanisms.

|

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|

| Compatibility |

R10: Stateful |

It should be possible to run stateful workloads,

to be compatible with existing client-side

programming model expectations.

|

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|

| Performance |

R11: Parallelism |

It should be possible to run multiple workloads in

parallel and/or express parallelism within a single workload.

|

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|

| Performance |

R12: Asynchronous |

The API for communicating with a running workload

should be non-blocking (asynchronous) to hide the

latency of remote communication and allow the

main (user interface) thread to run in parallel with the

workload (even if the workload is being run on the client).

|

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|
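A non-blocking API in the sense of R12 might look like the following Promise-based sketch. `WorkloadHandle`, `send`, and `fakeWorkload` are hypothetical names, and the timer merely stands in for a real transport. The point illustrated is that the caller keeps running while a request is in flight, regardless of where the workload executes.

```typescript
// Hypothetical handle to a running workload. `send` returns a Promise
// immediately, whether the workload runs remotely or on the client.
interface WorkloadHandle {
  send(input: number): Promise<number>;
}

// Stand-in for a real transport: replies with double the input after a
// simulated delay.
function fakeWorkload(delayMs: number): WorkloadHandle {
  return {
    send: (input: number) =>
      new Promise<number>((resolve) => {
        setTimeout(() => resolve(input * 2), delayMs);
      }),
  };
}

// Records the order in which things happen.
const trace: string[] = [];

async function runDemo(): Promise<number> {
  const workload = fakeWorkload(10);
  const pending = workload.send(21); // request is in flight, nothing blocks
  trace.push("ui-work");             // the caller keeps running meanwhile
  const result = await pending;      // resume only when the reply arrives
  trace.push("result");
  return result;
}
```

Calling `runDemo()` records `"ui-work"` before `"result"`: the user interface work proceeds while the workload is still computing.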

| Security |

R13: Sandboxing |

A workload should be specified and packaged in such

a way that it can be run in a sandboxed environment and its

access to resources can be managed.

|

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|

| Performance, Compatibility |

R14: Acceleration |

A workload should have (managed) access to accelerated

computing resources when appropriate, such as AI accelerators.

Note: Since the availability of these resources may vary between Computing Resources, it needs to be taken into account when estimating performance and selecting a Computing Resource to use for offload.

Access to such resources should use web standards, e.g., standard WASM/WASI APIs.

|

UC-CA

UC-VR

UC-CG

UC-SA

UC-VC

UC-MLA

UC-IVPU

UC-PWMP

UC-BR

UC-LVB

UC-ALPR

UC-RNA

UC-CM

|