Report

Executive summary

W3C organized a Workshop on Wide Color Gamut (WCG) and High Dynamic Range (HDR) for the Web, with prerecorded talks for online viewing and live discussions taking place during September 2021. This workshop connected the Web developer, content creation and color science communities and explored evolutions of the web platform to address near-term requirements for Wide Color Gamut, and slightly longer-term requirements for High Dynamic Range.

After a call for submissions and curation by the Program Committee, 15 talks were announced in August 2021, grouped into five thematic sections:

- HDR Introduction (minutes)

- WCG: CSS Color 4 (minutes)

- HDR: Compositing and tone mapping (minutes)

- WCG & HDR: Color creation and manipulation (minutes)

- WCG & HDR: Canvas, WebGL, WebGPU (minutes)

Over the course of September, workshop attendees and speakers participated in five live discussion sessions. After these, over the next year, many follow-up discussions and standardization activities were sparked by the workshop, in particular to work on next steps and to further develop themes from the workshop talks.

The main outcomes are that:

- Standardization efforts on WCG and HDR are in place and ongoing at W3C, the International Color Consortium, the Alliance for Open Media, and other fora

- A Color API for the Web is currently being incubated in WICG, and when more mature will require formation of a new W3C Working Group

- Interoperability for WCG-aware Web specifications will be a focus for the next year, including Interop 2022

- Canvas has already been extended to WCG, and HDR in Canvas is at the prototype stage

- Handling of HDR content, in particular HDR tone mapping for a wide range of displays and viewing environments, needs significant further discussion and experimentation.

The Legacy, sRGB Web

Since 1996, color on the Web has been locked into a narrow-gamut, low dynamic-range colorspace called sRGB. Originally derived from a standard for broadcast High Definition Television (HDTV) and intended to be a lowest common denominator for interoperability, it has since become an increasing burden to creative expression and a key differentiator between the web, on the one hand, and native applications and entertainment devices which have kept up with, indeed driven, advances in technology.

Display technologies have vastly improved since the bulky cathode-ray tube displays of the 1990s. The most obvious change is display resolution, but the wider range of displayable colors (the gamut), the reduction in reflected glare, and the increase in peak and average brightness (and thus in dynamic range) are also notable.

Wide Color Gamut

Colors more vivid than a typical display can show are common in nature. The varied orange hues of a spectacular sunset, the iridescent blues and greens of a butterfly or a bird's wing, the colors in a firework display, even the colors seen in ordinary grass, lie outside the sRGB gamut.

Meanwhile the movie and TV industries have moved beyond P3 to an even wider-gamut colorspace, ITU Rec BT.2020, which can display an astonishing 150% more colors than sRGB. Professional displays used in color grading movies can display 90% or more of the 2020 gamut; history shows that similar capabilities will become available in the consumer market soon.

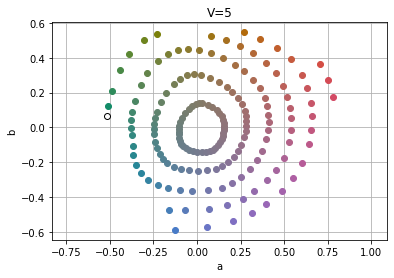

The chromaticity diagram shows the gamuts of these three color spaces: sRGB, P3, and BT.2020. The Web needs to provide access to these colors - not just for video or images, but for everyday Web content.

High Dynamic Range

The human eye can perceive a vast range of brightnesses, from dimly-seen shapes under moonlight to the glare of sunlight reflected from metallic surfaces. This is termed the dynamic range and is measured by the luminance of the brightest displayable white, divided by the luminance of the deepest black. sRGB, with a theoretical peak luminance of 80 cd/m² and a viewing flare of 1% (0.8 cd/m²), has a total dynamic range of 100.

Display P3, which is often used with a peak white luminance of around 200 cd/m² and a black luminance of 0.80 cd/m², has a total dynamic range of 250. The luminance limits are set by power consumption and heating when the entire display is set to maximum-brightness white, and also by user comfort, as this white is a typical background for text and represents a "paper white" or "media white". In the broadcast industry, this is termed standard dynamic range (SDR). It falls far short of what the human eye can perceive.

In nature, very bright objects occupy a very small fraction of the visual field. Also, we can see detail in an almost dark room. A display can take advantage of these two aspects, scene-by-scene lightness changes and localized highlights, to produce a wider dynamic range: it turns down the backlight in dark scenes and turns it up in bright ones. In addition, if each small portion of the screen has its own backlight, small highlights much brighter than a paper white can be produced, for a small area and for a short time. This is called High Dynamic Range (HDR).

As an example, the broadcast standard ITU BT.2100, with the PQ electro-optical transfer function, will display a paper white at around 200 cd/m². But the deepest black is 0.001 cd/m²; and the peak, short-term, small-area white is 10,000 cd/m² giving a total dynamic range of ten million. While this is a theoretical peak, reference monitors with peak luminance of 1,000 cd/m² to 4,000 cd/m² are in widespread use for movie and TV production. Consumer devices with peak luminances of 500 to 1200 cd/m² are becoming common.
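
The dynamic range figures quoted in this section follow from a single division. A minimal sketch of that arithmetic is shown below, using the luminance values given in the text; the function and variable names are illustrative only, and the conversion to photographic "stops" (a base-2 logarithm) is an added convenience rather than something the workshop specified.

```python
import math


def dynamic_range(peak_white_cd_m2: float, black_cd_m2: float) -> float:
    """Ratio of the brightest displayable white to the deepest black."""
    if black_cd_m2 <= 0:
        raise ValueError("black luminance must be positive")
    return peak_white_cd_m2 / black_cd_m2


# (peak white, deepest black) in cd/m², as quoted in the text above.
EXAMPLES = {
    "sRGB": (80.0, 0.8),
    "Display P3 (typical use)": (200.0, 0.8),
    "BT.2100 PQ": (10_000.0, 0.001),
}

RANGES = {
    name: dynamic_range(white, black)
    for name, (white, black) in EXAMPLES.items()
}

for name, ratio in RANGES.items():
    # One stop is a doubling of luminance.
    print(f"{name}: {ratio:,.0f}:1 ({math.log2(ratio):.1f} stops)")
```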

HDR is in widespread daily use for the delivery of streaming movies, broadcast television, on gaming consoles, and even for the recording and playback of HDR movies on high-end mobile phones. It is also starting to be used for still images. The Web, meanwhile, is currently stuck with Standard Dynamic Range.

Standardization

It is all very well to complain that consumer entertainment technology is far in advance of the Web (which is true), but what can be done about it? Are entirely new standards needed, or can existing ones be smoothly extended, adding capabilities already common in the broadcast industry?

In addition, we need to compare like with like. The experiences of watching a blockbuster movie in a darkened room with a home theatre setup, or watching a live sports broadcast inside under afternoon daylight, or catching news items on a phone while commuting in broad daylight, are necessarily different.

And so, just as we are used to responsive Web designs that adjust to different display resolutions, content for the Web needs to be adaptable for different color gamuts, for different peak luminances, and in particular for a very wide range of viewing conditions.

Topics discussed during the live sessions

HLG, PQ, and the Opto-Optical Transfer Function

The Perceptual Quantizer (PQ) and Hybrid Log-Gamma (HLG) transfer functions are used for HDR content and are defined in ITU BT.2100.
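
To make the two transfer functions concrete, the sketch below implements the PQ EOTF (signal to absolute display luminance), its inverse, and the HLG OETF (relative scene light to signal), using the constants published in ITU BT.2100. It works on single component values; the function names are illustrative, and the clamping of out-of-range inputs is an assumption made here for robustness, not a requirement taken from the workshop.

```python
import math

# PQ constants from ITU BT.2100, written as the exact rationals.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """PQ non-linear signal in [0, 1] -> display luminance in cd/m²."""
    signal = min(max(signal, 0.0), 1.0)  # assumption: clamp to the legal range
    p = signal ** (1 / M2)
    return 10_000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def pq_inverse_eotf(luminance: float) -> float:
    """Display luminance in cd/m² -> PQ non-linear signal in [0, 1]."""
    y = min(max(luminance / 10_000.0, 0.0), 1.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


# HLG constants from ITU BT.2100.
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_oetf(scene: float) -> float:
    """Relative scene linear light in [0, 1] -> HLG signal in [0, 1]."""
    scene = min(max(scene, 0.0), 1.0)  # assumption: clamp to the legal range
    if scene <= 1 / 12:
        return math.sqrt(3 * scene)  # square-root ("gamma") segment
    return A * math.log(12 * scene - B) + C  # logarithmic segment


if __name__ == "__main__":
    print("PQ signal for 200 cd/m²:", round(pq_inverse_eotf(200.0), 4))
    print("HLG signal for 50% scene light:", round(hlg_oetf(0.5), 4))
```

Note the difference in what the two functions describe: the PQ signal decodes to an absolute luminance, while the HLG signal encodes relative scene light and leaves the final luminance to the display.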

HDR content is mastered on a reference display with a known peak luminance, and in a standardized, dim, viewing environment. Displaying that content on a display with different (typically lower) peak luminance and different (brighter, indeed much brighter) viewing environment requires a change to the Opto-Optical Transfer Function (OOTF) or “overall system gamma”.

For content encoded in absolute luminance, display-referred (PQ), these modifications are undefined. In PQ, the OOTF for the mastering or grading environment is burnt into the signal and so a different environment requires a different signal. Dolby Vision IQ is one way to handle display of PQ-encoded data with differing ambient light levels.

For content encoded as relative-luminance, scene-referred (HLG) these modifications are straightforward and defined in ITU BT.2100. In HLG, the OOTF is provided by the display, so displays of different brightnesses, and different viewing conditions, can all use the same signal.

This is not just something which affects bright highlights or deep shadows; the crucial mid-tone values need to be adjusted as well.
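
As an illustration of how an HLG display adapts one signal to its own brightness, the sketch below uses the system gamma formula from ITU BT.2100, which that recommendation gives for nominal peak luminances of roughly 400 to 2,000 cd/m². It is a simplification: the display black level is assumed to be zero, the adjustment for ambient light is omitted, and the function names are illustrative.

```python
import math


def hlg_system_gamma(peak_luminance: float) -> float:
    """System gamma for a display with the given peak luminance (cd/m²)."""
    return 1.2 + 0.42 * math.log10(peak_luminance / 1000.0)


def hlg_ootf(rgb_scene, peak_luminance: float):
    """Map relative scene light (each component in [0, 1]) to display light in cd/m².

    Assumption: display black level of zero, so the scale factor is just the
    peak luminance.
    """
    r, g, b = rgb_scene
    # Scene luminance, using the BT.2100 luminance weights.
    y_s = 0.2627 * r + 0.6780 * g + 0.0593 * b
    gamma = hlg_system_gamma(peak_luminance)
    scale = peak_luminance * y_s ** (gamma - 1.0) if y_s > 0 else 0.0
    return tuple(scale * c for c in rgb_scene)


if __name__ == "__main__":
    mid_grey = (0.18, 0.18, 0.18)
    for peak in (400.0, 1000.0, 2000.0):
        out = hlg_ootf(mid_grey, peak)[0]
        print(f"peak {peak:6.0f} cd/m²: gamma {hlg_system_gamma(peak):.3f}, "
              f"mid-grey at {out:.1f} cd/m²")
```

Because the gamma is applied to scene luminance across the whole range, the mid-tones move along with the highlights, which is the point made in the paragraph above.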

Extended range (linear, or not) sRGB for WCG and also HDR

Once a high-bitdepth RGB representation is available (16 bits per component, float or half-float) then it is possible to represent out of gamut colors with negative component values or values greater than one; the latter can also represent HDR colors.

This concept came up repeatedly during discussions, sometimes to better align with GPU hardware, sometimes to offer an easy (fast, inaccurate) backwards compatibility story with video hardware or software pipelines which assumed sRGB or assumed BT.709 content.

The poor behavior of some systems, in particular LUT-based systems, when presented with negative component values was mentioned at several points in discussions. This can be seen as a bug in individual implementations, or a fundamental incompatibility when interfacing extended to non-extended systems.
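
The sketch below shows where negative and greater-than-one component values come from: a fully saturated Display P3 red is converted to sRGB without clamping. The matrices are rounded versions of the sample conversion matrices in CSS Color 4, and the transfer function is the sRGB curve mirrored about zero so that negative inputs stay negative. Function names are illustrative, and this is a sketch rather than a reference implementation.

```python
# Linear-light Display P3 -> CIE XYZ (D65), rounded to 7 decimal places.
P3_TO_XYZ = (
    (0.4865709, 0.2656677, 0.1982173),
    (0.2289746, 0.6917385, 0.0792869),
    (0.0000000, 0.0451134, 1.0439444),
)

# CIE XYZ (D65) -> linear-light sRGB, rounded to 7 decimal places.
XYZ_TO_SRGB = (
    (3.2409699, -1.5373832, -0.4986108),
    (-0.9692436, 1.8759675, 0.0415551),
    (0.0556301, -0.2039770, 1.0569715),
)


def mat_vec(matrix, vector):
    """Multiply a 3x3 matrix by a 3-component vector."""
    return tuple(sum(row[i] * vector[i] for i in range(3)) for row in matrix)


def encode_srgb_extended(linear: float) -> float:
    """sRGB transfer function, mirrored about zero for negative values."""
    sign = -1.0 if linear < 0 else 1.0
    magnitude = abs(linear)
    if magnitude <= 0.0031308:
        return 12.92 * linear
    return sign * (1.055 * magnitude ** (1 / 2.4) - 0.055)


def linear_p3_to_extended_srgb(rgb_linear_p3):
    """Linear-light Display P3 -> gamma-encoded, unclamped (extended) sRGB."""
    linear_srgb = mat_vec(XYZ_TO_SRGB, mat_vec(P3_TO_XYZ, rgb_linear_p3))
    return tuple(encode_srgb_extended(c) for c in linear_srgb)


if __name__ == "__main__":
    # P3 red lies outside the sRGB gamut: red exceeds 1, green and blue go negative.
    print(linear_p3_to_extended_srgb((1.0, 0.0, 0.0)))
```

A pipeline that clamps these values to the range 0 to 1, or indexes a lookup table with them, loses exactly the out-of-gamut information that the extended representation was meant to carry.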

OKLab

OKLab is a very new, perceptually uniform opponent color space with applications in image rendering, gradient creation, and color gamut mapping. It avoids known defects in spaces such as CIE Lab, while avoiding the computational complexity of spaces such as CAM16 or distance metrics like deltaE2000.

The choice of a cube-root transfer function was discussed. In that context, this review by Raph Levien is interesting:

“I found Björn’s arguments in favor of pure cube root to be not entirely compelling, but this is perhaps an open question. Both CIELAB and sRGB use a finite-derivative region near black. Is it important to limit derivatives for more accurate LUT-based calculation? Perhaps in 2021, we will almost always prefer ALU to LUT. The conditional part is also not ideal, especially on GPUs, where branches can hurt performance.”
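
For reference, the forward OKLab transform is small enough to sketch in full. The coefficients below are those published by Björn Ottosson for linear-light sRGB input; the function names are illustrative. The pure cube root discussed above appears as a single branch-free step between the two matrix multiplications, with the sign preserved so that out-of-gamut (negative) inputs do not fail.

```python
import math


def _signed_cbrt(x: float) -> float:
    """Cube root that preserves sign, so negative inputs are handled."""
    return math.copysign(abs(x) ** (1 / 3), x)


def linear_srgb_to_oklab(r: float, g: float, b: float):
    """Linear-light sRGB -> OKLab (L, a, b)."""
    # Step 1: linear sRGB to an LMS-like cone response.
    l = 0.4122214708 * r + 0.5363325363 * g + 0.0514459929 * b
    m = 0.2119034982 * r + 0.6806995451 * g + 0.1073969566 * b
    s = 0.0883024619 * r + 0.2817188376 * g + 0.6299787005 * b

    # Step 2: the pure cube-root non-linearity, with no linear segment near black.
    l_, m_, s_ = _signed_cbrt(l), _signed_cbrt(m), _signed_cbrt(s)

    # Step 3: opponent axes (lightness, green-red, blue-yellow).
    return (
        0.2104542553 * l_ + 0.7936177850 * m_ - 0.0040720468 * s_,
        1.9779984951 * l_ - 2.4285922050 * m_ + 0.4505937099 * s_,
        0.0259040371 * l_ + 0.7827717662 * m_ - 0.8086757660 * s_,
    )


if __name__ == "__main__":
    print("white:", linear_srgb_to_oklab(1.0, 1.0, 1.0))
    print("red:  ", linear_srgb_to_oklab(1.0, 0.0, 0.0))
```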

File formats for HDR images

File formats for 2D image HDR distribution also came up in discussion. Krita is currently using an older proposal, Using the ITU BT.2100 PQ EOTF with the PNG Format, which signals PQ using the filename (not the contents) of an ICC profile. Viewing applications which use the provided profile are reported to produce a dim, badly rendered result.

The new PNG `cICP` Coding-independent code points chunk will solve this problem, providing color space identification without constraining to a single view rendering, and also allowing a choice between HLG and PQ. The PNG specification is developed and maintained at W3C.
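
The `cICP` chunk is only four bytes of payload: colour primaries, transfer function, matrix coefficients, and a full-range flag, using the code points of ITU-T H.273. The sketch below builds the chunk bytes for BT.2100 with PQ and with HLG, following the standard PNG chunk layout (length, type, data, CRC). The helper names are illustrative, and placing the chunk correctly inside a real file (before the image data) is left out.

```python
import struct
import zlib

# ITU-T H.273 code points relevant to BT.2100 content.
PRIMARIES_BT2020 = 9   # BT.2020 / BT.2100 primaries
TRANSFER_PQ = 16       # Perceptual Quantizer
TRANSFER_HLG = 18      # Hybrid Log-Gamma
MATRIX_RGB = 0         # PNG stores RGB, so the matrix is identity
FULL_RANGE = 1         # full-range sample values


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Serialize one PNG chunk: 4-byte length, type, data, CRC-32 of type+data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def make_cicp_chunk(primaries: int, transfer: int,
                    matrix: int = MATRIX_RGB, full_range: int = FULL_RANGE) -> bytes:
    """Build a cICP chunk from four one-byte code points."""
    return make_chunk(b"cICP", bytes([primaries, transfer, matrix, full_range]))


BT2100_PQ_CHUNK = make_cicp_chunk(PRIMARIES_BT2020, TRANSFER_PQ)
BT2100_HLG_CHUNK = make_cicp_chunk(PRIMARIES_BT2020, TRANSFER_HLG)

if __name__ == "__main__":
    print("PQ: ", BT2100_PQ_CHUNK.hex(" "))
    print("HLG:", BT2100_HLG_CHUNK.hex(" "))
```

Because the chunk identifies the color space by code point rather than by an embedded profile, a viewer is free to choose its own rendering for the display at hand.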

AVIF is another possible export format, with WCG and HDR support. It is an open standard developed by the Alliance for Open Media.

WCG & HDR: Color creation and manipulation

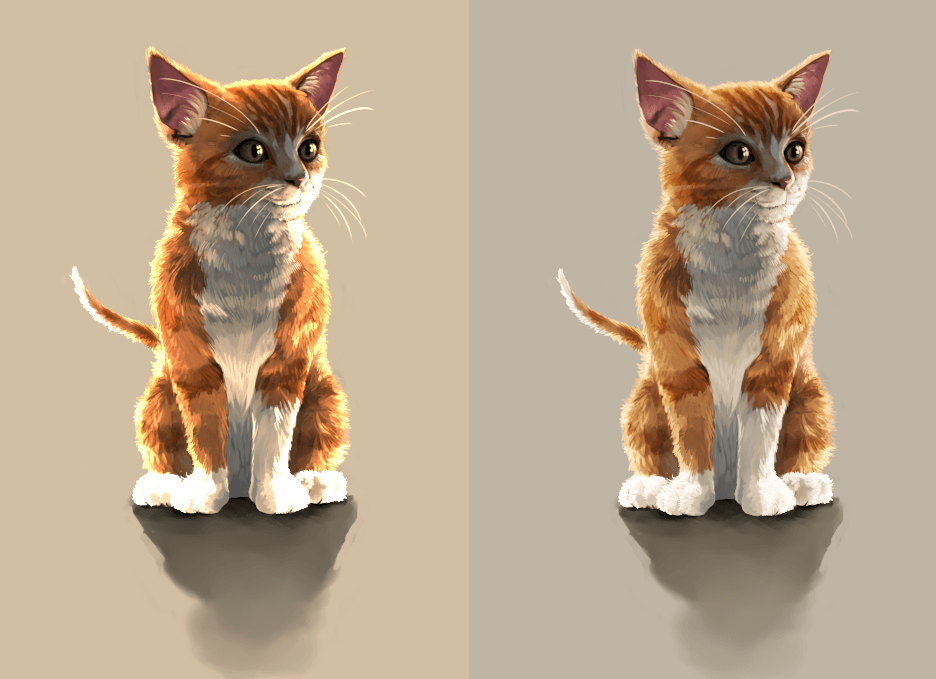

The challenges of creating and previewing HDR content were discussed, and compared to the relative simplicity of an ICC-based SDR proofing workflow. Even sharing HDR content and SDR dialog boxes on the same screen can be problematic.

As with 3D, content is best created and manipulated in a scene-referred, linear light intensity color space. View transforms can preview this for SDR and for HDR, before the final content is encoded in HLG or PQ for distribution.

HDR Tone Mapping

Tone mapping of some sort is clearly needed when the peak luminance of the display and/or the ambient light level and surround luminance differ from the values used in mastering. This does not just affect the deep shadows and the highlights; acceptable presentation also requires adjustment of the mid-tones to compensate for the differing viewing conditions.

The simplest "mapping" is to clip out-of-range values and perform no other adjustment. This produces unrealistic flattened highlights, garish color haloes, and unviewable light and dark scenes. The most complex and effective scene-dependent tone mappings require manipulation in some HDR perceptually uniform space, such as ICtCp or Jzazbz, typically operating on the lightness component only. In between are simpler sigmoidal transforms, operating directly on the RGB components or on luminance; these still introduce hue and saturation changes. There is a clear tension between the effort required to produce acceptable HDR tone mapping and the acceptability of the result; the optimum point will depend on factors such as where in the display chain this mapping is done and the availability of hardware support.
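
The difference between clipping and even a very simple curve can be seen in a few lines. The sketch below compares a hard clip with an extended Reinhard curve applied to luminance. The Reinhard operator is chosen here purely for illustration: it was not singled out at the workshop, and the peak values of 4,000 and 600 cd/m² are assumed example figures.

```python
def clip_tone_map(luminance: float, display_peak: float) -> float:
    """Hard clip: everything above the display peak becomes the same white."""
    return min(max(luminance, 0.0), display_peak)


def reinhard_tone_map(luminance: float, content_peak: float,
                      display_peak: float) -> float:
    """Extended Reinhard curve on luminance (all values in cd/m²).

    The content peak maps exactly onto the display peak, and values below it
    are compressed smoothly instead of being cut off.
    """
    l = max(luminance, 0.0) / display_peak      # relative to display white
    l_white = content_peak / display_peak       # input level that maps to 1.0
    mapped = l * (1.0 + l / (l_white * l_white)) / (1.0 + l)
    return mapped * display_peak


CONTENT_PEAK = 4000.0   # assumed mastering peak
DISPLAY_PEAK = 600.0    # assumed consumer display peak

if __name__ == "__main__":
    for nits in (10.0, 100.0, 600.0, 1000.0, 4000.0):
        clipped = clip_tone_map(nits, DISPLAY_PEAK)
        curved = reinhard_tone_map(nits, CONTENT_PEAK, DISPLAY_PEAK)
        print(f"{nits:7.0f} cd/m² -> clip {clipped:6.1f}, curve {curved:6.1f}")
```

With clipping, 1,000 and 4,000 cd/m² become indistinguishable, which is the flattened highlight described above. The curve keeps them distinct but also lowers the mid-tones, so it trades one artifact for another and would still need the mid-tone and viewing-condition adjustments discussed in this section.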

Reverse Tone Mapping, where SDR content is converted to give a more HDR presentation, is also an active area of research and commercial interest.

There are no universally good HDR Tone Mapping options, and much more work is needed to investigate this important aspect of HDR display given the wide range of viewing conditions in which Web content is consumed.

iccMAX, complexity, and profiles

Compared to the capabilities of the widely-implemented ICC version 2 (often used for display profiling), and the incremental improvements in precision and repeatability provided by ICC version 4 (widely used in commercial printing, and also for display profiling), the set of capabilities provided by iccMAX is vastly expanded.

Simple improvements like lifting the restriction to a D50 white point, or adding a floating point calculator element, are joined by more fundamental changes such as full spectral processing, non-standard observers, material transforms, and Bidirectional Reflectance Distribution Functions (BRDF, for materials which change color depending on the angle from which they are viewed). These capabilities are starting to have impact on, for example, high quality printing with glosses and metallics in the packaging industry.

At the same time, implementors and users are concerned about the greatly increased scope and often ask whether they have to implement everything. The answer is no: Interoperability Conformance Specifications define precise subsets, as implementation targets for particular industries or categories of application.

For WCG and HDR displays, for example, the Extended Range part 1 profile adds floating point HDR support (while retaining the D50 profile connection space from ICC v4) while the part 2 profile supports using a native whitepoint such as D65, avoiding needless chromatic adaptation transforms.

Next steps

Ongoing standardization efforts

The workshop brought a renewed focus to CSS Color 4 and 5, produced by the CSS Working Group, with increased implementer interest and a flurry of in-depth issues and bug reports. After a great deal of detailed specification work, ten months after the workshop live events, in July 2022, CSS Color 4 advanced to Candidate Recommendation. It has also now replaced CSS Color 3 as the official CSS definition of color, in the CSS 2022 Snapshot.

Definitions from CSS Color 4 are now referenced by other specifications:

- the linear-light sRGB space is referenced by SVG 2 and Filter Effects (for filters).

- `display-p3` is referenced by HTML (for 2D Canvas PredefinedColorSpace)

- color interpolation is referenced by CSS Images 4 (for gradient interpolation)

The VideoConfiguration, HdrMetadataType, ColorGamut and TransferFunction APIs of the Media Capabilities API specification, produced by the Media Working Group, have been advanced through five working drafts since the live sessions of this workshop, and are in development in Chrome.

In June 2022, the International Color Consortium (ICC) proposed a revision to the ICC.2 specification, adding new parametric curve types specifically for the PQ and HLG transfer functions. A new ToneMap element was also proposed, which would act on a luminance channel to avoid hue shifts.

The W3C PNG Working Group is creating a third edition of the PNG specification. Amongst other improvements, this includes CICP metadata, which allows HDR images to be encoded in PNG using the ITU BT.2100 color space, with either HLG or PQ transfer functions.

Display P3 was, at the time of the workshop, informally specified in developer documentation. The ICC Displays WG, working together with representatives from Apple Computer, created a primary documentation source for Display P3, which differs from the DCI P3 color space in many ways. This specification is being referenced from CSS Color 4, Canvas 2D, and also from ongoing work in medical imaging, for example.

A need to extend both SVG filters and CSS Filter Effects to add WCG support was identified.

The fact that Compositing and Blending is currently specified to take place in gamma-encoded sRGB space was mentioned several times. There is a need to add a linear-light compositing option for CSS. The Canvas HDR proposal will add a linear-light option to Canvas 2D context, resulting in linear-light compositing.
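
A one-pixel example shows why the choice of compositing space matters. The sketch below blends black and white at 50% coverage, once directly on the gamma-encoded sRGB values (the currently specified behavior) and once in linear light. The function names are illustrative, and only a single channel is shown.

```python
def srgb_to_linear(v: float) -> float:
    """Decode a gamma-encoded sRGB component (0..1) to linear light."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v: float) -> float:
    """Encode a linear-light component (0..1) with the sRGB transfer function."""
    if v <= 0.0031308:
        return 12.92 * v
    return 1.055 * v ** (1 / 2.4) - 0.055


def mix_gamma_encoded(a: float, b: float, t: float) -> float:
    """Blend directly on the encoded values."""
    return a + (b - a) * t


def mix_linear_light(a: float, b: float, t: float) -> float:
    """Decode, blend in linear light, then re-encode."""
    la, lb = srgb_to_linear(a), srgb_to_linear(b)
    return linear_to_srgb(la + (lb - la) * t)


if __name__ == "__main__":
    print("gamma-encoded blend:", round(mix_gamma_encoded(0.0, 1.0, 0.5), 4))
    print("linear-light blend: ", round(mix_linear_light(0.0, 1.0, 0.5), 4))
```

The linear-light result is noticeably lighter because it corresponds to half the physical light of white, whereas the gamma-encoded blend emits only about a fifth of it. This is why antialiased edges and semi-transparent overlays look darker than expected when composited in the encoded space.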

Color API Working Group

In July 2021 the CSS WG held a breakout session on Web Platform Color API. It was resolved to incubate the API in the Web Incubator Community Group. A draft specification exists.

Once the specification is more mature and has some implementer momentum, it may be appropriate to create a dedicated Working Group to advance the specification along the Recommendation track.

WCG Interoperability

Given that a couple of implementations have added CSS WCG support, either experimental or shipping, the next stage is broad interoperability so that WCG can be relied upon.

The Interop 2022 initiative, announced in March 2022, has been widely reported:

- Interop 2022: browsers working together to improve the web for developers

- Web devs need to keep an eye on Interop 2022 benchmark to make life easier

- Working together on Interop 2022

- Microsoft Teams with Google, Apple, and Mozilla to Form Interop 2022

- Bocoup and Interop 2022

- All major browsers assemble for the first time to improve web development

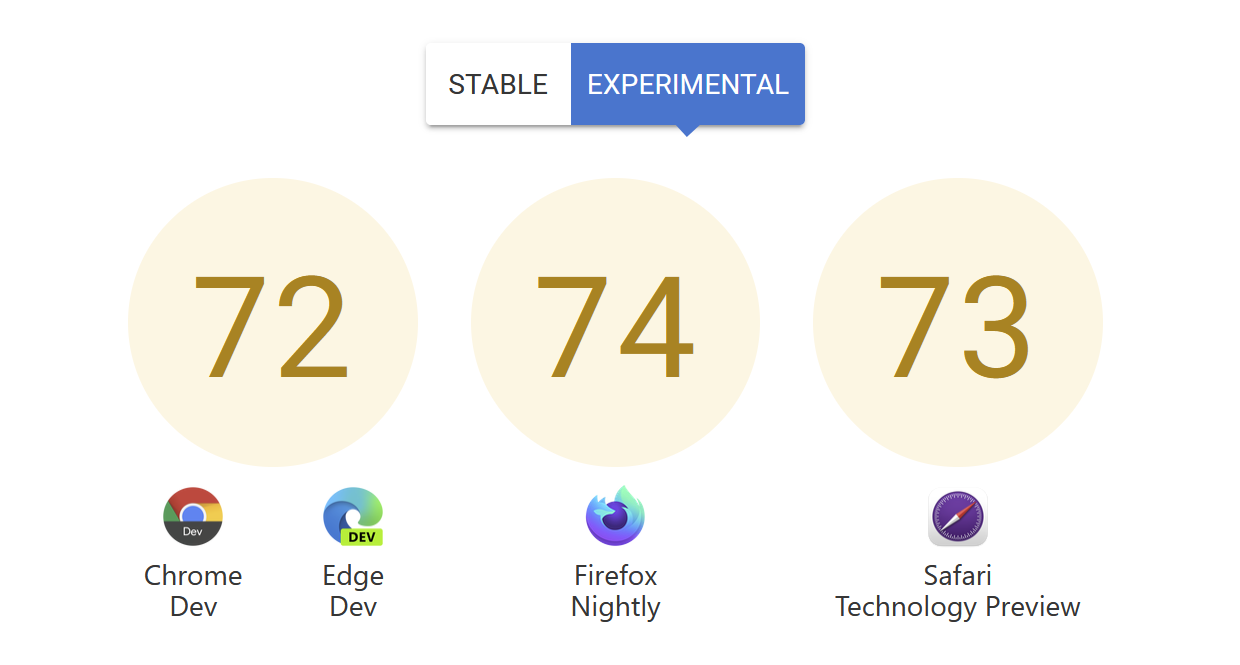

Ten key features were identified as targets for Interop 2022. A prerequisite for inclusion was that the specification was mature enough to be implemented in browsers as specified, and that sufficient tests existed to allow a meaningful measure of interoperability for the feature in question. One of these ten features, Color Spaces and Functions, covers:

- The predefined WCG RGB spaces from CSS Color 4

- The CIE Lab, CIE LCH, OKLab and OKLCH spaces from CSS Color 4

- CSS Gamut mapping, in OKLCH

- The color-mix() function, from CSS Color 5

- The color-contrast() function, from CSS Color 5

Thus, by the end of 2022, the aim is to have excellent interoperability on these key CSS WCG features, among all current browsers. Results can be seen on the Interop 2022 dashboard.

Also, in July 2022 BFO Publisher was released, a CSS to PDF converter with excellent CSS support. The test results show a 97.4% pass rate on those WPT tests for CSS Color 4 and 5 which do not rely on script.

However, for maximum interoperability, older browsers also need to be catered for. This is where browser shims, pre-processors and build tools can allow authoring in a more modern form of CSS while still providing a usable result in older browsers.

Lightning CSS is a CSS parser, transformer, bundler and minifier, written in Rust and callable from Parcel, Rust or Node, which parses more modern CSS syntax and outputs compatible down-level CSS to support specific older targeted browsers. It supports CSS Color 4, and recently added support for CSS Color Level 5 as well.

PostCSS is another CSS preprocessor, extendable with the use of plugins. There now exist plugins for the CSS Color 4 color() notation, for lab and oklab, and for the WCG colorspace support for CSS gradients.

Prototyping HDR in Canvas

Between Jan 2021 and Feb 2022, in the Color on the Web community group, the Canvas High Dynamic Range proposal was widely discussed and refined. This was aided by several presentations at the workshop. The consensus now is that prototyping and experimental implementation are needed to gain practical experience with it, especially in deciding some of the details regarding default color space conversion math.

In October 2021, a JavaScript implementation of Hybrid Log Gamma (HLG) was started.

In November 2021, Chromium posted an Intent to Prototype: High Dynamic Range Support for HTMLCanvasElement which has an associated tracking bug.

HDR Discussion and outreach

There was a clear sense at the workshop that for many topics, in particular the ones related to HDR, this was the beginning of a conversation rather than the conclusion of one. There is a need for ongoing discussion of certain aspects, to raise awareness and as a prelude to standardization.

In September and October, in the Color on the Web community group, there was ongoing discussion of interconversion between sRGB/Rec BT.709 and Rec BT.2100 with the PQ and HLG transfer functions, while in October Exposing HDR metadata in bitstreams through WebCodecs API and Display on operating systems requiring tone-mapping were discussed.

In March 2022, ICC held an HDR Experts' Day. Of the 11 talks at that event, 5 were given by speakers who also spoke at this workshop.