Group photo from the Media and Entertainment IG F2F meeting during TPAC 2018 in Lyon

(Some more photos are available online.)

<cpn> scribenick: cpn

https://www.w3.org/2011/webtv/wiki/images/c/c1/2018-10-22_WebTVIntro-v2-.pdf

Mark_Vickers: [Introduces the

IG]

... We're here to represent media, wanting to improve the web for

media of all kinds.

... What we're interested in is being a focal point for bringing in

what's new in the media industry to W3C, to guide things along,

improve the direction of the web to improve the platform.

... We changed the name from Web&TV IG. We've had three waves

of work. The first was when HTML5 was fairly new, in 2011.

... HTML video, potential for being a rich platform.

... The media architecture became a rich architecture over time.

There have been point releases, now working in collaboration with WHATWG.

... The core part is in HTML itself.

... These things can take a long time. MSE and EME were two extensions originally envisaged as part of HTML5, but actually published as extensions.

... And now there's work on a second generation of MSE and

EME.

... The Web as a media app platform. Apps that run across lots of

different kinds of platforms.

... A good example is Media Timed Events.

... Scope is everything from cameras, editing, distribution,

presentation.

... All of these are topics which are covered in this group,

related to media.

... As an Interest Group, the focus of what we do is use cases and

requirements.

... We don't really get into the syntax of what an API should look

like, Working Groups or Community Groups do that.

... Can we achieve our goals in terms of media functionality?

... If you want to get involved in the detail, you can join the WGs

or CGs.

... We also coordinate with outside organizations.

... TV standards groups, incorporating HTML5 in their standards. We

help coordinate that.

... MPEG, ATSC, HbbTV. Communicate what we have in the web, and

take liaisons from them.

... Groups like MPEG, drive new requirements.

... Work with the horizontal groups at W3C. Accessibility,

internationalisation, security.

... How do we get things done?

... The goal isn't to get a spec, it's to get into the content of

the Web.

... Ideas come from us as members, or outside groups, and we form a

Task Force. We normally have one or two at a time.

... If there's an existing spec needing a new feature, we can raise

a bug report to the spec authors. That's often the fastest and most

effective way to get things done.

... Sometimes a whole new feature needing a whole new spec.

... W3C started Community Groups to incubate new work.

... We have spun out several CGs over the years.

... But now, most of our work goes into one CG, the WICG.

... WICG has all the browser vendors involved in a significant way,

involvement from W3C and WHATWG.

... It's an effective way to get things started.

... When something goes to a WG or CG, we remain involved, bring

our media expertise to help out.

... We have two kinds of activities:

... [list of previous and current Task Forces]

... We have a monthly talk on a variety of topics.

... We want to have your ideas for new talks. Presentation and

Q&A.

... These have been really useful. Worth looking into.

... One of our goals for today is to reinvigorate ourselves for new

Task Forces.

Barbara: Something I was surprised not to see on the list was how media relates to the Immersive Web.

Mark_Vickers: At any time, it's what people are interested in.

Chris: We had a call on 360 video and

Immersive Web

... Fraunhofer Fokus presented their 360 streaming solution

... https://www.w3.org/2018/01/04-me-minutes.html

<kaz> scribenick: kaz

Chris: In this morning's session,

we'll hear a series of updates

... on how web standards are used by the various TV standards

bodies.

... We also have an item to cover the Media Timed Events TF.

... In the afternoon, we'll be looking into Media Incubations by

CG.

Mark_Vickers: This morning is about

the activity of the IG.

... The afternoon is about related groups, and the end, planning

for the next stage.

https://docs.google.com/presentation/d/19mNdvTntqywnlvBpQPrI4ZQ4fktyNo7lhK5ysB7KGbM/edit

Chris: Today and tomorrow we have

this Interest Group, of course, also Timed Text WG, and WebRTC

WG.

... On Wednesday there are breakout sessions. Some of the proposed

topics that may be of interest to us include Machine Learning for

the Web, Gaming on the Web, Video Metadata Cue Support Including

Geolocation, and WebGPU.

... On Thursday and Friday there's quite a number of media-related

groups: Audio WG, Second Screen WG/CG, Immersive Web WG,

... Audio Description CG, Color on the Web CG,

... HTTPS in the Local Network CG, which is looking at how to do

secure communication between devices on a local network.

https://docs.google.com/presentation/d/1EzKKeXVHLNwk87nc132M93sJkJIRitiu3IDcqF_zlWU/edit

Chris: Mark mentioned earlier the

monthly calls we have.

... There are two purposes for these. The first is to bring us

together as a community,

... so that we're talking with each other more. But more

importantly, they're about reviewing current incubations,

... and identifying requirements for potential new Web APIs.

... We've had 10 monthly calls since TPAC 2017.

... They happen on the first Tuesday of each month.

... Mounir presented the Media Capabilities API, which is a way to

query for media output device capabilities,

... such as resolution, supported media formats, encodings,

etc.

... requirements for Media Source Extensions, etc.

... The IG made contributions to this, in particular regarding CMAF

support.

... Input was asked for HDR related capabilities.

... This is still an open issue, something the IG could contribute

to.
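
As an illustration of the kind of query described here, a minimal TypeScript sketch follows. The `decodingInfo()` method and the `supported`, `smooth` and `powerEfficient` result fields come from the Media Capabilities API; the helper names `mseVideoConfig` and `pickRendition`, the locally declared types, and the codec string used in the usage note are assumptions for illustration only. HDR-related fields are left out, since that was still an open issue.

```typescript
// Simplified local copies of the Media Capabilities shapes, declared here so
// the sketch compiles without DOM typings. Only a subset of fields is shown.
interface VideoConfig {
  contentType: string; // MIME type with a codecs parameter
  width: number;
  height: number;
  bitrate: number;     // bits per second
  framerate: number;   // frames per second
}

interface DecodingConfig {
  type: "file" | "media-source";
  video: VideoConfig;
}

interface DecodingResult {
  supported: boolean;
  smooth: boolean;
  powerEfficient: boolean;
}

// Anything exposing decodingInfo(), e.g. navigator.mediaCapabilities in a browser.
interface CapabilitiesProvider {
  decodingInfo(config: DecodingConfig): Promise<DecodingResult>;
}

// Hypothetical helper: describe a video rendition that would be fed through MSE.
function mseVideoConfig(
  codecs: string,
  width: number,
  height: number,
  bitrate: number,
  framerate: number
): DecodingConfig {
  return {
    type: "media-source",
    video: {
      contentType: `video/mp4; codecs="${codecs}"`,
      width,
      height,
      bitrate,
      framerate,
    },
  };
}

// Hypothetical helper: return the first candidate reported as both
// supported and smooth, or undefined if none qualifies.
async function pickRendition(
  provider: CapabilitiesProvider,
  candidates: DecodingConfig[]
): Promise<DecodingConfig | undefined> {
  for (const candidate of candidates) {
    const info = await provider.decodingInfo(candidate);
    if (info.supported && info.smooth) {
      return candidate;
    }
  }
  return undefined;
}
```

In a browser, a call might look like `pickRendition(navigator.mediaCapabilities, [mseVideoConfig("avc1.640028", 1920, 1080, 5000000, 25)])`, with candidates listed in order of preference.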

... As mentioned before, we had a call on 360 degree video and

WebXR.

... Items that came up there included the need for a secure media

path for 360 degree video,

... and issues with MSE, e.g., low latency buffering, reliability

of replacing segments in a single SourceBuffer, and switching

between multiple SourceBuffers.

Barbara: Are these issues raised on the CG side?

Chris: Yes, for incubation and future

discussion.

... We touched on some issues with MSE, bringing the requirements

to the WICG.

... This call also identified need for projection metadata, link up

with work on MPEG OMAF, for rendering on the web.

... We had a call about scalable media delivery on the web, with

HTTP server push.

... My colleague Lucas Pardue discussed issues around large scale

live media distribution.

... We have an internet draft at IETF on HTTP over multicast

QUIC

... Using multicast as an alternative service to unicast delivery.

... If the client has the capability, it can switch over.

... We're interested in unidirectional delivery of HTTP

resources,

... and identified the need for a way to notify a web app of push

delivery from the server.

... The last call we had, this month, was on WebRTC for video

streaming.

... We had Peter Thatcher from the WebRTC working group on the

call.

... This identified a couple of things.

... Coming back to MSE again, the need for low latency, and ideas

around per-frame injection, buffer delay controls,

... and interpolation controls so that, for example, you could

maintain a continuous audio stream while dropping video frames

until network conditions allow the video to resume.

... Also the use of QUIC transport with MSE.

... This is currently not accepted as an issue by the WebRTC WG.

... The IG members might want to say this is important for

us.

... The next one to mention was Web Platform Tests and CE devices, presented by Fraunhofer FOKUS.

... They have contributions to make to the Web Platform Tests, such

as the test runner for CE devices, test dashboard and test

harness.

... This was contributed by the CTA WAVE project.

... This is something to follow up on during the week.

... And one final issue is frame accurate seeking in HTML5

video,

... the use case being browser-based video editing and

production.

... Various other use cases as well, such as editing video from

security cameras.

... From a Web Platform viewpoint, there's no easy way to step or

seek video frame by frame.

... It's quite difficult to control very precisely using the

existing mechanism.

... Solutions implemented often involve rendering the frames as

static images server side.
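
To make the difficulty concrete: the only seek control on the media element is `currentTime`, expressed in seconds, so an application has to convert frame numbers to times itself. A minimal TypeScript sketch of that conversion follows, assuming a constant frame rate that the application knows out of band (the media element does not expose it). The function names are illustrative, and whether a browser then lands on exactly the intended frame is implementation dependent.

```typescript
// Time in seconds to assign to video.currentTime so that frame `frameIndex`
// (counting from 0) is the one displayed, assuming a constant frame rate.
// Aiming at the middle of the frame's interval avoids landing on a frame
// boundary, where rounding could select the previous frame.
function seekTimeForFrame(frameIndex: number, framesPerSecond: number): number {
  if (Math.floor(frameIndex) !== frameIndex || frameIndex < 0) {
    throw new RangeError("frameIndex must be a non-negative integer");
  }
  if (!(framesPerSecond > 0)) {
    throw new RangeError("framesPerSecond must be positive");
  }
  return (frameIndex + 0.5) / framesPerSecond;
}

// Inverse mapping: the index of the frame whose interval contains the given
// media time, under the same constant frame rate assumption.
function frameAtTime(timeSeconds: number, framesPerSecond: number): number {
  return Math.floor(timeSeconds * framesPerSecond);
}
```

Stepping one frame forward would then be `video.currentTime = seekTimeForFrame(frameAtTime(video.currentTime, fps) + 1, fps)`, where `fps` is the assumed frame rate.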

Eric_Siow: Thanks for introducing the

topics.

... Which group would work on this? A CG? or outside the W3C?

Mark_Vickers: I'm not sure this is just related to MSE.

... There's a related group called WHATWG, which maintains

HTML.

... We can raise issues there using GitHub,

... or possibly initiate work within WICG.

Chris: We could follow the same way

as the Media Timed Event TF,

... i.e., summarize the use cases and clarify the requirements, and

then input to the WICG.

... Somebody on the discussion thread also mentioned the need for

more accurate timing synchronization for subtitle caption

rendering,

... so that captions are shown or removed aligned with scene

changes, which requires frame level accuracy.

... Any comments on MSE extensions?

Kaz: I thought some of the requirements were proposed by NHK several years ago?

<cpn> scribenick: cpn

https://www.w3.org/2011/webtv/wiki/images/3/3f/2018_TPAC_-_WAVE_Update_v2.pdf

Mark_Vickers: There are a number of CE

devices, TVs, games consoles that use HTML5 as an app

environment.

... PCs and mobile devices also use it. But unlike these, there's a

lot less consistency.

... Fragmentation of streaming formats, device capabilities,

straying from standard conventions on the web.

... It's hard to write one HTML5 media app to run across all smart

TVs. We want to bring them more in line, as with PC and

mobile.

... We're writing three specs. The Web Media API, which is focusing

on W3C specs. A Content spec, focusing on MPEG, and work referenced

in ETSI and IETF.

... There are dozens of specs.

... Bringing them together is a playback spec.

... [shows list of WAVE members]

... The content specification uses CMAF, an MPEG spec, brought

together all the major parts of the industry, one standard

format.

... It's built around fragmented MPEG-4, similar to DASH, Apple

added CMAF, committed to a common format.

... MPEG does CMAF and defines standard profiles.

... CMAF has extensibility for bindings outside of MPEG.

... WAVE is building new media profiles.

... The first content spec has been written.

... [shows list of profiles]

... There'll be an amendment to the spec later this year.

... AC3 is defined at ETSI.

... WAVE adds a level of program to support live linear content.

Where you change from one program to another, how to tie those all

together into one.

... Focusing on the HTML side, this spec is available as a free

download.

... We didn't want to stray from the W3C world, so we formed a Web

Media API CG.

... This is taking a look at what specs are supported across all

the four main browser codebases.

... If you're a CE product, these specs are supported across PC and

mobiles, so should be supported too.

... We're also providing a test suite and a best practice document

for writing web apps.

... The snapshot spec was published in December 2017. We have an

agreement with W3C to co-publish.

... In addition, we've built a test suite, built on top of Web

Platform Tests.

... We're trying to address second and third party browsers. A

first party browser is Apple and Webkit, Google and Chrome.

... Three of the four codebases are open source. A second party

browser is taking a snapshot of one of these, integrating into a

TV.

... Third party browsers are where a manufacturer hires another

company who integrates the code.

... You need to specify what's in this browser. Can't just ask for

HTML5.

... A test suite, to see if this keeps up with the mainstream

browsers.

... Snapshot 2018 to be published in December, also available from

CTA.

... First version of development guidelines, the best practice

document.

... The Device Capabilities spec will deal with issues not

standardised or tested before. It asks if the APIs run well in

realistic conditions.

... Ad splicing, regional profile issues, long term playback

stability. Browsers may not run more than a day due to memory

leaks, for example.

... We are creating content streams for testing, using HTML APIs to

bring a higher level of quality.

... The test approach is self compliance, there's no logo

programme.

... The HTML5 API Test Suite is based on Web Platform Tests.

Modified the test runner to work on CE devices, using LRUD

keys.

... Will talk to WPT people about contributing that back to the

project.

... WPT is primarily oriented to first-party browser

developers.

... If I take this to a second or third party, what do I do with

test failures? What we want is to compare what they start with,

with what they end up with, to find errors introduced during

integration.

... In the test report, you can select which base browser to use,

to make sure your port is correct.

... Can select all 4 browsers, see what's supported across

them.

... It's available now, you can try it. Can report bugs into

GitHub.

Igarashi: There's no certification programme?

Mark_Vickers: That's right.

Igarashi: Minimum requirement is for APIs supported across all browsers.

Mark_Vickers: You can run the test associated with the browser you're developing from, or across all browsers.

<kaz> scribenick: kaz

https://www.w3.org/2011/webtv/wiki/images/7/76/W3C_Media%26Ent_IG_HbbTV_Update_2018.pdf

Chris: This is a recent update on

HbbTV, showing changes in the latest version.

... I'm not active within the HbbTV consortium myself, so this

information is from my colleagues.

... I'll skip over the use cases and basic features, I assume we

all know these.

... HbbTV is deployed to 35 countries, mainly Europe, over 300 apps

in use, 44 million devices.

... The latest revision is HbbTV 2.0.2.

... It includes HDR video, both PQ10 and HLG, as well as high frame

rate, e.g., 100Hz/120Hz.

... It also has next-generation audio, both AC-4 and MPEG-H.

... In terms of relationship to upcoming Web standards, HbbTV has

capability detection for HDR.

... There's an overlap with W3C Media Capabilities API, something

we should look closer at and send feedback if needed.

... HbbTV is likely to add MSE, and from a BBC point of view, we're

interested in the Web Media API spec.

... It identifies which Web APIs are stable, could it be referenced

by HbbTV in future?

... The BBC demonstrated dynamic content substitution at IBC

2018.

... There's a blog post available that goes into detail, with a video

explainer.

... This is useful for personalization of program content and

targeted advertising.

... Operator Applications (OpApp) are HbbTV applications that

provide access to live channels and on-demand functionality,

replace the in-built UI from the set-top box.

... OpApp use cases, launch using the green button.

... OpApp status: it's in operation in some countries.

... One use of web standards of interest in OpApps is Web

Notifications.

... OpApps need a way to send time-critical messages to the user,

e.g., reminder for a scheduled event.

... Here are some links to the specs and presentations for more

detail.

... Any questions/comments?

<cpn> scribenick: cpn

https://www.w3.org/2011/webtv/wiki/images/4/45/RecentAchievementHybridcast_TPAC20181022.pdf

Masaya_Ikeo: I'll cover deployment

status and new APIs for Hybridcast ConnectX.

... ConnectX is a service to connect TV to mobile phone.

... Deployment status: up to 2018, we've published Hybridcast 2018

and operational guidelines.

... The number of Hybridcast receivers is increasing. As of August 2018 we have over 18 million Hybridcast receivers.

... In Sapporo, one of the two satellite broadcasters is operating

a daily service.

... New APIs for Hybridcast ConnectX. This is protocol version 2, published in

September 2018.

... Looking at V1, standardised inter-device protocol and inter-application control

APIs.

... DIAL and Websocket protocol for inter-device

communication.

... Main features in V2 are APIs for Hybridcast ConnectX, to

control the TV from companion devices.

... We standardised TV set information and tuning APIs, and a TV

status API.

... The architecture is a protocol over Websockets.

... The TV set has a RESTful HTTP API server. The companion device

has an HTTP client.

... Also standardised is a JavaScript extension API to give to a

companion screen HTML app.

... [typical sequence]

... 1. Media Availability API, 2. Channels Info API, 3. Start AIT

API (tune API)

... The companion can change the channel of the TV set and launch a

Hybridcast app (HTML) on the TV set.

... The task status API gets the status of a start AIT API

request.

... The receiver status API gets the status of the HTML5 browser,

whether it's running or not, the number of communicating apps over

Websockets.

... The use case is to communicate between a TV set and companion

devices, and to be able to move smoothly from the mobile app to the

programme on TV.

... A service may want to send a push notification, received on

the mobile phone.

... The mobile phone app launches the associated app to control the

TV set.

Mark_Vickers: The companion app gets the channel information from the TV, does it also get programme information from the TV or over broadband?

Masaya_Ikeo: It's just channel

information

... You can start watching VOD content initiated from a companion

app.

... The user selects a video, sends channel and AIT information to

the TV set, which tunes to the channel and launches the associated

Hybridcast app. This plays video in the MPEG-DASH player.

... This is based on the managed broadcasting environment,

important for Japanese broadcasters.

Igarashi: It seems similar to Chromecast use cases

Masaya_Ikeo: We specify the channel to tune from AIT information

Tomoyuki: You control the tuner on the TV on the set top box. Where is the Tuner API, in the companion or in the TV?

Masaya_Ikeo: We have to control the

tune and AIT information from the companion device.

... Other updates. Hybridcast video uses MPEG DASH with MSE.

... Standardised in 2017.

... Compatibility tests of the MSE library.

... TV tests need to pass to get the Hybridcast logo.

... Operational guidelines from September 2018. Supports BT.2100

and BT.2020. And BT.709 color space will be deprecated.

... Test for HDR handling performed in October 2017.

... The Hybridcast app requests HDR (HLG) video on HDR enabled

TVs.

... Operational guideline highlights the need for difference of

colour space between CSS and HDR video.

... [shows video] Smooth connection from news alert to live

news.

Igarashi: Will the TV launch the application if it's in standby?

Masaya_Ikeo: We'd like to do that, but it currently needs to be turned on, depends on the TV.

Kazuhiro_Hoya: The guidelines suggest that manufacturers should handle that, but it depends on each manufacturer if it's implemented.

Tomoyuki: Does this kind of notification require broadcaster specific notification system, or can it use Google or Microsoft push services, for example?

Masaya_Ikeo: It can do both.

Tomoyuki: I hope the relationship between W3C Push API and this system is clarified.

Mark_Vickers: This is showing a companion application with the TV. Is it used with applications that run on the TV directly, or only hybrid apps?

Igarashi: Hybridcast has started this for applications on TV, then focus on mobile centric use cases. It does both.

<kaz> https://www.w3.org/2011/webtv/wiki/images/9/96/Korean_FBMF_Introduction_to_W3C.pdf Youngsun's slides

Youngsun: I'll introduce our IBB

standard, Integrated broadcast and broadband.

... FBMF's mission is to define the specification for future Korean

broadcasting.

... It publishes two main specs: the transmission and reception for

terrestrial UHD TV, and the terrestrial UHD IBB service.

... Another mission is to cooperate with the government. After we

develop the specs, we publish our standards in the TTA.

... We have several members. Four major Korean broadcasters, and

Samsung and LGE as manufacturers.

... Also universities, and solution providers.

... The organisation has four sub-committees.

... The IBB subcommittee develops the standards for web

applications,

... another focuses on hybrid radio.

... The IBB service is very similar to HbbTV. IBB references HbbTV

2.0 and ATSC 3.0.

... Most of the web standards are referenced from HbbTV 2.0.

... The main goal of the IBB is to provide an interactive

service,

... also companion screen services.

... The IBB service specs consist of three major parts.

... Part 1 is common technology. Part 2 is application signaling,

Part 3 is the main part, the browser environment based on HbbTV

2.0.

... There are some extensions. HbbTV doesn't include MSE and EME,

so we've extended to include those.

... Part 4 is the companion screen service, based on HbbTV 2.0,

also defined in ATSC 3.0, IBB is a mix of these.

... Part 5 is the service guide, most of this came from ATSC

3.0.

... Part 6 is ACR.

... The IBB service is now in service in Korea, in particular for

the Pyeongchang Olympic games.

... KBS, MBC, SBS are now serving IBB.

... This is the first time presenting to W3C, I hope it's helpful

to understand the current situation in Korean broadcast.

Andreas: Is the IBB standard available in English?

Youngsun: Sorry, only Korean.

Mark_Vickers: I'd be interested to see the detailed list of API requirements.

Youngsun: Most of that is written in English, so you should be OK.

Igarashi: Is the IBB standard currently deployed? is FBMF working on extensions?

Youngsun: The spec is frozen and deployed, we're monitoring HbbTV and ATSC and we'll update if there's a chance to do so.

Igarashi: Chris mentioned HbbTV potentially using WAVE. Would that apply to IBB too?

Youngsun: We haven't discussed that

yet.

... We have an open mind to adopt new technologies where

possible.

Toshihiko_Yamakami: Do you have an official liaison with HbbTV?

Pierre: What's the long term roadmap for subtitles and captions?

Youngsun: We're using IMSC 1, no specific plans regarding that.

https://www.w3.org/2011/webtv/wiki/images/c/cf/ATSC-Activity-Report-2018-10-01_r1.pdf

Giri: I'm involved in ATSC 3.0

standardisation.

... ATSC is the standards body responsible for over the air

broadcast standards for US, also in Korea, Mexico, other areas in

Asia.

... The official liaison between ATSC and W3C is Mike Dolan, but he

couldn't be here.

... Some of this is work in progress.

... There have been some organisational changes. Work on ATSC 1.0

for many years, now subsumed, the technology is mature and getting

replaced.

... We've also taken the group developing the runtime specification

to ATSC 3.0, fully adheres to W3C standards, moved to S38 WG.

... Following W3C specs in TTML and IMSC 1.

... [shows org chart for ATSC]

... S38 is one of the critical areas for interaction between W3C

and ATSC.

... There have been a couple of new standards published since last

year. Region Service Availability and Dedicated Return

Channel.

... Critical for interactivity is A/331.

... Other, security specs, A/360 deals with requirements for

application signing, TLS requirements for broadband-connected

receivers. Also DRM requirements.

... W3C has the Web Media API snapshot, S38 analysed this, A/344

will incorporate it.

... Snapshotting web APIs has not been easy to do, so we're now

able to reference Web Media API.

... We also have implementation teams, basically teams that assist

developing best practices for specific deployments.

... Interactivity and personalisation is important.

... Educational activities.

... We have several Board members who are W3C participants.

... We have a monthly newsletter.

... Right now, we're developing recommended practices around A/331,

how content will be delivered and accessed from HTML

applications.

... We are looking into EME compatibility, need to blaze some new

ground ahead of W3C.

... We can't guarantee a receiver will have internet access, so we

developed a broadcast way to deliver.

Andreas: You have a personalisation team?

Giri: ATSC personalisation is focused

on things you may expect, system settings, accessibility.

... Working with DASH-IF on ad insertion.

... The ATSC model is to allow a broadcaster to develop an

application that's bound to its service.

... An application can be sent in over-the-air broadcast. That can

deal with some of the personalisation aspects related to rendering

of the content.

https://www.w3.org/2011/webtv/wiki/images/f/f9/Media_Timed_Events_Task_Force.pdf

Giri: We created a TF this year in

the IG, myself and Chris co-chaired it.

... It's in recognition of problems we see in industry of how to

render interactive media related to streaming media shown in the

browser.

... Existing technologies don't suffice, so what needs to

change?

... TF formed to figure out what the problem is. Goal to provide

input to other incubator groups at W3C.

... A simple example of a media timed event is closed captions.

They are meant to be rendered in a certain way and at a certain

time.

... Not with respect to wall clock time, rather the media

timeline.

... As we know, captions don't display exactly at the time they're

supposed to. There's a lot of best effort involved.

... There's a way to embed captions in media tracks, but we've

moved to have separate tracks.

... Other things can be rendered with respect to a media timeline.

Banner images, audio, static or dynamic overlays.

... These are encompassed by what we call a media timed event. It

contains a payload such that the page can render it at the right

time.

Pierre: Is the requirement only for the client to be able to be told where the timeline is, or is it for embedding the media?

Giri: The DASH approach classifies

events into two categories: in-band and out-of-band.

... In-band events are part of the media track, using the emsg

box.

Pierre: There's also in-band TTML.

Giri: A web page can't generally

handle that without assistance from the media player, to pass the

event up to the application.

... Using TextTrack metadata cue.

... In DASH, can also pass events in the manifest (MPD).

... The JavaScript client first acquires the MPD XML document. It's

a listing of where it can retrieve media segments. Also can provide

event data. This is an out of band event stream.

... These can be handled directly by the web page, doesn't need the

player.
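
A rough TypeScript sketch of what the page has to do with an out-of-band event stream once the MPD has been parsed: convert each event's timing into seconds on the media timeline, then check which events are active at the current playback position. The attribute names (`timescale`, `presentationTimeOffset`, `presentationTime`, `duration`) follow the DASH MPD; the type and function names, and the use of a plain string payload, are assumptions for illustration.

```typescript
// Already-parsed form of an MPD EventStream (XML parsing is out of scope here).
interface MpdEventStream {
  schemeIdUri: string;
  timescale: number;              // ticks per second
  presentationTimeOffset: number; // in ticks
  events: { presentationTime: number; duration: number; payload: string }[];
}

// An event placed on the media timeline, in seconds.
interface ScheduledEvent {
  schemeIdUri: string;
  startSeconds: number;
  endSeconds: number;
  payload: string;
}

// Map event times, which are relative to the start of the containing Period,
// onto the media timeline.
function scheduleEvents(
  periodStartSeconds: number,
  stream: MpdEventStream
): ScheduledEvent[] {
  return stream.events.map((event) => {
    const startSeconds =
      periodStartSeconds +
      (event.presentationTime - stream.presentationTimeOffset) / stream.timescale;
    return {
      schemeIdUri: stream.schemeIdUri,
      startSeconds,
      endSeconds: startSeconds + event.duration / stream.timescale,
      payload: event.payload,
    };
  });
}

// Events whose interval [start, end) contains the given media time.
function eventsActiveAt(
  scheduled: ScheduledEvent[],
  mediaTimeSeconds: number
): ScheduledEvent[] {
  return scheduled.filter(
    (e) => e.startSeconds <= mediaTimeSeconds && mediaTimeSeconds < e.endSeconds
  );
}
```

The application still has to interpret each payload according to its scheme, which is the dependence on the application discussed next.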

... We have a couple of problems with both of these methods.

... MPD eventing puts a dependence on the application, for parsing

the events and executing them, i.e., acting on the event

payload.

... We don't necessarily know what the event contains.

... In-band events are also an issue, particularly emsg events. No

browser passes these up to the application today.

... It's quite controversial, been raised over and over again on

the Chromium developer page.

... We have an issue where in-band events are ignored. Or in a

caption track metadata cue, may not be passed to the application in

a timely manner.
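
Since in-band emsg events are not surfaced by the browser, one option for an application using MSE is to parse the boxes out of each segment itself before appending it. A minimal TypeScript sketch of such a parser follows, based on the emsg box layout (versions 0 and 1). It assumes the input is exactly one complete box with a 32-bit size field, and that the strings are ASCII; the type and function names are illustrative.

```typescript
interface EmsgBox {
  version: number;
  schemeIdUri: string;
  value: string;
  timescale: number;
  presentationTimeDelta?: number; // version 0: ticks relative to the segment start
  presentationTime?: number;      // version 1: ticks on the media timeline
  eventDuration: number;
  id: number;
  messageData: Uint8Array;        // opaque payload, interpreted per scheme
}

function parseEmsg(box: Uint8Array): EmsgBox {
  const view = new DataView(box.buffer, box.byteOffset, box.byteLength);
  const size = view.getUint32(0);
  const type = String.fromCharCode(
    view.getUint8(4), view.getUint8(5), view.getUint8(6), view.getUint8(7)
  );
  if (type !== "emsg") throw new Error("not an emsg box: " + type);
  if (size !== box.byteLength) throw new Error("expected exactly one complete box");

  const version = view.getUint8(8); // followed by 3 bytes of flags
  let pos = 12;
  const readU32 = (): number => {
    const v = view.getUint32(pos);
    pos += 4;
    return v;
  };
  // Null-terminated string, read byte by byte (ASCII assumed).
  const readString = (): string => {
    let s = "";
    while (pos < size && view.getUint8(pos) !== 0) {
      s += String.fromCharCode(view.getUint8(pos));
      pos += 1;
    }
    pos += 1; // skip the terminator
    return s;
  };

  if (version === 0) {
    const schemeIdUri = readString();
    const value = readString();
    const timescale = readU32();
    const presentationTimeDelta = readU32();
    const eventDuration = readU32();
    const id = readU32();
    return { version, schemeIdUri, value, timescale, presentationTimeDelta,
             eventDuration, id, messageData: box.subarray(pos, size) };
  }
  if (version === 1) {
    const timescale = readU32();
    const high = readU32();
    const low = readU32();
    // 64-bit value; exact only up to 2^53 when held in a number.
    const presentationTime = high * 4294967296 + low;
    const eventDuration = readU32();
    const id = readU32();
    const schemeIdUri = readString();
    const value = readString();
    return { version, schemeIdUri, value, timescale, presentationTime,
             eventDuration, id, messageData: box.subarray(pos, size) };
  }
  throw new Error("unsupported emsg version: " + version);
}
```

This only recovers the event; firing it at the right point on the media timeline is still left to the application's own timers.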

Pierre: What's the expectation if you don't know what's in there?

Giri: That's part of the problem. The

HTML way is using text. The text could be some proprietary protocol

between content provider and web application developer.

... emsg is opaque data. There may have been some issues there with

what to do with the data when you encounter it. But you could treat it as

metadata.

Eric: This is exactly what we do in

Webkit.

... We have proposed extensions to DataCue, so we pass up enough

context to allow a web app to recognise the data. There's a

namespace, e.g., ID3, MPEG4 metadata.

... There's a key, and data of type 'any' in the IDL. It's up to

the web app to interpret it.
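
A small TypeScript sketch of how a web app might consume cues of the kind described here: each cue carries a namespace, a key, and untyped data, and the app routes on the first two and ignores anything it does not recognise. The `MetadataCue` shape is modelled on the description above rather than taken from any IDL, and the `CueDispatcher` class and the namespace and key strings in the usage note are assumptions for illustration.

```typescript
// Hypothetical cue shape, following the description in the minutes:
// a namespace identifying the metadata format, a key, and data of any type.
interface MetadataCue {
  startTime: number;
  endTime: number;
  type: string; // namespace, e.g. one identifying ID3 metadata
  value: { key: string; data: unknown };
}

type CueHandler = (cue: MetadataCue) => void;

// Routes cues to handlers registered for a (namespace, key) pair.
class CueDispatcher {
  private handlers: { [id: string]: CueHandler } = {};

  on(type: string, key: string, handler: CueHandler): void {
    this.handlers[`${type}|${key}`] = handler;
  }

  // Returns true if a handler recognised the cue, false if it was ignored.
  dispatch(cue: MetadataCue): boolean {
    const handler = this.handlers[`${cue.type}|${cue.value.key}`];
    if (handler === undefined) {
      return false;
    }
    handler(cue);
    return true;
  }
}
```

For example, an app interested in ID3 titles could register `dispatcher.on("org.id3", "TIT2", showTitle)`, where `showTitle` is the app's own function, and call `dispatch` for each cue delivered on a metadata track. Unrecognised data stays opaque to the app.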

Pierre: The source of that doesn't have to be an emsg box, could be anything.

Eric: Yes. It works with HLS, ID3.

Pierre: In other standards, I see a generic mechanism. How do you prevent someone putting video in there?

Eric: You can't.

Giri: You can't prevent resources from being inlined, could be an image.

Pierre: That's the danger.

Eric: ID3 allows you to put images, as a feature.

Mark_Vickers: I don't see the concern. It's an application building block, so has a large variety of use cases.

Pierre: The implicit concern is that instead of trying to seek consensus on what that data is, you end up with a black box.

Mark_Vickers: Some things will be

standardised, and more over time.

... Some will be application specific, and that's fine too. Same as

any network protocol.

Giri: In the task force this year, we

surveyed existing methods. DASH being the impetus for this

work.

... In HbbTV, DASH events are converted to DataCue using TextTrack

carriage. We analysed this in ATSC.

... W3C methods, TextTracks, WebVTT.

... There was a DataCue that could be potentially used as

correspondence to TextTrackCue, but without wide deployment.

... There's also ongoing MPEG work on web interactivity in track

data, define DOM rendering on the media time.

Chris: That's under liaison with W3C, we sought TAG advice.

Giri: It's complicated, because of

the web security model.

... DASH-IF is working to define an eventing API, trying to give

some meaning to event data using scheme id.

... Also rendering of timed metadata, and what kind of guidelines

make sense.

... The HTML spec has the "time marches on" algorithm, which seems

to allow up to a half second between receiving the cue and passing

it up.

... Delay is problematic for ad insertion.

Pierre: Also a problem with the binary approach, hard to have requirements on timing.

Nigel: HTML spec says up to 250 ms, but implementations vary. Firefox is within a few milliseconds, Chrome up to 250 ms later.

Eric: Did you file a bug?

... File a PR saying it's not adequate. It's not just a spec

issue.

Giri: HbbTV sent a liaison to W3C

about 5 years ago saying this is a problem for live media.

... Filing an issue is enough.

Eric: Things have changed, different people working on the spec. Also conditions in the market are different, but complaining won't fix it.

Mark_Vickers: Filing a PR to change the spec is a great idea.

Giri: Live media wasn't considered a key use case for HTML media back then.

Nigel: There's an interesting thing for the IG here, but what number should we ask for here? I could ask for 20 milliseconds to get to frame accurate, but this may be too much.

Pierre: If synchronising to audio, 20 ms may be the upper limit.

Giri: We've created a white paper, in

the TF GitHub repo

... https://w3c.github.io/me-media-timed-events

... We made reference to the BBC guidelines for caption

rendering.

... We've made one solid recommendation to WICG, resurrecting

DataCue specifically for emsg.

... There are some open issues relating to the ongoing work that we

didn't try to solve this year.

... The DASH-IF work needs to come to some resolution before we see

if it has an impact on W3C specs.

... Also CMAF.

... There are some ways forward. We could publish the existing

document as an IG note, as a snapshot, and continue to revise

it.

... Continue collaboration between the TF and the WICG.

... [shows the use case and requirements document]

Mark_Vickers: Regardless of what we do in addition, we should publish this as a note.

Giri: As Nigel mentioned, there's a big discussion in the M&E IG GitHub on frame accurate sync. There are several other related use cases there.

Mark_Vickers: In the Use cases Section, is there a level of time described there?

Giri: We didn't get into that, but we can certainly do it.

Chris: Should we continue to include the MPEG carriage in web resources draft? It has security concerns, and folks not so active in the TF.

Mark_Vickers: I think it's fine, just referencing it here?

Giri: [shows WICG submission]

<ericc> https://discourse.wicg.io/t/media-timed-events-api-for-mpeg-dash-mpd-and-emsg-events/3096

Giri: If the CMAF work gets to a point where we need something from W3C, we can do that separately.

Mark_Vickers: DataCue was included in HTML5, but it seems not to be there now. Why has that happened? Are people not certain this is needed? We have transport messages in CMAF, which is more forward looking.

Eric: At the time there was a

fundamental disagreement about having a generic way to handle

in-band metadata.

... People at Google thought there should be a different type of

cue for each type of metadata.

... I don't agree with that, as you can put anything you want into

HLS, so you can't have a spec for it.

... At this point I think the right thing to do is for someone to

write a proposal with specific text and send a PR against the

spec.

Mark_Vickers: You said you have an extension of DataCue with extra fields?

Eric: I describe this in my WICG reply. It's been shipping in WebKit for the last 5 years.

Mark_Vickers: From my point of view,

TV has used embedded events for many years.

... It's just a building block where you have a media stream and

want synchronised stuff to happen.

... Usually, it's not a lot of data, just a cue.

... MPEG transport was easy about allowing you to put stuff

in.

... That older world was one-way broadcast, included things you

didn't need, e.g., sending 6 languages at the same time. We don't

want to bring that.

... We want to preserve the idea of media related cues.

Rob: I'm developing WebVMT. I used

the WebVTT structure for geolocation data on the web.

... I'm proposing a breakout session on Wednesday for general video

related cues.

... I invite this group for wider discussion.

... I don't want to destabilise WebVTT, which is in CR status. But

I do want a more general platform support for this and future

formats.

Eric: I'd guess that the spec editors

would be more amenable to a discussion about a generic way to have

timed metadata now than they were before.

... I think it's worth making an effort.

Mark_Vickers: The interactive TV stuff had an application in the client, a game show with questions and answers, or sports programmes with event data. So needs to be open ended.

Pierre: Is there enough experience that we can have concrete requirements, signaling of content, required accuracy? Are we close enough to doing that?

Eric: The requirements document has

more information than I do.

... A concrete step is to create a WICG GitHub repo.

Chris: What's needed to move forward? Do we need support from all 4 browsers at WICG?

Mark_Watson: Not necessarily, just one is enough.

Chris: So, Eric, if you're in support, we can move it forwards.

Mark_Watson: You may need to pester the WICG chairs.

Mark_Vickers: Concrete next steps are

to publish the IG note.

... I want to congratulate the TF on its work.

<nigel> I noted that if we're proposing a new spec for a generic data cue then it's important that for concrete cases the data model is either defined or referenced, so it can be used downstream. It's not about file formats!

Mark_Vickers: Write a submission to WICG for the generic case.

<kaz> [lunch till 2pm]

<scribe> scribenick: cpn

https://www.w3.org/wiki/images/d/d8/Spatial-navigation-tpac2018-media%26entertainment.pdf

Jihye: We've made some progress since

TPAC last year.

... What is Spatial Navigation? It allows two-dimensional focus on

web pages.

... On TVs, you typically use remote controls, and sit far

away.

... The directional arrow keys are more intuitive.

... This API is not just for TV devices, also gamepad input for

other devices.

... There are various kinds of layouts emerging, especially grid

layout.

... The conventional method of navigating between UI elements using

the Tab key is not good.

... Spatial navigation is more intuitive for users.

... From LG's point of view, we focused on this topic because of TV

based web browsers.

... We made our own JavaScript library, as there's no browser

support.

... Use cases include Google image search, Google docs, F7 shortcut

in Firefox. The Vivaldi browser, based on Blink, enables spatial

navigation using JavaScript libraries.

... There are some JavaScript libraries that support spatial

navigation, showing there is need for this.

... We first introduced Spatial Navigation last year. My colleague

Florian and I have written a draft spec, shared in the CSS WG

F2F.

... In September we released a polyfill.

... We collected use cases to define the primitive features.

... Customisation is important, a single space to solve the issues

and avoid regressions.

... A simple way for developers and users.

... It should be easy to predict where focus will go.

... The spec is in two parts, the processing model and spatial

navigation heuristics. It's about selecting the best target from a

given element in the page.

... The basic behaviour is an active element with the focus, if you

press the right arrow, candidates are selected from focusable

elements on the right side.
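
The basic behaviour described above can be sketched roughly as follows. This is a deliberately simplified illustration, not the heuristic from the draft spec: it filters focusable boxes that lie beyond the current element's edge in the pressed direction, then picks the one whose centre is closest. All type and function names here are hypothetical.

```typescript
// Simplified sketch of direction-based focus selection (not the draft spec's algorithm).
type NavDirection = "up" | "down" | "left" | "right";

interface FocusBox {
  id: string;
  x: number;      // left edge
  y: number;      // top edge
  width: number;
  height: number;
}

// True when `to` lies entirely beyond the edge of `from` in the given direction.
function liesInDirection(from: FocusBox, to: FocusBox, dir: NavDirection): boolean {
  if (dir === "right") return to.x >= from.x + from.width;
  if (dir === "left") return to.x + to.width <= from.x;
  if (dir === "down") return to.y >= from.y + from.height;
  return to.y + to.height <= from.y; // "up"
}

// Euclidean distance between the centres of two boxes.
function centreDistance(a: FocusBox, b: FocusBox): number {
  const dx = (a.x + a.width / 2) - (b.x + b.width / 2);
  const dy = (a.y + a.height / 2) - (b.y + b.height / 2);
  return Math.sqrt(dx * dx + dy * dy);
}

// Pick the closest candidate in the requested direction, or null if there is none.
function pickBestTarget(current: FocusBox, focusables: FocusBox[], dir: NavDirection): FocusBox | null {
  let best: FocusBox | null = null;
  let bestDistance = Infinity;
  for (const candidate of focusables) {
    if (candidate.id === current.id) continue;
    if (!liesInDirection(current, candidate, dir)) continue;
    const distance = centreDistance(current, candidate);
    if (distance < bestDistance) {
      best = candidate;
      bestDistance = distance;
    }
  }
  return best;
}

// A 2x2 grid of focusable boxes, for illustration only.
const boxA: FocusBox = { id: "a", x: 0, y: 0, width: 100, height: 50 };
const boxB: FocusBox = { id: "b", x: 120, y: 0, width: 100, height: 50 };
const boxC: FocusBox = { id: "c", x: 120, y: 80, width: 100, height: 50 };
const boxD: FocusBox = { id: "d", x: 0, y: 80, width: 100, height: 50 };
const focusGrid: FocusBox[] = [boxA, boxB, boxC, boxD];
```

With this grid, pressing right from box "a" selects "b", pressing down selects "d", and pressing right from "b" finds no candidate.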

... We also need to consider different behaviour of controlling the

UI in browsers.

... The arrow keys already work for scroll bars and editing text

areas.

... We wanted to avoid harming those default behaviours.

... When the focus is on the scrollable area, the keys will affect

the scroll bar.

... If the scroll bar reaches the end, the focus moves to the next

element downwards.

... We have APIs for customising the spatial navigation behaviour.

The first is window.navigate(direction).

... It finds the best target and moves the focus.

... When the developer uses this API, they can modify the key

combination, e.g., using WASD or shift+arrow keys.
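
As a rough illustration of the key remapping mentioned here, the sketch below maps WASD keys to navigation directions. The mapping function is hypothetical. The commented wiring assumes the draft window.navigate(direction) call named above takes a direction string, which is an assumption about its exact shape.

```typescript
type MoveDirection = "up" | "down" | "left" | "right";

// Map a keyboard key value to a navigation direction, or null if the key is not bound.
function directionForKey(key: string): MoveDirection | null {
  switch (key.toLowerCase()) {
    case "w": return "up";
    case "a": return "left";
    case "s": return "down";
    case "d": return "right";
    default: return null;
  }
}

// In a page, this might be wired up along these lines (assumed call shape):
//
//   document.addEventListener("keydown", (e) => {
//     const dir = directionForKey(e.key);
//     if (dir !== null) window.navigate(dir);
//   });

console.log(directionForKey("w"), directionForKey("D"), directionForKey("x"));
```

Keeping the mapping separate from the event listener makes it easy to swap in another scheme, such as shift+arrow keys.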

... Another API for customising the behaviour. For example, a

Twitter feed in a web page using an iframe element

... Developers can make it avoid sending the focus to the

iframe.

... With grouped elements, if there are some focusable elements in

one group, they are semantically related. In this case moving the

focus is not dependent on moving to the closest.

... In future, we're thinking of using the nav-up etc values in

CSS.

... LG built a polyfill, and some demos.

... Next steps: We are talking to engineers at Google about

implementing this in Blink, also from an accessibility point of

view.

... Also connected to engineers at Vewd.

... And Vivaldi is looking to use Spatial Navigation instead of

their own implementation.

Glenn: You mentioned the nav

properties. I was involved speccing those maybe a decade ago.

... The take-up has been relatively low in industry except maybe in

TV.

... You're defining the auto semantics. Do you see much use made of

the nav-* properties in industry?

... It requires authors to carefully use those functions. Do you

expect to see more use of nav-* properties if you go forward with

this work?

Jihye: Actually, there may be less

use of those properties. It is more intuitive for developers to

design those kinds of layouts and match the elements they want to

give the next focus.

... In that sense, the properties will be useful.

Kaz: You may want to talk to the accessibility people.

Mark_Vickers: This could make navigation more efficient for accessibility related devices. A good motivation to adopt this technology.

Glenn: The problem has been that the

auto semantics is unspecified, so each browser does their own

thing.

... This work, to the extent it lays out a specific algorithm,

helps give more interoperable behaviour.

<tidoust> scribenick: tidoust

Chris: It seems you're making good progress. For us, in the IG, what can we do to help you in that process?

<cpn> scribenick: cpn

Jihye: I know IG members are using

existing solutions for spatial navigation. I want to hear from them

to feed into the standard.

... Does this spec make sense, for example?

Francois: You have two parts to the spec, the CSS part and the API part. Would you leave out the API part, if there were feedback suggesting that?

Jihye: There are features that more

match to CSS than JavaScript, so while making progress with the

spec, I want to do it in one spec - with either CSS or JavaScript

or both.

... If there are connections to other specs, we could talk about

combining.

Mark_Vickers: I guess your preference would be to have integration in browsers. An alternative could be to create an open source implementation to share in the TV industry, that could be useful.

Karen: What percent of navigation on TVs is the remote? Is there now a move to voice?

Mark_Watson: The vast majority is through remote, yes.

https://www.w3.org/2011/webtv/wiki/images/3/3d/TTWG_and_ME_IG_joint_meeting.pdf

Nigel: We want to look at future

requirements in the context of Timed Text.

... TTWG status update. We've been working super hard for 2-3

years, 3 specs all at the same time.

... TTML1 3rd edition, which includes fixes and clarifications from

TTML2.

... TTML2 has a whole bunch of new features. Ask me separately for

details.

... IMSC1.1, that is the profile of TTML2 intended for global

distribution of subtitles and captions.

... For example, Japanese language support is there.

... There's an AC review open until 1st November, encourage your AC

rep to support.

... We have a tight timeline for publication, want to go to Rec on

13th November.

... Thierry put together our timeline, we've stuck to it.

... We have test suites, implementation reports.

... We have WebVTT at CR status, for some time.

... David Singer proposed that we stop working on it, because of

lack of activity.

... That means publishing as a WG Note.

... Unless there's a proposal to pick it up to work on it, that's

what would happen.

Mark_Vickers: WebVTT is implemented, so what does this mean?

Nigel: It's partially

implemented.

... David's proposal is to treat it as a living standard in the

CG.

... The Timed Text CG has been quiet for a number of years. It's

not clear what will happen practically.

Andreas: A question that was difficult to answer in the past, is what VTT version you should rely on.

Mark_Vickers: There was work on EIA 708 compatibility. Was that achieved?

David_Singer: Yes, that's there. It's

in the Apple implementations, but not quite complete.

... Some of the polyfills implement some of it.

Andreas: If it moves from CR to WG Note, does the CR become superseded or obsolete?

David_Singer: Process mandates that

you move from CR to WG Note.

... The Latest version in the doc will point to the Note.

... The CG will continue to exist.

Nigel: One other publication we're

working on is our IANA TTML profile registry.

... Needs work to update based on profiles published since last

update.

... That document is there to allow signalling to processors to

know what kind of processing is required to handle a particular

kind of TTML document.

... These are all formats of timed text, typically used for

subtitles and captions in video.

... We have an erratum in TTML1 about the type registration.

... For TTML2, we're gathering requirements for a 2nd

edition.

... It's a good time to start thinking about requirements for

TTML3.

Glenn: Does the current charter contain either a 2nd edition of TTML2 or a TTML3?

Nigel: I believe so, yes.

... We have a session tomorrow morning on live submission of TTML.

What, if anything, needs to be done on TTML there?

... I don't think anything is needed from a client device

perspective.

https://www.w3.org/2011/webtv/wiki/images/3/3a/Tpac-2018-media-imsc-on-mdn.pdf

Andreas: IMSC is a stable, mature

standard, widely used. It's a web technology even though not

implemented in the browser.

... It's not in the scope of the specs to guide users, so we need

more approachable information for web developers.

... We have created tutorials on MDN, a basic introduction to

IMSC.

... We're taking an interactive approach to teaching web

developers, with interactive examples.

... You can test out the available features.

... Next steps are to set up a reference section to document the

attributes, expand the tutorials.

... We need help with this.

Pierre: MDN has been an essential part of helping people author HTML, the goal here is to help people do similar with IMSC.

<nigel> Glenn: It's worth pointing out that the presentation uses the polyfill that Pierre largely wrote.

https://www.w3.org/2011/webtv/wiki/images/2/2b/Tpac-2018-media-imsc-in-browser.pdf

Andreas: IMSC is a stable deployed

caption standard with an active community.

... The TextTrack API is generic and format independent, but only

WebVTT is implemented.

... Can we define a generic cue format?

... We end up with something very similar to what's defined in

WebVTT, TTML, IMSC.

... If we look ahead, there's not been a lot of effort in the

browser community.

... We had a subtitle technology conference at IRT in Munich, and

also a user meeting at IBC.

... We want to discuss what the role of the IG could be? Document

use cases, requirements, and gaps?

Mark_Vickers: Could this be a joint TF between the two groups?

Andreas: I want to see if there's an interest in the idea.

Nigel: The child element of the video that specifies that the track might require IMSC.

Mark_Watson: If it's native, the text could be available to accessibility tools.

Pierre: Also a way to have user preferences.

Mark_Watson: We find it convenient to allow users to set a preference in our service, so we don't want a browser preference.

Jer_Noble: That's not going to fly.

We have to have a way to allow users to override.

... We have a requirement that we have to allow users to

override.

Nigel: We have different constituencies, need something that works well for everyone.

Pierre: An actionable item is to have

this discussion.

... [discussion of precedence of overrides]

Eric: There's a need to define what native support means.

Nigel: It's not just about the

presentation of the IMSC subtitles themselves, but the UX of the

player more broadly.

... There's a set of priorities for UI elements for a video

screen.

Chris: Next steps, an IG task force?

Mark_Vickers: We'll document the case for a TF.

https://www.w3.org/2011/webtv/wiki/images/f/f0/Tpac-2018-media-subitles-in-360.pdf

Andreas: We are documenting

signalling of access services, needed for decent user

interfaces.

... IMAC is a European project with several partners, including

Motion Spell, RNIB in the UK.

... One part of the effort is subtitles, readability, text that is

comfortable to use.

... Three different approaches. One is to always place the

subtitles in the same place in the field of view.

... Another way that uses speaker identification, requires

knowledge of the space you're navigating. Here we see the subtitles

with arrows that show where the speaker is.

... You need to know where the speaker is located in 360

space.
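
The arrow approach can be illustrated with a small sketch that decides which way an arrow should point, given where the viewer is looking and where the speaker is. This is only an illustration: the angle convention (degrees, increasing to the viewer's right), the field-of-view parameter, and the function names are all assumptions, not part of any presented design.

```typescript
// Shortest signed angle from `fromDeg` to `toDeg`, in the range [-180, 180).
// Positive means the target is to the right under the assumed convention.
function signedAngleDelta(fromDeg: number, toDeg: number): number {
  return (((toDeg - fromDeg) % 360) + 540) % 360 - 180;
}

type SpeakerArrow = "left" | "right" | "none";

// Decide whether to show an arrow towards the speaker.
// No arrow is shown when the speaker is already inside the horizontal field of view.
function arrowFor(viewerYawDeg: number, speakerAzimuthDeg: number, horizontalFovDeg: number): SpeakerArrow {
  const delta = signedAngleDelta(viewerYawDeg, speakerAzimuthDeg);
  if (Math.abs(delta) <= horizontalFovDeg / 2) return "none";
  return delta > 0 ? "right" : "left";
}

console.log(arrowFor(350, 10, 90)); // speaker 20 degrees away, inside a 90 degree view
console.log(arrowFor(0, 120, 90));  // speaker well to the right
console.log(arrowFor(0, 270, 90));  // speaker to the left (shortest way round)
```

The wrap-around handling matters: a viewer looking at 350 degrees and a speaker at 10 degrees are only 20 degrees apart, not 340.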

... Another option is to use a fixed position. So you need to

position the subtitle correctly in space.

... To use IMSC for that, it would need to be standardised

somewhere.

... Is doing a gap analysis in scope for the M&E IG, or

possibly start a TF?

Chris: Have you talked to people in the Immersive Web group?

Andreas: We discussed at TPAC last year, they want to see some implementation, then bring it back to them.

Chris: It clearly fits multiple groups' interests, Immersive Web, TTWG, MEIG.

Mark_Vickers: Historically we haven't

done much in VR.

... The other group may have the ability to bring the correct

requirements, or bring those people in.

... Would this be the group to see where the requirements are, and

then look to other groups to see where these may be addressed?

David_Singer: Everybody is talking

about it, VRIF, 3GPP, not necessarily at MPEG.

... There's a tension between user flexibility for where to look,

and where the audio's coming from.

Igarashi: Is that related to the previous discussion on native support?

Andreas: We could invite people from VRIF, other groups, have an IG call.

Chris: That's a good next step for this.

Nigel: TTWG is interested to know if

there are additional styling requirements coming up.

... One from the BBC is for news captions using a particular visual

style. You can almost duplicate that style using CSS and CSS

animations.

... But not all the CSS features are available in TTML now.

... The reason for making the client do that is to make it more

accessible.

... Audio features, 3D stereoscopic display in TTML.

... Are there other requirements?

Glenn: I have some Karaoke features to add.

Nigel: HDR and WCG.

Chris: There's a Color on the Web CG

meeting for this on Thursday.

... [discussion of perceived depth]

<kaz> https://docs.google.com/presentation/d/115EDI5pmviCQRfW3IDXKuSJERxYjkrAsZXkTLRwr9FE/edit Mark Watson's slides

Mark_Watson: There are three things

in incubation in WICG related to EME.

... Persistent usage record, how keys are used and when. This was

in v1 but there were no interoperable implementations.

... We expect there to be implementations soon, Chrome and Edge

have announced intent to implement.

Mark_Vickers: This enables important end user features.

Mark_Watson: It allows us to do

auditing of concurrent streams. Users should only be able to watch

a number of concurrent streams in one account.
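
As a sketch of the auditing idea, the code below computes the peak number of overlapping streams from a list of usage records. The record shape (a stream identifier plus first and latest decrypt times) is a hypothetical simplification for illustration, not the format defined by any spec.

```typescript
// Hypothetical, simplified usage record: when a stream's keys were first and last used.
interface UsageRecord {
  streamId: string;
  firstDecryptMs: number;
  latestDecryptMs: number;
}

// Sweep over start/end events to find the peak number of simultaneously active streams.
function maxConcurrentStreams(records: UsageRecord[]): number {
  const events: Array<[number, number]> = [];
  for (const r of records) {
    events.push([r.firstDecryptMs, 1]);
    events.push([r.latestDecryptMs, -1]);
  }
  // Sort by time; at equal times, ends (-1) come before starts (+1),
  // so back-to-back streams are not counted as overlapping.
  events.sort((p, q) => p[0] - q[0] || p[1] - q[1]);

  let active = 0;
  let peak = 0;
  for (const ev of events) {
    active += ev[1];
    if (active > peak) peak = active;
  }
  return peak;
}

// Illustrative records only.
const sampleRecords: UsageRecord[] = [
  { streamId: "A", firstDecryptMs: 0, latestDecryptMs: 100 },
  { streamId: "B", firstDecryptMs: 50, latestDecryptMs: 150 },
  { streamId: "C", firstDecryptMs: 100, latestDecryptMs: 200 },
  { streamId: "D", firstDecryptMs: 120, latestDecryptMs: 130 },
];
const accountLimit = 2; // assumed per-account limit
console.log(maxConcurrentStreams(sampleRecords) > accountLimit);
```

Here streams B, C and D overlap around the 120 to 130 ms mark, so the peak is 3 and the assumed limit of 2 is exceeded.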

... HDCP detection, secure enforcement is done by the DRM.

Important to detect what level of HDCP is supported.

... You avoid a scenario where you request 4K keys and then find

out you can't support it.
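
A minimal sketch of how a player might use a detected HDCP level before requesting keys: it picks the highest rendition whose HDCP requirement is met. The rendition ladder, the requirement values, and the modelling of HDCP versions as plain numbers are all assumptions for illustration; how the available level is obtained is outside this sketch.

```typescript
// Hypothetical rendition with an HDCP requirement (null means no requirement).
interface HdcpRendition {
  label: string;
  height: number;
  minHdcpVersion: number | null;
}

// Pick the tallest rendition that can be played at the available HDCP level.
// `availableHdcp` is null when no HDCP is available at all.
function highestPlayable(ladder: HdcpRendition[], availableHdcp: number | null): HdcpRendition | null {
  let best: HdcpRendition | null = null;
  for (const r of ladder) {
    const allowed =
      r.minHdcpVersion === null ||
      (availableHdcp !== null && availableHdcp >= r.minHdcpVersion);
    if (allowed && (best === null || r.height > best.height)) {
      best = r;
    }
  }
  return best;
}

// Illustrative ladder; the requirement per rendition is an assumed content policy.
const hdcpLadder: HdcpRendition[] = [
  { label: "480p", height: 480, minHdcpVersion: null },
  { label: "1080p", height: 1080, minHdcpVersion: 1.4 },
  { label: "2160p", height: 2160, minHdcpVersion: 2.2 },
];

const choice = highestPlayable(hdcpLadder, 1.4);
console.log(choice === null ? "nothing playable" : choice.label);
```

With HDCP 1.4 available the sketch settles on 1080p up front, rather than requesting 4K keys and failing later.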

... Not sure of implementation status.

Mounir: It's implemented in Chrome.

Mark_Watson: Not sure about how to test.

Jer_Noble: It's a common problem with WPT. You need the test environment to provide the HDCP level to the test.

Mark_Watson: Or a robot!

... The third one is the encryption mode. There are more than 2

encryption modes. Some only support Common Encryption mode (cenc),

others only cbcs.

... This is at the proposal stage, so we need to write a spec for

this. There's an explainer.

Jer_Noble: We added support for that.

Francois: There hasn't been much discussion in GitHub.

Mark_Watson: I think the 2nd and 3rd items are very straightforward.

Mounir: You can see the history of the discussion in the Media Capabilities issues.

Mark_Vickers: Is it something that would go into both Media Capabilities and EME?

Mark_Watson: It was moved out of Media Capabilities.

Mounir: It's a capability of the screen somehow, but we moved it to EME when we wanted it to be in a proper spec.

Mark_Vickers: It's incubated, and being implemented, will the spec be updated?

Mark_Watson: We should talk about it when we have the interoperable implementations?

<kaz> MSE and related APIs: https://wicg.github.io/media-source/ https://wicg.github.io/media-capabilities/ https://wicg.github.io/media-playback-quality/

Mounir: For Media Source Extensions,

the only incubation I know of is the change type, codec

switching.

... Media Capabilities is an API to expose decoding information,

also future facing, exposing stream information,

... such as whether the decoding is smooth and power

efficient.
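
To illustrate how the decoding information might be used, the sketch below picks a starting rendition from a set of already-probed results. The result shape (supported, smooth, powerEfficient) mirrors what the Media Capabilities API reports. The rendition list and the selection policy are hypothetical.

```typescript
// Shape of a decoding-info result: supported, smooth, powerEfficient.
interface DecodingInfoResult {
  supported: boolean;
  smooth: boolean;
  powerEfficient: boolean;
}

// Hypothetical pairing of a rendition with its probed result.
interface ProbedRendition {
  label: string;
  bitrateKbps: number;
  info: DecodingInfoResult;
}

// Assumed policy: prefer the highest-bitrate rendition that is supported and smooth;
// fall back to the highest-bitrate supported one if nothing is reported smooth.
function chooseStartingRendition(probed: ProbedRendition[]): ProbedRendition | null {
  const playable = probed.filter((p) => p.info.supported);
  if (playable.length === 0) return null;
  const smoothOnes = playable.filter((p) => p.info.smooth);
  const pool = smoothOnes.length > 0 ? smoothOnes : playable;
  return pool.reduce((best, p) => (p.bitrateKbps > best.bitrateKbps ? p : best));
}

// Illustrative probe results only.
const probedList: ProbedRendition[] = [
  { label: "720p", bitrateKbps: 3000, info: { supported: true, smooth: true, powerEfficient: true } },
  { label: "1080p", bitrateKbps: 6000, info: { supported: true, smooth: true, powerEfficient: false } },
  { label: "2160p", bitrateKbps: 16000, info: { supported: true, smooth: false, powerEfficient: false } },
];

const starting = chooseStartingRendition(probedList);
console.log(starting === null ? "nothing playable" : starting.label);
```

In this example 2160p is supported but not smooth, so the sketch starts at 1080p. A battery-sensitive player could additionally weigh powerEfficient.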

... It's in Chrome, not in Firefox, Safari has an initial

implementation.

... Safari returns "not smooth" to everything but HEVC, which is

not entirely true.

... Chrome uses historic decoding of the device, with

benchmarking.

Chris: This morning we talked about low latency with MSE.

Jer_Noble: The big discussion was how

a player could play through a gap and continuous audio.

... Also control of the video latency / buffering.

Chris: For Media Capabilities, detecting HDR displays?

Mark_Watson: Support is there for

video but not in CSS. Also codec strings that don't declare the

HDR.

... There are scenarios where you may have a laptop with an HDR

display, connected to a screen that's non-HDR.

Mounir: Media Capabilities is used by

YouTube. Netflix has done some experimentation with it.

... At Google I/O we gave some specific numbers about the

improvements we get.

... Media Playback Quality. Not much to say here. We wanted to

change this to provide better information. The existing spec is a

cut/paste with a few changes.

... Media Capabilities will tell you what you can play before

playing, and Media Playback Quality will give you information to

adapt during playback.

... Media Playback Quality provides information about dropped

frames with MSE, but it may mean different things in different

browsers.
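
As a sketch of adapting during playback, the code below computes a dropped-frame ratio between two quality samples and applies a threshold. The field names match those reported by the video element's playback quality object (totalVideoFrames, droppedVideoFrames). The threshold and the step-down policy are assumptions, and, as noted above, the counts may mean different things in different browsers.

```typescript
// Two of the counters exposed by a video element's playback quality.
interface PlaybackQualitySample {
  totalVideoFrames: number;
  droppedVideoFrames: number;
}

// Fraction of frames dropped between two samples taken at different times.
function droppedFrameRatio(earlier: PlaybackQualitySample, later: PlaybackQualitySample): number {
  const total = later.totalVideoFrames - earlier.totalVideoFrames;
  if (total <= 0) return 0; // no new frames in this window
  return (later.droppedVideoFrames - earlier.droppedVideoFrames) / total;
}

// Assumed policy: step down a rendition when more than `threshold` of frames were dropped.
function shouldStepDown(
  earlier: PlaybackQualitySample,
  later: PlaybackQualitySample,
  threshold: number = 0.1
): boolean {
  return droppedFrameRatio(earlier, later) > threshold;
}

// Illustrative samples: 250 frames presented in the window, 50 of them dropped.
const sampleBefore: PlaybackQualitySample = { totalVideoFrames: 1000, droppedVideoFrames: 10 };
const sampleAfter: PlaybackQualitySample = { totalVideoFrames: 1250, droppedVideoFrames: 60 };
console.log(droppedFrameRatio(sampleBefore, sampleAfter), shouldStepDown(sampleBefore, sampleAfter));
```

Working from the difference between samples, rather than the running totals, keeps an early glitch from dominating the decision for the rest of the session.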

<kaz> User interface APIs: https://wicg.github.io/picture-in-picture/ https://wicg.github.io/spatial-navigation/

Mounir: Picture in Picture. Safari

started doing this as a proprietary API, an always-on-top floating

video window.

... You can ask a video to request picture in picture, it's only

implemented for the video element. A common request is for it to work with any

element.

... On Android the system picture in picture doesn't allow user

interaction.

... We got some feedback from Safari, it's on the WebKit roadmap.

Not in Firefox, positive feedback from Microsoft. Thinking of a V2

based on developer feedback.

... This may allow some customisation.

Chris: Caption rendering with Picture in Picture?

Jer_Noble: We do caption rendering as long as you use the native APIs. We're not providing custom rendering.

Nigel: Is it readable?

Jer_Noble: The picture in picture is about the same size as a mobile phone.

Mark_Watson: Use presentation API to do picture in picture?

Mounir: Yes. Not sure it's the best way to do it.

Francois: It's the same design as the Remote Playback API.

Mark_Watson: We have some interactive kids content, with UI content rendered on the video. Couldn't do this with Picture in Picture if it's just the video.

Mounir: There's a security concern.

On desktop, because it's an always-on-top window, you could use

that to trick the user into thinking there's a virus.

... Any interaction is going to be really hard. You'd have to click

precisely to do interaction.

Jer_Noble: There's potential with Media Session to provide input in a limited way.

Mounir: It's a solution we're interested in for V2.

Barbara: Have you thought about the

immersive experience?

... Then interaction becomes more important.

<kaz> And more: https://wicg.github.io/mediasession/ https://wicg.github.io/shape-detection-api/

Mounir: Media Session has had no

activity for a while. It's on Chrome for Android.

... This API provides metadata to the browser about what's

playing.

... If you go to the BBC and watch a show, the API provides

information about the show, a poster image. The information can be

cached by the website.

... Media Session handles the next and previous buttons. It's

popular, used by a few websites.

... Chrome is looking at implementing it on desktop. Could extend

using Picture in Picture.

... We got a feature request to add duration. The spec is stable,

we may do a V2.

Jer_Noble: We have buttons on the

touchbar for previous/next.

... The benefit of Media Session is that it allows Netflix and

YouTube to integrate the player state with native controls.

Francois_Beaufort: Shape detection is

three detection things: text detection, barcode detection, face

detection APIs.

... Used on Android and other platforms. The implementation is not

perfect.

Francois: It's based on images, so you have to grab frames to use it with a video.

Jeff: There are lots of things in incubation. The IG doesn't meet F2F often. Is there a general view that some of these things want to move to the Rec track?

Mounir: I'd want to move most of

those APIs to a Media WG.

... We have nothing on the Rec track currently. Media Session is

ready.

... Francois and I have discussed that.

Francois: Two questions: is there

general support to create a WG, and then scoping it.

... Also, what do we do with EME?

Chris: This is the first time we've discussed this openly in the IG.

Eric_Siow: Is the proposal to move to a WG, from a CG?

Barbara: Immersive Web has a CG and a WG. Concern if you take it away from the CG?

Mounir: Was the Immersive Web CG created before WICG?

Francois: No

Mark_Watson: I think transitioning

some of these things to a WG process is somewhat overdue.

... There's no group agreement yet that there's a scope with a

timescale.

... That mode of working would help bring in people who aren't

browser vendors.

... The browser implementers prefer to have this process that's

controlled by them.

... I would like to see us move them along. For EME, nobody is

interested in having a repeat of the previous discussions.

... The objective for us is just to have the spec there, however

it's done, we don't want to go through that again.

Mark_Vickers: I agree, but also don't

want to mess things up.

... MSE and EME are very related, and need to be maintained

together. I think they should be published by the same group.

... Earlier today, we talked about our Media Timed Events TF, has a

proposal in the WICG discourse.

... We decided to have somebody propose a WICG GitHub repo. I would

put this into the same bag to be considered.

... Would all these go in a W3C WG? Some may be changes to HTML, so

that's a WHATWG thing.

Mounir: The other specs aren't related to HTML, so should be fine.

Mark_Vickers: I'd be fine if they all get done in a WG.

Mounir: I'd rather not add stuff to HTML unless it's deeply embedded.

<Zakim> nigel, you wanted to ask if the proposal is for a new WG or for adding to the scope of an existing WG

Nigel: Is this an existing WG or a new one?

Francois: That can be discussed. Seems good to have a media focused WG. The other group could be Web Platform WG, but it's doing a lot already.

Jeff: A comment on Mark's suggestion

that EME and MSE are related, so should go to the same group.

... It's not necessary, other things closely related are in

different groups. An example is WebRTC, closely related to IETF

RTC-Web.

... We're making it work, different patent policies. We could

separate MSE from EME if we wanted to.

Mark_Vickers: I just want to avoid leaving EME with no place to publish.

Jeff: This group may be interested in

an AB conversation about a year ago.

... When we were completing EME, what should the strategy be?

... EME needs to have a place. There's a high toll for doing it.

Their advice was not to create a v.Next for merely an incremental

change.

Pierre: Are you inviting EME to start again in W3C?

Jeff: I don't think I said

that.

... I don't know the detail, if it's a minor or a major change. I'm

just trying to give input from stakeholders.

Pierre: So, what happens if this effort starts again?

PLH: Regarding MSE and EME, when the

previous WG was operating, they had alternating calls on MSE and

EME.

... If we need two charters, it's not a problem.

Mark_Vickers: There's a big overlap in authors. I'm not taking a firm stance here, though.

PLH: A charter with EME in it will

generate a big storm. How to answer that?

... Starting EME in W3C, we wanted to have the conversation. A

charter would need to get past the AC.

... We don't have a charter today for EME.

Pierre: The alternative is not doing EME at W3C.

Jeff: This is the first time we're talking about it, so we just have considerations, no points of view yet.

Mark_Vickers: With those changes,

it's a 1.1 release not a 2.0.

... There was a lot of productive conversation about this, and a

lot of unproductive conversation.

... We didn't get to have the provisions for security researchers

discussion.

... The majority passed it through the gates. Having published v1,

publishing v1.1 would keep it alive, we should follow through with

that and follow through on the conversation.

... It is the thinking of this group, consistently, that we

should do this.

Jeff: It's true, we never had the

conversation. My view is to do that before we send the charter out,

before people are being asked to take a position.

... How do we have the conversation with them, see if they don't

object this time?

... One of the reasons we should consider separating them is to

allow more time to socialise the middle ground for EME. The other

specs may not need so much time.

... When we chartered EME in 2013, what the Director actually

chartered was finding a way to support protected content through

web standards.

... But he very specifically said that a particular solution was

not agreed to.

... We didn't know at that time that EME was going to be the

solution.

Mark_Watson: I do seem to remember

some scoping, whether to design an entire system including the key

management. That was not considered to be in scope.

... The changes on the table are small, and don't constitute a V2.

What's the approach, given it's an incremental addition of

functionality?

Jeff: We have time to discuss. It depends on the details. I suggest looking at that offline.

Mark_Vickers: If we want to go with the others first, and hold off with this, I'd be flexible.

<jeff> [The team's thinking about "content protection" being in scope, rather than EME being a solution in 2013 --> https://www.w3.org/blog/2013/05/perspectives-on-encrypted-medi/]

Mark_Watson: Given we're talking about incremental additions, we need to have it documented somewhere so people are confident implementing.

Nigel: You could scope the WG to only the delta.

Mark_Vickers: I see how things are so improved with respect to the previous situation with Flash. Implementations are more efficient, there's vastly improved video for the web.

Francois: I'm hearing some positive feedback about transitioning some of these specs to Rec track. I can work with Mounir on a draft charter.

Jeff: Somebody should take the action to see what kind of proposal would be OK for middling opponents to EME.

Mounir: What are we trying to avoid here? Is it W3C members, the public, the press? All of those?

Jeff: All of those, plus. W3C

goes through a formal process to approve. Lots of people will

formally object.

... Will need TimBL to get personally involved again, and it's not

the best time to get his attention.

... We'll have objections with two separate paths. We have to

follow our process.

Dan: I wonder if it's worth

informally asking the community.

... If it's framed clearly that it's water under the bridge, this

is already out there, so there are more problems if the spec is not

maintained.

... It can be worked out, but now there are a lot of assumptions

about the pushback.

Pierre: Assuming we do that, and there's a formal objection, even from the same person citing the same references. Would those objections be disposed of immediately?

Jeff: I don't know how the director would rule. Likely it wouldn't be an identical objection.

Mark_Watson: With the scope of the changes we have on the table, if you're certain that we would get formal objections, use of Jeff's, PLH's, Tim's time wouldn't be proportionate.

Jeff: We have a new fast path for simple maintenance.

Francois: But these are new features.

Mark_Watson: I don't see any need for

a big new version, it's working fine.

... Sounds like we should understand our alternative options.

Mark_Vickers: Would you object to put

the other things into a Media group, and a separate group for

EME?

... I'd be happy to help think about that.

Jeff: If you flip the conversation - EME is out there, not going away, but here are some new features. But add things we'll do for security researchers.

Pierre: Those discussions weren't simple. Protecting security researchers had different meanings.

Jeff: And they were done under time pressure.

Francois: Two actions. One to draft the scope for the non-EME media specs. The other is to get back to the objectors.

Mark_Vickers: I'd want to know what they want to see, what they think before we start chartering.

Jeff: Also talk to browser vendors about the security researchers, will they want to pick it up?

https://www.w3.org/2011/webtv/wiki/images/3/30/AD_CG_presentation_TPAC_2018.pdf

Nigel: The goal is to create an audio

description of what's in video.

... Also to provide an accessible presentation of AD by providing

the text of AD to players,

... so they can make them available to screen readers, for example

to use with a Braille display.

... The basis for working on this is that TTML has some new audio

features, based on the Web Audio and Web Speech APIs.

... We think a short profile of TTML is enough.

... There are 18 participants in the CG and we have an editor. The

BBC has an implementation.

... Participation is very welcome.

... If it goes well we could add it to the TTWG charter, make it a

Rec track document.

... We want to make switching between programme audio and AD as

seamless as possible.

... I thought it's relevant and interesting for the IG.

Mark_Vickers: Lots of interesting

topics today.

... Native support for IMSC.

Pierre: Intersection with fingerprinting surface.

Andreas: It's generally about improving subtitle and caption support in the browser.

Pierre: My suggestion is to start with IMSC.

Mark_Vickers: We can convene a group

to look at that.

... We could have an IG call on EME, open to objectors.

Chris: Also the frame accurate

seeking topic.

... We'll collate the suggestions for new task forces and call

topics after the meeting.

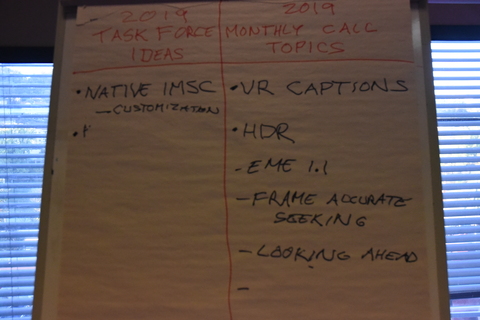

Task Force ideas and Monthly call topics

[adjourned]