W3C Media Fragments Working Group

Raphaël Troncy (EURECOM)

<raphael.troncy@eurecom.fr>,

Erik Mannens (IBBT MediaLab, University of Ghent)

<erik.mannens@ugent.be>

Duration: September 2008 - January 2011

Pointers:

15 (active) Participants:

Provide URI-based mechanisms for uniquely identifying fragments of media objects on the Web, such as video, audio, and images.

Photo credit: Robert Freund

Silvia is a big fan of Tim's research keynotes. She watches numerous videos starring Tim to follow his research activities, and she often wants to share the highlight announcements with her collaborators.

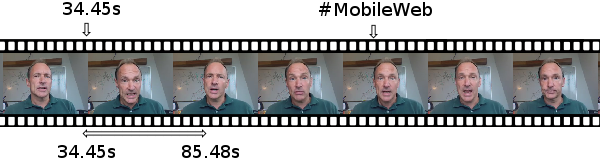

Silvia is interested in TweeTube, which will allow her to share video directly on Twitter, but she would like to point to and reference only short temporal sequences of these longer videos. She would like a simple interface, similar to VideoSurf, for editing the start and end time points that delimit a particular sequence, and to get back the media fragment URI to share with the rest of the world.

She would also like to embed this portion of video on her blog together with comments and (semantic) annotations.

Lena would like to browse the descriptive audio tracks of a video as she does with Daisy audio books, by following the logical structure of the media.

Audio descriptions and captions generally come in blocks that are either timed or separated by silences. Chapter by chapter and then section by section, she eventually jumps to a specific paragraph and down to the sentence level, using the "tab" control as she normally would in audio books.

The descriptive audio track is an extra spoken track that describes the scenes happening in a video. When the descriptive audio track is not present, Lena can similarly browse through captions and descriptive text tracks, which are rendered either through her braille reading device or through her text-to-speech engine.

http://www.w3.org/2008/WebVideo/Fragments/WD-media-fragments-reqs/

http://www.example.com/video.ogv#t=10,20

http://www.example.com/video.ogv#xywh=160,120,320,240

http://www.example.com/video.ogv#track=audio

http://www.example.com/video.ogv#id=chapter-1
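To make the four example URIs above concrete, here is a minimal sketch of how a client might split such a fragment into its dimensions. It is an illustration under stated assumptions, not the Working Group's parser: the function name `parse_media_fragment` is hypothetical, time values are assumed to be plain seconds, and `xywh` values are assumed to be integer pixels.

```python
from urllib.parse import urlsplit, parse_qsl


def parse_media_fragment(uri):
    """Split the fragment of a media URI into its dimensions (hypothetical helper).

    Assumes plain-second time values and integer pixel regions only.
    """
    fragment = urlsplit(uri).fragment
    dims = {}
    for name, value in parse_qsl(fragment, keep_blank_values=True):
        if name == "t":
            # Temporal dimension: "start,end"; either side may be omitted.
            start, _, end = value.partition(",")
            dims["t"] = (float(start) if start else 0.0,
                         float(end) if end else None)
        elif name == "xywh":
            # Spatial dimension: x, y, width, height.
            x, y, w, h = (int(v) for v in value.split(","))
            dims["xywh"] = (x, y, w, h)
        elif name in ("track", "id"):
            # Track and named dimensions are kept as plain strings.
            dims[name] = value
    return dims


if __name__ == "__main__":
    for example in (
        "http://www.example.com/video.ogv#t=10,20",
        "http://www.example.com/video.ogv#xywh=160,120,320,240",
        "http://www.example.com/video.ogv#track=audio",
        "http://www.example.com/video.ogv#id=chapter-1",
    ):
        print(example, parse_media_fragment(example))
```

Unknown dimension names are silently ignored in this sketch, so a URI without a recognised fragment simply yields an empty dictionary.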

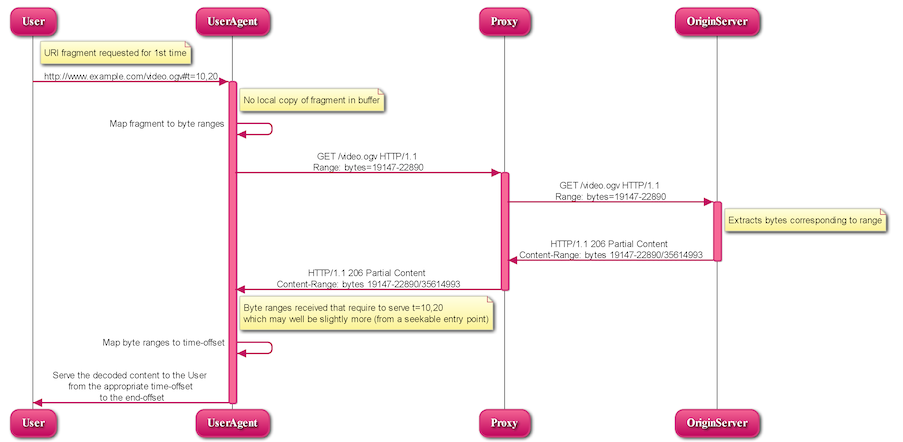

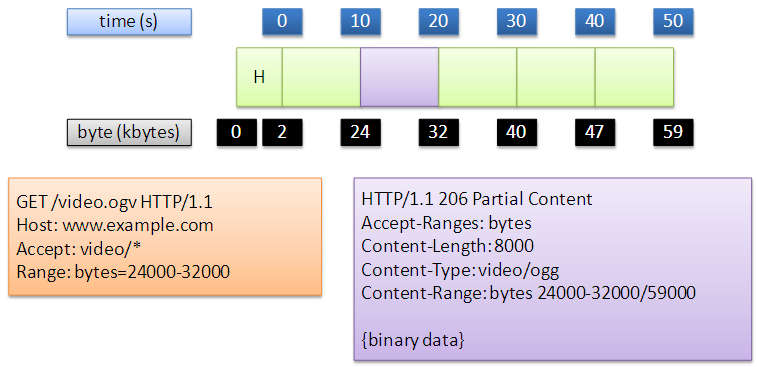

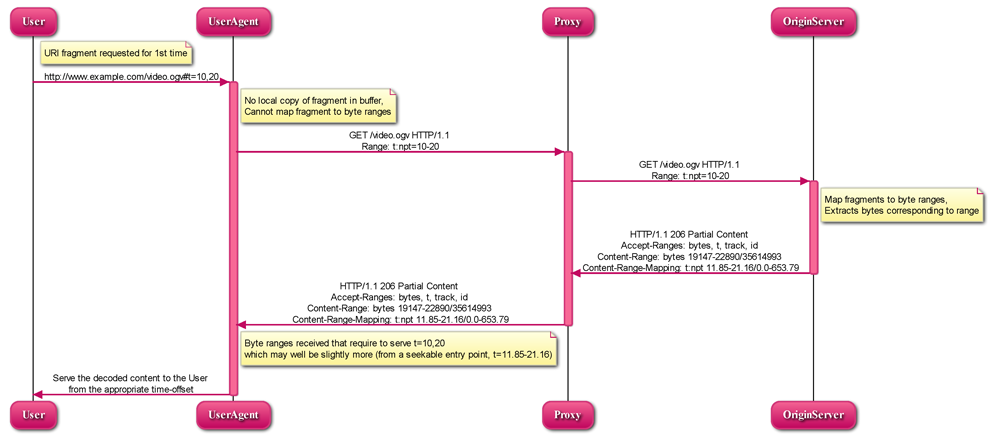

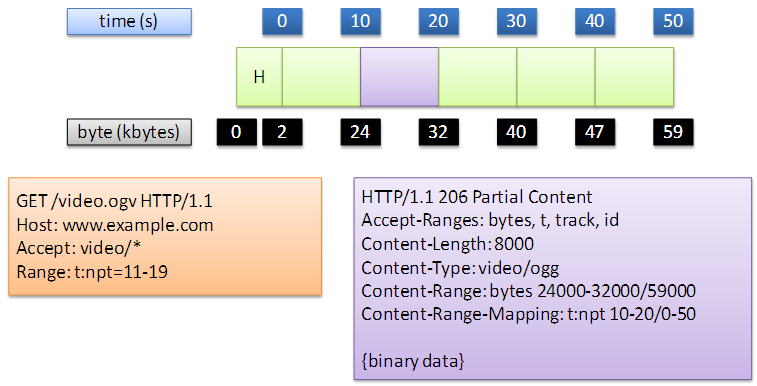

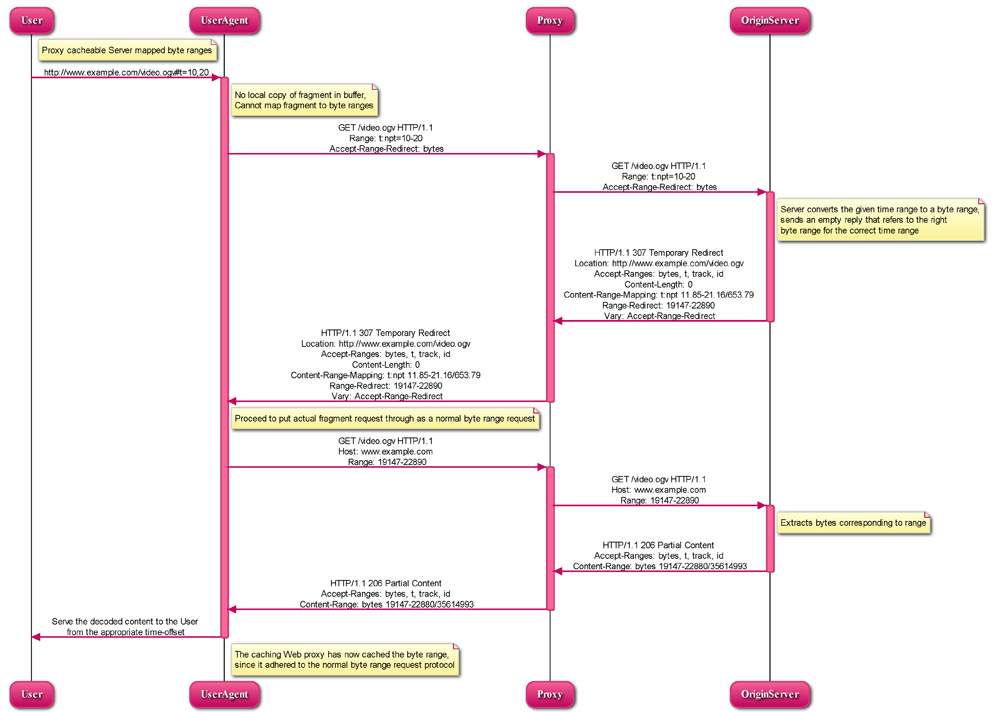

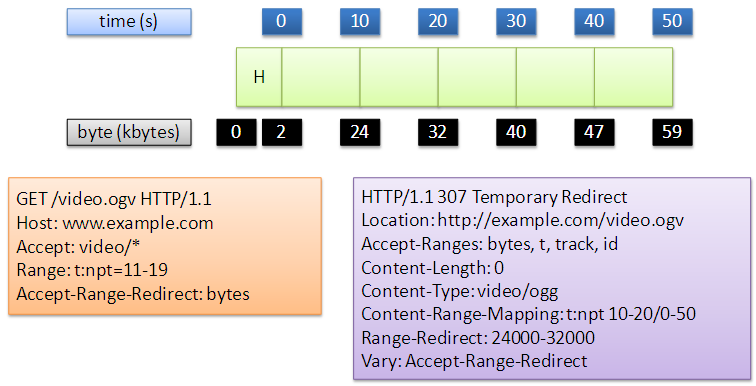

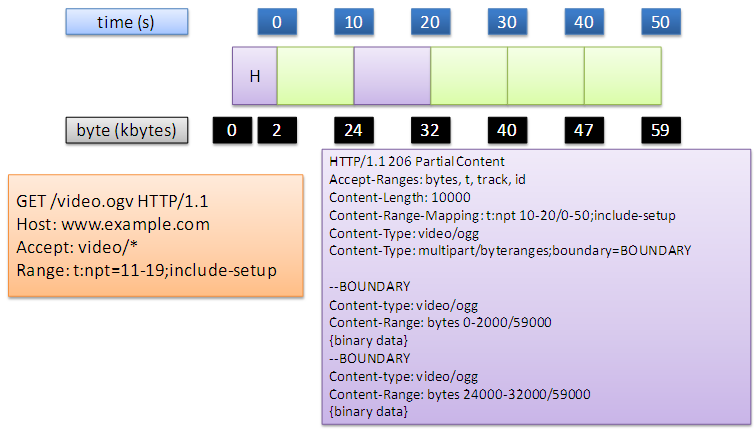

The general principle is that a smart user agent (UA) will strip the fragment from the URI and encode it into custom HTTP headers ...

Fragment-aware (media) servers will handle the request, slice the media content, and serve just the fragment, while older servers will ignore the headers and serve the whole resource.
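The exchange described above can be sketched roughly as follows. This is a toy model under loud assumptions: the header name `X-Media-Fragment` is hypothetical (the slides do not name the custom headers), the media is modelled as a list with one item per second, and only the temporal dimension `t=start,end` is handled.

```python
from urllib.parse import urldefrag

# Hypothetical header name, chosen for illustration only.
FRAGMENT_HEADER = "X-Media-Fragment"


def build_request(uri):
    """UA side: strip the fragment and move it into a request header."""
    url, fragment = urldefrag(uri)
    headers = {}
    if fragment:
        headers[FRAGMENT_HEADER] = fragment
    return url, headers


def serve(frames, headers, fragment_aware=True):
    """Server side: slice the media if the server understands the header.

    `frames` is a toy stand-in for media content, one item per second.
    A server that is not fragment-aware ignores the header and returns
    the whole resource.
    """
    value = headers.get(FRAGMENT_HEADER, "")
    if not fragment_aware or not value.startswith("t="):
        return frames
    start, _, end = value[2:].partition(",")
    begin = int(float(start)) if start else 0
    stop = int(float(end)) if end else len(frames)
    return frames[begin:stop]


if __name__ == "__main__":
    url, headers = build_request("http://www.example.com/video.ogv#t=10,20")
    media = list(range(60))  # a pretend 60-second video
    print(url, headers)
    print(len(serve(media, headers)))                        # fragment-aware server
    print(len(serve(media, headers, fragment_aware=False)))  # older server
```

Because an older server simply ignores a header it does not recognise, the same request degrades gracefully: the UA receives the full resource and can still seek to the fragment locally.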