Multimedia systems typically contain digital documents of mixed media types,

which are indexed on the basis of strongly divergent metadata standards. This

severely hamplers the inter-operation of such systems. Therefore, machine

understanding of metadata comming from different applications is a basic

requirement for the inter-operation of distributed Multimedia systems. In this

document, we present how interoperability among metadata,

vocabularies/ontologies and services is enhanced using Semantic Web

technologies. In addition, it provides guidelines for semantic

interoperability, illustrated by use cases. Finally, it presents an overview of

the most commonly used metadata standards and tools, and provides the general

research direction for semantic interoperability using Semantic Web

technologies.

This section describes the status of this document at the time of its

publication. Other documents may supersede this document. A list of

Final Incubator Group Reports is available. See also the

W3C technical reports index at http://www.w3.org/TR/.

This document was developed by the W3C

Multimedia Semantics Incubator Group, part of the

W3C Incubator Activity.

Publication of this document by W3C as part of the

W3C Incubator Activity indicates no endorsement of its content by W3C, nor

that W3C has, is, or will be allocating any resources to the issues addressed

by it. Participation in Incubator Groups and publication of Incubator Group

Reports at the W3C site are benefits of

W3C Membership.

Incubator Groups have as a

goal to produce work that can be implemented on a Royalty Free basis, as

defined in the W3C Patent Policy. Participants in this Incubator Group have

made no statements about whether they will offer licenses according to the

licensing requirements of the W3C Patent Policy for portions of this

Incubator Group Report that are subsequently incorporated in a W3C

Recommendation.

This document targets at people with an interest in semantic interoperability,

ranging from non-professional end-users that have content are manually

annotating their personal digital photos to professionals working with digital

pictures in image and video banks, audiovisual archives, museums, libraries,

media production and broadcast industry, etc.

Discussion of this document is invited on the public mailing list

public-xg-mmsem@w3.org (public

archives). Public comments should include "[MMSEM-Interoperability]" as

subject prefix .

This document uses a bottom-up approach to provide a simple extensible framework to improve interoperability of

applications related to some key use cases discussed in the XG.

In this section, we present several use cases showing interoperability problems

with multimedia metadata formats. Each use case starts with an example

illustrating the main problem, and proposed after a possible solution using

Semantic Web technologies.

Introduction

Currently, we are facing a market in which, for example, more than 20 billion digital photos

are taken per year in Europe [GFK].

The number of tools, either for desktop machines or web-based, that perform

automatic as well as manual annotation of the content is

increasing. For example, a large number of personal photo management tools extract

information from the so called EXIF [EXIF] header and add

this information to the photo description. These tools typically allow to tag and describe

single photos. There are also many web-based tools that allow to upload photos to

share them, organize them and annotate them. Web sites such as [Flickr]

allow tagging on the large scale. Sites like [Riya]

provide specific services such as face detection and face

recognition of personal photo collections. Photo community sites such as [Foto

Community] allow an organization of the photos in categories and

allow rating and commenting on them. Even though our photos today find more and

more tools to manage and share them, these tools come with different

capabilities. What remains difficult is finding, sharing, reusing photo

collections across the borders of tools and sites. Not only the way in which

photos are automatically and manually annotated is different but also the

way in which this metadata is described and represented finds many different

standards. At the beginning of the management of personal photo collections is

the semantic understanding of the photos.

Motivating Example

From the perspective of an end user let us consider the following scenario to

describe what is missing and needed for next generation digital photo services.

Ellen Scott and her family were on a nice two-week vacation in Tuscany.

They enjoyed the sun at the beaches of the Mediterranean, appreciating the

great culture in Florence, Siena and Pisa, and traveling on the traces of the

Etruscans through the small villages of the Maremma. During their marvelous

trip, the family was taking pictures of the sightseeing spots, the landscapes

and of course from the family members. The digital camera they use is already

equipped with a GPS receiver, so every photo is stamped not only with the time

when, but also with the geo-location where it has been taken.

Photo annotation and selection

Back home, the family uploads about 1000 pictures from the camera to the

computer and wants to create an album for grand dad. On this computer, the

family uses a photo management tool which both extracts some basic

features such as the EXIF header, but also allows for entering tags and personal

descriptions. Still fulfilled with the memories of the nice trip the mother of

the family labels most of the photos. With a second tool, the tour of the GPS

receiver and the photos are merged using the time stamp. As a results, each of

the photos is geo-referenced with the GPS position stored in the EXIF header.

However, showing all the photos would take an entire weekend. So Ellen starts

to create an excerpt of their trip with the highlights. Her

photo album software takes in the 1000 pictures and makes suggestions for the

selection and the arrangement of the pictures in a photo album. For example,

the album software shows her a map of Tuscany and visualises, where she has

taken which photos and groups them together making suggestions which photos

would best represent this part of the vacation. For places for which the

software detects highlights, the system offers to add information about the

place to the album, stating that on this Piazza in front of the Palazzo Vecchio

there is the copy of Michelangelo's famous David statue. Depending on the

selected style, the software creates a layout and the distribution of all

images over the pages of the album taking into account color, spatial and

temporal clusters and template preference. So, in about 20 minutes Ellen has

finished the album and orders a paper version as well as an online-version. The

paper album is delivered to her by mail three days later. It looks great, and

the explaining texts that her software has almost automatically added to the

pictures are informative and help her remembering the great vacation. They show

the album to grandpa and he can take his time to study their vacation and the

wonderful Tuscany.

Exchanging and sharing photos

Selecting the most impressive photos, the son of the family uploads a nice set

of photos to Flickr, to give his friends an impression of the great vacation.

Unfortunately, all the descriptions and annotations from the personal

photo management system are lost after the Web upload. Therefore, he adds a few

own tags to the Flickr photos to describe the places, events, persons of the

trip. Even the GPS track is lost and he places the photos again on the Flickr

map application to geo-reference them. One friend finds a cool picture from the

Spanish Stairs in Rome by night and would like to get the photo and its

location from Flickr. This is difficult again as a pure download of the photo

does not retain the geo-location. When aunt Mary visits the Web album and

starts looking on the photos she tries to download a few onto her laptop to

integrate them into her own photo management software. Now aunt Mary would like

to incorporate some of the pictures of her nieces and nephews into her photo

management system. And again, the system imports the photos but the precious

metadata that mother and sun of family Miller have already annotated twice are

gone.

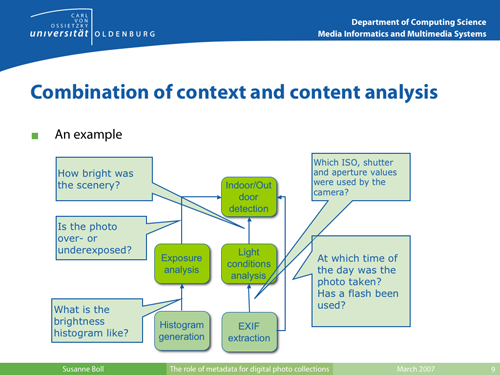

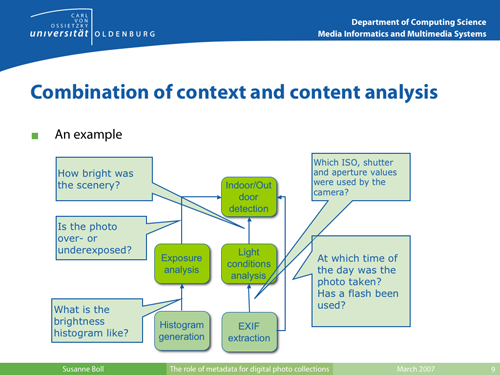

The fundamental problem: semantic content understanding

Indoor/Outdoor detection with signal analysis and context analysis.

Image courtesy of

Susanne Boll, used with permission.

What is needed is a better and more effective automatic annotation of digital

photos that better reflects one's personal memory of the events captured by the

photos an allows different applications to create value-added services on top

of them such as the creation of a personal photo album book. For understanding

the personal photos and overcoming the semantic gap, digital cameras leave us

with files like dsc5881.jpg, a very poor reflection of the actual event. Is is

a 2D visual snapshot of a multi-sensory personal experience. The quality of

photos is often very limited (snapshots, over exposed, blurred, ...). On the

other hand digital photos come with a large potential for semantic

understanding the photos. Photographs are always taken in context. In

contrast to analogical photography, digital photos provide us with explicit

contextual information (time, flash, aperture, ...), a "unique id" such as the

timestamp allows to later merge contextual information with the pure image

content.

However, what we want to remember along with the photo is where it was, who was

there with us, what can be seen on the photo, what the weather was, if we liked

the event and so on. In recent years, it became clear that signal analysis

alone will not be the solution. In combination with the context of the photo,

such as the GPS position or the time stamp, some hard signal processing problems can be

solved better. So context analysis has gained much attention and became

important for photos and very helpful for photo understanding.

In the opposite figure, a simple example is given of how to

combine signal analysis and context analysis to achieve a better indoor/outdoor

detection of photos. And, not only with the advent of the Web 2.0 the actual

user came into focus. The manual effort of single user annotations but also

collaborative effects are considered to be important for semantic photo

understanding.

The role of metadata for this usage of photo collections is manyfold:

-

Save the experience: The central goal is to overcome the semantic gap and

represent as much of the humans impression of the moment when the photo was

taken.

-

Browse and find previously taken photos: Allow searching for events and

persons, places, moments in time, etc.

-

Share photos with the metadata with others: Give your annotated photos from

Flickr or from Foto Community to your friends' applications.

-

Use comprehensive metadata for value-added services of the photos: Create an

automatic photo collage or send a flash presentation to your aunt’s TV, notify

all friends that are interested in photos from certain locations, events, or

persons, etc.

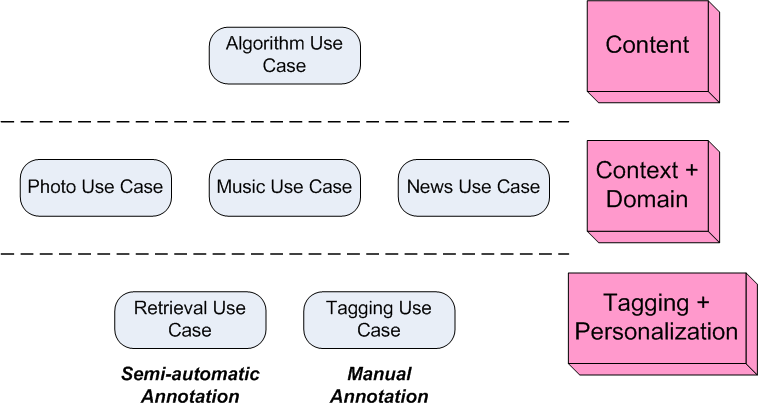

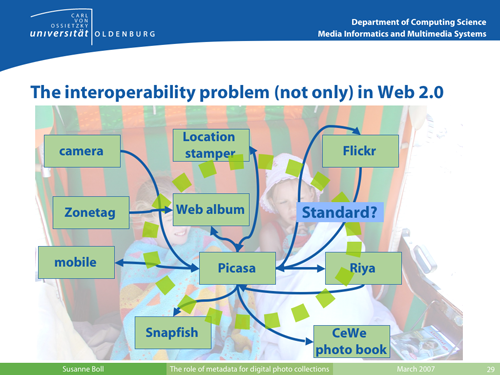

Photos usage.

Image courtesy of

Susanne Boll, used with permission.

The opposite figure illustrates the use of photos today and what we do with

our photos at home but also in the Web.

So the social life of personal photos can be summarized as:

-

Capturing: one or more persons capture and event, with one or different cameras

with different capabilities and characteristics

-

Storing: one or more persons store the photos with different tools on different

systems

-

Processing: post-editing with different tools that change the quality and maybe

the metadata

-

Uploading: some persons make their photos available on Web (2.0) sites

(Flickr); different sites offer different kinds of value-added services to the

photos (Riya)

-

Sharing: photos are given away or are given access to via email, Web sites,

print, ...

-

Receiving: photos from others are received via MMS, email, download, ...

-

Combining: Photos from own and different sources are selected and reused for

services like T-Shirt, Mugs, mouse pads, photo albums, collages, ...

For this, metadata plays a central role at all times and places of the social

life of our photos.

The multimedia semantics interoperability problem

The problem we have here is that metadata is created and

enhanced by different tools and systems and follows different standards and

representations. Even though there are many tools and standards that aim

to capture and maintain this metadata, they are not necessarily interoperable.

So on a technical level, we have the problem of a common representation of

metadata that is helpful and relevant for photo management, sharing and reuse.

Metadata and end user typically gets in touch with descriptive metadata that

stem from the context of the photo. At the same time, in more than a decade

many results in multimedia analysis have been achieved to extract many

different valuable features from multimedia content. For photos for example,

this includes color histograms, edge detection, brightness, texture and so on.

With MPEG-7, a very large standard has been developed that allows to describe

these features in a standardized way. However, both the size of the standard but also the many

optional attributes in the standard have lead to a situation in which MPEG-7 is

used only in very specific applications and has not been achieved as a world

wide accepted standard for adding (some) metadata to a media item. Especially

in the area of personal media, in the same fashion as in the tagging scenario,

a small but comprehensive shareable and exchangeable description scheme for

personal media is missing.

What is needed is a machine readable description that comes with each photo

that allows a site to offer valuable search and selection functionality on the

uploaded photos. Even though approaches for Photo Annotation have been proposed

they still do not address the wide range of metadata, annotations that could

and should be stored with an image in a standardized fashion.

-

EXIF [EXIF] is a standard that comprises many photographic

and capture relevant metadata. Even though the end user might use only a few of

the key/value pairs, they are relevant at least for photo editing and archiving

tools which read this kind of metadata and visualize it. So EXIF is a necessary

set of metadata which is needed for photos.

-

Tags from Flickr and other photo web sites and tools are metadata of low

structure but high relevance for the user and the use of the photos. Manually

added they reflect the users knowledge and understanding of the content which

can not be replaced by any automatic semantic extraction. Therefore, a

representation of these is needed. Depending on the source of tags is might be

of interest to relate the tags to their origin such as "taken from an

existing vocabulary", "from a suggested set of other tags" or

just "free tags". XMP seems to be a very promising standard as it

allows to define RDF-based metadata for photos. However, in the description of

the standard, it clearly states that it leaves the application dependent schema

/vocabulary definition to the application and only makes suggestions for a set

of "generic" sets such as EXIF, Dublin Core. So the standard could be

a good "host" for a defined photo metadata description scheme in RDF

but does not define it.

-

PhotoRDF [PhotoRDF] "describes a project for

describing & retrieving (digitized) photos with (RDF) metadata. It

describes the RDF schemas, a data-entry program for quickly entering metadata

for large numbers of photos, a way to serve the photos and the metadata over

HTTP, and some suggestions for search methods to retrieve photos based on their

descriptions." So wonderful, but the standard is separated into three

different schemas: Dublin Core [Dublin Core], a Technical Schema which

comprises more or less entries about author, camera and short description, and

a Content Schema which provides a set of 10 keywords. With PhotoRDF, the type

and number of attributes is limited, does not even comprise the full EXIF

schema and is also limited with regard to the content description of a photo.

-

The Extensible Metadata Platform or XMP [XMP] and the

IPTC-IIM-Standard [IIM] have been introduced to define how

metadata (not only) of a photo can be stored with the media element itself.

However, these standards come with their own set of attributes to describe the

photo or allow to define individual metadata templates. This is the killer for

any sharing and semantic Web search! What is missing is an actual standardized

vocabulary what information about a photo is important and relevant to a large

set of next generation digital photo services has not been reached.

-

The Image Annotation on the Semantic Web [MMSEM Image]

provides an overview of the existing standard such as those mentioned

above. At the same time it shows how diverse the world of annotation is. The

use case for photo annotation choses RDF/XML syntax of RDF in order to gain

interoperability. It refers to a large set of different standards and

approaches that can be used to image annotation but there is no unified view on

image annotation and metadata relevant for photos. The attempt here is to

integrated existing standards. If those however are too many, too

comprehensive, and might even have overlapping attributes is might not be

adopted as the common photo annotation scheme on the Web. For example, for the

low level features for example, there is only a link to MPEG-7.

-

The DIG35 Initiative Group of the International Imaging Industry Association

aims "provide a standardized mechanism which allows end-users to see

digital image use as being equally as easy, as convenient and as flexible as

the traditional photographic methods while enabling additional benefits that

are possible only with a digital format." [DIG35].

The DIG35 standards aims to define a standard set of metadata for digital

images that can be widely implemented across multiple image file formats. From

all the photo standards this is the broadest one with respect to typical photo

metadata and is already defined as a XML Schema.

-

MPEG-7 is far to big even though the standard comprises metadata elements that

are relevant also for a Web wide usage of media content. The advantage of

MPEG-7 is that one can define an own description scheme and with it collect a

subset of relevant feature related metadata with a photo. But, there is no

chance to actually include an entire XML-based MPEG-7 description of a photo

into the raw content. For the description of the content the use case refers to

three domain-specific ontologies: personal history event, location and

landscape.

Towards a solution

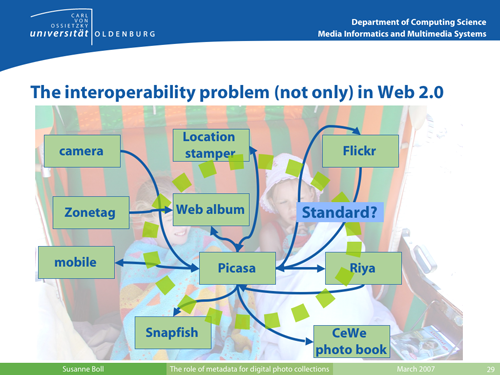

Toward a solution for photo metadata interoperability.

Image courtesy of

Susanne Boll, used with permission.

The result is clear, that there is not one standardized representation and

vocabulary for adding metadata to photos. Even though the different semantic

Web applications and developments should be embraced, a photo annotation

standard as a patchwork of too many different specifications is not helpful.

The opposite Figure illustrates some of the different actitivities, as

described aboce in the scenario, that people do with their photos and what

different standalone or web-based tools they use for this.

What is missing, however, for content management, search, retrieval, sharing

and innovative semantic (Web 2.0) applications is a limited and simple but at

the same time comprehensive vocabulary in a machine-readable, exchangeable, but

not over complicated representation is needed. However, the single standards

described only solve part of the problem. For example, a standardization of

tags is very helpful for a semantic search on photos in the Web. However, today

the low(er) level features are also lost. Even though the semantic search is

fine on a search level, for a later use and exploitation of a set of photos,

previously extracted and annotated lower-level features might be interesting as

well. Maybe a Web site would like to offer a grouping of photos along the color

distribution. Then either the site needs to do the extraction of a color

histogram or the photo itself brings this information already in in its

standardized header information. A face detection software might have found the

bounding boxes on the photo where a face has been detected and also provide a

face count. Then the Web site might allow to search for photos with two or more

persons on it. And so one. Even though low level features do not seem relevant

at first sight, for a detailed search, visualization and also later processing

the previously extracted metadata should be stored and available with the

photo.

Introduction

In recent years the typical music consumption behaviour has changed

dramatically. Personal music collections have grown favoured by technological

improvements in networks, storage, portability of devices and Internet

services. The amount and availability of songs has de-emphasized its value: it

is usually the case that users own many digital music files that they have only

listened to once or even never. It seems reasonable to think that by providing

listeners with efficient ways to create a personalized order on their

collections, and by providing ways to explore hidden "treasures" inside them,

the value of their collection will drastically increase.

Also, notwithstanding the digital revolution had many advantages, we can

point out some negative effects. Users own huge music collections that need

proper storage and labelling. Searching inside digital collections arise new

methods for accessing and retrieving data. But, sometimes there is no metadata

-or only the file name- that informs about the content of the audio, and that

is not enough for an effective utilization and navigation of the music

collection.

Thus, users can get lost searching into the digital pile of his music

collection. Yet, nowadays, the web is increasingly becoming the primary source

of music titles in digital form. With millions of tracks available from

thousands of websites, finding the right songs, and being informed of newly

music releases is becoming a problematic task. Thus, web page filtering has

become necessary for most web users.

Beside, on the digital music distribution front, there is a need to find ways

of improving music retrieval effectiveness. Artist, title, and genre keywords

might not be the only criteria to help music consumers finding music they like.

This is currently mainly achieved using cultural or editorial metadata ("artist

A is somehow related with artist B") or exploiting existing purchasing

behaviour data ("since you bought this artist, you might also want to buy this

one"). A largely unexplored (and potentially interesting) complement is using

semantic descriptors automatically extracted from the music audio files. These

descriptors can be applied, for example, to recommend new music, or generate

personalized playlists.

A complete description of a popular song

In [Pachet], Pachet classifies the music

knowledge management. This classification allows to create meaningful

descriptions of music, and to exploit these descriptions to build music related

systems. The three categories that Pachet defines are: editorial (EM), cultural

(CM) and acoustic metadata (AM).

Editorial metadata includes simple creation and production information (e.g.

the song C'mon Billy, written by P.J. Harvey in 1995, was produced by John

Parish and Flood, and the song appears as the track number 4, on the album "To

bring you my love"). EM includes, in addition, artist biography, album reviews,

genre information, relationships among artists, etc. As it can be seen,

editorial information is not necessarily objective. It is usual the case that

different experts cannot agree in assigning a concrete genre to a song or to an

artist. Even more diffcult is a common consensus of a taxonomy of musical

genres.

Cultural metadata is defined as the information that is implicitly present in

huge amounts of data. This data is gathered from weblogs, forums, music radio

programs, or even from web search engines' results. This information has a

clear subjective component as it is based on personal opinions.

The last category of music information is acoustic metadata. In this context,

acoustic metadata describes the content analysis of an audio file. It is

intended to be objective information. Most of the current music content

processing systems operating on complex audio signals are mainly based on

computing low-level signal features. These features are good at characterising

the acoustic properties of the signal, returning a description that can be

associated to texture, or at best, to the rhythmical attributes of the signal.

Alternatively, a more general approach proposes that music content can be

successfully characterized according to several "musical facets" (i.e. rhythm,

harmony, melody, timbre, structure) by incorporating higher-level semantic

descriptors to a given feature set. Semantic descriptors are predicates that

can be computed directly from the audio signal, by means of the combination of

signal processing, machine learning techniques, and musical knowledge.

Semantic Web languages allow to describe all this metadata, as well as

integrating it from different music repositories.

The following example shows an RDF description of an artist, and a song by

the artist:

<rdf:Description rdf:about="http://www.garageband.com/artist/randycoleman">

<rdf:type rdf:resource="&music;Artist"/>

<music:name>Randy Coleman</music:name>

<music:decade>1990</music:decade>

<music:decade>2000</music:decade>

<music:genre>Pop</music:genre>

<music:city>Los Angeles</music:city>

<music:nationality>US</music:nationality>

<geo:Point>

<geo:lat>34.052</geo:lat>

<geo:long>-118.243</geo:long>

</geo:Point>

<music:influencedBy rdf:resource="http://www.coldplay.com"/>

<music:influencedBy rdf:resource="http://www.jeffbuckley.com"/>

<music:influencedBy rdf:resource="http://www.radiohead.com"/>

</rdf:Description>

<rdf:Description rdf:about="http://www.garageband.com/song?|pe1|S8LTM0LdsaSkaFeyYG0">

<rdf:type rdf:resource="&music;Track"/>

<music:title>Last Salutation</music:title>

<music:playedBy rdf:resource="http://www.garageband.com/artist/randycoleman"/>

<music:duration>T00:04:27</music:duration>

<music:key>D</music:key>

<music:keyMode>Major</music:keyMode>

<music:tonalness>0.84</music:tonalness>

<music:tempo>72</music:tempo>

</rdf:Description

Lyrics as metadata

For a complete description of a song, lyrics must be considered as well.

While lyrics could in a sense be regarded as "acoustic metadata", they are per

se actual information entities which have themselves annotation needs. Lyrics

share many similarities with metadata, e.g. they usually refer directly to well

specified song, but acceptions exists as different artist might sing the same

lyrics sometimes even with different musical bases and styles. Most notably,

lyrics have often different authors than the music and voice that interprets

them and might be composed at a different time. Lyrics are not a simple text;

they often have a structure which is similar to that of the song (e.g. a

chorus) so they justify the use use of a markup language with a well specified

semantics. Unlike the previous types of metadata, however, they are not well

suited to be expressed using the W3C Semantic Web initiative languages, e.g. in

RDF. While RDF has been suggested instead of XML for for representig texts in

situation where advanced and multilayered markup is wanted [Ref RDFTEI], music

lyrics markup needs usually limit themselves to indicating particular sections

of the songs (e.g. intro, outro, chorus) and possibly the performing character

(e.g. in duets). While there is no widespread standard for machine encoded

lyrics, some have been proposed [LML][4ML] which in general fit the need for

formatting and differentiating main parts. An encoding in RDF of lyrics would

be of limited use but still possible with RDF based queries possible just

thanks to text search operators in the query language (therfore likely to be

limited to "lyrics that contain word X"). More complex queries could be

possible if more characters are performing in the lirics and each denoted by an

RDF entity which has other metadata attached to it (e.g. the metadata described

in the examples above).

It is to be reported however that an RDF encoding would have the disadvantage

of complexity. In general it would require a supporting software (for example

http://rdftef.sourceforge.net/) to be encoded as XML/RDF can be

difficultly written by hand. Also, contrary to an XML based encoding, it could

not be easily visualized in a human readable way by, e.g., a simple XSLT

transformation.

Both in case of RDF and XML encoding, interesting processing and queries

(e.g. conceptual similarities between texts, moods etc) would necessitate

advanced textual analysis algorithms well outside the scope or XML or RDF

languages. Interestingly however, it might be possible to use RDF description

to encode the results of such advanced processings. Keyword extraction

algorithms (usually a combination of statistical analysis, stemming and

linguistical processing e.g. using wordnet) can be successfully employed on

lyrics. The resulting reppresentative "terms" can be encoded as metadata to the

lyrics or to the related song itself.

Lower Level Acoustic metadata

"Acoustic metadata" is a broad term which can encompass both features which

have an immediate use in higher level use cases (e.g. those presented in the

above examples such as tempo, key, keyMode etc ) and those that can only be

interpreted by data analisys (e.g. a full or simplified representation of the

spectrum or the average power sliced every 10 ms). As we have seen, semantic

technologies are suitable for reppresenting the higher level acoustic metadata.

These are in fact both concise and can be used directly in semantic queries

using, e.g., SparQL. Lower level metadata however, e.g. the MPEG7 features

extracted by extractors like [Ref MPEG7AUDIODB] is very ill suited to be

represented in RDF and is better kept in mpeg-7/xml format for serialization

and interchange.

Semantic technologies could be of use in describing such "chunks" of low

level metadata, e.g. describing what the content is in terms of describing

which features are contained and at which quality. While this would be a

duplicaiton of the information encoded in the MPEG-7/XML, it might be of use in

semantic queries which select tracks also based on the availability of rich low

level metadata.

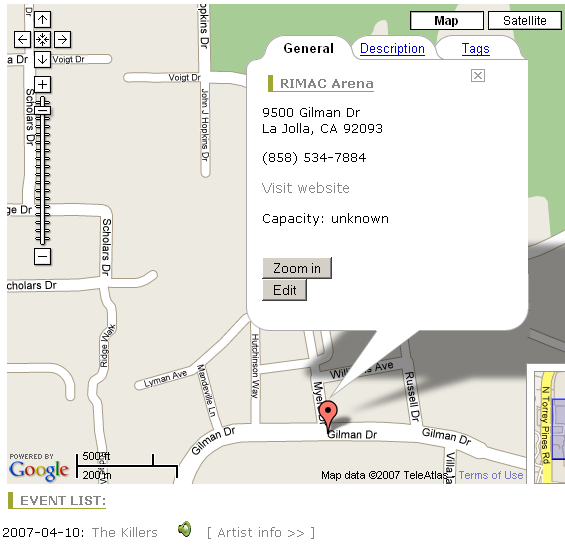

Motivating Example

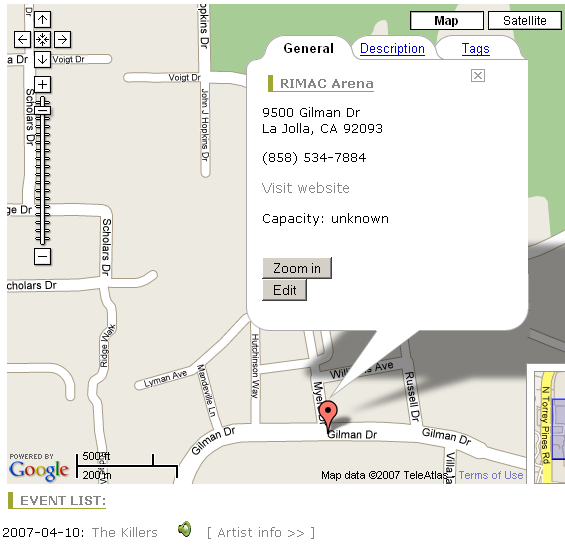

The next gig.

Image courtesy of

Oscar Celma, used with permission.

Commuting is a big issue in any modern society. Semantically Personalized

Playlists might provide both relief and actually benefit in time that cannot be

devoted to actively productive activities. Filippo commutes every morning an

average of 50+-10 minutes. Before leaving he connects his USB stick/mp3 player

to have it "filled" with his morning playlist. The process is completed in 10

seconds, afterall is just 50Mb. he is downloading. During the time of his

commute, Filippo will be offered a smooth flow of news, personal daily ,

entertainment, and cultural snippets from audiobooks and classes.

Musical content comes from Filippo personal music collection or via a content

provider (e.g. a low cost thanks to a one time pay license). Further audio

content comes from podcasts but also from text to speech reading blog posts,

emails, calendar items etc.

Behind the scenes the system works by a combination of semantic queries and

ad-hoc algorithms. Semantic queries operate on an RDF database collecting the

semantic reppresentation of music metadata (as explained in section 1), as well

as annotations on podcasts, news items, audiobooks, and "semantic desktop

items" that is represting Filippo's personal desktop information -such as

emails and calendar entries.

Ad-hoc algorithms operate on low level metadata to provide smooth transition

among tracks. Algorithms for text analysis provide further links among songs

and links within songs, pieces of news, emails etc.

At a higher level, a global optimization algorithm takes care of the final

playlist creation. This is done by balancing the need for having high priority

items played first (e.g. emails from addresss considered important) with the

overall goal of providing a smooth and entertaining experience (e.g.

interleaving news with music etc).

Semantics can help in providing "related information or content" which can be

put adjacent to the actual core content. This can be done in relative freedom

since the content can be at any time skipped by the user using simply the

forward button.

Upcoming concerts

John has been listening to the "Snow Patrol" band for a while. He discovered

the band while listening to one of his favorite podcasts about alternative

music. He has to travel to San Diego next week, and he is finding upcoming

concerts that he would enjoy there, and he asks his personalized semantic web

music service to provide him with some recommendations of upcoming gigs in the

area, and decent bars to have a beer.

<!-- San Diego geolocation -->

<foaf:based_near geo:lat='32.715' geo:long='-117.156'/>

The system is tracking user listening habits, so it detects than one song

from "The Killers" band (scrapped from their website) sounds similar to the

last song John has listened to from "Snow Patrol". Moreover, both bands have

similar styles, and there are some podcasts that contain songs from both bands

in the same session. Interestingly enough, the system knows that the Killers

are playing close to San Diego next weekend, thus it recommends to John to

assist to that gig.

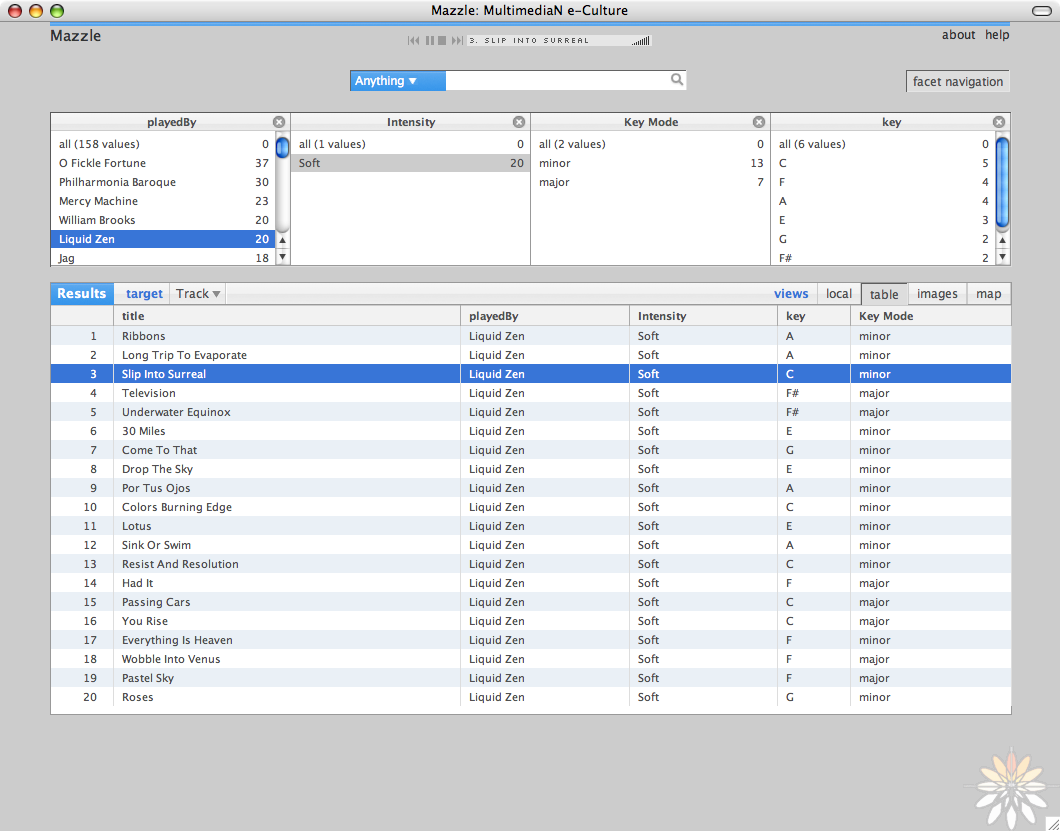

Facet browsing of Music Collections

Michael has a brand new (last generation-posh) iPod. He is looking for some

music using the classic hierarchical navigation

(Genre->Artist->Album->Songs). But the main problem is that he is not

able to find a decent list of songs (from his 100K music collection) to move

into his iPod. On the other hand, facet browsing has recently become popular as

a user friendly interface to data repositories.

/facet system [Hildebrand] presents a new and intuitive way to navigate large

collections, using several facets or aspects, of multimedia assets. /facet

extends browsing of Semantic Web data in four ways. First, users are able to

select and navigate through facets of resources of any type and to make

selections based on properties of other, semantically related, types. Second,

it addresses a disadvantage of hierarchy-based navigation by adding a keyword

search interface that dynamically makes semantically relevant suggestions.

Third, the /facet interface, allows the inclusion of facet-specific display

options that go beyond the hierarchical navigation that characterizes current

facet browsing. Fourth, the browser works on any RDF dataset without any

additional configuration.

Thus, based on a RDF description of music titles, the user

can navigate through music facets, such as Rhythm (beats per minute), Tonality

(Key and mode), Intensity of the piece (moderate, energetic, etc.)

A fully functional example can be seen at

http://slashfacet.semanticweb.org/music/mazzle

Nowadays, in the context of the World Wide Web, the increasing amount of

available music makes very difficult, to the user, to find music he/she would

like to listen to. To overcome this problem, there are some audio search

engines that can fit the user's needs (for example:

http://search.singingfish.com/, http://audio.search.yahoo.com/,

http://www.audiocrawler.com/,

http://www.alltheweb.com/?cat=mp3, http://www.searchsounds.net

and http://www.altavista.com/audio/).

Some of the current existing search engines are nevertheless not fully

exploited because their companies would have to deal with copyright infringing

material. Music search engines have a crucial component: an audio crawler, that

scans the web and gathers related information about audio files.

Moreover, describing music it not an easy task. As presented in section 1,

music metadata copes with several categories (editorial, acoustic, and

cultural). Yet, none of the audio metadata used in practice (e.g ID3, OGG

Vorbis, etc.) can fully describe all these facets. Actually, metadata for

describing music are mostly tags implemented in the Key-Value form

[TAG]=[VALUE], for instance, "ARTIST=The Killers".

The following section introduces, then, the mappings between current audio

vocabularies within the Semantic Web technologies. This will allow to extend

the description of a piece of music, as well as adding explicit semantics.

Integrating Various Vocabularies Using RDF

In this section we present a way to integrate several audio vocabularies into

a single one, based on RDF. For more details about the audio vocabularies, the

reader is refered to

Vocabularies - Audio Content Section, and

Vocabularies - Audio Ontologies Section.

This section will focus on the ID3 and OGG Vorbis metadata initiatives, as

they are the most used ones. Though, both vocabularies cope only editorial

data. Moreover, a first mapping with the

Music Ontology is presented, too.

ID3 is a metadata container most often

used in conjunction with the MP3 audio file format. It allows information such

as the title, artist, album, track number, or other information about the file

to be stored in the file itself (from Wikipedia).

The most important metadata descriptors are:

-

Artist name <=> <foaf:name></foaf:name>

-

Album name <=> <mo:Record><dc:title>Album

name</dc:title></mo:Record>

-

Song title <=> <mo:Track><dc:title>Album

name</dc:title></mo:Track>

-

Year

-

Track number <=> <mo:trackNum>Track

number</mo:trackNum></mo:Track>

-

Genre (from a predefined list of more than 100 genres) <=> <mo:Genre>Genre

name</mo:Genre>

OGG Vorbis metadata, called comments,

support metadata 'tags' similar to those implemented in the ID3. The metadata

is stored in a vector of strings, encoded in UTF-8

-

TITLE <=> <mo:Track><dc:title>Album

name</dc:title></mo:Track>

-

VERSION: The version field may be used to differentiate multiple versions of

the same track title

-

ALBUM <=> <mo:Record><dc:title>Album

name</dc:title></mo:Record>

-

TRACKNUMBER <=> <mo:trackNum>Track

number</mo:trackNum></mo:Track>

-

ARTIST <=> <foaf:name></foaf:name>

-

PERFORMER <=> <foaf:name></foaf:name> ???

-

COPYRIGHT: Copyright attribution

-

LICENSE: License information, eg, 'All Rights Reserved', 'Any Use Permitted', a

URL to a license such as a Creative Commons license

-

ORGANIZATION: Name of the organization producing the track (i.e ‘a record

label’)

-

DESCRIPTION: A short text description of the contents

-

GENRE <=> <mo:Genre>Genre name</mo:Genre>

-

DATE

-

LOCATION: Location where the track was recorded

RDFizing songs

We present a way to RDFize tracks based on the

Music Ontology.

Example: Search a song into MusicBrainz

and RDFize results. This first example shows how to query the MusicBrainz music repository, and RDFize the results based on the Music

Ontology. Try a complete example at

http://foafing-the-music.iua.upf.edu/RDFize/track?artist=U2&title=The+fly.

The parameters are song title (The Fly) and artist name (U2).

<mo:Track rdf:about='http://musicbrainz.org/track/dddb2236-823d-4c13-a560-bfe0ffbb19fc'>

<mo:puid rdf:resource='2285a2f8-858d-0d06-f982-3796d62284d4'/>

<mo:puid rdf:resource='2b04db54-0416-d154-4e27-074e8dcea57c'/>

<dc:title>The Fly</dc:title>

<dc:creator>

<mo:MusicGroup rdf:about='http://musicbrainz.org/artist/a3cb23fc-acd3-4ce0-8f36-1e5aa6a18432'>

<foaf:img rdf:resource='http://ec1.images-amazon.com/images/P/B000001FS3.01._SCMZZZZZZZ_.jpg'/>

<mo:musicmoz rdf:resource='http://musicmoz.org/Bands_and_Artists/U/U2/'/>

<foaf:name>U2</foaf:name>

<mo:discogs rdf:resource='http://www.discogs.com/artist/U2'/>

<foaf:homepage rdf:resource='http://www.u2.com/'/>

<foaf:member rdf:resource='http://musicbrainz.org/artist/0ce1a4c2-ad1e-40d0-80da-d3396bc6518a'/>

<foaf:member rdf:resource='http://musicbrainz.org/artist/1f52af22-0207-40ac-9a15-e5052bb670c2'/>

<foaf:member rdf:resource='http://musicbrainz.org/artist/a94e530f-4e9f-40e6-b44b-ebec06f7900e'/>

<foaf:member rdf:resource='http://musicbrainz.org/artist/7f347782-eb14-40c3-98e2-17b6e1bfe56c'/>

<mo:wikipedia rdf:resource='http://en.wikipedia.org/wiki/U2_%28band%29'/>

</mo:MusicGroup>

</dc:creator>

</mo:Track>

Example: The parameter is a URL that contains an MP3 file. In this case it

reads the ID3 tags from the MP3 file. See an output example at

http://foafing-the-music.iua.upf.edu/RDFize/track?url=http://www.archive.org/download/bt2002-11-21.shnf/bt2002-11-21d1.shnf/bt2002-11-21d1t01_64kb.mp3

(it might take a little while).

<mo:Track rdf:about='http://musicbrainz.org/track/7201c2ab-e368-4bd3-934f-5d936efffcdc'>

<dc:creator>

<mo:MusicGroup rdf:about='http://musicbrainz.org/artist/6b28ecf0-94e6-48bb-aa2a-5ede325b675b'>

<foaf:name>Blues Traveler</foaf:name>

<mo:discogs rdf:resource='http://www.discogs.com/artist/Blues+Traveler'/>

<foaf:homepage rdf:resource='http://www.bluestraveler.com/'/>

<foaf:member rdf:resource='http://musicbrainz.org/artist/d73c9a5d-5d7d-47ec-b15a-a924a1a271c4'/>

<mo:wikipedia rdf:resource='http://en.wikipedia.org/wiki/Blues_Traveler'/>

<foaf:img rdf:resource='http://ec1.images-amazon.com/images/P/B000078JKC.01._SCMZZZZZZZ_.jpg'/>

</mo:MusicGroup>

</dc:creator>

<dc:title>Back in the Day</dc:title>

<mo:puid rdf:resource='0a57a829-9d3c-eb35-37a8-d0364d1eae3a'/>

<mo:puid rdf:resource='02039e1b-64bd-6862-2d27-3507726a8268'/>

</mo:Track>

Example: Once the songs have been RDFized, we can ask last.fm

for the latest tracks a user has been listening to, and then RDFize them.

http://foafing-the-music.iua.upf.edu/draft/RDFize/examples/lastfm_tracks.rdf is an example that

shows the latest tracks a user (RJ) has been listening to.

You can try it at

http://foafing-the-music.iua.upf.edu/RDFize/lastfm_tracks?username=RJ

Introduction

More and more news is produced and consumed each day. News generally consists

of mainly textual stories, which are more and more often illustrated with

graphics, images and videos. News can be further processed by professional

(newspapers), directly accessible for web users through news agencies, or

automatically aggregated on the web, generally by search engine portal and not

without copyright problems.

For easing the exchange of news, the

International Press Telecommunication Council (IPTC) is currently

developping the NewsML G2 Architecture (NAR) whose goal is to provide a

single generic model for exchanging all kinds of newsworthy information, thus

providing a framework for a future family of IPTC news exchange standards

[NewsML-G2]. This family includes NewsML, SportsML, EventsML, ProgramGuideML and a

future WeatherML. All are XML-based languages used for describing not only the

news content (traditional metadata), but also their management and packaging,

or related to the exchange itself (transportation, routing).

However, despite this general framework, interoperability problems can occur.

News is about the world, so its metadata might use specific controlled

vocabularies. For example, IPTC itself is developing the IPTC News Codes [NewsCodes]

that currently contain 28 sets of controlled terms. These terms will be the

values of the metadata in the NewsML G2 Architecture. The news descriptions

often refer to other thesaurus and controlled vocabularies, that might come

from the industry (for example, XBRL [XBRL] in the financial domain), and all are

represented using different formats. From the media point of view, the pictures

taken by the journalist come with their EXIF metadata [EXIF]. Some videos might be

described using the EBU format [EBU] or even with MPEG-7 [MPEG-7].

We illustrate these interoperability issues between domain vocabularies and

other multimedia standards in the financial news domain. For example, the

Reuters Newswires and the

Dow Jones Newswires provide categorical

metadata associated with news feeds. The particular vocabularies of category

codes, however, have been developed independently, leading to clear

interoperability issues. The general goal is to improve the search and the

presentation of news content in such an heterogeneous environment. We provide a

motivating example that highlight the issues discussed above and we present a

potential solution to this problem, which leverages Semantic Web technologies.

Motivating Example

XBRL (Extended Business Reporting Language) [XBRL] is a standardized way of

enconding financial information of companies, and about the management

structure, location, number of employes, etc. of such entities. XBRL is

basically about "quantitative" information in the financial domain, and is

based on the periodic reports generated by the companies. But for many Business

Intelligence applications, there is also a need to consider "qualitative"

information, which is mostly delivered by news articles. The problem is

therefore how to optimally integrate information from the periodic reports and

the day to day information provided by specialized news agencies. Our goal is

to provide a platform that allows more semantics in automated ranking of

creditworthiness of companies. The financial news are playing an important role

since they provide "qualitative" information on companies, branches, trends,

countries, regions etc.

There are quite a few news feeds services within the financial domain,

including the Dow Jones Newswire and Reuters. Both Reuters and Dow Jones

provides an XML based representation and have associated with each article

metadata with date, time, headline, full story, company ticker symbol, and

category codes.

Example 1: NewsML 1 Format

We consider the news feeds similar to that published by Reuters, where

along with the text of the article, there is associated metadata in the form of

XML tags. The terms in these tags are associated with a controlled vocabulary

developed by Reuters and other industry bodies. Below is a sample news article

formatted in NewsML 1, which is similar to the structural format used by

Reuters. For exposition, the metadata tags associated with the article are

aligned with those used by Reurters.

<?xml version="1.0" encoding="UTF-8"?>

<NewsML Duid="MTFH93022_2006-12-14_23-16-17_NewsML">

<Catalog Href="..."/>

<NewsEnvelope>

<DateAndTime>20061214T231617+0000</DateAndTime>

<NewsService FormalName="..."/>

<NewsProduct FormalName="TXT"/>

<Priority FormalName="3"/>

</NewsEnvelope>

<NewsItem Duid="MTFH93022_2006-12-14_23-16-17_NEWSITEM">

<Identification>

<NewsIdentifier>

<ProviderId>...</ProviderId>

<DateId>20061214</DateId>

<NewsItemId>MTFH93022_2006-12-14_23-16-17</NewsItemId>

<RevisionId Update="N" PreviousRevision="0">1</RevisionId>

<PublicIdentifier>...</PublicIdentifier>

</NewsIdentifier>

<DateLabel>2006-12-14 23:16:17 GMT</DateLabel>

</Identification>

<NewsManagement>

<NewsItemType FormalName="News"/>

<FirstCreated>...</FirstCreated>

<ThisRevisionCreated>...</ThisRevisionCreated>

<Status FormalName="Usable"/>

<Urgency FormalName="3"/>

</NewsManagement>

<NewsComponent EquivalentsList="no" Essential="no" Duid="MTFH92062_2002-09-23_09-29-03_T88093_MAIN_NC" xml:lang="en">

<TopicSet FormalName="HighImportance">

<Topic Duid="t1">

<TopicType FormalName="CategoryCode"/>

<FormalName Scheme="MediaCategory">OEC</FormalName>

<Description xml:lang="en">Economic news, EC, business/financial pages</Description>

</Topic>

<Topic Duid="t2">

<TopicType FormalName="Geography"/>

<FormalName Scheme="N2000">DE</FormalName>

<Description xml:lang="en">Germany</Description>

</Topic>

</TopicSet>

<Role FormalName="Main"/>

<AdministrativeMetadata>

<FileName>MTFH93022_2006-12-14_23-16-17.XML</FileName>

<Provider>

<Party FormalName="..."/>

</Provider>

<Source>

<Party FormalName="..."/>

</Source>

<Property FormalName="SourceFeed" Value="IDS"/>

<Property FormalName="IDSPublisher" Value="..."/>

</AdministrativeMetadata>

<NewsComponent EquivalentsList="no" Essential="no" Duid="MTFH93022_2006-12-14_23-16-17" xml:lang="en">

<Role FormalName="Main Text"/>

<NewsLines>

<HeadLine>Insurances get support</HeadLine>

<ByLine/>

<DateLine>December 14, 2006</DateLine>

<CreditLine>...</CreditLine>

<CopyrightLine>...</CopyrightLine>

<SlugLine>...</SlugLine>

<NewsLine>

<NewsLineType FormalName="Caption"/>

<NewsLineText>Insurances get support</NewsLineText>

</NewsLine>

</NewsLines>

<DescriptiveMetadata>

<Language FormalName="en"/>

<TopicOccurrence Importance="High" Topic="#t1"/>

<TopicOccurrence Importance="High" Topic="#t2"/>

</DescriptiveMetadata>

<ContentItem Duid="MTFH93022_2006-12-14_23-16-17">

<MediaType FormalName="Text"/>

<Format FormalName="XHTML"/>

<Characteristics>

<Property FormalName="ContentID" Value="urn:...20061214:MTFH93022_2006-12-14_23-16-17_T88093_TXT:1"/>

...

</Characteristics>

<DataContent>

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<title>Insurances get support</title>

</head>

<body>

<h1>The Senate of Germany wants to constraint the participation of clients to the hidden reserves</h1>

<p>

DÃœSSELDORF The German Senate supports the point of view of insurance companies in a central point of the new law

defining insurance contracts, foreseen for 2008. In a statement, the Senators show disagreements with the proposal

of the Federal Government, who was in favor of including investment bonds in the hidden reserves, which in the

next future should be accessible to the clients of the insurance companies.

...

</p>

</body>

</html>

</DataContent>

</ContentItem>

</NewsComponent>

</NewsComponent>

</NewsItem>

</NewsML>

Example 2: NewsML G2 Format

If we consider the same data, but expressed in NewsML G2:

<?xml version="1.0" encoding="UTF-8"?>

<newsMessage xmlns="http://iptc.org/std/newsml/2006-05-01/" xmlns:xhtml="http://www.w3.org/1999/xhtml">

<header>

<date>2006-12-14T23:16:17Z</date>

<transmitId>696</transmitId>

<priority>3</priority>

<channel>ANA</channel>

</header>

<itemSet>

<newsItem guid="urn:newsml:afp.com:20060720:TX-SGE-SNK66" schema="0.7" version="1">

<catalogRef href="http://www.afp.com/newsml2/catalog-2006-01-01.xml"/>

<itemMeta>

<contentClass code="ccls:text"/>

<provider literal="Handelsblatt"/>

<itemCreated>2006-07-20T23:16:17Z</itemCreated>

<pubStatus code="stat:usable"/>

<service code="srv:Archives"/>

</itemMeta>

<contentMeta>

<contentCreated>2006-07-20T23:16:17Z</contentCreated>

<creator/>

<language literal="en"/>

<subject code="cat:04006002" type="ctyp:category"/> #cat:04006002= banking

<subject code="cat:04006006" type="ctyp:category"/> #cat:04006006= insurance

<slugline separator="-">Insurances get support</slugline>

<headline>The Senate of Germany wants to constraint the participation of clients to the hidden reserves</headline>

</contentMeta>

<contentSet>

<inlineXML type="text/plain">

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<title>Insurances get support</title>

</head>

<body>

<h1>The Senate of Germany wants to constraint the participation of clients to the hidden reserves</h1>

<p>

DÃœSSELDORF The German Senate supports the point of view of insurance companies in a central point of the new law defining

insurance contracts, foreseen for 2008. In a statement, the Senators show disagreements with the proposal of the Federal

Government, who was in favor of including investment bonds in the hidden reserves, which in the next future should be accessible

to the clients of the insurance companies.

...

</p>

</body>

</html>

</inlineXML>

</contentSet>

</newsItem>

</itemSet>

</newsMessage>

Example 3: German Broadcaster Format

The terms in the tags displayed just above are associated with a controlled

vocabulary developed by Reuters. If we consider the internal XML encoding that

has been proposed provisionally by a running European project (the MUSING

project) for the encoding of similar articles in German

Newspapers (mapping the HTML tags of the online articles into XML and adding

others), we have the following:

<ID>1091484</ID> # Internal encoding

<SOURCE>Handelsblatt</SOURCE> # Name of the newspaper we get the information from

<DATE>14.12.2006</DATE> # Date of publication

<NUMBER>242</NUMBER> # Numbering of the publication

<PAGE>27</PAGE> # Page number in the publication

<LENGTH>111</LENGTH> # The number of lines in the main article

<ACTIVITY_FIELD>Banking_Insurance</ACTIVITY_FIELD> # corresponding to the financial domain reported in the article

<TITLE>Insurances get support</TITLE>

<SUBTITLE>The Senate of Germany wants to constraint the participation of clients to the hidden reserves</SUBTITLE>

<ABSTRACT></ABSTRACT>

<AUTHORS>Lansch, Rita</AUTHORS>

<LOCATION>Federal Republic of Germany</LOCATION>

<KEYWORDS>Bank supervision, Money and Stock exchange, Bank</KEYWORDS>

<PROPERNAMES>Meister, Edgar Remsperger, Hermann Reckers, Hans Fabritius, Hans Georg Zeitler, Franz-Christoph</PROPERNAMES>

<ORGANISATIONS>Bundesanstalt für Finanzdienstleistungsaufsicht BAFin</ORGANISATIONS>

<TEXT>DÃœSSELDORF The German Senate supports the point of view of insurance companies in a central point of the new law

defining insurance contracts, foreseen for 2008. In a statement, the Senators show disagreements with the proposal of the

Federal Government, who was in favor of including investment bonds in the hidden reserves, which in the next future should

be accessible to the clients of the insurance companies....</TEXT>

Example 4: XBRL Format

Structured data and documents such as Profit & Loss tables can finally be

mapped onto existing taxonomies, like XBRL, which is an emerging standard for

Business Reporting.

XBRL definition in Wikipedia: "XBRL is an emerging XML-based standard to

define and exchange business and financial performance information. The

standard is governed by a not-for-profit international consortium

XBRL International Incorporated

of approximately 450 organizations, including regulators, government agencies,

infomediaries and software vendors. XBRL is a standard way to communicate

business and financial performance data. These communications are defined by

metadata set in taxonomies. Taxonomies capture the definition of individual

reporting elements as well as the relationships between elements within a

taxonomy and in other taxonomies.

The relations between elements supported, for the time being, (at least for

the German Accounting Principles expressed in the corresponding XBRL taxonomy,

see http://www.xbrl.de/) are:

-

child-parent

-

parent-child

-

dimension-element

-

element-dimension

In fact the child-parent/parent-child relation haves to be understood as

part-of relations within finanical reporting documents rather than as sub-class

relations, as we noticed in an attempt to formlize XBRL in OWL, in the context

of the European MUSING R&D project (http://www.musing.eu/).

The table below shows how a balance sheet looks like:

| structured P&L

|

2002 EUR

|

2002 EUR

|

2002 EUR

|

|

Sales

|

850.000,00

|

800.000,00

|

300.000,00

|

|

Changes in stock

|

171.000,00

|

104.000,00

|

83.000,00

|

|

Own work capitalized

|

0,00

|

0,00

|

0,00

|

|

Total output

|

1.021.000,00

|

904.000,00

|

383.000,00

|

|

...

|

|

|

|

|

Net income/net loss for the year

|

139.000,00

|

180.000,00

|

-154.000,00

|

|

|

2002

|

2001

|

2000

|

|

Number of Employees

|

27

|

25

|

23

|

|

....

|

|

|

|

There is a lot of variations in both the way the information can be displayed

(number of columns, use of fonts, etc.) but also in the terminology used: the

financial terms in the leftmost column are not normalized at all. Also the

figures are not normalized (clearly, the company has more than just "27"

employees, but it is not indicated in the table if we deal with 27000

employess). This makes this kind of information unable to be used by semantic

applications. XBRL is a very important step in the normalization of such data,

as can be seen in the following example displaying the XBRL encoding of the

kind of data that was presented just above in the table:

<group xsi:schemaLocation="http://www.xbrl.org/german/ap/ci/2002-02-15 german_ap.xsd">

<numericContext id="c0" precision="8" cwa="false">

<entity>

<identifier scheme="urn:datev:www.datev.de/zmsd">11115,129472/12346</identifier>

</entity>

<period>

<startDate>2002-01-01</startDate>

<endDate>2002-12-31</endDate>

</period>

<unit>

<measure>ISO4217:EUR</measure>

</unit>

</numericContext>

<numericContext id="c1" precision="8" cwa="false">

<entity>

<identifier scheme="urn:datev:www.datev.de/zmsd">11115,129472/12346</identifier>

</entity>

<period>

<startDate>2001-01-01</startDate>

<endDate>2001-12-31</endDate>

</period>

<unit>

<measure>ISO4217:EUR</measure>

</unit>

</numericContext>

<numericContext id="c2" precision="8" cwa="false">

<entity>

<identifier scheme="urn:datev:www.datev.de/zmsd">11115,129472/12346</identifier>

</entity>

<period>

<startDate>2000-01-01</startDate>

<endDate>2000-12-31</endDate>

</period>

<unit>

<measure>ISO4217:EUR</measure>

</unit>

</numericContext>

<t:bs.ass numericContext="c2">1954000</t:bs.ass>

<t:bs.ass.accountingConvenience numericContext="c0">40000</t:bs.ass.accountingConvenience>

<t:bs.ass.accountingConvenience numericContext="c1">70000</t:bs.ass.accountingConvenience>

<t:bs.ass.accountingConvenience numericContext="c2">0</t:bs.ass.accountingConvenience>

<t:bs.ass.accountingConvenience.changeDem2Eur numericContext="c0">0</t:bs.ass.accountingConvenience.changeDem2Eur>

<t:bs.ass.accountingConvenience.changeDem2Eur numericContext="c1">20000</t:bs.ass.accountingConvenience.changeDem2Eur>

<t:bs.ass.accountingConvenience.changeDem2Eur numericContext="c2">0</t:bs.ass.accountingConvenience.changeDem2Eur>

<t:bs.ass.accountingConvenience.startUpCost numericContext="c0">40000</t:bs.ass.accountingConvenience.startUpCost>

<t:bs.ass.accountingConvenience.startUpCost numericContext="c1">50000</t:bs.ass.accountingConvenience.startUpCost>

<t:bs.ass.accountingConvenience.startUpCost numericContext="c2">0</t:bs.ass.accountingConvenience.startUpCost>

<t:bs.ass.currAss numericContext="c0">571500</t:bs.ass.currAss>

<t:bs.ass.currAss numericContext="c1">558000</t:bs.ass.currAss>

<t:bs.ass.currAss numericContext="c2">394000</t:bs.ass.currAss>

</group>

In the XBRL example shown just above, one can see the normalization of the

periods for which the reporting is valid, and for the currency used in the

report. The annotation of the financial values of the financial items is then

proposed on the base of a XBRL tag (language independent) in the context of the

uniquely identified period (the "c0", "c1" etc), and with the encoded currency.

The XBRL representation is marking a real progress compared to the

"classical" way of displaying financial information. And as such XBRL allows

for some semantics, describing for example various types of relations. The need

for more semantics is mainly driven by applications requiring merging of the

quantitative information encoded in XBRL with other kind of information, which

is crucial in Business Intelligence scenarios, for example merging balance

sheet information with information coming from newswires or with information in

related domain, like politics. Therefore some initiatives started looking at

representing information encoded in XBRL within OWL, as the basic ontology

language representation in the Semantic Web community [Declerck], [Lara].

Potential Solution: Converting Various Vocabularies into RDF

In this section, we discuss a potential solution to the problems highlighted

in this document. We propose utilizing Semantic Web technologies for the

purpose of aligning these standards and controlled vocabularies. Specifically,

we discuss adding an RDF/OWL layer on top of these standards and vocabularies

for the purpose of data integration and reuse. The following sections discuss

this approach in more detail.

XBRL in the Semantic Web

We sketch how we convert XBRL to OWL. The XBRL OWL base taxonomy was manually

developed using the OWL plugin of the Protege knowledge base editor [Knublauch]. The

version of XBRL we used together with the Accounting Principles for German

consists of 2,414 concepts, 34 properties, and 4,780 instances. Overall, this

translates into 24,395 unique RDF triples. The basic idea during our export was

that even though we are developing an XBRL taxonomy in OWL using Protege, the

information that is stored on disk is still RDF on the syntactic level. We were

thus interested in RDF data base systems which make sense of the semantics of

OWL and RDFS constructs such as rdfs:subClassOf or owl:equivalentClass. We have

been experimenting with the Sesame open-source middleware framework for storing

and retrieving RDF data [Broekstra].

Sesame partially supports the semantics of RDFS and OWL constructs via

entailment rules that compute "missing" RDF triples (the deductive closure) in

a forward-chaining style at compile time. Since sets of RDF statements

represent RDF graphs, querying information in an RDF framework means to specify

path expressions. Sesame comes with a very powerful query language, SeRQL,

which includes (i) generalised path expressions, (ii) a restricted form of

disjunction through optional matching, (iii) existential quantifiation over

predicates, and (iv) Boolean constraints. From an RDF point of view, additional

62,598 triples were generated through Sesame's (incomplete) forward chaining

inference mechanism.

For proof of concept, we looked at the freely available financial reporting

taxonomies(http://www.xbrl.org/FRTaxonomies/)

and took the final German AP Commercial and Industrial (German Accounting

Principles) taxonomy (February 15, 2002;

http://www.xbrl-deutschland.de/xe news2.htm), acknowledged by XBRL

International. The taxonomy can be obtained as a packed zip file from

http://www.xbrl-deutschland.de/germanap.zip.

xbrl-instance.xsd specifies the XBRL base taxonomy using XML Schema. The file

makes use of XML schema datatypes, such as xsd:string or xsd:date, but also

defines simple types (simpleType), complex types (complexType), elements

(element), and attributes (attribute). Element and attribute declarations are

used to restrict the usage of elements and attributes in XBRL XML documents.

Since OWL only knows the distinction between classes and properties, the

correpondences between XBRL and OWL description primitives is not a one-to-one

mapping:

However, OWL allows to characterize properties more precisely than just

having only a domain and a range. We can mark a property as functional (instead

of being relational, the default case), meaning that it takes at most one

value. This clearly means that a property must not have a value for each

instance of a class on which it is defined. Thus a functional property is in

fact a partial (and must not necessarily be a total) function. Exactly the

distinction functional vs. relational is represented by the attribute vs.

element distinction, since multiple elements are allowed within a surrounding

context. However, at most one attribute-value combination for each attribute

name is allowed within an element:

| XBRL |

OWL |

| simple type |

class |

| complex type |

class |

| attribute |

functional property |

| element |

relational property |

Simple and complex types differs from one another in that simple types are

essentially defined as extensions of the basic XML Schema datatypes, whereas

complex types are XBRL specifications that do not build upon XSD types, but

instead introduce their own element and attribute descriptions. Here are simple

type specifications found in the base terminology of XBRL, located in the file

xbrl-instance.xsd:

Since OWL only claims that "As a minimum, tools must support datatype

reasoning for the XML Schema datatypes xsd:string and xsd:integer." [OWL, p. 30]

and because "It is not illegal, although not recommended, for applications to

define their own datatypes ..." [OWL, p. 29], we have decided to implement a

workaround that represents all the necessary XML Schema datatypes used in XBRL.

This was done by having a wrapper type for each simple XML Schema type. For

instance, "monetary" is a simple subtype of the wrapper type "decimal":

<restriction base="decimal"/>. Below we show the first lines of the

actual OWL version of XBRL we have implemented:

<?xml version="1.0"?>

<rdf:RDF xmlns="http://xbrl.dfki.de/main.owl#"

xmlns:protege="http://protege.stanford.edu/plugins/owl/protege#"

xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"

xmlns:xsd="http://www.w3.org/2001/XMLSchema#"

xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"

xmlns:owl="http://www.w3.org/2002/07/owl#"

xml:base="http://xbrl.dfki.de/main.owl">

<owl:Ontology rdf:about=""/>

<owl:Class rdf:ID="bs.ass.fixAss.tan.machinery.installations">

<rdfs:subClassOf>

<owl:Class rdf:ID="Locator"/>

</rdfs:subClassOf>

</owl:Class>

<owl:Class rdf:ID="nt.ass.fixAss.fin.loansToParticip.net.addition">

<rdfs:subClassOf>

<owl:Class rdf:about="#Locator"/>

</rdfs:subClassOf>

</owl:Class>

<owl:Class rdf:ID="nt.ass.fixAss.fin.loansToSharehold.net.beginOfPeriod.endOfPrevPeriod">

<rdfs:subClassOf>

<owl:Class rdf:about="#Locator"/>

</rdfs:subClassOf>

</owl:Class>

<owl:Class rdf:ID="nt.ass.fixAss.fin.gross.revaluation.comment">

<rdfs:subClassOf>

<owl:Class rdf:about="#Locator"/>

</rdfs:subClassOf>

</owl:Class>

<owl:Class rdf:ID="nt.ass.fixAss.fin.securities.gross.beginOfPeriod.otherDiff">

<rdfs:subClassOf>

<owl:Class rdf:about="#Locator"/>

</rdfs:subClassOf>

</owl:Class>

...

</owl:Ontology>

</rdf:RDF>

The German Accounting Principles taxonomy consists of 2,387 concepts, plus 27

concepts from the base taxonomy for XBRL. 34 properties were defined and 4,780

instance fnally generated.

Besides the ontologization of XBRL, we would propose to build an ontology on

the top of the taxonomic organization of NACE codes. Then we need a clear

ontological representation of the time units/information relevant in the

domain. And last but not least, we would also use all the

classification/categorization information of NewsML/IPTC to use more accurate

semantic Metadata for the encoding of the (financial) news articles.

EXIF in the Semantic Web

One of today's commonly used image format and metadata standard is the

Exchangeable Image File Format [EXIF]. This file format provides a standard

specification for storing metadata regarding image. Metadata elements

pertaining to the image are stored in the image file header and are marked with

unique tags, which serves as an element identifying.

As we note in this document, one potentional way to integrate EXIF metadata

with additinoal news/multimedia metadata formats is to add an RDF layer on top

of the metadata standards. Recently there has been efforts to encode EXIF

metadata in such Semantic Web standards, which we briefly detail below. We note

that both of these ontologies are semantically very similar, thus this issue is

not addressed here. Essentially both are a straightforward encodings of the

EXIF metadata tags for images. There are some syntactic differences,

but again they are quite similar; they primarily differ in their naming

conventions utilized.

The Kanzaki EXIF RDF Schema provides an encoding of the basic EXIF

metadata tags in RDFS. Essentially, these are the tags defined from Section 4.6

of [EXIF]. We also note here that relevant domains and ranges are utilized as

well. It additionally provides an EXIF conversion service, EXIF-to-RDF,

which extracts EXIF metadata from images and automatically maps it to

the RDF encoding. In particular the service takes a URL to an EXIF image and

extracts the embedded EXIF metadata. The service then converts this metadata to

the RDF schema and returns this to the user.

The Norm Walsh EXIF RDF Schema provides another encoding of the basic

EXIF metadata tags in RDFS. Again, these are the tags defined from Section 4.6

of [EXIF]. It additionally provides JPEGRDF, which is a Java application that

provides an API to read and manipulate EXIF meatadata stored in JPEG images.

Currently, JPEGRDF can can extract, query, and augment the EXIF/RDF data stored

in the file headers. In particular, we note that the API can be used to convert

existing EXIF metadata in file headers to the schema. The

resulting RDF can then be stored in the image file header, etc. (Note here that

the API's functionality greatly extends that which was briefly presented here).

Putting All That Together

Some text showing how this qualitative and quantitative information benefits

to interoperate ...

Introduction

Tags are what may be the simplest form of annotation: simple user-provided

keywords that are assigned to resources, in order to support subsequent

retrieval. In itself, this idea is not particularly new or revolutionary:

keyword-based retrieval has been around for a while. In contrast to the formal

semantics provided by the Semantic Web standards, tags have no semantic

relations whatsoever, including a lack of hierarchy; tags are just flat

collections of keywords.

There are however new dimensions that have boosted the popularity of this

approach and given a new perspective on an old theme: low-cost applicability

and collaborative tagging.

Tagging lowers the barrier of metadata annotation, since it requires minimal

effort on behalf of annotators: there are no special tools or complex interface

that the user needs to get familiar with, and no deep understanding of logic

principles or formal semantics required – just some standard technical

expertise. Tagging seems to work in a way that is intuitive to most people, as

demonstrated by its widespread adoption, as well as by certain studies

conducted on the field [Trant]. Thus, it helps bridging the 'semantic gap' between

content creators and content consumers, by offering 'alternative points of

access' to document collections.

The main idea behind collaborative tagging is simple: collaborative tagging

platforms (or, alternatively, distributed classification systems - DCSs [Mejias])

provide the technical means, usually via some sort of web-based interface, that

support users in tagging resources. What is the important aspect of this is

that they aggregate collections of tags that an individual uses, or his tag

vocabulary, called a personomy [Hotho], into what has been termed a folksonomy: a

collection of all personomies [Mathes, Smith].

Some of the most popular collaborative tagging systems are Delicious

(bookmarks), Flickr (images), Last.fm (music), YouTube (video), Connotea

(bibliographic information), steve.museum (museum items) and Technorati

(blogging). Using these platforms is free, although in some cases users can opt

for more advanced features by getting an upgraded account, for which they have

to pay. The most prominent among them are Delicious and Flickr, for which some

quantitative user studies are available [HitWise,

NetRatings]. These user studies document a

phenomenal growth, that indicates that in real-life tagging is a very viable

solution for annotating any type of resource.

Motivating Scenario

Let us view some of the current limitations of tag-based annotation, by

examining a motivating example:

Let's suppose that user Mary has an account on platform S1, that specializes in

images. Mary has been using S1 for a while, so she has progressively built a

large image collection, as well as a rich vocabulary of tags (personomy).

Another user, Sylvia, who is Mary's friend, is using a different platform, S2,

to annotate her images. At some point, Mary and Sylvia attended the same event,

and each one took some pictures with her own camera. As each user has her

reasons for choosing a preferred platform, none of them would like to change.

They would like however to be able to link to each other's annotated pictures,