Abstract

This is the report of the W3C Emotion Incubator Group (EmoXG) as specified

in the Deliverables section of its charter.

In this report we present requirements for information that needs to be

represented in a general-purpose Emotion Markup Language in order to be

usable in a wide range of use cases.

Specifically the report:

- describes the range of use cases in which an emotion markup language

would be needed,

- presents a rich structured collection of requirements arising from the

use cases,

- describes how these requirements coincide with scientific models of

emotion,

- begins to assess syntactic issues in view of a future specification, by

evaluating existing markup languages in the light of the collected

requirements.

The report identifies various areas which require further investigation

and debate. The intention is that it forms a major input into a new Incubator

Group which would develop a draft specification as a proposal towards a

future activity in the W3C Recommendation Track.

Status of this document

This section describes the status of this document at the time of its

publication. Other documents may supersede this document. A list of Final Incubator Group

Reports is available. See also the W3C

technical reports index at http://www.w3.org/TR/.

This document was developed by the W3C Emotion Incubator Group. It

represents the consensus view of the group, in particular those listed in the

acknowledgements, on requirements for a generally usable emotion

markup language. The document has two main purposes:

- elicit discussion with other groups, notably the MMI and VoiceBrowser

groups at W3C, in view of a possible collaboration towards future

standards;

- serve as the basis for a draft specification document which should be

the output of a successor Incubator Group.

Publication of this document by W3C as part of the W3C Incubator Activity indicates

no endorsement of its content by W3C, nor that W3C has, is, or will be

allocating any resources to the issues addressed by it. Participation in

Incubator Groups and publication of Incubator Group Reports at the W3C site

are benefits of W3C

Membership.

Incubator Groups have as a goal to

produce work that can be implemented on a Royalty Free basis, as defined in

the W3C Patent Policy. Participants in this Incubator Group have made no

statements about whether they will offer licenses according to the licensing

requirements of the W3C Patent Policy for portions of this Incubator

Group Report that are subsequently incorporated in a W3C Recommendation.

Table of Contents

Foreword: A Word

of Caution

1. Introduction

2. Scientific Descriptions of

Emotion

3. Use Cases

4. Requirements

5. Assessment of Existing

Markup Languages

6. Summary and

Outlook

7. References

8.

Acknowledgements

Appendix 1: Use

Cases

Appendix

2: Detailed Assessment of Existing Markup Languages

Foreword: A Word of Caution

This document is a report of the W3C Emotion Incubator group,

investigating the feasibility of working towards a standard representation of

emotions and related states in technological contexts.

This document is not an attempt to "standardise emotions", nor is it an

attempt to unify emotion theories into one common representation. The aim is

not to understand the "true nature" of emotions, but to attempt a transfer -

making available descriptions of emotion-related states in

application-oriented technological contexts, inspired by scientific

proposals, but not slavishly following them.

At this early stage, the results presented in this document are

preliminary; the authors do not claim any fitness of the proposed model for

any particular application purpose.

In particular, we expressly recommend prospective users of this technology

to check for any (implicit or explicit) biases, misrepresentations or

omissions of important aspects of their specific application domain. If you

have such observations, please let us know -- your feedback helps us create a

specification that is as generally usable as possible!

1. Introduction

The W3C Emotion Incubator group was chartered "to investigate the prospects of defining a

general-purpose Emotion annotation and representation language, which should

be usable in a large variety of technological contexts where emotions need to

be represented".

What could be the use of such a language?

From a practical point of view, the modeling of emotion related states in

technical systems can by important for two reasons.

1. To enhance computer-mediated or human-machine communication. Emotions

are a basic part of human communication and should therefore be taken into

account, e.g. in emotional Chat systems or emphatic voice boxes. This

involves specification, analysis and display of emotion related states.

2. To enhance systems' processing efficiency. Emotion and intelligence are

strongly interconnected. The modeling of human emotions in computer

processing can help to build more efficient systems, e.g. using emotional

models for time-critical decision enforcement.

A standardised way to mark up the data needed by such "emotion-oriented

systems" has the potential to boost development primarily because

a) data that was annotated in a standardised way can be interchanged

between systems more easily, thereby simplifying a market for emotional

databases.

b) the standard can be used to ease a market of providers for sub-modules

of emotion processing systems, e.g. a web service for the recognition of

emotion from text, speech or multi-modal input.

The work of the present, initial Emotion Incubator group consisted of two

main steps: firstly to revisit carefully the question where such a language

would be used (Use cases), and

secondly to describe what those use case scenarios require from a language

(Requirements). These

requirements are compared to the models proposed by current scientific theory

of emotions (Scientific descriptions). In addition,

existing markup languages are discussed with respect to the requirements (Existing

languages).

The specification of an actual emotion markup language has not yet been

started, but is planned as future work (Summary and Outlook). This deviation from the

original plan was the result of a deliberate choice made by the group - given

the strong commitment by many of the group's members to continue work after

the first year, precedence was given to the careful execution of the first

steps, so as to form a solid basis for the more "applicable" steps that are

the logical continuation of the group's work.

Throughout the Incubator Activity, decisions have been taken by consensus

during monthly telephone conferences and two face to face meetings.

The following report provides a detailed description of the work carried

out and the results achieved so far. It also identifies open issues that will

need to be followed up in future work.

The Incubator Group is now seeking to re-charter as an Incubator group for

a second and final year. During that time, the requirements presented here

will be prioritised; a draft specification will be formulated; and possible

uses of that specification in combination with other markup languages will be

outlined. Crucially, that new Incubator group will seek comment from the W3C

MMI and VoiceBrowser working groups. These comments will be decisive for the

decision whether to move into the Recommendation Track.

1.1 Participants

The group consisted of representatives of 16 institutions from 11

countries in Europe, Asia, and the US:

- German Research Center for Artificial Intelligence (DFKI) GmbH, Germany

*

- Deutsche Telekom AG, T-Com, Germany *

- University of Edinburgh, UK *

- Chinese Academy of Sciences, China *

- Ecole Polytechnique Federale de Lausanne (EPFL), Switzerland *

- University of Southern California Information Sciences Institute (USC /

ISI), USA *

- Universidad Politecnica de Madrid, Spain *

- Fraunhofer Gesellschaft, Germany *

- Loquendo, Italy

- Image, Video and Multimedia Systems Lab (IVML-NTUA), Greece

- Citigroup, USA

- Limsi, CNRS, France +

- University of Paris VIII, France +

- Austrian Institute for Artificial Intelligence (OFAI), Austria +

- Technical University Munich, Germany +

- Emotion.AI, Japan +

* Original sponsor organisation

+ Invited expert

It can be seen from this list that the interest has been broad and

international, but somewhat tilted towards the academic world. It will be one

important aim of a follow-up activity to produce sufficiently concrete output

to get more industrial groups actively interested.

2. Scientific Descriptions of Emotion

One central terminological issue to be cleared first is the semantics of

the term emotion, which has been used

in a broad and a narrow sense.

In its narrow sense, as it is e.g. used by

Scherer (2000), the term refers to what is also called a prototypical emotional episode (Russell

& Feldman Barrett 1999), full blown

emotion, or emergent emotion

(Douglas-Cowie et al. 2006): a short, intensive, clearly event triggered

emotional burst. A favourite example would be "fear" when encountering a

bear in the woods and fleeing in terror.

Especially in technological contexts there is a tendency to use the term

emotion(al) in a broad sense, sometimes for almost everything that cannot be

captured as purely cognitive aspect of human behaviour. More useful

established terms -- though still not concisely defined -- for the whole

range of phenomena that make up the elements of emotional life are "emotion-related states" and "affective states".

A number of taxonomies for these affective states have been proposed.

Scherer (2000), e.g., distinguishes:

- Emotions

- Moods

- Interpersonal stances

- Preferences/Attitudes

- Affect dispositions

This list was extended / modified by the HUMAINE group

working on databases: in Douglas-Cowie et al. (2006) the following list is

proposed (and defined):

- Attitudes

- Established emotion

- Emergent emotion (full-blown)

- Emergent emotion (suppressed)

- Moods

- Partial emotion (topic shifting)

- Partial emotion (simmering)

- Stance towards person

- Stance towards object/situation

- Interpersonal bonds

- Altered state of arousal

- Altered state of control

- Altered state of seriousness

- Emotionless

Emergent emotions -- not without

reason also termed prototypical emotional episodes -- can be viewed as

the archetypical affective states and many emotional theories focus on them.

Empirical studies (Wilhelm, Schoebi & Perrez 2004) on the other hand show

that while there are almost no instances where people report their state as

completely unemotional, examples of full-blown emergent emotions are really

quite rare. As the ever present emotional life consists of moods, stances

towards objects and persons, and altered states of arousal, these indeed

should play a prominent role in emotion-related computational applications.

The envisaged scope of an emotion representation language clearly comprises

emotions in the broad sense, i.e.

should be able to deal with different emotion-related states.

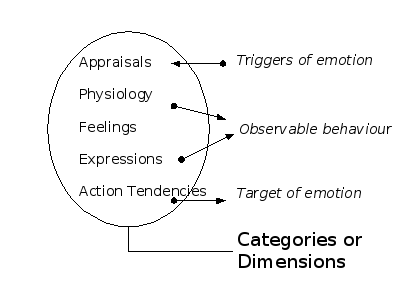

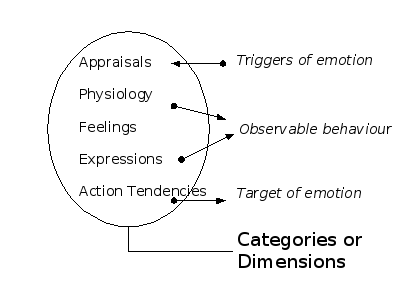

2.2 Different components of emotions

There is an old Indian tale called "The

blind men and the elephant" that enjoys some popularity in the

psychological literature as an allegory for the conceptual difficulties to

come up with unified and uncontroversial descriptions of complex phenomena.

In this tale several blind men who never have encountered an elephant before,

try to come up with an understanding of the nature of this unknown object.

Depending on the body part each of them touches they provide strongly

diverging descriptions. An elephant seems to be best described as a rope if

you hang to its tail only, is a tree if you just touched its legs, appears as

a spear if you encountered a tusk etc.

This metaphor fits nicely with the multitude of definitions and models

currently available in the scientific literature on emotions, which come

with a fair amount of terminological confusion added on top. There are no

commonly accepted answers to the questions on how to model the underlying

mechanism that are causing emotions, on how to classify them, on whether to

use categorial or dimensional descriptions etc. But leaving these questions

aside, there is a core set of components that are quite readily accepted

to be essentialcomponents of emergent

emotions.

Subjective component: Feelings.

Feelings are probably what is most strongly associated with the term emotion

in folk psychology and they have been claimed to make up an important part of

the overall complex phenomenon of emotion.

Cognitive component: Appraisals

The most prominently investigated aspect of this component is the role of --

not necessarily conscious -- cognitive processes that are concerned with the

evaluation of situations and events in the context of appraisal models (e.g.

Arnold 1960, Lazarus 1966), i.e. the role and nature of cognitive processes

in the genesis of emotions. Another aspect are modulating effects of emotions

on cognitive processes, such as

influences on memory and perception (e.g. narrowing of the visual field in

fear).

Physiological component:

Physiological changes both in the peripheral (e.g., heart-rate,

skin-conductivity) and the central system (e.g. neural activity) are

obviously one important component of emergent emotions. This component is

also strongly interconnected with other components in this list: e.g. changes

in the muscular tone, also account for the modulation of some expressive

features in speech (prosody, articulatory precision) or in the appearance

(posture, skin color).

Behavioral component: Action tendencies

Emotions have a strong influence on the motivational state of a subject.

Frijda (1986) e.g. associated emotions to a small set of action tendencies, e.g. avoidance (relates

to fear), rejecting (disgust) etc. Action tendencies can be viewed as a link

between the outcome of an appraisal process and actual actions.

Expressive component:

The expressive component comprises facial expressions but also body posture

and gesture and vocal cues (prosody, voice quality, affective bursts).

Different theories may still strongly disagree on the relative importance of these components and

on interactions and cause-and-effect relations between them. However, the

fact that these components are relevant to some extent seems relatively

uncontroversial.

3. Use cases

Taking a software engineering approach to the question of how to represent

emotion in a markup language, the first necessary step for the Emotion

Incubator group was to gather a set of use cases for the language.

At this stage, we had two primary goals in mind: to gain an understanding

of the many possible ways in which this language could be used, including the

practical needs which have to be served; and to determine the scope of the

language by defining which of the use cases would be suitable for such a

language and which would not. The resulting set of final use cases would then

be used as the basis for the next stage of the design process, the definition

of the requirements of the language.

The Emotion Incubator group is comprised of people with wide ranging

interests and expertise in the application of emotion in technology and

research. Using this as a strength, we asked each member to propose one or

more use case scenarios that would represent the work they, themselves, were

doing. This allowed the group members to create very specific use cases based

on their own domain knowledge. Three broad categories were defined for these

use cases:

- Data Annotation,

- Emotion Recognition

- Emotion Generation

Where possible we attempted to keep use cases within these categories,

however, naturally, some crossed the boundaries between categories.

A wiki was created to facilitate easy collaboration and integration of

each member's use cases. In this document, subheadings of the three broad

categories were provided along with a sample initial use case that served as

a template from which the other members entered their own use cases and

followed in terms of content and layout. In total, 39 use cases were entered

by the various working group members: 13 for Data Annotation, 11 for Emotion

Recognition and 15 for Emotion Generation.

Possibly the key phase of gathering use cases was in the optimisation of

the wiki document. Here, the members of the group worked collaboratively

within the context of each broad category to find any redundancies

(replicated or very similar content), to ensure that each use case followed

the template and provided the necessary level of information, to disambiguate

any ambiguous wording (including a glossary of terms for the project), to

agree on a suitable category for use cases that might well fit into two or

more and to order the use cases in the wiki so that they formed a coherent

document.

In the following, we detail each broad use case category, outlining the

range of use cases in each, and pointing out some of their particular

intricacies. Detailed descriptions of all use cases can be found in Appendix 1.

3.1. Data annotation

The Data Annotation use case groups together a broad range of scenarios

involving human annotation of the emotion contained in some material. These

scenarios show a broad range with respect to the material being annotated,

the way this material is collected, the way the emotion itself is

represented, and, notably, which kinds of additional information about the

emotion are being annotated.

One simple case is the annotation of plain text with emotion dimensions or

categories and corresponding intensities. Similarly, simple emotional labels

can be associated to nodes in an XML tree, representing e.g. dialogue acts,

or to static pictures showing faces, or to speech recordings in their

entirety. While the applications and their constraints are very different

between these simple cases, the core task of emotion annotation is relatively

straightforward: it consists of a way to define the scope of an emotion

annotation and a description of the emotional state itself. Reasons for

collecting data of this kind include the creation of training data for

emotion recognition, as well as scientific research.

Recent work on naturalistic multimodal emotional recordings has compiled a

much richer set of annotation elements (Douglas-Cowie et al., 2006), and has

argued that a proper representation of these aspects is required for an

adequate description of the inherent complexity in naturally occurring

emotional behaviour. Examples of such additional annotations are multiple

emotions that co-occur in various ways (e.g., as blended emotions, as a quick

sequence, as one emotion masking another one), regulation effects such as

simulation or attenuation, confidence of annotation accuracy, or the

description of the annotation of one individual versus a collective

annotation. In addition to annotations that represent fixed values for a

certain time span, various aspects can also be represented as continuous

"traces" -- curves representing the evolution of, e.g., emotional intensity

over time.

Data is often recorded by actors rather then observed in naturalistic

settings. Here, it may be desirable to represent the quality of the acting,

in addition to the intended and possibly the perceived emotion.

With respect to requirements, it has become clear that Data Annotation

poses the most complex kinds of requirements with respect to an emotion

markup language, because many of the subtleties humans can perceive are far

beyond the capabilities of today's technology. We have nevertheless attempted

to encompass as many of the requirements arising from Data Annotation, not

least in order to support the awareness of the technological community

regarding the wealth of potentially relevant aspects in emotion

annotation.

3.2 Emotion recognition

As a general rule, the context of the Emotion Recognition use case has to

do with low- and mid-level features which can be automatically detected,

either offline or online, from human-human and human-machine interaction. In

the case of low-level features, these can be facial features, such as Action

Units (AUs) (Ekman and Friesen 1978) or MPEG 4 facial action parameters

(FAPs) (Tekalp and Ostermann, 2000), speech features related to prosody

(Devillers, Vidrascu and Lamel 2005) or language, or other, less frequently

investigated modalities, such as bio signals (e.g. heart rate or skin

conductivity). All of the above can be used in the context of emotion

recognition to provide emotion labels or extract emotion-related cues, such

as smiling, shrugging or nodding, eye gaze and head pose, etc. These features

can then be stored for further processing or reused to synthesise

expressivity on an embodied conversational agent (ECA) (Bevacqua et al.,

2006).

In the case of unimodal recognition, the most prominent examples are

speech and facial expressivity analysis. Regarding speech prosody and

language, the CEICES data collection and processing initiative (Batliner et

al. 2006) as well as exploratory extensions to automated call centres

(Burkhardt et al., 2005) are the main factors that defined the essential

features and functionality of this use case. With respect to visual analysis,

there are two cases: in the best case scenario, detailed facial features

(eyes, eyebrows, mouth, etc.) information can be extracted and tracked in a

video sequence, catering for high-level emotional assessment (e.g. emotion

words). However, when analysing natural, unconstrained interaction, this is

hardly ever the case since colour information may be hampered and head pose

is usually not directed to the camera; in this framework, skin areas

belonging to the head of the subject or the hands, if visible, are detected

and tracked, providing general expressivity features, such as speed and power

of movement (Bevacqua et al., 2006).

For physiological data, despite being researched for a long time

especially by psychologists, no systematic approach to store or annotate them

is in place. However, there are first attempts to include them in databases

(Blech et al., 2005), and suggestions on how they could be represented in

digital systems have been made (Peter and Herbon, 2006). A main difficulty

with physiological measurements is the variety of possibilities to obtain the

data and of the consequential data enhancement steps. Since these factors can

directly affect the result of the emotion interpretation, a generic emotion

markup language needs to be able to deal with such low-level issues. The same

applies to the technical parameters of other modalities, such as resolution

and frame rate of cameras, the dynamic range or the type of sound field of

the chosen microphone, and algorithms used to enhance the data.

Finally, individual modalities can be merged, either at feature- or

decision-level, to provide multimodal recognition. In this case, features and

timing information (duration, peak, slope, etc.) from individual modalities

are still present, but an integrated emotion label is also assigned to the

multimedia file or stream in question. In addition to this, a confidence

measure for each feature and decision assists in providing flexibility and

robustness in automatic or user-assisted methods.

3.3 Generation

We divided the 15 use cases in the generation category into a number of

further sub categories, these dealt with essentially simulating modelled

emotional processes, generating face and body gestures and generating

emotional speech.

The use cases in this category had a number of common elements that

represented triggering the generation of an emotional behaviour according to

a specified model or mapping. In general, emotion eliciting events are passed

to an emotion generation system that maps the event to an emotion state which

could then be realised as a physical representation, e.g. as gestures, speech

or behavioural actions.

The generation use cases presented a number of interesting issues that

focused the team on the scope of the work being undertaken. In particular,

they showed how varied the information being passed to and information being

received from an emotion processing system can be. This would necessitate

either a very flexible method of receiving and sending data or to restrict

the scope of the work in respect to what types of information can be

handled.

The first sub set of generation use cases were termed 'Affective

Reasoner', to denote emotion modelling and simulation. Three quite different

systems were outlined in this sub category, one modelling cognitive emotional

processes, one modelling the emotional effects of real time events such as

stock price movements on a system with a defined personality and a large ECA

system that made heavy use of XML to pass data between its various

processes.

The next sub set dealt with the generation of automatic facial and body

gestures for characters. With these use cases, the issue of the range of

possible outputs from emotion generation systems became apparent. While all

focused on generating human facial and body gestures, the possible range of

systems that they connect to was large, meaning the possible mappings or

output schema would be large. Both software and robotic systems were

represented and as such the generated gesture information could be sent to

both software and hardware based systems on any number of platforms. While a

number of standards are available for animation that are used extensively

within academia (e.g., MPEG-4 (Tekalp and Ostermann, 2000), BML (Kopp et al.,

2006)), they are by no means common in industry.

The final sub set was primarily focused on issues surrounding emotional

speech synthesis, dialogue events and paralinguistic events. Similar to the

issues above, the generation of speech synthesis, dialogue events,

paralinguistic events etc. is complicated by the wide range of possible

systems to which the generating system will pass its information. There does

not seem to be a widely used common standard, even though the range is not

quite as diverse as with facial and body gestures. Some of these systems made

use of databases of emotional responses and as such might use an emotion

language as a method of storing and retrieving this information.

4. Requirements

Overview

The following represents a collection of requirements for an Emotion

Markup Language ("EmotionML") as they arise from the use cases specified

above. Each scenario described through the use cases has implicit

requirements which need need to be made explicit to allow for their

representation through a language. The challenge with the 39 use case

scenarios collected in the Emotion Incubator group was to structure the

extracted requirements in a way that reduces complexity, and to agree on what

should be included in the language itself and what should be described

through other, linked representations.

Work proceeded in a bottom-up, iterative way. From relatively unstructured

lists of requirements for the individual use case scenarios, a requirements

document was compiled within each of the three use case categories (Data

Annotation, Emotion Recognition and Emotion Generation). These three

documents differed in structure and in the vocabulary used, and emphasised

different aspects. For example, while the Data Annotation use case emphasised

the need for a rich set of metadata descriptors, the Emotion Recognition use

case pointed out the need to refer to sensor data and environmental

variables, and the use case on Emotion Generation requested a representation

for the 'reward' vs. 'penalty' value of things. The situation was complicated

further by the use of system-centric concepts such as 'input' and 'output',

which for Emotion Recognition have fundamentally different meanings than for

Emotion Generation. For consolidating the requirements documents, two basic

principles were agreed on:

- The emotion language should not try to represent sensor data, facial

expressions, environmental data etc., but define a way of interfacing

with external representations of such data.

- The use of system-centric vocabulary such as 'input' and 'output'

should be avoided. Instead, concept names should be chosen by following

the phenomena observed, such as 'experiencer', 'trigger', or 'observable

behaviour'.

Based on these principles and a large number of smaller clarifications,

the three use case specific requirements documents were merged into an

integrated wiki document. After several iterations of restructuring and

refinement, a consolidated structure has materialised for that document. The

elements of that document are grouped into sections according to the type of

information that they represent: (1) Information about the emotion

properties, (2) Meta-information about the individual emotion annotations,

(3) links to the rest of the world, (4) information about a number of global

metadata, and (5) ontologies.

4.1. Information about the emotion properties (Emotion

'Core')

4.1.1. Type of emotion-related phenomenon

The language should not only annotate emergent emotions, i.e. emotions in

the strong sense (such as anger, joy, sadness, fear, etc.), but also

different types of emotion-related states.

The emotion markup should provide a way of indicating which of these (or

similar) types of emotion-related/affective phenomena is being annotated.

The following use cases require annotation of emotion categories and

dimensions:

4.1.2. Emotion categories

The emotion markup should provide a generic mechanism to represent broad

and small sets of possible emotion-related states. It should be possible to

choose a set of emotion categories (a label set), because different

applications need different sets of emotion labels. A flexible mechanism is

needed to link to such sets. A standard emotion markup language should

propose one or several "default" set(s) of emotion categories, but leave the

option to a user to specify an application-specific set instead.

Douglas-Cowie et al. (2006) propose a list of 48 emotion categories that

could be used as the "default" set.

The following use cases demonstrate the use of emotion categories:

4.1.3. Emotion dimensions

The emotion markup should provide a generic format for describing emotions

in terms of emotion dimensions. As for emotion categories, it is not possible

to predefine a normative set of dimensions. Instead, the language should

provide a "default" set of dimensions, that can be used if there are no

specific application constraints, but allow the user to "plug in" a custom

set of dimensions if needed. Typical sets of emotion dimensions include

"arousal, valence and dominance" (known in the literature by

different names, including "evaluation, activation and power"; "pleasure,

arousal, dominance"; etc.). Recent evidence suggests there should be a fourth

dimension: Roesch et al. (2006) report consistent results from various

cultures where a set of four dimensions is found in user studies: "valence, potency, arousal, and unpredictability".

The following use cases demonstrate use of dimensions for representing

emotional states:

4.1.4. Description of appraisals of the emotion or of

events related to the emotion

Description of appraisal can be attached to the emotion itself or to an

event related to the emotion. Three groups of emotional events are defined in

the OCC model (Ortony, Clore, & Collins, 1988): the consequences of

events for oneself or for others, the actions of others and the perception of

objects.

The language will not cover other aspects of the description of events.

Instead, there will be a possibility to attach an external link to the

detailed description of this event according to an external representation

language. The emotion language could integrate description of events (OCC

events, verbal description) and time of event (past, present, future).

Appraisals can be described with a common set of intermediate terms

between stimuli and response, between organism and environment. The appraisal

variables are linked to different cognitive process levels in the model of

Leventhal and Scherer (1987). The following set of labels (Scherer et al.,

2004) can be used to describe the protagonist's appraisal of the event or

events at the focus of his/her emotional state:relevance, implications Agency

responsible, coping potential, compatibility of the situation with standards.

Use cases:

4.1.5 Action tendencies

It should be possible to characterise emotions in terms of the action

tendencies linked to them (Frijda, 1986). For example, anger is linked to a

tendency to attack, fear is linked to a tendency to flee or freeze, etc. This

requirement is not linked to any of the currently envisaged use cases, but

has been added in order to cover the theoretically relevant components of

emotions better. Action tendencies are potentially very relevant for use

cases where emotions play a role in driving behaviour, e.g. in the behaviour

planning component of non-player characters in games.

4.1.6. Multiple and/or complex emotions

The emotion markup should provide a mechanism to represent mixed

emotions.

The following use cases demonstrate use of multiple and / or complex

emotions:

4.1.7. Emotion intensity

The intensity is also a dimension. The emotion markup should provide an

emotion attribute to represent the intensity. The value of attribute

intensity is in [0;1].

The following use cases are examples for use of intensity information on

emotions:

4.1.8. Emotion regulation

According to the process model of emotion regulation described by Gross

(2001), emotion may be regulated at five points in the emotion generation

process: selection of the situation, modification of the situation,

deployment of attention, change of cognition, and modulation of experiential,

behavioral or physiological responses. The most basic distinction underlying

the concept of regulation of emotion-related behaviour is the distinction of

internal vs. external state. The description of the external state is out of

scope of the language - it can be covered by referring to other languages

such as Facial Action Coding System (Ekman et al. 2002), Behavior Mark-up

Language (Vilhjalmsson et al. 2007).

Other types of regulation-related information can represent genuinely

expressed/felt (inferred)/masked(how well)/simulated, or inhibition/masking

of emotions or expression, or excitation/boosting of emotions or expression.

The emotion markup should provide emotion attributes to represent the

various kinds of regulation. The value of these attributes should be in

[0;1].

The following use cases are examples for regulation being of interest:

4.1.9. Temporal aspects

This section covers information regarding the timing of the emotion

itself. The timing of any associated behaviour, triggers etc. is covered in

section 4.3 "Links to the rest of the world".

The emotion markup should provide a generic and optional mechanism for

temporal scope. This mechanism allows different way to specify temporal

aspects such as i) start-time + end-time, ii) start-time+duration, iii) link

to another entity (start 2 seconds before utterance starts and ends with the

second noun-phrase...), iv) a sampling mechanism providing values for

variables at even spaced time intervals.

The following use cases require the annotation of temporal dynamics of

emotion.:

4.2.1. Acting

The emotion markup should provide a mechanism to add special attributes

for acted emotions such as perceived naturalness, authenticity, quality, and

so on.

Use cases:

4.2.2. Confidence / probability

The emotion markup should provide a generic attribute enabling to

represent the confidence (or, inversely, uncertainty) of detection/annotation

or more generally speaking of probability to be assigned to one

representation of emotion to each level of representation (category,

dimensions, degree of acting, ...). This attribute may reflect the confidence

of the annotator that the particular value is as stated (e.g. that the user

in question is expressing happiness with confidence 0.8), which

is important especially in masked expressivity, or the confidence of an

automated recognition system with respect to the samples used for training.

If this attribute is supplied per modality it can be exploited in

recognition use cases to pinpoint the dominant or more robust of the existing

modalities.

The following use cases require the annotation of confidence:

4.2.3. Modality

It represents the modalities in which the emotion is reflected, e.g. face,

voice, body posture or hand gestures, but also lighting, font shape, etc.

The emotion markup should provide a mechanism to represent an open set of

values.

The following use cases require the annotation of modality:

4.3. Links to the "rest of the world"

Most use cases rely on some media representation. This could be video

files of users' faces whose emotions are assessed, screen captures of

evaluated user interfaces, audio files of interviews, but also other media

relevant in the respective context, like pictures or documents.

Linking to them could be

accomplished by e.g. an URL in an XML node.

The following use cases require links to the "rest of the world":

4.3.2. Position on a time line in externally linked

objects

The emotion markup should provide a link to a time-line. Possible values

of temporal linking are absolute (start- and end-times) and

relative and refer to external sources (cf. 4.3.1) like snippets (as

points in time) of media files causing the emotion.

Start- and end-times are important to mark onset and offset of an

emotional episode.

The following use cases require annotation on specific positions on a time

line:

4.3.3. The semantics of links to the "rest of the

world"

The emotion markup should provide a mechanism for flexibly

assigning meaning to those links.

The following initial types of meaning are envisaged:

- The experiencer (who "has" the emotion);

- The observable behaviour "expressing" the emotion;

- The trigger/cause/emotion-eliciting event of the emotion;

- The object/target of the emotion (the thing that the emotion is about).

We currently envisage that the links to media as defined in section 4.3.1

are relevant for all of the above. For some of them, timing information is

also relevant:

- observable behaviour

- trigger

The following use cases require annotation on semantics of the links to

the "rest of the world":

Representing emotion, be it for annotation, detection or generation,

requires the description of the context not directly related to the

description of emotion per se (e.g. the emotion-eliciting event) but also the

description of a more global context which is required for properly

exploiting the representation of the emotion in a given application.

Specifications of metadata for multimodal corpora have already been proposed

in the ISLE Metadata Initiative [IMDI]; but they did

not target emotional data and were focused on an annotation scenario.

The joint specification of our three use cases led to the identification

of four groups of global metadata: information on persons involved, the

purpose of classification i.e. the intended or used application, information

on the technical environment, and on the social and communicative

environment. Those are described in the following.

The following use cases require annotation of global metadata:

4.4.1. Info on Person(s)

Information are needed on the humans involved. Depending on the use case,

this would be the labeler(s) (Data Annotation), persons observed (Data

Annotation, Emotion Recognition), persons interacted with, or even

computer-driven agents such as ECAs (Emotion Generation). While it would be

desirable to have common profile entries throughout all use cases, we found

that information on persons involved are very use case specific. While all

entries could be provided and possibly used in most use cases, they are of

different importance to each.

Examples are:

- For Data Annotation: gender, age, language, culture, personality

traits, experience as labeler, labeler ID (all mandatory)

- For Emotion Recognition: gender, age, culture, personality traits,

experience with the subject, e.g. web experience for usability studies

(depending on the use case all or some mandatory).

- For Emotion Generation:gender, age, language, culture, education,

personality traits (again, use case dependant)

The following use cases need information on the person(s) involved:

4.4.2. Purpose of classification

The result of emotion classification is influenced by its purpose. For

example, a corpus of speech data for training an ECA might be differently

labelled than the same data used for a corpus for training an automatic

dialogue system for phone banking applications; or the face data of a

computer user might be differently labeled for the purpose of usability

evaluation or guiding an user assistance program.These differences are

application or at least genre specific. They are also independent from the

underlying emotion model.

The following use cases need information on the purpose of the

classification:

4.4.3. Technical environment

The quality of emotion classification and interpretation, by either humans

or machines, depend on the quality and technical parameters of sensors and

media used.

Examples are:

- Frame rate, resolution, colour characteristics of video sources;

- Dynamic range, type of sound field of microphones;

- Type of sensing devices for physiology, movement, or pressure

measurements;

- Data enhancement algorithms applied by either device or pre-processing

steps.

Also should the emotion markup be able to hold information on which way an

emotion classification has been obtained, e.g. by a human observer monitoring

a subject directly, or via a life stream from a camera, or a recording; or by

a machine, utilising which algorithms.

The following use cases need information on the technical environment:

4.4.4. Social and communicative environment

The emotion markup should provide a global information to specify

genre of the observed social and communicative environment and more

generally of the situation in which an emotion is considered to happen (e.g.

fiction (movies, theater), in-lab recording, induction, human-human,

human-computer (real or simulated)), interactional situation (number

of people, relations, link to participants).

The following use cases require annotation of the social and communicative

environment:

4.5. Ontologies of emotion descriptions

Descriptions of emotions and of emotion-related states are heterogeneous,

and are likely to remain so for a long time. Therefore, complex systems such

as many foreseeable real-world applications will require some information

about (1) the relationships between the concepts used in one description and

about (2) the relationships between different descriptions.

4.5.1. Relationships between concepts in an emotion

description

The concepts in an emotion description are usually not independent, but

are related to one another. For example, emotion words may form a hierarchy,

as suggested e.g. by prototype theories of emotions. For example, Shaver et

al. (1987) classified cheerfulness, zest, contentment, pride, optimism

enthrallment and relief as different kinds of joy, irritation, exasperation,

rage, disgust, envy and torment as different kinds of anger, etc.

Such structures, be they motivated by emotion theory or by

application-specific requirements, may be an important complement to the

representations in an Emotion Markup Language. In particular, they would

allow for a mapping from a larger set of categories to a smaller set of

higher-level categories.

The following use case demonstrates possible use of hierarchies of

emotions:

4.5.2. Mappings between different emotion

representations

Different emotion representations (e.g., categories, dimensions, and

appraisals) are not independent; rather, they describe different parts of the

"elephant", of the phenomenon emotion. Insofar, it is conceptually possible

to map from one representation to another one in some cases; in other cases,

mappings are not fully possible.

Some use cases require mapping between different emotion representations:

e.g., from categories to dimensions, from dimensions to coarse categories (a

lossy mapping), from appraisals onto dimensions, from categories to

appraisals, etc.

Such mappings may either be based on findings from emotion theory or they

can be defined in an application-specific way.

The following use cases require mappings between different emotion

representations:

- 2b "Multimodal

emotion recognition" - different modalities might deliver their

emotion result in different representations

- 3b "the ECA

system", it is based on a smaller set of emotion categories compared

with the list of categories that are generated in use case 3a, so a

mapping mechanism is needed to convert the larger category set to a

smaller set

- 3b speech synthesis

with emotion dimensions would require a mapping from appraisals or

emotion categories if used in combination with an affective reasoner (use

case 3a).

4.6. Assessment in the light of emotion theory

The collection of use cases and subsequent definition of requirements

presented so far was performed in a predominantly bottom-up fashion, and thus

captures a strongly application centered, engineering driven view. The

purpose of this section is to compare the result with a theory centered

perspective. A representation language should be as theory independent as

possible but by no means ignorant of psychological theories. Therefore a

crosscheck to which extent components of existing psychological models of

emotion are mirrored in the currently collected requirements is performed.

In Section 2, a

list of prominent concepts that have been used by psychologists in their

quest for describing emotions has been presented. In this section it is

briefly discussed whether and how these concepts are mirrored in the current

list of requirements.

Subjective component: Feelings.

Feelings have not been mentioned in the requirements at all.

They are not to be explicitly included in the representation for the moment

being, as they are defined as internal states of the subject and are thus not

accessible to observation. Applications can be envisaged where feelings might

be of relevance in the future though, e.g. if self-reports are to be encoded.

It should thus be kept as an open issue on whether to allow for an explicit

representation of feelings as a separate component in the future.

Cognitive component: Appraisals

As a references to appraisal-related theories the OCC model (Ortony et al

1988), which is especially popular in the computational domain, has been

brought up in the use cases, but no choice for the exact set of appraisal

conditions is to be made here. An open issue is whether models that make

explicit predictions on the temporal ordering of appraisal checks (Sander et

al., 2005) should be encodable to that level of detail. In general,

appraisals are to be be encoded in the representation language via

attributing links to trigger objects.

The encoding of other cognitive aspects, i.e. effects of emotions on the

cognitive system (memory, perception, etc.) is to be kept an open issue.

Physiological component:

Physiological measures have been mentioned in the context of emotion

recognition. They are to be integrated in the representation via links to

externally encoded measures conceptualised as "observable behaviour".

Behavioral component: Action tendencies

It remains an issue of theoretical debate whether action tendencies, in

contrast to actions, are among the set of actually observable concepts.

Nevertheless these should be integrated in the representation language. This

once again can be achieved via the link mechanism, this time an attributed

link can specify an action tendency together with its object or target.

Expressive component:

Expressions are frequently referred to in the requirements. There is

agreement to not encode them directly but again to make use of the linking

mechanisms to

observable behaviours.

Figure 1. Overview of how components of emotions are to be linked to external

representations.

Emergent emotions vs. other emotion-related states

It was mentioned before that the representation language should

definitively not be restricted to emergent emotions which have received most

attention so far. Though emergent emotions make up only a very small part of

the emotion-related states, they nevertheless are sort of archetypes.

Representations developed for emergent emotions should thus be usable as

basis for the encoding of other important emotion-related states such as

moods and attitudes.

Scherer (2000) systematically defines the relationship between emergent

emotions and other emotion-related states by proposing a small set of

so-called design features. Emergent emotions are defined as having a

strong direct impact on behaviour, high intensity, being rapidly changing and

short, are focusing on a triggering event and involve strong appraisal

elicitation. Moods e.g. are in contrast described using the same set of

categories, and they are characterised as not having a direct impact on

behaviour, being less intense, changing less quickly and lasting longer, and

not being directly tied to a eliciting event. In this framework different

types of emotion-related states thus just arise from differences in the

design features.

It is an open issue whether to integrate means similar to Scherer's design features in the language. Because

probably not many applications will be able to make use of this level of

detail, simple means for explicitly defining the type of an emotion related

state should be made available in the representation language anyway.

5. Assessment of Existing Markup Languages

Part of the activity of the group was dedicated to the assessment of

existing markup languages in order to investigate if some of their elements

or even concepts could fulfill the Emotion language requirements as described

in section 4. In the perspective of an effective Emotional Markup design it

will be in fact important to re-use concepts and elements that other

languages thoroughly define. Another interesting aspect of this activity has

been the possibility to hypothesize the interaction of the emotion markup

language with other existing languages and particularly with those concerning

multimodal applications.

Seven markup languages have been assessed, five of them are the result of

W3C initiatives that led to recommendation or draft documents, while the

remaining are the result of other initiatives, namely the projects HUMAINE

and INTERFACE.

5.1 Assessment methodology

The assessments were undertaken when the requirements of the emotion

language were almost consolidated. To this end, the members of the group

responsible for this activity adopted the same methodology that basically

consisted in identifying among the markup specifications those elements that

could be consistent with the emotional language constraints. In some cases

links to the established Emotion Requirements were possible, being the

selected elements totally fulfilling their features, while in other cases

this was not possible even if the idea behind a particular tag could

nevertheless be considered useful. Sometimes, to clarify the concepts,

examples and citations from the original documents were included.

These analyses, reported in Appendix 2, were initially published on the Wiki page,

available for comments and editing to all the members of the incubator group.

The structure of these documents consists of an introduction containing

references to the analyzed language and a brief description of its uses. The

following part reports a description of the selected elements that were

judged as fulfilling the emotion language requirements.

The five W3C Markup languages considered in this analysis are mainly

designed for multimedia application. They deal with speech recognition and

synthesis, ink and gesture recognition, semantic interpretation and the

writing of interactive multimedia presentations. Among the two remaining

markup languages, EARL (Schröder et al., 2006), whose aim is the annotation

and representation of emotions, is an original proposal from the HUMAINE

consortium. The second one, VHML, is a language based on XML sub-languages

such as DMML (Dialogue Manager Markup Language), FAML (Facial Animation

Markup Language) and BAML (Body Animation Markup Language).

In detail, the existing markup languages that have been assessed are:

- SMIL Synchronized Multimedia Integration Language (Version 2.1) W3C

Recommendation 13 December 2005 [SMIL ]

- SSML Speech Synthesis Markup Language (Version 1.0) W3C Recommendation

7 September 2004 [SSML ]

- EMMA Extensible MultiModal Annotation markup language W3C Working Draft

9 April 2007 [EMMA ]

- PLS Pronunciation Lexicon Specification (Version 1.0) W3C Working Draft

26 October 2006 [PLS ]

- InkML Ink Markup Language (InkML) W3C Working Draft 23 October 2006 [InkML ]

- EARL Emotion Annotation and Representation Language (version 0.4.0)

Working draft 30 June 2006 [EARL

]

- VHML Virtual Human Markup Language (Version 0.3) Working draft 21

October 2001 [VHML ]

5.2 Results

Many of the requirements of the emotion markup language cannot be found in

any of the considered W3C markups. This is particularly true for the emotion

specific elements, i.e. those features that can be considered the core part

of the emotional markup language. On the other hand, we could find

descriptions related to emotions in EARL and to some extent in VHML. The

first one in particular provides mechanisms to describe, through basic tags,

most of the required elements. It is in fact possible to specify the emotion

categories, the dimensions, the intensity and even appraisals selecting the

most appropriate case from a pre-defined list. Moreover, EARL includes

elements to describe mixed emotions as well as regulation mechanisms like for

example the degree of simulation or suppression. In comparison VHML, that is

actually oriented to the behavior generation use case, provides very few

emotion related features. It is only possible to use emotion categories (a

set of nine is defined) and indicate the intensity. Beyond these features

there is also the emphasis tag that is actually derived from the GML (Gesture

Markup Language) module.

Beyond the categorical and dimensional description of the emotion itself,

neither EARL nor VHML provide any way to deal with emotion-related phenomena

like for example attitudes, moods or affect dispositions.

The analyzed languages, W3C initiatives or not, offer nevertheless

interesting approaches for the definition of elements that are not strictly

related to the description of emotions, but are important structural elements

in any markup language. In this sense, interesting solutions to manage timing

issues, to annotate modality and to include metadata information were

found.

Timing, as shown in the requirements section, is an important aspect in

the emotional language markup. Time references are necessary to get the

synchronization with external objects and when we have to represent the

temporal evolution of the emotional event (either recognized, generated or

annotated). W3C SMIL and EMMA both provide solutions to indicate absolute

timing as well as relative instants with respect to a reference point that

can be explicitly indicated as in EMMA or can also be an event like in SMIL

standard. SMIL has also interesting features to manage the synchronization of

parallel events.

Metadata is another important element included in the emotional markup.

The W3C languages provide very flexible mechanisms that could allow the

insertion of any kind of information, for example related to the subject of

the emotion, the trigger event, and finally the object, into this container.

Metadata annotation is available in SMIL, SSML, EMMA and VHML languages

through different strategies, from simple tags like the info element proposed

by EMMA (a list of unconstrained attribute-value couples) to more complex

solutions like in SMIL and SSML where RDF features are exploited.

Also referring to modality the considered languages provide different

solutions, from simple to articulated ones. Modality is present in SMIL,

EMMA, EARL and VHML (by means of other sub languages). They are generally

mechanisms that describe the mode in which emotion is expressed (face, body,

speech, etc.). Some languages get into deeper annotations by considering the

medium or channel and the function. To this end, EMMA is an example of an

exhaustive way of representing modalities in the recognition use case. These

features could be effectively extended to the other use cases, i.e.

annotation and generation.

Regarding interesting ideas, some languages provide mechanisms that are

useful to manage dynamic lists of elements. An example of this can be found

in the W3C PLS language, where name spaces are exploited to manage multiple

sets of features.

6. Summary and Outlook

This first year as a W3C Incubator group was a worthwhile endeavour. A

group of people with diverse backgrounds collaborated in a very constructive

way on a topic which for a considerable time appeared to be a fuzzy area.

During the year, however, the concepts became clearer; the group came to

an agreement regarding the delimitation of the emotion markup language to

related content (such as the representation of emotion-related expressive

behaviour). Initially, very diverse ideas and vocabulary arose in a bottom-up

fashion from use cases; the integration of requirements into a consistent

document consumed a major part of the time.

The conceptual challenges encountered during the creation of the

Requirements document were to be expected, given the interdisciplinary nature

of the topic area and the lack of consistent guidelines from emotion theory.

The group made important progress, and has produced a structured set of

requirements for an emotion markup language which, even though it was driven

by use cases, can be considered reasonable from a scientific point of view.

A first step has been carried out towards the specification of a markup

language fulfilling the requirements: a broad range of existing markup

languages from W3C and outside of W3C were investigated and discussed in view

of their relevance to the EmotionML requirements. This survey provides a

starting point for creating a well-informed specification draft in the

future.

There is a strong consensus in the group that continuing the work is

worthwhile. The unanimous preference is to run for a second year as an

Incubator group, whose central aim is to convert the conceptual work done so

far into concrete suggestions and requests for comments from existing W3C

groups: the MMI and VoiceBrowser groups. The current plan is to provide three

documents for discussion during the second year as Incubator:

- a simplified Requirements document with priorities (in time for

face-to-face discussions at the Cambridge meeting in November);

- an "early Incubator draft" version of an EmotionML specification, after

6 months;

- a "final Incubator draft" version of an EmotionML specification, after

12 months.

If during this second year, enough interest from the W3C constituency is

raised, a continuation of the work in the Recommendation Track is

envisaged.

7. References

7.1 Scientific references

Arnold, M., (1960). Emotion and

Personality, Columbia University Press, New York.

Batliner, A., et al. (2006). Combining efforts for improving automatic

classification of emotional user states. In: Proceedings IS-LTC 2006.

Bevacqua, E., Raouzaiou, A., Peters, C., Caridakis, G., Karpouzis, K.,

Pelachaud, C., Mancini, M. (2006). Multimodal sensing, interpretation and

copying of movements by a virtual agent. In: Proceedings of Perception and Interactive

Technologies (PIT'06).

Blech, M., Peter, C., Stahl, R., Voskamp, J., Urban, B.(2005). Setting up

a multimodal database for multi-study emotion research in HCI. In: Proceedings of the 2005 HCI International

Conference, Las Vegas

Burkhardt, F., van Ballegooy, M., Englert, R., & Huber, R. (2005). An

emotion-aware voice portal. Proc. Electronic Speech Signal Processing

ESSP.

Devillers, L., Vidrascu, L., Lamel, L. (2005). Challenges in real-life

emotion annotation and machine learning based detection. Neural Networks 18,

407-422

Douglas-Cowie, E., et al. (2006). HUMAINE deliverable D5g: Mid Term Report

on Database Exemplar Progress. http://emotion-research.net/deliverables/D5g%20final.pdf

Ekman, P., Friesen, W. (1978). The Facial

Action Coding System. Consulting Psychologists Press, San Francisco

Ekman, P., Friesen, W. C. and Hager, J. C. (2002). Facial Action Coding System. The Manual on CD

ROM. Research Nexus division of Network Information Research

Corporation.

Frijda, N (1986). The Emotions. Cambridge: Cambridge University

Press.

Gross, J. J. (2001). "Emotion regulation in adulthood: timing is

everything." Current Directions in Psychological Science 10(6). http://www-psych.stanford.edu/~psyphy/Pdfs/2001%20Current%20Directions%20in%20Psychological%20Science%20-%20Emo.%20Reg.%20in%20Adulthood%20Timing%20.pdf

Kopp, S., Krenn, B., Marsella, S., Marshall, A., Pelachaud, C., Pirker,

H., Thórisson, K., & Vilhjalmsson, H. (2006). Towards a common framework

for multimodal generation in ECAs: the Behavior Markup Language. In

Proceedings of the 6th International Conference on Intelligent Virtual Agents

(IVA'06).

Lazarus, R.S. (1966). Psychological stress and the coping process.

McGraw-Hill. New York.

Leventhal, H., and Scherer, K. (1987). The Relationship of Emotion to

Cognition: A Functional Approach to a Semantic Controversy. Cognition and

Emotion 1(1):3-28.

Ortony, A Clore, G.L. and Collins A (1988). The cognitive structure of emotions.

Cambridge University Press, New York.

Peter, C., Herbon, A. (2006). Emotion representation and physiology

assignments in digital systems. Interacting

With Computers 18, 139-170.

Roesch, E.B., Fontaine J.B. & Scherer, K.R. (2006). The world of

emotion is two-dimensional - or is it? Paper presented to the HUMAINE Summer

School 2006, Genoa.

Russell, J. A.. & Feldman Barrett L (1999). Core Affect, Prototypical

Emotional Episodes, and Other Things Called Emotion: Dissecting the

Elephant Journal of Personalityand Social Psychology,

76, 805-819.

Sander, D., Grandjean, D., & Scherer, K. (2005). A systems approach to

appraisal mechanisms in emotion. Neural Networks: 18, 317-352.

Scherer, K.R. (2000). Psychological models of emotion. In Joan C. Borod

(Ed.), The Neuropsychology of Emotion (pp. 137-162). New York: Oxford

University Press.

Scherer, K. R. et al. (2004). Preliminary plans for exemplars: Theory.

HUMAINE deliverable D3c. http://emotion-research.net/deliverables/D3c.pdf

Schröder, M., Pirker, H., Lamolle, M. (2006). First suggestions for an

emotion annotation and representation language. In: Proceedings of LREC'06 Workshop on Corpora for

Research on Emotion and Affect, Genoa, Italy, pp. 88-92

Shaver, P., Schwartz, J., Kirson, D., and O'Connor, C. (1987). Emotion

knowledge: Further exploration of a prototype approach. Journal of Personality and Social

Psychology, 52:1061-1086.

Tekalp, M., Ostermann, J. (2000): Face and 2-d mesh animation in MPEG-4.

Image Communication Journal 15,

387-421

Vilhjalmsson, H., Cantelmo, N., Cassell, J., Chafai, N. E., Kipp, M.,

Kopp, S., Mancini, M., Marsella, S., Marshall, A. N., Pelachaud, C., Ruttkay,

Z., Thórisson, K. R., van Welbergen, H. and van der Werf, R. J. (2007). The

Behavior Markup Language: Recent Developments and Challenges. 7th

International Conference on Intelligent Virtual Agents (IVA'07), Paris,

France.

Wilhelm, P., Schoebi, D. & Perrez, M. (2004). Frequency estimates of

emotions in everyday life from a diary method's perspective: a comment on

Scherer et al.'s survey-study "Emotions in everyday life". Social Science

Information, 43(4), 647-665.

7.2 References to Markup Specifications

- [SMIL]

- Synchronized Multimedia Integration Language (Version 2.1), W3C

Recommendation 13 December 2005 http://www.w3.org/TR/SMIL/

- [SSML]

- Speech Synthesis Markup Language (Version 1.0) W3C Recommendation 7

September 2004 http://www.w3.org/TR/speech-synthesis

- [EMMA]

- Extensible MultiModal Annotation markup language W3C Working Draft 9

April 2007 http://www.w3.org/TR/emma/

- [PLS]

- Pronunciation Lexicon Specification (Version 1.0) W3C Working Draft

26 October 2006 http://www.w3.org/TR/pronunciation-lexicon/

- [InkML]

- Ink Markup Language (InkML) W3C Working Draft 23 October 2006 http://www.w3.org/TR/InkML

- [EARL]

- Emotion Annotation and Representation Language (version 0.4.0)

Working draft 30 June 2006 http://emotion-research.net/earl

- [VHML]

- Virtual Human Markup Language (Version 0.3) Working draft 21 October

2001 http://www.vhml.org/

- [IMDI]

- ISLE Metadata Initiative (IMDI). http://www.mpi.nl/IMDI/

8. Acknowledgements

The editors acknowledge significant contributions from the following

persons (in alphabetical order):

- Paolo Baggia, Loquendo

- Laurence Devillers, Limsi

- Alejandra Garcia-Rojas, Ecole Polytechnique Federale de Lausanne

- Kostas Karpouzis, Image, Video and Multimedia Systems Lab (IVML-NTUA)

- Myriam Lamolle, Université Paris VIII

- Jean-Claude Martin, Limsi

- Catherine Pelachaud, Université Paris VIII

- Björn Schuller, Technical University Munich

- Jianhua Tao, Chinese Academy of Sciences

- Ian Wilson, Emotion.AI

Appendix 1: Use Cases

Use case 1: Annotation of emotional data

Use case 1a: Annotation of plain text

Alexander is compiling a list of emotion words and wants to annotate, for

each word or multi-word expression, the emotional connotation assigned to it.

In view of automatic emotion classification of texts, he is primarily

interested in annotating the valence of the emotion (positive vs. negative),

but needs a 'degree' value associated with the valence. In the future, he is

hoping to use a more sophisticated model, so already now in addition to

valence, he wants to annotate emotion categories (joy, sadness, surprise,

...), along with their intensities. However, given the fact that he is not a

trained psychologist, he is uncertain which set of emotion categories to use.

Use case 1b: Annotation of XML structures and files

(i) Stephanie is using a multi-layer annotation scheme for corpora of

dialog speech, using a stand-off annotation format. One XML document

represents the chain of words as individual XML nodes; another groups them

into sentences; a third document describes the syntactic structure; a fourth

document groups sentences into dialog utterances; etc. Now she wants to add

descriptions of the 'emotions' that occur in the dialog utterances (although

she is not certain that 'emotion' is exactly the right word to describe what

she thinks is happening in the dialogs): agreement, joint laughter, surprise,

hesitations or the indications of social power. These are emotion-related

effects, but not emotions in the sense as found in the textbooks.

(ii) Paul has a collection of pictures showing faces with different

expressions. These pictures were created by asking people to contract

specific muscles. Now, rating tests are being carried out, in which subjects

should indicate the emotion expressed in each face. Subjects can choose from

a set of six emotion terms. For each subject, the emotion chosen for the

corresponding image file must be saved into an annotation file in view of

statistical analysis.

(iii) Felix has a set of Voice portal recordings and wants to use them to

train a statistical classifier for vocal anger detection. They must be

emotion-annotated by a group of human labelers. The classifier needs each

recording labeled with the degree of anger-related states chosen from a bag

of words.

Beneath this, some additional data must be annotated also:

- for the dialog design, beneath to know IF a user is angry, it's even

more important to know WHY the user is angry: is the user displeased with

the dialog itself, e.g. too many misrecognitions? Does he hate talking to

machines as a rule? Is he dissatisfied with the company`s service? Is he

simply of aggressive character?

- often voice portal recordings are

- -not human but DTMF tones or

background noises (e.g. a lorry driving by) -not directed to the

dialog but to another person standing beneath the user

- the classifier might use human annotated features for

training, e.g. transcript of words, task in application, function in

dialog, ... this should be annotated also

(iv) Jianhua allows listeners to label the speech with multiple emotions

to form the emotion vector.

Use case 1c: Chart annotation of time-varying signals

(e.g., multi-modal data)

(i) Jean-Claude and Laurence want to annotate audio-visual recordings of

authentic emotional recordings. Looking at such data, they and their

colleagues have come up with a proposal of what should be annotated in order

to properly describe the complexity of emotionally expressive behaviour as

observed in these clips. They are using a video annotation tool that allows

them to annotate a clip using a 'chart', in which annotations can be made on

a number of layers. Each annotation has a start and an end time.

The types of emotional properties that they want to annotate are many.

They want to use emotion labels, but sometimes more than one emotion label

seems appropriate -- for example, when a sad event comes and goes within a

joyful episode, or when someone is talking about a memory which makes them at

the same time angry and desperate. Depending on the emotions involved, this

co-occurrence of emotions may be interpretable as a 'blend' of 'similar'

emotions, or as a 'conflict' of 'contradictory' emotions. The two emotions

that are present may have different intensities, so that one of them can be

identified as the major emotion and the other one as the minor emotion.

Emotions may be communicated differently through different modalities, e.g.

speech or facial expression; it may be necessary to annotate these

separately. Attempts to 'regulate' the emotion and/or the emotional

expression can occur: holding back tears, hiding anger, simulating joy

instead. The extent to which such regulation is present may vary. In all

these annotations, a given annotator may be confident to various degrees.

In addition to the description of emotion itself, Jean-Claude and Laurence

need to annotate various other things: the object or cause of the emotion;

the expressive behaviour which accompanies the emotion, and which may be the

basis for the emotion annotation (smiling, high pitch, etc.); the social and

situational context in which the emotion occurs, including the overall

communicative goal of the person described; various properties of the person,

such as gender, age, or personality; various properties of the annotator,

such as name, gender, and level of expertise; and information about the

technical settings, such as recording conditions or video quality. Even if

most of these should probably not be part of an emotion annotation language,

it may be desirable to propose a principled method for linking to such

information.

(ii) Stacy annotates videos of human behavior both in terms of observed

behaviors and inferred emotions. This data collection effort informs and

validates the design of our emotion model. In addition, the annotated video

data contributes to the function and behavior mapping processes.

Use case 1d: Trace annotation of time-varying signals

(e.g., multi-modal data)

Cate wants to annotate the same clips as Jean-Claude (1c i), but using a

different approach. Rather than building complex charts with start and end

time, she is using a tool that traces some property scales continuously over

time. Examples for such properties are: the emotion dimensions arousal,

valence or power; the overall intensity of (any) emotion, i.e. the presence

or absence of emotionality; the degree of presence of certain appraisals such

as intrinsic pleasantness, goal conduciveness or sense of control over the

situation; the degree to which an emotion episode seems to be acted or

genuine. The time curve of such annotations should be preserved.

Use case 1e: Multiparty interaction

Dirk studies the ways in which persons in a multi-party discussion

expresses their views, opinions and attitudes. We are particularly interested

in how the conversational moves contribute to the discussion, the way an

argument is settled, how a person is persuaded both with reason and rhetoric.

He collects corpora of multi-party discussions and annotates them on all

kinds of dimensions, one of them being a 'mental state' layer in which he