B Examples of Life-Cycle Events

In this specification we use elements from a fictional "dcont" namespace in some examples. The W3C Ubiquitous Web Application Working Group (UWA-WG) is developing a delivery context ontology and expects to define a "dcont" namespace. The examples below are informative only and may, unintentionally, be incompatible with the work of the UWA-WG.

For authoritative information on a (future) "dcont" namespace, please consult the Delivery Context Ontology specification.

1. newContextRequest (from MC to IM)

(The definition of "media" and the details of the media element will be discussed in the next draft.)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:newContextRequest source="someURI" requestID="request-1">
    <mmi:media id="mediaID1">media1</mmi:media>
    <mmi:media id="mediaID2">media2</mmi:media>
    <mmi:data xmlns:dcont="http://www.w3.org/2008/04/dcont">
      <dcont:DeliveryContext>
        ...
      </dcont:DeliveryContext>
    </mmi:data>
  </mmi:newContextRequest>
</mmi:mmi>
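Every prefix used in an envelope must be declared; here those prefixes are "mmi" and "dcont". Generating envelopes is therefore less error-prone than writing them by hand. The following Python sketch builds the newContextRequest above with the standard-library xml.etree.ElementTree module. The helper names q and new_context_request are hypothetical. The DeliveryContext element is left empty because its content is elided in the example.

```python
import xml.etree.ElementTree as ET

MMI_NS = "http://www.w3.org/2008/04/mmi-arch"
DCONT_NS = "http://www.w3.org/2008/04/dcont"  # fictional namespace, see above

# Bind prefixes so the output reads mmi:... and dcont:... instead of ns0:...
ET.register_namespace("mmi", MMI_NS)
ET.register_namespace("dcont", DCONT_NS)


def q(local: str, ns: str = MMI_NS) -> str:
    """Return the ElementTree form {namespace}local of a qualified name."""
    return f"{{{ns}}}{local}"


def new_context_request(source: str, request_id: str, media: dict) -> str:
    """Build a newContextRequest envelope (hypothetical helper).

    `media` maps each media id attribute to the media element's text.
    """
    root = ET.Element(q("mmi"), {"version": "1.0"})
    request = ET.SubElement(root, q("newContextRequest"),
                            {"source": source, "requestID": request_id})
    for media_id, value in media.items():
        ET.SubElement(request, q("media"), {"id": media_id}).text = value
    data = ET.SubElement(request, q("data"))
    ET.SubElement(data, q("DeliveryContext", DCONT_NS))  # content elided
    # tostring declares every namespace used in the tree on the root element.
    return ET.tostring(root, encoding="unicode")


print(new_context_request("someURI", "request-1",
                          {"mediaID1": "media1", "mediaID2": "media2"}))
```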

2. newContextResponse (from IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:newContextResponse source="someURI" requestID="request-1" status="success" context="URI-1">
    <mmi:media id="mediaID1">media1</mmi:media>
    <mmi:media id="mediaID2">media2</mmi:media>
  </mmi:newContextResponse>
</mmi:mmi>

3. prepareRequest (from IM to MC, with external markup)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:prepareRequest source="someURI" context="URI-1" requestID="request-1">
    <mmi:contentURL href="someContentURI" max-age="" fetchtimeout="1s"/>
  </mmi:prepareRequest>
</mmi:mmi>

4. prepareRequest (from IM to MC, inline VoiceXML markup)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:prepareRequest source="someURI" context="URI-1" requestID="request-1">
    <mmi:content>
      <vxml:vxml xmlns:vxml="http://www.w3.org/2001/vxml" version="2.0">
        <vxml:form>
          <vxml:block>Hello World!</vxml:block>
        </vxml:form>
      </vxml:vxml>
    </mmi:content>
  </mmi:prepareRequest>
</mmi:mmi>

5. prepareResponse (from MC to IM, success)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:prepareResponse source="someURI" context="someURI" requestID="request-1" status="success"/>
</mmi:mmi>

6. prepareResponse (from MC to IM, failure)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:prepareResponse source="someURI" context="someURI" requestID="request-1" status="failure">
    <mmi:statusInfo>
      NotAuthorized
    </mmi:statusInfo>
  </mmi:prepareResponse>
</mmi:mmi>

7. startRequest (from IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:startRequest source="someURI" context="URI-1" requestID="request-1">
    <mmi:contentURL href="someContentURI" max-age="" fetchtimeout="1s"/>
  </mmi:startRequest>
</mmi:mmi>

8. startResponse (from MC to IM, failure)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:startResponse source="someURI" context="someURI" requestID="request-1" status="failure">
    <mmi:statusInfo>
      NotAuthorized
    </mmi:statusInfo>
  </mmi:startResponse>
</mmi:mmi>

9. doneNotification (from MC to IM, with EMMA result)

The requestID of this doneNotification matches the requestID of the "startRequest" event that started the processing.

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:doneNotification source="someURI" context="someURI" status="success" requestID="request-1">
    <mmi:data>
      <emma:emma xmlns:emma="http://www.w3.org/2003/04/emma" version="1.0">
        <emma:interpretation id="int1" emma:medium="acoustic" emma:confidence=".75" emma:mode="voice" emma:tokens="flights from boston to denver">
          <origin>Boston</origin>
          <destination>Denver</destination>
        </emma:interpretation>
      </emma:emma>
    </mmi:data>
  </mmi:doneNotification>
</mmi:mmi>
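The requestID correlation described above can be sketched in Python as follows. The sketch assumes that the Interaction Manager records each request it sends. The class PendingRequests and the helper event_of are hypothetical and not part of this specification. A doneNotification reuses the requestID of its startRequest. For that reason the sketch keeps a startRequest open after its startResponse and closes it only when the doneNotification arrives. Any other request is closed by its first reply. A reply with an unknown or already-closed requestID raises KeyError.

```python
import xml.etree.ElementTree as ET


def event_of(envelope: str) -> tuple:
    """Return (local name, attributes) of the event inside an mmi:mmi envelope."""
    event = ET.fromstring(envelope)[0]
    return event.tag.rpartition("}")[2], dict(event.attrib)


class PendingRequests:
    """Hypothetical bookkeeping for matching replies to requests by requestID."""

    def __init__(self) -> None:
        self._pending = {}  # requestID -> name of the request event

    def sent(self, envelope: str) -> None:
        name, attrs = event_of(envelope)
        self._pending[attrs["requestID"]] = name

    def received(self, envelope: str) -> str:
        """Return the name of the request that this reply answers."""
        name, attrs = event_of(envelope)
        request_id = attrs["requestID"]
        request = self._pending.get(request_id)
        if request is None:
            raise KeyError(f"{name}: no outstanding request {request_id!r}")
        # A startRequest stays open after its startResponse, because the later
        # doneNotification carries the same requestID; other requests close.
        if request != "startRequest" or name == "doneNotification":
            del self._pending[request_id]
        return request
```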

10. doneNotification (from MC to IM, with EMMA no-input result)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:doneNotification source="someURI" context="someURI" status="success" requestID="request-1">
    <mmi:data>
      <emma:emma xmlns:emma="http://www.w3.org/2003/04/emma" version="1.0">
        <emma:interpretation id="int1" emma:no-input="true"/>
      </emma:emma>
    </mmi:data>
  </mmi:doneNotification>
</mmi:mmi>
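A doneNotification may carry either an EMMA interpretation or an EMMA no-input result, as in the two doneNotification examples above. The following Python sketch shows one way an Interaction Manager could tell the two apart with xml.etree.ElementTree. The helper read_done_notification is hypothetical. It reads only the first emma:interpretation, and it assumes the EMMA namespace http://www.w3.org/2003/04/emma, the namespace referenced in mmi-datatypes.xsd.

```python
import xml.etree.ElementTree as ET

MMI_NS = "http://www.w3.org/2008/04/mmi-arch"
EMMA_NS = "http://www.w3.org/2003/04/emma"
INTERPRETATION = f"{{{MMI_NS}}}data/{{{EMMA_NS}}}emma/{{{EMMA_NS}}}interpretation"


def read_done_notification(envelope: str) -> dict:
    """Summarize the EMMA result carried in a doneNotification (sketch)."""
    done = ET.fromstring(envelope).find(f"{{{MMI_NS}}}doneNotification")
    if done is None:
        raise ValueError("not a doneNotification envelope")
    interp = done.find(INTERPRETATION)
    if interp is None:
        raise ValueError("mmi:data holds no emma:interpretation")
    result = {"requestID": done.get("requestID"),
              "noInput": interp.get(f"{{{EMMA_NS}}}no-input") == "true"}
    if not result["noInput"]:
        confidence = interp.get(f"{{{EMMA_NS}}}confidence")
        result["tokens"] = interp.get(f"{{{EMMA_NS}}}tokens")
        result["confidence"] = float(confidence) if confidence is not None else None
        # Application-specific children such as <origin> and <destination>,
        # which are in no namespace in the example.
        result["slots"] = {child.tag: child.text for child in interp}
    return result
```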

11. cancelRequest (from IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:cancelRequest context="someURI" source="someURI" immediate="true" requestID="request-1"/>
</mmi:mmi>

12. cancelResponse (from MC to IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:cancelResponse source="someURI" context="someURI" requestID="request-1" status="success"/>
</mmi:mmi>

13. pauseRequest (from IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:pauseRequest context="someURI" source="someURI" immediate="true" requestID="request-1"/>
</mmi:mmi>

14. pauseResponse (from MC to IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:pauseResponse source="someURI" context="someURI" requestID="request-1" status="success"/>
</mmi:mmi>

15. resumeRequest (from IM to MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:resumeRequest context="someURI" source="someURI" requestID="request-1"/>
</mmi:mmi>

16. resumeResponse (from MC to IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:resumeResponse source="someURI" context="someURI" requestID="request-1" status="success"/>
</mmi:mmi>

17. extensionNotification (from IM to MC, or from MC to IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:extensionNotification name="appEvent" source="someURI" context="someURI" requestID="request-1">
    <mmi:data>
      <applicationdata/>
    </mmi:data>
  </mmi:extensionNotification>
</mmi:mmi>

18. clearContextRequest (from the IM to the MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:clearContextRequest source="someURI" context="someURI" requestID="request-2"/>
</mmi:mmi>

19. statusRequest (from the IM to the MC)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:statusRequest requestAutomaticUpdate="true" source="someURI" requestID="request-3"/>
</mmi:mmi>

20. statusResponse (from the MC to the IM)

<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:statusResponse automaticUpdate="true" status="alive" source="someURI" requestID="request-3"/>
</mmi:mmi>

C Event Schemas

mmi.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

Top-level schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="NewContextRequest.xsd"/>

<xs:include schemaLocation="NewContextResponse.xsd"/>

<xs:include schemaLocation="ClearContextRequest.xsd"/>

<xs:include schemaLocation="ClearContextResponse.xsd"/>

<xs:include schemaLocation="CancelRequest.xsd"/>

<xs:include schemaLocation="CancelResponse.xsd"/>

<xs:include schemaLocation="CreateRequest.xsd"/>

<xs:include schemaLocation="CreateResponse.xsd"/>

<xs:include schemaLocation="DoneNotification.xsd"/>

<xs:include schemaLocation="ExtensionNotification.xsd"/>

<xs:include schemaLocation="PauseRequest.xsd"/>

<xs:include schemaLocation="PauseResponse.xsd"/>

<xs:include schemaLocation="PrepareRequest.xsd"/>

<xs:include schemaLocation="PrepareResponse.xsd"/>

<xs:include schemaLocation="ResumeRequest.xsd"/>

<xs:include schemaLocation="ResumeResponse.xsd"/>

<xs:include schemaLocation="StartRequest.xsd"/>

<xs:include schemaLocation="StartResponse.xsd"/>

<xs:include schemaLocation="StatusRequest.xsd"/>

<xs:include schemaLocation="StatusResponse.xsd"/>

<xs:element name="mmi">

<xs:complexType>

<xs:choice>

<xs:sequence>

<xs:element ref="mmi:newContextRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:newContextResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:clearContextRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:clearContextResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:cancelRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:cancelResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:createRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:createResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:doneNotification"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:extensionNotification"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:pauseRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:pauseResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:prepareRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:prepareResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:resumeRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:resumeResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:startRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:startResponse"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:statusRequest"/>

</xs:sequence>

<xs:sequence>

<xs:element ref="mmi:statusResponse"/>

</xs:sequence>

</xs:choice>

<xs:attributeGroup ref="mmi:mmi.version.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>
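The xs:choice above allows exactly one life-cycle event inside each mmi:mmi element. A receiver might check that rule before dispatching an event, as in the following Python sketch. The function name unwrap is hypothetical, and the check is structural only; it does not replace validation against mmi.xsd. Some errors never reach the check at all. An undeclared namespace prefix, for example, is already rejected by the XML parser with ET.ParseError.

```python
import xml.etree.ElementTree as ET

MMI_NS = "http://www.w3.org/2008/04/mmi-arch"

# The alternatives of the xs:choice in mmi.xsd above.
LIFECYCLE_EVENTS = frozenset({
    "newContextRequest", "newContextResponse",
    "clearContextRequest", "clearContextResponse",
    "cancelRequest", "cancelResponse", "createRequest", "createResponse",
    "doneNotification", "extensionNotification",
    "pauseRequest", "pauseResponse", "prepareRequest", "prepareResponse",
    "resumeRequest", "resumeResponse", "startRequest", "startResponse",
    "statusRequest", "statusResponse",
})


def unwrap(envelope: str) -> ET.Element:
    """Return the single life-cycle event inside an mmi:mmi envelope."""
    root = ET.fromstring(envelope)  # malformed XML raises ET.ParseError
    if root.tag != f"{{{MMI_NS}}}mmi":
        raise ValueError(f"root element is {root.tag}, expected mmi:mmi")
    # Literal string comparison; the schema types version as xs:decimal.
    if root.get("version") != "1.0":
        raise ValueError("missing or unsupported version attribute")
    children = list(root)
    if len(children) != 1:
        raise ValueError(f"expected exactly one event, found {len(children)}")
    event = children[0]
    namespace, _, local = event.tag[1:].partition("}")
    if namespace != MMI_NS or local not in LIFECYCLE_EVENTS:
        raise ValueError(f"unknown life-cycle event {event.tag}")
    return event
```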

mmi-datatypes.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

General type definition schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:simpleType name="versionType">

<xs:restriction base="xs:decimal">

<xs:enumeration value="1.0"/>

</xs:restriction>

</xs:simpleType>

<xs:simpleType name="mediaContentTypes">

<xs:restriction base="xs:string">

<xs:enumeration value="media1"/>

<xs:enumeration value="media2"/>

</xs:restriction>

</xs:simpleType>

<xs:simpleType name="mediaAttributeTypes">

<xs:restriction base="xs:string">

<xs:enumeration value="mediaID1"/>

<xs:enumeration value="mediaID2"/>

</xs:restriction>

</xs:simpleType>

<xs:simpleType name="sourceType">

<xs:restriction base="xs:string"/>

</xs:simpleType>

<xs:simpleType name="targetType">

<xs:restriction base="xs:string"/>

</xs:simpleType>

<xs:simpleType name="requestIDType">

<xs:restriction base="xs:string"/>

</xs:simpleType>

<xs:simpleType name="contextType">

<xs:restriction base="xs:string"/>

</xs:simpleType>

<xs:simpleType name="statusType">

<xs:restriction base="xs:string">

<xs:enumeration value="success"/>

<xs:enumeration value="failure"/>

</xs:restriction>

</xs:simpleType>

<xs:simpleType name="statusResponseType">

<xs:restriction base="xs:string">

<xs:enumeration value="alive"/>

<xs:enumeration value="dead"/>

</xs:restriction>

</xs:simpleType>

<xs:simpleType name="immediateType">

<xs:restriction base="xs:boolean"/>

</xs:simpleType>

<xs:complexType name="contentURLType">

<xs:attribute name="href" type="xs:anyURI" use="required"/>

<xs:attribute name="max-age" type="xs:string" use="optional"/>

<xs:attribute name="fetchtimeout" type="xs:string" use="optional"/>

</xs:complexType>

<xs:complexType name="contentType">

<xs:sequence>

<xs:any namespace="http://www.w3.org/2001/vxml" processContents="skip" maxOccurs="unbounded"/>

</xs:sequence>

</xs:complexType>

<xs:complexType name="emmaType">

<xs:sequence>

<xs:any namespace="http://www.w3.org/2003/04/emma" processContents="skip" maxOccurs="unbounded"/>

</xs:sequence>

</xs:complexType>

<xs:complexType name="anyComplexType" mixed="true">

<xs:complexContent mixed="true">

<xs:restriction base="xs:anyType">

<xs:sequence>

<xs:any processContents="skip" minOccurs="0" maxOccurs="unbounded"/>

</xs:sequence>

</xs:restriction>

</xs:complexContent>

</xs:complexType>

</xs:schema>

mmi-attribs.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" targetNamespace="http://www.w3.org/2008/04/mmi-arch">

<xs:annotation>

<xs:documentation xml:lang="en">

General attribute definition schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:attributeGroup name="media.id.attrib">

<xs:attribute name="id" type="mmi:mediaAttributeTypes" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="mmi.version.attrib">

<xs:attribute name="version" type="mmi:versionType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="source.attrib">

<xs:attribute name="source" type="mmi:sourceType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="target.attrib">

<xs:attribute name="target" type="mmi:targetType" use="optional"/>

</xs:attributeGroup>

<xs:attributeGroup name="requestID.attrib">

<xs:attribute name="requestID" type="mmi:requestIDType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="context.attrib">

<xs:attribute name="context" type="mmi:contextType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="immediate.attrib">

<xs:attribute name="immediate" type="mmi:immediateType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="status.attrib">

<xs:attribute name="status" type="mmi:statusType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="statusResponse.attrib">

<xs:attribute name="status" type="mmi:statusResponseType" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="extension.name.attrib">

<xs:attribute name="name" type="xs:string" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="requestAutomaticUpdate.attrib">

<xs:attribute name="requestAutomaticUpdate" type="xs:boolean" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="automaticUpdate.attrib">

<xs:attribute name="automaticUpdate" type="xs:boolean" use="required"/>

</xs:attributeGroup>

<xs:attributeGroup name="group.allEvents.attrib">

<xs:attributeGroup ref="mmi:source.attrib"/>

<xs:attributeGroup ref="mmi:requestID.attrib"/>

<xs:attributeGroup ref="mmi:context.attrib"/>

</xs:attributeGroup>

<xs:attributeGroup name="group.allResponseEvents.attrib">

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<xs:attributeGroup ref="mmi:status.attrib"/>

</xs:attributeGroup>

</xs:schema>
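The attribute groups group.allEvents.attrib and group.allResponseEvents.attrib determine which attributes every event must carry. The following Python sketch tabulates the required attributes for each event schema shown in this appendix. It draws on those two groups and on immediate.attrib and extension.name.attrib. It also assumes unqualified attribute names, as used in the examples in Appendix B. The table and the function missing_attributes are illustrative only. Applied to the resumeRequest of example 15, for instance, the sketch reports immediate as missing, because ResumeRequest.xsd references immediate.attrib.

```python
import xml.etree.ElementTree as ET

# Attribute names from group.allEvents.attrib and group.allResponseEvents.attrib.
ALL_EVENTS = ("source", "requestID", "context")
ALL_RESPONSE_EVENTS = ALL_EVENTS + ("status",)

# Required attributes per event schema shown in this appendix; events whose
# schemas are not shown in full (create*, status*) are left out.
REQUIRED = {
    "newContextRequest": ALL_EVENTS,
    "prepareRequest": ALL_EVENTS,
    "startRequest": ALL_EVENTS,
    "clearContextRequest": ALL_EVENTS,
    "cancelRequest": ALL_EVENTS + ("immediate",),
    "pauseRequest": ALL_EVENTS + ("immediate",),
    "resumeRequest": ALL_EVENTS + ("immediate",),
    "extensionNotification": ALL_EVENTS + ("name",),
    "newContextResponse": ALL_RESPONSE_EVENTS,
    "clearContextResponse": ALL_RESPONSE_EVENTS,
    "prepareResponse": ALL_RESPONSE_EVENTS,
    "startResponse": ALL_RESPONSE_EVENTS,
    "doneNotification": ALL_RESPONSE_EVENTS,
    "cancelResponse": ALL_RESPONSE_EVENTS,
    "pauseResponse": ALL_RESPONSE_EVENTS,
    "resumeResponse": ALL_RESPONSE_EVENTS,
}


def missing_attributes(event: ET.Element) -> list:
    """List the required attributes that an event element lacks (sketch)."""
    local = event.tag.rpartition("}")[2]
    if local not in REQUIRED:
        raise KeyError(f"no attribute table for {local}")
    return [name for name in REQUIRED[local] if event.get(name) is None]
```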

mmi-elements.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" targetNamespace="http://www.w3.org/2008/04/mmi-arch"

attributeFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

General element definition schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<!-- ELEMENTS -->

<xs:element name="statusInfo" type="mmi:anyComplexType"/>

<xs:element name="media">

<xs:complexType>

<xs:simpleContent>

<xs:extension base="mmi:mediaContentTypes">

<xs:attributeGroup ref="mmi:media.id.attrib"/>

</xs:extension>

</xs:simpleContent>

</xs:complexType>

</xs:element>

</xs:schema>

NewContextRequest.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

NewContextRequest schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:import namespace="http://www.w3.org/2008/04/dcont" schemaLocation="dcont.xsd"/>

<xs:element name="newContextRequest">

<xs:complexType>

<xs:sequence>

<xs:element ref="mmi:media" maxOccurs="unbounded"/>

<xs:element name="data">

<xs:complexType>

<xs:sequence>

<xs:element ref="dcont:DeliveryContext"/>

</xs:sequence>

</xs:complexType>

</xs:element>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

NewContextResponse.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

NewContextResponse schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="newContextResponse">

<xs:complexType>

<xs:sequence>

<xs:element ref="mmi:media" minOccurs="0" maxOccurs="unbounded"/>

<xs:element ref="mmi:statusInfo" minOccurs="0"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

PrepareRequest.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

PrepareRequest schema for MMI Life cycle events version 1.0.

The optional PrepareRequest event is an event that the Runtime Framework may send

to allow the Modality Components to pre-load markup and prepare to run (e.g. in case of

VXML VUI-MC). Modality Components are not required to take any particular action in

response to this event, but they must return a PrepareResponse event.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:element name="prepareRequest">

<xs:complexType>

<xs:choice>

<xs:sequence>

<xs:element name="contentURL" type="mmi:contentURLType"/>

</xs:sequence>

<xs:sequence>

<xs:element name="content" type="mmi:anyComplexType"/>

<!-- only vxml permitted ?? -->

</xs:sequence>

<!-- data really needed ?? -->

<xs:sequence>

<xs:element name="data" type="mmi:anyComplexType"/>

</xs:sequence>

</xs:choice>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>
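The documentation above lets the Modality Component ignore a prepareRequest but obliges it to answer. The following Python sketch shows that contract. Whatever the hypothetical preload hook does, the sketch returns a prepareResponse that echoes the request's context and requestID. If pre-loading raised an exception, the response carries status="failure" and an mmi:statusInfo element. Using the responder's own URI as source is an assumption; the examples use the placeholder someURI throughout.

```python
import xml.etree.ElementTree as ET
from typing import Callable

MMI_NS = "http://www.w3.org/2008/04/mmi-arch"
ET.register_namespace("mmi", MMI_NS)


def answer_prepare(request_xml: str, my_uri: str,
                   preload: Callable[[ET.Element], None]) -> str:
    """Answer a prepareRequest with a prepareResponse, whatever happens (sketch)."""
    request = ET.fromstring(request_xml).find(f"{{{MMI_NS}}}prepareRequest")
    if request is None:
        raise ValueError("not a prepareRequest envelope")
    status, info = "success", None
    try:
        preload(request)  # hypothetical hook; doing nothing is also allowed
    except Exception as exc:  # report pre-load failures instead of staying silent
        status, info = "failure", str(exc)
    root = ET.Element(f"{{{MMI_NS}}}mmi", {"version": "1.0"})
    response = ET.SubElement(root, f"{{{MMI_NS}}}prepareResponse", {
        "source": my_uri,                        # assumed: the responder's own URI
        "context": request.get("context", ""),   # echoed from the request
        "requestID": request.get("requestID", ""),
        "status": status,
    })
    if info is not None:
        ET.SubElement(response, f"{{{MMI_NS}}}statusInfo").text = info
    return ET.tostring(root, encoding="unicode")
```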

PrepareResponse.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

PrepareResponse schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="prepareResponse">

<xs:complexType>

<xs:sequence>

<xs:element name="data" minOccurs="0" type="mmi:anyComplexType"/>

<xs:element ref="mmi:statusInfo" minOccurs="0"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

StartRequest.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

StartRequest schema for MMI Life cycle events version 1.0.

The Runtime Framework sends the event StartRequest to invoke a Modality Component

(to start loading a new GUI resource or to start the ASR or TTS). The Modality Component

must return a StartResponse event in response. If the Runtime Framework has sent a previous

PrepareRequest event, it may leave the contentURL and content fields empty, and the Modality

Component will use the values from the PrepareRequest event. If the Runtime Framework includes

new values for these fields, the values in the StartRequest event override those in the

PrepareRequest event.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:element name="startRequest">

<xs:complexType>

<xs:choice minOccurs="0">

<xs:sequence>

<xs:element name="contentURL" type="mmi:contentURLType"/>

</xs:sequence>

<xs:sequence>

<xs:element name="content" type="mmi:anyComplexType"/>

<!-- only vxml permitted ?? -->

</xs:sequence>

<!-- data really needed ?? -->

<xs:sequence>

<xs:element name="data" type="mmi:anyComplexType"/>

</xs:sequence>

</xs:choice>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>
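The override rule in the StartRequest documentation can be expressed as a small lookup. In the following Python sketch, content carried by the startRequest takes precedence. Otherwise, the Modality Component falls back to the contentURL or content element of the earlier prepareRequest. A return value of None means that neither event supplied markup. The function effective_content is hypothetical. It accepts a startRequest with no content child, as the documentation permits.

```python
import xml.etree.ElementTree as ET
from typing import Optional

MMI_NS = "http://www.w3.org/2008/04/mmi-arch"
# Child elements that carry markup, per the xs:choice in PrepareRequest.xsd
# and StartRequest.xsd (mmi:data is not treated as markup here).
CONTENT_CHILDREN = ("contentURL", "content")


def effective_content(start: ET.Element,
                      prepared: Optional[ET.Element]) -> Optional[ET.Element]:
    """Return the contentURL or content element a Modality Component should use.

    Values in the startRequest override those of an earlier prepareRequest;
    if the startRequest carries neither, fall back to the prepareRequest.
    """
    for event in (start, prepared):
        if event is None:
            continue
        for local in CONTENT_CHILDREN:
            child = event.find(f"{{{MMI_NS}}}{local}")
            if child is not None:
                return child
    return None
```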

StartResponse.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

StartResponse schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="startResponse">

<xs:complexType>

<xs:sequence>

<xs:element name="data" minOccurs="0" type="mmi:anyComplexType"/>

<xs:element ref="mmi:statusInfo" minOccurs="0"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

DoneNotification.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

DoneNotification schema for MMI Life cycle events version 1.0.

The DoneNotification event is intended to be used by the Modality Component to indicate that

it has reached the end of its processing. For the VUI-MC, it can be used to return the ASR

recognition result (or status information such as noinput/nomatch) or to report that TTS or audio playback has finished.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="doneNotification">

<xs:complexType>

<xs:sequence>

<xs:element name="data" type="mmi:anyComplexType"/>

<xs:element ref="mmi:statusInfo" minOccurs="0"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

CancelRequest.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

CancelRequest schema for MMI Life cycle events version 1.0.

The CancelRequest event is sent by the Runtime Framework to stop processing in the Modality

Component (e.g. to cancel ASR or TTS/Playing). The Modality Component must respond with a

CancelResponse message.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:element name="cancelRequest">

<xs:complexType>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

<xs:attributeGroup ref="mmi:immediate.attrib"/>

<!-- no elements -->

</xs:complexType>

</xs:element>

</xs:schema>

CancelResponse.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

CancelResponse schema for MMI Life cycle events version 1.0.

The CancelRequest event is sent by the Runtime Framework to stop processing in the Modality

Component (e.g. to cancel ASR or TTS/Playing). The Modality Component must respond with a

CancelResponse message.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="cancelResponse">

<xs:complexType>

<xs:sequence>

<xs:element ref="mmi:statusInfo" minOccurs="0"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

PauseRequest.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

PauseRequest schema for MMI Life cycle events version 1.0.

The PauseRequest event is sent by the Runtime Framework to pause processing of a Modality

Component (e.g. to pause ASR or TTS/Playing). The Modality Component must respond with a

PauseResponse message.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:element name="pauseRequest">

<xs:complexType>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

<xs:attributeGroup ref="mmi:immediate.attrib"/>

<!-- no elements -->

</xs:complexType>

</xs:element>

</xs:schema>

PauseResponse.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

PauseResponse schema for MMI Life cycle events version 1.0.

The PauseRequest event is sent by the Runtime Framework to pause the processing of

the Modality Component (e.g. to pause ASR or TTS/Playing). The Modality Component

must respond with a PauseResponse message.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="pauseResponse">

<xs:complexType>

<xs:sequence>

<xs:element ref="mmi:statusInfo" minOccurs="0"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

ResumeRequest.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

ResumeRequest schema for MMI Life cycle events version 1.0.

The ResumeRequest event is sent by the Runtime Framework to resume a previously suspended

processing task of a Modality Component. The Modality Component must respond with a

ResumeResponse message.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:element name="resumeRequest">

<xs:complexType>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

<xs:attributeGroup ref="mmi:immediate.attrib"/>

<!-- no elements -->

</xs:complexType>

</xs:element>

</xs:schema>

ResumeResponse.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

ResumeResponse schema for MMI Life cycle events version 1.0.

The ResumeRequest event is sent by the Runtime Framework to resume a previously suspended

processing task of a Modality Component. The Modality Component must respond with a

ResumeResponse message.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="resumeResponse">

<xs:complexType>

<xs:sequence>

<xs:element ref="mmi:statusInfo" minOccurs="0"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

ExtensionNotification.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema"

targetNamespace="http://www.w3.org/2008/04/mmi-arch" attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

ExtensionNotification schema for MMI Life cycle events version 1.0.

The extensionNotification event may be generated by either the Runtime Framework or the

Modality Component and is used to communicate (presumably changed) data values to the

other component. For example, when the VUI-MC has signaled a recognition result for a field displayed

on the GUI, the Runtime Framework can use this event to send a command to the

GUI-MC to update the GUI with the recognized value.

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:element name="extensionNotification">

<xs:complexType>

<xs:sequence>

<xs:element name="data" type="mmi:anyComplexType"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

<xs:attributeGroup ref="mmi:extension.name.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>
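The GUI-update scenario in the documentation above might be realized as follows. This Python sketch builds an extensionNotification sent from the Runtime Framework to a GUI Modality Component. The schema constrains only the name attribute and requires an mmi:data child with arbitrary content. Everything else in the sketch is therefore hypothetical: the function field_update, the event name "fieldUpdate", the field payload element, and the URIs in the usage line.

```python
import xml.etree.ElementTree as ET

MMI_NS = "http://www.w3.org/2008/04/mmi-arch"
ET.register_namespace("mmi", MMI_NS)


def field_update(source: str, target: str, context: str, request_id: str,
                 field: str, value: str) -> str:
    """Build an extensionNotification carrying a recognized field value (sketch)."""
    root = ET.Element(f"{{{MMI_NS}}}mmi", {"version": "1.0"})
    note = ET.SubElement(root, f"{{{MMI_NS}}}extensionNotification", {
        "name": "fieldUpdate",  # hypothetical application-defined event name
        "source": source, "target": target,
        "context": context, "requestID": request_id,
    })
    data = ET.SubElement(note, f"{{{MMI_NS}}}data")
    # Hypothetical payload; mmi:data accepts arbitrary content.
    ET.SubElement(data, "field", {"name": field}).text = value
    return ET.tostring(root, encoding="unicode")


# E.g. the Runtime Framework forwarding a VUI-MC result to a GUI-MC:
print(field_update("rfURI", "guiMcURI", "URI-1", "request-7", "destination", "Denver"))
```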

ClearContextRequest.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" targetNamespace="http://www.w3.org/2008/04/mmi-arch"

attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

ClearContextRequest schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="clearContextRequest">

<xs:complexType>

<xs:sequence>

<xs:element ref="mmi:media" minOccurs="0" maxOccurs="unbounded"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

ClearContextResponse.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" targetNamespace="http://www.w3.org/2008/04/mmi-arch"

attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

ClearContextResponse schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="clearContextResponse">

<xs:complexType>

<xs:sequence>

<xs:element ref="mmi:media" minOccurs="0" maxOccurs="unbounded"/>

<xs:element ref="mmi:statusInfo" minOccurs="0"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

StatusRequest.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" targetNamespace="http://www.w3.org/2008/04/mmi-arch"

attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

StatusRequest schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="statusRequest">

<xs:complexType>

<xs:sequence>

<xs:element ref="mmi:media" minOccurs="0" maxOccurs="unbounded"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

StatusResponse.xsd

<?xml version="1.0" encoding="UTF-8"?>

<xs:schema xmlns:dcont="http://www.w3.org/2008/04/dcont" xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" xmlns:xs="http://www.w3.org/2001/XMLSchema" targetNamespace="http://www.w3.org/2008/04/mmi-arch"

attributeFormDefault="qualified" elementFormDefault="qualified">

<xs:annotation>

<xs:documentation xml:lang="en">

StatusResponse schema for MMI Life cycle events version 1.0

</xs:documentation>

</xs:annotation>

<xs:include schemaLocation="mmi-datatypes.xsd"/>

<xs:include schemaLocation="mmi-attribs.xsd"/>

<xs:include schemaLocation="mmi-elements.xsd"/>

<xs:element name="statusResponse">

<xs:complexType>

<xs:sequence>

<xs:element ref="mmi:media" minOccurs="0" maxOccurs="unbounded"/>

<xs:element ref="mmi:statusInfo" minOccurs="0"/>

</xs:sequence>

<xs:attributeGroup ref="mmi:group.allResponseEvents.attrib"/>

<xs:attributeGroup ref="mmi:target.attrib"/>

</xs:complexType>

</xs:element>

</xs:schema>

D Ladder Diagrams

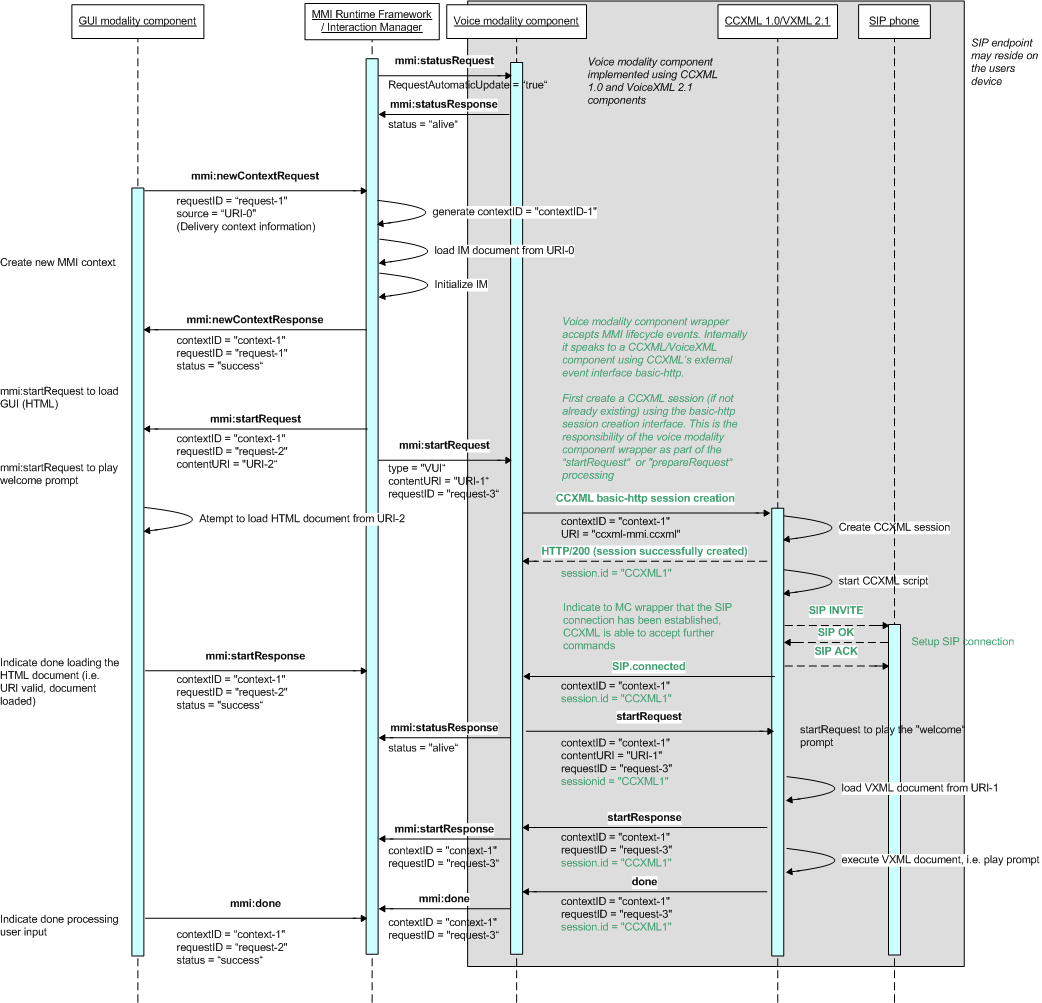

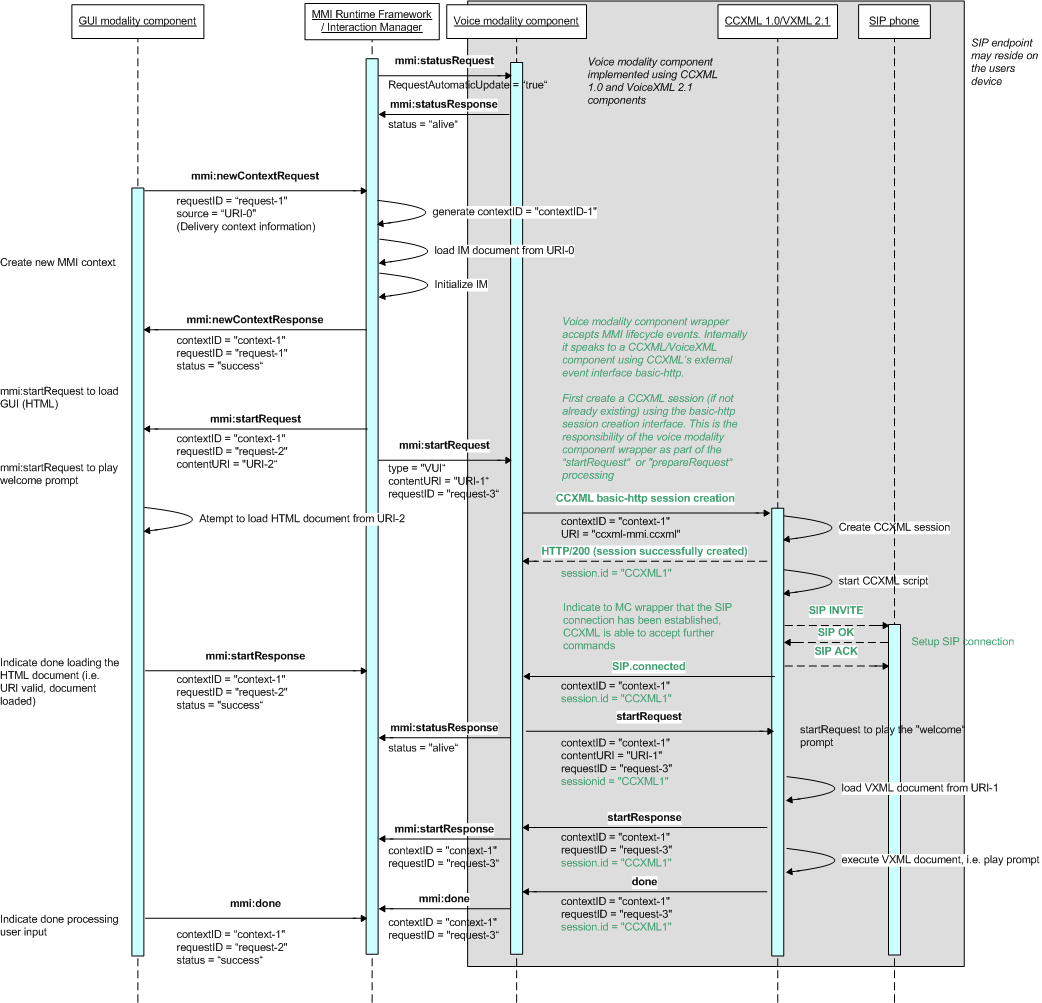

D.1 Creating a Session

The following ladder diagram shows a possible message sequence when a session is created. We assume that the Runtime Framework and an Interaction Manager session are already up and running. The user starts a multimodal session, for example by starting a web browser and fetching a given URL.

The initial document contains scripts that provide the modality component functionality (e.g. understanding XML-formatted life-cycle events) and message transport capabilities (e.g. AJAX, depending on the exact system implementation).

After loading the initial documents (and scripts), the modality component implementation issues an mmi:newContextRequest message to the Runtime Framework. If necessary, the Runtime Framework loads a corresponding markup document (which could be SCXML), then initializes and starts the Interaction Manager.

In this scenario the Interaction Manager logic issues a number of mmi:startRequest messages to the various modality components. One message is sent to the graphical modality component (GUI) to instruct it to load an HTML document. Another message is sent to a voice modality component (VUI) to play a welcome message.
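The two mmi:startRequest messages might look roughly as follows. This is a sketch in the informal style of the Appendix B examples, not normative markup: the source, target and requestID values and the content URLs are hypothetical, and the mmi:contentURL child is assumed to work for startRequest as it does in the prepareRequest example.

```xml
<!-- IM to GUI modality component: load an HTML document (hypothetical URIs) -->
<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:startRequest source="IM-URI" target="GUI-MC-URI" context="URI-1" requestID="request-2">
    <mmi:contentURL href="http://example.com/app/main.html"/>
  </mmi:startRequest>
</mmi:mmi>

<!-- IM to voice modality component: run a VoiceXML document that plays the welcome prompt -->
<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:startRequest source="IM-URI" target="VUI-MC-URI" context="URI-1" requestID="request-3">
    <mmi:contentURL href="http://example.com/app/welcome.vxml"/>
  </mmi:startRequest>
</mmi:mmi>
```

Both requests share the context URI returned by the newContextRequest/newContextResponse exchange, while distinct requestID values let the IM match each mmi:startResponse to its request.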

In this example, the voice modality component has to create a VoiceXML session. Because VoiceXML 2.1 does not provide an external event interface, a CCXML session is used for external asynchronous communication. The voice modality component therefore uses the session creation interface of CCXML 1.0 to create a session and start a corresponding script. This script then places a call to a phone on the user's device (which could be a regular phone or a SIP soft phone). This scenario illustrates the use of a SIP phone, which may reside on the user's mobile handset.

After the CCXML session and the voice connection have been set up successfully, the voice modality component instructs the CCXML browser to start a VoiceXML dialog, passing it a corresponding VoiceXML script. The VoiceXML interpreter executes the script and plays the welcome message. When the VoiceXML script has finished executing, the voice modality component notifies the Interaction Manager using the mmi:done event.
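A minimal sketch of the completion message, assuming the mmi:done event is serialized as a doneNotification element (by analogy with extensionNotification) that carries the common response attributes used in Appendix B. The element name, URIs and attribute values are assumptions for illustration.

```xml
<!-- Voice modality component to IM: the welcome dialog has finished (assumed element name) -->
<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:doneNotification source="VUI-MC-URI" target="IM-URI" context="URI-1"
                        requestID="request-3" status="success"/>
</mmi:mmi>
```

Reusing the requestID of the original startRequest (request-3 here) is one way for the IM to correlate the notification with the dialog it started.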

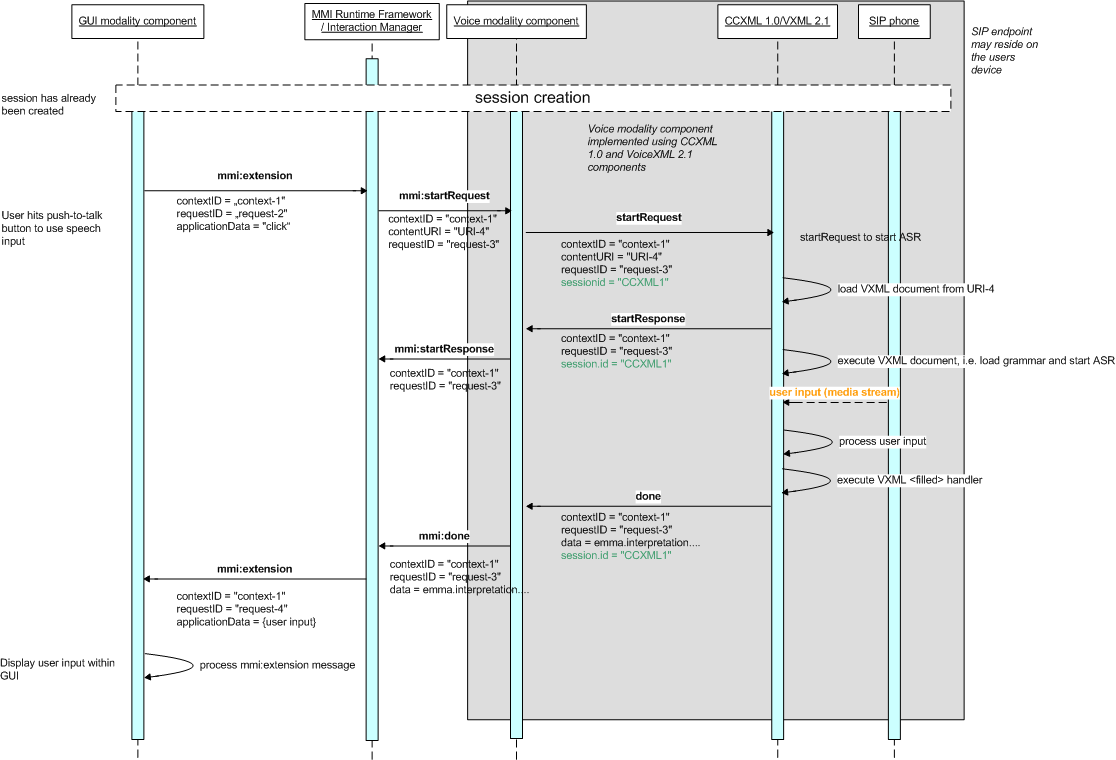

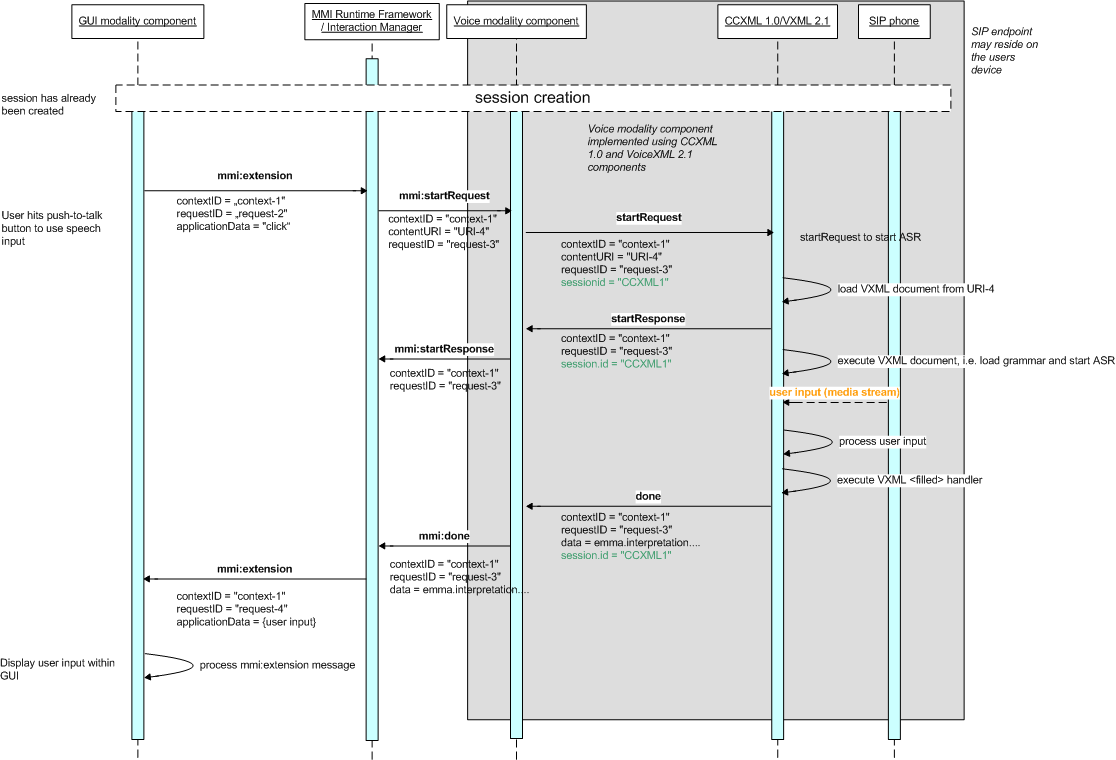

D.2 Processing User Input

The next diagram gives an example of a possible message flow for processing user input.

In this scenario the user wants to enter information using the voice modality component. To start voice input, the user presses a "push-to-talk" button. The button (which might be a hardware button or a soft button on the screen) generates a corresponding event when pushed. This event is issued as an mmi:extension event to the Interaction Manager. The Interaction Manager logic sends an mmi:startRequest to the voice modality component. This mmi:startRequest message contains a URL that points to a corresponding VoiceXML script. The voice modality component again starts a VoiceXML interpreter using the given URL, and the interpreter loads and executes the document.
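The push-to-talk event can be sketched with the extensionNotification element defined in ExtensionNotification.xsd, which requires a data child and references the mmi:extension.name.attrib group. The attribute name "name", the event name pushToTalk, the payload and the URIs are hypothetical.

```xml
<!-- GUI modality component to IM: the push-to-talk button was pressed -->
<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:extensionNotification source="GUI-MC-URI" target="IM-URI" context="URI-1"
                             requestID="request-4" name="pushToTalk">
    <!-- application-defined payload; mmi:data is typed as mmi:anyComplexType -->
    <mmi:data>
      <button state="pressed"/>
    </mmi:data>
  </mmi:extensionNotification>
</mmi:mmi>
```

Because the payload is application-defined, the IM logic (e.g. an SCXML document) has to agree with the GUI component on the event name and data format.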

The system is now ready for user input. To notify the user that voice input is available, the Interaction Manager might send an event to the GUI upon receiving the mmi:startResponse event (which indicates that the voice modality component has started executing the document). This step is not shown in the diagram.

The VoiceXML interpreter captures the user's voice input and uses a speech recognition engine to recognize the utterance. The recognition result is represented as an EMMA document and sent to the Interaction Manager in the mmi:done message. The Interaction Manager logic then sends an mmi:extension message to the GUI modality component, instructing it to display the recognition result.
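A sketch of the recognition result passing through the IM. The EMMA markup uses the EMMA 1.0 namespace and annotations; the doneNotification element name, carrying the EMMA document inside mmi:data, the field name destination, the recognized value and all URIs are assumptions for illustration.

```xml
<!-- Voice modality component to IM: recognition result wrapped as EMMA -->
<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:doneNotification source="VUI-MC-URI" target="IM-URI" context="URI-1"
                        requestID="request-5" status="success">
    <mmi:data>
      <emma:emma version="1.0" xmlns:emma="http://www.w3.org/2003/04/emma">
        <emma:interpretation id="int1" emma:medium="acoustic" emma:mode="voice"
                             emma:confidence="0.85" emma:tokens="to boston">
          <destination>Boston</destination>
        </emma:interpretation>
      </emma:emma>
    </mmi:data>
  </mmi:doneNotification>
</mmi:mmi>

<!-- IM to GUI modality component: display the recognized value (hypothetical payload) -->
<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:extensionNotification source="IM-URI" target="GUI-MC-URI" context="URI-1"
                             requestID="request-6" name="updateField">
    <mmi:data>
      <field name="destination" value="Boston"/>
    </mmi:data>
  </mmi:extensionNotification>
</mmi:mmi>
```

The IM does not forward the EMMA document as-is in this sketch; it extracts the interpreted value so the GUI component only needs to understand its own field-update payload.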

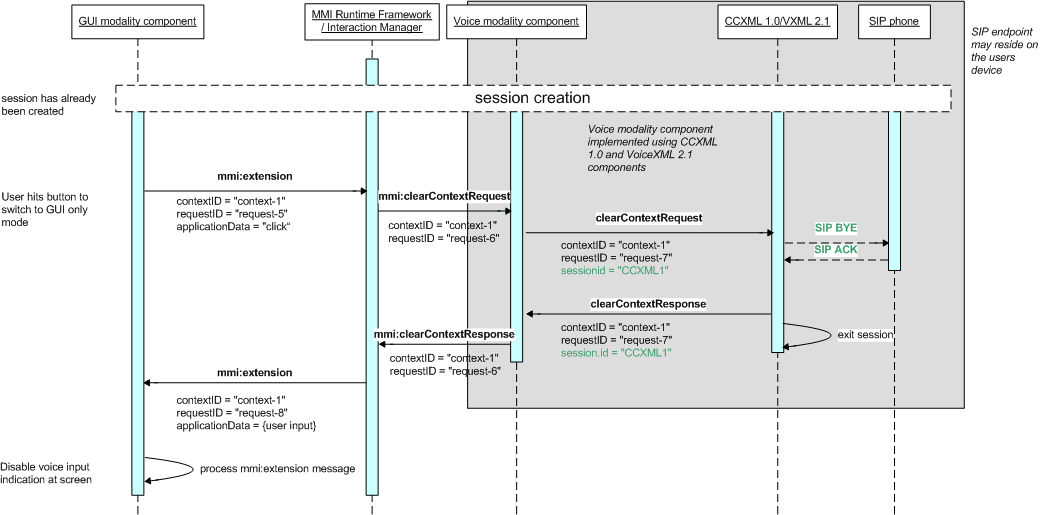

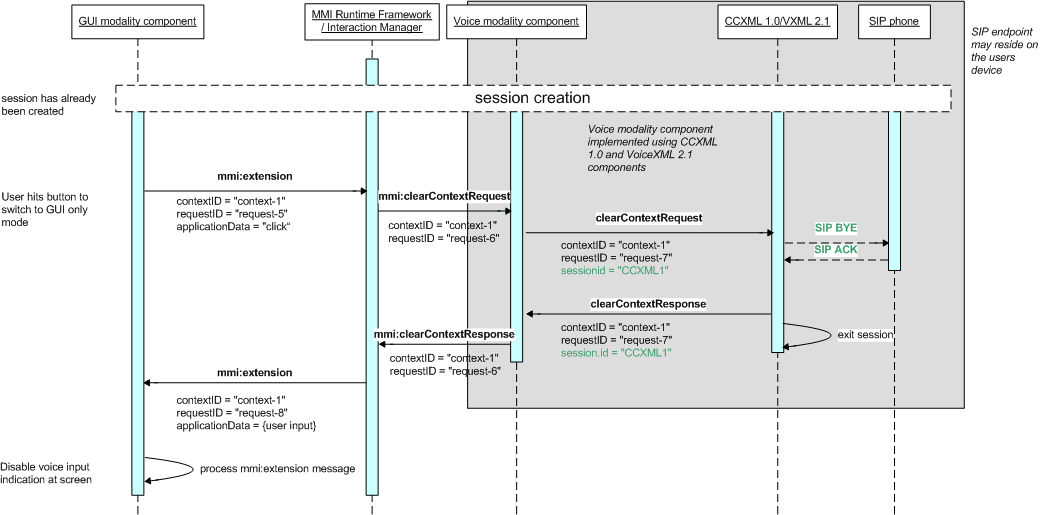

D.3 Ending a Session

In the following scenario a modality component instance is destroyed in reaction to user input, e.g. because the user chose to switch to GUI-only mode. In this case an mmi:clearContextRequest is issued to the voice modality component, and the voice modality component wrapper then destroys the CCXML (and VoiceXML) session.
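A sketch of the mmi:clearContextRequest for this scenario, shaped after ClearContextRequest.xsd: zero or more mmi:media children, the common event attributes and a target attribute. Attributes are written unqualified as in Appendix B; because the schemas set attributeFormDefault="qualified", a validating processor may expect them prefixed. The URIs and media id are hypothetical.

```xml
<!-- IM to voice modality component: tear down the voice context -->
<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:clearContextRequest source="IM-URI" target="VUI-MC-URI" context="URI-1"
                           requestID="request-7">
    <!-- optional: the media stream to release (hypothetical id) -->
    <mmi:media id="mediaID2">media2</mmi:media>
  </mmi:clearContextRequest>
</mmi:mmi>
```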

The application logic (i.e. the IM) may also indicate that the voice functionality has been removed, for example by disabling an on-screen icon that signals the availability of the voice modality.
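After destroying the CCXML and VoiceXML sessions, the voice modality component would answer with an mmi:clearContextResponse. ClearContextResponse.xsd allows optional mmi:media children and an optional mmi:statusInfo element; in this sketch statusInfo is assumed to accept text content, and the status value, text and URIs are hypothetical.

```xml
<!-- Voice modality component to IM: the context has been cleared -->
<mmi:mmi xmlns:mmi="http://www.w3.org/2008/04/mmi-arch" version="1.0">
  <mmi:clearContextResponse source="VUI-MC-URI" target="IM-URI" context="URI-1"
                            requestID="request-7" status="success">
    <mmi:statusInfo>CCXML and VoiceXML sessions terminated</mmi:statusInfo>
  </mmi:clearContextResponse>
</mmi:mmi>
```

Receiving this response is a natural trigger for the IM to update the GUI, e.g. by disabling the voice icon.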