New Ideas

This page is dedicated to the discussion of new use cases and topics for the next round in 2014.

Template for Use Cases

This is a generic template for proposing use cases that should be discussed by the IG TFs. Note that TFs may extend the template if/as needed, so check with your TF moderator if you are not sure.

Submitter(s): company (name), ...

Tracker Issue ID: ISSUE-NN

Category How would you categorize this issue?: 1. gap in existing specifications (=> IG to draft a proposal for an existing WG) 2. require new specification/WG (=> IG to draft a proposal for W3C Director) 3. can be addressed as part of a guidelines document to be produced by the IG (=> proponent to draft some text for such document)

Description:

- high level description/overview of the goals of the use case

- Schematic Illustration (devices involved, work flows, etc) (Optional)

- Implementation Examples (e.g. JS code snippets) (Optional)

Motivation:

- Explanation of benefit to ecosystem

- Why were you not able to use only existing standards to accomplish this?

Dependencies:

- other use cases, proposals or other ongoing standardization activities which this use case is dependent on or related to

What needs to be standardized:

- What are the new requirements, i.e. functions that don't exist, generated by this use case?

Comments:

- any relevant comment that is good to track related to this use case

Use Cases

UC2-1 Audio Fingerprinting

Submitter(s): W3C (Daniel Davis)

Reviewer(s): Yosuke, Bin

Tracker Issue ID: ISSUE-NN

Category How would you categorize this issue?: 1. gap in existing specifications (=> IG to draft a proposal for an existing WG) 2. require new specification/WG (=> IG to draft a proposal for W3C Director) 3. can be addressed as part of a guidelines document to be produced by the IG (Currently unsure)

Description:

- The ability for a web page/app to detect what TV content is being viewed by recognizing its audio track. In other words, the user's device could listen to a media stream for a few seconds and then match that audio clip (fingerprint) against a database of fingerprints from known audio and video content. For example, Alice is watching When Harry Met Sally on TV. She opens the Twitter website on her tablet. The website listens to the TV's audio (she must grant permission first) and detects the movie. It then prefills a tweet for her to send to her followers, including a link to the movie's Wikipedia page or DVD page on Amazon.

Motivation:

- It may be possible to achieve this with existing web standards but this should be investigated. There may also be a need for a dedicated, simplified standard.

- Facebook have announced they're adding this feature to their mobile app, so you can share what you're currently watching/listening to. Official announcement.

Dependencies:

- Ongoing related standardization activities include getUserMedia for audio detection and maybe Web Audio API.

Gaps

- Client-server web interface (e.g. RESTful API) for fingerprinting services, both queries and returned results.

- Feature to access proprietary hash generators.

- In-browser capability to generate hashes.

Features For Declarative Interface:

- [Yosuke] There are three essential entities you need to specify in your web page/app to achieve this use case:

- audio source

- fingerprint generator or algorithm

- fingerprint service provider or database, e.g. a URL.

- [Yosuke] Some additional information may help web page/app have better control over the service:

- timeout

- duration for fingerprinting

- [Yosuke] This feature should be asynchronous, e.g. by returning promises, so that it does not block other application logic while the fingerprint service provider takes time to resolve the TV program.

- [Yosuke] I'm unsure if we need to standardise how we identify or designate TV programs.
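A minimal TypeScript sketch of how the entities listed above could fit into one promise-based call. Every name here (`FingerprintRequest`, `identifyProgram`, `withTimeout`) is hypothetical and not taken from any specification. The capture, hashing and lookup steps are passed in as functions, so the sketch makes no claim about how a browser or a fingerprinting service would actually provide them.

```typescript
// Hypothetical shapes for illustration only; not part of any existing API.
type Samples = Float32Array;

interface FingerprintRequest {
  captureAudio: (durationMs: number) => Promise<Samples>;  // audio source
  generate: (samples: Samples) => string;                   // fingerprint generator or algorithm
  lookup: (fingerprint: string) => Promise<string | null>;  // fingerprint service provider
  durationMs: number; // duration for fingerprinting
  timeoutMs: number;  // give up after this long
}

// Reject if the wrapped promise does not settle within `ms` milliseconds.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error("fingerprinting timed out")), ms);
    work.then(
      (value) => { clearTimeout(timer); resolve(value); },
      (error) => { clearTimeout(timer); reject(error); },
    );
  });
}

// Resolves with a program identifier, or null when the service has no match.
function identifyProgram(req: FingerprintRequest): Promise<string | null> {
  const pipeline = req
    .captureAudio(req.durationMs)
    .then((samples) => req.lookup(req.generate(samples)));
  return withTimeout(pipeline, req.timeoutMs);
}
```

Because the call returns a promise, the page can keep handling user input while the service resolves the program. How the resulting identifier is formatted is left open, in line with the last point above.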

What needs to be standardized:

- To be investigated

Architectural Variation of Existing Services

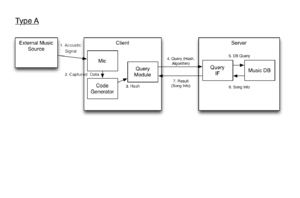

- Type A: Client-side fingerprinting

- AcoustID

- Home Page: http://acoustid.org/

- API Type:

- Hash Algorithm: Chromaprint

- AmpliFIND (libofa)

- Home Page: http://www.amplifindmusicservices.com/

- Hash Algorithm: (proprietary)

- Echonest

- Home Page: http://developer.echonest.com/

- API Type: HTTP

- Hash Algorithm: ENMFP, Echoprint

- Last.fm

- Home Page: http://www.last.fm/

- API Type: HTTP

- Hash Algorithm: "Computer Vision for Music Identification" algorithm

- MusicBrainz

- Home Page: http://musicbrainz.org/

- uses AcoustID

- used TRM and PUID (with libofa)

- Relatable TRM (appears inactive)

- Home Page: http://www.relatable.com/tech/trm.html

- AcoustID

- Type ?:

- Shazam

- API: (Doesn't provide open API)

- Hash Algorithm: (proprietary) http://www.ee.columbia.edu/~dpwe/papers/Wang03-shazam.pdf

- Shazam

Comments:

- [Yosuke] This use case is likely to be implementable with existing Web standards, but it has not yet been studied in detail from a web application viewpoint. It's worth elaborating the use case and clarifying how this type of use case can be implemented as a web app. We may find minor gaps and/or needs for high-level APIs.

- [Yosuke] Watermarking requires service providers to modify audio tracks to multiplex data onto them, while fingerprinting doesn't. This difference characterises each technology: watermarking can carry arbitrary information, whereas fingerprinting can only identify which audio segment you are listening to.

- There are three potential approaches:

- Sending audio data (e.g. 10 seconds) to a server for recognition (perhaps using Web Audio API and getUserMedia);

- An algorithmic function is available in the browser, sending the hashed data to a server for recognition (perhaps working with Web Audio WG);

- A module, perhaps similar to EME, is accessed from the browser to run a proprietary algorithm, sending the hashed data to a server for recognition (perhaps working with HTML WG Media TF).

- In future, we might want to reconsider the use of the term "hash" and replace it with e.g. "acoustic characteristic". For guidance, we could work with e.g. the Voice Browser WG and Web Audio WG.

- Existing fingerprinting services tend to use their own web APIs. Standardization of these APIs is something to be considered. One example of this is the Media Fragments URI spec.

- A potential extension of this is video fingerprinting if there is demand.

UC2-2 Audio Watermarking

Submitter(s): W3C (Daniel Davis)

Reviewer(s): Yosuke, Bin

Tracker Issue ID: ISSUE-NN

Category How would you categorize this issue?: 1. gap in existing specifications (=> IG to draft a proposal for an existing WG) 2. require new specification/WG (=> IG to draft a proposal for W3C Director) 3. can be addressed as part of a guidelines document to be produced by the IG (Currently unsure)

Description:

- The ability for a web page/app to detect what TV content is being viewed by identifying an inaudible code in its audio track. In other words, the user's device could hear tones within a media stream that have been added by the content creator. They are inaudible to humans and enable the listening application to identify the media content and maybe other metadata. For example, Alice is watching Cosmos on TV. She opens the Fox website on her tablet. The website listens to the TV's audio (she must grant permission first) and detects the program and the episode. It then redirects her to the page for the episode she is viewing, which has information to accompany the program (e.g. close-ups of planets, sky maps).

Motivation:

- This is being looked into by the TV industry. For example, in January 2014 ATSC invited proposals for audio and video watermarking technologies.

- It may be possible to achieve this with existing web standards but this should be investigated. There may also be a need for a dedicated, simplified standard.

Dependencies:

- Ongoing related standardization activities include getUserMedia for audio detection and maybe Web Audio API.

Gaps

- No in-browser watermark decoder/extractor.

- No in-browser watermark encoder/multiplexor.

- No access to existing proprietary watermarking modules.

Features For Declarative Interface:

- [Yosuke] Essential entities to achieve this use case are:

- audio source

- audio watermark algorithm

- [Yosuke] This feature should be asynchronous because you need to listen to the audio for some duration to decode watermarks.

- [Yosuke] The return value should be a string.
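The points above could be sketched as follows, assuming a hypothetical decoder that is handed everything heard so far and returns the payload string once it has enough audio. The names `detectWatermark`, `WatermarkDecoder` and `nextChunk` are illustrative only, and no real watermarking algorithm is implemented here.

```typescript
type AudioChunk = Float32Array;

// Hypothetical decoder: returns the payload once enough audio has been heard, else null.
type WatermarkDecoder = (heard: AudioChunk[]) => string | null;

// Keep listening until the decoder yields a payload, the source ends,
// or `maxChunks` chunks have been examined without success.
async function detectWatermark(
  nextChunk: () => Promise<AudioChunk | null>, // audio source; null means the source ended
  decode: WatermarkDecoder,                    // audio watermark algorithm
  maxChunks: number,
): Promise<string> {
  const heard: AudioChunk[] = [];
  while (heard.length < maxChunks) {
    const chunk = await nextChunk();
    if (chunk === null) break;
    heard.push(chunk);
    const payload = decode(heard);
    if (payload !== null) return payload;
  }
  throw new Error("no watermark detected");
}
```

The promise resolves with a plain string, leaving its interpretation (program ID, episode, URL) to the application.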

What needs to be standardized:

- To be investigated

Comments:

- [Yosuke] This use case is likely to be implementable with existing Web standards, but it has not yet been studied in detail from a web application viewpoint. It's worth elaborating the use case and clarifying how this type of use case can be implemented as a web app. We may find minor gaps and/or needs for high-level APIs.

- [Yosuke] Watermarking requires service providers to modify audio tracks to multiplex data onto them, while fingerprinting doesn't. This difference characterises each technology: watermarking can carry arbitrary information, whereas fingerprinting can only identify which audio segment you are listening to.

- Audio fingerprinting may be a higher priority than audio watermarking because of more demand in the industry.

- A potential extension of this is video watermarking if there is demand.

- Common uses of audio (or video) watermarking include:

- Detection and prevention of media piracy;

- Track multiplexing (e.g. text tracks) within an analogue audio/video stream (probably not an issue for the web).

- It would probably be helpful to communicate with the Web Audio WG and Voice Browser WG. There could potentially be methods for adding and extracting watermark data at an inaudible frequency, e.g. in the Web Audio API.

Media playback adjustment

(Removed by submitter - this feature already exists as part of the HTML spec. See playbackRate and demo)

UC2-3 Identical Media Stream Synchronization

Submitter(s): W3C (Daniel Davis)

Reviewer(s): Kaz, Clarke

Tracker Issue ID: ISSUE-NN

Category How would you categorize this issue?: 1. gap in existing specifications (=> IG to draft a proposal for an existing WG)

Description:

- The ability for two or more identical media streams to play in sync on separate devices. This can either be used to create an immersive experience or to allow multiple people to enjoy the same content simultaneously. For example, Alice and Bob are sitting on a train. They want to watch a streaming video over the web on their separate devices. They both go to the same web page with a unique ID. Once Alice clicks play, Bob's video should start playing immediately or, if there is a delay in playing, it should jump forward to the position of Alice's video.
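As a rough sketch of the "jump forward" behaviour in this example, the following TypeScript computes where a follower's playback should be, given a position report from the leader. It assumes both devices share a common clock and that reports reach the follower over some channel (e.g. Web Sockets). The names `PlaybackReport`, `expectedPosition` and `seekTarget`, and the 0.25-second tolerance, are illustrative assumptions.

```typescript
// Hypothetical message the leader (Alice) sends to followers (Bob).
interface PlaybackReport {
  positionSec: number;  // leader's media position when the report was sent
  sentAtMs: number;     // shared-clock time at which the report was sent
  playbackRate: number; // leader's playback rate
  paused: boolean;
}

// Where the leader's playback should be at shared-clock time `nowMs`.
function expectedPosition(report: PlaybackReport, nowMs: number): number {
  if (report.paused) return report.positionSec;
  const elapsedSec = (nowMs - report.sentAtMs) / 1000;
  return report.positionSec + elapsedSec * report.playbackRate;
}

// Position the follower should seek to, or null if it is already close enough.
function seekTarget(
  localPositionSec: number,
  report: PlaybackReport,
  nowMs: number,
  toleranceSec = 0.25,
): number | null {
  const target = expectedPosition(report, nowMs);
  return Math.abs(target - localPositionSec) > toleranceSec ? target : null;
}
```

In a page, a non-null result would typically be assigned to the media element's `currentTime`. Obtaining a sufficiently accurate shared clock is not covered by this sketch.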

Motivation:

- This is being requested by the TV industry.

- It may be possible to achieve this with existing web standards but this should be investigated.

Dependencies:

- Possible related standardization activities include:

- HTML WG, especially Media Task Force, and Web Sockets

- SMIL [Kaz]

- SCXML and MMI Architecture [Kaz]

- Media Resource In-band Tracks CG

- Second Screen Presentation API CG/WG

- Network Service Discovery API

Gaps

- No ability to use existing synchronization data within a stream.

- No way to accurately synchronize the presentation of media elements on multiple devices (with the same media source).

What needs to be standardized:

- To be investigated

Comments:

- There may be value in considering the Media Fragments URI specification.

- Other standards organizations (e.g. ATSC, DLNA, HbbTV, IPTV Forum Japan) may have ongoing work addressing this so we should ask them about their plans.

- One possibility is the creation of a Media Synchronization Community Group.

UC2-4 Related Media Stream Synchronization

Submitter(s): W3C (Daniel Davis)

Reviewer(s): Kaz, [Clarke]

Tracker Issue ID: ISSUE-NN

Category How would you categorize this issue?: 1. gap in existing specifications (=> IG to draft a proposal for an existing WG)

Description:

- The ability for two or more related media streams to play in sync. This could be between multiple media elements on a single web page or on separate devices. For example, Eve is watching a figure skating video. The video stream is available with multiple camera angles. She'd like to see an overall view of the event in one media element and a close-up of the skater in another media element. Both streams should be synchronised.

Motivation:

- This is being requested by the TV industry.

- It may be possible to achieve this with existing web standards but this should be investigated.

Dependencies:

- Possible related standardization activities are:

- HTML, especially Media Task Force, and Web Sockets.

- SMIL [Kaz]

- SCXML and MMI Architecture [Kaz]

- Media Resource In-band Tracks CG

- Second Screen Presentation API CG/WG

- Network Service Discovery API

Gaps

- No ability to use existing synchronization data within a stream.

- No way to accurately synchronize multiple media elements in a single document (with different but related media sources).

- No way to deal with timestamp differences in related media streams. Note that the timestamp formats may differ in the streams, e.g. if one is 24 fps and one is 60 fps.
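To make the timestamp gap concrete, here is a small TypeScript sketch that maps a frame index in one stream to the corresponding frame in a related stream with a different frame rate. It assumes both streams have constant frame rates and share the same time origin, which real streams may not. The function names are illustrative.

```typescript
// Presentation time, in seconds, of a frame index at a constant frame rate.
function frameToSeconds(frame: number, fps: number): number {
  return frame / fps;
}

// Frame being shown in the target stream at the moment the source stream
// shows `frame`, assuming both streams start at the same instant.
function mapFrame(frame: number, fromFps: number, toFps: number): number {
  return Math.floor((frame * toFps) / fromFps);
}
```

For example, frame 24 of a 24 fps stream and frame 60 of a 60 fps stream both fall at the one-second mark, so `mapFrame(24, 24, 60)` yields 60.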

What needs to be standardized:

- To be investigated

Comments:

- There may be value in considering the Media Fragments URI specification.

- One solution is to bind multiple video/audio elements. The web author can add e.g. a "sync" button and the actual synchronization method is handled by the browser, perhaps reading synchronization data if it exists within the stream.

- Other standards organizations (e.g. ATSC, DLNA, HbbTV, IPTV Forum Japan) may have ongoing work addressing this so we should ask them about their plans.

- One possibility is the creation of a Media Synchronization Community Group.

UC2-5 Triggered Interactive Overlay

Submitter(s): AT&T (Bin Hu)

Reviewer(s): Daniel, [Yosuke]

Tracker Issue ID: ISSUE-NN

Category How would you categorize this issue?: 1. gap in existing specifications (=> can be addressed in TV Control API CG)

Description:

- Prerequisite:

- The user has a hybrid TV-capable set-top box (STB)

- The user has subscribed to a hybrid TV service from the TV service provider

- A web application is controlling/managing the channels in the STB from hybrid sources

- The web application is capable of triggering overlays on content, including linear streams

- Use Case:

- John is watching the live streaming of a San Francisco Giants v. Detroit Tigers baseball game.

- An overlay is triggered to suggest a movie (The Bachelor) to John. For example, it may show short clips of the trailer in the overlay with a suggestion such as "Press OK to pause programming and watch the trailer, and get options for purchasing the movie".

- John sees the Giants are winning so he decides to watch the movie. He presses the OK button and watches the trailer.

- A second overlay is triggered on top of the trailer to suggest that John purchase the movie on demand.

- John purchases the movie and starts watching. The overlay is gone.

- Derived Requirements:

- The triggered interactive overlay must have the ability to bind to the video it is associated with, including live, time-shifted or linear commercial programming.

- The triggered interactive overlay must have the ability to be kept alive/displayed to the TV viewer within the bounds of the video content length it is associated with, i.e. the overlay goes away when content it is bound to ends or the user switches channel to different video content.

- The triggered interactive overlay must have the ability to be displayed to the TV viewer over key event(s) within the video content.

- The triggered interactive overlay must have the ability to display or hide based on channel, and/or time, and/or date, or other criteria.

- The triggered interactive overlay must have the ability to be kept alive/displayed based on industry-wide signaling technology.
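One way to read the binding and display/hide requirements above is as a visibility rule evaluated against the current playback state. The TypeScript below is a sketch under that assumption. `OverlayRule`, `PlaybackState` and `isOverlayVisible` are hypothetical names, and only channel and time criteria are modelled.

```typescript
// Hypothetical description of when an overlay may be shown.
interface OverlayRule {
  channel: string;  // channel whose content the overlay is bound to
  startSec: number; // content position at which the overlay appears
  endSec: number;   // content position at which the overlay disappears
}

// Hypothetical snapshot of what the viewer is currently watching.
interface PlaybackState {
  channel: string;
  positionSec: number;
  ended: boolean;
}

// The overlay is shown only while its bound content is playing on the bound
// channel and the playback position lies inside the rule's time window.
function isOverlayVisible(rule: OverlayRule, state: PlaybackState): boolean {
  if (state.ended || state.channel !== rule.channel) return false;
  return state.positionSec >= rule.startSec && state.positionSec < rule.endSec;
}
```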

Motivation:

- This is being requested by the TV industry. For example, this feature is already available in native platforms for TV and the TV industry requires this feature in the web platform as well.

- It may be possible to achieve this with ongoing effort in the TV Control API CG.

Dependencies:

- Possible related standardization activities are:

- HTML, including Media TF

- CSS

- SVG

- TV Control API CG

Gaps

- Extended media events to notify of things like section and scene changes (e.g. commercial break) and change of media source (e.g. change of channel).

- No standard to bind video content with in-browser objects.

What needs to be standardized:

- Triggering events and types, data objects/supplemental information of trigger. Others to be investigated.

Comments:

- [Daniel] Simple overlays are already possible with CSS and JavaScript. The dispatchEvent() function can be used to trigger events. There may still be gaps, though, which need to be looked into.

- [Yosuke] I think we need to develop a generic event model that notifies changes of parameters in transport streams, such as 'channelchange'.

- It seems there is a need for two-way communication between the overlay and the related media element. For messages/triggers from the overlay to the video (e.g. change channel), this should be addressed by the TV Control API CG. For messages/triggers from the video to the overlay, maybe there is a need for more video events. For example, `channelchange` or `sourcechange`, as well as in-video events (e.g. commercial break) such as `scenechange` or `sectionchange`. The triggers within streams already exist for broadcast but differ in each region. There is currently no way to listen for these triggers as DOM events.

- The overlay mechanism can be achieved in a variety of ways:

- The HTML5 `<dialog>` element;

- A standard `div` styled with CSS;

- Use of SVG, which has layering capabilities;

- This could also be achieved with Web Components.
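The video-to-overlay direction discussed above could look roughly like this in TypeScript, using the standard `EventTarget`, `Event` and `dispatchEvent()` interfaces. The `channelchange` event and the `ChannelChangeEvent` class are hypothetical, since no such event exists on media elements today. A plain `EventTarget` stands in for the video element so the sketch runs outside a browser.

```typescript
// Hypothetical event carrying the newly selected channel.
class ChannelChangeEvent extends Event {
  readonly channel: string;

  constructor(channel: string) {
    super("channelchange");
    this.channel = channel;
  }
}

// Stand-in for a media element; a real page would listen on the <video> element.
const media = new EventTarget();

// Stand-in for the overlay; a real page would toggle a <dialog> or a styled div.
const overlay = { visible: true };

media.addEventListener("channelchange", (event) => {
  // Hide the overlay once the content it was bound to is no longer showing.
  if (event instanceof ChannelChangeEvent) {
    overlay.visible = false;
  }
});

// Here the application dispatches the event itself; the gap described above
// is that in-stream triggers are not surfaced as DOM events by the platform.
media.dispatchEvent(new ChannelChangeEvent("news-1"));
```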

UC2-6 Clean Audio

Submitter(s): W3C (Daniel Davis on behalf of Janina Sajka)

Reviewer(s): Yosuke, Kaz

Tracker Issue ID: ISSUE-NN

Category How would you categorize this issue?: 1. gap in existing specifications (=> IG to draft a proposal for an existing WG) 2. require new specification/WG (=> IG to draft a proposal for W3C Director) 3. can be addressed as part of a guidelines document to be produced by the IG (Currently unsure)

Description:

- Bob enjoys going to the movies but as he's grown older he has lost more and more of his hearing. Understanding movie dialog amidst all the other background sounds has become more and more difficult for him. He could rely on captions but he'd really like to use his hearing whenever possible. He discovered that some TV programs provide an alternative audio track called "Clean Audio" and he now has an app on his mobile phone that allows him to select this alternate audio. His hearing therapist has also equalized the audio in a way that emphasizes the frequencies where his hearing works well, and de-emphasizes those where it doesn't work well. Now, he's able to listen to this alternative audio track on his mobile, on a headphone attached to his mobile, or even on his living room speakers by sending this alternative audio to his TV's speakers. He's also discovered that Clean Audio is available for certain movies at the cinema and that he can select that alternative track for listening over headphones connected to his mobile phone using the same app that he uses at home.

(Summary of full description on public-html-a11y mailing list.)

Motivation:

- Proposed by the Media Accessibility sub-group.

Dependencies:

- Ongoing related standardization activities include getUserMedia for audio detection and maybe Web Audio API.

- Network Service Discovery API

- Second Screen Presentation API CG/WG

Gaps

- No way to accurately synchronize multiple media elements in a single document (with different but related media sources).

- No way to equalize content-protected (e.g. with EME) audio tracks.

What needs to be standardized:

- To be investigated

Comments:

- [Yosuke] If clean audio tracks are provided through HTML5 audio elements, you can select them through existing HTML5 media interfaces.

- [Yosuke] If clean audio tracks are provided as in-band resources of containers, you can select them through http://dev.w3.org/html5/html-sourcing-inband-tracks/, which is still an unofficial and on-going effort in the W3C Media Resource In-band Tracks CG.

- [Yosuke] You can equalise audio tracks through Web Audio API. https://dvcs.w3.org/hg/audio/raw-file/tip/webaudio/specification.html

- [Yosuke] However, you will very likely be unable to equalise audio tracks that are delivered through Encrypted Media Extensions. https://dvcs.w3.org/hg/html-media/raw-file/tip/encrypted-media/encrypted-media.html

- [Yosuke] I think EME doesn't allow UAs to implement this through JavaScript and Web Audio API.

- If the clean audio track is provided as an audio element to be synchronized with a separate video element, this is dependent on the Related Media Stream Synchronization use case above.
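For audio that is not content-protected, the equalisation mentioned in the comments above might be sketched as a chain of peaking filters, one per frequency band. `createBiquadFilter()`, the `"peaking"` filter type, and the `frequency` and `gain` parameters are part of the Web Audio API. The `Band` shape, the `buildEqualizer` helper and the small `*Like` interfaces are assumptions made so the sketch type-checks without a browser.

```typescript
// Minimal structural views of the Web Audio objects used below.
interface ParamLike { value: number; }
interface NodeLike { connect(destination: any): any; }
interface PeakingFilterLike extends NodeLike {
  type: string;
  frequency: ParamLike;
  gain: ParamLike;
}
interface ContextLike { createBiquadFilter(): PeakingFilterLike; }

// Hypothetical per-listener setting: boost or cut around one frequency.
interface Band { frequencyHz: number; gainDb: number; }

// Chain one peaking filter per band after `source` and return the last node,
// which the caller connects to the audio output.
function buildEqualizer(ctx: ContextLike, source: NodeLike, bands: Band[]): NodeLike {
  let tail: NodeLike = source;
  for (const band of bands) {
    const filter = ctx.createBiquadFilter();
    filter.type = "peaking";
    filter.frequency.value = band.frequencyHz;
    filter.gain.value = band.gainDb;
    tail.connect(filter);
    tail = filter;
  }
  return tail;
}
```

In a browser, `ctx` would be an `AudioContext`, `source` could come from `ctx.createMediaElementSource(audioElement)`, and the returned node would be connected to `ctx.destination`.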

Other Topics

Accessibility Extension for 1st Round Use Cases

Submitter(s): W3C (Kaz Ashimura & HTML Accessibility TF)

Tracker Issue ID: ISSUE-NN

Category How would you categorize this issue?: 1. gap in existing specifications

Description: [UC1] [UC2] [UC3] [UC4] [UC5] [UC6] [UC7] [UC8] [UC9] [UC10] [UC11] [UC12]

Motivation:

- TV services should be accessible.

Dependencies:

- Possible related standardization activities are HTML and TV Control API.

What needs to be standardized:

- TBD

Comments:


Web and TV Accessibility Initiative

- Define '5 Star Web and TV accessibility'

- Similar to '5 Star Open Data' for Linked Open Data.

- Help improve awareness of Web and TV stakeholders

- Create a brief handbook or checklist of Web Media Accessibility Guideline for TV services

- Help Web and TV stakeholders use the guideline to make their service better

- Polish Web and TV use cases and APIs from media accessibility viewpoint

- Polish the 1st-round use cases [WIP]

- Polish second round use cases