Linguistic Linked Data for Content Analytics: a Roadmap

Executive Summary

As data is being created at an ever-increasing pace, more and more organisations will exploit insights generated from the data to optimize existing processes, improve decision making, and modify existing business models or even create radically new ones.

In fact, data has been regarded as the oil of the new economy. Data linking and content analytics are thus the key technologies to refine this oil so that it can actually drive the engine of many applications. Refinement consists of: i) homogenization, ii) linking, iii) semantic analysis, and iv) repurposing.

Homogenization consists in making sure that the data is described using agreed terminologies and standardized vocabularies or ontologies in machine-readable formats. Thus, data becomes interoperable and easily exploitable, as the semantic normalization avoids conceptual mismatches. Refinement also includes linking data across datasets and sites (resulting in linked data), which is crucial to make data exploitable as a whole rather than as isolated, unrelated datasets. This also includes linking unstructured datasets that originate from different natural languages. In order to be exploited meaningfully, data needs to be semantically analyzed. This holds in particular for unstructured data, e.g. textual data from which the key messages and facts need to be extracted and expressed with respect to standardized vocabularies. Structuring and linking unstructured data such as text is referred to as linguistic linked data (LLD). Finally, repurposing consists of transforming data so that it can be used for a different purpose than the one it was originally created for. Repurposing can include merging and mashing up datasets, format transformations, and modifications to the data to fit different audiences (experts vs. novices), speakers of different languages, etc.
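The following minimal Python sketch makes the first two refinement steps more concrete. It homogenizes records from two datasets, one English and one German, by mapping their local topic labels onto a shared vocabulary. It then links records that refer to the same concept. The vocabulary, the `example.org` URIs, the record fields and the function names are all hypothetical. A real deployment would rely on agreed terminologies or ontologies and a proper RDF toolchain rather than a Python dictionary.

```python
# Hypothetical shared vocabulary: normalized local labels -> concept URIs.
SHARED_VOCAB = {
    "vat": "http://example.org/vocab/ValueAddedTax",
    "value added tax": "http://example.org/vocab/ValueAddedTax",
    "mehrwertsteuer": "http://example.org/vocab/ValueAddedTax",
    "income tax": "http://example.org/vocab/IncomeTax",
    "einkommensteuer": "http://example.org/vocab/IncomeTax",
}


def normalize(label: str) -> str:
    """Lower-case a label and collapse hyphens and whitespace."""
    return " ".join(label.lower().replace("-", " ").split())


def homogenize(records: list, field: str) -> list:
    """Return copies of the records with a shared 'concept' URI (None if unknown)."""
    return [dict(r, concept=SHARED_VOCAB.get(normalize(r[field]))) for r in records]


def link(left: list, right: list) -> list:
    """Pair record ids from two homogenized datasets that share a concept."""
    by_concept = {}
    for r in right:
        if r["concept"] is not None:
            by_concept.setdefault(r["concept"], []).append(r["id"])
    # Records without a concept look up None, which is never a key, so they get no links.
    return [(l["id"], rid) for l in left for rid in by_concept.get(l["concept"], [])]


english = homogenize([{"id": "en-1", "topic": "Value-Added Tax"},
                      {"id": "en-2", "topic": "Income tax"}], "topic")
german = homogenize([{"id": "de-7", "thema": "Mehrwertsteuer"},
                     {"id": "de-9", "thema": "Kfz-Steuer"}], "thema")

print(link(english, german))  # [('en-1', 'de-7')]
```

The German "Kfz-Steuer" record stays unlinked because the shared vocabulary has no entry for it. This illustrates why coverage of the agreed terminology directly limits how much data can be linked.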

This document presents a roadmap to create the infrastructure that makes all of the above possible. It focuses on three overarching application fields and needs: i) Global Customer Engagement Use Cases, ii) Public Sector and Civil Society Use Cases, and iii) Linguistic Linked Data Life Cycle and Linguistic Linked Data Value Chain.

With respect to Global Customer Engagement Use Cases, the challenge for the future will be to create ecosystems in which data from different sources and modalities come together and form the basis for developing omnichannel experiences for customers. This requires linking data across modalities, as well as techniques for repurposing and composing information items into stories and narratives that are more amenable and accessible to users. Consistency of message across channels, languages and audiences will be important and will be crucially supported by (linguistic) linked data technology. We observe a shift from marketing activities characterized by push and active recommendations to a new paradigm of transparent customer engagement, in which engagement becomes a commodity that recognizes and fulfills customer needs in real time. Supporting the required matchmaking will call for richer linked semantic descriptions of users, products, contexts and intentions.
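The sketch below illustrates what matchmaking over linked semantic descriptions could look like. It describes a user, a usage context and several products as sets of concept identifiers from a shared vocabulary, and ranks the products by Jaccard overlap. The `ex:` concept identifiers, the `Description` class and the choice of Jaccard similarity are assumptions made for this example. They are not a method proposed in this roadmap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Description:
    """A user, context or product described by concepts from a shared vocabulary."""
    ident: str
    concepts: frozenset


def jaccard(a: frozenset, b: frozenset) -> float:
    """Size of the intersection over size of the union (0.0 if both sets are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def match(user: Description, context: Description, products: list, top_k: int = 2) -> list:
    """Rank products by concept overlap with the user's interests plus the current context."""
    needs = user.concepts | context.concepts
    scored = [(jaccard(needs, p.concepts), p.ident) for p in products]
    # Highest score first; ties broken alphabetically by identifier.
    return sorted(scored, key=lambda s: (-s[0], s[1]))[:top_k]


user = Description("user-1", frozenset({"ex:Hiking", "ex:Photography"}))
context = Description("ctx-1", frozenset({"ex:RainyWeather"}))
products = [
    Description("jacket", frozenset({"ex:Hiking", "ex:RainyWeather", "ex:Outerwear"})),
    Description("camera", frozenset({"ex:Photography", "ex:Electronics"})),
    Description("cookbook", frozenset({"ex:Cooking"})),
]
print(match(user, context, products))  # [(0.5, 'jacket'), (0.25, 'camera')]
```

Adding the context (rainy weather) to the user's interests is what lifts the jacket above the camera. Richer descriptions of contexts and intentions therefore change the ranking, not just the user profile.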

In the area of Public Sector and Civil Society Use Cases, linked data can make an important contribution to the creation of a single digital market in which national barriers are overcome. A crucial ingredient for such a market is the development of ontologies and terminologies that harmonize the concepts used in different countries and jurisdictions. These provide a basis for interoperability and for a new generation of (public) services implemented across countries. This is in the spirit of the vision behind the Connecting Europe Facility (CEF). New robust methodologies for aligning conceptualizations that originate from different cultural, national and linguistic contexts will be needed, as well as techniques for collaborative, cross-border ontology engineering. We also foresee that in the near future we will need a better understanding of the domains in which cross-lingual and cross-border communication is urgently needed. This understanding is a basis to develop shared vocabularies that support language-independent communication in key domains.
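The following sketch shows a deliberately simple, purely lexical baseline for aligning concepts from two jurisdictions. Labels are normalized and compared with Python's `difflib.SequenceMatcher`, and the best-scoring target concept is proposed if it reaches a threshold. The concept identifiers, labels and the 0.85 threshold are hypothetical. Such string matching only works for labels in the same language; the cross-lingual and cross-cultural alignment called for above would need considerably more robust techniques.

```python
from difflib import SequenceMatcher


def normalize(label: str) -> str:
    """Lower-case a label and collapse hyphens and whitespace."""
    return " ".join(label.lower().replace("-", " ").split())


def similarity(a: str, b: str) -> float:
    """String similarity in [0, 1] between two normalized labels."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def align(source: dict, target: dict, threshold: float = 0.85) -> list:
    """Propose (source concept, target concept, score) for each source concept
    whose best-matching target label reaches the threshold."""
    proposals = []
    for s_id, s_labels in source.items():
        best_score, best_id = max(
            ((similarity(a, b), t_id)
             for t_id, t_labels in target.items() for a in s_labels for b in t_labels),
            default=(0.0, None),
        )
        if best_score >= threshold:
            proposals.append((s_id, best_id, round(best_score, 2)))
    return proposals


# Hypothetical concepts from two jurisdictions' (English-language) vocabularies.
jurisdiction_a = {"a:VAT": ["Value-Added Tax", "VAT"], "a:CorpTax": ["Corporation Tax"]}
jurisdiction_b = {"b:VAT": ["Value Added Tax"], "b:IncomeTax": ["Income Tax"]}
print(align(jurisdiction_a, jurisdiction_b))  # [('a:VAT', 'b:VAT', 1.0)]
```

"Corporation Tax" receives no proposal because no label in the second vocabulary is similar enough. In practice, such unmatched concepts are the ones that need human, collaborative ontology engineering.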

As regards Linguistic Linked Data Life Cycle and Value Network Requirements, the future will have to bring an ecosystem in which linguistic resources are easily exploitable by content analytics providers and workflows. Provenance, licensing and metadata must be clearly exposed to support trustworthy exploitation of resources. Future efforts will need to establish principles for a market in which linguistic linked data, both open and closed, can be traded, developing new business models that also include non-monetary transactions. The goal will be to create an ecosystem in which both linguistic resources and the services building upon them are: i) easily discoverable, ii) trustworthy and certified, iii) comparable and benchmarkable, iv) easily composable and exchangeable, v) multilingual, and vi) scalable. This involves two challenges: i) bringing the stakeholders together to establish principles for such a market, and ii) developing a technical infrastructure, including standardized APIs and vocabularies, that supports plug-and-play principles.
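The sketch below illustrates what clearly exposed provenance, licensing and metadata could enable. It models a small catalogue of linguistic resources and filters it by language and acceptable license, skipping records without provenance information and, optionally, closed resources. The `ResourceRecord` fields, resource identifiers and license strings are hypothetical. They are only loosely inspired by metadata vocabularies such as Dublin Core or DCAT and do not correspond to any existing catalogue API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceRecord:
    """Hypothetical metadata record for a linguistic linked data resource."""
    identifier: str
    title: str
    languages: frozenset
    license: str      # license identifier, e.g. "CC-BY-4.0"
    provenance: str   # who produced the resource and how; empty if unknown
    is_open: bool = True


def discover(catalogue: list, language: str, allowed_licenses: set,
             open_only: bool = False) -> list:
    """Identifiers of resources covering `language` under an acceptable license,
    skipping resources whose provenance is not documented."""
    return [r.identifier for r in catalogue
            if language in r.languages
            and r.license in allowed_licenses
            and r.provenance
            and (r.is_open or not open_only)]


catalogue = [
    ResourceRecord("lex-1", "Bilingual lexicon", frozenset({"en", "es"}), "CC-BY-4.0",
                   "Manually curated by a university lab"),
    ResourceRecord("term-2", "Legal termbase", frozenset({"es", "fr"}), "proprietary",
                   "Compiled by a translation vendor", is_open=False),
    ResourceRecord("senti-3", "Sentiment lexicon", frozenset({"es"}), "CC0-1.0", ""),
]
print(discover(catalogue, "es", {"CC-BY-4.0", "CC0-1.0"}))  # ['lex-1']
```

The sentiment lexicon is filtered out only because its provenance is missing. This is the kind of gap that machine-readable metadata makes visible to automated workflows.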

The first draft of this document was created by LIDER project partners (FP7 CSA, reference number 610782 in the topic ICT-2013.4.1: Content analytics and language technologies). The analysis builds on the findings and forecasts of a number of existing public reports on the topic. It aggregates and analyzes the needs and forecasts expressed in these documents as a basis to identify application areas in content and big data analytics where linked data could represent a key enabling technology. Building on the insights about application areas where the potential of linked data technology, in particular linguistic linked data technology, is regarded as very high, we extrapolate these findings into an R&D roadmap. The roadmap can support research organizations, enterprises and funding agencies in decision making and in prioritizing R&D investments. A further goal of this roadmap is to define an R&D agenda at the intersection of the language resource, natural language processing and Big Data communities.

Background and Context

This roadmap is a product of four roadmapping events that the LIDER project has organized (see also [here]):

- 1st LIDER Roadmapping Workshop in Athens, 21st of March, 2014, collocated with the European Data Forum (EDF)

- 2nd LIDER Roadmapping Workshop in Madrid, 8th-9th of May, 2014, collocated with the Multilingual Web Workshop (MLW)

- 3rd LIDER Roadmapping Workshop in Dublin, 14th of June, 2014, collocated with Localization World Dublin

- 4th LIDER Roadmapping Workshop in Leipzig, 2nd of September, 2014, collocated with SEMANTICS

Further, the LIDER project has interacted with relevant stakeholders in the context of a number of community groups including:

- W3C Community Group on Linked Data & Language Technologies (LD4LT), with 79 participants

- W3C Community Group on Best Practices for Multilingual Linked Open Data (BMLOD), with 79 participants

- W3C Community Group on Ontology Lexica (ontolex), with 90 participants

Thus, this roadmap is based on the needs and predictions of close to 100 relevant stakeholders from both academia and industry, which have been gathered in the above mentioned events and community groups.

Introduction

Content is growing at an impressive, exponential rate. Exabytes of new data are created every single day (Pepper et al. 2014). In fact, data has been recently referred to as the oil (Palmer et al. 2006) of the new economy, where the new economy is understood as a new way of organizing and managing economic activity based on the new opportunities that the Internet provided for businesses (Alexander 1983).

There are several indicators that clearly corroborate that the exponential growth of data will continue:

- Volume: The data streams already generated today are huge. Wal-Mart alone handles more than one million customer transactions every hour, feeding databases estimated to hold more than 2.5 petabytes, i.e. 167 times the information contained in all the books of the Library of Congress.

- Growth Rate: 90% of the data available today has been generated in the last two years alone (SINTEF, 2014). The International Data Corporation (IDC) estimates that the amount of digital data created, replicated or consumed will grow by a factor of 300 between 2005 and 2020, from 130 exabytes to 40,000 exabytes, and will roughly double every two years until 2020. By 2020, there will thus be over 40 trillion gigabytes of digital data, corresponding to 5,200 gigabytes per person on earth (Gantz and Reinsel 2012).

- Internet of Everything: Cisco estimates that currently less than 1 percent of physical objects are connected to IP networks. However, this is estimated to change radically to up to 50 billion devices connected to the Internet by 2020, corresponding to between 6 and 7 devices per person on the planet. These 50 billion devices will constantly generate data at a scale without precedent.
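Taking the figures above at face value, a few lines of arithmetic show how they relate to each other. The per-person figure implies the world population assumed for 2020, which in turn gives the number of connected devices per person. Doubling every two years corresponds to a 32-fold increase per decade. Decimal units (1 PB = 10^15 bytes) are an assumption of this sketch.

```python
# Decimal units are assumed: 1 PB = 10**15 bytes, 1 TB = 10**12 bytes.
PB, TB = 10**15, 10**12


def growth_factor(years: float, doubling_period: float) -> float:
    """Compound growth factor for a quantity that doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


# Wal-Mart: 2.5 PB equals 167 Libraries of Congress -> size of one "Library of Congress".
library_of_congress_tb = 2.5 * PB / 167 / TB
# IDC: 40 trillion GB in 2020 at 5,200 GB per person -> implied world population.
implied_population = 40e12 / 5200
# Cisco: 50 billion connected devices spread over that population.
devices_per_person = 50e9 / implied_population

print(f"{library_of_congress_tb:.1f} TB per Library of Congress")  # 15.0 TB
print(f"{implied_population / 1e9:.2f} billion people")             # 7.69 billion
print(f"{devices_per_person:.1f} devices per person")               # 6.5
print(f"{growth_factor(10, 2):.0f}x growth per decade")             # 32x
```

The roughly 6.5 devices per person obtained this way agrees with Cisco's estimate of between 6 and 7 devices per person.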

Content analytics, i.e. the ability to process and generate insights from existing content, plays and will continue to play a crucial role for enterprises and organizations that seek to generate value from data, e.g. in order to inform decision and policy making.

A basic distinction can be made between structured and unstructured data. Structured data is essentially data that follows a given pre-defined schema or data model, such as data in standard relational or non-relational (including NoSQL) databases, or data expressed in Web languages such as the Resource Description Framework (RDF). Unstructured data does not follow a predefined schema and comprises texts, blogs, pictures, and sensor data.
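The difference between structured and unstructured data can be illustrated with RDF. In the minimal sketch below, a relational row is mapped to RDF statements serialized in the N-Triples syntax. By contrast, a free-text review can only be stored as an opaque string literal until content analytics extracts facts from it. The `example.org` namespace, the `reviewText` property and the sample data are hypothetical; `rdf:type` and `rdfs:label` are the standard W3C terms. A production system would use an RDF library rather than hand-built strings.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"
EX = "http://example.org/"  # hypothetical namespace


def iri(value: str) -> str:
    """N-Triples IRI reference."""
    return f"<{value}>"


def literal(text: str, lang: str) -> str:
    """Language-tagged N-Triples literal with backslash, quote and newline escaping."""
    escaped = (text.replace("\\", "\\\\").replace('"', '\\"')
                   .replace("\n", "\\n").replace("\r", "\\r"))
    return f'"{escaped}"@{lang}'


def statement(s: str, p: str, o: str) -> str:
    """One N-Triples line: subject, predicate, object, terminated by ' .'."""
    return f"{s} {p} {o} ."


# Structured: a row with a known schema maps directly onto typed statements.
row = {"id": 42, "name": "Acme Phone X", "category": "Smartphone"}
product = iri(f"{EX}product/{row['id']}")
structured = [
    statement(product, iri(RDF_TYPE), iri(f"{EX}{row['category']}")),
    statement(product, iri(RDFS_LABEL), literal(row["name"], "en")),
]

# Unstructured: the review's facts (e.g. about the battery) stay hidden in one literal.
review = 'The battery is "great", but the screen scratches easily.'
unstructured = statement(iri(f"{EX}review/1"), iri(f"{EX}reviewText"), literal(review, "en"))

print("\n".join(structured + [unstructured]))
```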

Current estimates suggest that only half a percent of all data is being analyzed to generate insights (Gantz and Reinsel 2012). Furthermore, the vast majority of existing data are unstructured and machine-generated (Canalys 2012), with data automatically generated by mobile devices and sensors constituting the majority.

As corroborated by many analysts, substantial investments in technology, partnerships and research are required to reach an ecosystem comprising many players and technological solutions. This ecosystem must provide the infrastructure, expertise and human resources needed for organizations to deploy content analytics solutions effectively and at large scale. Such deployments should generate relevant insights that support policy and decision making, or even define completely new business models in a data-driven economy.

Assuming that such investments need to be and will be made, this report explores the role that Linked Data and Semantic Technologies can and will play in the field of content analytics. It derives a set of recommendations for organizations, funders and researchers on which technologies to invest in, as a basis for prioritizing their R&D investments and optimizing their mid- and long-term strategies and roadmaps.

The main sources this report draws upon are the following:

- Forrester Research Report: TechRadar™ for AD&D Pros: Digital Customer Experience Technologies, QS 2013 (Yakkundi et al. 2013)

- iVIEW Report by the International Data Corporation (IDC): The Digital Universe in 2020: Big Data, Bigger Digital Shadows, and Biggest Growth in the Far East (Gantz and Reinsel 2012)

- The Global Information Technology Report 2014, published by the World Economic Forum

- Gartner Hype Cycle Special Report 2014

- Text Analytics 2014: User Perspectives on Solutions and Providers by Alta Plana Corporation (Grimes 2014)

- Rethinking Personal Data: A New Lens for Strengthening Trust, published by the World Economic Forum (2014)

- The LT-Innovate Innovation Manifesto, published by LT Innovate (2014)

- Strategic Research Agenda for Multilingual Europe 2020, published by the META Technology Council (2012)

- Results of the Roadmapping Workshops organized by the LIDER project

- IEEE CS 2022 Report

- Call for Papers from the Linked Data and Semantic Web communities

The report is structured as follows. In the next section, General IT Trends, we discuss general IT trends as identified by Gartner in order to position this roadmap with respect to major trends in the field of IT. Section Needs in Content Analytics summarizes the current needs in content analytics, focusing on the survey carried out by Alta Plana (see Section Survey on Text Analytics Needs). Further, in Section Application Development & Delivery (AD&D), we analyse the needs of the application development and delivery industry as identified by Forrester's TechRadar™ report (Yakkundi et al. 2013). Finally, we summarize the main outcomes of the LIDER roadmapping workshop that the LIDER consortium organized as part of the MLODE workshop, collocated with the SEMANTICS conference (see Section 4th LIDER Roadmapping Workshop).

Section The Funders' Perspective summarizes the main topics funded by the European Commission in the context of Horizon 2020. We discuss in particular the vision and objectives behind the EC's Connecting Europe Facility (CEF) program and how linked data technology can contribute to this vision.

The actual roadmap is presented in Section Roadmap, which identifies the most promising application areas and future directions for linked data, and in particular linguistic linked data, in content analytics. Section Conclusion concludes and discusses ways forward, providing some recommendations for funders and researchers.

General IT Trends

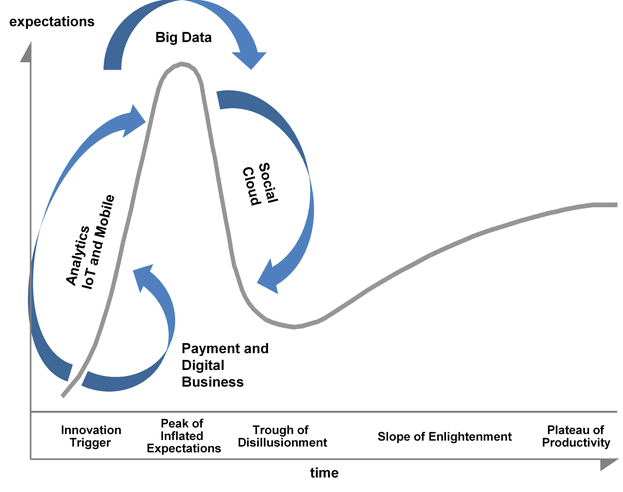

As a basis to identify general IT trends, we consider Gartner's well-known Hype Cycle from 2014, visualized in Figure Gartner Hype Cycle 2014. The hype cycle focuses on newly emerging technologies as they move into mainstream adoption.

The hype cycle of 2014 covers the following seven technologies:

- Mobile: According to Gartner's analysis, mobile technology is becoming the main vehicle for business applications, allowing organizations to reach more users than via conventional channels. Due to the pervasiveness of mobile devices among customers, mobile technology is creating disruptive opportunities for business. Gartner thus places mobile technology among the technologies moving rapidly towards the peak.

- Internet of Things (IoT): The Internet of Things (IoT) is also considered a technology moving rapidly towards the peak. Given that many companies are defining a strategic agenda for digital business, it is only logical that technologies operating in the physical world will go digital and become part of the network. This is expected to have a large, even transformational, impact on digital business models and production processes, e.g. in manufacturing.

- Analytics: As more and more data is generated, e.g. through devices connected to the network as part of the Internet of Things (see above), analytics over these data streams will be an essential ingredient. Analytics technologies are thus regarded as moving into the peak. Gartner foresees that the delivery of analytics as a service (business analytics PaaS) will be a major and important trend. A further trend can be observed in the convergence of information technology (IT) and operational technology (OT), corresponding to the growing use of IT solutions in OT vendors' products. The primary driver in bringing the two worlds together is the need to use analytics from diverse data to improve decision making across the supply chain.

- Big Data: Gartner places Big Data as moving over the peak. Big Data refers to the cost-effective processing of high-volume, high-velocity and highly varied information assets to generate enhanced insights and thus support decision making. Interest in and demand for Big Data solutions remain undiminished. According to Gartner, however, Big Data is moving over the peak as solutions converge on a set of promising approaches. The movement of Big Data over the peak, together with the movement of analytics towards the peak, suggests that the hype and interest in information and data processing is fostering the adoption of these technologies in the value chain.

- Cloud: According to Gartner, cloud technology is generally moving towards the trough. Key topics in the area of cloud technology that are still climbing towards the peak are cloud access security, cloud application development services, and cloud integration platform services. Topics moving over the peak towards the trough include cloud computing, private cloud computing, and hybrid cloud. Hybrid cloud refers to the coordinated use of cloud services across isolation and provider boundaries among public, private and community service providers, or between internal and external cloud services. Mobile cloud, referring to the use of cloud services for information sharing across devices, has moved quickly over the peak into the trough, indicating progress towards maturity and mainstream adoption. Personal cloud services, defined as an individual's collection of digital content, are moving off the peak. They are mainly used for storage and synchronization and provide no significant further added value.

- Social: Social technologies are regarded as having shifted significantly from the peak into the trough. This is mainly because users and vendors have realized that generating business value from social technologies is more challenging than expected. On the other hand, the move into the trough also indicates that some social technologies are becoming mainstream, in particular in the area of digital business. It is foreseen that social technologies, services and disciplines will soon become mainstream within digital business. Peer-to-peer communities, i.e. virtual environments fostering collaboration among people and organizations outside of the enterprise, are expected to move towards the plateau. Technology for analyzing social networks has moved into the trough for two reasons: collecting relevant and reliable data is difficult, and turning knowledge into action has proven difficult. Some technologies, including social gaming, social TV and social profiles, have fallen deep into the trough.

- Payment and Digital Business: Technologies for integrating payment methods with loyalty programs have moved from the start of the innovation trigger phase to its midpoint. Gartner places digital business right below the peak of inflated expectations, due to the high number of organizations claiming to have a digital strategy aligned with their overall business strategy.

Summary

Gartner sees social and cloud moving through the trough towards the plateau of productivity, which shows that these technologies are maturing and becoming part of the IT value chain. Gartner expects that by 2016 cloud and social will be so pervasive that 60% of organizations will have adopted them. Cloud and social enable organizations to run operations more efficiently, drive new capabilities, serve customers and partners effectively, and respond more rapidly to disruptive threats and opportunities in the market. This impact is expected to remain key for organizations. The focus in the coming years will shift towards understanding how social and cloud-based solutions can be applied to generate added value. In any case, any IT roadmap such as this one will have to consider the prominent role that these two technologies will continue to play. The third relevant technology, Big Data, is regarded by Gartner as moving beyond the peak of inflated expectations, with early signs of technological convergence and adoption. Big Data is a key trend in IT that is likely to lead soon to mature solutions, generating valuable insights for enterprises that understand how to use these techniques effectively. Along these lines, IBM has predicted that most companies will have a dedicated data scientist role, overseeing how the organization exploits data to optimize its business (Teerlink et al. 2014).

Needs in Content Analytics

This section analyses and summarizes the current main needs in content analytics industries and applications. It summarizes the main results of a survey carried out in 2014 by Seth Grimes of Alta Plana Corporation to understand current needs in content analytics. We also reflect on the main results of the LIDER roadmapping workshop organized by the LIDER consortium in the context of the MLODE workshop in Leipzig. Finally, we summarize the main challenges identified by Forrester in their TechRadar™ report (Yakkundi et al. 2013).

Survey on Text Analytics Needs

This section analyzes the top needs in the field of content analytics, focusing in particular on text analytics. The summary of needs is based on the report Text Analytics 2014: User Perspectives on Solutions and Providers by Seth Grimes of Alta Plana (Grimes 2014). The survey described in this report gathered responses from 200 participants from the content analytics sector. It targeted current and prospective users of text analytics technologies, integrators, consultants and managers, as well as executive staff in those roles. Researchers and developers applying text analytics technologies were also invited to participate.

The four technology-related growth drivers for text analytics solutions identified by the survey are the following:

- Open Source: Open source is expected to lower barriers to technology adoption both for researchers and for more sophisticated users.

- API Economy: The availability of hosted, on-demand, API-based web services is expected to lower entry barriers and to provide enormous flexibility for adopters.

- Data availability: The availability of data is crucial and increasing.

- Synthesis: Providing support for summarizing and synthesizing datasets, and providing answers to specific information needs is crucial.

The five key market drivers identified, i.e. application fields that have the potential to generate business value, are the following:

- Customer Interactions: including support for customer service and customer experience by deploying text analytics on traditional channels such as contact centers, but increasingly also on social media, with the goal of optimizing service and fostering engagement.

- Omnichannel solutions: comprising the analysis and aggregation of data from different channels, e.g. survey, social media, news, warranty, chat and contact center data.

- Consumer and market insights: consisting in the deployment of text analytics solutions for market research and the generation of insights about market/customer needs, trends, and opinions. An observable trend is that social data is regarded as increasingly reliable and as a trusted source that can complement classical survey data and deliver complementary insights.

- Search and search-based applications: providing new search functionalities that go beyond enterprise search or online information retrieval to provide a platform for high-value applications, including advertising, e-discovery and compliance, business intelligence and customer self-service.

- Health care and clinical medicine: providing new analytic solutions, e.g. supporting diagnosis or analysis of claims. These new solutions are expected to drive the market and go beyond mere text mining of scientific literature.

The key data sources relevant for text analytics solutions are:

- blogs and other social media (mentioned by 61% of respondents)

- news articles (42%)

- comments on blogs and articles (38%)

- online forums (36%)

Clearly, User Generated Content (UGC) scores top and can be assumed to continue to play a key role in the near future.

The top business applications identified are the following (listing only those mentioned by at least 13% of the respondents):

- voice of the customer (mentioned by 39% of respondents)

- brand/product/reputation management (38%)

- competitive intelligence (33%)

- search, information access or question answering (29%)

- Customer Relationship Management (CRM) (27%)

- Content management or publishing (25%)

- Online commerce (16%)

- Life sciences or clinical medicine (15%)

- E-discovery (14%)

- Insurance, risk management, or fraud detection (13%)

Summarizing the written comments provided by the respondents to the survey, we can identify the following trends, requirements and issues:

- Customization: A major issue is the required effort to customize existing solutions to a particular domain and application. A lack of domain-specific models has been identified.

- Usability: The usability of current text analytics solutions is regarded as generally low, with APIs that are difficult to use. Deploying text analytics is regarded as requiring in-house expertise in NLP and data mining, which is clearly a bottleneck.

- Limitations: The accuracy and depth of analysis of current solutions are quite limited (see also Cieliebak et al. 2013).

- Cost/Effort: Selecting appropriate vendors and solutions, integrating them, analysing results and generating insights valuable enough to obtain a return on investment (ROI) requires considerable effort and cost. The learning curve for deploying content analytics solutions is generally too steep.

- Integration: Integration into existing workflows or systems, in particular existing Business Intelligence (BI) solutions, is regarded as difficult and requires a major effort.

The following information types are highly relevant in content analytics:

- topics and themes (66% of respondents)

- sentiments, opinions, attitudes, emotions, perceptions, intent (54%)

- relationships and/or facts (47%)

- named entities (56%)

- concepts (51%)

- document metadata (47%)

- other entities (34%)

- semantic annotation (31%)

- events (33%)

Important properties of content analytics solutions are:

- ability to generate categories or taxonomies (65% of respondents)

- ability to use specialized dictionaries, taxonomies, ontologies or extraction rules (54%)

- broad information extraction capability (53%)

- document classification (53%)

- deep sentiment, emotion, opinion, intent extraction (45%)

- low cost (44%)

- real time capabilities (43%)

- sentiment scoring (41%)

- support for multiple languages (40%)

- open source (37%)

- predictive-analytics integration (36%)

- big data capabilities (33%)

- ability to create custom workflows (33%)

- Business Intelligence (BI) integration (32%)

- sector adaptation (30%)

- support for data fusion (28%)

- hosted or Web service (on-demand API) (25%)

- media monitoring/analysis interface (22%)

Participants also mentioned the need to process content in languages other than English. Top languages (other than English) mentioned by at least 10% of respondents:

- Spanish (38% of respondents)

- French (36%)

- German (34%)

- Italian (18%)

- Chinese (16%)

- Portuguese (13%)

- Arabic (10%)

Summary

The main applications for textual content analytics are in the fields of i) analyzing the voice of the customer in the context of Customer Relationship Management (CRM), ii) brand, product and reputation management, iii) technology surveying and competitive intelligence, iv) content management and publishing, and v) search, information access and question answering. The main source of information is clearly user-generated content (blogs, social media, online forums etc.). While the main tasks required are rather conventional (topic extraction, document classification, entity extraction, relation and event extraction etc.), there is a clear need for analytics solutions that i) are tuned to the needs of particular domains, and ii) are able to generate and incorporate semantic domain-specific knowledge in the form of taxonomies, terminologies etc. to support domain customization.

The main issues identified with current text analytics technologies are: i) lack of standard and flexible APIs, ii) high effort and resources needed for customization and domain adaptation, iii) the level of expertise required to deploy and integrate solutions into existing workflows, and iv) lack of accuracy and depth of analysis (see also Cieliebak et al. 2013). Multilinguality is a further very important feature. Language coverage needs to be extended to the most important languages, namely Spanish, French, German, Italian, Chinese, Portuguese and Arabic. Future generations of text analytics solutions will thus have to provide easy-to-use, standard and flexible APIs, increase accuracy and depth of analysis, and be able to process these languages. The ability to integrate additional background knowledge to make domain adaptation easier will also be a key capability. Finally, solutions need to be easy to integrate into existing workflows, processes and tool chains.
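The following minimal sketch shows how domain knowledge in the form of a multilingual lexicon can drive text analytics. Each concept carries surface forms in several languages, and a dictionary-based annotator links mentions in text to concept URIs. Extending coverage to a new domain or language then amounts to editing the lexicon rather than retraining a model. The concepts, surface forms and URIs are hypothetical. Real systems would also have to handle morphology, ambiguity and overlapping mentions, which this sketch ignores.

```python
import re

# Hypothetical domain lexicon: concept URI -> surface forms per language.
LEXICON = {
    "http://example.org/concept/BatteryLife": {
        "en": ["battery life", "battery"],
        "es": ["batería", "autonomía"],
        "de": ["akkulaufzeit", "akku"],
    },
    "http://example.org/concept/Display": {
        "en": ["screen", "display"],
        "es": ["pantalla"],
        "de": ["bildschirm", "display"],
    },
}


def build_patterns(lexicon: dict, lang: str) -> dict:
    """One case-insensitive, word-bounded regex per concept; longer forms are tried first."""
    patterns = {}
    for concept, forms in lexicon.items():
        alternatives = sorted(forms.get(lang, []), key=len, reverse=True)
        if alternatives:
            body = "|".join(map(re.escape, alternatives))
            patterns[concept] = re.compile(rf"\b(?:{body})\b", re.IGNORECASE)
    return patterns


def annotate(text: str, lang: str, lexicon: dict = LEXICON) -> list:
    """Return (start, end, surface form, concept URI) tuples sorted by position."""
    hits = []
    for concept, pattern in build_patterns(lexicon, lang).items():
        hits.extend((m.start(), m.end(), m.group(0), concept) for m in pattern.finditer(text))
    return sorted(hits)


for lang, text in [("en", "The battery life is great, but the screen scratches."),
                   ("es", "La batería dura poco, pero la pantalla es excelente.")]:
    for start, end, surface, concept in annotate(text, lang):
        print(lang, surface, "->", concept.rsplit("/", 1)[1])
```

Both sentences are mapped to the same two language-independent concepts. This allows results from different languages to be aggregated.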

Application Development & Delivery (AD&D)

This section analyzes the main findings of the TechRadar™ report by Forrester (Yakkundi et al. 2013). Many digital companies face the challenge of investing in and maintaining an ecosystem of technologies that supports enjoyable, innovative and contextualized digital customer experiences. Forrester defines the sum of these digital customer experience technologies as:

A solution that enables the management, delivery and measurement of dynamic, targeted, consistent content, offers, products, and service interaction across digitally enabled customer touch points. (emphasis is ours)

The relevant technologies identified in the TechRadar™ report are the following:

- A/B and multivariate testing: A/B testing compares two variants of a page or message. Multivariate testing (MVT) allows marketers and digital experience professionals to test combinations of several components of a digital experience, such as site design, site usability or campaign landing pages, against a chosen success measure.

- Digital Asset Management: Digital Asset Management (DAM) is software used to manage the creation, production, management, distribution and retention of rich media content including audio, video, graphical images and compound documents.

- eCommerce: eCommerce solutions provide companies with the capability to connect with, market to, sell to and serve B2B and B2C customers across many digital touch points.

- Email marketing platforms: These tools help to build, execute and monitor email advertising campaigns.

- Mobile analytics: These analytics tools support the collection, analysis and measurement of mobile app traffic and user data to optimize mobile experience.

- Online video platforms: These are solutions that focus on distribution of video content.

- Optimization: Optimization refers to a set of tools that leverage exploratory, descriptive and predictive statistical techniques to drive relevant content, interactions and offerings to end users.

- Portals: Portals are software platforms that aggregate content, data, and applications and deliver them in a rich, personalized environment that supports digital customer experience.

- Product content management: Product Content Management (PCM) focuses on enabling an organization to identify or derive trusted product data and content across heterogeneous data environments.

- Recommendation engines: These tools help to recommend products, text or other content to visitors on different types of websites with the goal of delivering the most relevant and useful experience to the customer.

- Site search: Tools offering search capabilities over content from different systems in a portal or web site.

- Social depth platforms: Tools that integrate social content and experiences into marketing sites.

- Web analytics: Tools supporting the collection of usage data in web channels.

- Web content management: Web content management (WCM) software supports organizations in creating websites and online experiences as well as in creating, managing and publishing digital content across websites or multiple channels.

The holy grail of effectiveness identified by Forrester is the creation of contextualized, personalized multi-channel experiences for customers. The following key success factors can be identified:

- Multi-channel experience: multiple channels can be combined to target customers with a consistent message, also in different languages and modalities, by integrating Web content management with product management and eCommerce solutions.

- Contextualization: Portals should be extended to manage and deliver relevant, tailored experiences including personal or individual information on a per-user basis.

- Holistic recommenders: rather than only performing product recommendations, one should create holistic recommender systems that provide pervasive support to users.

- Breaking down silos: customer data, product data and social media data need to be brought together to deliver contextual cross-channel experiences.

- Adaptability & Control: marketing experts and line-of-business people need more control over digital channels in order to react dynamically to arising customer needs.

Summary

A key challenge in the area of application development and delivery consists in combining multiple channels to target customers effectively across channels. First, this requires ensuring that consistent messages are delivered across channels, which can be achieved by incorporating terminological and lexical resources for the particular domain and application. A further challenge is to foster the convergence of different subsystems (e.g. content management, product management, customer management) into a rich and seamless semantic ecosystem that can support rich customer experiences as well as contextualized, personalized and situated media delivery and recommendations. Another key challenge consists in supporting agility in the capture, integration and provision of content, allowing marketing experts to react dynamically to changing customer and market needs.

4th LIDER Roadmapping Workshop

The LIDER project organized a roadmapping workshop in Leipzig on September 2nd, 2014. This workshop was part of the Multilingual Linked Open Data for Enterprises (MLODE) workshop and collocated with the SEMANTICS conference. The program of the roadmapping workshop can be found online. The workshop featured contributions from more than 10 companies and users from the area of content analytics, who shared with the workshop participants their views on current challenges and perspectives for the exploitation of Linked Data in content analytics tasks.

The main themes identified at the roadmapping workshop were the following six (ordered by importance according to the vote of the workshop audience): i) Resource Creation and Sharing, ii) Open Linked Data Publishing and Consumption, iii) Multilingual Semantic Content Analytics and Search, iv) The Human Factor, v) Standardization of APIs, and vi) Big Text and Data Analytics.

Resource Creation and Sharing

One of the recurring topics of the roadmapping workshop was the need for, and lack of, appropriate linguistic resources to facilitate the training of content analytics solutions and to adapt them to specific domains. Industry participants stressed that business-friendly licenses that allow for the exploitation of data in commercial contexts are urgently needed. The resources most frequently mentioned as important were: terminologies, lexicographic resources, POS-tagged datasets (especially for user-generated content, e.g. tweets), treebanks, datasets annotated with sentiment, NER-annotated corpora, as well as corpora in which named entities are linked to external knowledge bases or resources. In general, it was observed that while resources of medium quality are sufficient for most applications, some applications in which quality is crucial need highly curated and verified resources. Lexica are a good example of this, with a wide spectrum ranging from largely automatically created resources (e.g. BabelNet or automatically generated WordNets) through to highly curated and manually validated lexical resources such as Princeton WordNet, KDictionaries etc. An important question is how technology can support the quality vs. cost tradeoff in the creation of such resources, and how to define human-machine collaborative workflows that increase the quality of resources while minimizing the amount of work required from experts. The community of content analytics solution vendors clearly expressed the need for relevant resources for micro-domains, i.e. highly domain-specific resources (lexica, terminologies, annotated corpora, ontologies of intentions) that would support domain adaptation and could be widely reused across vendors.
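To make the idea of a human-machine collaborative workflow more concrete, the following minimal Python sketch shows one common pattern, assuming that an automatic extraction step attaches a confidence score to each candidate lexicon entry: high-confidence candidates are accepted automatically, very low-confidence ones are discarded, and only the uncertain middle band is routed to experts. The class `CandidateEntry`, the function `triage` and the threshold values are hypothetical illustrations, not part of any existing tool.

```python
from dataclasses import dataclass


@dataclass
class CandidateEntry:
    """A lexicon entry proposed by a hypothetical automatic extraction step."""
    lemma: str
    pos: str
    confidence: float  # score assigned by the (hypothetical) extractor, in [0, 1]


def triage(candidates, accept_threshold=0.9, reject_threshold=0.3):
    """Split candidates into auto-accepted, expert-review and auto-rejected lists.

    Only the middle band is shown to human experts, which is where their
    effort is assumed to add the most value per unit of cost.
    """
    accepted, review, rejected = [], [], []
    for entry in candidates:
        if entry.confidence >= accept_threshold:
            accepted.append(entry)
        elif entry.confidence < reject_threshold:
            rejected.append(entry)
        else:
            review.append(entry)
    return accepted, review, rejected


if __name__ == "__main__":
    candidates = [
        CandidateEntry("bank", "noun", 0.97),
        CandidateEntry("banks", "verb", 0.55),
        CandidateEntry("bnak", "noun", 0.12),
    ]
    accepted, review, rejected = triage(candidates)
    print(len(accepted), len(review), len(rejected))  # 1 1 1
```

Raising `accept_threshold` shifts the tradeoff towards quality: more candidates end up in the expert review queue, but fewer unchecked entries reach the published resource.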

Open Linked Data Publishing and Consumption

With respect to publishing data as open linked data, a nuanced perspective emerged during the LIDER roadmapping workshop. While high-quality, open and reusable data was clearly seen as a benefit (see the idea of an open commons mentioned by Alex Pentland (Pentland 2014)), participants also noted that the publication of linked data should follow the principle of economy and efficiency; in particular, the cost of publishing, quality control etc. should not exceed the added value provided by the resource. Moreover, many internal datasets of organizations or companies cannot be published because doing so would disclose private information about employees or even customers. Many datasets are also specific to the particular structures and processes implemented in a certain organization and, when taken out of this context, do not provide any added value. In the future, more experience is needed to understand the cost and value of publishing a certain dataset as linked data, as are metrics to monitor and quantify its impact and reuse. Methodologies that simplify the process of publishing data as linked data are also needed. In general, participants felt that the Linked Data effort should focus more on linking rather than only on publishing isolated datasets on the Web. It was also suggested that, instead of publishing large multi-purpose datasets, the focus in the future should be on publishing small and reusable building blocks that serve a specific but frequently recurring purpose. An important target would be to link concepts across languages, effectively creating a multilingual linked knowledge infrastructure that can be exploited by applications that need to provide multilingual support and cross language borders.
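Linking concepts across languages can be illustrated with a small sketch, loosely modelled on the OntoLex-Lemon idea that lexical entries in different languages refer to a shared ontology concept. The IRIs, the entries and the helper functions `build_index` and `cross_lingual_lookup` are hypothetical; a real infrastructure would store such links as RDF rather than as Python tuples.

```python
from collections import defaultdict

# Hypothetical lexical entries: (language, written form, concept IRI).
# Each entry "refers to" a language-independent concept identified by an IRI.
ENTRIES = [
    ("en", "car", "http://example.org/concept/Automobile"),
    ("de", "Auto", "http://example.org/concept/Automobile"),
    ("es", "coche", "http://example.org/concept/Automobile"),
    ("en", "invoice", "http://example.org/concept/Invoice"),
    ("de", "Rechnung", "http://example.org/concept/Invoice"),
]


def build_index(entries):
    """Group written forms by concept IRI and then by language."""
    index = defaultdict(lambda: defaultdict(list))
    for lang, form, concept in entries:
        index[concept][lang].append(form)
    return index


def cross_lingual_lookup(index, form, source_lang, target_lang):
    """Return the target-language forms that share a concept with `form`."""
    results = []
    for by_lang in index.values():
        # .get() does not trigger the defaultdict factory, so lookups never add keys
        if form in by_lang.get(source_lang, []):
            results.extend(by_lang.get(target_lang, []))
    return results


index = build_index(ENTRIES)
print(cross_lingual_lookup(index, "car", "en", "de"))  # ['Auto']
```

Because every language points to the same concept IRI, adding a new language only requires new entries, not new pairwise translation links.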

Multilingual Semantic Content Analytics and Search

The need for robust and accurate text analytics solutions is still pressing. As identified by the META-NET activities, the support for most European languages in terms of linguistic resources and natural language processing (NLP) tools is low. This situation was confirmed by the participants of the LIDER roadmapping workshop. Robust and accurate text analytics solutions are needed at different levels: POS tagging, named entity recognition (NER), named entity linking, information extraction, sentiment analysis, etc. In general, participants clearly expressed the need to go deeper and to have text analytics solutions that extract deeper semantics, including pragmatic aspects such as the intention of a customer. Solutions that can perform cross-lingual normalization of content, terms and named entities are urgently needed, whereby translation is only one component of the required technology infrastructure. Semantic search (e.g. via keywords or question answering) at the level of meaning rather than at the string level is still one of the major needs in the market, as mentioned by several participants of the roadmapping workshop.

The Human Factor

The human factor was identified as an important aspect for the adoption and proliferation of content analytics solutions. In many cases, the expectations of potential end users lead to disappointment with the current state of the art of content analytics solutions. Instead of focusing on and reporting performance figures of single tools, experience needs to be gathered on which performance levels are needed for which type of application, and end users need to be made aware that tools are far from perfect, but that their performance can nevertheless be sufficient for a particular purpose or application. In general, it was felt that the community of content analytics vendors, developers and researchers needs to invest in raising awareness of the benefits, limitations, success stories and pitfalls of content analytics solutions, and to devise effective ways of communicating these aspects to potential customers and users.

Standardization of APIs

An important bottleneck in the field of content analytics is that each provider offers its own proprietary API, which hinders the easy exchange of solutions and the composition of different solutions into more complex workflows. This makes the comparison of different solutions very difficult and effectively leads to vendor lock-in: once a company has adopted a certain provider, it is likely to stay with that vendor, given the high costs of adaptation (see Section Survey on Needs in Content Analytics). The participants of the workshop expressed the desire to work towards the standardization of APIs in content analytics. First, this would make it easier to integrate different solutions into one complex product and would also support joint partnerships between different providers of content analytics solutions. Second, from the point of view of the customer, it would support cross-vendor comparison of technology and thus more informed decisions. Finally, standardized APIs would also facilitate benchmarking and quality assessment of tools and services through automated tests or evaluations, run both by the research community and as part of an open ecosystem of resources and tools.
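The following Python sketch illustrates why a shared API helps, under the assumption of a hypothetical vendor-neutral interface `EntityRecognizer` and annotation type `Entity` (not modelled on any particular existing standard). Two hypothetical providers with different proprietary output formats are wrapped in adapters; after that, the same code can compare them against a gold standard and compose them into one pipeline.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol


@dataclass(frozen=True)
class Entity:
    """Vendor-neutral annotation (hypothetical schema)."""
    start: int  # character offset, inclusive
    end: int    # character offset, exclusive
    label: str  # entity type, e.g. "ORG" or "LOC"


class EntityRecognizer(Protocol):
    """Hypothetical shared interface that every provider would implement."""
    def recognize(self, text: str) -> List[Entity]: ...


class VendorA:
    """Adapter for a hypothetical provider returning (start, length, type) tuples."""
    def _proprietary_call(self, text):
        # Stand-in for a remote API call: it only ever tags "Leipzig".
        i = text.find("Leipzig")
        return [(i, len("Leipzig"), "LOC")] if i >= 0 else []

    def recognize(self, text: str) -> List[Entity]:
        return [Entity(s, s + n, t) for s, n, t in self._proprietary_call(text)]


class VendorB:
    """Adapter for a hypothetical provider returning dicts with begin/end keys."""
    def _proprietary_call(self, text):
        # Stand-in for a remote API call: it only ever tags "LIDER".
        i = text.find("LIDER")
        return [{"begin": i, "end": i + 5, "kind": "ORG"}] if i >= 0 else []

    def recognize(self, text: str) -> List[Entity]:
        return [Entity(d["begin"], d["end"], d["kind"]) for d in self._proprietary_call(text)]


def compare(recognizers: Dict[str, EntityRecognizer], text: str, gold: set) -> Dict[str, float]:
    """Score every provider on the same input with exact-match F1."""
    scores = {}
    for name, rec in recognizers.items():
        predicted = set(rec.recognize(text))
        tp = len(predicted & gold)
        precision = tp / len(predicted) if predicted else 0.0
        recall = tp / len(gold) if gold else 0.0
        f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
        scores[name] = round(f1, 2)
    return scores


def combine(recognizers: List[EntityRecognizer], text: str) -> List[Entity]:
    """Compose several providers into one by taking the union of their outputs."""
    merged = set()
    for rec in recognizers:
        merged.update(rec.recognize(text))
    return sorted(merged, key=lambda e: (e.start, e.end))


text = "LIDER met in Leipzig."
gold = {Entity(0, 5, "ORG"), Entity(13, 20, "LOC")}
print(compare({"A": VendorA(), "B": VendorB()}, text, gold))  # {'A': 0.67, 'B': 0.67}
print(combine([VendorA(), VendorB()], text))
```

Once both providers speak the same interface, benchmarking and composition need no provider-specific code; only the thin adapters differ.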

Big text & data analytics

Surprisingly, the topic of big data analytics was given the lowest priority by the participants of the LIDER roadmapping workshop. One explanation is that the Big Data hype has already generated some disappointment, in line with Gartner's prediction that Big Data will move down from the peak of inflated expectations (see Section General IT Trends). The need to process large amounts of data clearly exists and is likely to move into mainstream adoption, but the hype seems to be decreasing. Developing solutions that can scale to large streams of big data comprising unstructured data is still a crucial challenge. The integration of knowledge across languages and formats at a larger scale is an important challenge to address.

Summary

The 4th LIDER Roadmapping Workshop was organized in Leipzig on September 2nd, 2014 as part of the MLODE workshop and collocated with the SEMANTICS conference. Several companies and users from the content analytics area joined the Roadmapping Workshop, and lively discussions took place among and with the participants on current challenges in the exploitation of Linked Data in content analytics tasks.

Six main themes were identified during the workshop, ranging from Resource Creation and Sharing (where participants pointed out the need for, and lack of, appropriate linguistic resources for content analytics solutions, possibly domain-specific ones) and Open Linked Data Publishing (with a call for economy and efficiency in the publication of linked data), to the need for support for resource-poor European languages and the need to raise awareness about the benefits and limitations of content analytics solutions.

Connecting Europe Facility (CEF)

The goal of the Connecting Europe Facility (CEF) program of the European Commission is to support the development of "high-performing, sustainable and efficiently interconnected trans-European networks in the fields of transport, energy and digital services" (European Commission, 2012). In this way, the CEF is expected to contribute to growth, jobs and competitiveness in Europe. A budget of 50 billion EUR is foreseen for this between 2014 and 2020.

The stated strategic objective of the CEF is to contribute to the development of a Digital Single Market and to effectively eliminate market fragmentation, making sure that cross-border public services are broadly available and accessible, enabling millions of citizens and companies to connect to the single market.

A further goal is to contribute to the transformation of Europe into a knowledge-intensive, low-carbon and highly competitive economy. The CEF is thus investing in the creation of modern and flexible energy, transport and digital infrastructure networks. The preferred instruments for this are public-private partnerships funded through innovative financial instruments that make investment in infrastructure projects attractive.

With respect to the development of digital service infrastructures, the CEF Digital Service Infrastructures (DSIs) are expected to act as platforms on which innovative applications can be created and deployed, and to facilitate the mobility of citizens and cross-border work. To overcome service fragmentation and the lack of interoperability caused by national borders, the goal is to develop pan-European services that interoperate across borders and de-fragment the market, in particular in the areas of eGovernment, eProcurement and eHealth.

Funding via Horizon 2020 and the CEF can contribute to reducing fragmentation between content analytics solutions. By addressing the needs for content analytics described in Section Survey on Needs in Content Analytics, it can help to make data and services interoperable across national borders. Linked Data technologies, in particular linguistic and possibly multilingual linked data technologies, can be expected to play a major role here. They can contribute to the interoperability of services, e.g. by providing a means to align different conceptualizations or ontologies, thereby improving data exchange and semantic interoperability.

For more information on the CEF digital agenda, see the European Commission's CEF web pages.

Linked Data in Research

In order to identify relevant topics on the current research agenda, we have analyzed the 2014 calls for papers of four major conferences in the fields of Semantic Web and Linked Data technologies: the World Wide Web Conference (WWW 2014), which featured a dedicated Semantic Web track, the International Semantic Web Conference (ISWC 2014), the European Semantic Web Conference (ESWC 2014), and SEMANTICS 2014.

Besides looking at the major conferences, we have also identified a number of workshops specifically dedicated to Linked Data issues, with particular emphasis on linguistic linked data:

- Linked Data on the Web Workshop (LDOW2014), collocated with WWW 2014

- Workshop on Linked Data in Linguistics (LDL-2014), collocated with LREC 2014

- Workshop on Semantic Web Enterprise Adoption and Best Practice (WASABI), collocated with EKAW 2014

- Workshop on Linked Data Quality, collocated with SEMANTICS 2014

- 1st Workshop on Linked Data for Knowledge Discovery, collocated with ECML/PKDD 2014

- Workshop on Linked Open Data 2014: Improving SME Competitiveness and Generating New Value, collocated with SEMANTICS 2014

World Wide Web Conference (WWW 2014)

The relevant topics for WWW 2014 in the Semantic Web track were the following:

- Infrastructure: Storing, querying, searching, serving Semantic Web data

- Linking, joining, integrating, aligning/reconciling Semantic Web data and ontologies from different sources

- Tools for annotation, visualization, interacting with Semantic Web data, building ontologies

- Knowledge Representation: Ontologies, representation languages, reasoning in the Semantic Web

- Applications that produce or consume Semantic Web data, including those in the enterprise, education, science, medicine, mobile, web search, social networks, etc.

- Extracting Semantic Web data from web pages and other sources

- Methodologies for the engineering of Semantic Web applications, including uses of Semantic Web formats and data in the development process itself.

European Semantic Web Conference (ESWC 2014)

ESWC 2014 included the following topics in the Open Linked Data Track:

- Linked Open Data extraction and publication

- Storage, publication and validation of data, links, and embedded LOD

- Linked data integration/fusion/consolidation

- Database, IR, NLP and AI technologies for LOD

- Creation and management of LOD vocabularies

- Linked Open Data consumption

- Linked data applications (e.g., eGovernment, eEnvironment, or eHealth)

- Dataset description and discovery

- Searching, querying, and reasoning in LOD

- Analyzing, mining and visualization of LOD

- Usage of LOD and social interactions with LOD

- Dynamics of LOD

- Architecture and infrastructure

- Provenance, privacy, and rights management; relationship between LOD and linked closed data

- Assessing data quality and data trustworthiness

- Scalability issues of Linked Open Data

International Semantic Web Conference (ISWC 2014)

The call for papers for ISWC 2014 included the following topics:

- Management of Semantic Web data and Linked Data

- Languages, tools, and methodologies for representing and managing Semantic Web data

- Database, IR, NLP and AI technologies for the Semantic Web

- Search, query, integration, and analysis on the Semantic Web

- Robust and scalable knowledge management and reasoning on the Web

- Cleaning, assurance, and provenance of Semantic Web data, services, and processes

- Information Extraction from unstructured data

- Supporting multilinguality in the Semantic Web

- User Interfaces and interacting with Semantic Web data and Linked Data

- Geospatial Semantic Web

- Semantic Sensor networks

- Query and inference over data streams

- Ontology-based data access

- Semantic technologies for mobile platforms

- Ontology engineering and ontology patterns for the Semantic Web

- Ontology modularity, mapping, merging, and alignment

- Social networks and processes on the Semantic Web

- Representing and reasoning about trust, privacy, and security

- Information visualization of Semantic Web data and Linked Data

- Personalized access to Semantic Web data and applications of Semantic Web technologies

- Semantic Web and Linked Data for Cloud environments

Linked Data on the Web Workshop, collocated with WWW 2014

The Linked Data on the Web Workshop (LDOW), collocated with the World Wide Web Conference in 2014, called for the following topics:

- Mining the Web of Linked Data
  - large-scale derivation of implicit knowledge from the Web of Linked Data
  - using the Web of Linked Data as background knowledge in data mining

- Integrating Large Numbers of Linked Data Sources
  - linking algorithms and heuristics, identity resolution
  - schema matching and clustering
  - data fusion
  - evaluation of linking, schema matching and data fusion methods

- Quality Evaluation, Provenance Tracking and Licensing
  - evaluating quality and trustworthiness of Linked Data
  - profiling and change tracking of Linked Data sources
  - tracking provenance and usage of Linked Data
  - licensing issues in Linked Data publishing

- Linked Data Publishing, Authoring and Consumption
  - mapping and publication of various data sources as Linked Data
  - authoring and curation of Linked Data
  - Linked Data consumption interfaces and interaction paradigms
  - visualization and exploration of Linked Data

- Linked Data Applications and Business Models
  - application showcases including browsers and search engines
  - marketplaces, aggregators and indexes for Linked Data
  - business models for Linked Data publishing and consumption
  - Linked Data as pay-as-you-go data integration technology within corporate contexts
  - Linked Data applications for life-sciences, digital humanities, social sciences etc.

Workshop on Linked Data in Linguistics (LDL-2014)

The Workshop on Linked Data in Linguistics (LDL-2014), collocated with LREC 2014, mentioned the following topics in their call for papers:

- Use cases and project proposals for the creation, maintenance and publication of linguistic data collections that are linked with other resources

- Modelling linguistic data and metadata with OWL and/or RDF

- Ontologies for linguistic data and metadata collections

- Applications of such data, other ontologies or linked data from any subdiscipline of linguistics

- Descriptions of data sets, ideally following Linked Data principles

- Legal and social aspects of Linguistic Linked Open Data

Workshop on Semantic Web Enterprise Adoption and Best Practice (WASABI)

The Workshop on Semantic Web Enterprise Adoption and Best Practice (WASABI), collocated with EKAW 2014, had a special focus on Linked Data Lifecycle Management and called for the following topics:

- Surveys or case studies on Semantic Web technology in enterprise systems

- Comparative studies on the evolution of Semantic Web adoption

- Semantic systems and architectures of methodologies for industrial challenges

- Semantic Web based implementations and design patterns for enterprise systems

- Enterprise platforms using Semantic Web technology as part of the workflow

- Architectural overviews for Semantic Web systems

- Design patterns for semantic technology architectures and algorithms

- System development methods as applied to semantic technologies

- Semantic toolkits for enterprise applications

- Surveys on identified best practices based on Semantic Web technology

- Linked Data integration and change management

Workshop on Linked Data Quality

The Workshop on Linked Data Quality, collocated with SEMANTICS 2014, called for papers describing approaches targeting Linked Data in the following areas:

- quality assessment

- inconsistency detection

- cleansing, error correction, refinement

- versioning

- reputation and trustworthiness of web resources

- quality of ontologies

- quality modelling vocabularies

- frameworks for testing and evaluation

- data validators

- best practices for Linked Data management

- user experience

- empirical studies

1st Workshop on Linked Data for Knowledge Discovery (LD4KD)

The 1st Workshop on Linked Data for Knowledge Discovery (LD4KD), collocated with ECML/PKDD, included the following topics of interest in the call for papers:

- Linked Data for data pre-processing: cleaning, sorting, filtering or enrichment

- Linked Data applied to Machine Learning

- Linked Data for pattern extraction and behaviour detection

- Linked Data for pattern interpretation, visualization or optimization

- Reasoning with patterns and Linked Data

- Reasoning on and extracting knowledge from Linked Data

- Linked Data mining

- Link prediction or links discovery using KDD

- Graph mining in Linked Data

- Interacting with Linked Data for Knowledge Discovery

Linked Open Data 2014: Improving SME Competitiveness and Generating New Value

The Workshop on Linked Open Data: Improving SME Competitiveness and Generating New Value, collocated with SEMANTICS 2014, included the following topics in their call for papers:

- Linked Data for SMEs

- Managing the Data Life cycle in SME environments

- Analytics for Improving Business Knowledge using Linked Data

- Transforming Data to Open and Linked formats

- Business Collaboration through Data Sharing and Alignment

Summary

Research in Linked Data is increasingly focusing on issues related to the consumption and application of Linked Data, also in commercial and SME contexts. Key aspects related to the consumption and exploitation of linked data include i) description and discovery of linked data, ii) validation and quality assurance, and iii) provenance, privacy and rights management. The research community is thus increasingly working on these issues and will eventually contribute to a linked data ecosystem in which data trust, provenance and licensing information is taken into account and appropriately represented, and in which infrastructure is available to ensure low-cost publishing, discovery, validation and reuse of linked datasets. Further, current efforts consider how SMEs can exploit Linked Data technology as part of their workflows and in enterprise applications.

Roadmap

We structure the roadmap into three key application areas: i) Global Customer Engagement Use Cases; ii) Public Sector and Civil Society Use Cases; iii) Linguistic Linked Data Life Cycle and Linguistic Linked Data Value Chain.

The goal of the LIDER project is to identify actions and developments that need to happen in order for Linguistic Linked Data (LLD) technologies to impact the three fields identified above. We frame our predictions by indicating:

- Horizon: distinguishing between 1-2 years, 3-5 years, and 5-10 years from the publication of this report. The 1-2 year horizon essentially corresponds to initiatives that have already been started (e.g. European research projects funded under FP7 or started in 2014 under Horizon 2020), the 3-5 year horizon to initiatives that are planned but not yet started, e.g. as foreseen in the Horizon 2020 work program, and the 5-10 year horizon to activities that are not yet foreseen in any plan or work program but are expected to emerge.

- Main Actors: identifying the main actors involved in the initiative and who will push it forward. We distinguish between the following actors: academia, industry, SMEs, as well as partnerships between these actors.

- Means: instruments by which the initiative can be realized: collaborative research projects, cooperation between academia and industry, standardization, industrial pilots, mainstream adoption, academic proof-of-concept, etc.

Global Customer Engagement Use Cases

Media Publishing & Content Management

As identified by Forrester (Yakkundi et al. 2013), one of the key challenges in media publishing and content management for the years to come is the convergence of technologies to deliver a homogeneous multichannel experience to customers, or, as the Forrester report states explicitly: "The dynamic nature of digital experiences requires technology that enables business users to manage, measure, and optimize what happens on web, mobile, and social channels". The key objective here is to combine different technologies to provide a contextualized digital experience to users, bringing together content, product and customer relationship management in one ecosystem. Portals are expected to continue playing an important role in delivering a personalized environment that supports digital customer experiences. The important issue is to achieve a certain degree of agility at the content level, so that new (external) data resources can be integrated quickly in response to new needs and interests of customers.

The key impact avenues for linked data based content analytics in media publishing and content management are thus the following:

- relying on Linked Data technologies to create unified information spaces of linked datasets, bringing together datasets that have so far been isolated (e.g. product content, product data, customer data, social data etc.) to contribute to a unified experience (so-called vertical clouds)

- exploit terminologies and ontologies available as linguistic linked data to achieve terminological consistency across channels

- agile import of datasets into portals to support changing customer needs and interests

- support for localization of content across channels by exploiting multilingual linguistic linked data resources
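Terminological consistency across channels can be checked automatically once a terminology distinguishes preferred from alternative labels, as SKOS does with `skos:prefLabel` and `skos:altLabel`. The sketch below assumes a hypothetical in-memory terminology and flags alternative labels found in channel content together with the preferred term; the concept IRI, the labels and the function `check_consistency` are illustrative only.

```python
import re

# Hypothetical terminology, loosely following SKOS: one preferred label and
# some discouraged alternative labels per concept and language.
TERMINOLOGY = {
    "http://example.org/term/SmartphoneApp": {
        "en": {"pref": "mobile app", "alt": ["smartphone application", "phone app"]},
        "de": {"pref": "Mobil-App", "alt": ["Handy-App"]},
    },
}


def check_consistency(text, lang, terminology=TERMINOLOGY):
    """Return (found text, preferred label, concept IRI) for each alternative label in `text`."""
    findings = []
    for concept, labels in terminology.items():
        entry = labels.get(lang)
        if entry is None:  # no labels for this language
            continue
        for alt in entry["alt"]:
            # Whole-word, case-insensitive match of the alternative label
            pattern = r"\b" + re.escape(alt) + r"\b"
            for match in re.finditer(pattern, text, flags=re.IGNORECASE):
                findings.append((match.group(0), entry["pref"], concept))
    return findings


# The same message as published on two (hypothetical) channels.
web = "Download our mobile app today."
email = "Download our Phone App today."
print(check_consistency(web, "en"))    # []
print(check_consistency(email, "en"))
```

Because labels are grouped per language under one concept, the same check can be run on localized content for every channel.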

We expect that, in the future, linked data technologies for content publishing will mature enough to make multimodal and multilingual repurposing of content and storytelling feasible. In this, it will be crucial to support access to knowledge, and not only to data, by non-experts, e.g. content creators and consumers, by developing interfaces that abstract from technical aspects, data models and query languages. This access should in particular work across media and across natural languages. This will ultimately lead to visual story generation from multiple sources including text, video and other modalities, as well as to new methods for repurposing and composing heterogeneous content for different challenges, natural languages and audiences.

We need further best practices for linked data based content publishing, as well as experience reports on the adoption of such best practices in verticals such as energy efficiency management, smart cities, healthcare, etc., together with best practices and business models for generating value out of such resources. In particular, we anticipate that in the coming years enterprises will realize the potential of linked data to connect different media beyond separate annotations (cross-media links) and to share such data across companies.

The integration of content comprises the creation of a seamless network of data and knowledge that spans multiple modalities as well as open and closed datasets, in a way that respects IP and the corresponding licenses. We assume that, in the future, machines will be mainly responsible for discovering and mashing up content from heterogeneous sources in different formats, modalities and languages in such a way that the copyright, IP, confidence and provenance of data are taken into account. Technically, this will require linked data-aware licensing servers that are responsible for delivering information in accordance with access rights, supporting machine-mediated access, extraction, aggregation, composition and repurposing of data.
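The text describes linked data-aware licensing servers as a future requirement, so the following is only a simplified sketch of their core filtering step, assuming that each dataset carries a set of permitted uses and an attribution flag. The `Dataset` and `Response` classes, the use categories and the `serve` function are hypothetical; real license reasoning would need richer, machine-readable license descriptions.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object), simplified as strings


@dataclass
class Dataset:
    """A hypothetical linked dataset annotated with its license terms."""
    name: str
    triples: List[Triple]
    permitted_uses: FrozenSet[str]  # e.g. {"research", "commercial"}
    requires_attribution: bool = False


@dataclass
class Response:
    triples: List[Triple] = field(default_factory=list)
    attributions: List[str] = field(default_factory=list)  # datasets to credit
    withheld: List[str] = field(default_factory=list)      # datasets not delivered


def serve(datasets: List[Dataset], intended_use: str) -> Response:
    """Merge only the datasets whose license permits the intended use,
    collecting attribution notices and recording what was withheld."""
    response = Response()
    for ds in datasets:
        if intended_use in ds.permitted_uses:
            response.triples.extend(ds.triples)
            if ds.requires_attribution:
                response.attributions.append(ds.name)
        else:
            response.withheld.append(ds.name)
    return response


datasets = [
    Dataset("open-lexicon", [("ex:car", "rdfs:label", "car")],
            frozenset({"research", "commercial"}), requires_attribution=True),
    Dataset("customer-crm", [("ex:c1", "ex:bought", "ex:car")], frozenset({"internal"})),
]
r = serve(datasets, "commercial")
print(len(r.triples), r.attributions, r.withheld)  # 1 ['open-lexicon'] ['customer-crm']
```

Recording withheld datasets alongside the delivered triples keeps the access decision transparent to the requesting machine.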

A further challenge will be to deploy linked data technology to capture social interactions at large scale, annotating multiple media streams with respect to who says what to whom, and which reactions are triggered by which content. This includes capturing emotions across modalities, bringing different modalities and languages together to analyze emotions and sentiment effectively.

In the long term, we foresee that linked data will converge to create a seamless ecosystem in which structured and unstructured information from different modalities and languages will be integrated and linked, thus becoming exploitable by a new generation of methods and services that support storytelling and question answering over all these sources. This assumes that the technology stack for analysing content with natural language processing techniques at large scale will have matured sufficiently.

Supporting the exploration of data by citizens and the wider public, including analysts, journalists etc., is another challenge. Addressing it will effectively help in dealing with data and information overload.

Using terminologies and ontologies available as linguistic linked data to achieve terminological consistency across channels would certainly contribute to exploiting data in commercial contexts.

| Horizon | Prediction | Actors | Means |
|---|---|---|---|
| 1-2 Years | Increased linked data publishing in verticals and for different use cases; first ROI models emerge | Industry-Academia Partnerships | Productive Systems & Integrated Projects |
| 1-2 Years | Increased number of multilingual terminologies and ontologies in verticals and for different use cases and channels; first ROI models emerge | Industry-Academia Partnerships | Productive Systems & Integrated Projects |
| 1-2 Years | Effective solutions and interfaces for access to data by non-experts (content creators who are not experts) are in place | Academic and Industrial Cooperation | Pilots |
| 1-2 Years | Increased awareness of the potential of linked data to connect different media beyond monomodal annotations (cross-media links) | Start-ups and Academia | Hands-on Workshops, Tutorials, Webinars |
| 3-5 Years | Robust and accurate techniques for linking ontologies across languages are available and successfully applied | Industry | Productive Systems |
| 3-5 Years | An increasing number of media publishing companies publish data about their programs and background information on the Web, following early adopters such as the BBC, NYT, etc. | Industry | Productive Systems |
| 3-5 Years | Multilingual and cross-media access to and exploration of data by citizens becomes possible | Industry-Academia Partnerships | Research Projects and Pilots |
| 3-5 Years | Techniques for bringing together data from multiple modalities for holistic sentiment analysis mature and become productive | Industry | Productive Systems |
| 3-5 Years | Standards for annotation and exchange of multimodal datasets emerge | Industry-Academia Partnerships | Research Projects & Pilots |
| 3-5 Years | Methods for multimodal storytelling by repurposing, summarizing and composing existing heterogeneous multimodal content are available | Industry-Academia Partnerships | Research Projects & Pilots |
| 3-5 Years | First solutions emerge to combine open and public datasets with appropriate handling of IP and licenses, as well as first solutions to discover and assess the trust and quality of linked data | Industry-Academia Partnerships | Research Projects and Pilots |
| 3-5 Years | Techniques for large-scale capturing of social interactions and their semantics across modalities emerge | Industry-Academia Partnerships | Research Projects and Pilots |
| 5-10 Years | Non-public data is available as linked data on the Web; linked data-aware licensing servers take access rights into account and reason about them when delivering content | Industry-Academia Partnerships | Research Projects & Pilots |
| 5-10 Years | Linked Data technology supports the seamless integration of structured and unstructured data available on the Web as well as cross-lingual querying of this integrated data | Industry-Academia Partnerships | Research Projects & Pilots |
| 5-10 Years | Linked Data based multimodal and multilingual storytelling matures and is adopted | Industry | Productive Systems |

Marketing and Customer Relationship Management

Our starting points are the importance of social aspects described in Section General IT Trends and the needs expressed in the survey carried out by Alta Plana (see Section Survey on Content Analytics Needs). They also include our own surveys (LIDER Project deliverable D1.1.1) and the findings of the 4th LIDER Roadmapping Workshop. Extrapolating from these, we can assume that the analysis of the voice of the customer will play a major role in guiding marketing and advertising activities in the future. This will require robust techniques to extract and interpret the voice of the customer i) with a high level of accuracy, ii) across natural languages and modalities, and iii) by analyzing sentiment at levels deeper than mere polarity. Such analysis should also recognize the intent of a user as a basis for generating actionable knowledge. The insights generated by these methods need to be converted into appropriate metrics that can be integrated into standard BI solutions. There they can be correlated with other measures and used to assess the impact and ROI of a given marketing campaign. The holy grail of the advertising industry is to aggregate data from potential and actual customers ubiquitously as a basis for deep personal profiles. These profiles would provide personalized and contextualized recommendations that permeate the whole life of a user. An important challenge here is to process big data in real time (see Section Big Data issue). For creating deep personalized user profiles, domain-specific background knowledge available as Linked Data can be exploited to build rich semantic profiles that support contextualized, personalized and situated recommendation and interaction. Linked Data technologies can also play a key role in linking user profiles across channels and sites. This needs to take into account people's right to privacy (see Section Privacy).

In particular, linked data can contribute to this challenge by the following:

- sentiment lexica in different languages, including polarity information and links to intentions, are available as part of the Linguistic Linked Open Data (LLOD), so that these lexica can easily be integrated into standard sentiment analysis tools and workflows

- ontologies modeling intentions for a number of micro-domains become part of the LLOD

- datasets annotated with sentiment, subjectivity, polarity and potentially irony for many languages become part of the LLOD

- robust methods for linking and identifying users across channels and sites as a basis to create aggregated user profiles

- robust and accurate methods for detecting sentiment, subjectivity and polarity and even irony for the major European languages as LLOD-aware services

- providing ontologies/taxonomies/terminologies that can be used to represent semantic profiles of users

- developing robust and accurate methods to identify users across sites and channels

- providing ontologies for modeling situations and contextual parameters to provide situated and contextualized recommendations

- providing domain-specific terminologies and ontologies lexicalized in multiple languages and across modalities to provide consistency across channels, languages and modalities
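A minimal Python sketch can show why sentiment lexica published as linked data are easy to plug into an analysis tool. It assumes lexical entries that carry a written form, a language tag, a polarity value and an optional link to an intention concept, and uses them in a simple lexicon-based polarity scorer. The entries, the ex: IRIs, the polarity values and the averaging scheme are hypothetical. Real LLOD lexica would be loaded from RDF (for example, modelled with lemon/OntoLex), and a production scorer would also handle lemmatization, negation and irony.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class LexicalEntry:
    iri: str                      # hypothetical IRI of the entry in an LLOD lexicon
    written_form: str
    language: str                 # language tag, e.g. "en", "de"
    polarity: float               # from -1.0 (negative) to 1.0 (positive)
    intention: str | None = None  # optional link to a concept in an intention ontology


# Toy entries; IRIs, polarity values and intentions are illustrative only.
LEXICON = [
    LexicalEntry("ex:lex/en/excellent", "excellent", "en", 0.9),
    LexicalEntry("ex:lex/en/broken", "broken", "en", -0.7, "ex:intent/RequestRepair"),
    LexicalEntry("ex:lex/de/hervorragend", "hervorragend", "de", 0.9),
    LexicalEntry("ex:lex/de/kaputt", "kaputt", "de", -0.7, "ex:intent/RequestRepair"),
]

# Index entries by (language, written form) for lookup during analysis.
INDEX = {(e.language, e.written_form): e for e in LEXICON}


def analyse(text: str, language: str) -> tuple[float, set[str]]:
    """Average polarity of the lexicon words in `text` and their linked intentions."""
    tokens = [tok.strip(".,;:!?").lower() for tok in text.split()]
    hits = [INDEX[(language, tok)] for tok in tokens if (language, tok) in INDEX]
    if not hits:
        return 0.0, set()
    score = sum(e.polarity for e in hits) / len(hits)
    intentions = {e.intention for e in hits if e.intention is not None}
    return score, intentions


print(analyse("Das Display ist kaputt!", "de"))  # (-0.7, {'ex:intent/RequestRepair'})
```

Entries are keyed by language, so the same scorer works for every language for which a lexicon has been published. The intention links pass straight through to downstream components such as recommendation or CRM workflows.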

In general, we can expect disruptive changes to the current paradigm for marketing and customer relationship management. Marketing and advertising activities can be expected to become totally transparent to the user. They will move from active push, via advertising strategies embedded in social conversations and communications, to the recognition and fulfillment of intentions and needs in real time. Explicit marketing and advertising activities will lose importance as customer targeting and product placement become a commodity service that customers perceive as real added value. This has several implications:

- Machine-to-machine communication: As advertising and marketing move from push activities to a commodity service that recognizes and fulfills customer needs in real time, we can expect both businesses and consumers to be increasingly represented by digital agents that interact and negotiate directly. This will radically change current business models.

- Rich semantic user profiles: Real-time recognition of needs and intentions requires rich linked information, including semantic information about objects, individuals, groups, intentions, contexts, cultures, etc. This will require standardized ways of representing and linking such information.

As more and more private data is linked, profiles become more important and online reputation becomes increasingly relevant for online transactions. New business models will emerge as a result, such as services for managing online profiles and reputation.

We also foresee that advertising and product placement will become a commodity service that fulfills needs in real time, thus becoming completely transparent, personalized and contextualized. However, users will then experience a lack of control, which will most likely lead to a pushback in which they demand more control over their personal data. Consequently, new solutions and paradigms will be required that enable users to revoke their personal data and to unlink datasets, thus giving users back control over their personal data (see Section Privacy).

| Horizon | Prediction | Actors | Means |
|---|---|---|---|
| 1-2 Years | Paradigm shift from physical interaction with real customers to M2M (machine-to-machine) interaction | Industry | Paradigm Shift |
| 1-2 Years | Paradigm shift away from CRM as a push activity towards a (moderated) online conversation | Academic and Industrial Cooperation | Paradigm Shift |
| 1-2 Years | Need for standardized vocabularies for describing user profiles, product information and their relations | Academia-Industry Partnerships | Standardization activities |
| 3-5 Years | Robust and accurate techniques for linking terminologies and ontologies across languages are available and successfully applied | Industry | Productive Systems |
| 3-5 Years | Advertising industry exploits rich, semantically interlinked personal and product profiles to provide personalized and contextualized recommendations | Industry | Productive Systems |
| 3-5 Years | First solutions emerge for centralized and trusted management and storage of personal data that consider provenance and licensing terms and conditions and ensure compliance with them | Academia-Industry Partnerships | Research Projects & Pilots |
| 3-5 Years | Paradigm shift away from explicit advertising towards transparent advertising that recognizes intentions and needs in real time in order to fulfill them, becoming a commodity beyond mere recommendations | Industry-Academia Partnerships | Research Projects and Pilots |
| 3-5 Years | Services for personal data tracking and revocation start to become available | Academia-Industry Partnerships | Research Projects & Pilots |
| 3-5 Years | New business models emerge, e.g. for online reputation management and optimization for individuals, companies, etc. | Start-ups, Academia | Pilots |
| 5-10 Years | Personalization in advertising is increasingly perceived by end users as a threat that interferes with their free choice; demand for more control over data arises, and advanced solutions allowing users to manage their personal data emerge | Industry-Academia Partnerships | Research Projects and Pilots |
| 5-10 Years | Methods and best practices for retracting and unlinking personal data emerge | Industry-Academia Partnerships | Research Projects and Pilots |

Public Sector and Civil Society Use Cases

Supporting the Creation of a Single Digital Market and the Connecting Europe Facility (CEF)

One of the main stated goals of the Connecting Europe Facility (CEF) is to overcome service fragmentation and lack of interoperability due to national borders. Its objective is to develop pan-European services that interoperate across borders and de-fragment the market, in particular in the areas of eGovernment, eProcurement and eHealth.

For this, datasets exchanged across borders need to be harmonized at both the syntactic and the semantic level. While interoperability at the syntactic level is already being addressed to some extent, establishing interoperability at the semantic level requires a long-term effort to align the concepts used in different countries and jurisdictions.

With its strong tradition of working on ontology alignment and linking, the Semantic Technologies community has the potential to contribute to:

- the development of shared ontologies of key administrative and legal concepts across Europe

- linking of vocabularies and ontologies existing in different countries and jurisdictions to foster interoperability

- development of declarative specifications of workflows and processes, so that tools can reason about these, composing them to achieve some task

- collaborative ontology creation across languages and countries

- exploitation of terminologies and ontologies to ensure consistency of communication in public administration
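Linking national vocabularies allows a concept from one jurisdiction to be mapped to its counterparts in another. A minimal Python sketch of this can assume links that behave like SKOS `skos:exactMatch`, which the SKOS Reference defines as symmetric and transitive, and follow them breadth-first to collect equivalents. The vocabularies (prefixes a:, b:, c:, eu:) and their concepts are hypothetical. Real alignments would be loaded from published RDF data and would also have to cope with weaker links such as `skos:closeMatch`, which is not transitive.

```python
from collections import defaultdict, deque

# Hypothetical exactMatch-style links between concepts of national vocabularies
# (prefixes a:, b:, c:) and a shared European vocabulary (eu:).
EXACT_MATCH = [
    ("a:BusinessPermit", "eu:BusinessLicence"),
    ("b:TradeAuthorisation", "eu:BusinessLicence"),
    ("c:ActivityLicence", "b:TradeAuthorisation"),
]


def build_graph(links):
    """Adjacency sets; each link is added in both directions (symmetry)."""
    graph = defaultdict(set)
    for left, right in links:
        graph[left].add(right)
        graph[right].add(left)
    return graph


def equivalents(concept, graph):
    """All concepts reachable by following links repeatedly (transitivity)."""
    seen = {concept}
    queue = deque([concept])
    while queue:
        for neighbour in graph.get(queue.popleft(), ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    seen.discard(concept)
    return seen


def translate(concept, target_prefix, graph):
    """Equivalent concepts that belong to the target vocabulary, sorted."""
    return sorted(c for c in equivalents(concept, graph)
                  if c.startswith(target_prefix + ":"))


graph = build_graph(EXACT_MATCH)
print(translate("c:ActivityLicence", "a", graph))  # ['a:BusinessPermit']
```

Country c has no direct link to country a, yet the mapping is found via b and the shared European concept. This is the benefit of linking each national vocabulary to a common hub rather than to every other country.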

| Horizon | Prediction | Actors | Means |
|---|---|---|---|
| 1-2 Years | Shared ontologies and terminologies for key administrative, financial and legal sub-domains emerge as part of the Linguistic Linked Open Data Cloud; these ontologies are lexicalized in multiple languages following standard vocabularies and best practices such as the lexicon model for ontologies | Academia-Industry Partnerships | Research Projects & Pilots |
| 1-2 Years | Ontologies are increasingly linked across national contexts and published on the Linked Open Data cloud | Academic and Industrial Partnerships | Research Projects and Pilots |
| 1-2 Years | The most important domains in which terminological standardization across languages is needed to realize the vision of a Single Digital Market are identified | Academic and Industrial Partnerships | Research Projects and Pilots |
| 1-2 Years | A taxonomy of link types that matches the needs of the Single Digital Market is developed | Academia-Industry Partnerships | Standardization activities |
| 1-2 Years | Efforts begin to reduce the amount of unstructured data exchanged by exploiting cross-lingual ontologies and terminologies that diminish the need to exchange unstructured messages; fields where language-independent communication is applicable and feasible are identified | Academia-Industry Partnerships | Standardization activities |
| 3-5 Years | First standards and best practices, including ontologies to describe services and products, emerge that can be exploited in machine-to-machine negotiation and matchmaking (e.g. matching job offers to profiles) | Industry | Standardization and Pilots |
| 3-5 Years | Robust and accurate techniques for linking ontologies across languages are available and successfully applied | Industry | Productive Systems |
| 3-5 Years | The need to formally monitor compliance of actors across countries with European and other regulatory frameworks becomes obvious, and first solutions for expressing policies and regulations become available (e.g. using advanced logics such as deontic logic) | Academia | Research Projects |