This page demonstrates Cognitive AI applied to a smart home. See the explanation below.

See also the information on chunks and rules.

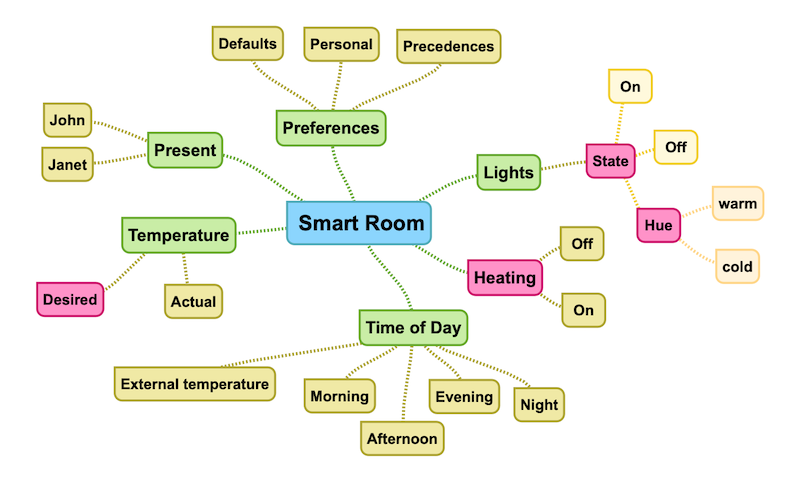

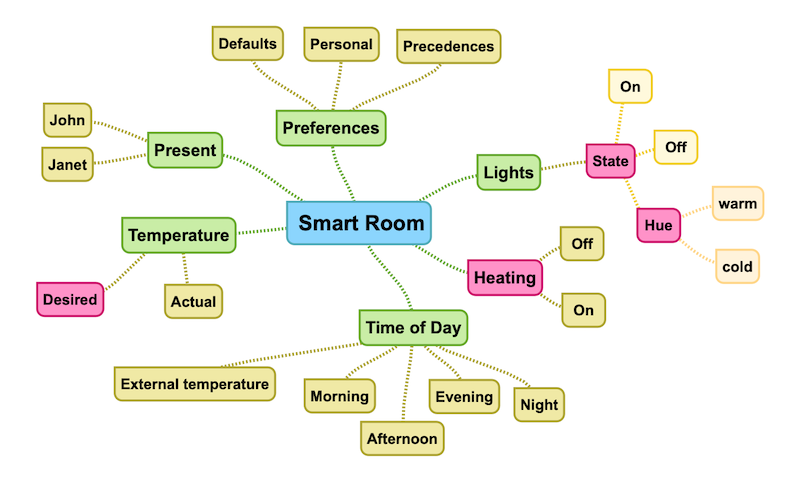

This demo was prepared for the AIOTI WG 3 task force on semantic interoperability. It features a room with sensors for temperature, luminance and presence, and actuators for lighting and heating. The starting point was a mind map of the concepts involved, as a precursor to designing the knowledge graph.

The home's owners, Janet and John, have different preferences for the lighting hue and the desired room temperature; see the facts graph for details.

The rules are triggered when someone enters or leaves the room, and when the time of day changes. Reasoning proceeds bottom up: first the defaults are applied, then the preferences of whoever is in the room, and finally the precedences that decide between conflicting preferences for the lighting hue and room temperature. To save energy, the lights are turned off in the morning and afternoon unless you manually override them. See the rule graph for details.
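The bottom-up order can be illustrated with a small Python sketch. It is not the demo's rule engine, which works with chunks and rules: the names `decide`, `DEFAULTS`, `PREFERENCES` and `PRECEDENCE`, and every value in them, are hypothetical, because the real preferences live in the facts graph. It also assumes that the lights are on whenever the room is occupied outside the morning and afternoon.

```python
# Hypothetical defaults, preferences and precedences: the demo's real values
# are held in its facts graph and are not reproduced here.
DEFAULTS = {"hue": "warm", "thermostat": 18, "lights": "off"}
PREFERENCES = {
    "Janet": {"hue": "cool", "thermostat": 21},
    "John": {"hue": "warm", "thermostat": 19},
}
# For each property, the order in which occupants' preferences take precedence.
PRECEDENCE = {"hue": ["Janet", "John"], "thermostat": ["John", "Janet"]}


def decide(occupants, time_of_day, manual_lights=None):
    """Compute room settings bottom up: defaults, preferences, precedences."""
    # Step 1: start from the defaults.
    settings = dict(DEFAULTS)
    # Step 2: collect the preferences of whoever is in the room.
    candidates = {}
    for person in occupants:
        for prop, value in PREFERENCES.get(person, {}).items():
            candidates.setdefault(prop, {})[person] = value
    # Step 3: where preferences conflict, the highest-precedence occupant wins.
    for prop, by_person in candidates.items():
        winner = next(p for p in PRECEDENCE[prop] if p in by_person)
        settings[prop] = by_person[winner]
    # Lights: a manual override always wins; otherwise they are off in the
    # morning or afternoon to save energy, and on when the room is occupied.
    if manual_lights is not None:
        settings["lights"] = manual_lights
    elif occupants and time_of_day not in ("morning", "afternoon"):
        settings["lights"] = "on"
    else:
        settings["lights"] = "off"
    return settings


print(decide({"Janet", "John"}, "evening"))
# → {'hue': 'cool', 'thermostat': 19, 'lights': 'on'}
```

Keeping the three layers separate mirrors the bottom-up reasoning: each later step only refines what the earlier ones produced, so an empty room simply falls back to the defaults.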

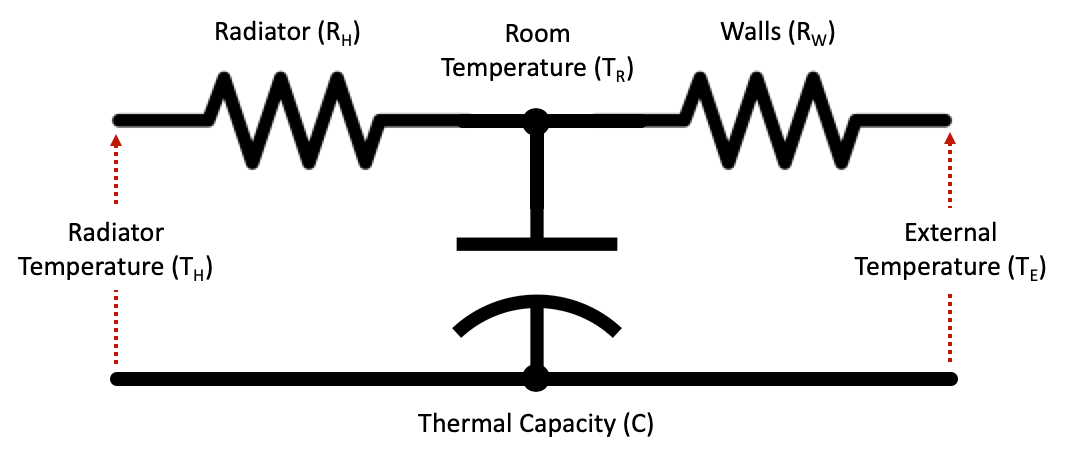

The room temperature rises and falls according to its difference from the external temperature and whether the heating is on. The external temperature varies with the time of day, as shown by the value in the window.

The heating and cooling of the room is modelled as a pair of resistors and a capacitor, where the radiator temperature and the external temperature are modelled as voltage sources, leading to current flows into and out of the capacitor.
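The circuit analogy can be written out as a first-order differential equation. This is a sketch with symbols introduced here for illustration, not taken from the demo: $T$ is the room temperature (the capacitor voltage), $C$ the room's thermal capacity, $T_h$ and $T_e$ the radiator and external temperatures (the voltage sources), and $R_h$ and $R_e$ the thermal resistances between the room and the radiator and between the room and the outside.

$$
C\,\frac{dT}{dt} = \frac{T_h - T}{R_h} + \frac{T_e - T}{R_e}
$$

Setting $dT/dt = 0$ gives the temperature at which the room settles with the heating on:

$$
T^{*} = \frac{R_e\,T_h + R_h\,T_e}{R_h + R_e}
$$

This is a weighted average of the radiator and external temperatures, so if the thermostat is set above $T^{*}$ the room cannot reach it. Assuming a fixed radiator temperature, that would be consistent with the radiator struggling at night, when the external temperature is presumably lower.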

The demo uses requestAnimationFrame to call a function that computes the changes based on the time elapsed since the last frame was rendered. Changes in the simulated room are signalled by setting goals, which trigger rules representing procedural knowledge. The facts module is regularly updated so that it reflects the current room state, and this update must also happen before goals are set, to ensure that the triggered rules work with accurate information.
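The per-frame update can be sketched in Python as an explicit Euler step of the resistor-capacitor model described above. `requestAnimationFrame` is a browser API whose callback receives a timestamp in milliseconds; here it is mimicked by calling a hypothetical `on_frame` method with a time in seconds. The class, its methods, the parameter values and the rule that the radiator only heats while the room is below the thermostat setting are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical parameters for the resistor-capacitor model of the room.
CAPACITY = 10.0      # thermal capacity of the room (the capacitor)
R_RADIATOR = 2.0     # resistance between radiator and room
R_OUTSIDE = 5.0      # resistance between room and outside
T_RADIATOR = 50.0    # radiator temperature when heating (a voltage source)


@dataclass
class SimulatedRoom:
    temperature: float = 16.0
    thermostat: float = 20.0
    heating: str = "on"
    facts: dict = field(default_factory=dict)
    goals: list = field(default_factory=list)
    last_time: Optional[float] = None

    def on_frame(self, now: float, outside: float) -> None:
        """Advance the simulation to time `now` (seconds), like a rAF callback."""
        if self.last_time is None:  # first frame: just record the time
            self.last_time = now
            return
        dt = now - self.last_time
        self.last_time = now
        # Heat flow into the room from outside (negative when it is colder).
        flow = (outside - self.temperature) / R_OUTSIDE
        # Assumption: the radiator only heats while below the thermostat setting.
        if self.heating == "on" and self.temperature < self.thermostat:
            flow += (T_RADIATOR - self.temperature) / R_RADIATOR
        # Explicit Euler step: dT = dt * flow / C.
        self.temperature += dt * flow / CAPACITY
        self.sync_facts()

    def sync_facts(self) -> None:
        """Copy the simulated state into the facts, as perception would."""
        self.facts["room1"] = {
            "temperature": round(self.temperature, 1),
            "thermostat": self.thermostat,
            "heating": self.heating,
        }

    def set_goal(self, goal: str) -> None:
        """Signal a change; facts are refreshed first so rules see current values."""
        self.sync_facts()
        self.goals.append(goal)


room = SimulatedRoom()
room.on_frame(0.0, outside=10.0)
room.on_frame(1.0, outside=10.0)
print(room.facts["room1"]["temperature"])  # → 17.6
```

Refreshing the facts inside `set_goal` makes the ordering requirement explicit: no goal can be pushed while the facts still hold stale values.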

The state of the room is modelled as the following chunks. These are updated to reflect the physical values, simulating perception, which updates the cortex with a model of the sensory input:

room room1 {occupancy 1; heating on; temperature 16; thermostat 20; lights off; hue warm; time evening}

person John {room room1}
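To experiment with these chunks outside the demo, the single-line notation can be read into Python dictionaries. The helper below is hypothetical (`parse_chunk` and `parse_value` are not part of the demo or its chunks library) and only handles the single-line form shown above, with one value per property.

```python
import re

# Matches the single-line form: <type> <id> {<name> <value>; ...}
CHUNK_PATTERN = re.compile(r"^\s*(\S+)\s+(\S+)\s*\{(.*)\}\s*$")


def parse_value(text):
    """Convert integer and decimal values to numbers; leave names as strings."""
    if re.fullmatch(r"-?\d+", text):
        return int(text)
    if re.fullmatch(r"-?\d*\.\d+", text):
        return float(text)
    return text


def parse_chunk(line):
    """Parse e.g. 'person John {room room1}' into (type, id, properties)."""
    match = CHUNK_PATTERN.match(line)
    if match is None:
        raise ValueError(f"not a single-line chunk: {line!r}")
    chunk_type, chunk_id, body = match.groups()
    properties = {}
    for item in body.split(";"):
        name, _, value = item.strip().partition(" ")
        if name:  # skip empty items, e.g. after a trailing semicolon
            properties[name] = parse_value(value.strip())
    return chunk_type, chunk_id, properties


print(parse_chunk("person John {room room1}"))
# → ('person', 'John', {'room': 'room1'})
```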

Note that person chunks are added or removed as Janet and John enter or leave the room.
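A sketch of how entering and leaving might update the facts, assuming a plain dictionary stands in for the facts module. The functions `enter`, `leave` and `occupancy` and the goal tuples are hypothetical, since the demo's own goal chunks are not shown on this page. The facts are changed before the goal is pushed, so any triggered rules see the new occupancy.

```python
# Facts map chunk ids to (type, properties), mirroring the room chunk above.
facts = {"room1": ("room", {"occupancy": 0, "lights": "off"})}
goals = []


def occupancy(facts, room):
    """Count the person chunks whose room property names this room."""
    return sum(1 for chunk_type, props in facts.values()
               if chunk_type == "person" and props.get("room") == room)


def enter(facts, goals, person, room):
    """Add a person chunk, update occupancy, then push a goal."""
    facts[person] = ("person", {"room": room})
    facts[room][1]["occupancy"] = occupancy(facts, room)
    goals.append(("enter", {"person": person, "room": room}))


def leave(facts, goals, person, room):
    """Remove the person chunk, update occupancy, then push a goal."""
    facts.pop(person, None)
    facts[room][1]["occupancy"] = occupancy(facts, room)
    goals.append(("leave", {"person": person, "room": room}))


enter(facts, goals, "Janet", "room1")
enter(facts, goals, "John", "room1")
leave(facts, goals, "Janet", "room1")
print(facts["room1"][1]["occupancy"], sorted(facts))  # → 1 ['John', 'room1']
```

Deriving the occupancy by counting person chunks, rather than incrementing and decrementing a counter, keeps the two consistent even if the same departure is reported twice.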

The log shows the sequence of goals and rule firings, and discards the oldest entries when it becomes full. Goals are pushed when:

- someone enters or leaves the room
- the time of day changes

The demo implementation supports the following chunks for rule actions:

Note that you don't have to provide all of the properties in a given rule action: the properties you omit are left unchanged. For example, `action {@do thermostat; heating on}` turns the heating on without changing the target temperature. The radiator struggles to warm the room at night if the thermostat is set high, although it copes at a lower setting.
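These merge semantics can be sketched as a dictionary update in Python. `apply_action` and the `devices` layout are hypothetical, not the demo's API: the action chunk is represented as a dictionary whose `@do` entry names the device to update, and `target` stands in for the target temperature.

```python
def apply_action(devices, action):
    """Apply an action chunk, changing only the properties it mentions.

    `action` holds the @do target plus any properties to set; properties
    omitted from the action keep their current values.
    """
    target = devices[action["@do"]]
    for name, value in action.items():
        if name != "@do":
            target[name] = value
    return target


# Hypothetical device state; "target" stands for the target temperature.
devices = {"thermostat": {"heating": "off", "target": 20}}
# Equivalent of: action {@do thermostat; heating on}
print(apply_action(devices, {"@do": "thermostat", "heating": "on"}))
# → {'heating': 'on', 'target': 20}
```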

An open question is what should happen when the lights, hue, heating and target temperature are adjusted manually via the form fields. At present, such adjustments only update the facts and don't push any goals.

N.B. The images of the seated man and woman are courtesy of Freepik. The empty chairs were crudely derived from them using the GIMP graphics editor. The table is courtesy of iconsdb.

Dave Raggett <dsr@w3.org>

This work is supported by the European Union's Horizon 2020 research and innovation programme under grant agreement No 780732 for project Boost 4.0, which focuses on smart factories.
