<tidoust> Scribe: Francois

<tidoust> scribenick: tidoust

Mark: Starting with mission statement. Make the Web a great platform for media of all kinds! That's certainly the thrust of all we've been doing here.

... I wanted to reflect on the history of major IG initiatives.

... We started in 2011.

... No doubt our first thrust was HTML5, in particular the HTML5 media pipeline.

... Big focus of those early times.

... I think we've been successful. We didn't get everything we needed but a good portion of it, and the Web has quickly switched over to HTML5 video from plugins such as Flash.

... There will be future versions of HTML. That initiative does not go away!

... This work led to the work on extensions for Media, with Media Source Extensions (MSE) and Encrypted Media Extensions (EME).

... No doubt there will be future versions of these standards as well.

... Many media services on the Internet now rely on these technologies

... We did a number of other initiatives, e.g. on Timed Text.

... But the question now is which big initiative we should now be focusing on.

... I want to propose something: defining the Media Web App platform.

... We see that on smart TVs, they support HTML5. Same thing on set-top boxes, etc. But you still can't run the same code on all of these devices because support for technologies is different.

... So I believe there is value in defining the platform for Web media applications.

Jeff: The statement that we're setting up an initiative to define the media web platform is a great idea. I was wondering how people in the room react to that.

Alex: I think it makes sense to have a mission statement. I had not seen it before. I like it. It may be a bit broad, but that's what mission statements are for: to be forward-looking.

... If we were to be more specific or narrower, would it help? I do not know yet.

... But it's a good start.

... It would still be useful to scope it a bit down by explaining what we mean by media.

Mark: We definitely mean what people sometimes call continuous media.

Jeff: Just to share the reactions of the room, there are a few heads that went up and down.

Mark: Right, like I said, we discussed that yesterday so that's still new.

... Continuing with the IG name change from Web and TV IG to Media & Entertainment IG

... We were looking for a broader term to make it clear that it's not only about TV.

... Another thing is that we want to address the entire end-to-end pipeline.

... From capture to consumption (focused on web technologies, of course).

... The topics we're discussing involve all sorts of people: clients, providers, production, transport & control, accessibility and related areas (security comes to mind as well).

Will: It's Media & Entertainment. Why are you focusing on Entertainment? Media covers news, other sectors, etc. What is the entertainment focus that justifies the term?

Mark: You're right. There's nothing special about entertainment. Media seemed too broad. Continuous media did not seem too common.

... Looking at the IG charter. Traditionally, IGs have focused on the identification of requirements. That is important. When you go to product or spec designers, it's important to have a clear list of requirements.

... We can go beyond that and incubate solutions in CGs and WGs. We should also track on-going activities in other W3C groups and external orgs.

... Looking at the work flow: we start from new ideas. The spec is not the main goal. The goal is to affect technologies out there including clients and servers.

... In the end, we do want ideas and issues to be deployed to existing browser runtimes.

... Typically, we'll discuss each topic, for instance in a task force, and define use cases, requirements, running code, etc.

... One thing we do not do is develop technical specifications.

... Depending on the outcome, we may report a bug on an existing spec. Or take the idea to a working group to develop a spec.

... One thing that is common nowadays is to incubate the idea in a lightweight group, called a Community Group.

... Many of them have been created at W3C and I'm chairing one of them.

... Then the spec created in the CG can transition to a WG. It's generally a better starting point for standardization, as it clarifies the scope.

... The IG continues to liaise with the working groups.

... One new model is prototyping.

... Not necessarily starting from a spec, but from running code. There's been a big push towards incubating prototypes. We'll have a presentation this afternoon from the Web Incubator Community Group (WICG).

... The prototype approach helps drive integration in browser runtimes

... Looking at task forces, we'll have reports from them shortly after this presentation.

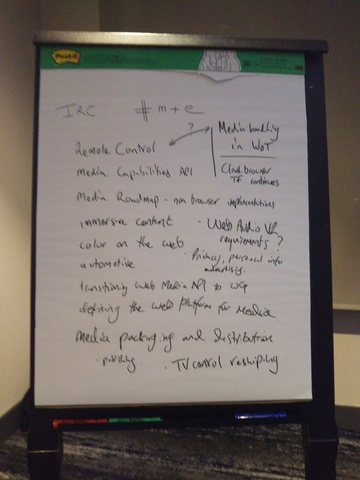

... We often use TPAC as a way to launch new task forces. I really offer anyone an open invitation at any time during the meeting to write up new topic ideas on the flip chart or raise them.

... And we'll have a look at this during the wrapping session.

... What are the topics for 2018?

Jeff: From my perspective, having some dialogue about whether to transition the output of the Web Media API CG would be an interesting task force for the IG to look at in the next few months.

Mark: Yes, that is a good point.

... [Mark reviewing the agenda for today's meeting]

... Joint session in the afternoon. Timed Text WG has the main spec for continuous data that goes along with continuous media. So we coordinate a lot with the group. We'll have a joint session with them in the afternoon.

... Also discussion on next steps for TV Control, following the closure of the WG.

Chris: Any comment on Mark's presentation?

... Some people have had trouble traveling. Joe, co-chair of the Audio WG, may not arrive early enough for the presentation. So we may need to switch presentations. Same thing for the GGIE report.

... Any specific topic that you'd like to raise or discuss within the group?

Nigel: Are we going to discuss color on the Web?

Francois: Media Technologies for the Web should leave some time for that, yes.

Karen: Digital Publishing increasingly includes media. Discussing the intersection would be useful. Also immersive content.

Mark: There are a number of groups looking at topics of interest, including HDR support, WebVR. You'll see a lot of that in Francois' presentation.

... Not all the work goes in the IG, we encourage people to participate in the other groups that are more specifically discussing a particular topic. That is part of our job to dispatch you to the right place.

Alex: Can we start to narrow down the scope by stating that publishing is not in scope of the Media & Entertainment IG?

Karen: Maybe. I do not know whether they are addressing streaming media yet.

... I do not know yet if there is an intersection.

Mark: We're definitely not doing book publishing, right?

Karen: Sure, but there is a segment with publishing where they are doing educational videos for instance, they might need to come to you with use cases.

Alex: They should, indeed.

Karen: OK, I will be with them tomorrow, will suggest that then.

Mark: In a way, we're chasing language meaning that evolves over time. TV referred to traditional broadcast streaming. Since then, the TV term has become broader. For instance, Apple uses "TV" in their app for something that has way more features than traditional TV.

... Language changes dynamically

Karen: I've also been working with the Automotive group. In the back seat, there is the entertainment system. I do not know where the intersection is.

Chris: That is a very good point. I plan to have discussions with the Automotive group.

... This feedback is very welcome!

Yam: About the Media & Entertainment name. Media can be anything, but one of the reasons why we chose Media & Entertainment is that we already have these businesses. This is an IG. Media can be news, etc.

... In the past, to drive this group, we used Entertainment. The main use cases will come from there.

... Web Media applications is a great idea. As a browser company, we have to implement it.

... Now that media is so ubiquitous (you can play media on the Apple Watch, for instance), we need to come up with device-neutral implementations, and we need to face reality.

... Maybe business people won't like being told what they need to implement.

-> https://www.w3.org/2011/webtv/wiki/images/4/47/Hbbtv-tpac2017.pdf HbbTV update

Chris: [reviewing topic agenda]

... I'm not here to represent an official view of the HbbTV Consortium. Just speaking from BBC perspective here.

... The use cases, as you might expect, are all of the things needed in a TV environment. EPGs, application portals, information services delivered through the browser, companion screen applications.

... In terms of basic features, we have an HTML5 environment, support for remote control keys.

... Media playback capabilities, broadcast signalling to launch applications. Applications can be delivered over the web or through the broadcast in the carousel.

... In terms of deployment, this is info from the HbbTV Web site. 43 million devices out there, 300 applications, and used in many countries in the world, mainly in Europe but also in Australia, some African countries and elsewhere.

... To give some background on the evolution of technologies. From HbbTV 1 which was 5 or 6 years ago now, that was really based on the HTML CE browser. We've moved recently to update that to be an HTML5 based specification.

... We have MPEG-DASH for adaptive streaming, and EBU-TT-D for captioning.

... Recently, we added EME support with ClearKey.

... and companion screen features.

... New features in HbbTV 2.0: HTML5 support in the codec environment, enhanced A/V codec support, facilities to do ad-insertion into video on demand content, conditional access and DRM, etc.

Cyril: When was 2.0 released?

... Do deployment numbers include them?

Chris: End of 2015. No, numbers do not include v2. We're expecting deployments for 2.0 in the next year.

... On 2.0.1, this is specifically for the UK and Italy to bridge transitions from MHEG-5 and MHP.

... High resolution graphics, WebAudio support, and EME with ClearKey, as well as various security/privacy improvements.

Cyril: You went from HTML-CE to HTML5, but there was some profiling made, right?

Chris: I will come back to that, but yes.

Igarashi: Deployment in the UK has already started?

Nigel: Some available deployments with TV play (?)

Andrea: Some deployment of captioning, e.g. for Freeview.

Steve: You're right that full support for HbbTV 2.0 is not really available yet, because companies have been taking an incremental approach.

Chris: [Reviewing companion screen and media synchronization features]

... Synchronization can be between 2 HbbTV devices or between an HbbTV device and a companion device. Or synchronization between separately delivered streams.

... [going into details on companion screen workflow]

... For media synchronization, we're interested in the possibility to monitor the timeline and switch to IP content from time to time, e.g. to switch from a national broadcast to local news delivered over IP.

... The HbbTV spec itself references OIPF for browser environment, which references HTML5, a number of CSS specs, etc.

... Looking at the browser profile, there is full support for some technologies and partial support for others. Subset of HTML5, subset of Web audio, etc.

... The output of the Web Media API CG would be a good way to make that more up to date over time, which is what we'd like to see.

... We don't have MSE support for the moment (not stable enough at the time). WebGL, 3D transforms not supported either.

... No support for SVG images either.

Nigel: In your list of specs, TTML is obviously supported, not in your slides.

Will: Today, MSE is pervasive. What's HbbTV plan to support that in the future?

Chris: I can only give a BBC viewpoint here. We certainly want MSE to be included.

Steve: I actually thought that MSE was included and I'm currently checking that.

... EME with ClearKey is included, so that would strongly imply that MSE would need to be supported.

Chris: Actually, I think EME is present for the native DASH implementation.

Steve: OK. I think it's worth stating at this point that obviously device manufacturers will include a browser runtime in their platform, and support for MSE might come by default, even though this is not required by the HbbTV spec.

... HbbTV is the minimum set.

Francois: Interesting to note the relationship with the Web Media API work and difference of approach there where the spec is updated yearly to bring updates.

Masaya: From NHK, member of the IPTV Forum in Japan.

... I will go through deployment statistics, present a Hybridcast video, and report on the performance test event we held in Japan. Then next step.

... We ran a few presentations on Hybridcast in the past in this IG

... Standardization and experiment from 2014 to today.

... Some experiments with MPEG-DASH in 2017, following the establishment of the video spec in 2016.

... The number of hybridcast receivers is increasing rapidly [see slide]. September this year: about 6.5 million receivers.

... Predictions: we expect over 10 million in 2018. In 2020, for the Olympic Games in Tokyo, we expect over 20 million.

... 30 broadcasters in Japan have experience with Hybridcast services. 6-7 use it daily.

... Experiment of MPEG-DASH 4K videos from regional broadcasters

... Hybridcast uses MSE and EME. HTML applications control the bitrate dynamically. No need for native MPEG-DASH support.
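
A minimal sketch of application-controlled bitrate selection, to illustrate the point above. The rendition ladder, the `pickRendition` name, and the 0.8 safety factor are assumptions for illustration and are not taken from the Hybridcast specification or dashNX. In a real application, segments of the chosen rendition would then be fetched and appended to an MSE `SourceBuffer`.

```typescript
// Hypothetical rendition ladder entry; bitrates below are illustrative.
interface Rendition {
  id: string;
  bitrateKbps: number;
}

// Pick the highest-bitrate rendition that fits within a safety fraction of
// the measured throughput. Falls back to the lowest rendition if none fits.
function pickRendition(
  ladder: Rendition[],
  throughputKbps: number,
  safetyFactor = 0.8,
): Rendition {
  const sorted = [...ladder].sort((a, b) => a.bitrateKbps - b.bitrateKbps);
  const budget = throughputKbps * safetyFactor;
  let choice: Rendition | undefined;
  for (const rendition of sorted) {
    // The first (lowest) rendition is always accepted as the fallback.
    if (choice === undefined || rendition.bitrateKbps <= budget) {
      choice = rendition;
    }
  }
  if (choice === undefined) {
    throw new Error("ladder must not be empty");
  }
  return choice;
}

const ladder: Rendition[] = [
  { id: "sd", bitrateKbps: 1500 },
  { id: "hd", bitrateKbps: 6000 },
  { id: "uhd", bitrateKbps: 20000 },
];
console.log(pickRendition(ladder, 10000).id);
```

Running the selection in the application rather than in a native player is what removes the need for native MPEG-DASH support on the receiver.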

... Ad insertion by multi-period formed MPD.

... Emergency warning service, as the broadcasters have to respond to emergency calls during playback of video.

... ARIB-TTML closed caption.

... Specific MPEG-DASH profile for Hybridcast, with a reference library for TV named dashNX that uses MSE.

... That library was provided to private members.

Stefan: Any relationship with dash.js?

Masaya: Yes, it's a modified version of dash.js

... 3 features of verification: basic streaming, HD delivery, ad insertion.

... Various cases were tested in 3 years [see slide]

... [going through experiment details]

... About performance issues: the performance strongly affects the UX. Different performances of TV sets compared to regular desktops.

... It is hard to test 100s of device sets.

... It is difficult to distinguish between conformance and performance. Which is a conformance issue?

... So we held a performance event in Japan.

... Goal was to recognize actual performance and to identify practical but undocumented requirements for manufacturers.

... Event has been held over the last 3 years with an increasing number of participants.

... Typical test cases include the responsiveness of key-event firing, XHR communication speed tests, 3D picture displaying through three.js, capacity measurement of localStorage, maximum framerate of animations, etc.
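
A minimal sketch of how one of these measurements, the framerate of animations, could be computed. It assumes the timestamps are those passed to successive `requestAnimationFrame` callbacks; the `averageFps` helper name is hypothetical and this is not the test code used at the event.

```typescript
// Average frames per second over a list of frame timestamps in milliseconds.
// Returns 0 when there are too few samples to measure an interval.
function averageFps(timestampsMs: number[]): number {
  if (timestampsMs.length < 2) {
    return 0;
  }
  const elapsedMs = Math.max(...timestampsMs) - Math.min(...timestampsMs);
  if (elapsedMs <= 0) {
    return 0;
  }
  // N timestamps delimit N - 1 frame intervals.
  return ((timestampsMs.length - 1) * 1000) / elapsedMs;
}

// In a browser, samples could be collected like this:
//   const samples: number[] = [];
//   function onFrame(t: number) {
//     samples.push(t);
//     if (samples.length < 120) requestAnimationFrame(onFrame);
//   }
//   requestAnimationFrame(onFrame);
console.log(averageFps([0, 20, 40, 60, 80, 100]));
```

Comparing such a figure across TV sets gives a performance measure that is separate from whether the API behaves as specified.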

Igarashi: Which test cases have the most disparate results?

Masaya: That's a good question. Maximum framerate of animations is a good one.

Igarashi: WebGL is supported?

Masaya: No, but Canvas is supported.

... About next steps: with Hybridcast, there is currently no way to control the TV from a smartphone. We are now working on that. We need to enhance the UX and real-life services: on-air to offline, offline to online, and online to on-air scenarios.

Chris: A couple of things are interesting for this IG. One is the testing aspect: to what extent can we use the Web Platform Tests? How can we approach the testing of browsers on these devices?

... The other thing that you mentioned that is interesting is control of TV, to switch channels.

Masaya: Changing the channel from one to another is difficult because of different business models in Japan.

Will: I'd like to see dashNX. I don't know when you forked from dash.js. We've done a number of updates related to performance and I want to make sure that the updates you made get back to open source as well.

... I was not aware of the existence of dashNX in particular.

Igarashi: Is it possible to have a liaison between DASH-IF and the IPTV Forum Japan?

Masaya: Yes, dashNX uses an older version of dash.js. It's difficult to follow the updates.

Kazuhiro: Source code is proprietary but we can probably improve communications between organizations, yes.

-> https://www.w3.org/2011/webtv/wiki/images/8/8a/ATSC_Update_to_TPAC_2017.pdf ATSC update

Giri: We have been giving regular updates on ATSC within the IG.

... ATSC 3.0 is expected to be deployed to North America, South Korea, and possibly China as well.

... We do see redistribution of ATSC content over cable, but the technology is focused on what we do over the air.

... A little bit of update of TG1 group. TG1 was focused on the previous version of ATSC. Right now, re-organized to streamline the work.

... Transitioning from ATSC 1.0 to ATSC 3.0. [going through slide]

... Organization of TG3 include a number of groups that are relevant for W3C, including companion screen, etc.

... We stay true to W3C technologies. There was some history with TV Control API, but we're using W3C technologies.

... Some technologies that I think are relevant are presented in my slide, with emphasis on the companion screen spec.

... We also have our runtime specifications. We extended the regular Web runtime when needed, but we haven't gone against anything that W3C has defined.

... One of the things that we actually defined was a way to add digital watermarks.

... We added specific Websocket-based APIs to extend the features available in the Web runtime.

... ATSC gives sufficient control over the app-related data from the broadcast signal.

... Another thing, not exactly the same origin policy, but possibility to scope data to application contexts.

... Application package signing is now mandatory. That's not 100% relevant to W3C, but that explains how we do security.

... Broadcaster applications can now access keypad control normally reserved for broadcast receivers. This goes beyond what you can do on a regular Web runtime.

... Related to the TV Control API spec, but using a different approach.

... Also Device Info API to expose specific information.

... The ATSC allows for 2 types of media rendering. One is similar to the regular video in HTML5. MSE/EME, media playback. However, as discussed in our liaison letter to W3C, we want to reduce the "dead-air", duration between the time the video is received and the time it is rendered, so the API control surface has been refined to include broadcast media playback.

... We hope a future TV Control API spec would take a similar approach.

Chris: To be discussed this afternoon.

Giri: EME support dropped because support for receive-only devices is unresolved (issue 132)

... We hope to solve that in the future. We are working on alternatives. To be concluded in the next 4-6 months.

Mark: The TV player is different from a Type 1 player?

Giri: It is separated. We do not impose anything on the implementation. It may well leverage MSE/MPEG DASH.

Igarashi: The receiver player is not based on Web technologies. The media player is the regular HTML5 player. In both cases, the type 1 player is not used.

Giri: It's the difference between a system application and a broadcaster-related application.

Mark: It's just an issue of some application being loaded and unloaded, and some not.

Giri: There has been some investigation to have the RMP be used for the receiver player, but there was some concern on the latency needed to render the video.

... I agree with the generic sense of your comment.

Mark: Let's discuss this offline, I don't see the need for this. It's really smart to have deported the API to WebSockets, so as to be able to reuse existing browser code without change.
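
A minimal sketch of how an application might frame a call to a receiver API exposed over a WebSocket. It assumes a JSON-RPC 2.0 style envelope; the `makeRequestBuilder` helper and the `example.*` method names are hypothetical and are not taken from the ATSC specifications.

```typescript
// JSON-RPC 2.0 style request envelope (assumed message format).
interface RpcRequest {
  jsonrpc: "2.0";
  method: string;
  params?: Record<string, unknown>;
  id: number;
}

// Returns a function that builds requests with increasing ids, so that
// responses arriving on the socket can be matched to their requests.
function makeRequestBuilder(): (
  method: string,
  params?: Record<string, unknown>,
) => RpcRequest {
  let nextId = 1;
  return (method, params) => {
    const request: RpcRequest = { jsonrpc: "2.0", method, id: nextId };
    nextId += 1;
    if (params !== undefined) {
      request.params = params;
    }
    return request;
  };
}

// In a browser runtime the message would be serialized and sent over an
// already open WebSocket, for example:
//   socket.send(JSON.stringify(build("example.getServiceInfo")));
const build = makeRequestBuilder();
const exampleRequest = build("example.getServiceInfo"); // hypothetical method
console.log(JSON.stringify(exampleRequest));
```

Because the application only needs a standard WebSocket and JSON, nothing in the browser engine itself has to change to expose receiver-specific features this way.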

[Breaking until 11:00am]

<cpn> scribenick: cpn

will: I'll describe the WAVE project. it's relevant here

... tackling 3 problems: content diversity, different formats

... device playback, fragmentation across environments in devices

... problems with memory, cpu, HDR support

... there's no reference platform for the CE industry

... CTA is evolution of CEA

... task force: how can we package content to play across devices?

... HTML5 reference platform

... have 64 or so members, has Apple, Google, MS, lots of members in the room

... I chair the technical WG

... mav and john luther chair the HTML TF

... we welcome increased global participation

... WAVE is an HTML5-first organisation. we also favour MPEG CMAF

... we see these as the best roads forward for interop

... the content spec TF, WAVE is driving CMAF forward

... cyril is the co-chair

... CMAF has presentation profiles to describe aggregated audio, video, text codecs

... some profiles don't reference MPEG codecs, eg, from Dolby

... media and presentation profiles to bring these in, defined in a content spec

... there's an approval process to get in

... profiles around HEVC

... the HTML5 API TF, we mustn't do work outside of W3C

... what's the core set of components to go into a TV?

... WAVE is trying to be inclusive and relevant going forward

... we want to write apps once and run across lots of devices

... help device vendors by giving tests

... HTML5 TF publishes 3 documents through the W3C CG

... we ask for your eyes on this document: the Web Media APIs 2017

... in 2018 we'll revise it. the date is a proxy for the feature set

... also, developer guidelines, and a porting guide

... we want to provide guidance there

... please take a look at the github repos and feedback comments there

... there are lots of challenges: ad splicing, regional profiles - 50/60Hz, memory, long term playback

... there are practical but undocumented requirements for devices, and we create tests for them

... so they're shared across vendors

... there's work now on capability discovery

... there's an API proposal from google

cyril: i'm editing a document for MPEG i can share

will: we're also creating tests. we're using W3C tests and improving them, not doing our own

... the biggest gap is with device capabilities

... there isn't a public test set, companies doing their own

... everything we do is reasonably public, just need to be a CTA member

... we encourage you to join

mav: you can join the Web Media API CG from the W3C side

igarashi: about the media capabilities api, what kinds of capabilities?

will: the API is public. it's a more involved way to query a device for encode and decode capabilities

... it's not yet on standards track

... we can help define it

... existing solution is canPlayType with mime types
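
A minimal sketch of the existing `canPlayType` approach mentioned above. The probe is passed in as a parameter so the sketch also runs outside a browser; `mimeWithCodecs` and `playableTypes` are hypothetical helper names, and the codec strings are illustrative.

```typescript
// The three values HTMLMediaElement.canPlayType() can return.
type CanPlayResult = "" | "maybe" | "probably";
type Probe = (mimeType: string) => CanPlayResult;

// Build a MIME type string with a codecs parameter.
function mimeWithCodecs(container: string, codecs: string[]): string {
  return `${container}; codecs="${codecs.join(", ")}"`;
}

// Keep only the candidates for which the probe does not answer "".
function playableTypes(candidates: string[], probe: Probe): string[] {
  return candidates.filter((mimeType) => probe(mimeType) !== "");
}

// In a browser, the probe could wrap a media element:
//   const video = document.createElement("video");
//   const probe: Probe = (t) => video.canPlayType(t);
const candidates = [
  mimeWithCodecs("video/mp4", ["avc1.42E01E", "mp4a.40.2"]),
  mimeWithCodecs("video/webm", ["vp9", "opus"]),
];
console.log(candidates);
```

The answer is only a coarse hint ("maybe" or "probably") about a container and codec string, which is why a richer capability query is being discussed.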

andreas: does the main work happen in the CG?

will: yes, it started from the TF, but the work does happen in the CG

andreas: does the TF meet separately from the CG?

mav: CTA and W3C are talking about making an agreement to publish the specs from both groups

... the 3 specs live in the w3c github, all issues are in the github repo

... there's a CG mailing list, and a CTA list

... most of the discussion is in the CTA list

... editors calls are W3C, main calls are CTA

... you need to be in CTA to join the calls

francois: i shared a document on the mailing list, i want to ask if the IG wants to adopt this, and have a discussion on future topics of interest

... there are many technologies defining the media platform. a good number at w3c. ideas in CGs

... the relationship between technologies isn't explained

... from a media company point of view: what are the technologies in the space

... intended for non w3c members as well as members

... some requirements for this list: it needs to be appealing, highlight what we do and what we could be doing

... needs to be kept up to date over time

... some approaches: write use cases and requirements - detailed but takes a long time

... wiki pages can be just links, not sexy enough

... issue tracker doesn't give an overview

... we've published a mobile roadmap in 2011, maintained over the years, very detailed

... was hard to maintain. we had EU funding

... we wanted something easier to maintain, a generic framework

... it led to the current document for media technologies

... something simple that describes everything. it's a draft

... organises around the media pipeline

... not a perfect structure, but it seems to work

... client or server side processing

... distribution could be broadcast or p2p or network

... it's one way of presenting things

cyril: what do you mean by control?

francois: it's for controlling playback rather than the encoding

cyril: maybe control is too broad a term

francois: we divide each part into sections: well deployed (should match the web media apis spec)

... there's work in progress, exploratory work in CGs

... and gaps not covered by ongoing work

... we also include discontinued features, sometimes issues come back

... eg, network service discovery. stopped for security and privacy reasons

... the document should be easy to maintain

... it's i18n friendly

... relationship with the IG is important

... right now it's a document i'm working on in the team. we have limited resources

... it has no official standing, it's my interpretation, not representing a consensus

... we need to improve the code behind it

... there are other roadmaps: mobile, publishing, security

... about the IG - this is the steering committee for media discussion at W3C

... the IG needs to track and investigate gaps

... it's in scope by charter

... should the IG adopt this document, to identify new topics

... should be a living document for the IG. we'd use and update during discussions

... the content can change based on IG feedback, but the design is marcomm's area

... not intended to be normative

... i'll continue to be the main editor, i welcome help or if someone else wants to lead

... i have questions: is the scope correct?

... i got feedback about codecs, web developers need this

... gaps, timelines

... looking at media rendering for example

... the well-deployed list includes <audio> and <video>, web audio api, TTML, media fragments

... tables are automatically generated, fetches the maturity level from our database. it uses caniuse and browser status pages

... for specs in progress, there's presentation api, audio output devices api, color on the web - css media queries

... also css colors level 4 and initial discussions on canvas

cyril: how easy is it to contribute?

francois: it's in github, we have info there on how to do that

... it's regular HTML but with specific data attributes

... links to a JSON file with details. webvtt example: link to spec and caniuse

... i'd like contributions to the HTML and i can maintain the code
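
A minimal sketch of the kind of lookup described above, where an identifier carried in the HTML resolves to an entry in a JSON file. The `FeatureEntry` shape, its field names, and the `describeFeature` helper are assumptions for illustration and do not reflect the actual roadmap code.

```typescript
// Hypothetical shape of one entry in the JSON file.
interface FeatureEntry {
  specUrl: string;
  caniuseId?: string; // optional: not every feature is tracked by caniuse
}

// Illustrative data, keyed by the id an HTML data attribute would carry.
const features: Record<string, FeatureEntry> = {
  webvtt: { specUrl: "https://www.w3.org/TR/webvtt1/", caniuseId: "webvtt" },
};

// Resolve an id to a one-line summary that a generated table row could use.
function describeFeature(id: string): string {
  const entry = features[id];
  if (entry === undefined) {
    throw new Error(`unknown feature id: ${id}`);
  }
  const support =
    entry.caniuseId !== undefined
      ? `https://caniuse.com/${entry.caniuseId}`
      : "no implementation data";
  return `${id} | ${entry.specUrl} | ${support}`;
}

console.log(describeFeature("webvtt"));
```

Keeping the per-feature details in data means contributors edit the HTML prose and JSON entries, while the table generation code stays with the maintainer.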

nigel: does it use caniuse for everything?

francois: no, also the browsers' own status pages

... caniuse says web audio is used everywhere, but in practice it's behind a flag

... the sources can conflict

... the difficulty is how to present this information

nigel: is there a way to extend beyond browsers, other devices?

francois: would be good to list, done manually at the moment. example: polyfills for IMSC

andreas: agree with nigel, don't just limit to browsers. other implementations make use of the technologies

francois: the IG could contribute to that

... but where to find the information

yamakami: information from manufacturers

andreas: also what is supported by hbbtv for example

francois: this is useful information, what hbbtv or atsc supports, it's doable

yamakami: could use RDF?

francois: it's plain JSON, not even JSON-LD at the moment

... we find this document useful for talking to people in the industry

... it helps document the vision for the web platform

alex: i think it's super useful, the group should adopt it. it will be a challenge to keep up to date

... what could be useful is to give people an idea of what the CGs and WGs are

... maybe it's a separate structure. intro to the IG, and the groups

yamakami: about the content section, there's exploratory work - features but not on spec track

... also, discontinued features, things obsoleted in a v2 spec from v1

... also: dormant or suspended features

... it's a terminology question

francois: i'm open to better suggestions

... if there's a general support to adopt in the IG, next steps would be for people to review and comment

... there's a balance between detailed and generic

... we can discuss the scope

... webvr not discussed in the IG

nigel: it's a good document, i like it

will: we've used it, it's useful

colin: show of hands for who's familiar with cloud browser

... i'll start with an overview

... what we usually have is a local browser that gets content from the cloud

... but we move the browser into the cloud

... but there's no display there, so we have a client display

... the client is contextless, it has no notion of resources

<inserted> scribe: nigel

scribe+ nigel

colin: [web features] Web is feature rich, good thing

... Some problems with resource limited devices.

... Especially in media and entertainment

... devices usually low cost, long lifespan

... so not always capable of doing all the features

... so this is a problem because there's a lot of fragmentation, when new features

... are only supported by new devices. Not a coherent experience.

... Things like WAVE initiative try to solve this problem.

... I don't see this in the near future.

... Fragmentation makes it difficult for design and development, and even harder

... to maintain those devices.

... What we see in the TF is that the leading content providers are switching to another

... approach. They don't use profiles but are using a specific web browser rather than

... a general purpose one.

... It is optimised for a single application.

... Ensures the same experience on every device.

... For example limited HTML subset, or javascript only browser.

<MarkVickers> Are there slides? None on WebEx...

colin: or proprietary based on web standards but not the normal HTML5 standards.

... [not a durable solution]

... Moving away from web principles.

... Also there may only be a few devices, so service provider has to choose which kinds of

... applications to deploy.

... The experience between those browsers is not coherent, for example accessibility

... settings may be different on each browser.

... Much more complex to author - have to target different languages.

... All kinds of problems with this approach.

... [Cloud browser solution]

will_law: How do you manage experience with latency etc?

... Have to throw quality away to get latency. Do you see a large media and entertainment

... applicability?

colin: Two approaches - one is to terminate video in the cloud and send to the device,

... helpful for legacy devices with limited codec support.

... Also double stream approach where UI is on the cloud and the video content is sent

... to the client by another route. That's how you preserve the quality of the media.

will_law: What's the benefit of the dual approach - is the UI component that much of an

... overhead that you wouldn't resort to doing that on the client too?

colin: What we promise with this solution is that the cloud browser supports all the features

... whereas on the client you have to rely on the client supporting all those features.

will_law: How would you support DRM and HDR on the client?

colin: It depends on the infrastructure. We haven't defined that in this task force. It would

... be vendor specific. It could be that the client would handle DRM. There could be a

... conditional access system on the cloud too.

... The cloud browser solution tries to solve the same thing - coherent experience over

... all the devices, and enables web standards for legacy devices.

... And deals with capabilities, like accessibility properties, having a single setting for the

... cloud browser.

... Also independent of the infrastructure - typically hard to supply web standards to

... broadcast. Only uses the last mile for sending to the client. Also ensures a coherent

... experience across infrastructures.

... Also always evergreen, much harder to make updates on client devices.

nigel: Isn't the cloud browser requirement going away with WAVE, ATSC etc?

colin: Could be, but I think we will still need both. For example which version of HbbTV

... will a device support? I see them as complementary.

... [Recap TPAC 2016]

... First phase, defined the architecture and published as an IG Note.

... Goal of second phase was to refine the architecture use cases and requirements,

... and compare with comparable technologies.

... This is done.

-> https://www.w3.org/2011/webtv/wiki/images/f/f4/TPAC2017_Cloud-Browser-report.pdf Cloud browser report slides

colin: [Refinements architecture]

... Don't want to explain the architecture here today.

... Didn't change much since last year.

... Explained a bit more what the relation between client device and cloud browser actually is.

... For example a concrete example of what is the resolution of an application - the client

... device screen or the virtual device?

... We made it more clear that it is the virtual device.

... The conceptual model is that the application is the virtual device. You are dependent

... on both the client device and also the transport and the capabilities of the orchestration.

... [Use cases]

... We divided this into two - communication and execution.

... Communication is comms between orchestration and client device, like signaling.

... Likely to become a new standard if we want to progress.

... Execution maybe more interesting - explains the differences between cloud and local browsers.

... The goal is the execution is the same on a local and cloud browser, but obviously there

... are some differences, like location - it should be the client device but it isn't formalised.

... There are also constraints from a cloud browser: for example, APIs that use synchronous calls will not work remotely.

... Lastly the cloud browser adds functionality, for example supplementing decoding codecs

... Application developers want to know which codecs are natively decoded and which

... are decoded on the cloud. All those use cases will probably complement current standards.

... [phase 3]

... Objectives: Continue with TF, refine use cases and requirements.

... Use cases have been found to be beneficial.

... Open questions: We could create a WG or a CG for the communication use cases, or

... work together with existing WGs but we're not sure practically how to do that.

... Important to create awareness.

... We don't think it's niche anymore - millions of users experience this technology.

... Small group of TF participants - only makes sense to work on such topics if there are

... a lot of participants. Still working on this.

... Questions or comments?

igarashi: Have you done gap analysis with WebRTC?

colin: No but that is a good use case to add and investigate in phase 3.

igarashi: Why not have a liaison with WebRTC WG?

colin: It wasn't on our radar actually, this specific tech in a cloud browser.

... Need to investigate.

andreas_tai: Why is this TF part of this IG, since it is not restricted to media content?

colin: True, but it's particularly a problem in the Media and Entertainment space.

... For example phones and laptops are already powerful and also are changed more

... frequently, so there's less need for cloud browser.

andreas_tai: Is cloud browser also used outside media? Should we look for participation

... outside this interest group?

colin: It could be very broad.

andreas_tai: To get more participation.

tidoust: Would it be useful to draft a charter for a CG based on the TF charter?

... Just a thought, if spawning a new group is the thing to do right now.

... Then a consequence is it will not only be viewed as a media thing, and it could attract

... others.

colin: Makes sense.

andreas_tai: Could be a way.

<tidoust> scribenick: tidoust

-> https://lists.w3.org/Archives/Public/www-archive/2017Nov/att-0005/GGIE_W3C_TPAC_2017.pdf GGIE update

Glenn: Update about what came out of the GGIE TF. We were chartered in 2015 to look at technologies from glass to glass.

... In the end, we split the world into the creation side, the core part around distribution, and then smart edges.

... Sometimes, systems require a very smart core. Usually on the Internet, it's more smart edge and dumb cores.

... We focused around 5 topics: content identification, metadata, scalability (example of the summer olympics with millions of hours of video being streamed), user identity and privacy that you cannot forget.

... We produced 33 use cases covering all aspects of the ecosystem.

... They are still very relevant, documented on the Wiki, I encourage you to look at them.

... Key use cases included the identification of media assets, key to enable other use cases, media & network collaboration, and media addressing & routing, to enable smartness of choosing the right local cache when available.

... I'm going to show you some working code based on that.

... At the end of 2016, we finished our work at W3C. We started to explore outside of W3C the outcomes at the network level.

... We realized that, under the really large set of IPv6 addresses, you can now reference media segments by IPv6, which was not possible with IPv4.

... The network can now be part of the healing.

... The segments under DASH now become IP addresses. 5 physical copies of one packaging, they all have the same address.

... Moving forward, there was a need to map EPGs to these addresses. So we came up with MARS (Maps Media Identifiers to/from Media Encoding Networks)

... "Spiderman French edition", which can be mapped to specific MPEG-DASH based package.

... [Showing the GGIE Video media model]

... Showing demo with 3 DASH players, pulling from 3 caches, but instead of using names from these files, the manifest simply contains addresses

... Caches are advertising through BGP what content they have.

... This makes it possible to pull out the cache from the network at any time, with automatic recovery, because the network realizes that the link no longer works and switches to another cache.

... Note the guy on the top uses its home cache. We did not change the manifest. The player, without even knowing that it's doing it, switched to the home cache.

... The idea behind this technology was that there could be collaboration between the network and content.

... The packaged media addressing, working together with the network layers. All of this was out of the box. No hacks.

... We actually took extra steps and defined these technologies in IETF drafts.

... It turned out that Cisco was on the same path.

... This crazy idea from the GGIE TF in W3C turned out to meet others' interests. We're continuing development on this.

... We have purposely done this in the open so that it's available for everyone to play and extend.

... Note we've done the maths, you need 20 of the 128 bits of IPv6 addresses to index things the size of Youtube.

... You organize things intelligently. Aggregating these libraries let you not run out of addresses.

... For 100 years to come.

... Won't make a dent on IPv6 addresses.

... Using this system, you cut down your cost for advertisements. Your home network does not need to have all the advertisements for all possible movies, only for those you need to watch.

... We're natively done with the network level. Same as deploying hundreds of millions of IoT devices.

Will: You mention there's a lot of complexity in the manifest files nowadays. Aren't you shifting the complexity to further down the DNS level where QoS will be harder to assess?

Glenn: No. BGP advertises the available caches.

... [further discussion on the ins and outs of the technology]

Cyril: How is it related to CCN?

Glenn: The big difference is that this is all done with existing technologies. No need for a new network architecture.

... We work with existing DASH players, existing BGP, etc.

... Nothing new.

... [going into details of the differences]

Francois: Back to W3C, is there anything for the GGIE TF to keep doing?

Glenn: We started by looking at more than just the Web.

... If it turns out that there is interest in going forward and revisit this work, that's great, we'll do it.

Sangwhan: Not necessarily speaking on behalf of the TAG here, but it seems to me that this is restricting a number of things here.

... I understand the scalability issues and all that.

... Also it seems to be more IETF and MPEG stuff, and not W3C stuff.

Glenn: We were given specific provisions to go beyond W3C in our charter.

Sangwhan: MSE gives the application layer control over things. This gives up control to the network layer. There is nothing that the application can do about it.

Mark: This got discussed. The presentation today was more about reporting what happened to the GGIE TF.

[Adjourned for lunch, back at 2:00pm]

<gmandyam> Please note the link for the ATSC Companion Device specification: https://www.atsc.org/wp-content/uploads/2017/04/A338-2017-Companion-Device-1.pdf (relevant to meeting with 2nd Screen WG)

<anssik> proposed agenda for the joint session:

<anssik> - introduction to Second Screen WG/CG

<anssik> - follow-up on Second Screen & M&E IG Oct 5 2017 joint call issues

<anssik> -- ATSC evaluation, Tatsuya Igarashi, Giridhar Mandyam

<anssik> -- HbbTV evaluation, Tatsuya Igarashi, Chris Needham

<anssik> -- V2 API requirements, Working Group charter scope

<anssik> - revisit Presentation API HbbTV issue

<anssik> https://www.w3.org/wiki/Second_Screen/Meetings/Nov_2017_F2F#Agenda

Anssi: Second Screen WG is responsible for two APIs.

... The Presentation API to display Web content on a second screen and control that content.

... The Remote Playback API is a subset of that to display media content on a second screen and control the playback.

... The Second Screen CG is currently developing the Open Screen Protocol that could be used underneath the APIs. Still work in progress.

... We can give you some demos of these technologies in action, just get in touch with Mark!

Chris: For content, the TV industry has defined their own solutions for companion screens, discovery and control.

... HbbTV has such a mechanism for instance.

... The Presentation API and Remote Playback API are very interesting from a TV industry point of view.

... There is a question around how these things can interoperate.

... How can these things be deployed? Do we want only one set of APIs that can support all use cases from all fronts? At the moment, some requirements on the protocols are quite different, and some devices will soon be deployed with HbbTV support.

... Should the APIs be adjusted to interoperate with existing protocols? Or should we design a new set of protocols? What is the migration path?

... This is probably looking a few years ahead.

Anssi: How widely is HbbTV deployed in devices that are shipping? How many applications support HbbTV?

Matt: HbbTV is primarily a European body at the moment. Existing deployed versions are 1.1 and 1.5, and these versions do not include companion screen support.

... Germany, France, Spain and some parts of the UK.

... HbbTV 2.0, which includes these functionalities, is not yet deployed but there is a clear plan to deploy HbbTV 2.0 devices next year with millions of devices planned.

... The number of applications is relatively low since they are broadcast-related applications. In total, the number of applications out there is perhaps a hundred or fewer.

Anssi: Mark, I think you evaluated HbbTV and had a few questions.

MarkF: It looks like there are two models in HbbTV. One is having the TV launch content on the companion device. And the other is the reverse.

... Does that mean both sets of devices are performing discovery of each other?

Chris: Yes. I think that the protocols from the HbbTV device to the companion screen are not specified.

... They are from companion device to the HbbTV device.

Louay: How the TV contacts the companion device is not specified

Anssi: Moving the discussion to a higher level to discuss use cases.

Chris: I have a presentation that explains the HbbTV situation

... We all have a pretty good understanding of what HbbTV is and what it is for.

... The only thing that I'll point out here is that the browser environment exposes some TV specific functionalities, such as access to the broadcast tuner, PVR and so on.

... This is particularly relevant, because we want to enable these features for applications we launch on TV.

... The discovery and interaction between the TV and the companion device can work in two ways as noted earlier.

... The TV can launch a native companion app on devices. Or the companion device can discover the TV, with a well-specified app-to-app communication mechanism.

... Another feature that is of interest is synchronization. We're interested in maintaining close synchronization between content on the two devices.

... We can synchronize playback between two HbbTV terminals, or between an HbbTV terminal and a companion device.

... Also between separately delivered streams.

... Some example use cases: A BBC app running on a device discovers and launches our interactive TV application on an HbbTV device.

Igarashi: The iPlayer is running on the TV?

... Defined in HbbTV how to launch?

Chris: Yes.

Anssi: This is the same use case as the Presentation API, right?

Chris: Yes.

... The communication channel goes through a Websocket server that runs on the TV device.

... Other use cases, related to synchronization: we can provide audio description, different camera angles. One of the aims is to provide a close level of synchronization to playback content on both screens simultaneously with minimum delay.

... Also, transferring playback from the main TV screen to a companion device ("take-away viewing").

... Then rejoin seamlessly.

Anssi: Synchronization is a challenge. Goes beyond Remote Playback API.

Chris: Yes. But I guess that's an application issue.

... You can look at currentTime.
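
As an illustration of handling synchronization at the application level by looking at currentTime, here is a minimal TypeScript sketch. It assumes the other device periodically reports its playback position over the communication channel. The function name, the report format and the thresholds (40 ms and 1 s) are hypothetical choices made for this example and do not come from any specification discussed here.

```typescript
// Decision produced by comparing the local playback position with a
// position reported by the other device.
interface SyncDecision {
  action: "none" | "adjust-rate" | "seek";
  playbackRate: number;
  seekTo?: number;
}

// localTime: currentTime of the local media element, in seconds.
// remoteTime: currentTime reported by the other device, in seconds.
// elapsedSinceReport: estimated time since the report was made, in seconds.
// The thresholds below are illustrative assumptions.
function decideSync(
  localTime: number,
  remoteTime: number,
  elapsedSinceReport: number
): SyncDecision {
  // Where the remote playback is expected to be by now.
  const target = remoteTime + elapsedSinceReport;
  const drift = localTime - target;

  if (Math.abs(drift) < 0.04) {
    // Close enough: leave playback untouched.
    return { action: "none", playbackRate: 1 };
  }
  if (Math.abs(drift) > 1) {
    // Too far apart to catch up smoothly: jump to the expected position.
    return { action: "seek", playbackRate: 1, seekTo: target };
  }
  // Small drift: slow down if ahead, speed up if behind.
  return { action: "adjust-rate", playbackRate: drift > 0 ? 0.95 : 1.05 };
}
```

An application would apply the result to its media element, for instance by setting playbackRate or currentTime. The precision reachable this way depends on how well the message delay can be estimated.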

Anssi: What are the use cases where you play back content synchronously on screens?

Chris: Different camera angles. Or different audio tracks, one per viewer.

... HbbTV applications can be launched from different starting points. From the home screen. By AIT signalling or by the companion device.

... Then there are two modes for applications: broadcast-related (more rights) and broadcast-independent.

... Broadcast-related can change the channel, etc.

... Discovery of HbbTV terminal uses DIAL.

Igarashi: This is different from what you mentioned previously, right?

Chris: No, I think it's just the way that we drew it.

[Some discussion on the Application-URL response returned by the HbbTV device in the DIAL workflow]

Will: Wondering about DIAL, which protocol is used?

Francois: SSDP, then HTTP.
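
To make the "SSDP, then HTTP" answer more concrete, here is a minimal TypeScript sketch of the first step: building the SSDP M-SEARCH message that a client multicasts to find DIAL servers, and reading the LOCATION header from a reply. The multicast address and the DIAL search target are the well-known values. The function names and the MX value are assumptions made for this example, and the sketch only builds and parses text, it does not open any socket.

```typescript
// Multicast address and port used by SSDP discovery.
const SSDP_HOST = "239.255.255.250:1900";
// Search target defined by the DIAL specification.
const DIAL_SEARCH_TARGET = "urn:dial-multiscreen-org:service:dial:1";

// Build the text of an SSDP M-SEARCH request for DIAL servers.
// maxWaitSeconds (the MX header) is an illustrative parameter.
function buildDialSearchRequest(maxWaitSeconds: number): string {
  const lines = [
    "M-SEARCH * HTTP/1.1",
    `HOST: ${SSDP_HOST}`,
    'MAN: "ssdp:discover"',
    `MX: ${maxWaitSeconds}`,
    `ST: ${DIAL_SEARCH_TARGET}`,
    "",
    "",
  ];
  // Header lines end with CRLF; an empty line terminates the request.
  return lines.join("\r\n");
}

// Extract a header value (case-insensitive name) from an SSDP or HTTP response.
function getHeader(response: string, name: string): string | undefined {
  for (const line of response.split("\r\n")) {
    const idx = line.indexOf(":");
    if (idx > 0 && line.slice(0, idx).trim().toLowerCase() === name.toLowerCase()) {
      return line.slice(idx + 1).trim();
    }
  }
  return undefined;
}
```

The LOCATION value from the SSDP reply is then fetched over HTTP, and the Application-URL header of that HTTP response gives the base address used to launch or query applications on the device.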

<sangwhan> DIAL spec: http://www.dial-multiscreen.org/dial-protocol-specification

Will: So any application can poll any local IP address and discover TV, right?

<sangwhan> And an implementation: https://github.com/Netflix/dial-reference

Chris: No, discovery is in native land.

Will: Malicious applications could ping all IP addresses until they find the available endpoints.

Chris: Right. That's a good point to take into account, especially since we're looking at increasing security.

... Moving on with launching an HbbTV application.

... You're typically putting the user in the loop on whether or not to launch an application.

... The app to app communication uses an HbbTV specific API to return the address of the Websocket server running on the HbbTV device.

... Establishing the communication channel is dependent on the application on the TV initiating the communication channel.

... In the Presentation API, the communication channel will already be available on the receiving page. With HbbTV, the app on the TV device needs to make that initialization.

... For app-to-app communication, we have an app endpoint suffix.

... It's all Websockets in the clear.

Igarashi: Talking about security, it is allowed that TV manufacturers implement some restrictions such as pre-authentication of companion screens.

Chris: It's allowed, it's true.

... Getting on some of the details of mapping to Presentation API.

... If we can pass some additional parameters to PresentationRequest, we can use them in the receiving side to determine whether we grant the app access to broadcast-related rights.

... Typically, the orgId and appId.

... Possibly a more generic view is: is there a need for a same-origin policy for mixed broadcast and Web content to determine whether a given Web content is allowed access to broadcast content?

... That could be a separate approach.

Anssi: Could you imagine passing the parameters within the URL?

Chris: It would work, but you would have to reconstruct the URL.

Louay: That could be the URL scheme as well.

MarkF: What we did was to use a separate scheme, indeed. Typically a cast:// URL that describes how cast parameters get passed.

Anssi: That would mean no API level change.
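
The option of passing the parameters within the URL, and the need to reconstruct the URL on the receiving side, can be sketched as follows in TypeScript. This is only an illustration: the query parameter names orgId and appId reuse the identifiers mentioned above, but carrying them as query parameters, the function names and the example URL are assumptions. Fragment identifiers are not handled in this sketch.

```typescript
// Identifiers that the receiving side could use to decide on
// broadcast-related rights.
interface LaunchParams {
  orgId: string;
  appId: string;
}

// Controlling side: append the identifiers to the URL to present.
function addLaunchParams(appUrl: string, p: LaunchParams): string {
  const sep = appUrl.indexOf("?") === -1 ? "?" : "&";
  return `${appUrl}${sep}orgId=${encodeURIComponent(p.orgId)}` +
    `&appId=${encodeURIComponent(p.appId)}`;
}

// Receiving side: extract the identifiers and reconstruct the original URL.
function splitLaunchParams(
  url: string
): { appUrl: string; params: Partial<LaunchParams> } {
  const q = url.indexOf("?");
  if (q === -1) {
    return { appUrl: url, params: {} };
  }
  const base = url.slice(0, q);
  const kept: string[] = [];
  const params: Partial<LaunchParams> = {};
  for (const pair of url.slice(q + 1).split("&")) {
    const eq = pair.indexOf("=");
    const key = eq === -1 ? pair : pair.slice(0, eq);
    const value = eq === -1 ? "" : decodeURIComponent(pair.slice(eq + 1));
    if (key === "orgId") {
      params.orgId = value;
    } else if (key === "appId") {
      params.appId = value;
    } else if (pair.length > 0) {
      // Unrelated parameters stay part of the application URL.
      kept.push(pair);
    }
  }
  const appUrl = kept.length > 0 ? `${base}?${kept.join("&")}` : base;
  return { appUrl, params };
}
```

The URL returned by addLaunchParams would be the one handed to PresentationRequest, which is why this option needs no API level change.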

MarkF: Mixed broadcast and web content. Is this both HTML content?

Chris: My thought was not that well-formed around this. Access to broadcast media that is coming from another provider should be forbidden.

<matt_h> An HbbTV app launch is DIAL launching "hbbtv" and providing these additional parameters as an XML document in the POST body. So maybe the question is how to provide a POST body for dial:// ?

Chris: App endpoint. Within HbbTV, you can have different app endpoints. Maybe the ability to send messages to named channels would be useful?

... No need to go into synchronization details. Not necessarily worth taking into consideration if done on the side.

... In HbbTV, DVB-CSS defines the mechanism.

... At the moment, the pointer to Timing Object is just to mention related work. That needs to be re-evaluated. If W3C wants to define a synchronization mechanism across devices, then maybe the Open Screen Protocol could have some primitives, and the Timing Object would be the API level.

Igarashi: I'm working in the HTTPS in Local Network Community Group. We have different use cases.

<igarashi> https://github.com/httpslocal/usecases

Igarashi: First use case is the Presentation API use case as far as I can tell. The user loads a page on his laptop. It uses the Presentation API to load Web content on the projector and control that content afterwards.

... On TV, a different use case is when the application that gets launched is the broadcaster's Web application, which could be streamed over broadcast.

... The application is already running on the TV.

... The current API does not cover this use case.

... I'd like to discuss whether the Presentation API could cover this possibility.

MarkF: Has the user already loaded a Web application from the broadcaster?

Igarashi: Yes, automatically launched when the user switches to the right channel.

MarkF: The API does not have a way to easily discover a presentation that has already been launched on a device. That is really up to the implementation.

... We have a way to connect an application to a running application, but it is through the browser UI.

... Two ways it could work: notification from the server and use of reconnect. The other way, the user hits a button, and the page has a default presentation request.

Giri: Could DIAL be used for this?

Sangwhan: Wouldn't the TV not be connected to the Internet as well?

Igarashi: It could. The application could actually be loaded from the Internet. Both use cases are possible.

Giri: DIAL should be applicable here. The Presentation API should be independent from that. Is that correct?

Igarashi: DIAL is a discovery mechanism.

... The Presentation API has no way to specify "connect to the broadcaster's application"

MarkF: What is not well-defined is how you map the application and the presentation running on the TV device.

Sangwhan: I would suggest you raise an issue on the Presentation API issue tracker.

Giri: Companion device in ATSC, I posted a link earlier on IRC.

... ATSC uses DASH content. It's pretty straightforward from the companion content to retrieve the content provided it is made available.

... That content is somewhat retrieved on a primary device. That content could be made available on the local network. Is that something that the Presentation API would support?

... Or is it out of scope?

MarkF: I will have to have a little bit more concreteness about where this content is going to be made available.

... That sounds like something that could be in scope. Please raise an issue on our issue tracker.

Giri: Yes, I can do that as an individual. But I would recommend sending a liaison to ATSC to get feedback from other companies as well.

MarkF: Sure.

Igarashi: Back to my use case. Discovery phase might be slightly different.

MarkF: Right. We don't have an API to discover presentations that are already running.

<gmandyam> More background on my comment: ATSC CD spec allows for Companion Device to discover content URL's that correspond to programming. These addresses can be used by the CD to access streaming media.

MarkF: On the Open Screen Protocol, this work began out of feedback that there was not a good story on interoperability between devices. We wanted to see if we could come up with a lower level protocol that browsers and devices could implement to improve interoperability.

<gmandyam> For segmented (e.g DASH) content, once a primary device downloads the media from the broadcast it can make it available over the local network.

MarkF: We started to look at functional and non functional requirements.

... Essentially, the requirements come from the API itself.

... We also defined a number of non functional requirements such as the fact that the protocol should be implementable on a range of hardware, possibly low-end devices.

... Some requirements on privacy and security, to avoid leaking anything.

... User experience, we want discovery to work quickly, with low latency messaging.

... Finally, extensibility to extend the protocol to other use cases.

... For discovery, we have proposals to use SSDP, mDNS / DNS-SD. For transport, QUIC, WebRTC Data Channel.

Giri: Why only UDP? Why not websocket?

MarkF: No one submitted a proposal.

... For each proposal, we have a specific list of requirements. We will discuss tomorrow how well we think our proposals perform.

... I have a testing environment in Seattle. I hope to be able to run a series of experiments there.

... [going through proposal details]

<gmandyam> Slight correction to what I said: why only UDP? QUIC and WebRTC are UDP based.

<gmandyam> WS for local connection is comparably performant (with appropriate TCP configuration).

<anssik> Open Screen Protocol

Anssi: We will meet tomorrow to discuss the Open Screen Protocol.

[Afternoon break]

<cpn> scribe: cpn

<scribe> scribenick: cpn

nigel: i'll update you on what we've been doing in TTWG since last TPAC and next challenges

... cyril, andreas, pierre are here from the WG

... TTML2 is in WD, we got feedback through wide review

... this is TTML1 + more styling, support for Japanese language, audio, profiles

... also mappings to CSS attributes and how to deal with HDR using either PQ or HLG method

... IMSC 1.0.1 has two optional features

... we're waiting for another implementation to satisfy exit criteria

... goal is to make a profile of TTML2 to support more requirements globally

... we'll publish the requirements as a WG Note

... the goal for WebVTT is to transition to CR

... i'm seeing industry convergence on IMSC. it's used in MPEG CMAF, also in DVB for MPEG-TS

igarashi: what are the new features in TTML

pierre: japanese language, ruby support, HDR, and more

nigel: it's used in various parts of the chain, from authoring, archive, distribution, playback

... in operation, the BBC generates EBU TTD, netflix are using it, also the german broadcasters

... native playback support in browsers is absent

... there's the IMSC JS polyfill thanks to pierre

pierre: DASH.js also supports, Shaka player supports parts of TTML

... if someone wants to use subtitles on the web, can use IMSC out of the box

nigel: TTML2 has support for audio renderings, could be a live recording or text-to-speech

... audio description (video description in the US)

... BBC has an implementation, but can't share yet

... would like to collaborate

igarashi: what about IMSC?

nigel: audio is out of scope for IMSC

igarashi: does this come from european or american broadcaster requirements?

nigel: it's in the W3C's media accessibility requirements

pierre: who would be the first user do you think?

nigel: hard to say, i know the BBC would want to use it

chris: there'd be interest from education institutions

nigel: hurdles to jump?

... there are some gaps from CSS. ruby is an example

... also formatting for line areas

pierre: there's no native CSS mapping. same for line padding

nigel: those mappings of TTML style attributes to CSS are in the wiki

... another hurdle is more general: when you have sRGB color spaces and you want to display in HLG

... how does it work generally for the web?

... the color CG is meeting tomorrow afternoon, come along if interested

... what's next? moving TTML2 to CR status, updating WD of IMSC 1.1, gathering requirements

... moving WebVTT to CR

... is more work needed on HDR / wide color?
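
To illustrate what a mapping of TTML style attributes to CSS can look like, including the gaps mentioned above, here is a minimal TypeScript sketch. The table is a small hypothetical excerpt written for this example and is not the content of the wiki page. Entries set to null stand for attributes that were reported in this discussion as lacking a direct CSS mapping (ruby, line padding). Values are copied unchanged, which is a simplification since some TTML values have no CSS equivalent.

```typescript
// Illustrative excerpt: TTML style attribute -> CSS property.
// null marks attributes reported as lacking a direct CSS equivalent.
const TTML_TO_CSS: { [attr: string]: string | null } = {
  "tts:color": "color",
  "tts:backgroundColor": "background-color",
  "tts:fontFamily": "font-family",
  "tts:fontSize": "font-size",
  "tts:textAlign": "text-align",
  "tts:ruby": null,
  "ebutts:linePadding": null,
};

// Convert a set of TTML style attributes to a CSS declaration list,
// and report the attributes that could not be mapped.
function toCss(
  styles: { [attr: string]: string }
): { css: string; unmapped: string[] } {
  const declarations: string[] = [];
  const unmapped: string[] = [];
  for (const attr of Object.keys(styles)) {
    const property = TTML_TO_CSS[attr];
    if (property) {
      // Simplification: the value is passed through as-is.
      declarations.push(`${property}: ${styles[attr]}`);
    } else {
      // Either a known gap (null) or an attribute not in the table.
      unmapped.push(attr);
    }
  }
  return { css: declarations.join("; "), unmapped };
}
```

For instance, an input with tts:color set to white and tts:ruby set to container yields the declaration "color: white" and lists tts:ruby as unmapped, which is the kind of gap that would need to be raised with the CSS WG.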

francois: what is happening with WebVTT? is TTML the direction?

nigel: industry is going down the TTML route, but WebVTT has significant usage, maybe targeting a different user group

... there's probably more activity on the TTML specs than WebVTT

andreas: there are some missing things in the Text Track API, eg, identifying the caption format as VTT or TTML

... the IG should push for these formats to be part of the web platform

... another example is CSS, could be used for applications other than web browsers

... some of the requirements of CSS don't satisfy what Timed Text needs to support

... the IG can be useful to push requirements within W3C

<nigel> Mapping of TTML style attributes to CSS properties (and where it can't be done!)

francois: this is in scope for the IG

pierre: there's a lot that's green, which is good

francois: have you already tried bringing this to the CSS WG

nigel: we've invited them to a joint meeting on friday morning

pierre: until recently, subtitles and captions were absent from the web, so CSS didn't need to deal with it

... this document is a good basis for discussion

andreas: one of the things for the IG is to confirm that there's support from the media industry

nigel: devices are part of the web platform, using TTML without HTML and CSS

... we should have the option to support the required styling

cpn: what should the IG be doing more concretely to support?

nigel: we need some way to allow different formats to be supported more cleanly in HTML5

... HTML5 mentions WebVTT and text tracks, but no mention of TTML. it's not obvious what the model is for making that work?

pierre: can we turn this into a resolution that the IG can review and approve?

francois: it can help to volunteer to do the CSS spec work

nigel: it's possible we need to generalise from what we have in TTML to bring it to CSS

... i think CSS WG would want to own the solution

pierre: we can bring resources to do that

andreas: we can draft something to be reviewed by the IG, with a link to the mapping table

<scribe> ACTION: nigel to draft a proposal for the IG to confirm a desire for HTML and CSS to support TTML playback

<tidoust> scribenick: tidoust

Chris: For context, the question there is: what is the best way to take forward ideas at W3C?

... This group does not produce specs per se, but can spawn new groups as needed.

<cwilso> https://goo.gl/kbpQRN

Chris: Two things: 1/ review existing topics on-going in the WICG. 2/ broader relation between the IG and the WICG and how best to collaborate.

ChrisW: Co-chair of the WICG. Also on the AB.

... WICG is not magic. The reason we set up the group is that even joining CGs has a cost, especially if you're a large company.

... The WICG is just an umbrella CG. You can still create your own CG, and it's totally fine, CGs are being created every day.

... All of the browser vendors are in the WICG, so it's easy for them to look at what is happening in the group, no patent review needed.

... The good thing about incubation is that it's easy to fail.

... You don't have to commit as in a Working Group.

... We have a lot of current incubations. Some of them are of interest in the media space:

... canvas-color-space, media session, media capabilities, picture-in-picture, media playback quality, priority hints.

... Some of these have been touched in the last week, some haven't in the last few months.

... It's OK, that's how it's supposed to work.

... We're probably at a point where we're looking at Media Session and wondering how to transition it to Rec-track.

Igarashi: How is the discussion going on with the CG? On GitHub? Mailing-list?

ChrisW: When you want to create a new incubation, we have a discussion forum that you can use to propose the idea. One pager to explain why you think we should work on that.

... Either we can create a repo on GitHub in the WICG space. Or transfer to the WICG space if the repo already exists.

... The development is then almost entirely done on GitHub.

... At some point, when this stabilizes, that's when we start to wonder about transitioning.

... The transitions that happened so far were for people that were already active in a WG.

... Doing new MSE features would probably go here, and then we'll have to determine whether the Web Platform WG can take that on, or whether another group would be needed.

Chris: Are there specific criteria for the kind of topics that can be brought to the WICG?

ChrisW: If you want to bring a feature that should clearly be addressed by some existing group, we would recommend to go that group directly. Apart from that, the rest is basically open.

... Can you get all the contributors to join a CG? That is the question you need to answer. I would not consider doing things on VR outside of the WebVR CG for instance, because the community is already there.

Chris: What do you mean by incubation? We're not implementers in my organization. Are you looking at active implementers?

ChrisW: This is probably the hardest question overall.

... Fundamentally, you need implementors to take your spec otherwise it won't work.

... At the same time, browser vendors tend to pay attention. Getting attention and focus can sometimes be a challenge.

... But putting ideas in a CG where people are looking at is a good way to attract that attention.

... Getting another entity to support a fantastic new idea seems like a good requirement to share a new idea. That's something I use internally to filter new ideas that Google engineers would like to put into standards.

Chris: Some of the examples you mention are from Google. I guess it makes sense for us to get in touch and say "yes, we're interested in that feature".

ChrisW: Yes.

Chris: How does consensus work in a group with so many deliverables?

ChrisW: In CG, the chair is not required to build consensus. It's a good thing as it gives freedom to explore ideas that people disagree with. We can even incubate different solutions for the same idea.

... The WICG chairs' job is to keep the incubation process running: transferring specs in and escalating code of conduct violation issues.

... Our job as WICG chairs is not to build consensus. That's the job of the editing team. At the end of the day, consensus will be sought for ideally, since the goal is to end up in a WG.

Yam: I'm very impressed. It's a very great idea.

... I will educate my company about this CG.

Sangwhan: Hopefully, the expectation is to have people give an explainer with code examples.

ChrisW: If you literally post a three-line request, you probably won't manage to attract people's support.

Sangwhan: The TAG recommends code examples as a way to trap bad design issues and as a way not to overcomplicate the proposal.

[Some discussion about the TAG's recommendations]

Andreas: What is the lifetime of an incubation, and how do you monitor incubations?

ChrisW: We don't really monitor incubation right now, but we're reaching the point where we need to do it.

... The one pattern that I want to avoid is a spec being shipped without some movement towards a WG.

Francois: So best is to contact the editor right now to check on status of a particular idea?

ChrisW: Yes.

... We're talking about unifying some of the implementation data. Chrome status needs to give signals about other implementations. It would be good to consolidate. But that's our interpretation and some browser vendors sometimes do not want to comment.

Igarashi: Can I comment and contribute ideas without joining the CG?

ChrisW: No, by design, you need to join the CG to commit to the CG patent policy.

<sangwhan> For the purpose of this group, if an incubation idea is not getting looked at nudge the username "root" - which is me.

Chris: Thank you very much for coming in!

... It's very possible that we will contribute new ideas and requirements to the WICG, so it's good to understand the mechanism there.

ChrisW: Yes, feel free to ping one of the WICG chairs. We're available 24/7, roughly ;)

Chris: In the interest of time, I propose to skip this topic. Giri and Steve have an interest in it and are no longer around.

... We'll use the mailing-list.

Yam: Can you give us a quick summary of the current status?

Chris: The TV Control WG was drafting a spec. It was felt that there was insufficient implementer support to carry this forward. ATSC was looking at it, but the time scale was not the right one.

... But also, there were significant questions about the design and scope of what the API was trying to do.

... One of the topics for discussion is whether we should restart the work, probably with a much narrower scope.

... My presentation offers a few different ideas.

Francois: One idea could be to run a breakout session on Wednesday?

Chris: Yes, that would be great.

Sangwhan: I'm with the Technical Architecture Group. We're elected and/or appointed.

... We look at the architecture. We also do a number of reviews for new specs and specs that are being incubated.

... Dan Appelquist and Peter Linss chair the group.

... [going through the list of participants in the TAG]

<sangwhan> https://github.com/w3ctag/design-reviews

Sangwhan: Inside the repository, there is an issue tracker, which you can use to request review of a given spec.

... You may request review of an idea you want to bring to the table. We have weekly calls going through these issues.

... If we have questions on your spec idea, we may invite you to our call to discuss.

... Otherwise you can talk with us on GitHub.

... That's basically the main work we do. But we also do this thing called Findings.

... One of the findings that we did is the "Evergreen Web", about TV sets in particular not being updated.

... Any browser that gets connected to the Internet and that is not updated exposes the user to security issues.

... Please keep your browser up-to-date.

... Also we would prefer if APIs developed in this group or related groups would also work in regular desktop browsers.

... Otherwise you'll get a lot of friction if you try to push the API through the Recommendation track.

Chris: The evergreen finding. Is it also about feature completeness?

Sangwhan: Yes, it is about getting everyone on the same page.

... With HbbTV for instance, we would like to have a mechanism for OEMs and developers to have access to new features and security updates.

Yam: To summarize, you're saying that we're very dangerous people.

Sangwhan: No :) I'm here to tell you about our work. Also we're trying to look at all groups to see if there are overlaps between deliverables.

Yam: So part of your work is to monitor ongoing work for consistency.

Sangwhan: Yes.

... Note I'm "root" on Discourse. I've been reviewing most of the hardware APIs.

Chris: Thank you for the update, very useful.

Joe: I would like to give you an update of the Audio WG.

... The core idea behind Web Audio is to enable browsers to work with raw audio material in the same way that Canvas might allow you to manipulate graphics.

... Web Audio is about enabling Web applications to synthesize audio from basic elements, such as filters, reverbs, shaping, etc. These are all available as building blocks.

... Another key thing that Web Audio does is transform audio. The source can be a file, an audio element on the page, or a stream.

... And finally it connects to the larger world of audio devices and audio sources. E.g. soundtrack of a movie.

... We're just entering the Candidate Recommendation phase.
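
[Editorial illustration, not presented in the meeting: a minimal TypeScript sketch of the "building blocks" idea Joe describes, wiring an oscillator through a low-pass filter and a gain node to the output. `createOscillator`, `createBiquadFilter`, `createGain` and `destination` are standard Web Audio API members. The function name `playFilteredTone`, the `...Like` interfaces, and the 800 Hz cutoff and 0.2 gain values are assumptions made for this example only.]

```typescript
// Minimal structural types covering only the Web Audio members used below, so the
// sketch type-checks without DOM typings. They are hypothetical helper types; a
// browser AudioContext is expected to satisfy them.
interface ParamLike { value: number; }
interface NodeLike { connect(destination: NodeLike): unknown; }
interface OscillatorLike extends NodeLike {
  type: string;
  frequency: ParamLike;
  start(when?: number): void;
  stop(when?: number): void;
}
interface FilterLike extends NodeLike { type: string; frequency: ParamLike; }
interface GainLike extends NodeLike { gain: ParamLike; }
interface ContextLike {
  currentTime: number;
  destination: NodeLike;
  createOscillator(): OscillatorLike;
  createBiquadFilter(): FilterLike;
  createGain(): GainLike;
}

// Builds the graph oscillator -> low-pass filter -> gain -> output and plays it
// for the requested duration. Returns the created nodes in graph order.
function playFilteredTone(ctx: ContextLike, frequencyHz: number, seconds: number): NodeLike[] {
  const osc = ctx.createOscillator();
  osc.type = "sawtooth";             // harmonically rich source
  osc.frequency.value = frequencyHz;

  const filter = ctx.createBiquadFilter();
  filter.type = "lowpass";           // removes the upper harmonics
  filter.frequency.value = 800;      // cutoff in Hz (arbitrary example value)

  const gain = ctx.createGain();
  gain.gain.value = 0.2;             // keep the output quiet

  osc.connect(filter);
  filter.connect(gain);
  gain.connect(ctx.destination);

  osc.start(ctx.currentTime);
  osc.stop(ctx.currentTime + seconds);
  return [osc, filter, gain];
}
```

[In a browser, a call such as `playFilteredTone(new AudioContext(), 220, 1)` should work, usually from a user gesture handler, since browsers may keep a newly created context suspended until the user interacts with the page.]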

Nigel: I mentioned using TTML for audio description earlier today. Our implementation uses Web Audio.

... I note the Web Speech API does not have access to the Web Audio API.

Joe: I don't know about that, but that is one of the challenges we're facing. Different APIs touch on audio, and there is no blueprint right now.

... Web games are a big use case.

[Chris presenting Radiophonic demo]

https://webaudio.prototyping.bbc.co.uk/wobbulator/

Chris: Are you looking at a v2 API?

<sangwhan> v2 targets: https://github.com/WebAudio/web-audio-api/milestone/2

Joe: Absolutely. There were a number of features that were pushed to v2 to get to a stable spec sooner.

... Better integration with audio devices, grabbing successive chunks from the network.

Sangwhan: From a TAG perspective, we would like to see things such as FFT moved to a generic mechanism.

Chris: What I'd like to ask the IG. Do we have new requirements to bring for new features in Web Audio?

... There is an opportunity coming up, so now is a good time to think about it.

Joe: The issue list is a good place to look.

Sangwhan: I dropped a link on the IRC channel.

... From a developer perspective, feedback I got was that the API is super complex.

Joe: Libraries are an important part of the ecosystem.

Chris: Wondering about the equivalent for video

Francois: Was abandoned. Also because video processing was still being done on the CPU, not on the GPU. Is this something you're looking at for audio as well?

Joe: Not really. It has not really come up. But a lot of work on offloading processing to workers.

Francois: Also wondering about synchronization between audio and video. The Web Audio API exposes the latency and its relation with performance.now(). The video player in HTML5 does not. Is this being discussed?

Joe: Not really.
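
[Editorial illustration, not presented in the meeting: a small TypeScript sketch of the relation Francois refers to. `AudioContext.getOutputTimestamp()` returns a pair of values describing the same instant on two clocks: `contextTime` in seconds on the audio clock, and `performanceTime` in milliseconds on the `performance.now()` clock. The helper names below are hypothetical, and the sketch assumes the two clocks do not drift between the snapshot and the converted time.]

```typescript
// Shape of the snapshot returned by AudioContext.getOutputTimestamp().
// contextTime is in seconds, performanceTime in milliseconds.
interface OutputTimestamp {
  contextTime: number;
  performanceTime: number;
}

// Maps a time on the audio context clock (seconds) to the performance.now()
// clock (milliseconds), using one snapshot as the reference point.
function contextTimeToPerformanceTime(ts: OutputTimestamp, contextTime: number): number {
  return ts.performanceTime + (contextTime - ts.contextTime) * 1000;
}

// Inverse mapping: from the performance.now() clock back to the audio clock.
function performanceTimeToContextTime(ts: OutputTimestamp, performanceTime: number): number {
  return ts.contextTime + (performanceTime - ts.performanceTime) / 1000;
}
```

[An application could use such a mapping to express "this sound reaches the output at this wall-clock instant". The HTML5 video element exposes no comparable pair of timestamps, which is the gap raised in the question.]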

[Discussion of possible next steps, including a follow-up joint call]

Chris: I captured some of the earlier themes. Trying to think about what the IG does next.

... Open question about what we as an IG want to investigate.

... Some of them we did not really discuss today.

... There is a dedicated meeting on Color on the Web tomorrow that I would recommend for everyone. How to mix HDR with SDR for instance.

... Other things that you recall from the discussion today?

... The first one that I noted from the IPTV Forum Japan presentation: standardization of a remote control API.

... Media capabilities was mentioned as part of the WAVE presentation.

... The existing spec is in the WICG. We should take a much closer look at that, possibly make that the focus during one of our monthly calls.
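
[Editorial illustration, not presented in the meeting: a minimal TypeScript sketch of how an application might query the Media Capabilities API mentioned here. `decodingInfo()` and its `supported`, `smooth` and `powerEfficient` result fields come from the Media Capabilities spec. The `...Like` interfaces and the function name `firstSmoothConfig` are assumptions for this example, and the capabilities object is passed in as a parameter so the sketch does not depend on a browser global.]

```typescript
// Hypothetical minimal types mirroring the parts of the Media Capabilities API
// used below, so the sketch type-checks without DOM typings.
interface VideoConfigLike {
  contentType: string;
  width: number;
  height: number;
  bitrate: number;    // bits per second
  framerate: number;  // frames per second
}
interface DecodingConfigLike {
  type: "file" | "media-source";
  video: VideoConfigLike;
}
interface DecodingInfoLike {
  supported: boolean;
  smooth: boolean;
  powerEfficient: boolean;
}
interface MediaCapabilitiesLike {
  decodingInfo(config: DecodingConfigLike): Promise<DecodingInfoLike>;
}

// Returns the first candidate, in the caller's preference order, that the device
// reports as both supported and smooth, or undefined if none qualifies.
async function firstSmoothConfig(
  mc: MediaCapabilitiesLike,
  candidates: DecodingConfigLike[],
): Promise<DecodingConfigLike | undefined> {
  for (const config of candidates) {
    const info = await mc.decodingInfo(config);
    if (info.supported && info.smooth) {
      return config;
    }
  }
  return undefined;
}
```

[In a browser that implements the API, the first argument would typically be `navigator.mediaCapabilities`.]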

... The next thing is the roadmap document that Francois presented. Seems well received. Good way to frame discussions.

... I'd like to invite everybody to review and send feedback on the document.

Kaz: We can use the GitHub issue mechanism to provide feedback on this document.

Chris: We alluded to immersive content. There are lots of different standards groups taking a look at VR. What should each one be doing?

... Bringing requirements to the WebVR CG could be good.

... Automotive was mentioned as well, with the entertainment system.

Kaz: The Automotive group actually plans to follow the VW approach named RSI for media capabilities, using WebSockets.

Chris: And that work would still result in a W3C spec?

Kaz: Possibly. They will start discussions in the BG.

... Discussions on Thursday, people here might want to join.

Chris: If it's media related, should that work come to the Media & Entertainment group? Or is it automotive specific? I'm not familiar enough with that group, but we'll need to figure that out.

Francois: One thing missing here is IG evaluation of transitioning the Web Media API CG work to a working group, part of the mission statement that Mark presented this morning.

Igarashi: Packaging media applications is a topic of interest as well. Offline use cases.

Kaz: Related to Web of Things, there might be ways to handle media streaming using WoT as well. Some alignment on vocabularies to use.

Igarashi: Related to the Remote control API.

Yam: Possible inputs on Web Audio API version 2.

Colin: Cloud browser. We agreed to continue the TF and refine use cases and requirements.

Chris: Building awareness about that work is something we discussed.

Yam: For completeness, you should add reshaping the TV Control API.

Chris: One of the things that Jeff suggested was to use sessions on Wednesday to refine the mission statement, and purpose and scope of the IG. We would need Mark for that discussion.

Kazuhiro: Privacy, personal info tracking in the context of advertising.

Chris: OK, thanks for the great meeting today!

... I would like to mention these regular monthly calls where we focus on a specific topic, so I'd like to encourage you to join these calls because we will use them to pursue some of these topics.

... Thank you!