MBUI F2F Pisa 14-15 June 2012

Present: Davide (CNR), Carmen (CNR), Javier (TID), Cristina (CTIC), Nacho (CTIC), Joelle (Grenoble), Gaelle (Grenoble), Sebastian (UFSCar), Marius (DFKI), Sascha (UCL), François (UCL), Vivian (UCL), Nikolaos (CERTH-ITI), Fabio (CNR)

Chair: Dave (W3C)

Remote: Jaroslav (Bonn)

See also the minutes taken by Vivian.

Introductory Note

For the use cases, Sebastian volunteered to split them into sections containing related use cases. Gaelle took an action to add explanatory text for a new table that will show a matrix of use cases and features.

Action: Gaelle to add explanatory text for a new table that will show a matrix of use cases and features.

We are all invited to add explicit task models and abstract user interface design models to each of the use cases. These should be in the form of diagrams, so this may take a while, as we have yet to settle the details of the AUI. We would like each use case to be described in a manner that is consistent across all the use cases.

In general, we should consider adding further illustrations to the WG Note. Jaroslav proposed reusing introductory figures from CAMELEON. Joelle suggested that there were more recent figures that would be better and offered to find them.

Action: Joelle to add introductory figures to the Introductory Note by July 15

Topic: Glossary

Jaroslav said that we need to drop academic terms in favour of terms better suited to a broader audience. In the longer term, perhaps we should consider a Wikipedia-style approach instead of the current document-based approach. We need to collect terms from the Task Models and Abstract UI Models specifications and review their definitions in the glossary.

Task Models

We discussed the distinction between tasks that update domain data and tasks that update properties of the user interface itself, for example the orientation (portrait vs. landscape). How should domain and UI properties be expressed?

Should task models be extended to support actors, i.e. to differentiate the roles of the users? This would lead us into dealing with multiple users.

We discussed the timeline for the Task Model specification, and it now seems practical to target the end of June for a First Public Working Draft.

[We break for Lunch and a group photo]

Fabio talked us through the meaning of the terms in the meta model. Jaroslav asked about the potential role of alarms. This was followed by a discussion on task types, for example, system tasks. We will need careful definitions to avoid misunderstandings by people new to this approach.

Ben Shneiderman's task taxonomy could be helpful ("The Eyes Have It: A Task by Data Type Taxonomy for Information Visualizations"). It defines seven task types:

- Overview
- Zoom (in)
- Filter (out)
- Details on demand
- Relate (to view relationships)
- History
- Extract

We discussed the visualization of task models, and agreed that this wouldn't be a normative part of the specification. For the use cases, the conventional CTT diagrams should be sufficient, together with an accompanying prose description for the details, e.g. the properties that aren't shown in the diagrams. There was some discussion of the knowledge discovery process and the potential for using Shneiderman's task types.

Abstract User Interface Models

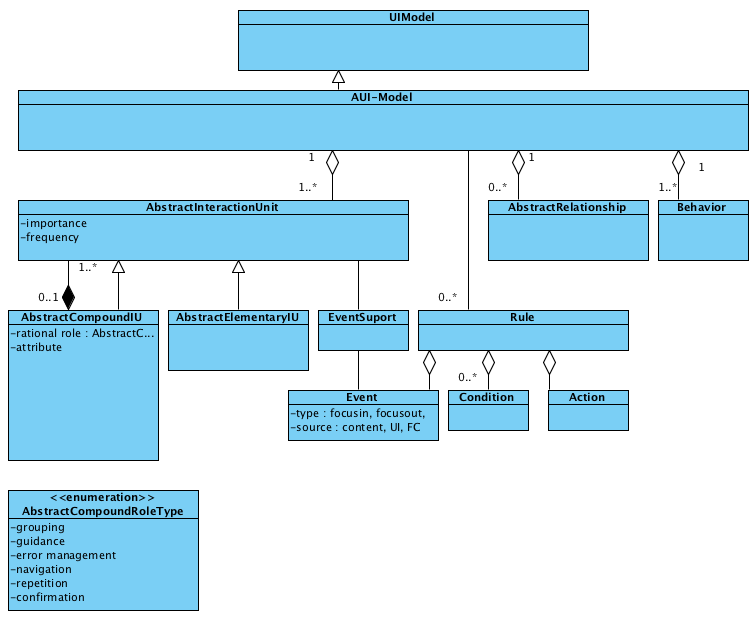

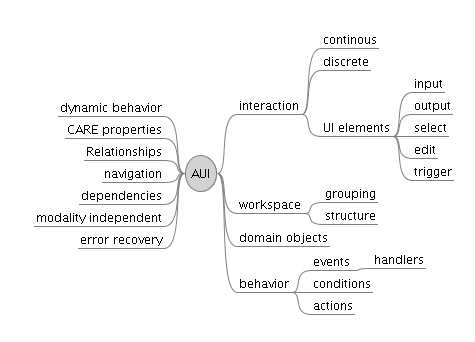

François presented the status of the AUI specification (slides to wiki?). He started with a quick review of the relevant submissions to the MBUI WG, including UseDM, MARIA, UsiXML, ASFE-DL and AIM.

What are the requirements for generating a localized concrete user interface from an abstract user interface model? We discussed this for a while and concluded that we just need to label the language of textual descriptions as a basis for translation, and to recommend best practices for avoiding cross-cultural problems.

What are the goals for capturing behaviour? There was agreement that the abstract UI needs to be detailed enough to fully describe the user interface in a modality-independent manner. Dave noted that there is quite a lot we can learn from the requirements for rich form-based interfaces, and from the work on XForms. How do abstract user interface models relate to task models? This is something that we should aim to clarify in the Introductory Note.

Some delivery platforms may not be suited to implementing a really rich abstract user interface design, e.g. touch-based devices such as smart phones and tablets are relatively poor for extended text input.

Jaroslav suggested that the abstract user interface specification should allow for interactors that aim to prove that the user is a human and not a robot. Concrete examples include CAPTCHAs, where users have to enter a character sequence based upon an image or sound clip. We further need to deal with authentication and access control in a modality-independent way. This relates not only to the domain data and models, but can also apply to parts of the user interface that are subject to access control.

Further discussion on the relationship between the abstract user interface, task models and the concrete user interface. Dave noted that some kind of layering is needed within the abstract user interface to support high level review and the means to expand and hide levels of detail.

We talked through an online shopping use case and the need for the concrete user interface to present the contents of the shopping cart at different levels of detail: a compact presentation with the total value and number of items, and an extended view showing each item in detail. What requirements, if any, does this impose on the abstract user interface? In particular, do the two concrete presentations need to be reflected by two abstract user interface models? Another possibility would be a means to express the cart at multiple levels of detail within a single abstract user interface. We reached a consensus that the latter approach made the most sense, i.e. an overview, a detailed view, and a group/container around them.
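To make the agreed structure concrete, here is a minimal sketch, assuming a hypothetical Python representation of abstract interactors. The classes `Output`, `Group` and `CartItem` and the function `cart_aui` are invented for illustration only; they do not come from MARIA, UsiXML or any other submission. The sketch builds a single abstract model in which one container groups an overview and a detailed view of the cart.

```python
from dataclasses import dataclass, field
from typing import List, Union


# Hypothetical abstract interactors, for illustration only; they are not
# taken from any MBUI submission or draft specification.
@dataclass
class Output:
    """An abstract output interactor presenting one piece of domain data."""
    name: str


@dataclass
class Group:
    """An abstract container grouping related interactors or sub-groups."""
    name: str
    children: List[Union["Group", Output]] = field(default_factory=list)


@dataclass
class CartItem:
    """Hypothetical domain object for one line in the shopping cart."""
    title: str
    quantity: int
    unit_price: float


def cart_aui(items: List[CartItem]) -> Group:
    """Build one abstract model holding both levels of detail for the cart."""
    total = sum(i.quantity * i.unit_price for i in items)
    count = sum(i.quantity for i in items)
    # Compact level: number of items and total value only.
    overview = Group("overview", [
        Output(f"items: {count}"),
        Output(f"total: {total:.2f}"),
    ])
    # Extended level: one output interactor per cart item.
    details = Group("details", [
        Output(f"{i.title} x{i.quantity} @ {i.unit_price:.2f}") for i in items
    ])
    # The enclosing container lets a concrete UI present either level, or both.
    return Group("cart", [overview, details])
```

For example, `cart_aui([CartItem("book", 2, 12.5), CartItem("pen", 3, 1.0)])` yields a "cart" group whose overview reports 5 items and a total of 28.00. A concrete UI for a small screen might render only the overview and expand the details on request, without needing a second abstract model.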

This was followed by afternoon coffee and demonstrations of MARIA and Quill, and a video from Gaelle. We then closed for the day.

We resumed on Friday morning with a session that aimed to bridge the various submissions for the abstract user interface. The starting point was to brainstorm about the core concepts and relationships. This led on to consideration of the meta model and a first cut at a shared approach. See the snapshot of the whiteboard. It looks as if it will take us the rest of the summer to bring this to a point where we are ready to issue a First Public Working Draft.

Our thanks to Vivian for the following diagrams:

Our thanks to our host ISTI for an excellent venue, great weather, and great food!