HttpUrisAreExpensive

HTTP URIs are not Without Expense

Introduction & Background

The content of this Wiki stems from a thread in the W3C Semantic Web Health Care and Life Sciences mailing list discussing what language should be used in recommendations regarding authoring URIs for use in biomedical datasets and ontologies. It is an attempt to paint a clearer picture of the assumption that the HTTP scheme is a one-size-fits-all URI scheme (especially with respect to the expected behavior of intelligent agents which consume URIs that denote scientific concepts and documents). Relevant links to the recent Technical Architecture Group finding (URNs, Namespaces and Registries) are included for better context.

The Era of Semantic Web Agents

The primary motivation behind this Wiki is that a significant barrier to the advent of Semantic Web automatons (or "intelligent agents") is the absence of three things:

- A well-defined normalization function which (when applied against legacy data) results in RDF

- A robust policy for identifying additional RDF content relevant to an initial set of RDF assertions

- Can you clarify? Are you referring to the problem of finding assertions about a resource (other than the URI declaration associated with that resource)? -- DBooth

- I'm referring to the problem of finding assertions both about specific URIs as well as those about other URIs but still of direct relevance (the FOAF graph scenario) -- Chimezie

- A robust policy for identifying a set of semantics (typically in the form of an OWL ontology) which determines the mapping from terms in the initial RDF graph to referents in the world.

- Do you mean the problem of finding the URI declarations for those terms (assuming they use URIs)? -- DBooth

- I mean the problem which is essentially the reverse of what the RIF WG is currently contemplating: finding a ruleset or entailment regime to apply to an initial dataset to facilitate interpretation of the dataset. This is orthogonal to URI declarations, since the mechanism for declaration is model-theoretic (RDF-MT, OWL Semantics, and RIF-RDF) -- Chimezie

It is worth noting that, with respect to biomedical ontologies, the referents of interest are typically not information resources but objects in the real world of scientific interest [Smith, B. "From Concepts to Clinical Reality: An Essay on the Benchmarking of Biomedical Terminologies"].

The class of GRDDL-Aware Agents (as defined by the GRDDL specification) addresses barrier #1. LinkedData is an attempt to address barrier #2 and is similar (in spirit) to OntologicalClosure, which attempts to address barrier #3. However, both run with the assumptions that 1) a vast majority (if not all) of the terms in the initial RDF are resolvable HTTP URIs and 2) exhaustively resolving representations from these HTTP RDF URIs is generally the most useful mechanism for guiding automatons. There are issues regarding what to make of the content returned upon resolving such URIs (TAG's httpRange-14; see also URI Declaration Versus Use) as well as issues with the feasibility of such an assumption. This Wiki is only concerned with the question of feasibility. This assumption seems rooted in the Web Architecture best practice of "Reuse URI schemes":

A specification SHOULD reuse an existing URI scheme (rather than create a new one) when it provides the desired properties of identifiers and their relation to resources.

However, conversely, Web architecture also suggests as a best practice:

A specification SHOULD allow content authors to use URIs without constraining them to a limited set of URI schemes.
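To make the LinkedData assumptions above concrete (most terms are resolvable HTTP URIs, and dereferencing all of them is useful), here is a minimal sketch of the fetch plan a naive agent derives from an initial graph. The triples, the example.org URIs and the function names are hypothetical; the only real behaviour relied on is that an HTTP client strips the fragment before sending a request.

```python
from collections import Counter
from urllib.parse import urldefrag, urlparse

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"

# Hypothetical initial graph as (subject, predicate, object) strings.
TRIPLES = [
    ("http://example.org/cpr#surgery-42", RDF_TYPE, "http://example.org/cpr#surgical-procedure"),
    ("http://example.org/cpr#surgery-42", RDFS_LABEL, "Appendectomy"),
    ("urn:lsid:example.org:cases:42", RDF_TYPE, "http://example.org/cpr#surgical-procedure"),
]


def naive_fetch_plan(triples):
    """Documents a naive agent would GET: one per distinct HTTP(S) term,
    with the fragment removed (fragments are never sent to the server)."""
    docs = set()
    for triple in triples:
        for term in triple:
            if urlparse(term).scheme in ("http", "https"):
                docs.add(urldefrag(term).url)
    return sorted(docs)


def load_by_host(docs):
    """How many of those documents each server would be asked for."""
    return Counter(urlparse(doc).netloc for doc in docs)


plan = naive_fetch_plan(TRIPLES)
print(plan)
print(load_by_host(plan))
```

Every vocabulary the graph touches, including rdf: and rdfs:, becomes a request to someone's server; the literal and the LSID are skipped only because this agent has no HTTP location for them.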

HCLS/WebClosureSocialConvention is an attempt to address the second barrier along lines somewhat divorced from LinkedData. The suggestion there is built around two observations: 1) OWL ontologies tend to consolidate the set of assertions needed to address barrier #3 and are thus better suited as a more guided trail from syntax to semantics, and 2) there are at least 3 well-defined RDF relations that hold between RDF terms and information resources with RDF graph representations (see HCLS/WebClosureSocialConvention#GraphLink): rdfs:isDefinedBy, rdfs:seeAlso, and owl:imports.
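By contrast, a minimal sketch of the more guided trail: the agent follows only the three graph links named above and ignores every other URI. The in-memory `web` dictionary is a hypothetical stand-in for HTTP GETs, and all URIs and function names are illustrative assumptions.

```python
from urllib.parse import urldefrag

RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
RDFS = "http://www.w3.org/2000/01/rdf-schema#"
OWL = "http://www.w3.org/2002/07/owl#"

# The only relations this agent treats as "go fetch that graph" links.
GRAPH_LINKS = {RDFS + "isDefinedBy", RDFS + "seeAlso", OWL + "imports"}


def guided_closure(start_triples, web):
    """Return the documents reached by following graph links transitively.

    `web` is a hypothetical stand-in for the network: it maps a document
    URL to the triples that document would serve."""
    seen = set()
    frontier = list(start_triples)
    while frontier:
        _subject, predicate, obj = frontier.pop()
        if predicate not in GRAPH_LINKS:
            continue  # rdf:type, labels, etc. are never dereferenced
        doc = urldefrag(obj).url
        if doc in seen:
            continue
        seen.add(doc)
        frontier.extend(web.get(doc, []))
    return sorted(seen)


WEB = {
    "http://example.org/cpr": [
        ("http://example.org/cpr", OWL + "imports", "http://example.org/galen-core"),
    ],
    "http://example.org/galen-core": [],
}
START = [
    ("http://example.org/cpr#surgical-procedure", RDFS + "isDefinedBy", "http://example.org/cpr"),
    ("http://example.org/cases#42", RDF + "type", "http://example.org/cpr#surgical-procedure"),
]
print(guided_closure(START, WEB))
```

The agent makes two requests (the ontology and its import) rather than one per term, and the rdf:type object is reached only through the ontology that defines it.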

The Use Case

The points are built around the following use case (not entirely fictional):

Jane is an employee (of company X) who wants to build a localized ontology for surgical procedures and does not have control over web space. Her primary concern is capturing the "concepts" associated with surgical procedures in a high-fidelity knowledge representation (OWL-DL). She also wants to be able to share this ontology with co-workers who will extend it and use it in their work. She wants to avoid unnecessary load on her employer's HTTP servers from Semantic Web agents which make assumptions about the use of HTTP URIs for her RDF terms. She would like the option of publishing the OWL ontology at a single location in the future, when she is able to share it publicly, and of broadcasting that *single* location as the authoritative ontology for her terms. She would also like to avoid breaking her co-workers' use of her terms.

- I find some aspect of this use case quite un-compelling. Why doesn't Jane just get a PURL for her ontology? Initially she can just have it resolve to a free yahoo page that says "I'm not ready to publish this ontology yet, but if you're interested in it, you can contact me at jane@some-email-address.com". Heck, she could even say "I'm not ready to publish this yet, so please don't bug me about it." if she wants. But if she does get uptake on it, and later chooses to "put the OWL ontology in a single location", then she can just point the PURLs to that location and she won't have to change her URIs. This places no load on her employer's servers, but allows her to use consistent URLs that are at least potentially dereferenceable to useful information. Why would this not be better than using URIs that are guaranteed never to be dereferenceable? -- DBooth

- Re: why doesn't Jane use PURL? What does she gain (immediately) from the expense of managing both the representations of her terms and their interpretations, other than a "web presence"? Having a web presence for her terms is orthogonal to the semantics she is trying to model. See: "Conflating Web Architecture with Semiotics." -- Chimezie

- Re: what is the immediate gain? There is little if any immediate gain. The gain comes only when and if Jane decides that she wants others to adopt her ontology, because making her URIs dereferenceable (to useful information) lowers the barrier to their use. Using PURLs, if she has already given her ontology to some selected colleagues to try, then she doesn't have to change all the URIs later to make them dereferenceable when she does decide to publish her ontology more widely. So in that sense, if Jane is betting that her ontology will be a flop, then she will see no value in making her URIs dereferenceable. But if she thinks her ontology may have life outside of her laboratory, and she wants to be able to facilitate its adoption, then PURLs offer an advantage over URNs. -- DBooth

The Cost of Assumptions Regarding a Transport Protocol

Jane's concern that web agents will misinterpret HTTP-schemed URIs as serving doubly as both identifiers *and* locations is discussed in Section 4.5, "Erroneous appearance of dereferenceability of identifiers", of the TAG finding:

A common reason given for needing myRIs for namespace names is that an http: identifier appears to humans as a location and hence dereferenceable...

In addition, this concern is cited under the FAQ section on www.tagurl.org (Why not use an http URL instead?):

Indeed, Sandro might instead have named his dog http://hawke.org/2001/06/05/Taiko. There are different points of view about this. The main argument against tags is "Why invent a new URI scheme when an old one — http — could be used?" Some arguments in favour are:

- "But that initial 'http' might be taken to imply the existence of a web resource that the minting entity doesn't necessarily want to provide — at least, not over HTTP."

- "I don't have a domain but I have an email address and I want to identify some stuff."

- "Tags are unique for all time by construction. But a domain name (in an http URL) has no long-term guarantees about unique use — it could be reassigned to an independent party. I could rely on purl.org to guarantee uniqueness for me (or rather, for whatever names I can grab after purl.org/net); but why rely on anyone when I don't need to?"
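For comparison, minting a tag URI needs nothing but a domain name or e-mail address that the minter held on a given date; that (authority, date) pair is what makes the result unique. The sketch below is a simplified check against the RFC 4151 grammar reproduced in its comment; Jane's e-mail address and the function names are hypothetical.

```python
import re

# Simplified reading of the RFC 4151 grammar:
#   tagURI        = "tag:" taggingEntity ":" specific [ "#" fragment ]
#   taggingEntity = authorityName "," date
#   authorityName = DNSname / emailAddress
#   date          = year [ "-" month [ "-" day ] ]
# This check does not validate DNS names, e-mail syntax or the
# character set allowed in `specific`.
TAG_RE = re.compile(
    r"^tag:(?P<authority>[^,:]+)"
    r",(?P<date>\d{4}(?:-\d{2}(?:-\d{2})?)?)"
    r":(?P<specific>[^#]*)"
    r"(?:#(?P<fragment>.*))?$"
)


def mint_tag(authority, date, specific):
    """Build a tag URI; no server, registry or web space is involved."""
    uri = f"tag:{authority},{date}:{specific}"
    if TAG_RE.match(uri) is None:
        raise ValueError(f"not a well-formed tag URI: {uri}")
    return uri


def parse_tag(uri):
    """Split a tag URI into its grammatical components."""
    match = TAG_RE.match(uri)
    if match is None:
        raise ValueError(f"not a well-formed tag URI: {uri}")
    return match.groupdict()


print(parse_tag("tag:hawke.org,2001-06-05:Taiko"))
print(mint_tag("jane@company-x.example", "2007-08", "cpr/surgical-procedure"))
```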

The Issues

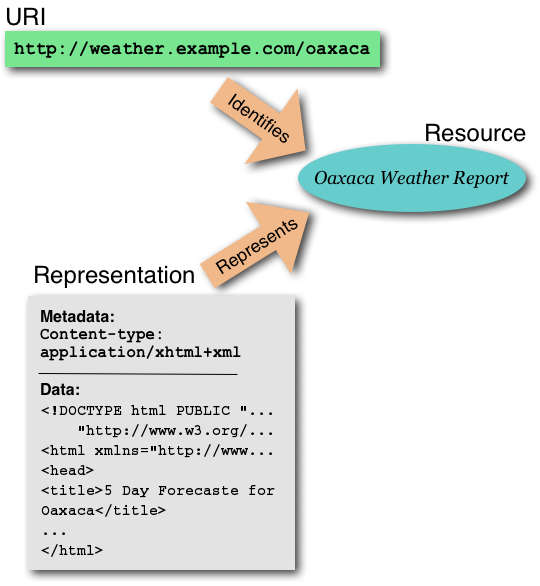

Conflating Web Architecture with Semiotics

One of the less-discussed expenses of an exclusive policy of HTTP URIs for RDF assertions is the set of Web Architecture responsibilities (adherence to best practices) that come with using the HTTP URI scheme. OWL ontology authors are primarily concerned with crafting a well-constrained model theory for the world. This has everything to do with Semiotics (the use of signs to denote things in the world) and model-theoretic semantics (declaration of constraints on the referents denoted by the use of terms in a formal logic) and nothing to do with resolution of information resources, their representations, and other relevant representations (see httpRange-14 resolution).

Signs behave more like windows into reality, whereas resolvable HTTP URLs are more like entries in a File Allocation Table.

The task of shaping ontological commitment (see: Role II: A KR is a Set of Ontological Commitments) using RDF and OWL is difficult in its own right, without the additional burden of best practices for resolving representations from the URIs used. This is especially the case where a majority of the referents denoted are not information resources.

Furthermore the HTTP 303 response code (and hence all usage of PURLs) is a bit problematic from a Semiotic perspective (see: Discussion). In particular, a 303 (per httpRange-14 interpretation) indicates that the representation returned describes something disjoint from what the initial URI denotes.
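The httpRange-14 resolution (2005) reduces to three rules keyed on the status code of a GET on an http URI. A minimal sketch with a hypothetical function name; any status outside those three cases (301 and 302 included) is simply not addressed by the resolution.

```python
def httprange14(status: int) -> str:
    """What the httpRange-14 resolution lets a client infer about the
    resource identified by an http URI, given the status of a GET on it."""
    if 200 <= status < 300:
        return "information resource"
    if status == 303:
        return "could be any resource"
    if 400 <= status < 500:
        return "nature of the resource is unknown"
    return "not addressed by httpRange-14"


for code in (200, 303, 404, 302):
    print(code, httprange14(code))
```

Under the 303 rule the document at the redirect target is a separate resource from the one the original URI identifies, which is the gap the semiotic objection above points at.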

Surgical Procedure Example

For example, if Jane wants to mint a URI for the physical act of a surgical procedure, she can pick a (guaranteed) unique URI easily enough. If she decides to use an HTTP URI, it then falls under the jurisdiction (so to speak) of the Architecture of the World Wide Web (AWWW), so she may have to contend with the following best practices:

- Good practice: Consistent representation

- Good practice: Hypertext links

- Good practice: Reuse representation formats

- Good practice: Available representation

.. etc ..

So, she might be compelled to use the following canonical URL to identify the action of a surgical procedure:

http://purl.org/cpr/#surgical-procedure

Furthermore, she may then be compelled to provide some 'consistent' representation at the web location. Which representation format should she use? Should it primarily be for human consumption (XHTML perhaps) or machine consumption (OWL/RDF)? If it is for human consumption, perhaps her fragment identifiers should be embedded in the representations she serves, etc. Note that so far her considerations have nothing to do with the surgical procedure action which takes place over and over again in hospitals. She hasn't yet begun to consider the constraints that apply universally to surgical procedures and their relations to other things of interest in her domain.

- I think this is a good point (that having to deal with Web architecture issues is a distraction from what she wants to do). However: (a) any conventions will require some learning (including LSIDs); and (b) recommendations can reduce this distraction by providing clear guidelines (cookbook recipes, for example). Furthermore, as the ubiquity of the web continues to grow, I think it is far more likely that someone will already be familiar with minting and supporting HTTP URIs than with minting and supporting any other kind of URIs (such as LSIDs). Thus, over time this distraction is likely to diminish. -- DBooth
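One common answer to Jane's "human or machine?" question is to serve both and let the client's Accept header decide, which is yet another piece of Web machinery to learn. A simplified sketch of that negotiation step, assuming she offers only XHTML and RDF/XML; it honours q-values and `*/*` but ignores `type/*` wildcards and other media-type parameters that a real server would handle. The function name is hypothetical.

```python
def choose_representation(accept_header,
                          available=("application/xhtml+xml", "application/rdf+xml")):
    """Return the available media type the client prefers most, or None
    (a server would then typically answer 406 Not Acceptable)."""
    prefs = {}
    for part in accept_header.split(","):
        fields = [f.strip() for f in part.split(";")]
        media_type = fields[0].lower()
        if not media_type:
            continue
        q = 1.0
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        prefs[media_type] = q

    best, best_q = None, 0.0
    for media_type in available:  # earlier entries win ties
        q = prefs.get(media_type, prefs.get("*/*", 0.0))
        if q > best_q:
            best, best_q = media_type, q
    return best


print(choose_representation("application/rdf+xml;q=0.9, application/xhtml+xml;q=0.5"))
print(choose_representation("*/*"))
print(choose_representation("text/plain"))
```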

Finally, the fact that the PURL domain responds with HTTP code 303 (by Technical Architecture Group dictate) indicates that whatever is served from the web location to which the PURL domain redirects HTTP requests is not a direct representation of the action of a surgical procedure. Whatever is returned is certainly not more authoritative (or informative) for an automaton than an OWL-DL expression which describes the universal constraints of the action of a surgical procedure. For example:

```
Class: Process
    EquivalentTo: :Process that
        ( :isMainlyCharacterisedBy some :performance ) and
        ( :isEnactmentOf some :SurgicalDeed ) and
        ( :playsClinicalRole only :SurgicalRole )
```

- Technical correction: purl.org currently returns a 302 redirect -- not 303. It would be good if OCLC (the owner) can be convinced to offer the option of using 303. People have sometimes discussed asking OCLC to do this, but I do not know whether they have been asked or what the response was. Anyone have OCLC contacts? Anyway, what this means in Jane's scenario is that if she is not using hash URIs, then unless or until she chooses to set up her own server to do 303 redirects, she may want to use a 303-redirect service, such as thing-described-by.org or t-d-b.org in conjunction with her PURL. So for example, if her PURL is http://purl.org/janesontology then she might initially set it up to 302-redirect to http://t-d-b.org?http://yahoo.example/janesontology which will automatically 303-redirect to http://yahoo.example/janesontology . -- DBooth
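The two-hop set-up just described can be traced with a toy redirect table. This is a sketch over an in-memory dictionary, not a real HTTP client; it reuses the URIs from the comment above and assumes the final yahoo.example page answers 200. The function name is hypothetical.

```python
# Hypothetical redirect table for the set-up described above:
# uri -> (status code, Location target).
REDIRECTS = {
    "http://purl.org/janesontology":
        (302, "http://t-d-b.org?http://yahoo.example/janesontology"),
    "http://t-d-b.org?http://yahoo.example/janesontology":
        (303, "http://yahoo.example/janesontology"),
}


def trace(uri, redirects, max_hops=10):
    """Follow redirects through the table; any URI not in the table is
    assumed to answer 200. Returns a list of (uri, status) hops."""
    hops = []
    for _ in range(max_hops):
        if uri not in redirects:
            hops.append((uri, 200))
            return hops
        status, target = redirects[uri]
        hops.append((uri, status))
        uri = target
    raise RuntimeError("too many redirects")


for uri, status in trace("http://purl.org/janesontology", REDIRECTS):
    print(status, uri)
```

The 303 in the chain comes only from the t-d-b.org hop; the PURL hop itself is the 302 noted above.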

There is no guidance for how to structure your URIs (although some could be given!)

There are existing best practices and literature for authors who are in the business of minting URIs:

However, these are best practices and do not have the same (grammatical and semantic) rigor typically associated with RFCs for URI schemes (such as tag), each of which has a concrete EBNF for the expected structure of URIs which use the scheme, as well as clearly defined semantics for their interpretation.

- But EBNF and clearly defined semantics certainly could be provided if desired. In essence, the approach described in Converting New URI Schemes or URN Sub-Schemes to HTTP allows other conventions (even the LSID conventions) to be layered on top of the http scheme, just as LSIDs are currently layered on top of the URN scheme. -- DBooth
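To illustrate the claim that a grammar can be layered on http, here is a hypothetical sub-scheme for Jane's terms, written in ABNF (the notation URI-scheme RFCs normally use) and enforced with a regular expression. The purl.org/cpr layout and the dated version segment are assumptions invented for this sketch, not an existing convention.

```python
import re

# Hypothetical http sub-scheme for Jane's terms (ABNF):
#   cpr-uri = "http://purl.org/cpr/" term [ "/" version ]
#   term    = 1*( ALPHA / DIGIT / "-" )
#   version = 4DIGIT "-" 2DIGIT "-" 2DIGIT
CPR_URI = re.compile(
    r"^http://purl\.org/cpr/(?P<term>[A-Za-z0-9-]+)"
    r"(?:/(?P<version>\d{4}-\d{2}-\d{2}))?$"
)


def parse_cpr(uri):
    """Return (term, version-or-None) if `uri` conforms, else None."""
    match = CPR_URI.match(uri)
    if match is None:
        return None
    return match.group("term"), match.group("version")


print(parse_cpr("http://purl.org/cpr/surgical-procedure/2007-07-16"))
print(parse_cpr("http://purl.org/cpr/surgical-procedure"))
print(parse_cpr("http://purl.org/cpr/#surgical-procedure"))
```

Conformance is only as strong as the community's agreement to the convention; the http scheme itself neither knows nor enforces it.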

Hash vs. Slash still, in some sense, rages

See: Cool URIs for the Semantic Web which discusses the merits of each approach. In particular: "Choosing between 303 and Hash". The answer is still not definitive, however.

- Is there really a debate about hash versus slash? Maybe there are personal preferences, but it seems like the trade-offs are quite well defined. Are they not? Or are they in need of better explanation? -- DBooth

Things like versioning not built in or specced

The best-known use of version information embedded within HTTP URIs is in the URIs of W3C specifications.

An example is the Proposed Recommendation URI for GRDDL: http://www.w3.org/TR/2007/PR-grddl-20070716/ . This 'suggests' that the document is a Proposed Recommendation published on July 16th, 2007 (via the PR-grddl-20070716 portion of the path component).

However, this is not explicitly governed by the HTTP URI scheme, which only has the following major syntactic components: authority, path, query, and fragment. The most common scenario with HTTP URIs which embed revision metadata is to embed this 'metadata' in the path portion of the URI.

- True, but versioning is not built into the URN scheme either. It is built into the LSID subscheme, which is layered on top of URNs. But the exact same policies can just as well be layered onto HTTP. (Hmmm, maybe this would be clearer if I started referring to this approach as "http sub-schemes".) -- DBooth
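The W3C dating convention in the GRDDL URI above can be decoded, but only by code that already knows the convention: nothing in the http scheme says what `PR-grddl-20070716` means. A sketch, with the path pattern inferred from that one example (an assumption, not a published grammar) and a hypothetical function name:

```python
import re
from datetime import date
from urllib.parse import urlparse

# Convention only: HTTP assigns no meaning to these path segments.
TR_PATH = re.compile(
    r"^/TR/(?P<year>\d{4})/(?P<maturity>[A-Z]+)-(?P<shortname>.+)-(?P<ymd>\d{8})/?$"
)


def parse_tr_uri(uri):
    """Extract maturity level, short name and date from a dated TR URI."""
    match = TR_PATH.match(urlparse(uri).path)
    if match is None:
        return None
    ymd = match.group("ymd")
    return {
        "maturity": match.group("maturity"),
        "shortname": match.group("shortname"),
        "published": date(int(ymd[:4]), int(ymd[4:6]), int(ymd[6:])),
    }


print(parse_tr_uri("http://www.w3.org/TR/2007/PR-grddl-20070716/"))
print(parse_tr_uri("http://www.w3.org/TR/grddl/"))
```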

Certain non-HTTP schemes such as tag and LSID have explicit syntactic components for revision (or time-stamping at the very least). Having the syntax and semantics of version explicitly defined in a URI scheme specification (where having such revision information in the identifier is desirable) removes any ambiguity about how to interpret revision.
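In an LSID, by contrast, the revision is a field of the identifier's own syntax (`urn:lsid:authority:namespace:object[:revision]`), so any conforming parser can find it. A simplified sketch that ignores the escaping rules; the example LSIDs and the names `LSID` and `parse_lsid` are hypothetical.

```python
from typing import NamedTuple, Optional


class LSID(NamedTuple):
    authority: str
    namespace: str
    object_id: str
    revision: Optional[str]


def parse_lsid(uri):
    """Split urn:lsid:authority:namespace:object[:revision] into fields.
    The "urn:lsid" prefix is matched case-insensitively."""
    parts = uri.split(":")
    if len(parts) not in (5, 6) or [p.lower() for p in parts[:2]] != ["urn", "lsid"]:
        raise ValueError(f"not an LSID: {uri}")
    revision = parts[5] if len(parts) == 6 else None
    return LSID(parts[2], parts[3], parts[4], revision)


print(parse_lsid("urn:lsid:company-x.example:cpr:surgical-procedure:2"))
print(parse_lsid("URN:LSID:company-x.example:cpr:surgical-procedure"))
```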

Authoritativeness can be daunting

In Jane's case, she has *no* authority: she controls no domain or web space under which to mint HTTP URIs.

Either you have to maintain a server, or find someone who will

Same point as above

One big document or loads of little documents? Either has downsides

If Jane deploys her terminology using resolvable HTTP URIs, she can either put all her terms in a single OWL ontology (whose URI doubles as the base URI of her URI naming convention) or in the logical equivalent (URI rewriting can suffice) of a single file for each term. Jane's scenario suggests she is better served by deploying a single OWL ontology, since this has the least impact on her employer's web server.

- Why is this relevant to the cost of using HTTP URIs? Jane can do it either way with HTTP URIs. In fact, if she chooses her URIs wisely she can do it one way and then change her mind later and switch to the other way, without changing the URIs. (This would involve server configuration though. I'm not sure off the top of my head whether purls can conveniently do many:1 URI mappings.) -- DBooth
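The point about switching deployments without changing URIs can be sketched as a rewrite function: the term URIs stay fixed and only their mapping to documents changes. The purl.org/cpr prefix, the yahoo.example locations, the mode names and the function names are all hypothetical.

```python
TERM_PREFIX = "http://purl.org/cpr/"


def rewrite(term_uri, mode):
    """Map a term URI to the document that describes it."""
    if not term_uri.startswith(TERM_PREFIX):
        raise ValueError(f"not one of Jane's terms: {term_uri}")
    term = term_uri[len(TERM_PREFIX):]
    if mode == "single":      # one big OWL document for every term
        return "http://yahoo.example/cpr.owl"
    if mode == "per-term":    # one small document per term
        return f"http://yahoo.example/cpr/{term}.owl"
    raise ValueError(f"unknown mode: {mode}")


def documents_needed(term_uris, mode):
    """Distinct documents an agent must fetch to cover all the terms."""
    return len({rewrite(uri, mode) for uri in term_uris})


TERMS = [TERM_PREFIX + t for t in ("surgical-procedure", "SurgicalRole", "SurgicalDeed")]
print(documents_needed(TERMS, "single"), documents_needed(TERMS, "per-term"))
```

The single document means fewer, larger responses; per-term documents mean more, smaller ones. Either way the URIs Jane's co-workers use are untouched.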

Consider getting hammered by unsophisticated spider users

Semantic Web agents which are programmed to expect to be able to fetch representations from every HTTP URI they encounter are likely to attempt to resolve each term they come across, putting a severe load on her employer's server. This is especially the case for spiders which don't use HTTP caching effectively. The hash approach resolves some of this concern, but not entirely.

- I have some sympathy for this concern, because it does seem like a logical possibility. On the other hand, it is hard to know how seriously to take this concern in practice, for a couple of reasons. First, because hosting web pages seems to be so inexpensive that there are many web sites that will host pages for free (usually with ads). And second because it seems like if one is interested in getting others to use one's ontology, a heavy server load sounds like a successful situation to be in, which is thus likely to justify the additional expense of hosting the load. Can anyone add insight into how much of a problem this really is in practice? -- DBooth

- With regard to the inexpensive nature of HTTP bandwidth, see "Fallacies of Distributed Computing" and Scalability of URI Access to Resources -- Chimezie
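A rough way to see why hash URIs help only partly is to count the GETs a spider issues while dereferencing every term occurrence in some data. In this sketch the `cache` flag is a stand-in for HTTP caching (here simply never re-fetching a URL already seen); the term lists and the function name are hypothetical.

```python
from urllib.parse import urldefrag


def requests_made(term_occurrences, cache=True):
    """Number of GETs issued when every term occurrence is dereferenced."""
    fetched = set()
    count = 0
    for uri in term_occurrences:
        doc = urldefrag(uri).url  # the fragment never reaches the server
        if cache and doc in fetched:
            continue
        fetched.add(doc)
        count += 1
    return count


HASH_TERMS = ["http://purl.org/cpr#surgical-procedure",
              "http://purl.org/cpr#SurgicalRole",
              "http://purl.org/cpr#surgical-procedure"] * 100
SLASH_TERMS = [uri.replace("#", "/") for uri in HASH_TERMS]

print(requests_made(HASH_TERMS), requests_made(SLASH_TERMS),
      requests_made(HASH_TERMS, cache=False))
```

With caching, hash URIs collapse all 300 occurrences into one request, while slash URIs still cost one request per distinct term; without caching, even hash URIs cost one request per occurrence.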

How do you handle disagreement? Is the URI owner the curator or do they maintain their POV?

- I'm not sure what you mean here, but differentiating between the URI declaration and other assertions would be a good start. -- DBooth

If the domain is sold things can fall apart

See: 5.2 Persistent Dereferenceability (location independence). In particular:

With http: URIs, there is a dependency that the original URI cannot be re-used for some other purpose and that it must remain "viable", that is it can't be terminated. If department.agency.example.org ever disappeared, all the clients would break on that document.

- But again, purls can mitigate this. -- DBooth

- PURL certainly mitigates this but doesn't eliminate the additional risk of impermanence when your identification scheme relies on a transport mechanism. -- Chimezie

HTTP, RDF, and Fallacies of Distributed Computing

Also, the assumptions (mentioned at the start) do not fare well when compared with the "Fallacies of Distributed Computing":

- The network is reliable.

- Latency is zero.

- Bandwidth is infinite.

- The network is secure.

- Topology doesn't change.

- There is one administrator.

- Transport cost is zero.

- The network is homogeneous.

Most of the points of "collision" are well-aligned with the TAG finding (URNs, Namespaces and Registries).