DartGrid/dartreasoning

Enabling semantic web reasoning with legacy relational data.

This page records the design issues concerning query reasoning support in DartGrid. The main objective is to strike a good balance between the reasoning capability of semantic web technologies and the performance advantage of relational database technologies. We do not intend to develop an RDF/OWL store or a relational-database-simulated RDF/OWL store. What we intend to develop is an integration framework for legacy data that is well suited to available relational database technologies.

Problems

The Semantic Web aims at realizing the network effect of web data, or the data web, just as the Web has already achieved the network effect of web documents. Although huge efforts have been invested in standardization, tool development, and application deployment, big obstacles still lie on the road ahead.

Major obstacles impeding the advancement of the Semantic Web

- Data availability. Where should the linked data come from? How could we obtain semantically well-defined structured data? Three major sources or methods are commonly used. The third one is quite promising, as most popular websites have structured data hidden in their backends (often also referred to as deep web data), but that data is tightly bound to and inextricably curated by specific client applications.

|| || ab initio knowledge engineering || extracted from raw documents || harvested from legacy structured data ||
|| data quality || High || Low || High but may be lower than ab initio ||
|| retrieving efficiency || Low || High || High but requiring ad hoc programs ||
|| Human intervention || High || Low if high quality is not required, but high if required || Low given a robust mapping tool, which is achievable ||

- Data connectivity.

- What to link? The relational database has been the dominant data medium for a long time. For the foreseeable future, people will still prefer to store their data in relational format, no matter what advantages SW technologies provide. Considering also that most existing data is already stored in relational databases, legacy relational data could be the main source and flesh of semantic links, just as documents are to hyperlinks.

- How could we link them? A semantic link is neither the same as nor as simple as a hyperlink. A robust mapping tool for linking legacy data is desirable under the circumstances. SQL2OWL Mapping.

- Who is responsible for creating the links? We probably should not expect ordinary users to understand and create semantic links. The tool should be targeted at webmasters. Or shall we try some social-computing-based approach such as ESP to help harvest the semantic information?

- Data usage and usability. What is the advantage of using semantic data? End users will not embrace these new technologies unless the return on their investment reaches a satisfying level. The reasoning capability of semantic web technologies, which helps manage and improve the usability of massive data, is often boasted of by SW researchers and practitioners. However, the performance bottleneck of all currently available reasoners has scared away many application developers. In the future, more advanced applications such as semantic link analysis and knowledge discovery from semantically linked data will demand more powerful approaches to processing semantic data and will cause even more severe performance difficulties.

Based on the observations above, a more practical road for the SW might require a deliberately considered balance and compromise between semantic web technologies and old-fashioned data management technologies, especially relational database technologies.

- First, we should emphasize linking existing legacy data instead of recreating it in RDF/OWL.

- Second, we may need good-quality semantic data to serve as the semantic glue for linking data, which may require ab initio knowledge engineering. For example, to achieve interoperability among data about time, a standard time ontology is necessary, and all other time data needs to be mapped to this shared ontology with high semantic quality.

- Third, the data, especially the instance data, which is normally massive, should still reside in traditional data media such as relational DBMSs, and be mapped to a shared ontology using some mapping tool.

- Fourth, query processing is based on query rewriting instead of directly querying an RDF/OWL store. This design delegates some of the reasoning responsibility (particularly the query processing) to the relational database, taking full advantage of its superior performance. Besides, the input query can first be sent to an external reasoner to infer new queries (those subsumed by the original query) before being sent to the query rewriting component.

The whole design requires deliberate consideration of the balance between the reasoning and linking capability of semantic web technologies and the performance advantage of relational database technologies.
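The idea in the fourth point, inferring the queries subsumed by the original query before rewriting, can be sketched roughly as follows. This is a minimal illustration, not DartGrid's implementation: the class hierarchy, the class names, and the functions `subsumed_classes` and `expand_query` are all hypothetical, and a hand-written subclass table stands in for an external DL reasoner.

```python
from collections import deque

# Hypothetical class hierarchy (assumption): child class -> direct parent classes.
# In the design described above, this knowledge would come from a reasoner.
SUBCLASS_OF = {
    "Professor": ["Person"],
    "Student": ["Person"],
    "GraduateStudent": ["Student"],
}

def subsumed_classes(target, subclass_of):
    """Return target plus every direct or indirect subclass of it."""
    # Invert the child -> parents map into parent -> children.
    children = {}
    for child, parents in subclass_of.items():
        for parent in parents:
            children.setdefault(parent, []).append(child)
    found, queue = [target], deque([target])
    while queue:
        current = queue.popleft()
        for child in sorted(children.get(current, [])):
            if child not in found:
                found.append(child)
                queue.append(child)
    return found

def expand_query(target, subclass_of):
    """Produce one SPARQL query string per class subsumed by the queried class."""
    return [
        f"SELECT ?x WHERE {{ ?x a :{cls} }}"
        for cls in subsumed_classes(target, subclass_of)
    ]

expanded = expand_query("Person", SUBCLASS_OF)
for query in expanded:
    print(query)
```

Each expanded query can then be handed to the rewriting step independently, so the relational database only ever sees plain SQL and never has to reason about the class hierarchy itself.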

Targeted issues

- The heterogeneity of legacy relational data that has the potential to be linked.

- The limited reasoning capability of the available RDF/OWL stores.

- The performance of the available reasoners when dealing with large amounts of data, particularly instance data, which is usually huge in volume.

Objectives

What we are NOT doing

- An RDF/OWL store or a relational-simulated RDF/OWL store.

- A reasoning engine.

- Data conversion for Relational-2-RDF/OWL. Although the mapping tool can be used for data conversion, we do not encourage doing so.

What we are doing

- A semantic data integration framework well suited to relational database technologies.

- Rule-based mapping and query rewriting.

- Coupling the reasoning component with the query rewriting component.

- Reasoning over views.
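Reasoning over views, the last item above, could for instance mean deriving class memberships that a mapping view implies but does not state. The sketch below is only one possible reading: it assumes hypothetical property-domain axioms and a hypothetical mapping format, and every table, column, class, and property name is invented for illustration. The inference applies only to rows where the mapped column actually holds a value.

```python
# Hypothetical ontology axioms (assumption): property -> domain class.
PROPERTY_DOMAIN = {"teaches": "Teacher", "enrolledIn": "Student"}

# Hypothetical mapping views (assumption): table -> explicitly mapped classes
# and column-to-property mappings.
MAPPINGS = {
    "staff": {"classes": {"Employee"}, "properties": {"course_id": "teaches"}},
    "enrollment": {"classes": {"Student"}, "properties": {"course_id": "enrolledIn"}},
}

def implicit_classes(mappings, property_domain):
    """Return, per table, classes implied by property domains but not stated in the mapping."""
    inferred = {}
    for table, view in mappings.items():
        implied = {
            property_domain[prop]
            for prop in view["properties"].values()
            if prop in property_domain
        }
        # Keep only what the mapping does not already say explicitly.
        inferred[table] = implied - view["classes"]
    return inferred

implicit = implicit_classes(MAPPINGS, PROPERTY_DOMAIN)
print(implicit)
```

Here the `staff` view is explicitly mapped only to `Employee`, yet because one of its columns is mapped to `teaches`, the rows with a value in that column can also answer queries about `Teacher`.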

Design Strategy

Use Case

Architecture

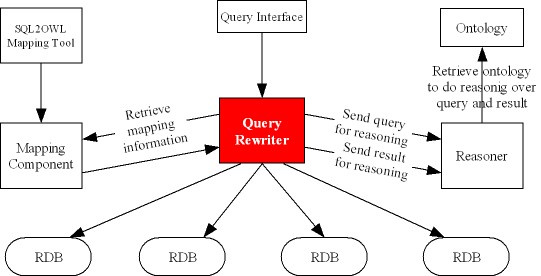

- Ontology component:

- Query rewriting component:

- The input SPARQL query is first transformed into an OWL description and sent to the reasoner, which reasons over the query. The reasoning output is transformed back into SPARQL syntax, which might be either an extended query or a set of new SPARQL queries.

- The reasoned queries are rewritten into a set of SQL queries based on the mapping information stored in the mapping component.

- The results are returned in RDF/OWL format. Prior to being returned, they are sent to the reasoner again for reasoning, which yields a complete query result.

- Mapping component:

- The mapping view might need to be sent to the reasoner to reason over the mapping description, which will help reveal some implicit descriptions of the mappings. (Use case can be provided).

- Reasoning engine:

- Racer, KAON2, or ... DL reasoning.
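The three query rewriting steps listed above can be sketched end to end against an in-memory SQLite database. Everything here is an assumption made for illustration: the mapping format, the table and class names, and the functions `rewrite_to_sql` and `answer` are hypothetical, and two small lookup tables stand in for the mapping component and the reasoner.

```python
import sqlite3

# Hypothetical mapping rules (assumption):
# ontology class -> (table, key column, optional WHERE filter).
CLASS_MAPPINGS = {
    "Professor": ("staff", "staff_id", "role = 'professor'"),
    "Student": ("enrollment", "student_id", None),
}

# Hypothetical reasoner output (assumption): classes subsumed by each queried class.
SUBSUMED = {"Person": ["Professor", "Student"]}

def rewrite_to_sql(queried_class):
    """Rewrite 'all instances of queried_class' into one SQL query per mapped class."""
    queries = []
    for cls in [queried_class] + SUBSUMED.get(queried_class, []):
        if cls not in CLASS_MAPPINGS:
            continue  # no relational source is mapped to this class
        table, key, condition = CLASS_MAPPINGS[cls]
        sql = f"SELECT {key} FROM {table}"
        if condition:
            sql += f" WHERE {condition}"
        queries.append((cls, sql))
    return queries

def answer(conn, queried_class):
    """Run the rewritten SQL and return results as (subject, predicate, object) triples."""
    triples = []
    for cls, sql in rewrite_to_sql(queried_class):
        for (key,) in conn.execute(sql):
            subject = f":{cls.lower()}/{key}"
            triples.append((subject, "rdf:type", f":{cls}"))
            if cls != queried_class:
                # Stand-in for the final reasoning pass: each instance of a
                # subsumed class is also an instance of the queried class.
                triples.append((subject, "rdf:type", f":{queried_class}"))
    return triples

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE staff (staff_id INTEGER PRIMARY KEY, role TEXT);
    CREATE TABLE enrollment (student_id INTEGER PRIMARY KEY);
    INSERT INTO staff VALUES (1, 'professor'), (2, 'clerk');
    INSERT INTO enrollment VALUES (7);
""")
results = answer(conn, "Person")
for triple in results:
    print(triple)
```

The instance data never leaves the relational tables until query time. Only the rows selected by the rewritten SQL are turned into triples, which is the performance trade-off this page argues for.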