Abstract

This document captures the current state of the Efficient XML Interchange Working Group's analysis as we progress in our evaluation of the candidate formats submitted to the group. It is updated more frequently than documents published in the Technical Reports area.

A table pointing to the latest available results is permanently available from http://www.w3.org/XML/EXI/test-report.

At this point, several limitations are worth keeping in mind:

- Partial list of candidates

  Results are not included for all candidates, as some are not yet fully ready to be tested.

- Partial list of test documents and test groups

  The EXI WG gathered a lot of test data for its framework, but not all of it is included in these preliminary results. This is for two reasons. First, not all of the test groups have been through sufficiently careful quality assurance to be included meaningfully. Second, the test suite built on the entirety of the content is rather large, and running the tests for all candidates on all of the data takes days. During the early stages, while the test framework is being fine-tuned and the last problems with the candidates' integration are being fixed, such long run times are impractical. That said, the current restricted data set is already representative of a large cross-section of XML as it is used in EXI use cases. Groups for which we have collected some data (but welcome more) include: Ant, DAML, DocBook, MARC-XML, MeSH, NLM, RDF, RosettaNet, RSS, SOAP, UBL, WAP, WS-Addressing, X3D, XBRL, and XPDF.

- Partial list of analysis aspects

  Of the many aspects relevant to analysing an EXI format, only one is currently reported in this document: compaction. All aspects listed as requirements in the XBC Characterization Note will eventually be included. Many do not require a testing framework and will therefore be analysed more directly. Test runs have been performed for processing efficiency and for memory usage, but they have not yet been reviewed sufficiently well to be published. They are expected to be included soon.

The limitations above are largely due to the fact that producing a benchmarking framework able to make fair measurements along a variety of axes, and integrating multiple implementations from as many vendors, is a complex and arduous task. However, progress is constant and fast, and the objective of this document is to make that progress publicly available as soon as possible.

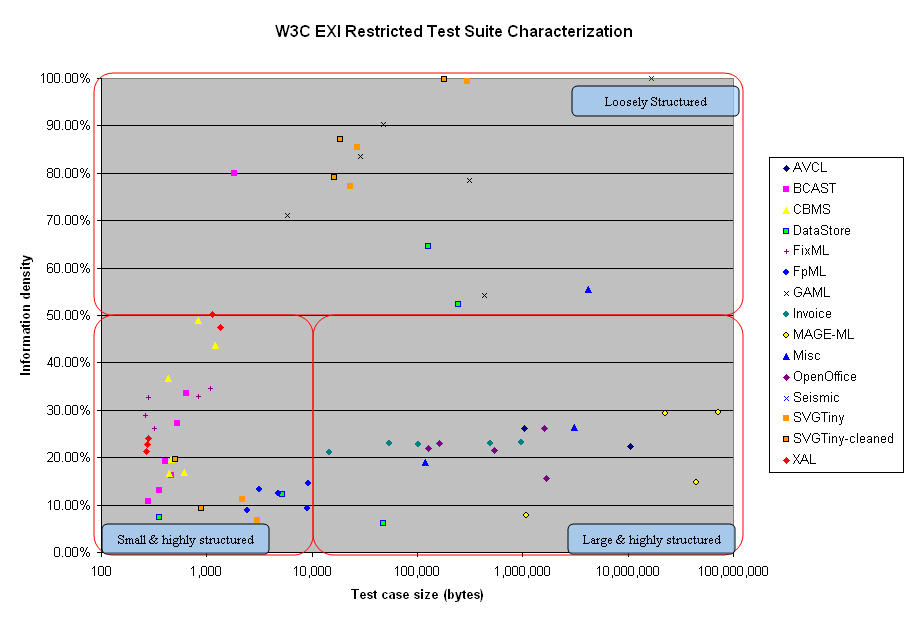

1. Properties of the Test Groups

The test documents can be characterised according to several metrics. A typical metric is document size. Another is information density (ID), i.e. the proportion of the document that consists of text rather than markup. A plot of these two metrics for the EXI test suite is shown below.

Information density is obtained as follows:

- gather all character information items that are direct children of an element information item
- gather the values of all attribute information items
- sum up the size in characters of the text gathered in the previous two steps
- the information density is the ratio of the sum from the previous step to the size of the entire document in characters

This definition still needs to be checked against the Infoset terminology, and encoding considerations must be taken into account when sizes are measured in bytes.
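The four steps above can be sketched in Python with the standard library's `xml.etree.ElementTree`. This is an illustrative sketch, not the tool used to produce the report's figures. It assumes the document is already decoded into a string and measures sizes in characters. Character data is counted after the parser has expanded entity and character references, so a document heavy in `&amp;`-style references comes out slightly less dense than a count over the raw text would give. The parser also discards comments and processing instructions, so their text is not counted.

```python
import xml.etree.ElementTree as ET


def information_density(xml_text: str) -> float:
    """Return (character data + attribute value characters) / document characters.

    Sketch only: sizes are counted in characters of the decoded string, and
    entity/character references are counted after expansion by the parser.
    """
    root = ET.fromstring(xml_text)
    text_chars = 0
    for elem in root.iter():
        # Character data directly inside this element, before its first child.
        if elem.text:
            text_chars += len(elem.text)
        # Character data after this element's end tag belongs to its parent
        # element; a tail on the root would lie outside every element.
        if elem is not root and elem.tail:
            text_chars += len(elem.tail)
        # Values of all attributes on this element.
        text_chars += sum(len(value) for value in elem.attrib.values())
    return text_chars / len(xml_text)


doc = '<a x="12">hello<b>wo</b>rld</a>'
print(information_density(doc))  # 12 text characters out of 31
```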

Looking at the plot above, three clusters can be distinguished, each with properties that make it interesting as an analysis group. First is the group of loosely structured documents with an information density above 50% (that is, very little markup compared to the amount of data). There is no need to subdivide this cluster further, since the prominence of data tends to make all of its documents behave similarly with EXI solutions. On the other side of the 50% line are the highly structured documents. Since these tend to behave differently depending on their size, they can usefully be split into smaller and larger documents, on either side of the 10 kilobyte mark. This first axis therefore produces three information density clusters: High ID, Low ID - Small, and Low ID - Large.
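Assigning a document to one of these clusters requires two inputs: its size and its information density. The sketch below assumes that "above 50%" is a strict inequality, that size means bytes of the serialized XML, and that a kilobyte is 1024 bytes. The text settles none of these points, so treat them as placeholders.

```python
KILOBYTE = 1024  # assumption: the report does not say whether 1 KB is 1000 or 1024 bytes


def id_cluster(size_bytes: int, density: float) -> str:
    """Map a document to one of the three information density clusters."""
    if density > 0.5:
        # Mostly data: no size split is made for this cluster.
        return "High ID"
    # Mostly markup: split on either side of the 10 kilobyte mark.
    if size_bytes < 10 * KILOBYTE:
        return "Low ID - Small"
    return "Low ID - Large"
```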

Another axis along which to order the test groups relates to the use cases to which they correspond (either because they map to the same use case, or because the use cases they map to are similar in terms of the properties they require). This approach yields five rough, non-overlapping categories of test groups, henceforth called Use Groups:

- Scientific information

  This covers data that is largely numeric in nature. The use group includes AVCL, GAML, MAGE-ML, Seismic, and XAL.

- Financial information

  This use group includes cases in which the information is largely structured around typical financial exchanges: invoices, derivatives, etc. It comprises FixML, FpML, and Invoice.

- Electronic documents

  These are documents that are intended for human consumption, and can capture text structure, style, and graphics. This use group covers OpenOffice and SVGTiny.

- Broadcast metadata

  This use group captures information typically used in broadcast scenarios to provide metadata about programs and services (e.g. title, synopsis, start time, duration). It includes BCAST and CBMS.

- Data storage

  This use group covers data-oriented XML documents of the kind that appear when XML is used to store information of the type often found in an RDBMS. It includes DataStore.

2. Candidates Self-assessment

The XBC Characterization document specifies the set of minimum requirements a format must satisfy to meet W3C requirements. The table below lists all of the candidates reviewed in the EXI Measurements document and shows which requirements each claims to satisfy. It is important to note that at this point the assessment has been performed by the candidate submitters themselves.

| Status | Fast Infoset | FXDI (Fujitsu Binary) | Efficient XML | Xebu | X.694 with BER | X.694 with PER | XSBC |
|---|---|---|---|---|---|---|---|
| Passes min. bar? | No | No | Yes | No | No | No | Yes |

| MUST Support | Fast Infoset | FXDI (Fujitsu Binary) | Efficient XML | Xebu | X.694 with BER | X.694 with PER | XSBC |
|---|---|---|---|---|---|---|---|
| Directly Readable & Writable | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Transport Independence | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Compactness | No | Yes | Yes | No | No | Yes | Yes |
| Human Language Neutral | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Platform Neutrality | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Integratable into XML Stack | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Royalty Free | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Fragmentable | Yes | Yes | Yes | Yes | Yes | No | Yes |
| Streamable | Yes | Yes | Yes | Yes | Yes | No | Yes |
| Roundtrip Support | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Generality | No | Yes | Yes | No | No | No | Yes |
| Schema Extensions and Deviations | Yes | Yes | Yes | Yes | No | No | Yes |
| Format Version Identifier | Yes | No | Yes | No | No | No | Yes |
| Content Type Management | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Self-Contained | Yes | Yes | Yes | Yes | No | Yes | Yes |

| MUST NOT Prevent | Fast Infoset | FXDI (Fujitsu Binary) | Efficient XML | Xebu | X.694 with BER | X.694 with PER | XSBC |
|---|---|---|---|---|---|---|---|
| Processing Efficiency | DNP | DNP | DNP | DNP | DNP | DNP | DNP |
| Small Footprint | DNP | DNP | DNP | DNP | DNP | DNP | DNP |
| Widespread Adoption | DNP | DNP | DNP | DNP | DNP | DNP | DNP |
| Space Efficiency | DNP | DNP | DNP | DNP | DNP | DNP | DNP |
| Implementation Cost | DNP | DNP | DNP | DNP | DNP | DNP | DNP |
| Forward Compatibility | DNP | DNP | DNP | DNP | DNP | DNP | DNP |

Of the candidates reviewed in this draft, two (Efficient XML and XSBC) claim to satisfy all the minimum W3C requirements. While any of the formats above could form the basis for a W3C standard, those that satisfy fewer requirements would likely need more modifications than those that already meet the minimum W3C requirements.

Each modification to a format will impact its processing efficiency and may also impact other characteristics, such as compactness. For example, it is possible (but not certain) that modifications required to meet the W3C compactness requirements may significantly reduce processing efficiency, and that modifications required to improve processing efficiency may impact compactness. As such, for each candidate in this table that does not meet all requirements, the performance characteristics illustrate what is possible when certain W3C requirements are not met. They do not illustrate the performance of a format based on that candidate and modified to comply with the W3C requirements.

Analysis of the candidates should therefore take into account whether each candidate meets all or only a subset of the W3C requirements.
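The "Passes min. bar?" row appears to follow mechanically from the MUST Support rows: a candidate passes only if it claims every MUST requirement. The sketch below transcribes those rows from the self-assessment table and recomputes the row. Each row is a string with one character per candidate in table column order, Y for Yes and N for No; this string encoding is purely illustrative. The result is Efficient XML and XSBC, matching the table.

```python
# MUST Support rows from the self-assessment table, one character per candidate
# in column order: Y = claims support, N = does not.
CANDIDATES = ["Fast Infoset", "FXDI", "Efficient XML", "Xebu",
              "X.694 with BER", "X.694 with PER", "XSBC"]
MUST_SUPPORT = {
    "Directly Readable & Writable":     "YYYYYYY",
    "Transport Independence":           "YYYYYYY",
    "Compactness":                      "NYYNNYY",
    "Human Language Neutral":           "YYYYYYY",
    "Platform Neutrality":              "YYYYYYY",
    "Integratable into XML Stack":      "YYYYYYY",
    "Royalty Free":                     "YYYYYYY",
    "Fragmentable":                     "YYYYYNY",
    "Streamable":                       "YYYYYNY",
    "Roundtrip Support":                "YYYYYYY",
    "Generality":                       "NYYNNNY",
    "Schema Extensions and Deviations": "YYYYNNY",
    "Format Version Identifier":        "YNYNNNY",
    "Content Type Management":          "YYYYYYY",
    "Self-Contained":                   "YYYYNYY",
}


def passes_min_bar() -> list[str]:
    """Candidates that claim every MUST Support requirement."""
    return [name for i, name in enumerate(CANDIDATES)
            if all(row[i] == "Y" for row in MUST_SUPPORT.values())]


print(passes_min_bar())  # ['Efficient XML', 'XSBC']
```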

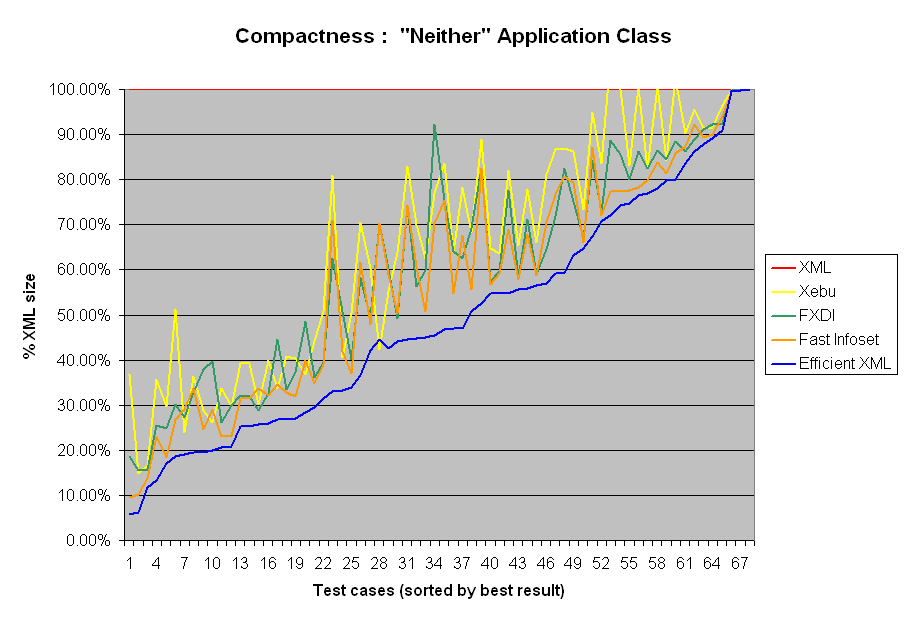

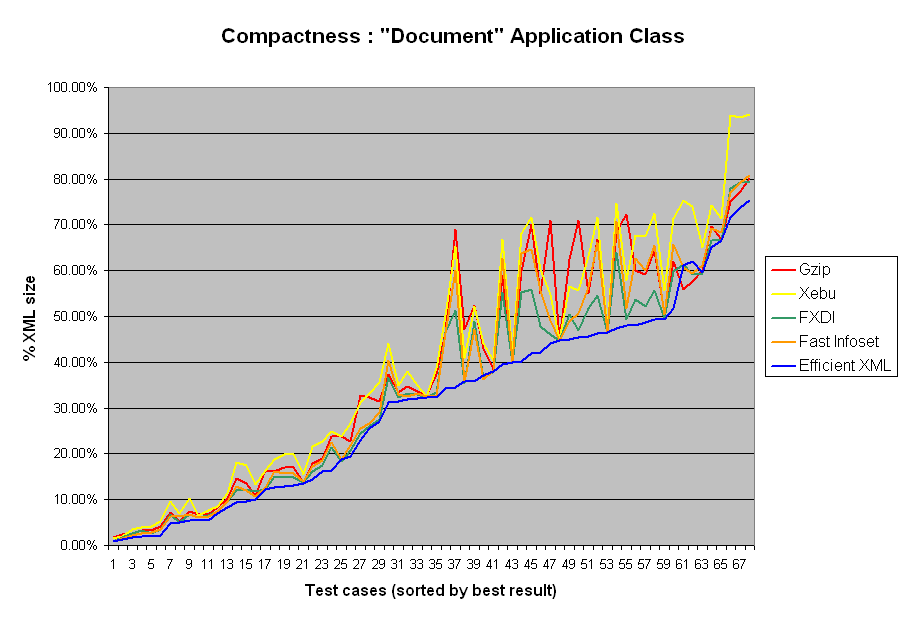

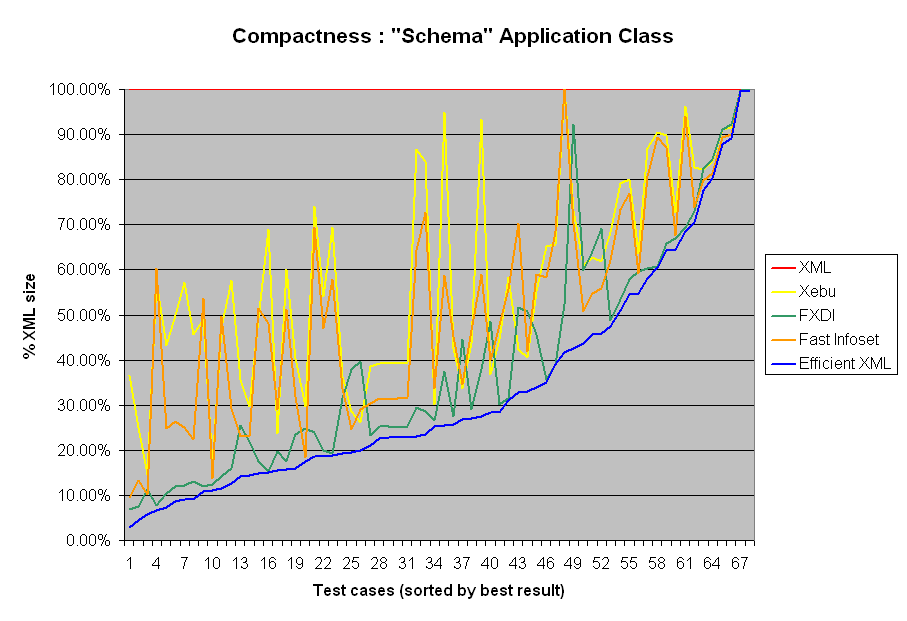

3. Compaction Analysis

This section gives a preliminary analysis of the results from the current set of test groups, based on the latest runs performed with the candidates that have been submitted.

3.1. W3C Compaction Metrics

Given the many ways in which compression can be achieved, and the fact that some are appropriate for some usage scenarios but not for others, candidates are measured in four compaction cases that cover this variety of usage.

- Neither

  In this case the candidate either has no access to external information such as a schema, or has such access but still produces self-contained encoded instances. It also does not perform any compression based on analysing the document. Typically, simple tokenisation of the XML document is performed.

- Document

  In this case the candidate either has no access to external information such as a schema, or has such access but still produces self-contained encoded instances. However, it may perform various document-analysis operations such as frequency-based compression (results are compared against gzipped XML).

- Schema

  In this case the candidate does not perform any manner of document analysis but may rely on externally provided information, typically a schema, and the resulting bitstream may not be self-contained.

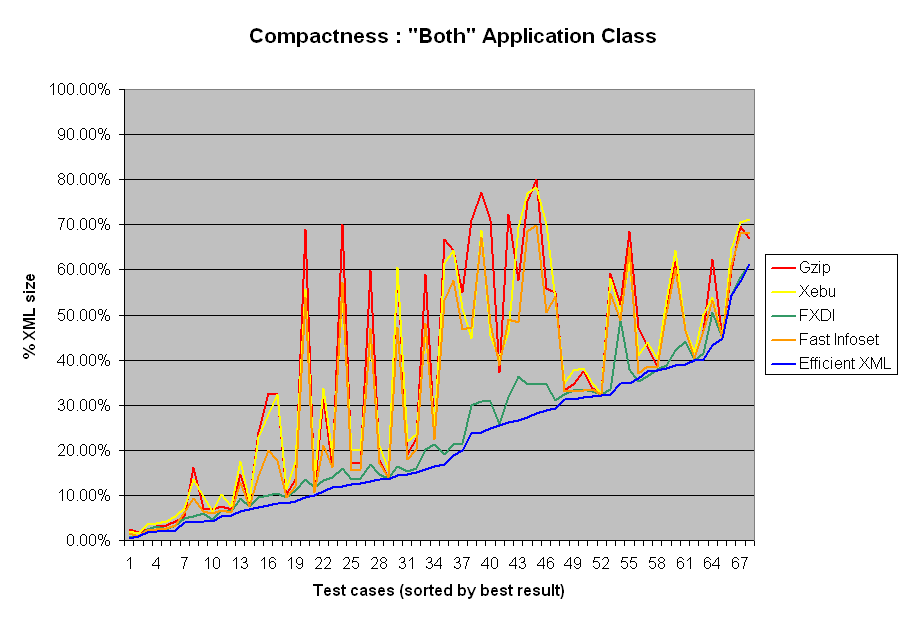

- Both

  In this case the candidate may use methods available in both the Document and the Schema cases.
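The results that follow are reported as a percentage of a baseline. The baseline is the original XML size for the Neither and Schema cases, and the gzipped XML size for the Document and Both cases (for example, "85% of gzip for Both"). Below is a minimal sketch of that normalisation. It assumes Python's `gzip.compress` at its default level as the gzip baseline; the settings used by the actual test framework are not stated here.

```python
import gzip


def compaction_ratio(xml_bytes: bytes, encoded_size: int, case: str) -> float:
    """Encoded size as a fraction of the baseline used for each compaction case."""
    if case in ("Neither", "Schema"):
        baseline = len(xml_bytes)                 # compared with the raw XML
    elif case in ("Document", "Both"):
        baseline = len(gzip.compress(xml_bytes))  # compared with gzipped XML
    else:
        raise ValueError(f"unknown compaction case: {case!r}")
    return encoded_size / baseline
```

In the Document and Both cases, a value below 1.0 therefore means the candidate beat gzip on that document.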

The following graphs provide a summary of the compactness results for each of these compaction classes.

3.2. Analysis based on the information density clusters

This analysis is based on the information density clusters (the number of documents in each cluster is shown in parentheses). The nomenclature used here is described in the Characterization of Compactness section of the EXI Measurements Document.

Note that test groups do not map neatly onto clusters: documents from the same test group frequently belong to different information density clusters.

- High Information Density (16)

  As can be expected, in the Neither case the candidates mostly stay above the information density of the documents. There are a few exceptions though, in particular one of the GAML documents, for which all candidates achieve a compactness of half the information density. In the Document case, apart from Efficient XML, the candidates remain above 90% of the gzip size in all cases except the GAML document. Efficient XML manages to break the 90% barrier four times in total.

  When allowed to use only schema-based compaction, both FXDI and Efficient XML consistently achieve sizes of approximately 60% of XML. The exceptions are the SVGTiny documents, where sizes are between 80% and 90%, and DataStore, where they are between 30% and 50%. Apart from the pathological GAML document, the DataStore documents, and the Seismic document, the ratio increases modestly in the Both case.

- Low Information Density - Large Documents (19)

  Again, in the Neither case the candidates remain above the information density, this time with no anomalies. Efficient XML consistently achieves the best results, with Fast Infoset a bit ahead of the rest. In the Document case the situation looks somewhat better than with the high density documents, but the 90% mark still seems a reasonable barrier. This time Xebu is clearly behind the others.

  Only the densest documents, in the OpenOffice group, go above the 25% mark with schema information included. It must be noted, however, that due to schema issues the OpenOffice group results are not entirely consistent. A few cases even break the 10% barrier. In the Both case, FXDI is almost always between 80% and 100% of gzip, with Efficient XML mostly between 70% and 80%.

- Low Information Density - Small Documents (33)

  In the Neither case no candidate reaches the information density of the documents: even Efficient XML achieves a result above 50% more often than below, and the others go below 50% only rarely. In the Document case, presumably due to the small sizes, the situation compared to gzipped XML looks better for the candidates, with FXDI and Efficient XML often going below 80%.

  In the Schema case, apart from SVG and XAL, going below 20% is the rule rather than the exception. Again, in a few cases the 10% barrier is broken. With FixML the ratios are still clearly larger. The Both case does not seem markedly different from the other clusters.

3.3. Analysis based on the use groups

- Scientific information

  This use group shows very clearly that the benefits of being able to rely on schema information are high for strongly typed documents. This is especially true for those with a high information density, the archetype being the Seismic test group. For the Seismic test group, candidates yield no compression in the Neither case, and hit the same mark as gzip in the Document case. However, in both cases in which schema information is available, candidates that use that information reach 40% for Schema and 85% of gzip for Both, while candidates that do not use it fail to beat gzip.

  Other test groups in this use group, given their lower ID, show much less extreme results. The dichotomy between candidates that make extensive use of schema information (FXDI and Efficient XML) and those that make much less use of it (Xebu and Fast Infoset) is still very noticeable in many cases, but less pronounced. That said, although this use group consists mostly of strongly typed information, schema information appears to be by no means required for good compression levels. Efficient XML almost breaks the 5% barrier in the Neither case once, and all candidates except Xebu compress to 50% of gzip in the MAGE-ML test group. In general, the variance of the results seems to be a function of the ability to use schema information and of the ID of individual test cases.

- Financial information

  While the actual content of the test groups in this use group is related in many ways, the FixML test group stands out: its XML vocabulary was already largely optimised for size, through extensive use of attributes and sometimes radical shortening of element and attribute names. This naturally tends to make its information density higher than that of the other two test groups, which translates directly into less compression. FpML is the most structured group (i.e. the one with the lowest ID), and Invoice is closer to FixML, with most of its instances as dense as the least dense FixML instances. This has effects across all cases, but is most evident in the Neither case, where FixML lies in the 70-90% range, Invoice largely around 35-40%, and FpML falls as low as 20% with Efficient XML. It is also visible in the Document case. There, candidates often fail to beat gzip for FixML, tend to hover around 80% of gzip for FpML, and succeed in dropping to 75% of gzip for Invoice, albeit with a strong disparity between the candidates that is worth further investigation.

  When schema information is available, a strong dichotomy surfaces between candidates that benefit from it and those that do not. In the FixML test group, the former barely rise above 35% and go down to 20% for Schema, and land in the 30-50% area for Both. The latter never do better than 55% and go as high as 95% for Schema; for Both, they do no better than 85% of gzip and sometimes exceed 100% of gzip. The dichotomy is less marked for FpML and Invoice, with Fast Infoset tending to score between Xebu and the two others, which are typically below 15%. Efficient XML breaks the 5% barrier in one FpML instance.

- Electronic documents

  In this use group schema results are unreliable, since the OpenDocument schema is currently broken and the SVG Tiny one is not available.

  For OpenOffice, results tend to be rather regular: broadly in the 30-40% range for Neither (with Efficient XML dropping to 20% in one instance) and 80-90% of gzip for Document (with Xebu being larger than gzip). The SVG test group is deliberately spread across the information density spectrum, and the results vary accordingly. They go down to 45% for Neither in some instances, but data-intensive documents give the candidates the greatest difficulty in producing any compression or in beating gzip. It is noteworthy, however, that cleaning up the SVG documents seems to benefit only Efficient XML (in the Document case).

- Broadcast metadata

  The schema rift is again visible in the Schema and Both cases, with schema-informed candidates reaching as low as 10% in the Schema case while the others do no better than 45%.

  It is interesting to note that in the Neither case candidates are mostly distributed around 65%, but Efficient XML gets close to the 35% mark on one occasion. In the Document case there is a notable difference between the candidates. On one side, Xebu and Fast Infoset struggle to do better than gzip and fail most of the time. On the other, FXDI and Efficient XML are mostly in the 80% area, with Efficient XML going down to 60%. This discrepancy may be worth further investigation, since it is independent of the use of schema information.

- Data storage

  This use group is somewhat special in that it contains a single test group. That said, this test group is somewhat representative of the practice of storing relational database information more or less directly in an XML document.

  This use group exhibits a strong variance in information density, which translates directly into variance in the results for all cases. In the Neither case, the best result is 45% for one instance, while for another it almost falls below 5%. The same holds for the Schema case, with Efficient XML reaching 2% in one instance. The variance is attenuated (but still clearly present) in the Document and Both cases, since compression dampens the effects of excessive structure. Even so, the candidates still succeed in falling as low as 50% of gzip for Document and 15% of gzip for Both.

3.4. Analysis based on the XBC compaction metrics in the various classes

Analysis for the Schema and Both cases is not currently provided, since ASN.1 PER numbers, which form the basis of the viability comparison, are not yet available. These analyses will be made as soon as those results become available.

- Neither

  All candidates achieve sufficient compactness with all the test documents, except for Xebu with the FixML test group and all candidates with the Seismic test group. The latter is caused by the Seismic test document consisting of a list of floating-point values; without document analysis or schema information there is little that a format can do with it. Some of the SVG test documents are a similar case, as their attribute values contain long sequences of floating-point values, but here all candidates achieve at least a very small reduction.

- Document

  Apart from a few isolated cases, Xebu does not achieve sufficient compactness anywhere in the test suite. The FixML set proves problematic here as well: of the four candidates and five documents, Efficient XML achieves sufficient compactness with three and FXDI with one. These are the only cases in which Efficient XML misses the mark, whereas FXDI also misses it with four other documents. Fast Infoset lies between these extremes, missing the mark with approximately a third of the test documents.

3.5. Conclusion

This analysis shows that there are two major influences on the compaction performance of candidates. The first is information density. The second is limitations on the candidate's ability to exploit schema information, either because none is available or because the candidate supports less of it. Neither of these is surprising. Since EXI methods normally function by eliminating as much of the structural overhead as possible, they can only work so well in cases in which there is little structure. Likewise, the more information one has about an instance, the better one can compress it.

It is interesting to note, though, that while in many cases the information density appears to be the compression limit for the Neither case, it is occasionally broken. Also, in the Document case candidates often succeed in doing better than gzip, especially on the smaller documents.
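A short bound makes explicit why the information density acts as a floor in the Neither case. Assume, for illustration only, that the encoder copies all text (character data and attribute values) verbatim at one byte per character, and that the XML itself uses one byte per character. Let T be the number of text characters, M the number of markup characters, and M' ≥ 0 the number of bytes the encoder spends on markup:

```latex
\mathrm{ID} = \frac{T}{T + M},
\qquad
\frac{\text{encoded size}}{\text{XML size}}
  = \frac{T + M'}{T + M}
  \;\ge\; \frac{T}{T + M}
  = \mathrm{ID}
```

Under these assumptions, the ratio can only fall below ID if the text itself is encoded in fewer bytes than it has characters.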

Naturally, since schema information is what reveals the overhead of the lexical representation of typed values, it is the most evident means of breaking through the ID barrier.
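The following sketch gives a rough illustration of that overhead for the kind of data found in the Seismic group. It compares the size of a whitespace-separated list of floating-point values in lexical form with the same values packed as 64-bit IEEE 754 doubles. The doubles are an assumed stand-in for whatever typed encoding a schema-informed candidate might use, not a description of any candidate. The gain also depends on the data: short literals such as `2.5` are smaller as text than as 8-byte doubles.

```python
import struct


def lexical_vs_binary(values: list[float]) -> tuple[int, int]:
    """Return (bytes as space-separated decimal text, bytes as big-endian doubles)."""
    # Lexical form: Python's shortest round-tripping repr, ASCII, one space between values.
    lexical = " ".join(repr(v) for v in values).encode("ascii")
    # Typed form: 8 bytes per value regardless of how many digits it has.
    binary = struct.pack(f">{len(values)}d", *values)
    return len(lexical), len(binary)


print(lexical_vs_binary([1 / 3] * 10))  # (189, 80)
```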

4. Caveats

At the time of this report, the test suite and some of the candidates still had outstanding issues, and the candidates are not functionally homogeneous. These caveats do not prevent a useful perusal of the current results, but should be taken into account in order to better understand the analysis.

4.1. Schema-related Caveats

- Limited schema usage

  Some candidates (notably Xebu and Fast Infoset) only make use of schema information in a very limited fashion, largely restricted to knowing the names of elements and attributes in advance. There is therefore discussion as to whether it is pertinent to cover them in the analysis of the Schema and Both cases. (See Candidate-related Caveats.)

  Xebu is able to make more extensive use of a schema than creating a table of names, but it does not do so now. This is because it only supports RelaxNG at this point, and the schemata are not yet available in both RelaxNG and XML Schema.

- Schema quality

  Not all test groups include vocabularies for which complete and normative schemata are available. Further analysis of the impact of using a schema or not is therefore desirable.

- OpenDocument Schema

  Due to technical issues with the OpenDocument schema, it is currently not being used to produce the test results. In real-world use, the schema would normally be used.
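A toy cost model makes concrete the limited form of schema usage described above, where element and attribute names are known in advance. The model is hypothetical and not any candidate's actual format. The first occurrence of a name costs one marker byte plus its characters, and every later occurrence costs a single index byte. Seeding the table with names taken from a schema removes only the first-occurrence cost, which illustrates why such limited schema use gives smaller gains than encoding typed values.

```python
import xml.etree.ElementTree as ET


def name_table_cost(xml_text: str, known_names: tuple[str, ...] = ()) -> int:
    """Bytes spent on element and attribute names under a toy name-table scheme.

    Hypothetical cost model: a name not yet in the table costs 1 marker byte
    plus one byte per character and is then added; a name already in the
    table costs 1 byte (its index). Text content is ignored.
    """
    table = set(known_names)  # names known in advance, e.g. from a schema
    cost = 0
    for elem in ET.fromstring(xml_text).iter():
        for name in [elem.tag, *elem.attrib]:
            if name in table:
                cost += 1
            else:
                cost += 1 + len(name)
                table.add(name)
    return cost


doc = '<a x="1"><b/><b/></a>'
print(name_table_cost(doc))                   # 7: a, x and b are new, the second b is an index
print(name_table_cost(doc, ("a", "b", "x")))  # 4: every name is already known
```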

4.2. Candidate-related Caveats

- X.694 with PER

  Complete results for this candidate have yet to be obtained. It must be noted that this candidate does not support all of the fidelity options, and that some of its results can therefore appear smaller and faster than they would be if it did.

- X.694 with BER

  Complete results for this candidate have yet to be obtained. It must be noted that this candidate does not support all of the fidelity options, and that some of its results can therefore appear smaller and faster than they would be if it did.

- X.891 (Fast Infoset)

  For the Schema and Both application classes, Fast Infoset is not fully optimised to utilise schema:

  - For the cases where prefixes do not need to be preserved (or, more generally, when a set of sample documents can be utilised in conjunction with the schema), further improvements in compactness and processing efficiency are possible.
  - Encoding algorithms or restricted alphabets could be used to convert lexical representations of text content or attribute values into more optimised binary forms that may be faster to process and/or more compact.

- Xebu

  The current version is research software; therefore neither the format nor the implementation can be considered fully mature.

- Efficient XML

  The tests are being run using a non-production implementation of Efficient XML designed for evaluating proposed extensions to Efficient XML. It includes support for features beyond the minimum W3C requirements, such as random access, accelerated sequential access and XML Digital Signatures. As a non-production version, it has not been fully optimized and does not achieve the same results as production releases of Efficient XML.

- XSBC

  No caveats at this point.

- FXDI

  No caveats at this point.

- esXML

  This candidate has not yet been included in the test framework.
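A toy encoder can illustrate the restricted-alphabet idea mentioned for Fast Infoset. If a value only uses characters from a small set, each character fits in 4 bits, which halves the size of numeric text compared with one byte per character. The 15-character alphabet and the padding convention below are assumptions made for this sketch. They are not the built-in alphabets or the bit layout defined by X.891.

```python
# Hypothetical 15-symbol alphabet for numeric text; code 15 is reserved for padding.
ALPHABET = "0123456789-+.eE"
PAD = 15


def pack_restricted(text: str) -> bytes:
    """Pack characters from ALPHABET two per byte (4 bits each)."""
    codes = [ALPHABET.index(ch) for ch in text]  # ValueError if ch is outside the alphabet
    if len(codes) % 2:
        codes.append(PAD)  # pad odd-length input to a whole byte
    return bytes((hi << 4) | lo for hi, lo in zip(codes[0::2], codes[1::2]))


def unpack_restricted(data: bytes) -> str:
    """Inverse of pack_restricted: split each byte into two 4-bit codes."""
    chars = []
    for byte in data:
        for code in (byte >> 4, byte & 0x0F):
            if code != PAD:
                chars.append(ALPHABET[code])
    return "".join(chars)


packed = pack_restricted("-12.5e3")
print(len(packed), unpack_restricted(packed))  # 4 -12.5e3
```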

4.3. Test Group Caveats

- SVG Tiny, MAGE-ML, XAL

  Some candidates are not yet able to preserve DTDs. DTD preservation has therefore been temporarily disabled so as not to penalise the compactness results of the candidates that can preserve them. It will be turned back on as soon as these issues are properly addressed.

- SVG Tiny

  The SVG Tiny test group is also unusual in being present twice: once with the original data and once with a cleaned-up version of the same information. The motivation behind this is simple: many SVG authoring tools include all manner of data in the documents they produce that is by and large useless for the intended applications. Some SVG content publishers publish the output from these authoring tools directly, despite the noticeable extra cost in bandwidth and processing time, while others add a clean-up step to the publication process. Many of the documents in the original test group come from authoring tools that produce "dirty" content, and there are two ways of publishing such content. The "clean" alternative has therefore been added so that, as a whole, the SVG data reflects actual patterns of usage.

A. Test Groups Overview

This appendix describes the various test groups that are currently in the test suite. As described in the Abstract, not all of the test groups at our disposal have yet been included in these runs.

The test suite is organised into "test groups". Each group consists of XML document instances pertaining to the same vocabulary, or to a set of related applications of XML. For each group, information is provided on the type of data that it contains, and on the use cases from the XBC Use Cases document [XBC-UC] to which it relates. Additionally, each group is annotated with its fidelity level requirement, i.e. the lexical reproducibility typically necessary for the group. These levels are taken from the table in the Fidelity Scale section of the Efficient XML Interchange Measurements Note.

5.2. BCAST

- Description

  BAC Broadcast is a vocabulary defined by the OMA for use in the transport of broadcast metadata, notably electronic service guides for mobile devices.

- Use cases

  Metadata in Broadcast Systems

- Fidelity

  Level -1. For the most part, only elements and attributes are required.

- Initial assessment

  In progress.

5.3. CBMS

- Description

  DVB CBMS (Convergence of Broadcast and Mobile Services, formerly known as UMTS) is a set of standards aimed at improving convergence between 3G and broadcast services. The samples in the test suite are used for electronic service guides.

- Use cases

  Metadata in Broadcast Systems

- Fidelity

  Level -1. For the most part, only elements and attributes are required.

- Initial assessment

  In progress.

5.4. DataStore

- Description

  This is a very simple format used to capture and store primitive data.

- Use cases

  XML Documents in Persistent Store

- Fidelity

  Level -1. Only relies on elements and attributes.

- Initial assessment

  In progress.

5.8. Invoice

- Description

  This set of documents was taken from transactions between companies and then obfuscated. It represents typical invoice documents as exchanged between machines both inside and outside companies.

- Use cases

  Intra/Inter Business Communication

- Fidelity

  Level -1. Essentially attributes and elements.

- Initial assessment

  In progress.

5.9. MAGE-ML

- Description

  The Microarray Gene Expression Markup Language (MAGE-ML) is a language designed to describe and communicate information about microarray-based experiments. MAGE-ML is based on XML and can describe microarray designs, microarray manufacturing information, microarray experiment setup and execution information, gene expression data and data analysis results.

- Use cases

  Supercomputing and Grid Processing

- Fidelity

  Level 1. Infoset-level preservation is needed, at least for the Document Type Declaration.

- Initial assessment

  In progress.

5.10. OpenOffice

- Description

  The Open Document Format (ODF) is a set of vocabularies for use within office applications.

- Use cases

  Electronic Documents

- Fidelity

  Level 0. Most constructs found in the XPath 1.0 data model are used, but nothing beyond them.

- Initial assessment

  In progress.

5.12. SVG Tiny

- Description

  Scalable Vector Graphics (SVG) is a language for describing high-quality, two-dimensional, interactive, animated, and scriptable graphics. It is increasingly available in Web browsers, and its Tiny profile is the de facto standard for animated graphics in the mobile industry.

- Use cases

  Electronic Documents, Multimedia XML Documents for Mobile Handsets

- Fidelity

  Level 2. It is not strictly necessary to preserve all of the internal subset. However, SVG is commonly hand-authored (or at least hand-edited after the initial graphics have been created). The internal subset is also frequently used to define entities for commonly used attribute values, and to declare some attributes to be of type ID.

- Initial assessment

  In progress.

5.13. XAL

- Description

  XAL is a software framework for online modelling of particle accelerators. It makes use of XML descriptions of the devices, such as magnets of various field shapes, beam position monitors, and others. It also uses XML documents containing the translation matrices, which describe how the beam propagates from one device to another.

- Use cases

  Floating Point Arrays in the Energy Industry, Sensor Processing and Communication, Supercomputing and Grid Processing

- Fidelity

  Level 1. Infoset-level preservation is needed, at least for the Document Type Declaration.

- Initial assessment

  In progress.