This section is informative.

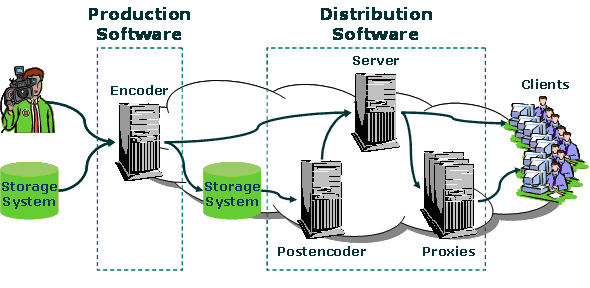

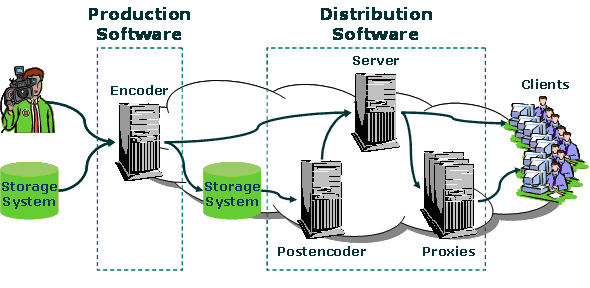

Server playlists allow companies to generate a live stream from a set of contents. The figure above shows how such a system operates. Using stored audio and video files and live streams, a computing system can retrieve and combine them in the way specified in a document called a playlist.

Today these systems work as follows:

- A set of pre-encoded files and live streams is available on the server.

- A document (the playlist) states the order in which these files are to be used.

- The system opens this document and builds a continuous stream of data by putting the files into sequence.

- Metadata may be added to the playlist and, depending on the format, basic timing functionality may be available (such as clipBegin or clipEnd).

But if we have SMIL, why not use it in these systems?

We could have a standard format to define the playlist. We could then use an editor from one company and a server from another, reuse the playlist for different services, and even split these tasks among different companies (production studio/content provider, TV channel/service provider). In a word, we could have "interoperability". Moreover, we could introduce new functionality and build more powerful systems.

So the focus here is having a SERVER-ORIENTED SMIL format.

In this section we will try to define how the server playlists can be

used, and thus, to clarify what the main purpose of this profile is.

The figure depicts how a live service works. Live audio/video services may

use either stored or live contents.

When live contents are used, a tool called an encoder or producer captures audio and video from an external source (e.g., a camera and a microphone), and then generates and sends a continuous stream of data packets to a streaming server. The server then delivers some of these data packets to the users who have requested the information, after applying the necessary changes (changing sequence numbers, removing or adding specific fields in the header of the data packets, etc.).

On the other hand, when stored contents are used, most software providers have their own solutions to generate live streams from a set of previously encoded files. We may call these solutions "postencoders"; the solutions of the main providers are described below.

The live stream is then delivered to the final user through the Internet or broadcast over a conventional radio/TV channel.

RealNetworks is one of the companies that implements this type of system. To generate its live streams, it mainly uses a program called slta. This program reads a plain-text file that lists the paths and names of the files to be used. When the program starts, it opens the playlist file and starts to deliver data packets to the server by putting the files into a sequence. When the program reaches the end of the playlist, or a certain length of this playlist, it may stop or it may read the playlist again and start over (the slta can loop the list of pre-recorded files either a specified number of times or indefinitely). It is also possible to add meta-data. As far as we know, it is impossible to control the layout of the files. For instance, a content provider working for several broadcasters needs to generate different versions of the same contents even when only small variations are needed (such as the insertion of a logo image). Also, timing functionality is very limited and only stored contents can be used. An example of an slta playlist follows:

llandecubel_cabraliega.rm?title="Cabraliega"&author="Llan de Cubel"

corquieu_labarquera_tanda.rm?title="Tanda"&author="Corquieu"

tolimorilla_desitiempupoema-a.rm?title="Desi tiempu"&author="Toli Morilla"

losberrones_10anos_ladelatele-a.rm?title="La de la tele"&author="Los Berrones"

jingle_1-a.rm?title="Jingle Asturies.Com"&author="Asturies.Com Canal UN"

elpresi_laxatapinta-a.rm?title="La xata pinta"&author="El Presi"

tolimorilla_natocintura-a.rm?title="Na to cintura"&author="Toli Morilla"

In the example, the playlist uses 7 files in rm format and each of the

files has two associated meta-data items: a title and the name of the author.

The slta will open these files in a sequence or in random order depending on

an available input parameter.
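To illustrate how little structure such a playlist carries, the following Python sketch splits entries like those above into a file name and its meta-data items. It assumes, based only on the example, that each entry is a file name followed by `?` and `&`-separated `key="value"` pairs. The `parse_entry` helper is hypothetical and is not part of any RealNetworks tool.

```python
import re

# Matches one key="value" pair of the entry's meta-data (assumed syntax).
PAIR_RE = re.compile(r'(\w+)="([^"]*)"')

def parse_entry(line):
    """Split one playlist line into (file name, {meta-data key: value})."""
    path, _, query = line.strip().partition("?")
    return path, dict(PAIR_RE.findall(query))

playlist = '''\
corquieu_labarquera_tanda.rm?title="Tanda"&author="Corquieu"
elpresi_laxatapinta-a.rm?title="La xata pinta"&author="El Presi"
'''

# One (file, meta-data) tuple per non-empty line, in playlist order.
entries = [parse_entry(line) for line in playlist.splitlines() if line.strip()]
```

Everything beyond the order of the files and these flat key/value pairs (timing, layout, alternation with live sources) has no place in this format.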

Other developers are Microsoft (Windows Media Services) and Apple (Darwin/QuickTime Streaming Server). These companies provide what they call Server playlists, together with a management tool to create and manage these lists of contents. A module in the server uses these playlists and performs actions similar to those described for the RealNetworks solution. A reduced subset of SMIL 2.0 is available in the Microsoft solution. As far as we know, it is impossible to control the layout (the elements available in Microsoft Server playlists are those listed at http://msdn.microsoft.com/library/default.asp?url=/library/en-us/wmsrvsdk/htm/playlistelements.asp).

An example of a Microsoft Server Playlist follows:

<?wsx version="1.0"?>

<smil>

  <seq>

    <media src="c:\wmpub\wmroot\audio1.wma" clipBegin="2:42" />

    <media src="c:\wmpub\wmroot\audio2.wma" clipBegin="0:00" />

    <media src="c:\wmpub\wmroot\audio3.wma" clipBegin="2min" />

    <media src="c:\wmpub\wmroot\audio4.wma" clipBegin="0h" dur="30" />

  </seq>

</smil>

In the example, the playlist uses 4 files in wma format which will be

opened sequentially with different starting points along their internal

timeline.
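The clipBegin and dur values in the example use several notations. The following Python sketch converts them to seconds, assuming they follow the SMIL clock-value syntax (full or partial clock values such as 2:42, and timecount values with an optional h, min, s or ms metric, seconds by default). The `clock_to_seconds` function is illustrative only, and metric prefixes such as `npt=` are not handled.

```python
import re

# Timecount value: a number with an optional metric (assumed default: seconds).
_TIMECOUNT = re.compile(r'^(\d+(?:\.\d+)?)(h|min|s|ms)?$')
_UNITS = {"h": 3600.0, "min": 60.0, "s": 1.0, "ms": 0.001, None: 1.0}

def clock_to_seconds(value):
    """Convert a clock value such as '2:42', '2min' or '30' to seconds."""
    value = value.strip()
    if ":" in value:
        parts = value.split(":")
        if len(parts) not in (2, 3):
            raise ValueError("not a clock value: %r" % value)
        seconds = float(parts[-1])          # may carry a fraction
        minutes = int(parts[-2])
        hours = int(parts[0]) if len(parts) == 3 else 0
        return hours * 3600 + minutes * 60 + seconds
    match = _TIMECOUNT.match(value)
    if match is None:
        raise ValueError("not a clock value: %r" % value)
    return float(match.group(1)) * _UNITS[match.group(2)]
```

Under these assumptions the four clipBegin values in the example map to 162, 0, 120 and 0 seconds, and `dur="30"` to 30 seconds.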

So the basic idea behind the Server Playlist Profile is to:

- Standardize the playlists used by different providers. Depending on the format of the files to be used, different servers could share the same playlist, so if the technology is changed, the playlists can easily be installed in the new solution. Also, different authoring tools could be used to generate these playlists regardless of the type of server in use.

- Incorporate new functionality into these products. For instance, live and stored contents could alternate in the same playlist, layout could be used to build complex streams similar to those of a conventional TV channel, and complex timing features could be used. These features are not available in some of the aforementioned solutions; more examples are provided in the following sections.

As mentioned before, the most popular multimedia platforms support server playlists in different ways. A good starting point would be to use SMIL in all of these server playlists. For these platforms, the migration to SMIL has three levels of complexity:

Migration Table

| Complexity | Functionality | Comments |
| --- | --- | --- |
| Low | To allow either audio or video media objects; to allow metadata associated to the SMIL file or to each of the media items in the document; to allow sequences; to avoid the usage of any layout functionality | All this functionality is currently supported by Microsoft, and RealNetworks only needs to update the parser of the slta. |
| Medium | To support clipBegin, clipEnd, dur, begin, end, repeatCount and repeatDur; to consider excl; to consider switch (to define alternative sources for redundancy purposes) | Supported by Microsoft, but RealNetworks requires considerable changes in the slta. |
| High | Changes in layout | Not supported, requires deep changes in all platforms |

Most server playlist technologies (at least RealNetworks) only allow the use of stored contents. More complex services are possible, in which two types of companies are involved: content providers and broadcasters.

Content providers may generate contents and sell them to broadcasters. Broadcasters, in turn, may combine the contents of different providers in order to have a continuous stream of contents (24/7).

For example, TVE, BBC and CNN (broadcasters) buy a football match from AudioVisual Sport (a sports content provider). The match is transmitted from the AudioVisual Sport server, and each broadcaster would like to broadcast it combined with other contents according to its own program schedule. One example is shown in the following table, in which the broadcaster combines the contents of different content providers with its own contents (either stored or live).

TV Channel Time Table

| Begin | End | Program | Provider | Type |
| --- | --- | --- | --- | --- |
| 20:00 | 21:00 | News | Own | Live |
| 21:00 | 22:00 | Coronation Avenue | Equirrel Productions | Stored |
| 22:00 | 22:30 | Who wants to be poor? | Funny Productions Inc. | Stored |
| 22:30 | 00:30 | Football: Barcelona C.F vs Manchester United | AudioVisual Sport | Live |
| 00:30 | 01:00 | News | Own | Live |
| 01:00 | 02:15 | International Cinema: Xicu'l Toperu | Producciones Esbardu | Stored |

Another example is a broadcaster that has a common set of contents plus certain slots in which specific contents are broadcast depending on the region (in local languages, reporting local news, etc.). Each local transmission may retrieve the general contents as stated in its playlist and combine them with other contents designed for that particular region.

Regional TV Channel Time Table

| Begin | End | Program | Provider | Type |
| --- | --- | --- | --- | --- |
| 9:00 | 14:00 | National programs | National TV | Live |
| 14:00 | 15:00 | Regional News | Own | Live |
| 15:00 | 16:00 | Daily report: The salmon | Own | Stored |
| 16:00 | 00:30 | National programs | National TV | Live |
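As a rough illustration of how such a time table could map onto a playlist, the following Python sketch turns the regional schedule into a seq of media elements, each with a dur computed from its slot. All source URLs are hypothetical placeholders, the choice of the video element for every slot is an assumption, and the namespace declaration is omitted for brevity.

```python
from datetime import datetime, timedelta
import xml.etree.ElementTree as ET

# (begin, end, program, source); every source URL is a made-up placeholder.
SCHEDULE = [
    ("09:00", "14:00", "National programs", "rtsp://national.example/live"),
    ("14:00", "15:00", "Regional News", "rtsp://regional.example/studio"),
    ("15:00", "16:00", "Daily report: The salmon", "file:///media/salmon.mp4"),
    ("16:00", "00:30", "National programs", "rtsp://national.example/live"),
]

def slot_seconds(begin, end):
    """Length of a slot in seconds; an end time at or before begin wraps past midnight."""
    start = datetime.strptime(begin, "%H:%M")
    stop = datetime.strptime(end, "%H:%M")
    if stop <= start:
        stop += timedelta(days=1)
    return int((stop - start).total_seconds())

def build_playlist(schedule):
    """Build smil/body/seq with one video element per schedule slot."""
    smil = ET.Element("smil")
    seq = ET.SubElement(ET.SubElement(smil, "body"), "seq")
    for begin, end, title, src in schedule:
        ET.SubElement(seq, "video", src=src, title=title,
                      dur="%ds" % slot_seconds(begin, end))
    return smil

document = ET.tostring(build_playlist(SCHEDULE), encoding="unicode")
print(document)
```

Live and stored sources sit side by side in the same sequence, which is the kind of alternation the profile is meant to allow.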

This section is normative.

This version of SMIL provides a definition of strictly conforming Server

Playlist Profile documents, which are restricted to tags and attributes from

the SMIL 3.0 namespace. In the future, the language described in this profile

may be extended by other W3C Recommendations, or by private extensions. For

these extensions, the following rules must be obeyed:

- All elements introduced in extensions must have a skip-content attribute if it

should be possible that their content is processed by Server Playlist

Profile user agents.

- Private extensions must be introduced by defining a new XML

namespace.

Conformant Server Playlist Profile user agents are expected to handle

documents containing extensions that obey these two rules.

The Server Playlist Profile is a conforming SMIL 3.0 specification. The rules for defining conformant documents are provided in the conformance section of the SMIL 3.0 Language Profile document. Note that while that section is written for the SMIL 3.0 Language Profile, all of its rules apply to the Server Playlist Profile as well, with the exception that the Server Playlist Profile's namespace should be used instead of the SMIL 3.0 Language Profile's namespace and the Server Playlist Profile's DOCTYPE declaration should be used instead of the SMIL 3.0 Language Profile DOCTYPE declaration.

Documents written for the Server Playlist Profile must declare a default namespace for their elements with an xmlns attribute on the smil root element, using the profile's identifier URI:

<smil xmlns="http://www.w3.org/2006/SMIL30/WD/ServerPlaylist">

...

</smil>

The default namespace declaration must be

xmlns="http://www.w3.org/2006/SMIL30/WD/ServerPlaylist"
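A processor could check this requirement roughly as follows. This Python sketch uses the standard xml.etree.ElementTree module and only verifies that the root element is a smil element in the profile's namespace; it cannot tell whether the namespace was declared as the default one or through a prefix. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

SERVER_PLAYLIST_NS = "http://www.w3.org/2006/SMIL30/WD/ServerPlaylist"

def has_profile_namespace(document):
    """True if the root element is smil in the Server Playlist namespace."""
    root = ET.fromstring(document)
    # ElementTree exposes namespaced tags as "{namespace-uri}local-name".
    return root.tag == "{%s}smil" % SERVER_PLAYLIST_NS
```

For instance, `has_profile_namespace('<smil xmlns="http://www.w3.org/2006/SMIL30/WD/ServerPlaylist"/>')` holds, while a bare `<smil/>` document fails the check.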

Language designers and implementors wishing to extend the Server Playlist

Profile must consider the implications of the use of namespace extension

syntax. Please consult the section on Scalable Profiles for

restrictions and recommendations for best practice when extending SMIL.

Server Playlist Profile DOCTYPE declaration

A SMIL 3.0 document can contain the following DOCTYPE declaration:

The SMIL 3.0 Mobile Profile DOCTYPE is:

<!DOCTYPE smil PUBLIC "-//W3C//DTD SMIL 3.0 Mobile//EN" "http://www.w3.org/2006/SMIL30/WD/SMIL30Mobile.dtd">

If a document contains this declaration, it must be a valid XML

document.

Note that this implies that extensions to the syntax defined in the DTD are

not allowed. If the document is invalid, the user agent should issue an

error.

Since the Server Playlist Profile defines a conforming SMIL document, the rules for defining conformant user agents are the same as those provided in the Conforming SMIL 3.0 Language User Agents section of the SMIL 3.0 Language Profile document, with the exception that the conforming user agent must support the Server Playlist Profile's namespace instead of the SMIL 3.0 Language Profile's namespace.

The Server Playlist Profile supports the SMIL features for basic multimedia presentations. It uses only modules from the SMIL 3.0 Recommendation. As the language profile includes the mandatory modules, it is a SMIL Host Language conforming language profile. This language profile includes the following SMIL 3.0 modules:

The collection names contained in the following table define the Server

Playlist Profile vocabulary.

SMIL 3.0 Server Playlist Profile

| Collection Name | Elements in Collection |
| --- | --- |
| ContentControl | switch |
| Layout | region, root-layout, layout, regPoint |
| MediaContent | text, img, audio, video, ref, textstream |
| Metainformation | meta, metadata |
| Structure | smil, head, body |
| Schedule | par, seq |
| Transition | transition |
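The table can be used directly as a lookup. The following Python sketch lists the element names in a document that do not appear in any collection above. It is only a vocabulary check against this table, not a validator: content models and attributes are ignored, and elements that appear in the content models later in this document but not in the table are reported as well. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Collections exactly as listed in the table above.
COLLECTIONS = {
    "ContentControl": {"switch"},
    "Layout": {"region", "root-layout", "layout", "regPoint"},
    "MediaContent": {"text", "img", "audio", "video", "ref", "textstream"},
    "Metainformation": {"meta", "metadata"},
    "Structure": {"smil", "head", "body"},
    "Schedule": {"par", "seq"},
    "Transition": {"transition"},
}
VOCABULARY = set().union(*COLLECTIONS.values())

def elements_outside_vocabulary(document):
    """Return the sorted local names of elements not listed in the table."""
    root = ET.fromstring(document)
    # Strip any "{namespace}" part so only the local name is compared.
    names = {element.tag.rsplit("}", 1)[-1] for element in root.iter()}
    return sorted(names - VOCABULARY)
```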

In the following sections, we define the set of elements and attributes

used in each of the modules included in the Server Playlist Profile. The

content model for each element is described. The content model of an element

is a description of elements which can appear as its direct children. The

special content model "EMPTY" means that a given element may not have

children.

The id, class and title attributes in the collection Core are

defined for all the elements of the Server Playlist Profile. The id attribute is used in the Server Playlist

Profile to assign a unique XML identifier to every element in a SMIL

document.

A conforming Server Playlist Profile document should not use the SMIL 1.0 attributes that have been deprecated in SMIL 2.0. Server Playlist Profile implementations are not required to support these attributes, as this would be considered an unjustified burden for the targeted constrained devices. The unsupported deprecated SMIL 1.0 attributes are the following: anchor, background-color, clip-begin, clip-end, repeat; and the additional deprecated test attributes of Content Control: system-bitrate, system-captions, system-language, system-required, system-screen-size, and system-screen-depth.

The Layout Modules provide a framework for spatial layout of visual components. The Layout Modules define semantics for the region, root-layout, layout and regPoint elements. The Server Playlist Profile includes the Layout functionality of the BasicLayout, AudioLayout, BackgroundTilingLayout, and AlignmentLayout modules.

In the Server Playlist Profile, Layout elements can have the following attributes and content model:

Layout Module

| Elements | Attributes | Content model |
| --- | --- | --- |
| region | Core, I18n, Test, backgroundColor, showBackground (always \| whenActive), bottom, fit (fill \| hidden \| meet \| scroll \| slice), width, height, left, right, top, soundLevel, z-index, skip-content, regionName | EMPTY |
| root-layout | Core, I18n, Test, backgroundColor, width, height, skip-content | EMPTY |
| layout | Core, I18n, Test, type | (root-layout \| region \| regPoint)* |
| regPoint | Core, I18n, Test, top, bottom, left, right, regAlign (topLeft \| topMid \| topRight \| midLeft \| center \| midRight \| bottomLeft \| bottomMid \| bottomRight), skip-content | EMPTY |

This profile adds the layout element to the content model of the head element of the Structure Module.

The Media Object Modules provide a framework for declaring media. The Media Object Modules define semantics for the ref, audio, img, video, text, and textstream elements. The Server Playlist Profile includes the Media Object functionality of the BasicMedia and MediaClipping modules.

In the Server Playlist Profile, media elements can have the following attributes and content model:

Media Object Module

| Elements | Attributes | Content model |
| --- | --- | --- |
| text, img, audio, video, ref, textstream | Core, I18n, Timing, Test, region, fill (freeze \| remove \| hold \| transition \| auto \| default), author, copyright, abstract, src, type, erase, mediaRepeat, paramGroup, sensitivity, transIn, transOut, clipBegin, clipEnd, endsync, mediaAlign, regPoint, regAlign, soundAlign, soundLevel | (param \| area \| switch)* |

The Metainformation Module provides a framework for describing a document, either to inform the human user or to assist in automation. The Metainformation Module defines semantics for the meta and metadata elements. The Server Playlist Profile includes the Metainformation functionality of the Metainformation module.

In the Server Playlist Profile, Metainformation elements can have the following attributes and content model:

This profile adds the meta element to the content model of the head element of the Structure Module.

The content model of metadata is empty. Profiles that extend the Server Playlist Profile may define their own content model of the metadata element.

The Structure Module provides a framework for structuring a SMIL document.

The Structure Module defines semantics for the smil, head, and body elements. The Server Playlist Profile

includes the Structure functionality of the Structure module.

In the Server Playlist Profile, the Structure elements can have the

following attributes and content model :

Structure Module

| Elements | Attributes | Content model |
| --- | --- | --- |
| smil | Core, I18n, Test, xmlns | (head?,body?) |
| head | Core, I18n | (meta*,(metadata,meta*)?,((layout\|switch),meta*)?, (transition+,meta*)?, (paramGroup+,meta*)?) |
| body | Core, I18n, Timing, fill, abstract, author, copyright | (Schedule \| MediaContent \| ContentControl \| a)* |

The body element acts as the root element to span the timing tree. The body element has the behavior of a seq element. Timing on the body element is supported. The syncbase of the body element is the application begin time, which is implementation dependent, as is the application end time. Note that the effect of fill on the body element is between the end of the presentation and the application end time, and therefore the effect of fill is implementation dependent.

The Timing and Synchronization Modules provide a framework for describing timing structure, timing control properties and temporal relationships between elements. The Timing and Synchronization Modules define semantics for the par and seq elements. In addition, these modules define semantics for attributes including begin, dur, end, repeatCount, repeatDur, min and max. The Server Playlist Profile includes the Timing and Synchronization functionality of the BasicInlineTiming, EventTiming, MinMaxTiming, RepeatTiming and BasicTimeContainers modules.

In the Server Playlist Profile, Timing and Synchronization elements can have the following attributes and content model:

Timing and Synchronization Module

| Elements | Attributes | Content model |
| --- | --- | --- |
| par | Core, I18n, Timing, Test, endsync, fill (freeze \| remove \| hold \| auto \| default), abstract, author, copyright, region | (Schedule \| MediaContent \| ContentControl \| a)* |
| seq | Core, I18n, Timing, Test, fill (freeze \| remove \| hold \| auto \| default), abstract, author, copyright, region | (Schedule \| MediaContent \| ContentControl \| a)* |

The Attribute collection Timing is defined as follows:

This profile adds the par and seq elements to the content model of the body element of the Structure Module.

Elements of the Media Object Modules have the attributes describing timing and properties of contents.

The Server Playlist Profile specifies which types of events can be used as part of the begin and end attribute values. The supported events are described as Event-symbols according to the syntax introduced in the SMIL Timing and Synchronization module.

The supported event symbols in the Server Playlist Profile are:

| Event | Example |
| --- | --- |
| focusInEvent (In DOM Level 2: "DOMFocusIn") | end="foo.focusInEvent" |
| focusOutEvent (In DOM Level 2: "DOMFocusOut") | begin="foo.focusOutEvent" |
| activateEvent (In DOM Level 2: "DOMActivate") | begin="foo.activateEvent" |
| beginEvent | begin="foo.beginEvent" |
| endEvent | end="foo.endEvent" |
| repeatEvent | end="foo.repeatEvent" |
| inBoundsEvent | end="foo.inBoundsEvent" |
| outOfBoundsEvent | begin="foo.outOfBoundsEvent" |
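The values in the table all have the shape element id, dot, event symbol. The following Python sketch splits such a value and checks the symbol against the table. It is a simplification under stated assumptions: offsets such as `+5s`, escaped dots inside ids and qualified (prefixed) event names are not handled, and both function names are hypothetical.

```python
# Event symbols listed in the table above.
SUPPORTED_EVENTS = frozenset({
    "focusInEvent", "focusOutEvent", "activateEvent", "beginEvent",
    "endEvent", "repeatEvent", "inBoundsEvent", "outOfBoundsEvent",
})

def parse_event_value(value):
    """Split 'foo.beginEvent' into ('foo', 'beginEvent').

    The id is None when the value names only an event symbol.
    """
    element_id, _, symbol = value.strip().rpartition(".")
    return (element_id or None), symbol

def is_supported(value):
    """True if the event symbol of the value appears in the table."""
    return parse_event_value(value)[1] in SUPPORTED_EVENTS
```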

As defined by the SMIL

syncbase timing semantics, any event timing attributes that reference an

invalid time-value description will be treated as if "indefinite" were

specified.

- focusInEvent:

- Raised when a media element gets the keyboard focus in its rendering

space, i.e., when it becomes the media element to which all subsequent

keystroke-event information is passed. Once an element has the keyboard

focus, it continues to have it until a user action or DOM method call

either removes the focus from it or gives the focus to another media

element, or until its rendering space is removed. Only one media

element can have the focus at any particular time. The focusInEvent is

delivered to media elements only, and does not bubble.

- focusOutEvent:

- Raised when a media element loses the keyboard focus from its

rendering space, i.e., when it stops being the media element to which

all subsequent keystroke-event information is passed. The focusOutEvent

is delivered to media elements only, and does not bubble.

- activateEvent:

- Raised when a media element is activated by user input such as by a

mouse click within its visible rendering space or by specific

keystrokes when the element has the keyboard focus. The activateEvent

is delivered to media elements only, and does not bubble.

- beginEvent:

- Raised when the element actually begins playback of its active

duration. If an element does not ever begin playing, this event is

never raised. If an element has a repeat count, beginEvent is only

raised at the beginning of the first iteration. The beginEvent is

delivered to elements that support timing, such as media elements and

time containers, and does not bubble.

- endEvent:

- Raised when an element actually ends playback; this is when its

active duration is reached or whenever a playing element is stopped. In

the following example,

<ref id="x" end="30s" src="15s.mpg" />

<ref id="y" end="10s" src="20s.mpg" />

<ref id="z" repeatCount="4" src="5s.mpg" />

x.endEvent occurs at roughly 30s when the active duration is

reached, y.endEvent occurs at roughly 10s when the playback of the

continuous media is ended early by the active duration being reached,

and z.endEvent occurs at roughly 20s when the fourth and final repeat

has completed, thus reaching the end of its active duration. The

endEvent is delivered to elements which support timing, such as media

elements and time containers, and does not bubble.

- repeatEvent:

- Raised when the second and subsequent iterations of a repeated

element begin playback. An element that has no repeatDur, repeatCount, or repeat attribute but that plays two

or more times due to multiple begin times will not raise a repeatEvent

when it restarts. Also, children of a time container that repeats will

not raise their own repeatEvents when their parent repeats and they

begin playing again. The repeatEvent is delivered to elements which

support timing, such as media elements and time containers, and does

not bubble.

- inBoundsEvent:

- Raised when one of the following happens:

- by any input from the mouse or other input device that brings the

mouse cursor from outside to within the bounds of a media element's

rendering space, regardless of what part, if any, of that rendering

space is visible at the time, i.e., z-order is not a factor.

- by any other action that moves the "cursor" or "pointer", as

defined by the implementation, from outside to within the bounds of

a media element's rendering space, regardless of what part, if any,

of that rendering space is visible at the time, i.e., z-order is

not a factor. An implementation may decide, for instance, to raise

an inBoundsEvent on an element whenever it gets the focus,

including when keystrokes give it the focus.

A media element's bounds are restrained by the bounds of the region in which it is contained, i.e., a media element's bounds do not extend beyond its region's bounds. The inBoundsEvent is delivered to media elements only, and does not bubble.

Note that, unlike keyboard focus, which can only be active on one object at a time, the state of being within an object's bounds can be true for multiple objects simultaneously. For instance, if one object is on top of another and the cursor is placed on top of both objects, both would have raised an inBoundsEvent more recently than the raising of any respective outOfBoundsEvent. If a player does not support a pointer cursor, it will typically not generate the inBoundsEvent and outOfBoundsEvent events.

- outOfBoundsEvent:

- Raised when one of the following happens:

- by any input from the mouse or other input device that brings the

mouse cursor from within to outside the bounds of a media element's

rendering space, regardless of what part, if any, of that rendering

space is visible at the time,

- by any other action that moves the "cursor" or "pointer", as

defined by the implementation, from within to outside the bounds of

a media element's rendering space, regardless of what part, if any,

of that rendering space is visible at the time.

A media element's bounds are restrained by its region's bounds,

i.e., a media element's bounds do not extend beyond its region's

bounds. The outOfBoundsEvent is delivered to media elements only, and

does not bubble.

There will be cases where events occur simultaneously. To ensure that each Server Playlist Profile implementation handles them in the same order, the following order must be used to resolve ties:

1. inBoundsEvent

2. focusInEvent (should follow 1)

3. activateEvent (should follow 2)

4. outOfBoundsEvent

5. focusOutEvent (should follow 4)

6. endEvent

7. beginEvent (must follow 6)

8. repeatEvent

Events are listed in order of precedence, e.g., if event #6 in this list occurs at the same time as event #7, then #6 must be raised prior to #7.

The inBoundsEvent, focusInEvent, outOfBoundsEvent, activateEvent, and focusOutEvent events do not bubble and are delivered to the target media element.

The beginEvent, endEvent and repeatEvent events do not bubble and are delivered to the timed element on which the event occurs.
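The tie-breaking order above can be sketched as a sort over the events raised at the same instant. In this Python sketch the representation of an event as a `(target id, event name)` pair and the function name are assumptions made for illustration. Because Python's sort is stable, events of the same type keep the order in which they were queued.

```python
# Precedence list as given above, highest precedence first.
EVENT_PRECEDENCE = [
    "inBoundsEvent", "focusInEvent", "activateEvent", "outOfBoundsEvent",
    "focusOutEvent", "endEvent", "beginEvent", "repeatEvent",
]
_RANK = {name: rank for rank, name in enumerate(EVENT_PRECEDENCE)}

def order_simultaneous(events):
    """Order (target_id, event_name) pairs that occur at the same time."""
    return sorted(events, key=lambda event: _RANK[event[1]])

queued = [("a", "beginEvent"), ("b", "endEvent"), ("c", "inBoundsEvent")]
ordered = order_simultaneous(queued)
```

Here `ordered` raises the inBoundsEvent on c first, then the endEvent on b, then the beginEvent on a.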

The Server Playlist Profile supports an extensible set of events. To resolve possible name conflicts with the events supported in this profile, qualified event names are supported: namespace prefixes are used to qualify the event names. As a result, the colon is reserved in begin and end attributes for qualifying event names.

For example:

<smil ... xmlns:example="http://www.example.com">

<img id="foo" .../>

<audio begin="foo.example:focusInEvent".../>

...

</smil>
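A qualified value such as the one in the example can be taken apart as follows. This Python sketch assumes that the prefix-to-URI mapping has already been collected from the xmlns declarations in scope, and it ignores offsets and escaped characters; the function name is hypothetical.

```python
def split_qualified_event(value, namespaces):
    """Split 'foo.example:focusInEvent' into (id, namespace URI, event name).

    `namespaces` maps prefixes to namespace URIs; the URI is None when the
    event name carries no prefix.
    """
    element_id, _, event = value.strip().rpartition(".")
    prefix, colon, local_name = event.partition(":")
    if not colon:
        return (element_id or None), None, event
    return (element_id or None), namespaces[prefix], local_name

in_scope = {"example": "http://www.example.com"}
result = split_qualified_event("foo.example:focusInEvent", in_scope)
```

A prefix that is not declared raises a KeyError in this sketch; a real implementation would have to decide how to treat such a value.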

A SMIL document's begin time is defined as the moment a user agent begins the timeline for the overall document. A SMIL document's end time is defined as equal to the end time of the body element.

The Transition Effects Modules provide a framework for describing transitions such as fades and wipes. The Transition Modules define semantics for the transition element. The Server Playlist Profile includes the functionality of the BasicTransitions and FullScreenTransitions modules.

In the Server Playlist Profile, Transition Effects elements have the following attributes and content model:

Transition Effects Module

| Elements | Attributes | Content model |
| --- | --- | --- |
| transition | Core, I18n, Test, dur, type, subtype, startProgress, endProgress, direction, fadeColor, scope, skip-content | EMPTY |

This profile adds the transition element to the content model of the head element of the Structure Module.

The Transition Effects Modules add transIn and transOut attributes to ref, audio, img, video, text and textstream elements of the Media Object Modules.

The Transition Effects Modules add the transition value to the fill attribute for all elements on which this value of the fill attribute is supported.