The Media Analysis Management Interface (MAMI) enables the understanding of the real world at a low cost by using analysis engines such as video image processing engines, sensor data analysis engines, and so on.

It also enables various services to be easily provided, such as physical security, environmental load reduction, and intelligent accessibility services.

The MAMI Incubator Group (MAMI-XG) discussed the requirements and determined the feasibility of the MAMI, which consists of the data models and exchange protocols for the analysis data of various media. In this document, the findings of the group are summarized.

1 Background

The number of systems required to respond to situations in the real world is increasing.

Examples of these systems in use are physical security and environmental load reduction services.

In particular, the number of image analysis services is increasing rapidly with marked improvement in image-recognition technologies.

Various sensors for collecting various types of data and analysis engines analyzing those data are used to realize such services.

For example, human and vehicle trajectories extracted from video images are used for security and marketing services.

In one example, information on age, sex, and clothes extracted from images of people is used for an information retrieval service.

Electric power data gathered from wattmeters is used for equipment control for energy saving.

However, such systems require a lot of specialized software depending on the features of individual services or analysis engines.

In addition, specialized knowledge about individual analysis engines is required to use these analysis engines.

This causes a rise in development cost and time.

It also restricts analysis engine usage.

In contrast, lowering system cost would promote the wider adoption of systems that use analysis engines.

For that purpose, an architecture and a standard interface for reducing cost are required.

2 Objective and Scope

In the MAMI-XG, we discussed a system architecture, use cases, and the requirements of an interface in order to specify an interface standard.

The target standard defines an interface between the analysis data manager and applications and between the analysis data manager and analysis engines.

It also defines a framework for supporting multiple vocabularies, but specific vocabulary definitions are out of the scope of this research.

Implementing the analysis data manager is out of the scope of this research as well.

3 Related Standards

There are standardized APIs in specific fields such as surveillance camera control or biometrics. Some of them include the output format of an analysis engine.

The Open Network Video Interface Forum (ONVIF) and ISO/IEC JTC1 SC37 (Biometrics) are examples of that.

MPEG-7 and SPARQL are related general-purpose standards.

The Multimodal Interaction Working Group from the W3C also specifies related standards.

3.1 ONVIF

ONVIF defines a standard for network cameras.

It specifies APIs for camera discovery, for setting and reading control parameters, and for retrieving images and the output of image analyses.

It also handles standards for object detection.

Building a complex system that uses more than one analysis engine requires combining several such standards.

Therefore, system developers need additional knowledge.

3.2 ISO/IEC JTC1 SC37 (Biometrics)

ISO/IEC JTC 1/SC 37 specifies common APIs and data exchange formats for biometric authentication such as fingerprint or face recognition.

3.3 MPEG-7

MPEG-7 is a standard for metadata description which is associated with multimedia content such as voice or image data.

It includes a standard for face recognition results.

MPEG-7 is limited to the metadata description of multimedia content and cannot treat other data such as sensor analysis data.

3.4 SPARQL

SPARQL is a W3C Recommendation that defines a query language for RDF.

It does not specialize in analysis data.

SPARQL is a general-purpose standard for RDF retrieval. Therefore, it is not efficient enough for handling the results of analysis engines or for expressing and operating on analysis data.

3.5 Multimodal Interaction Working Group (MMI-WG)

The MMI-WG standardizes Extensible MultiModal Annotations (EMMA) and the Multimodal Architecture.

EMMA is an interface for the Multimodal Architecture and is published as a W3C Recommendation.

It treats input by human interaction such as voice or ink data.

The Multimodal Architecture aims to integrate information from multiple input and output devices.

4 System Architecture

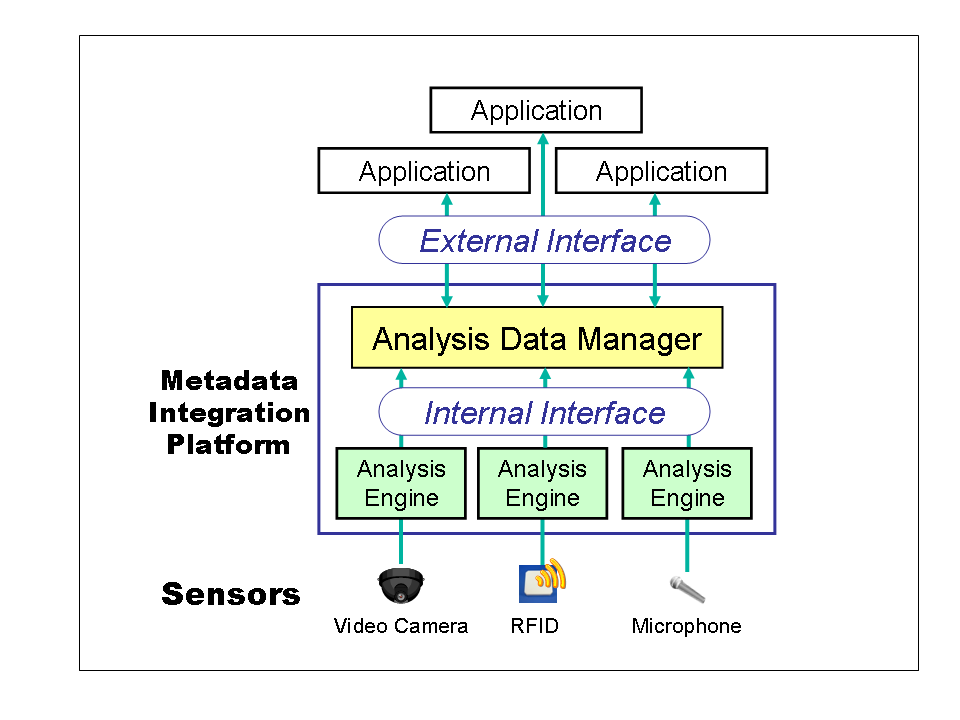

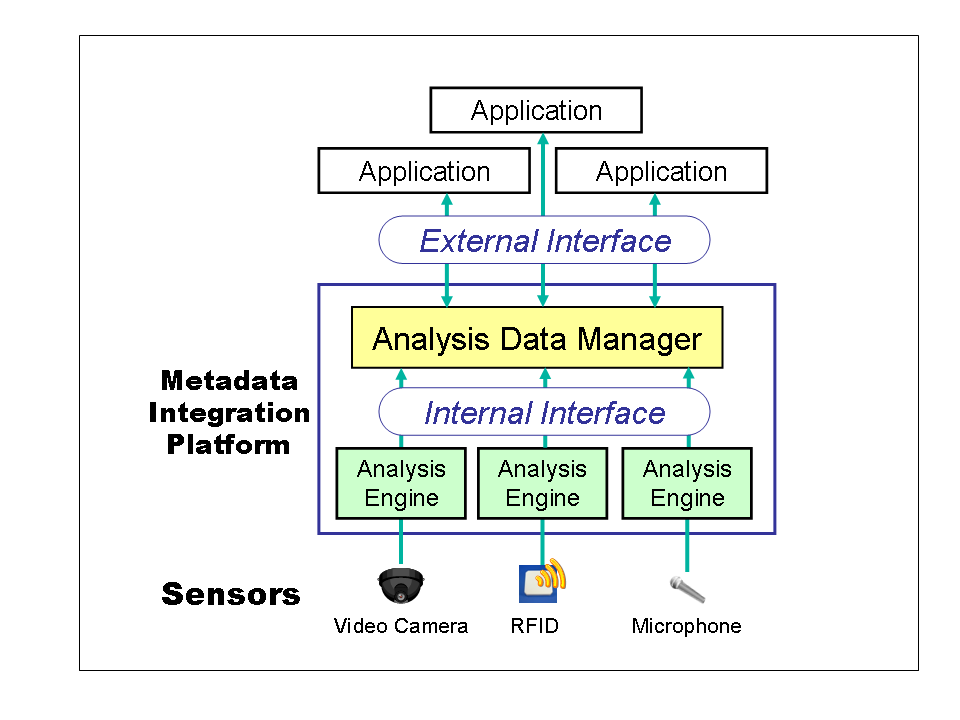

We propose a system architecture that reduces the development cost of systems that use analysis engines.

The metadata integration platform (MIP) is placed between applications and sensors.

It integrates analysis data and provides them to applications (see the architecture diagram in this section).

4.1 Applications

Applications use results of analysis engines.

4.2 External Interface

The external interface is an interface through which applications retrieve analysis data from the MIP. Through this interface, applications can clip the required data from the analysis data managed in the MIP.

4.3 Metadata Integration Platform

The metadata integration platform (MIP) consists of the analysis data manager and analysis engines. It integrates the analysis data and provides them to applications.

4.4 Analysis Data Manager

The functions of the analysis data manager are to:

- invoke analysis engines

- store, integrate and retrieve analysis results generated by analysis engines

- provide APIs to applications and analysis engines.
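The three functions listed above can be illustrated with a minimal in-memory sketch. This report does not define a concrete API, so every name below (`AnalysisResult`, `AnalysisDataManager`, `register_engine`, `invoke`, `retrieve`) is a hypothetical chosen for illustration only.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class AnalysisResult:
    """One analysis result; all field names are hypothetical."""
    engine: str                      # name of the engine that produced it
    kind: str                        # e.g. "human_detection"
    data: Dict[str, Any] = field(default_factory=dict)


class AnalysisDataManager:
    """Toy manager: invokes engines, stores results, answers retrievals."""

    def __init__(self) -> None:
        self._engines: Dict[str, Callable[[Any], List[AnalysisResult]]] = {}
        self._store: Dict[str, List[AnalysisResult]] = defaultdict(list)

    def register_engine(self, name, engine) -> None:
        self._engines[name] = engine

    def invoke(self, name, raw_input) -> List[AnalysisResult]:
        """Invoke an engine and store whatever it returns."""
        results = self._engines[name](raw_input)
        for result in results:
            self._store[result.kind].append(result)
        return results

    def retrieve(self, kind, predicate=lambda r: True) -> List[AnalysisResult]:
        """Return stored results of one kind that satisfy the predicate."""
        return [r for r in self._store.get(kind, []) if predicate(r)]


def fake_human_detector(frame_number):
    # Stand-in for a real engine: pretends two people were found.
    return [AnalysisResult("human_detector", "human_detection",
                           {"frame": frame_number, "count": 2})]


manager = AnalysisDataManager()
manager.register_engine("human_detector", fake_human_detector)
manager.invoke("human_detector", 17)
hits = manager.retrieve("human_detection", lambda r: r.data["count"] > 1)
```

In this sketch, applications only call `retrieve` and never touch an engine directly, which is the separation the architecture aims for.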

4.5 Internal Interface

The internal interface is the interface through which the analysis data manager receives analysis data from various analysis engines.

Through this interface, the analysis data manager stores and manages the analysis data retrieved from various analysis engines.

4.6 Analysis Engines

Analysis engines analyze raw data from various sensors and generate analysis results which show the status of the real world.

4.7 Sensors

Sensors collect various data from the real world through rays of light, radio waves, sound waves, and so on.

5 Requirements of Interfaces

We discussed a common interface standard that hides the individuality of each analysis engine.

Analysis results expressed by the interface are then used commonly between services and engines.

5.1 External Interface

- Each analysis engine must be available to multiple applications without extensive customization.

The interface enables the use of analysis engines with a common description format and hides the individuality of each analysis engine.

As a result, application developers can develop systems efficiently without knowing the details of each engine.

- Analysis results must be reusable by applications.

Applications that have different targets such as security and energy saving can use common data sets.

Additionally, it should be possible to generate a new data set that is integrated from different data sets.

Then applications and engines can use the new data set.

- The interface must support the cross-referenced results of an analysis.

Analysis results may include graph structures.

For example, several source nodes may refer to the same destination node, or an analysis result may be ambiguous and point to more than one candidate.

Therefore, a standard for various analysis results needs to support graph structures easily.
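As a rough illustration of the graph requirement, the sketch below models analysis results as nodes and cross-references as weighted edges. The class `ResultGraph`, the relation name `depicts`, and the node identifiers are hypothetical and not part of any MAMI definition.

```python
from collections import defaultdict


class ResultGraph:
    """Analysis results as nodes; cross-references as directed edges."""

    def __init__(self):
        self.nodes = {}                  # node id -> attribute dict
        self.edges = defaultdict(list)   # source id -> [(relation, target, confidence)]

    def add_node(self, node_id, **attrs):
        self.nodes[node_id] = attrs

    def link(self, source, relation, target, confidence=1.0):
        self.edges[source].append((relation, target, confidence))

    def sources_of(self, target):
        """Return ids of all nodes that refer to the given target."""
        return sorted(s for s, links in self.edges.items()
                      if any(t == target for _, t, _ in links))

    def candidates(self, source, relation):
        """Return (target, confidence) pairs, most likely first."""
        found = [(t, c) for r, t, c in self.edges[source] if r == relation]
        return sorted(found, key=lambda pair: pair[1], reverse=True)


g = ResultGraph()
g.add_node("person:1", kind="person")
g.add_node("person:2", kind="person")
for face_id in ("face:a", "face:b", "face:c"):
    g.add_node(face_id, kind="face_image")

# Two face images refer to the same person (shared destination node).
g.link("face:a", "depicts", "person:1")
g.link("face:b", "depicts", "person:1")
# An ambiguous result: one face image with two candidate persons.
g.link("face:c", "depicts", "person:1", confidence=0.6)
g.link("face:c", "depicts", "person:2", confidence=0.4)

shared = g.sources_of("person:1")
ambiguous = g.candidates("face:c", "depicts")
```

A tree-shaped format could not express either case without duplicating the `person:1` node, which is why the requirement asks for graph support.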

5.2 Internal Interface

- Each analysis engine must be available to multiple applications without extensive customization.

The interface enables the use of analysis engines with a common description format and hides the individuality of each analysis engine.

Engine developers need not customize engines for each application.

This expands the opportunity to use these engines.

- The interface must treat many kinds of analysis results.

Analysis results include various types of data depending on the analysis target or usage.

In addition, they may change due to developments in technology, making it difficult for a single vocabulary set to support all kinds of analysis results.

Therefore, a framework for multi-vocabulary exchanges is necessary so that the interface can accommodate changes in format and operation with only a subset of the vocabulary.
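One possible shape for such a framework is sketched below, assuming that each term is qualified by a vocabulary prefix in the form `prefix:term`. The registry class, the prefixes (`traj`, `power`, `face`), and the term names are all hypothetical; the report deliberately leaves specific vocabularies out of scope.

```python
class VocabularyRegistry:
    """Tracks which terms each vocabulary defines."""

    def __init__(self):
        self._vocabularies = {}   # prefix -> set of term names

    def register(self, prefix, terms):
        # Registering again extends a vocabulary, so it can grow over time.
        self._vocabularies.setdefault(prefix, set()).update(terms)

    def unknown_terms(self, record):
        """Return the qualified keys that no registered vocabulary defines."""
        unknown = []
        for key in record:
            prefix, _, term = key.partition(":")
            if term not in self._vocabularies.get(prefix, set()):
                unknown.append(key)
        return sorted(unknown)


registry = VocabularyRegistry()
registry.register("traj", {"personId", "x", "y", "time"})
registry.register("power", {"meterId", "watts"})

# A record mixing terms from several vocabularies, one not yet registered.
record = {"traj:personId": "p-7", "traj:x": 3.5, "traj:y": 1.0,
          "power:watts": 120, "face:age": 34}

before = registry.unknown_terms(record)
registry.register("face", {"age"})      # a new vocabulary is added later
after = registry.unknown_terms(record)
```

A receiver that understands only the `traj` vocabulary could still process its subset of the record and ignore the rest.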

6 Use Cases

We introduce use cases in three fields: energy saving, video surveillance, and operational improvement.

6.1 Energy Saving

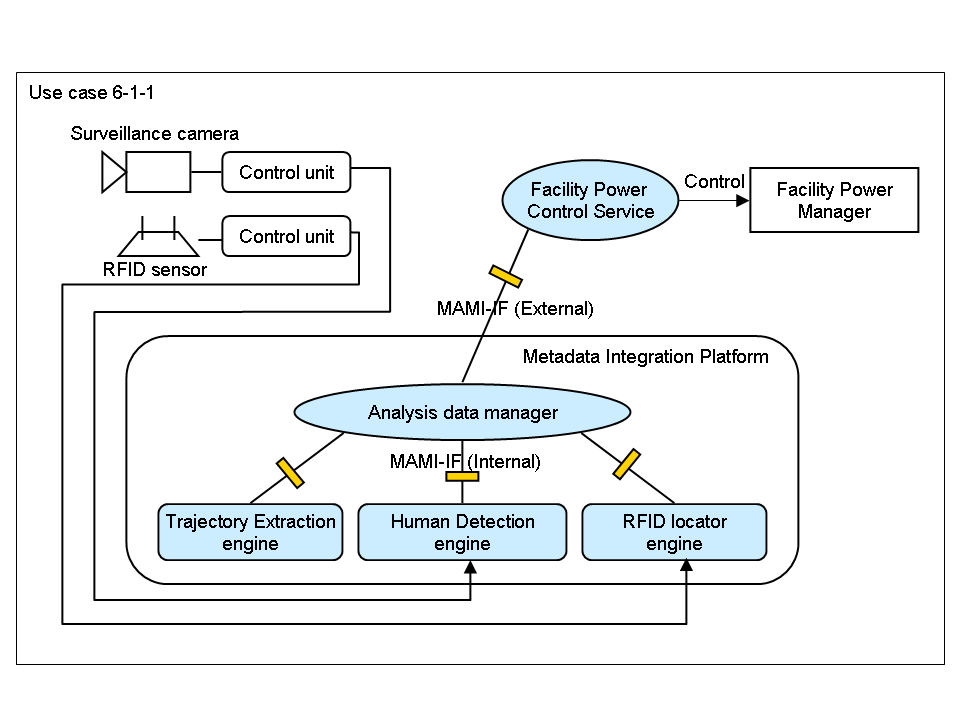

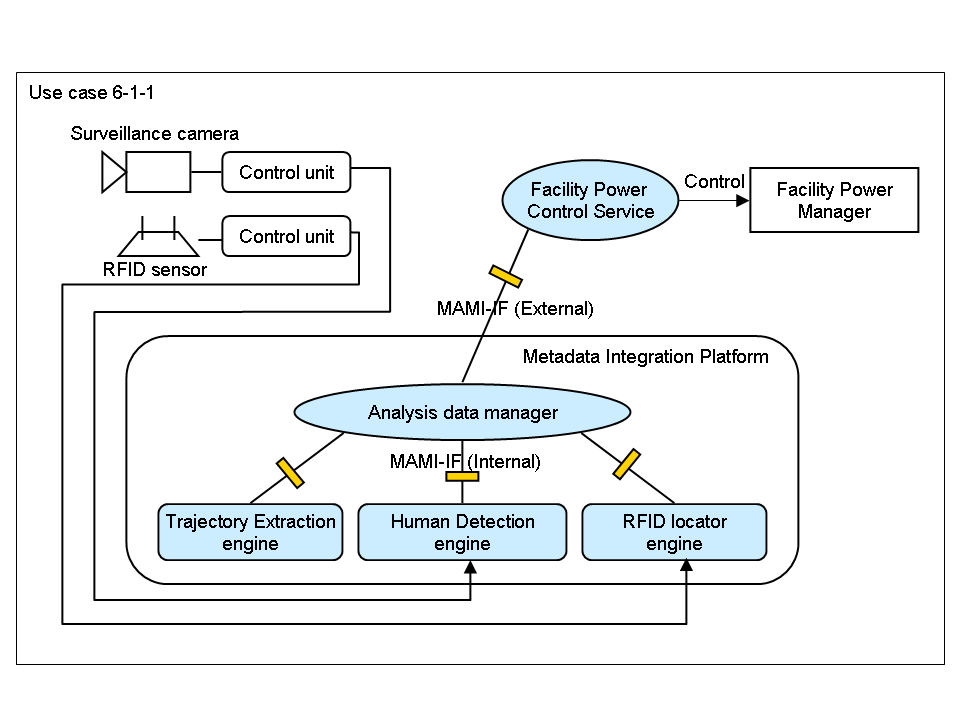

6.1.1 Office Worker Trajectory Visualizer and Facilities Controller

The office worker trajectory visualizer and facilities controller is a system that controls lights, air-conditioners, and office automation equipment on the basis of the locations of people in an office for energy saving.

- System Architecture

- System Components

- RFID locator engine: analyzes changes of RFID locations.

- Human detection engine: extracts human images from video images.

- Trajectory extraction engine: analyzes each person's location (human trajectory) on the basis of human images and RFID locations.

- Facility power control service: controls lights, air-conditioners and office automation equipment on the basis of human trajectories.

- Analysis data manager: exchanges analysis results with analysis engines, stores data and provides data by search request.

- System Behavior

- The output of RFID locator and human detection engines is stored in the analysis data manager.

- Trajectory data is then generated by a trajectory extraction engine and also stored in the analysis data manager.

- Trajectory data is sent to a facility power control service.

- Position and Role of the MAMI

- Register analysis results from the RFID locator engine and the human detection engine to the analysis data manager.

- Exchange data between the analysis data manager and the trajectory extraction engine.

- Provide data stored in the analysis data manager to the facility power control service.
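The data flow of this use case can be sketched as follows. A plain dictionary stands in for the analysis data manager, and the record fields, the matching rule in `extract_trajectories`, and the function `zones_to_power` are assumptions made for illustration rather than behavior specified by this report.

```python
from collections import defaultdict

store = defaultdict(list)   # stands in for the analysis data manager

# Step 1: the RFID locator and human detection engines register their output.
store["rfid_location"] += [
    {"tag": "T1", "time": 0, "zone": "A"},
    {"tag": "T1", "time": 1, "zone": "B"},
]
store["human_detection"] += [
    {"time": 0, "zone": "A"},
    {"time": 1, "zone": "B"},
]


def extract_trajectories(rfid, detections):
    """Keep RFID sightings confirmed by a camera detection at the same time and zone."""
    seen = {(d["time"], d["zone"]) for d in detections}
    trajectories = defaultdict(list)
    for r in sorted(rfid, key=lambda r: r["time"]):
        if (r["time"], r["zone"]) in seen:
            trajectories[r["tag"]].append((r["time"], r["zone"]))
    return dict(trajectories)


# Step 2: the trajectory extraction engine reads both data sets and stores its result.
store["trajectory"].append(
    extract_trajectories(store["rfid_location"], store["human_detection"]))


def zones_to_power(trajectories, all_zones):
    """Power a zone only if some trajectory currently ends in it."""
    occupied = {points[-1][1] for points in trajectories.values() if points}
    return {zone: zone in occupied for zone in all_zones}


# Step 3: the facility power control service consumes the trajectory data.
power_plan = zones_to_power(store["trajectory"][-1], ["A", "B", "C"])
```

Here the facility power control service depends only on the stored trajectory data, not on the RFID or camera engines that produced it.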

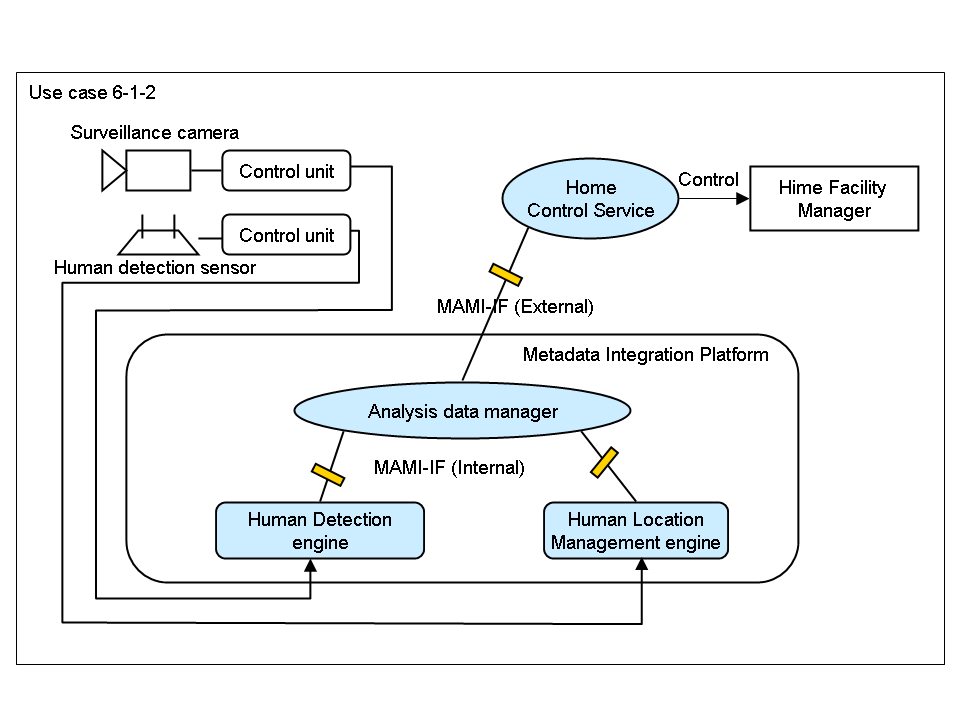

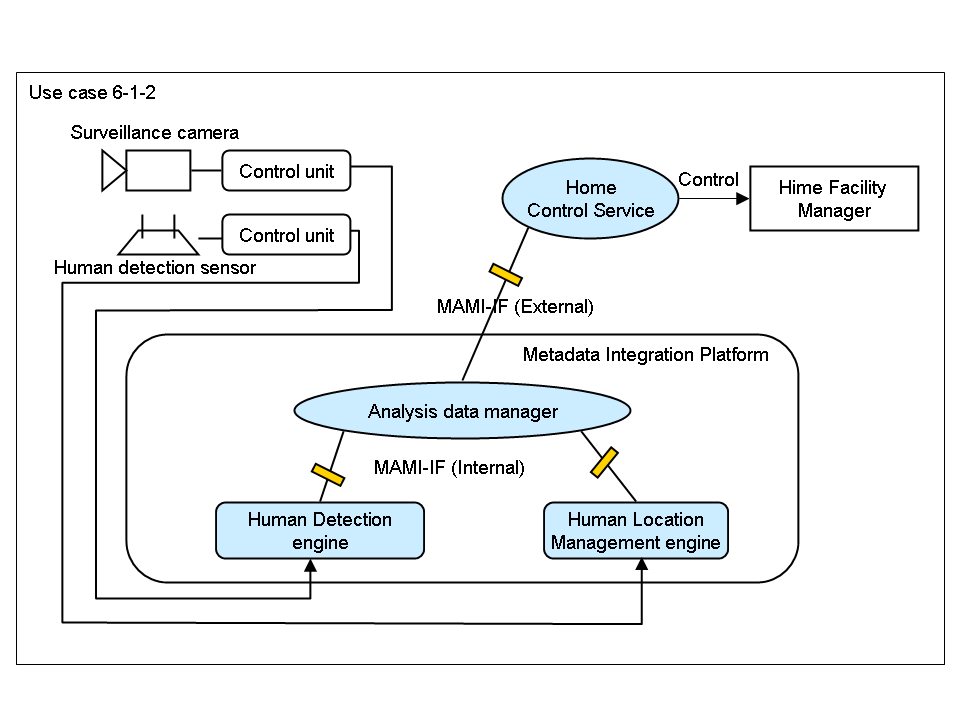

6.1.2 Home Facilities Controller

The home facilities controller is a system that uses video cameras and human detection sensors in houses in order to detect humans.

It controls lights, air-conditioners, and other electrical appliances for energy saving.

- System Architecture

- Components

- Human detection engine: detects humans in video images.

- Human location management engine: translates output of human detection sensors into location information.

- Home control service: controls home electrical appliances on the basis of human positions.

- Analysis data manager: exchanges analysis results with analysis engines, stores data and provides data by search request.

- System Behavior

- The output of human detection and human location management engines is stored in the analysis data manager.

- Human location data is sent to a home control service.

- Position and Role of the MAMI

- Register analysis results from the human detection and the human location management engines to the analysis data manager.

- Provide data stored in the analysis data manager to the home control service.

6.2 Video Surveillance

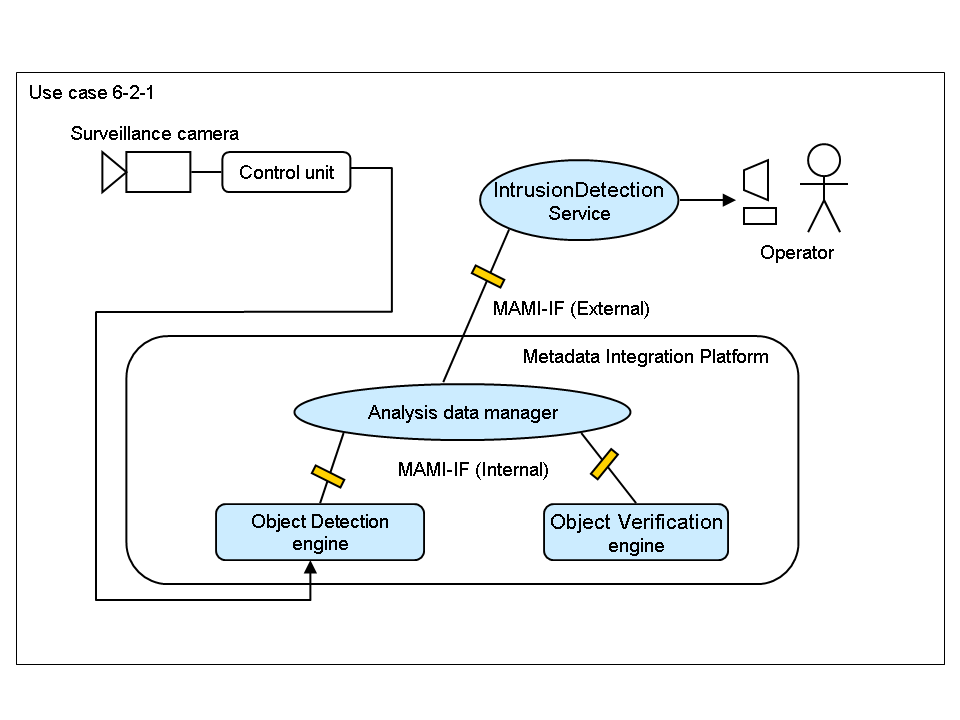

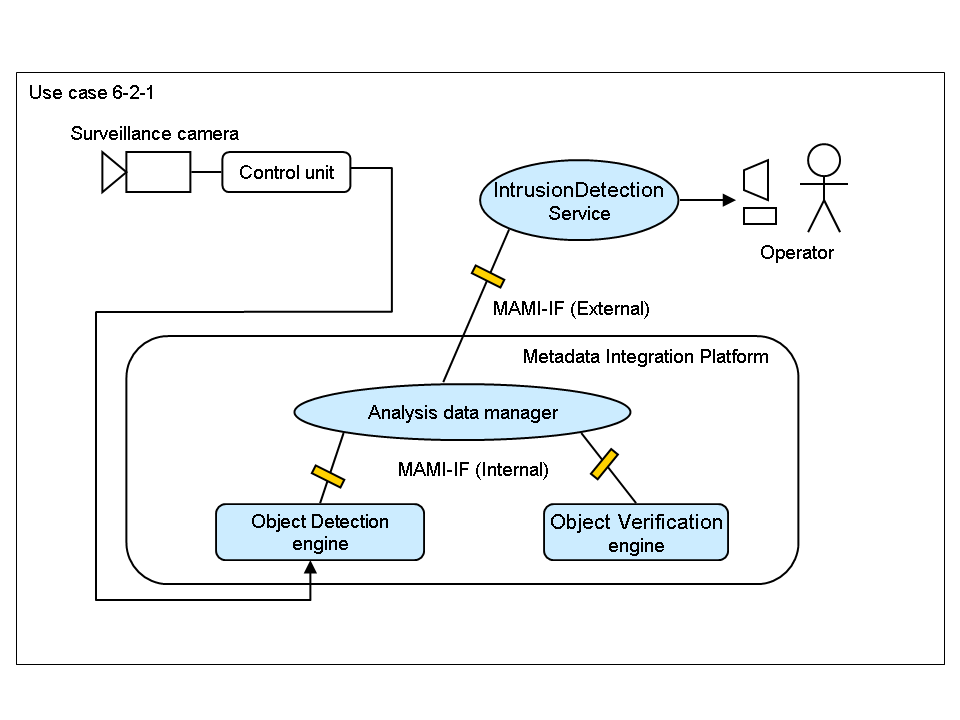

6.2.1 Intrusion Detection System

The intrusion detection system detects human or vehicle intrusions by analyzing images captured by surveillance cameras.

In addition, it raises an alarm when unauthorized intrusion is found with face or license plate recognition.

- System Architecture

- Components

- Object detection engine: detects specific objects such as humans or vehicles in video images.

- Object verification engine: verifies features, faces or license plate numbers of detected objects with registered information.

- Intrusion detection service: raises an alarm when unauthorized persons or vehicles are detected in a restricted area. It provides related information such as video images.

- Analysis data manager: exchanges analysis results with analysis engines, stores data, and provides data by search request.

- System Behavior

- The output of an object detection engine is stored in the analysis data manager.

- Authentication is then performed by an object verification engine, and the results are also stored in the analysis data manager.

- The results of the authentication are sent to an intrusion detection service.

- Position and Role of the MAMI

- Register analysis results from the object detection and object verification engines to the analysis data manager.

- Exchange data between the analysis data manager and the object verification engine.

- Provide data stored in the analysis data manager to the intrusion detection service.
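The detect, verify, and alarm sequence above can be sketched in a few lines. The plate numbers, area names, record fields, and the functions `verify` and `raise_alarms` are hypothetical, and a dictionary stands in for the analysis data manager.

```python
# Hypothetical registered information used by the object verification engine.
REGISTERED_PLATES = {"ABC-123"}

store = {"object_detection": [], "verification": []}   # analysis data manager stand-in

# Step 1: the object detection engine registers its output.
store["object_detection"] += [
    {"id": 1, "kind": "vehicle", "plate": "ABC-123", "area": "gate"},
    {"id": 2, "kind": "vehicle", "plate": "XYZ-999", "area": "gate"},
    {"id": 3, "kind": "vehicle", "plate": "XYZ-999", "area": "public_road"},
]


def verify(detection):
    """Check a detected plate against the registered plates."""
    return {"detection_id": detection["id"],
            "authorized": detection["plate"] in REGISTERED_PLATES}


# Step 2: the object verification engine reads detections and stores its results.
store["verification"] += [verify(d) for d in store["object_detection"]]


def raise_alarms(detections, verifications, restricted_areas):
    """Return ids of unauthorized detections inside a restricted area."""
    authorized = {v["detection_id"]: v["authorized"] for v in verifications}
    return [d["id"] for d in detections
            if d["area"] in restricted_areas and not authorized[d["id"]]]


# Step 3: the intrusion detection service consumes the stored results.
alarm_ids = raise_alarms(store["object_detection"], store["verification"], {"gate"})
```

Detection 3 carries an unregistered plate but lies outside the restricted area, so only detection 2 triggers an alarm in this sketch.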

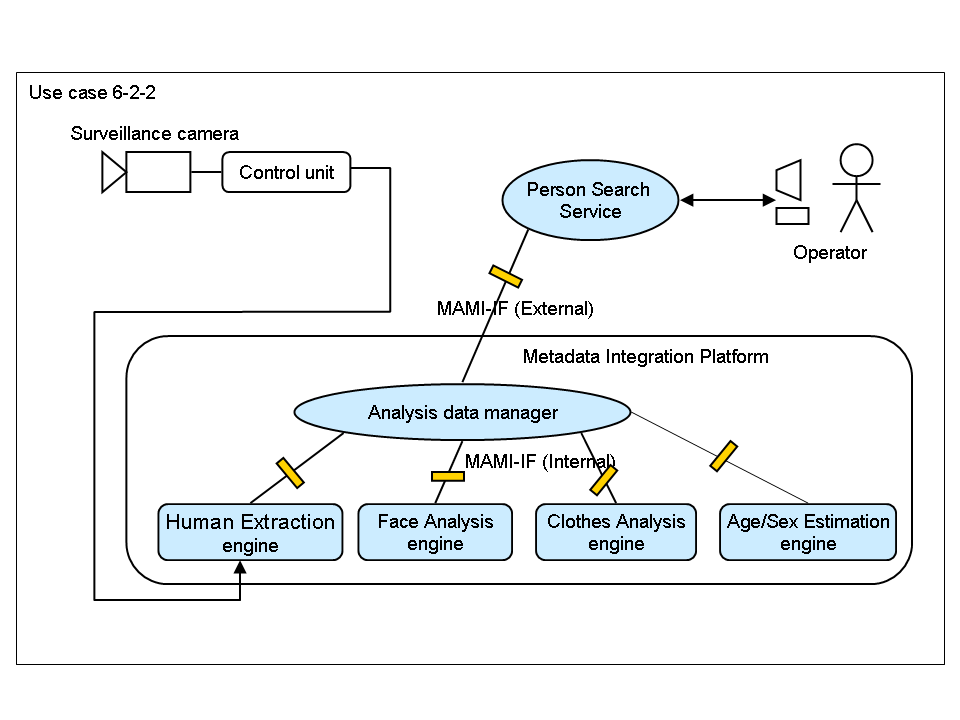

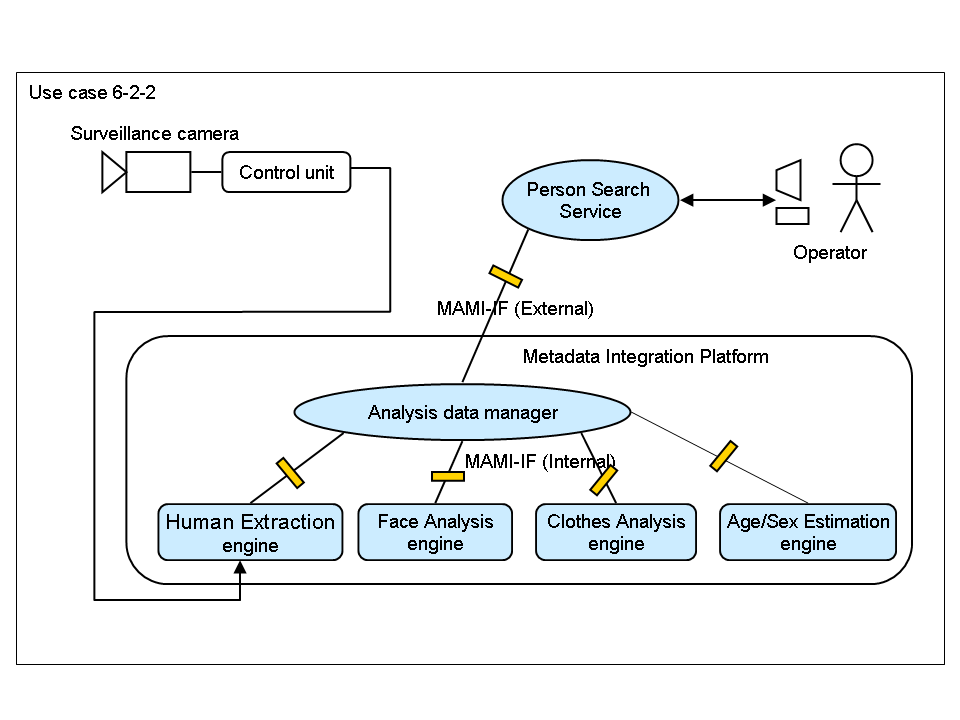

6.2.2 Person Search System using Face and Clothes Features

The person search system using face and clothes features enables image search services.

These services retrieve video images by using personal features like age, sex, face, and clothes.

- System Architecture

- Components

- Human extraction engine: extracts human images from video images.

- Face analysis engine: detects face images from human images and extracts face features.

- Clothes analysis engine: extracts clothes features from human images.

- Age/sex estimation engine: estimates age and sex of a person in human images.

- Person search service: retrieves video images with person features, time ranges, and areas.

- Analysis data manager: exchanges analysis results with analysis engines, stores data, and provides data by search request.

- System Behavior

- A human extraction engine extracts human images from video images, and the human images are registered in the analysis data manager.

- Face analysis engines, clothes analysis engines, and age/sex estimation engines then analyze the images and register the analysis results in the analysis data manager.

- The analysis data manager gives the results to a person search service.

- Position and Role of the MAMI

- Register analysis results from the human extraction engine to the analysis data manager.

- Exchange data between the analysis data manager and the face analysis, clothes analysis, and age/sex estimation engines.

- Request the analysis data manager to retrieve person images and return the results to a person search service.
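A retrieval request of the kind described above might look like the following sketch, which filters stored person records by features, time range, and area. The record layout (`PersonRecord`) and the `search` function with its keyword parameters are assumptions for illustration, not an interface defined by this report.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class PersonRecord:
    """Integrated result of the extraction, face, clothes, and age/sex engines."""
    image_id: str
    time: int              # seconds since the start of recording
    area: str
    age: int               # estimated
    sex: str               # estimated
    clothes_color: str


def search(records: Iterable[PersonRecord], *,
           sex: Optional[str] = None,
           age_range: Optional[Tuple[int, int]] = None,
           clothes_color: Optional[str] = None,
           time_range: Optional[Tuple[int, int]] = None,
           area: Optional[str] = None) -> List[str]:
    """Return image ids matching every criterion that was given."""
    def matches(r: PersonRecord) -> bool:
        return ((sex is None or r.sex == sex)
                and (age_range is None or age_range[0] <= r.age <= age_range[1])
                and (clothes_color is None or r.clothes_color == clothes_color)
                and (time_range is None or time_range[0] <= r.time <= time_range[1])
                and (area is None or r.area == area))
    return [r.image_id for r in records if matches(r)]


records = [
    PersonRecord("img-1", 10, "lobby", 34, "female", "red"),
    PersonRecord("img-2", 50, "lobby", 61, "male", "blue"),
    PersonRecord("img-3", 95, "garage", 29, "female", "red"),
]

found = search(records, sex="female", clothes_color="red", time_range=(0, 60))
```

Omitted criteria are treated as wildcards, so the same call shape serves both narrow and broad searches.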

6.3 Operational Improvement

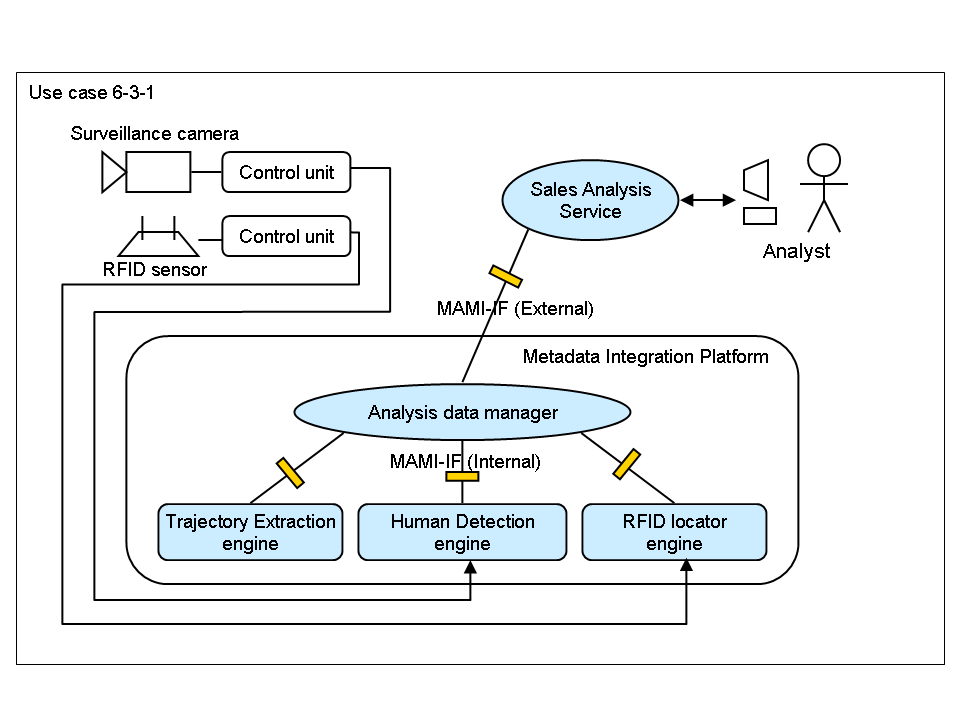

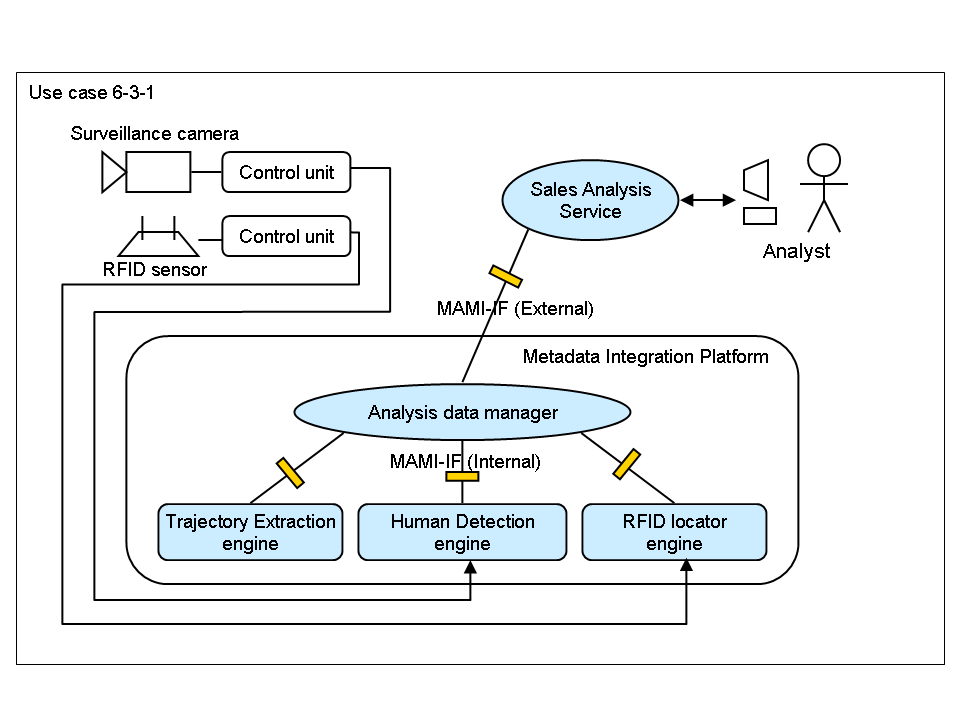

6.3.1 Sales Analysis based on Customer Trajectories

The sales analysis system based on customer trajectories provides sales analyses that help increase sales and improve shop layout.

For that purpose, it extracts customers' trajectories from video images and RFID data.

It then links the trajectories to a layout of goods and POS data.

- System Architecture

- Components

- RFID locator engine: analyzes changes of RFID locations.

- Human detection engine: extracts human images from video images.

- Trajectory extraction engine: analyzes each person's location (human trajectory) on the basis of human images and RFID locations.

- Sales analysis service: analyzes customers' trajectories in combination with sales data and layout information.

- Analysis data manager: exchanges analysis results with analysis engines, stores data and provides data by search request.

- System Behavior

- The output of an RFID locator engine and a human detection engine is stored in the analysis data manager.

- Trajectory data is then generated by a trajectory extraction engine and also stored in the analysis data manager.

- Trajectory data is sent to a sales analysis service.

- Position and Role of the MAMI

- Register analysis results from the RFID locator and human detection engines to the analysis data manager.

- Exchange data between the analysis data manager and the trajectory extraction engine.

- Request the analysis data manager to retrieve trajectory data and return the results to the sales analysis service.

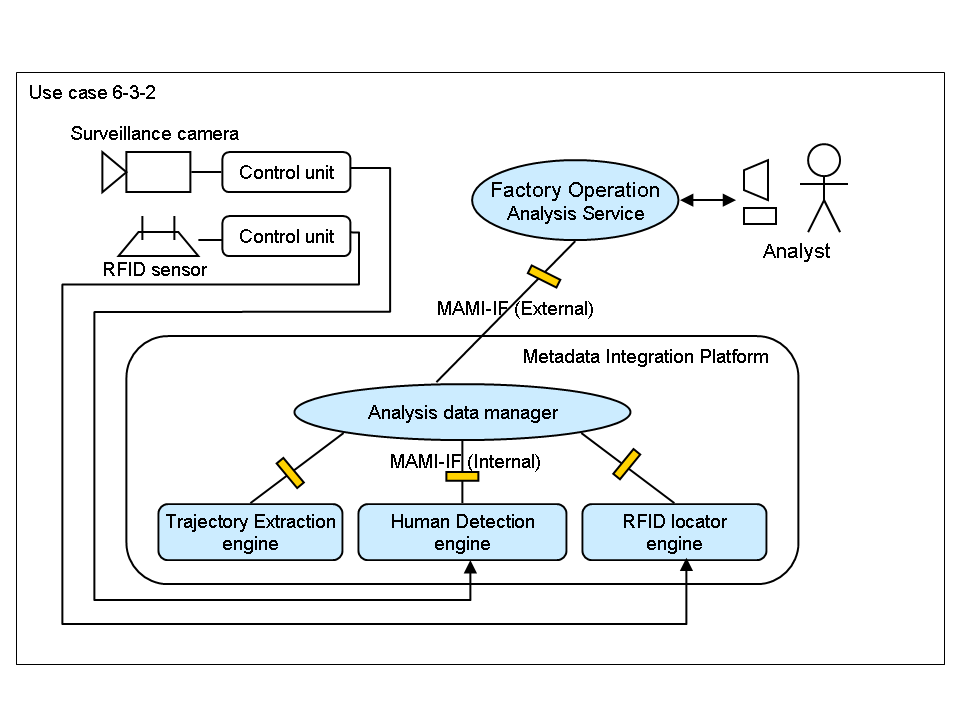

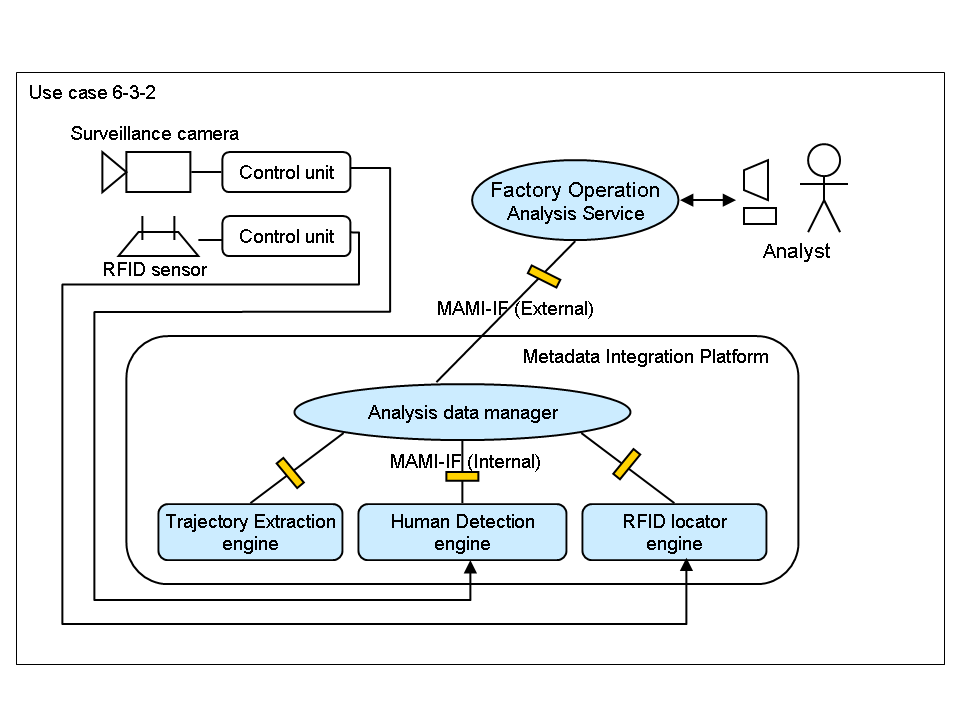

6.3.2 Factory Operation Analysis using Worker Trajectories

The factory operation analysis system uses worker trajectories to analyze work procedures and the layout of factories in order to improve work efficiency and safety.

For that purpose, it generates workers' trajectories from video images of factories and from RFID data and generates visualizations of these trajectories.

- System Architecture

- Components

- RFID locator engine: analyzes changes of RFID locations.

- Human detection engine: extracts human images from video images.

- Trajectory extraction engine: analyzes each person's location (human trajectory) on the basis of human images and RFID locations.

- Factory operation analysis service: analyzes factory workers' trajectories in combination with layout information.

- Analysis data manager: exchanges analysis results with analysis engines, stores data and provides data by search request.

- System Behavior

- The output of an RFID locator engine and a human detection engine is stored in the analysis data manager.

- Trajectory data is then generated by a trajectory extraction engine and also stored in the analysis data manager.

- Trajectory data is sent to a factory operation analysis service.

- Position and Role of the MAMI

- Register analysis results from the RFID locator and human detection engines to the analysis data manager.

- Exchange data between the analysis data manager and the trajectory extraction engine.

- Request the analysis data manager to retrieve trajectory data and return the results to the factory operation analysis service.

7 Future Plans

We have discussed use cases and requirements of the MAMI.

Hereafter, we will continue to discuss standardization in a working group activity.

The Multimodal Interaction Working Group (MMI-WG) standardizes the Multimodal Architecture which integrates information of various input and output devices.

MMI-WG's Multimodal Architecture is similar to our system architecture.

The Multimodal Architecture has the Interaction Manager, which virtualizes devices and integrates information from multiple devices.

EMMA V1.0 treats input by human interaction such as voice or ink data.

The next version, EMMA 2.0, is scheduled to be extended to sensor data and biometrics data.

We plan to bring the results of the MAMI-XG to the MMI-WG. We want to contribute to the MMI-WG in terms of how analysis results are used.

8 Summary

We discussed the Media Analysis Management Interface (MAMI), an analysis result exchange interface, in order to reduce the development cost and time of systems that use analysis engines.

The individuality of an analysis engine is a major factor that increases development cost.

Therefore, the analysis data manager, which hides this individuality, is placed between analysis engines and applications.

The manager stores and provides analysis data.

In addition, the MAMI is required for data exchange between analysis engines and applications.

We described the requirements of the MAMI and six use cases in three fields: energy saving, video surveillance, and operational improvement.

We also studied other WG activities and found that the MMI-WG has much in common with our system.

We will continue to collaborate with other WGs like the MMI-WG to specify the MAMI.