The following sections cover proposed solutions that this Incubator Group recommends. The proposed solutions

represent the consensus of the group, except where clearly indicated that an impasse was reached.

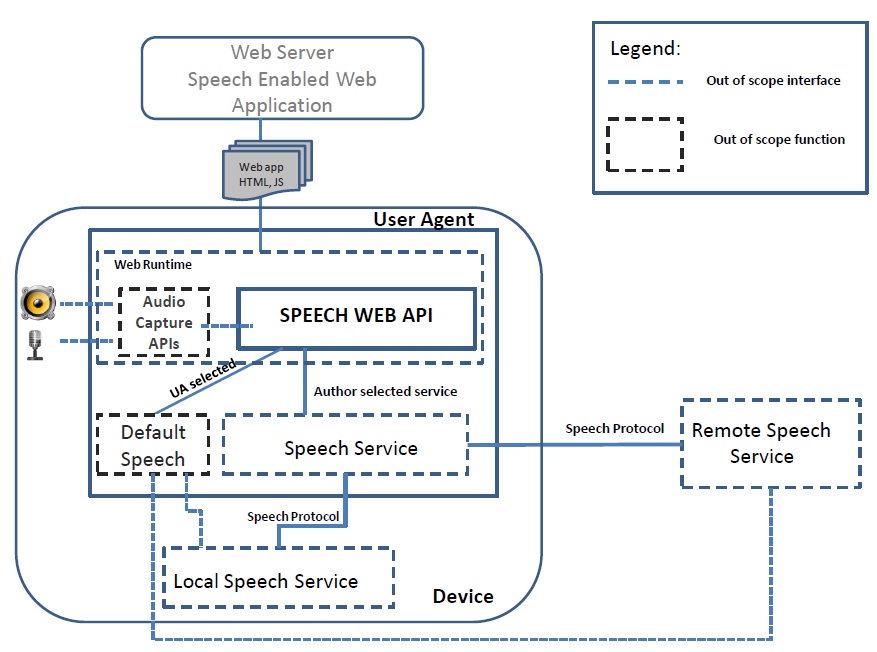

7.1 Speech Web API proposal

This proposed API represents the web API for

doing speech in HTML. This proposal is the HTML bindings and JS functions that sits on top of the protocol proposal

[section 7.2 HTML Speech Protocol Proposal]. This includes:

- A declarative <reco> tag which can optionally bind to recoable elements.

- A JS API that allows programmatic control and parameterization of recognition.

- The ability to do recognition either as one-shot turns or as continuous dictation.

- The ability of the web application author to use the recognition service of their choice.

- A declarative <tts> tag which can be used to output synthesis.

- A JS API that allows programmatic control of the synthesis.

- The ability of the web application author to choose the synthesis service of their choice.

The section on Design Decisions [section 6. Solution Design Agreements and Alternatives] covers the design decisions the group

agreed to that helped direct this API proposal.

The sections on Use Cases [section 4. Use Cases]

and Requirements [section 5. Requirements] cover

the motivation behind this proposal.

This API is designed to be used in conjunction with other APIs and elements on the web platform, including

APIs to capture input and APIs to do bidirectional communications with a server (WebSockets [WEBSOCKETS-API]).

7.1.1 Introduction

Web applications should have the ability to use speech to interact with users. That speech could be for output

through synthesized speech, or could be for input through the user speaking to fill form items, the user

speaking to control page navigation or many other collected use cases. A web application author should be able

to add speech to a web application using methods familiar to web developers and should not require extensive

specialized speech expertise. The web application should build on existing W3C web standards and support

a wide variety of use cases. The web application author should have the flexibility to control the recognition

service the web application uses, but should not have the obligation of needing to support a service.

This proposal defines the basic representations for how to use grammars, parameters, and recognition results

and how to process them. The interfaces and API defined in this proposal can be used with other

interfaces and APIs exposed to the web platform.

Note that privacy and security concerns exist around allowing web applications to do speech recognition.

User agents should make sure that end users are aware that speech recognition is occurring, and that the

end users have given informed consent for this to occur. The exact mechanism of consent is user agent

specific, but the privacy and security concerns have shaped many aspects of the proposal.

7.1.2 Reco Element

The reco element is the way to do speech recognition using markup bindings.

The reco element is legal where ever phrasing content is expected, and can

contain any phrasing content, except with no descendant recoable elements unless

it is the element's reco control, and no descendant reco elements.

The reco represents a speech input in a user interface. The speech input can be associated with a specific form control,

known as the reco element's reco control, either using for attribute, or by putting the form control inside the

reco element itself.

Except where otherwise specified by the following rules, a reco element has no reco control.

Some elements are catagorized as recoable elements. These are elements that can be

associated with a reco element:

The reco element's exact default presentation and behavior, in particular what its activation behavior might be is

unspecified and user agent specific. When the reco element is bound to a recoable element if no grammar attribute

is specified, then by default the default builtin uri is used.

The activation behavior of a reco element for events targeted at interactive content descendants of a reco element, and

any descendants of those interactive content descendants, must be to do nothing. When a reco element with a reco control

is activated and gets a reco result, the default action of the recognition event must be to use the

value of the top n-best interpretation of the current result event. The exact binding depends on the

recoable element in question and is covered in the binding results section.

Not all implementers see value in linking the recognition

behavior to markup, versus an all scripting

API. Some user agents like the possibility of good defaults based on

the associations. Some user agents like the idea of different consent bars based on the user clicking a markup

button, rather then just relying on scripting. User agents are cautioned to remember click-jacking and

should not automatically assume that when a reco element is activated it means the user meant to start

recognition in all situations.

7.1.2.1 Reco Attributes

- form

The form attribute is used to explicitly associate the reco element with its form owner.

The form IDL attribute is part of the element's forms API.

- htmlFor

The htmlFor IDL attribute must reflect the for content attribute.

The for attribute may be specified to indicate a form control with which a speech input is to be associated.

If the attribute is specified, the attribute's value must be the ID of a recoable element in the same Document as the reco element.

If the attribute is specified and there is an element in the Document whose ID is equal to the value of the for attribute, and

the first such element is a recoable element, then that element is the reco element's reco control.

If the for attribute is not specified, but the reco element has a recoable element descendant, then the first such

descendant in tree order is the reco element's reco control.

- control

The control attribute returns the form control that is associated with this element. The control

IDL attribute must return the reco element's reco control, if any, or null if there isn't one.

control . recos returns a NodeList of all the reco elements that the form control is associated with.

Recoable elements have a NodeList object associated with them that represents the list of reco elements,

in tree order, whose reco control is the element in question. The reco IDL attribute of recoable elements, on getting, must return that NodeList object.

- request

The request attribute represents the SpeechReco associated with this reco element. By default the

User Agent sets up the speech service specified by serviceURI and the default speech reco object associated with this reco. The author

may set this attribute to associate a markup reco element with an author created speech reco object. In this way the

author has control over the reco involved. When the reco request object is set then the object's speech parameters take priority

over the corresponding parameters on the reco attributes.

- grammar

The uri of a grammar associated with this reco. If unset, this defaults to the default builtin uri.

Note that to use multiple grammars or different weights the user must use the scripted SpeechReco API.

The other attributes are all defined identically to how they appear in the SpeechInputResult section.

7.1.2.2 Reco Constructors

Two constructors are provided for creating HTMLRecoElement objects (in addition to the factory methods from DOM Core

such as createElement()): Reco() and Reco(for). When invoked as constructors, these must return

a new HTMLRecoElement object (a new reco element). If the for argument is present, the object created must have its for

content attribute set to the provided value. The element's document must be the active document of the browsing context

of the Window object on which the interface object of the invoked constructor is found.

7.1.2.3 Builtin Default Grammars

When the user agent needs to create a default grammar from a recoable element

it builds a uri using the builtin scheme. The format of the uri is of the form:

builtin:<tag-name>?<tag-attributes> where the tag-name is just the name of the

recoable element, and the tag-attributes are the content attributes in the form name=value&name=value.

Since this a uri, both name and value must be properly uri escaped. Note the ? character may be omitted

when there are no tag-attributes.

For example:

- A simple textarea like <textarea /> would produce:

builtin:textarea

- A simple number like <input type="number" max="3" /> would produce:

builtin:input?type=number&max=3

- A zip code like <input type="text" pattern="[0-9]{5}" /> would produce:

builtin:input?type=text&pattern=%5B0-9%5D%7B5%7D

Speech services may define other builtin grammars as well. It is recommended that speech services

allow a builtin:dictation to represents a "say anything" grammar and

builtin:websearch to represent a speech web search and

builtin:default to represent whatever default grammar, if any, the recognition service

defines.

builtin uri for grammars can be used even when the reco element is not bound to any particular element

and may also be used by the SpeechReco object and as a rule reference in an SRGS grammar.

In addition to the content attribute other parameters may be specified. It is recommended that

speech services support a filter parameter that can be set to the value noOffensiveWords to represent

a desire to not recognize offensive words. Speech services may define other extension parameters.

Note the exact grammar that is generated from any builtin uri is specific to the recognition service

and the content attributes are best thought of as hints for the service.

7.1.2.4 Default Binding of Results

When a reco element is bound to a recoable element and does not have an onresult attribute set

then the default binding is used. The default binding can also be used if the SpeechInputResult's

outputElement attribute is set. In both cases the exact binding depends on the recoable

element in question. In general, the binding will use the value associated with the interpretation

of the top n-best element.

When the recoable element is a

button then if

the button is not disabled,

then the result of a speech recognition is to activate the button.

When the recoable element is a

input element

then the exact binding depends on the control type. For basic text fields (input elements with

a type attribute of text, search, tel, url, email, password, and number) the value of the result

should be assigned to the input (inserted at the cursor and replacing any selected text). For

button controls (submit, image, reset, button) the act of recognition just activates the input.

For type checkbox, the input should be set to a checkedness of true. For type radiobutton,

the input should be set to a checkedness of true, and all other inputs in the radio button

group must be set to a checkedness of false. For date and time types (datetime, date,

month, week, time, datetime-local) then the value should be assigned unless the value

represents an non-empty string that is not valid for that type as described

here. For type

of color then the value should be assigned unless the value does not represent a

valid lowercase simple color.

For type of range the assignment is only allowed if it is a valid floating point number,

and before being assigned, it must undergo the value sanitization algorithm as described

here.

When the recoable element is a

keygen element

then the element should regenerate the key.

When the recoable element is a

select element

then the recognition result will be used to select any options that are named the same as the

interpretations value (that is any that are returned by namedItem(value)).

When the recoable element is a

textarea element

then the recognized value is inserted in to the textarea where the text cursor is if it is in the textarea.

If text in the textarea is selected then the new value replaces the highlighted text.

If the text cursor is not in the textarea then the value is appended to the end of the textarea.

More work is needed on making sure that this binding behavior is well specified and matches

the input validation described in HTML 5. The general desire from the group is for binding to

do a reasonable default behavior, for the additional attributes (type, pattern, max, etc.) to

be available to possibly improve recognition, but for as much as possible those additional

attributes to merely be hints - other then where the HTML 5 specification forbids user agents

to assign certain elements certain values. For example, an input with type of Date can't take arbitrary

non-empty strings, they can only take

non-empty string that are valid date string,

at an recognized string

of "brown" wouldn't work). In addition, some would like the ability to both check selectors as well

as uncheck them, but the exact semantics of how that work (does a default binding toggle the selected item?

check it unless a magic phrase like "uncheck" is recognized before it? something else?) is not well understood,

so no further effort to explain that is made here in this section.

7.1.3 TTS Element

The TTS element is the way to do speech synthesis using markup bindings.

The TTS element is legal where embedded content is expected. If the TTS element has a src attribute,

then its content model is zero or more track elements, then transparent, but with no media element

descendants. If the element does not have a src attribute, then one or more source elements, then

zero or more track elements, then transparent, but with no media element descendants.

IDL

[NamedConstructor=TTS(),

NamedConstructor=TTS(in DOMString src)]

interface HTMLTTSElement : HTMLMediaElement {

attribute DOMString serviceURI;

attribute DOMString text;

attribute DOMString lastMark;

};

A TTS element represents a synthesized audio stream. A TTS element is a media element whose media data is ostensibly synthesized audio data.

When a TTS element is potentially playing, it must have its TTS data played synchronized

with the current playback position, at the element's effective media volume.

When a TTS element is not potentially playing, TTS must not play for the element.

Content may be provided inside the TTS element. User agents should not show this content to the user;

it is intended for older Web browsers which do not support TTS.

In particular, this content is not intended to address accessibility concerns. To make TTS content

accessible to those with physical or cognitive disabilities, authors are expected to provide

alternative media streams and/or to embed accessibility aids (such as transcriptions) into

their media streams.

Implementations should support at least UTF-8 encoded text/plain and application/ssml+xml (both SSML 1.0 and 1.1 should be supported).

User Agents must pass the xml:lang values to synthesizers and synthesizers must use the passed in xml:lang to determine the language

of text/plain.

The existing timeupdate event is dispatched to report progress

through the synthesized speech. If the synthesis is of type application/ssml+xml, timeupdate events should be fired for each mark element that is encountered.

7.1.3.1 Attributes

The src, preload, autoplay, mediagroup, loop, muted, and controls attributes are the attributes common to all media elements.

- serviceURI

The serviceURI attribute specifies the speech service to use in the constructed default request. If the serviceURI

is unset then the User Agent must use the User Agent default service. Note that the serviceURI is a generic URI and can thus point to local services either through use of a URN with meaning to the User Agent or by specifying a URL that the User Agent recognizes as a local service. Additionally, the User Agent default can of course be local or remote and can incorporate end user choices via interfaces provided by the User Agent such as browser configuration parameters.

- text

The text is an optional attribute to specify plain text to be synthesized. The text attribute, on setting must

construct a data: URI that encodes

the text to be played and assign that to the src property of the TTS element. If there are no encoding issues the URI for any given

string would be data:text/plain,string, but if there are problematic characters the UserAgent should use base64 encoding.

- lastMark

The new lastMark attribute must, on getting, return the name of the last SSML mark element that was encountered during playback.

If no mark has been encountered yet, the attribute must return null.

There has been some interest in allowing lastMark to be set to a value as a way to control where playback would start/resume. This feature is simple in principle but may have subtle implementation consequences. It is not a feature that has been seriously studied in other similar languages such as SSML. Thus, the group decided not to consider this feature at this time.

7.1.3.2 Constructors

Two constructors are provided for creating HTMLTTSElement objects (in addition to the factory methods

from DOM Core such as createElement()): TTS() and TTS(src). When invoked as constructors, these must return a

new HTMLTTSElement object (a new tts element). The element must have its preload attribute set to the

literal value "auto". If the src argument is present, the object created must have its src content attribute

set to the provided value, and the user agent must invoke the object's resource selection algorithm before returning.

The element's document must be the active document of the browsing context of the Window object on which the

interface object of the invoked constructor is found.

7.1.4 The Speech Reco Interface

The speech reco interface is the scripted web API for controlling a given recognition.

7.1.4.1 Speech Reco Attributes

- grammars attribute

- The grammars attribute stores the collection of SpeechGrammar objects which represent the grammars

that are active for this recognition.

- maxNBest attribute

- This attribute will set the maximum number of recognition results that should be returned.

The default value is 1.

- lang attribute

- This attribute will set the language of the recognition for the request, using

a valid BCP 47 language tag.

If unset it remains unset for getting in script, but will default to use the

lang of its

recoable element, if tied to an html element, and the lang of the html document root element and

associated hierachy are used when the

SpeechReco is not associated with a recoable element. This default value is

computed and used when the input request opens a connection to the recognition service.

- saveForRereco attribute

- This attribute instructs the speech recognition service if the utterance should be saved for

later use in a rerecognition (true means save). The default value is false.

- endpointDetection attribute

- This attribute instructs the user agent if it should do a low latency endpoint detection

(true means do endpointing). The user agent default should be true.

- finalizeBeforeEnd attribute

- This attribute instructs the recognition service if it should send final results when it gets them, even

if the user is not done talking (true means yes it should send the results early).

The user agent default should be true.

- interimResults attribute

- If interimResults is set to false, that instructs the recognition service that it must not send any interim

results. A value of true represents a hint to the service that the web application would like interim

results. The service may not follow the hint, as the ability to send interim results depends on a combination of the recognition service, the grammars in use,

and the utterance being recognized. The user agent default value should be false.

- confidenceThreshold attribute

- This attribute represents the degree of confidence the recognition system needs in order to

return a recognition match instead of a nomatch. The confidence threshold is a

value between 0.0 (least confidence needed) and 1.0 (most confidence) with 0.5 as the default.

- sensitivity attribute

- This attribute represents the sensitivity to quiet input. The sensitivity is a

value between 0.0 (least sensitive) and 1.0 (most sensitivity) with 0.5 as the default.

- speedVsAccuracy attribute

- This attribute instructs the recognition service on the desired trade off between low latency

and high speed. The speedVsAccuracy is a

value between 0.0 (least accurate) and 1.0 (most accurate) with 0.5 as the default.

- completeTimeout attribute

- This attribute represents the amount of silence, in milliseconds, needed to match a

grammar when a hypothesis is at a complete match of the grammar (that is

the hypothesis matches a grammar, and no larger input can possibly match a grammar).

- incompleteTimeout attribute

- This attribute represents the amount of silence, in milliseconds, needed to match a grammar

when a hypothesis is not at a complete match of the grammar (that is

the hypothesis does not match a grammar, or it does match a grammar but so could a larger input).

- maxSpeechTimeout attribute

- This attribute represents how much speech, in milliseconds, the recognition service should

process before an end of speech or an error occurs.

- inputWaveformURI attribute

- This attribute, if set, instructs the speech recognition service to recognize from this URI instead

of from the input MediaStream attribute.

- parameters attribute

- This attribute holds an array of arbitrary extension parameters. These parameters could set user specific

information (such as profile, gender, or age information) or could be used to set recognition parameters specific

to the recognition service in use.

- serviceURI attribute

- The serviceURI attribute specifies the location of the speech service the web application wishes to connect

to. If this attribute is unset at the time of the open call, then the user agent must use the user agent

default speech service.

- input attribute

- The input attribute is the MediaStream that we are recognizing against. If input is not set, the

speech reco object uses the default UA provided capture (which may be nothing), in which case

the value of input will be null. In cases where the MediaStream is set but the SpeechReco

hasn't yet called start the User Agent should not buffer the audio, the semantics are that the

web application wants to start listening to the Media Stream at the moment it calls Start, and not

earlier than that.

- authorizationState attribute

- The authorizationState variable tracks if the web application is authorized to do speech recognition.

The UA should start in UNKNOWN if the user agent can not determine if the web application

is able to be authorized. The state variable may change values in response to policies of the user agent and possibly

security interactions with the end user. If the web application is authorized then the user agent must set this

variable to AUTHORIZED. If the web application is not authorized then the user agent

must set this variable to NOT_AUTHORIZED. Any time this state variable changes in value

the user agent must raise a authorizationchange event.

- continuous attribute

- When the continuous attribute is set to false the service must only return a single simple

recognition response as a result of starting recognition. This represents a request/response

single turn pattern of interaction. When the continuous attribute is set to true the service

must return a set of recognitions representing more a dictation of multiple recognitions in

response to a single starting of recognition. The user agent default value should be false.

- outputElement attribute

- This attribute, if set, causes default

binding of recognition matches to the recoable element it is set to. This is the same

default binding that occurs when a <reco> element is bound as described at

Default Binding of Results section.

7.1.4.2 Speech Reco Methods

- The setCustomParameter method

- This method appends an arbitrary recognition service parameter to the parameters array. The name of the parameter is given by

the name parameter and the value by the value parameter.

This arbitrary parameter mechanism allows services that want to have extensions or to set user specific information (such

as profile, gender, or age information) to accomplish the task.

- The sendInfo method

- The method allows one to pass arbitrary information to the recognition service, even while recognition is on going.

Each set info call gets transmitted immediately to the recognition service. The type attribute specifies the content-type

of the info message and the value attribute specifies the payload of the info method.

- The open method

- When the open method is called the user agent must connect to the speech service. All of the attributes and parameters

of the SpeechInputResult (I.e., languages, grammars, service uri, etc.) must be set before this method is called, because

they will be fixed with the values they have at the time open is called, at least until open is called again. Note that

the user agent may need to have a permissions dialog at this point to ensure that the end user has given informed consent

for the web application to listen to the user and recognize. Errors may be raised at this point for a variety of

reasons including: not authorized to do recognition, failure to connect to the service, the service can not handle

the languages or grammars needed for this turn, etc. When the service is successfully completed the open with no

errors the user agent must raise an open event.

- The start method

- When the start method is called it represents the moment in time the web application wishes to begin recognition.

When the speech input is streaming live through the input media stream, then this start call represents the moment in

time that the service must begin to listen and try to match the grammars associated with this request. If the

SpeechReco has not yet called open before the start call is made, a call to open is made by the start call

(complete with the open event being raised). Once the system is successfully listening to the recognition the

user agent must raise a start event.

- The stop method

- The stop method represents an instruction to the recognition service to stop listening to more audio, and to try

and return a result using just the audio that it has received to date. A typical use of the stop method might be

for a web application where the end user is doing the end pointing, similar to a walkie-talkie. The end user might

press and hold the space bar to talk to the system and on the space down press the start call would have occurred and

when the space bar is released the stop method is called to ensure that the system is no longer listening to the user.

Once the stop method is called the speech service must not collect additional audio and must not continue to listen to

the user. The speech service must attempt to return a recognition result (or a nomatch) based on the audio that

it has collected to date.

- The abort method

- The abort method is a request to immediately stop listening and stop recognizing and do not return any information

but that the system is done. When the stop method is called the speech service must stop recognizing. The user agent

must raise a end event once the speech service is no longer connected.

- The interpret method

- The interpret method provides a mechanism to request recognition using text, rather than audio.

The text parameter is the string of text to recognize against. When

bypassing audio recognition a number of the normal parameters may be ignored and the sound and audio

events should not be generated. Other normal SpeechReco events should be generated.

7.1.4.3 Speech Reco Events

The DOM Level 2 Event Model is used for speech recognition events. The

methods in the EventTarget interface should be used for registering

event listeners. The SpeechReco interface also contains

convenience attributes for registering a single event handler for each

event type.

For all these events, the timeStamp attribute defined in the DOM Level

2 Event interface must be set to the best possible estimate of when

the real-world event which the event object represents occurred.

This timestamp

must be represented in the User Agent's view of time, even for events where

the timestamps in question could be raised on a different machine like

a remote recognition service (I.e., in a speechend event with a remote

speech endpointer).

Unless specified below, the ordering of the different events is

undefined. For example, some implementations may fire audioend before

speechstart or speechend if the audio detector is client-side and the

speech detector is server-side.

- audiostart event

- Fired when the user agent has started to capture audio.

- soundstart event

- Some sound, possibly speech, has been detected. This must be fired

with low latency, e.g. by using a client-side energy detector.

- speechstart event

- The speech that will be used for speech recognition has started.

- speechend event

- The speech that will be used for speech recognition has ended.

speechstart must always have been fire before speechend.

- soundend event

- Some sound is no longer detected. This must be fired with low latency,

e.g. by using a client-side energy detector. soundstart must always

have been fired before soundend.

- audioend event

- Fired when the user agent has finished capturing audio. audiostart

must always have been fired before audioend.

- result event

- Fired when the speech recognizer returns a result. See

here for more information.

- nomatch event

- Fired when the speech recognizer returns a final result with no recognition

hypothesis that meet or exceed the confidence threshold. The result field

in the event may contain speech recognition results that are below the confidence

threshold or may be null.

- resultdeleted event

- Fired when the recognizer needs to delete one of the previously returned

interim results in a continuous recognition. In the protocol, this would be

represented by an empty recognition complete. A simplified example of this might be

the recognizer gives an interim result for "hot" as the zeroth index of the

continuous result and then gives an interim result of "dog" as the first index.

Later the recognize wants to give a final result that is just one word "hotdog".

In order to do that it needs to change the zeroth index to "hotdog" and delete

the first index. When the first element is deleted the response is the

raising of a resultdeleted event. The resultIndex of this event will be the

element that was deleted and the resultHistory will have the updated value.

- error event

- Fired when a speech recognition error occurs. The error attribute must be

set to a SpeechInputError object.

- authorizationchange event

- Fired whenever the state variable tracking if the web application is authorized

to listen to the user and do speech recognition changes its value.

- open event

- Fired whenever the SpeechReco has successfully connected to the speech service

and the various parameters of the request can be satisfied with the service.

- start event

- Fired when the recognition service has begun to listen to the audio with the intention

of recognizing.

- end event

- Fired when the service has disconnected. The event must always be generated

when the session ends no matter the reason for the end.

7.1.4.4 Speech Input Error

The speech input error object has two attributes code and message.

- code

- The code is a numeric error code for whatever has gone wrong. The values are:

- OTHER (numeric code 0)

- This is the catch all error code.

- NO_SPEECH (numeric code 1)

- No speech was detected.

- ABORTED (numeric code 2)

- Speech input was aborted somehow, maybe by some UA-specific behavior such as

UI that lets the user cancel speech input.

- AUDIO_CAPTURE (numeric code 3)

- Audio capture failed.

- NETWORK (numeric code 4)

- Some network communication that was required to complete the

recognition failed.

- NOT_ALLOWED (numeric code 5)

- The user agent is not allowing any speech input to occur for

reasons of security, privacy or user preference.

- SERVICE_NOT_ALLOWED (numeric code 6)

- The user agent is not allowing the web application requested speech service, but would allow some speech service,

to be used either because the user agent doesn't support the selected one or because of reasons of security, privacy

or user preference.

- BAD_GRAMMAR (numeric code 7)

- There was an error in the speech recognition grammar.

- LANGUAGE_NOT_SUPPORTED (numeric code 8)

- The language was not supported.

- message

- The message content is implementation specific. This attribute is

primarily intended for debugging and developers should not use it directly in their application user interface.

7.1.4.5 Speech Input Alternative

The SpeechInputAlternative represents a simple view of the response that gets used in a n-best list.

To see a more full view of how these simple view are derived from the raw emma see the

Mapping EMMA to Speech Input Alternatives section.

- transcript

- The transcript string represents the raw words that the user spoke.

- confidence

- The confidence represents a numeric estimate between 0 and 1 of how confident the recognition

system is that the recognition is correct. A higher number means the system is more confident.

- interpretation

- The interpretation represents the semantic meaning from what the user said. This might

be determined, for instance, through the SISR specification of semantics in a grammar.

7.1.4.6 Speech Input Result

The SpeechInputResult object represents a single one-shot recognition match, either as one small part of a continuous

recognition or as the complete return result of a non-continuous recognition.

- EMMAXML

- The EMMAXML is a Document that contains the complete EMMA [EMMA] document the recognition service returned from the

recognition. The Document has all the normal XML DOM processing to inspect the content.

- EMMAText

- The EMMAText is a text representation of the EMMAXML.

- length

- The long attribute represents how many n-best alternatives are represented in the item array. The user agent

must not return more SpeechInputAlternatives than the value of the maxNBest attribute on the recognition request.

- item

-

- The item getter returns a SpeechInputAlternative from the index into an array of n-best values.

The user agent must ensure that there are not more elements in the array then the maxNBest attribute was set.

The user agent must ensure that the length attribute is set to the number of elements in the array.

The user agent must ensure that the n-best list is sorted in non-increasing confidence order (each element must

be less than or equal to the confidence of the preceding elements).

- final

- The final boolean must be set to true if this is the final time the speech service will return this

particular index value. If the value is false, then this represents an interim result that could still

be changed.

7.1.4.7 Speech Input Result List

The SpeechInputResultList object holds a sequence of recognition results representing the complete return result of a continuous recognition. For a non-continuous recognition it will hold only a single value.

- length

- The length attribute indicates how many results are represented in the item array.

- item

-

- The item getter returns a SpeechInputResult from the index into an array of result values.

The user agent must ensure that the length attribute is set to the number of elements in the array.

7.1.4.10 Speech Grammar Interface

The SpeechGrammar object represents a container for a grammar. This structure has the

following attributes:

- src attribute

- The required src attribute is the URI for the grammar. Note some services may support builtin

grammars that can be specified using a builtin URI scheme.

- weight attribute

- The optional weight attribute controls the weight that the speech recognition service should use with

this grammar. By default, a grammar has a weight of 1. Larger weight values positively weight

the grammar while smaller weight values make the grammar weighted less strongly.

7.1.4.11 Speech Grammar List Interface

The SpeechGrammarList object represents a collection of SpeechGrammar objects. This structure has the

following attributes:

- length

- The length attribute represents how many grammars are currently in the array.

- item

-

- The item getter returns a SpeechGrammar from the index into an array of grammars.

The user agent must ensure that the length attribute is set to the number of elements in the array.

The user agent must ensure that the index order from smallest to largest matches the order in which grammars were added to the array.

- The addFromElement method

- This method allows one to create a builtin grammar uri from a recoable element

as outlined in the builtin uri description. This

grammar is then appended to the grammars array parameter. The element in question is provided by the

inputElement attribute. The optional weight attribute sets the corresponding attribute value in the created

SpeechGrammar object.

- The addFromURI method

- This method appends a grammar to the grammars array parameter based on URI. The URI for the grammar is specified by the

src parameter, which represents the URI for the grammar. Note, some services

may support builtin grammars that can be specified by URI. If the

weight parameter is present it represents this grammar's weight

relative to the other grammar. If the weight parameter is not present, the default value of 1.0 is

used.

- The addFromString method

- This method appends a grammar to the grammars array parameter based on text. The content of the grammar

is specified by the

string parameter, which represents the content for the grammar. This content

should be encoded into a data: URI when the SpeechGrammar object is created.

If the

weight parameter is present it represents this grammar's weight

relative to the other grammar. If the weight parameter is not present, the default value of 1.0 is

used.

7.1.4.12 Speech Parameter Interface

The SpeechParameter object represents the container for arbitrary name/value parameters. This extensible

mechanism allows developers to take advantage of extensions that recognition services may allow.

- name attribute

- The required name attribute is the name of the custom parameter.

- value attribute

- The required value attribute is the value of the custom parameter.

7.1.4.13 Speech Parameter List Interface

The SpeechParameterList object represents a collection of SpeechParameter objects. This structure has the

following attributes:

- length

- The length attribute represents how many parameters are currently in the array.

- item

-

- The item getter returns a SpeechParameter from the index into an array of parameters.

The user agent must ensure that the length attribute is set to the number of elements in the array.

7.1.5 Mapping EMMA to Speech Input Alternatives

An EMMA document represents the users input and may contain a number of possible hypothesis of what the user

said. Each individual hypothesis is represented by a emma:interpretation. In the case where there is only one

hypothesis than the emma:interpretation may be a direct child of the emma:emma root element. In cases where there

are one or more hypothesis, the emma:interpretation tags may be included as children of a emma:one-of tag which

is a child of the root emma:emma element.

Each emma:interpretation in turn contains information about the transcript, confidence, and interpretation for

that one hypothesis. The interpretation information

is given in EMMA by the instance data representing the semantics of what the user

meant. In the case that the instance data is in an emma:literal then the interpretation variable of

the Speech Input Alternative must be set to the contents of the emma:literal tag. In the case where the

instance data is given by an XML structure then the interpretation must be set to the XML document created when you

wrap the instance data in a root result element. This Document has all the normal XML DOM processing to inspect

the content.

Each emma:interpretation also contains the transcript of what the user said, represented by the emma:tokens attribute.

The transcript variable of the Speech Input Alternative must be set to the contents of this attribute.

Each emma:interpreation also contains the confidence the recognizer has in this hypothesis, represented by

the emma:confidence attribute. The confidence variable of the Speech Input Alternative must be set to

this value.

Note that while these mapping cover the common usecases, the full EMMA document is available for inspection

when needed through the XML and text properties on the SpeechInputResult object.

7.1.5.1 EMMA Mapping Example

Here is an example that shows how this mapping works.

In the example below the different recognition results are mapped to a result set.

EMMA Document

<emma:emma version="1.0"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns="http://www.example.com/example">

<emma:one-of id="r1" emma:medium="acoustic" emma:mode="voice">

<emma:interpretation id="int1" emma:confidence="0.8" emma:tokens="Boston Flight 311">

<emma:literal>BOS</emma:literal>

</emma:interpretation>

<emma:interpretation id="int2" emma:confidence="0.5" emma:tokens="Austin to Denver Flight on 3 11">

<origin>AUS</origin>

<destination>DEN</destination>

<date>03112003</date>

</emma:interpretation>

</emma:one-of>

</emma:emma>

As a result of getting the above EMMA a SpeechInputResultEvent will be generated. The complete

EMMA and n-best list will be accessible from the result property in the form of the SpeechInputResult

object. But the top level mapping is as follows.

SpeechInputResultEvent top level mappings

{transcript:"Boston Flight 311",

confidence:0.8,

interpretation:"BOS",

...}

The SpeechInputResult object that is generated has two SpeechInputAlternative in the item collection.

The one at the 0th index (the best result) has the same transcript, confidence, and interpretation as the above top level

mappings. The SpeechInputAlternative at the 1st index (the 2nd best result) is associated with the second emma:interpretation

from the EMMA and thus has a transcript of "Austin to Denver Flight on 3 11" with a confidence of 0.5

and the interpretation is the XML Document, complete with all the normal DOM parsing, that is represented

below.

2nd best SpeechInputAlternative Interpretation XML Document

<result>

<origin>AUS</origin>

<destination>DEN</destination>

<date>03112003</date>

</result>

7.1.6 Extension Events

Some speech services may want to raise custom extension interim events either while doing speech recognition

or while synthesizing audio. An example of this kind of event might be viseme events that encode lip and mouth

positions while speech is being synthesized that can help with the creation of avatars and animation. These

extension events must begin with "speech-x", so the hypothetical viseme event might be something like

"speech-x-viseme".

7.1.7 Examples

In the example below the various speech APIs are used to do basic speech web search.

Speech Web Search Markup Only

<!DOCTYPE html>

<html>

<head>

<title>Example Speech Web Search Markup Only</title>

</head>

<body>

<form id="f" action="/search" method="GET">

<label for="q">Search</label>

<reco for="q"/>

<input id="q" name="q" type="text"/>

<input type="submit" value="Example Search"/>

</form>

</body>

</html>

Speech Web Search JS API With Functional Binding

<!DOCTYPE html>

<html>

<head>

<title>Example Speech Web Search JS API and Bindings</title>

</head>

<body>

<script type="text/javascript">

function speechClick() {

var inputField = document.getElementById('q');

var sr = new SpeechReco();

sr.addGrammarFrom(inputField);

sr.outputToElement(inputField);

// Set what ever other parameters you want on the sr

sr.serviceURI = "https://example.org/recoService";

sr.speedVsAccuracy = 0.75;

sr.start();

}

</script>

<form id="f" action="/search" method="GET">

<label for="q">Search</label>

<input id="q" name="q" type="text" />

<button name="mic" onclick="speechClick()">

<img src="http://www.openclipart.org/image/15px/svg_to_png/audio-input-microphone.png" alt="microphone picture" />

</button>

<br />

<input type="submit" value="Example Search" />

</form>

</body>

</html>

Speech Web Search JS API Only

<!DOCTYPE html>

<html>

<head>

<title>Example Speech Web Search</title>

</head>

<body>

<script type="text/javascript">

function speechClick() {

var sr = new SpeechReco();

// Build grammars from scratch

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("http://example.org/topChoices.srgs", 1.5);

sr.grammars.addFromUri("builtin:input?type=text", 0.5);

sr.grammars.addFromUri("builtin:websearch", 1.1);

// This 3rd grammar is an inline version of the http://www.example.com/places.grxml grammar from Appendix J.2 of the SRGS document (without the comments and xml and doctype)

sr.grammars.addFromUri("data:application/srgs+xml;base64,PGdyYW1tYXIgeG1sbnM9Imh0dHA6Ly93d3cudzMub3JnLzIwMDEvMDYvZ3JhbW1hciINCiAgICAgICAgIHhtbG5zOnhzaT0iaHR0cDovL3d3dy53My5vcmcvMjAwMS9YTUxTY2" +

"hlbWEtaW5zdGFuY2UiIA0KICAgICAgICAgeHNpOnNjaGVtYUxvY2F0aW9uPSJodHRwOi8vd3d3LnczLm9yZy8yMDAxLzA2L2dyYW1tYXIgDQogICAgICAgICAgICAgICAgICAgICAgICAgICAgIGh0dHA6Ly93d3cudzMub" +

"3JnL1RSL3NwZWVjaC1ncmFtbWFyL2dyYW1tYXIueHNkIg0KICAgICAgICAgeG1sOmxhbmc9ImVuIiB2ZXJzaW9uPSIxLjAiIHJvb3Q9ImNpdHlfc3RhdGUiIG1vZGU9InZvaWNlIj4NCg0KICAgPHJ1bGUgaWQ9ImNpdHki" +

"IHNjb3BlPSJwdWJsaWMiPg0KICAgICA8b25lLW9mPg0KICAgICAgIDxpdGVtPkJvc3RvbjwvaXRlbT4NCiAgICAgICA8aXRlbT5QaGlsYWRlbHBoaWE8L2l0ZW0+DQogICAgICAgPGl0ZW0+RmFyZ288L2l0ZW0+DQogICA" +

"gIDwvb25lLW9mPg0KICAgPC9ydWxlPg0KDQogICA8cnVsZSBpZD0ic3RhdGUiIHNjb3BlPSJwdWJsaWMiPg0KICAgICA8b25lLW9mPg0KICAgICAgIDxpdGVtPkZsb3JpZGE8L2l0ZW0+DQogICAgICAgPGl0ZW0+Tm9ydG" +

"ggRGFrb3RhPC9pdGVtPg0KICAgICAgIDxpdGVtPk5ldyBZb3JrPC9pdGVtPg0KICAgICA8L29uZS1vZj4NCiAgIDwvcnVsZT4NCg0KICAgPHJ1bGUgaWQ9ImNpdHlfc3RhdGUiIHNjb3BlPSJwdWJsaWMiPg0KICAgICA8c" +

"nVsZXJlZiB1cmk9IiNjaXR5Ii8+IDxydWxlcmVmIHVyaT0iI3N0YXRlIi8+DQogICA8L3J1bGU+DQo8L2dyYW1tYXI+", 0.01);

// Say what happens on a match

sr.onresult = function(event) {

var q = document.getElementById('q');

q.value = event.result.item(0).interpretation;

var f = document.getElementById('f');

f.submit();

};

// Also do something on a nomatch

sr.onnomatch() = function(event) {

// even though it is a no match we might have a result

alert("no match: " + event.result.item(0).interpretation);

}

// Set what ever other parameters you want on the sr

sr.serviceURI = "https://example.org/recoService";

sr.speedVsAccuracy = 0.75;

// Start will call open for us, if we wanted to open the sr on page start we could have to do initial permission checking

sr.start();

}

</script>

<form id="f" action="/search" method="GET">

<label for="q">Search</label>

<input id="q" name="q" type="text" />

<button name="mic" onclick="speechClick()">

<img src="http://www.openclipart.org/image/15px/svg_to_png/audio-input-microphone.png" alt="microphone picture" />

</button>

<br />

<input type="submit" value="Example Search" />

</form>

</body>

</html>

Web search by voice, with auto-submit

<script type="text/javascript">

function startSpeech(event) {

var sr = new SpeechReco();

sr.onresult = handleSpeechInput;

sr.start();

}

function handleSpeechInput(event) {

var q = document.getElementById("q");

q.value = event.result.item(0).interpretation;

q.form.submit();

}

</script>

<form action="http://www.example.com/search">

<input type="search" id="q" name="q">

<input type="button" value="Speak" onclick="startSpeech">

</form>

Behavior

- User clicks button.

- Audio is captured and the speech recognizer runs.

- If some speech was recognized, the first hypothesis is put in the text field and the form is submitted.

- Search results are loaded.

Web search by voice, with "Did you say..."

This example uses the second best result. The search results page will display a link with the text "Did you say $second_best?".

<script type="text/javascript">

function startSpeech(event) {

var sr = new SpeechReco();

sr.onresult = handleSpeechInput;

sr.start();

}

function handleSpeechInput(event) {

var q = document.getElementById("q");

q.value = event.result.item(0).interpretation;

if (event.result.length > 1) {

var second = event.result[1].interpretation;

document.getElementById("second_best").value = second;

}

q.form.submit();

}

</script>

<form action="http://www.example.com/search">

<input type="search" id="q" name="q">

<input type="button" value="Speak" onclick="startSpeech">

<input type="hidden" name="second_best" id="second_best">

</form>

Speech translator

<script type="text/javascript" src="http://www.example.com/jsapi"></script>

<script type="text/javascript">

library.load("language", "1"); // Load the translator JS library.

// These will most likely be set in a UI.

var fromLang = "en";

var toLang = "es";

function startSpeech(event) {

var sr = new SpeechReco();

sr.onresult = handleSpeechInput;

sr.start();

}

function handleSpeechInput(event) {

var text = event.result.item(0).interpretation;

var callback = function(translationResult) {

if (translationResult.translation)

speak(translationResult.translation, toLang);

};

library.language.translate(text, fromLang, toLang, callback);

}

function speak(output, lang) {

var tts = new TTS();

// NOTE: these attributes don't seem to be in the proposal

tts.text = output;

tts.lang = lang;

tts.play();

}

</script>

<form>

<input type="button" value="Speak" onclick="startSpeech">

</form>

Behavior

- User clicks button and speaks in English.

- System recognizes the text in English.

- A web service translates the text from English to Spanish.

- System synthesizes and speaks the translated text in Spanish.

Speech shell

HTML:

<script type="text/javascript">

function startSpeech(event) {

var sr = new SpeechReco();

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("commands.grxml");

sr.onresult = handleSpeechInput;

sr.start();

}

function handleSpeechInput(event) {

var command = event.result.item(0).interpretation;

if (command.action == "call_contact") {

var number = getContactNumber(command.contact);

callNumber(number);

} else if (command.action == "call_number") {

callNumber(command.number);

} else if (command.action == "calculate") {

say(evaluate(command.expression));

} else if {command.action == "search") {

search(command.query);

}

}

function callNumber(number) {

window.location = "tel:" + number;

}

function search(query) {

// Start web search for query.

}

function getContactNumber(contact) {

// Get the phone number of the contact.

}

function say(text) {

// Speak text.

}

</script>

<form>

<input type="button" value="Speak" onclick="startSpeech">

</form>

English SRGS XML Grammar (commands.grxml):

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE grammar PUBLIC "-//W3C//DTD GRAMMAR 1.0//EN"

"http://www.w3.org/TR/speech-grammar/grammar.dtd">

<grammar xmlns="http://www.w3.org/2001/06/grammar" xml:lang="en"

version="1.0" mode="voice" root="command"

tag-format="semantics/1.0">

<rule id="command" scope="public">

<example> call Bob </example>

<example> calculate 4 plus 3 </example>

<example> search for pictures of the Golden Gate bridge </example>

<one-of>

<item>

call <ruleref uri="contacts.grxml">

<tag>out.action="call_contact"; out.contact=rules.latest()</tag>

</item>

<item>

call <ruleref uri="phonenumber.grxml">

<tag>out.action="call_number"; out.number=rules.latest()</tag>

</item>

<item>

calculate <ruleref uri="#expression">

<tag>out.action="calculate"; out.expression=rules.latest()</tag>

</item>

<item>

search for <ruleref uri="http://grammar.example.com/search-ngram-model.xml">

<tag>out.action="search"; out.query=rules.latest()</tag>

</item>

</one-of>

</rule>

</grammar>

Turn-by-turn navigation

HTML:

<script type="text/javascript">

var directions;

function startSpeech(event) {

var sr = new SpeechReco();

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("grammar-nav-en.grxml");

sr.onresult = handleSpeechInput;

sr.start();

}

function handleSpeechInput(event) {

var command = event.result.item(0).interpretation;

getDirections(command.destination, handleDirections);

speakNextInstruction();

}

function getDirections(query, handleDirections) {

// Get location, then get directions from server, pass to handleDirections

}

function handleDirections(newDirections) {

directions = newDirections;

// List for location changes and call speakNextInstruction()

// when appropriate

}

function speakNextInstruction() {

var instruction = directions.pop();

var tts = new TTS();

tts.text = instruction;

tts.play();

}

</script>

<form>

<input type="button" value="Speak" onclick="startSpeech">

</form>

English SRGS XML Grammar (grammar-nav-en.grxml):

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE grammar PUBLIC "-//W3C//DTD GRAMMAR 1.0//EN"

"http://www.w3.org/TR/speech-grammar/grammar.dtd">

<grammar xmlns="http://www.w3.org/2001/06/grammar" xml:lang="en"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2001/06/grammar

http://www.w3.org/TR/speech-grammar/grammar.xsd"

version="1.0" mode="voice" root="nav_cmd"

tag-format="semantics/1.0">

<rule id="nav_cmd" scope="public">

<example> navigate to 76 Buckingham Palace Road, London </example>

<example> go to Phantom of the Opera </example>

<item>

<ruleref uri="#nav_action" />

<ruleref uri="builtin:search" />

<tag>out.action="navigate_to"; out.destination=rules.latest();</tag>

</item>

</rule>

<rule id="nav_action">

<one-of>

<item>navigate to</item>

<item>go to</item>

</one-of>

</rule>

</grammar>

The examples below show a few ways to handle permissions using Speech API.

Hide possible graphical UI related to reco element if permission is denied

<!-- Anywhere in the page -->

<script>

/** When the document has been loaded, iterate through all the

* reco elements and remove the ones which have request.authorizationState

* NOT_AUTHORIZED. For the rest of the reco elements

* add authorizationchange event listener, which will remove the target of

* the event from DOM if its request.authorizationState changes to

*/ NOT_AUTHORIZED.

window.addEventListener("load",

function() {

var recos = document.getElementsByTagName("reco");

for (var i = recos.length - 1; i >= 0; --i) {

if (!removeNotAuthorizedReco(recos[i])) {

recos[i].addEventListener("authorizationchange",

function(evt) { removeNotAuthorizedReco(evt.target); });

}

}

});

function removeNotAuthorizedReco(reco) {

if (reco.request.authorizationState ==

SpeechReco.NOT_AUTHORIZED) {

reco.parentNode.removeChild(reco);

return true;

}

return false;

}

</script>

Ask permission as soon as the page has loaded and if permission is granted activate SpeechRequest object whenever user clicks the page

<!-- Anywhere in the page -->

<script>

var request; // Make sure the request object is kept alive.

window.addEventListener("load",

function () {

request = new SpeechRequest();

request.onresult =

function(evt) {

// Do something with the speech recognition result.

}

request.open(); // Trigger permission handling UI in the user agent.

document.addEventListener("click",

function() {

if (request.authorizationState ==

SpeechReco.AUTHORIZED) {

request.start();

}

});

});

</script>

Domain Specific Grammars Contingent on Earlier Inputs

A use case exists around collecting multiple domain specific inputs sequentially where the later inputs depend on the results of the earlier inputs. For instance, changing which cities are in a grammar of cities in response to the user saying in which state they are located.

This seems trivial, assuming there are a variety of suitable grammars to choose from.

<input id="txtState" type="text"/>

<button id="btnStateMic" type="button" onclick="stateMicClicked()">

<img src="microphone.png"/>

</button>

<br/>

<input id="txtCity" type="text"/>

<button id="btnCityMic" type="button" onclick="cityMicClicked()">

<img src="microphone.png"/>

</button>

<script type="text/javascript">

function stateMicClicked() {

var sr = new SpeechReco();

// add the grammar that contains all the states:

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("states.grxml");

sr.onmatch = function (e) {

// assume the grammar returns a standard string for each state

// in which case we just need to get the interpretation

document.getElementById("txtState").value =

e.result.item(0).interpretation;

}

sr.start();

}

function cityMicClicked() {

var sr = new SpeechReco();

// The cities grammar depends on what state has been selected

// If state is blank, use major US cities

// otherwise use cities in that state

var state = document.getElementById("txtState").value;

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri(state ? "majorUScities.grxml" : "citiesin" + state + ".grxml");

sr.onresult = function(e) {

document.getElementById("txtState").value =

e.result.item(0).interpretation;

}

sr.start();

}

</script>

Rerecognition

Some sophisticated applications will re-use the same utterance in two or more recognitions turns in what appears to the user as one turn. For example, an application may ask "how may I help you?", to which the user responds "find me a round trip from New York to San Francisco on Monday morning, returning Friday afternoon". An initial recognition against a broad language model may be sufficient to understand that the user wants the "flight search" portion of the app. Rather than get the user to repeat themselves, the application will just re-use the existing utterance for the recognition on the flight search recognition.

<script type="text/javascript">

function listenAndClassifyThenReco() {

var sr = new SpeechReco();

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("broadClassifier.grxml");

sr.onresult = function (e) {

if ("broadClassifier.grxml" == sr.grammars[0].uri) {

// Also, this switch is a little contrived. no reason why the re-reco grammar

// wouldn't just be returned in the interpretation

switch (getClassification(e.interpretation)) {

case "flightsearch":

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("flightsearch.grxml");

break;

case "hotelbooking":

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("hotelbooking.grxml");

break;

case "carrental":

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("carrental.grxml");

break;

default:

throw ("cannot interpret:" + e.result.item(0).interpretation);

}

sr.inputWaveformUri = getUri(e); // This probably comes from the sessionId that comes back from the service

// re-initialize with new settings:

sr.open();

// start listening:

sr.start();

}

else if ("flightsearch.grxml" == sr.grammars[0].uri) {

processFlightSearch(e.result.item(0).interpretation);

}

else if ("hotelbooking.grxml" == sr.grammars[0].uri) {

processHotelBooking(e.result.item(0).interpretation);

}

else if ("carrental.grxml" == sr.grammars[0].uri) {

processCarRental(e.result.item(0).interpretation);

}

}

sr.saveForRereco = true;

sr.start();

}

</script>

Speech Enabled Email Client

The application reads out subjects and contents of email and also listens for commands, for instance, "archive", "reply: ok, let's meet at 2 pm", "forward to bob", "read message". Some commands may relate to VCR like controls of the message being read back, for instance, "pause", "skip forwards", "skip back", or "faster". Some of those controls may include controls related to parts of speech, such as, "repeat last sentence" or "next paragraph".

This is the other end of the spectrum. It's a huge app, if it works as described. But here's a simplistic version of some of the logic:

<script type="text/javascript">

var sr;

var tts;

// initialize the speech object when the page loads:

function initializeSpeech() {

sr = new SpeechReco();

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("globalcommands.grxml");

sr.onresult = doSRResult;

sr.onerror = doSRError;

tts = new TTS();

}

function onMicClicked() {

// stop TTS if there is any...

if (tts.paused == false && tts.ended == false) {

tts.controller.pause();

}

sr.start();

}

function doSRError(e) {

// do something informative...

}

function doSRResult(e) {

// I don't want to write this line. How do I avoid it?

if (!e.result.final) {

return;

}

// Assume the interpretation is an object with a

// pile of properties that have been glommed together

// by the grammar

var semantics = e.result.item(0).interpretation;

switch (semantics.command) {

case "reply":

composeReply(currentMessage, semantics.message);

break;

case "compose":

composeNewMessage(semantics.subject, semantics.recipients, semantics.message);

break;

case "send":

currentComposition.Send();

break;

case "readout":

readout(currentMessage);

break;

default:

throw ("cannot interpret:" + semantics.command);

}

}

function readout(message) {

tts.src = "data:,message from " + message.sendername + ". Message content: " + message.body;

tts.play();

}

From the multimodal use case "The ability to mix and integrate input from multiple modalities such as by saying 'I want to go from here to there' while tapping two points on a touch screen map."

In the example below the various speech APIs are used to do a simple multimodal example where the user is presented with a series of buttons and may click one while they are speaking. The click event is sent to the speech service using the sendInfo method on the SpeechReco. The result coming back from the speech service is the integration of the voice command and gesture. The combined multimodal result is represented in the EMMA document along with the EMMA interpretations for the individual modalities.

Simple Multimodal Example JS API Only

<!DOCTYPE html>

<html>

<head>

<title>Simple Multimodal Example</title>

</head>

<body>

<script type="text/javascript">

// Only send gestures if sr is active, start() has been called

sr_started = false;

function speechClick() {

var sr = new SpeechReco();

// Indicate the reco service to be used

sr.serviceURI = "https://example.org/recoService/multimodal";

// Set any parameters

sr.speedVsAccuracy = 0.75;

sr.completeTimeout = 2000;

sr.incompleteTimeout = 3000;

// Specifying the grammar

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("http://example.org/commands.srgs");

// Say what happens on a match

sr.onresult = function(event) {

sr_started=false;

var af = document.getElementById('asr_feedback');

af.value = event.result.item(0).transcript;

// code to pull out interpretation and execute;

interp = event.result.item(0).interpretation;

// ....

};

// Also do something on a nomatch

sr.onnomatch() = function(event) {

// even though it is a no match we might have a result

alert("no match: " + event.result.item(0).interpretation);

}

//

// Start will call open for us, if we wanted to open the

// sr on page start we could have to do initial permission checking

sr.start();

sr_started=true;

};

// What do if the user clicks a button during speech capture

// Uses sendInfo to add the click to the stream sent to the service

function sendClick(payload) {

if (sr_started) {

sr.sendInfo('text/xml',payload);

}

};

</script>

<div>

<input id="asr_feedback" name="asr_feedback" type="text"/>

<button name="mic" onclick="speechClick()">SPEAK</button>

<br/>

<br/>

<button name="item1" onclick="sendClick('<click>joe_bloggs</click'">Joe Bloggs</button>

<button name="item2" onclick="sendClick('<click>pam_brown</click>'">Pam Brown</button>

<button name="item3" onclick="sendClick('<click>peter_smith</click>'">Peter Smith</button>

</div>

</body>

</html>

SRGS Grammar (http://example.org/commands.srgs)

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE grammar PUBLIC "-//W3C//DTD GRAMMAR 1.0//EN"

"http://www.w3.org/TR/speech-grammar/grammar.dtd">

<grammar version="1.0" mode="voice" root="top" tag-format="semantics/1.0">

<rule id="top" scope="public">

<one-of>

<item>call this person<tag>out._cmd="CALL";out._obj="DEICTIC";</tag></item>

<item>email this person<tag>out._cmd="CALL";out._obj="DEICTIC";</tag></item>

<item>call<tag>out._cmd="CALL";out._obj="DEICTIC";</tag></item>

<item>email<tag>out._cmd="CALL";out._obj="DEICTIC";</tag></item>

</one-of>

</rule>

</grammar>

EMMA Returned

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:grammar id="gram1" grammar-type="application/srgs-xml" ref="http://example.org/commands.srgs"/>

<emma:interpretation id="multimodal1"

emma:confidence="0.6"

emma:start="1087995961500"

emma:end="1087995962542"

emma:medium="acoustic tactile"

emma:mode="voice gui"

emma:function="dialog"

emma:verbal="true"

emma:lang="en-US"

emma:result-format="application/json">

<emma:derived-from resource="#voice1" composite="true"/>

<emma:derived-from resource="#gui1" composite="true"/>

<emma:literal><![CDATA[

{_cmd:"CALL",

_obj:"joe_bloggs"}]]></emma:literal>

</emma:interpretation>

<emma:derivation>

<emma:interpretation id="voice1"

emma:start="1087995961500"

emma:end="1087995962542"

emma:process="https://example.org/recoService/multimodal"

emma:grammar-ref="gram1"

emma:confidence="0.6"

emma:medium="acoustic"

emma:mode="voice"

emma:function="dialog"

emma:verbal="true"

emma:lang="en-US"

emma:tokens="call this person"

emma:result-format="application/json">

<emma:literal><![CDATA[

{_cmd:"CALL",

_obj:"DEICTIC"}]]></emma:literal>

</emma:interpretation>

<emma:interpretation id="gui1"

emma:medium="tactile"

emma:mode="gui"

emma:function="dialog"

emma:verbal="false">

<click>joe_bloggs</click>

</emma:interpretation>

</emma:derivation>

</emma:emma>

Speech XG Translating Example

This is a complete example that can be saved as an HTML document.

<html>

<head>

<title>Speech XG Translating Example</title>

</head>

<body>

<script type="text/javascript" src="https://example.org/translation.js"/>

<script type="text/javascript">

function speechClick() {

var sr = new SpeechReco();

sr.lang = document.getElementsById("inlang").value;

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("builtin:dictation");

sr.serviceURI = "ws://example.org/reco";

// Haven't had a good link with the capture stuff in examples yet

sr.input = document.getElementById("speak").capture;

sr.onopen = function (event) { document.getElementById("p").value = 5; };

sr.onstart = function (event) { document.getElementById("p").value = 10; };

sr.onaudiostart = function (event) { document.getElementById("p").value = 20; };

sr.onsoundstart = function (event) { document.getElementById("p").value = 25; };

sr.onspeechstart = function (event) { document.getElementById("p").value = 30; };

sr.onspeechend = function (event) { document.getElementById("p").value = 60; };

sr.onsoundend = function (event) { document.getElementById("p").value = 65; };

sr.onaudioend = function (event) { document.getElementById("p").value = 70; };

sr.onresult = readResult();

sr.start();

}

function readResult(event) {

document.getElementsById("p").value = 100;

var tts = new TTS();

tts.serviceURI = "ws://example.org/speak";

tts.lang = document.getElementById("outlang").value;

tts.src = "data:text/plain;" + translate(event.result.item(0).interpretation, document.getElementById("inlang").value, tts.lang);

}

</script>

<h1>Speech XG Translating Example</h1>

<form id="foo">

<p>

<label for="inlang">Spoken Language to Translate: </label>

<select id="inlang" name="inlang">

<option value="en-US">American English</option>

<option value="en-GB">UK English</option>

<option value="fr-CA">French Canadian</option>

<option value="es-ES">Spanish</option>

</select>

</p>

<p>

<label>Translation Output Language:

<select id="outlang" name="outlang">

<option value="en-US">American English</option>

<option value="en-GB">UK English</option>

<option value="fr-CA" selected="true">French Canadian</option>

<option value="es-ES">Spanish</option>

</select>

</label>

</p>

<p>

<label for="speak">Click to speak: </label>

<input type="button" name="speak" value="speak" capture="microphone" onclick="speechClick()"/>

</p>

<p>

<progress id="p" max="100" value="0"/>

</p>

</form>

</body>

</html>

Multi-slot Filling Example

This is a complete example that can be saved as an HTML document.

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN">

<html>

<head>

<meta http-equiv="content-type"

content="text/html; charset=ISO-8859-1">

<title>Mult-slot filling</title>

<script type="text/javascript">

function speechClick() {

var sr = new SpeechReco();

//assume some multislot grammar coming from a URL

sr.grammars = new SpeechGrammarList();

sr.grammars.addFromUri("http://bookhotelspeechtools.example.com/bookhotelgrammar.xml");

sr.start();

}

// on a match, extract the appropriate EMMA slots and populate the form

sr.onresult = function(event) {

//get EMMA

var emma = event.EMMAXML;

//the author of this application will know the names of the slots

//there is a root node called "booking" in the application semantics

//there are four slots called "arrival", "departure", "adults" and "children"

var booking = emma.getElementsByTagName("booking").item(0);

//get arrival

var arrivalDate = getEMMAValue(booking, "arrival");

arrival.value = arrivalDate;

// get departure

var departureDate = getEMMAValue(booking, "departure");

departure.value = departureDate;

//get num adults (default is 1)

var numAdults = getEMMAValue(booking, "adults");

adults.value = numAdults;

//get num children (default is 0)

var numChildren = getEMMAValue(booking, "children");

children.value = numChildren;

//In a real application, before submitting, you would

//check that arrival and departure are filled, if either one is

//missing, prompt for user to fill. This could be done

//by initiating a directed dialog with slot-specific grammars.

The user should also be able to type values.

f.submit();

};

getEMMAValue(Node node String name){

return node.getElementsByTagName(name).item(0).nodeValue;

}

}

</script>

</head>

<body>

<h1>Multi-slot Filling Example</h1>

<h2>Welcome to the Speech Hotel!<br>

</h2>

<br>

<form id="f" action="/search" method="GET"> Please tell us your arrival

and departure dates, and how many adults and children will

be staying with us. <br>

For example "I want a room from December 1 to December 4 for two

adults" <br>

Click the microphone to start speaking. <button name="mic"

onclick="speechClick()"> <img

src="http://www.openclipart.org/image/15px/svg_to_png/audio-input-microphone.png"

alt="microphone picture"> </button> <br>

<input id="arrival" name="arrival" type="text"> <br>

<label for="arrival">Arrival Date</label> <br>

<br>

<input id="departure" name="departure" type="text"> <br>

<label for="departure">Departure Date</label> <br>

<br>

<input id="adults" name="adults" value="1" type="text"> <br>

<label for="adults">Adults</label> <br>

<br>

<input id="children" name="children" value="0" type="text"> <br>

<label for="children">Children (under age 12)</label> <br>

<br>

<input value="Check Availability" type="submit"></form>

</body>

</html>

These are inline grammar examples

Referencing external grammar

We could write the grammar to a file and then reference the grammar via URI.

Then we end up with file overhead and temporary files.

<form>