Techniques for User Agent Accessibility Guidelines 1.0

W3C Working Draft 8 March 2000

- This version: http://www.w3.org/WAI/UA/WD-UAAG10-TECHS-20000308 (also available as plain text, gzip PostScript, gzip PDF, gzip tar file of HTML, and zip archive of HTML)

- Latest version: http://www.w3.org/WAI/UA/UAAG10-TECHS

- Previous version: http://www.w3.org/TR/2000/WD-UAAG10-TECHS-20000128

- Editors: Jon Gunderson, University of Illinois at Urbana-Champaign; Ian Jacobs, W3C

Copyright © 1999-2000 W3C® (MIT, INRIA, Keio), All Rights Reserved. W3C liability, trademark, document use and software licensing rules apply.

This document provides techniques for satisfying the checkpoints defined in "User Agent Accessibility Guidelines 1.0" [UAAG10]. These techniques cover the accessibility of user interfaces, content rendering, application programming interfaces (APIs), and languages such as the Hypertext Markup Language (HTML), Cascading Style Sheets (CSS), and the Synchronized Multimedia Integration Language (SMIL).

This document is part of a series of accessibility documents published by the Web Accessibility Initiative (WAI) of the World Wide Web Consortium (W3C).

This section describes the status of this document at the time of its publication. Other documents may supersede this document. The latest status of this document series is maintained at the W3C.

This is a W3C Working Draft for review by W3C Members and other interested parties. It is a draft document and may be updated, replaced or obsoleted by other documents at any time. It is inappropriate to use W3C Working Drafts as reference material or to cite them as other than "work in progress". This is work in progress and does not imply endorsement by, or the consensus of, either W3C or participants in the WAI User Agent (UA) Working Group.

While User Agent Accessibility Guidelines 1.0 strives to be a stable document (as a W3C Recommendation), the current document is expected to evolve as technologies change and content developers discover more effective techniques for designing accessible Web sites and pages.

Please send comments about this document, including suggestions for additional techniques, to the public mailing list w3c-wai-ua@w3.org (public archives).

This document has been produced as part of the Web Accessibility Initiative. The goals of the User Agent Working Group are described in the charter. A list of the Working Group participants is available.

A list of current W3C Recommendations and other technical documents can be found at http://www.w3.org/TR.

This document provides some suggestions for satisfying the requirements of the "User Agent Accessibility Guidelines 1.0" [UAAG10]. The techniques listed in this document are not required for conformance to the Guidelines. These techniques are not necessarily the only way of satisfying a checkpoint, nor are they necessarily a definitive set of requirements for satisfying a checkpoint.

Section 2 of this document reproduces the guidelines and checkpoints of the User Agent Accessibility Guidelines 1.0 [UAAG10]. Each checkpoint definition includes a link to the checkpoint definition in [UAAG10]. Each checkpoint definition is followed by a list of techniques, information about related resources, and references to the accessibility topics in section 3. These accessibility topics may apply to more than one checkpoint and so have been split off into stand-alone sections.

Note. Some of the techniques in this document are appropriate for assistive technologies.

"Techniques for User Agent Accessibility Guidelines 1.0" and the Guidelines [UAAG10] are part of a series of accessibility guidelines published by the Web Accessibility Initiative (WAI). The series also includes "Web Content Accessibility Guidelines 1.0" [WCAG10] (and techniques [WCAG10-TECHS]) and "Authoring Tool Accessibility Guidelines 1.0" [ATAG10] (and techniques [ATAG10-TECHS]).

The following editorial conventions are used throughout this document:

- HTML element names are in uppercase letters (e.g., H1, BLOCKQUOTE, TABLE, etc.)

- HTML attribute names are quoted in lowercase letters (e.g., "alt", "title", "class", etc.)

Each checkpoint in this document is assigned a priority that indicates its importance for users with disabilities.

- [Priority 1] This checkpoint must be satisfied by user agents, otherwise one or more groups of users with disabilities will find it impossible to access the Web. Satisfying this checkpoint is a basic requirement for enabling some people to access the Web.

- [Priority 2] This checkpoint should be satisfied by user agents, otherwise one or more groups of users with disabilities will find it difficult to access the Web. Satisfying this checkpoint will remove significant barriers to Web access for some people.

- [Priority 3] This checkpoint may be satisfied by user agents to make it easier for one or more groups of users with disabilities to access information. Satisfying this checkpoint will improve access to the Web for some people.

This section lists each checkpoint of User Agent Accessibility Guidelines 1.0 [UAAG10] along with some possible techniques for satisfying it. Each checkpoint also links to more general accessibility topics where appropriate.

Checkpoints for user interface accessibility:

- 1.1 Ensure that every functionality available through the user interface is also available through every input device API supported by the user agent. Excluded from this requirement are functionalities that are part of the input device API itself (e.g., text input for the keyboard API, pointer motion for the pointer API, etc.) [Priority 1]

(Checkpoint 1.1)

Note. The device-independence required by this checkpoint applies to functionalities described by the other checkpoints in this document (e.g., installation, documentation, user agent user interface configuration, etc.). This checkpoint does not require user agents to use all operating system input device APIs, only to make the software accessible through those they do use.

Techniques:

- Ensure that the user can do the following with all supported input devices:

  - Select content and operate on it. For example, if the user can select text with the mouse and make that text the content of a new link by pushing a button, they must also be able to do so through the keyboard and other supported devices. Other operations include cut, copy, and paste.

  - Set the focus.

  - Navigate content.

  - Navigate links (refer to link techniques).

  - Use the graphical user interface menus.

  - Fill out forms.

  - Access documentation.

  - Configure the software.

  - Install, uninstall, and update the user agent software.

- Ensure that software may be installed, uninstalled, and updated in a device-independent manner.

- Ensure that people with disabilities are involved in the design and testing of the software.
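One way to audit device independence is to keep a single table of user agent commands and the bindings that trigger each one on each supported input device, then report any command that some device cannot reach. The sketch below is hypothetical: the command names, device names, and bindings are illustrative assumptions, not taken from any real user agent. Functionalities excluded by the checkpoint (text input for the keyboard, pointer motion for the pointer) are not commands and so are left out of the table.

```python
from typing import Dict, List, Set

# Hypothetical command table: each user agent command maps each supported
# input device to the bindings that trigger it on that device.
COMMANDS: Dict[str, Dict[str, List[str]]] = {
    "follow-link": {"pointer": ["click"], "keyboard": ["Enter"], "voice": ["follow link"]},
    "make-link-from-selection": {"pointer": ["toolbar button"], "keyboard": ["Ctrl+K"]},
    "copy": {"pointer": ["context menu > Copy"], "keyboard": ["Ctrl+C"], "voice": ["copy"]},
}

SUPPORTED_DEVICES: Set[str] = {"pointer", "keyboard", "voice"}


def unreachable_commands(commands: Dict[str, Dict[str, List[str]]],
                         devices: Set[str]) -> Dict[str, Set[str]]:
    """Return, for each command, the supported devices with no binding for it."""
    gaps: Dict[str, Set[str]] = {}
    for name, bindings in commands.items():
        # A device counts as covered only if it has at least one binding.
        covered = {device for device, keys in bindings.items() if keys}
        missing = devices - covered
        if missing:
            gaps[name] = missing
    return gaps


if __name__ == "__main__":
    for command, missing in sorted(unreachable_commands(COMMANDS, SUPPORTED_DEVICES).items()):
        print(f"{command}: not available via {', '.join(sorted(missing))}")
```

Running such a check as part of testing flags the gap (here, creating a link from a selection has no voice command) before users encounter it.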

- 1.2 Use the standard input and output device APIs of the operating system. [Priority 1]

(Checkpoint 1.2)

Do not bypass the standard output APIs when rendering information (e.g., for reasons of speed, efficiency, etc.). For example, do not bypass standard APIs to manipulate the memory associated with rendered content, since assistive technologies monitor rendering through the APIs.

Techniques:

- Operating systems and application frameworks provide standard mechanisms for communicating with input devices. In the case of Windows, OS/2, the X Window System, and Mac OS, the window manager provides Graphical User Interface (GUI) applications with this information through the messaging queue. In the case of non-GUI applications on desktop operating systems, the compiler run-time libraries provide standard mechanisms for receiving keyboard input. If you use an application framework such as the Microsoft Foundation Classes, the framework must support the same standard input mechanisms.

- Do not communicate directly with an input device; this may circumvent system messaging. For instance, in Windows, do not open the keyboard device driver directly. The windowing system often needs to change the form and method of processing standard input so that applications coexist properly within the user interface framework.

- Do not implement your own input device event queue mechanism; this may circumvent system messaging. Some assistive technologies use standard system facilities for simulating keyboard and mouse events. From the application's perspective, these events are no different from those generated by the user's actions. Journal playback hooks (in both OS/2 and Windows) are one example of a mechanism that feeds the standard event queues.

- Operating systems and application frameworks provide standard mechanisms for using standard output devices. In the case of common desktop operating systems such as Windows, OS/2, and Mac OS, standard APIs are provided for writing to the display and the multimedia subsystems.

- Do not render text in the form of a bitmap before transferring it to the screen, since some screen readers rely on the user agent's offscreen model. Common operating system 2D graphics engines and drawing libraries provide functions for drawing text to the screen. Examples are the Graphics Device Interface (GDI) for Windows, the Graphics Programming Interface (GPI) for OS/2, and the X library (Xlib) for the X Window System and Motif.

- Do not communicate directly with an output device.

- Do not draw directly to the video frame buffer.

- Do not provide your own mechanism for generating pre-defined system sounds.

- When writing textual information in a GUI operating system, use standard operating system APIs for drawing text.

- Use operating system resources for rendering audio information. When doing so, do not take exclusive control of system audio resources; this could prevent an assistive technology such as a screen reader from speaking if it uses software text-to-speech conversion. Also, in operating systems like Windows, a set of standard audio sound resources is provided to support standard sounds such as alerts. These preset sounds are used to activate the SoundSentry graphical cue when a problem occurs; this benefits users with hearing disabilities. These cues may be manifested by flashing the desktop, active caption bar, or active window. It is important to use the standard mechanisms to generate audio feedback so that the operating system or special assistive technologies can add functionality for users with hearing disabilities.

- Where standard system controls provide no accessibility support, enhance their functionality by responding to standard keyboard input mechanisms. For example, provide keyboard navigation to menus and dialog box controls in the Apple Macintosh operating system. Another example is the Java Foundation Classes, where internal frames do not provide a keyboard mechanism to give them focus. In this case, you will need to add keyboard activation through the standard keyboard activation facility for Abstract Window Toolkit components.

- 1.3 Ensure that the user can interact with all active elements in a device-independent manner. [Priority 1]

(Checkpoint 1.3)

For example, users who are blind or have physical disabilities must be able to activate text links, the links in a client-side image map, and form controls without a pointing device.

Note. This checkpoint is an important special case of checkpoint 1.1.

Techniques:

- Refer to checkpoint 1.1 and checkpoint 1.5.

- Refer to image map techniques.

- In the "Document Object Model (DOM) Level 2 Specification" [DOM2], all elements may have associated behaviors. Assistive technologies should be able to activate these elements through the DOM. For example, a DOM 'DOMFocusIn' event may cause a JavaScript function to construct a pull-down menu. Allowing programmatic activation of this function will allow users to operate the menu through speech input (which benefits users of voice browsers in addition to assistive technology users). Note that, for a given element, the same event may trigger more than one event handler, and assistive technologies must be able to activate each of them. Descriptive information about handlers can allow assistive technologies to select the most important functions for activation. This is possible in the Java Accessibility API [JAVAAPI], which provides an AccessibleAction Java interface. This interface provides a list of actions and descriptions that enable selective activation.

Refer also to checkpoint 5.3.
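The selective activation that AccessibleAction enables can be sketched in Python as follows. The method names loosely mirror the Java interface's getAccessibleActionCount, getAccessibleActionDescription, and doAccessibleAction methods, but the Python class, its methods, and the example handlers are hypothetical illustrations, not part of any real API.

```python
from typing import Callable, List, Tuple


class ActionableElement:
    """Hypothetical element exposing every handler bound to it, with a description.

    Modeled loosely on the Java Accessibility API's AccessibleAction interface.
    """

    def __init__(self) -> None:
        self._actions: List[Tuple[str, Callable[[], str]]] = []

    def add_handler(self, description: str, handler: Callable[[], str]) -> None:
        # The same event may trigger several handlers; keep each one separately
        # so that an assistive technology can activate any of them.
        self._actions.append((description, handler))

    def get_action_count(self) -> int:
        return len(self._actions)

    def get_action_description(self, index: int) -> str:
        return self._actions[index][0]

    def do_action(self, index: int) -> str:
        # Return the handler's result so a caller (e.g., a speech interface) can report it.
        _, handler = self._actions[index]
        return handler()


menu_button = ActionableElement()
menu_button.add_handler("Open the navigation menu", lambda: "menu opened")
menu_button.add_handler("Log the menu request", lambda: "request logged")

# An assistive technology lists the actions, then activates the one the user chose.
for i in range(menu_button.get_action_count()):
    print(i, menu_button.get_action_description(i))
print(menu_button.do_action(0))
```

Because each handler carries a description, a voice browser could offer "Open the navigation menu" by name instead of blindly firing every handler bound to the event.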

- 1.4 Ensure that every functionality available through the user interface is also available through the standard keyboard API. [Priority 1]

(Checkpoint 1.4)

Note. This checkpoint is an important special case of checkpoint 1.1. The comment about low-level functionalities in checkpoint 1.1 applies to this checkpoint as well. Refer also to checkpoint 10.8.

Techniques:

- Apply the techniques for checkpoint 1.1 to the keyboard.

- Account for author-supplied keyboard shortcuts, such as those specified by the "accesskey" attribute in HTML 4.01 ([HTML4], section 17.11.2).

- Allow the user to trigger event handlers (e.g., mouseover, mouseout, click, etc.) from the keyboard.

- Test that all user interface components are operable by software or devices that emulate a keyboard. Use SerialKeys and/or voice recognition software to test keyboard event emulation.
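To account for author-supplied shortcuts, a user agent can collect the "accesskey" values in a document and compare them with its own key bindings. The sketch below uses Python's standard html.parser module; the set of reserved user agent keys is a hypothetical assumption, and a real user agent would also need a policy for resolving conflicts (for example, letting the user choose which binding wins).

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple

# Hypothetical keys the user agent already uses for its own commands.
RESERVED_UA_KEYS = {"f", "e", "h"}


class AccessKeyCollector(HTMLParser):
    """Collect (accesskey, element name, element id) triples from an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.keys: List[Tuple[str, str, Optional[str]]] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        attributes = dict(attrs)
        key = attributes.get("accesskey")
        if key:
            # Compare keys case-insensitively; "S" and "s" are usually the same key.
            self.keys.append((key.lower(), tag.upper(), attributes.get("id")))


def find_conflicts(html: str) -> Dict[str, List[str]]:
    """Map each conflicting key to the elements that use it."""
    collector = AccessKeyCollector()
    collector.feed(html)
    by_key: Dict[str, List[str]] = {}
    for key, tag, element_id in collector.keys:
        by_key.setdefault(key, []).append(f"{tag}#{element_id}" if element_id else tag)
    # A key conflicts if the author used it twice or the user agent reserves it.
    return {key: users for key, users in by_key.items()
            if len(users) > 1 or key in RESERVED_UA_KEYS}


page = """
<a href="/search" id="search" accesskey="s">Search</a>
<input type="text" id="query" accesskey="S">
<a href="/faq" id="faq" accesskey="f">FAQ</a>
<a href="/news" id="news" accesskey="n">News</a>
"""
print(find_conflicts(page))
```

For the sample page, "s" is reported because two elements use it, and "f" because it collides with a (hypothetical) user agent binding; "n" is free.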

- 1.5 Ensure every non-text message (e.g., prompt, alert, etc.) available through the user interface also has a text equivalent in the user interface. [Priority 1]

(Checkpoint 1.5)

Note. For example, if the user interface provides access to a functionality through a graphical button, ensure that a text equivalent for that button provides access to the same functionality from the user interface. If a sound is used to notify the user of an event, announce the event in text on the status bar as well. Refer also to checkpoint 5.7.

Techniques:

- Display text messages on the status bar of the user interface.

- For graphical user interface elements such as proportional scroll bars, provide a text equivalent (e.g., a percentage of the document viewed).

- Provide a text equivalent for beeps or flashes that are used to convey information.

- Provide a text equivalent for audio user agent tutorials. Tutorials that use speech to guide a user through the operation of the user agent should also be available at the same time as graphical representations.

- All user interface components that convey important information using sound should also provide an alternate, parallel visual representation of the information for individuals who are deaf, hard of hearing, or operating the user agent in a noisy or silent environment where the use of sound is not practical.
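The hypothetical sketch below routes every user interface notification through one function, so that a sound is never the only channel: the same message is always posted as text to the status bar. It also produces a text equivalent for a proportional scroll bar, under the assumption that "percentage viewed" means the position of the last visible line. The StatusBar class and the play_sound callback are stand-ins for real user interface and system audio APIs.

```python
from typing import Callable, List


class StatusBar:
    """Hypothetical stand-in for the user agent's status bar."""

    def __init__(self) -> None:
        self.messages: List[str] = []

    def show(self, text: str) -> None:
        self.messages.append(text)


def notify(event_text: str, status_bar: StatusBar,
           play_sound: Callable[[str], None], sound_enabled: bool = True) -> None:
    """Announce an event in text, and additionally (not instead) with a sound."""
    status_bar.show(event_text)
    if sound_enabled:
        play_sound(event_text)


def scroll_position_text(first_visible_line: int, visible_lines: int,
                         total_lines: int) -> str:
    """Text equivalent of a proportional scroll bar (lines are numbered from 1)."""
    last_visible = min(first_visible_line + visible_lines - 1, total_lines)
    percent = round(100 * last_visible / total_lines)
    return f"Lines {first_visible_line}-{last_visible} of {total_lines} ({percent}% viewed)"


bar = StatusBar()
notify("Download complete", bar, play_sound=lambda text: None)
bar.show(scroll_position_text(first_visible_line=41, visible_lines=40, total_lines=200))
print(bar.messages)
```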

Checkpoints for content accessibility:

- 2.1 Ensure that the user has access to all content, including equivalent alternatives for content. [Priority 1]

(Checkpoint 2.1)

Refer to guideline 5 for information about programmatic access to content.

Techniques:

- Some users benefit from concurrent access to primary and alternative content. For instance, users with low vision may want to view images (even imperfectly) but require a text equivalent for the image; the text may be rendered with a large font or as speech.

- When content changes dynamically (e.g., due to scripts or content refresh), users must have access to the content before and after the change.

- Refer to the section on access to content.

- Refer to the section on link techniques.

- Refer to the section on table techniques.

- Refer to the section on frame techniques.

- Refer to the section on form techniques.

- Sections 10.4 ("Client Error 4xx") and 10.5 ("Server Error 5xx") of the HTTP 1.1 specification [RFC2616] specify the following behavior for these error conditions: "Except when responding to a HEAD request, the server SHOULD include an entity containing an explanation of the error situation, and whether it is a temporary or permanent condition. These status codes are applicable to any request method. User agents SHOULD display any included entity to the user."

- Make available information about abbreviation and acronym expansions. For instance, in HTML, look for abbreviations specified by the ABBR and ACRONYM elements. The expansion may be given with the "title" attribute. To provide expansion information, user agents may:

  - Allow the user to configure that the expansions be used in place of the abbreviations.

  - Provide a list of all abbreviations in the document, with their expansions (a generated glossary of sorts).

  - Generate a link from an abbreviation to its expansion.

  - Allow the user to query the expansion of a selected or input abbreviation.

  - If an acronym has no explicit expansion, look for another occurrence of the acronym on the same page that does have one, or consult a glossary of acronyms for that page. Less reliably, the user agent may look for possible expansions (e.g., in parentheses) in the surrounding context.
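A generated glossary of the kind described above can be sketched with Python's standard html.parser module: collect the text of each ABBR and ACRONYM element with its "title" attribute, and reuse an expansion found on one occurrence for occurrences that lack one. This is a minimal sketch under those assumptions; it does not attempt the less reliable search for expansions in surrounding text.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple


class AbbreviationGlossary(HTMLParser):
    """Build a map from abbreviation text to its expansion ("title" attribute)."""

    def __init__(self) -> None:
        super().__init__()
        self.glossary: Dict[str, Optional[str]] = {}
        self._title: Optional[str] = None
        self._text: List[str] = []
        self._inside = False

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag in ("abbr", "acronym"):
            self._inside = True
            self._title = dict(attrs).get("title") or None
            self._text = []

    def handle_data(self, data: str) -> None:
        if self._inside:
            self._text.append(data)

    def handle_endtag(self, tag: str) -> None:
        if tag in ("abbr", "acronym") and self._inside:
            self._inside = False
            text = " ".join("".join(self._text).split())  # normalize whitespace
            # Record the abbreviation; an occurrence without a "title" never
            # overwrites an expansion found elsewhere on the page.
            if not self.glossary.get(text):
                self.glossary[text] = self._title


page = """
<p><acronym>WAI</acronym> publishes guidelines. The
<acronym title="Web Accessibility Initiative">WAI</acronym> is part of
<abbr title="World Wide Web Consortium">W3C</abbr>. See also <abbr>UAAG</abbr>.</p>
"""
parser = AbbreviationGlossary()
parser.feed(page)
for abbreviation, expansion in sorted(parser.glossary.items()):
    print(f"{abbreviation}: {expansion or '(no expansion available)'}")
```

The first "WAI" has no "title", but the glossary still gets its expansion from the second occurrence; "UAAG" is listed without one so the user knows it was not expanded.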

- 2.2 For presentations that require user input within a specified time interval, allow the user to configure the time interval (e.g., to extend it or to cause the user agent to pause the presentation automatically and await user input before proceeding). [Priority 1]

(Checkpoint 2.2)

Techniques:

- Render time-dependent links as a static list that occupies the same screen real estate; authors may create such documents in SMIL 1.0 [SMIL]. Include (temporal) context in the list of links. For example, provide the time at which the link appeared along with a way to easily jump to that portion of the presentation.

- Provide easy-to-use controls (including both mouse and keyboard commands) to allow users to pause a presentation and advance and rewind by small or large time increments.

  Note. Pausing alone does not remove the time dependence of a link: a user who must pause the program in order to activate the link still has to respond (by pausing) within the predetermined time. The pause feature is only effective in conjunction with the ability to rewind to the link, or when the pause can be configured to stop the presentation automatically and require the user to respond before continuing, either by acting on the user's input or by continuing with the flow of the document.

- Highlight the fact that there are active elements in a presentation and allow users to navigate to and activate them. For example, indicate the presence of active elements on the status bar and allow the user to navigate among them with the keyboard or mouse.
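Rendering time-dependent links as a static list with temporal context can be sketched as follows. The TimedLink record (begin and end times in seconds, a target URI, and an author-supplied title) is a hypothetical representation; a SMIL user agent would derive these values from the timing attributes of the presentation. The example URIs are placeholders.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TimedLink:
    begin: float  # seconds from the start of the presentation
    end: float
    href: str
    title: str


def format_clock(seconds: float) -> str:
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


def static_link_list(links: List[TimedLink]) -> List[str]:
    """List every link, in order of appearance, with the interval it was active.

    The start time tells the user agent where to jump back to, so the user
    can reach a link without having to respond while it is active.
    """
    return [f"[{format_clock(link.begin)}-{format_clock(link.end)}] {link.title} ({link.href})"
            for link in sorted(links, key=lambda link: link.begin)]


links = [
    TimedLink(95, 110, "http://example.com/order", "Order a copy"),
    TimedLink(12, 30, "http://example.com/bio", "Speaker biography"),
]
for line in static_link_list(links):
    print(line)
```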

- 2.3 When the author has not supplied a text equivalent for content as required by the markup language, make available other author-supplied information about the content (e.g., object type, file name, etc.). [Priority 2]

(Checkpoint 2.3)

Techniques:

- Refer to techniques for missing equivalent alternatives of content.

- 2.4 When a text equivalent for content is explicitly empty (i.e., an empty string), render nothing. [Priority 3]

(Checkpoint 2.4)

Techniques:

- User agents should render nothing in this case because the author may specify a null text equivalent for content that has no function in the page other than as decoration. In this case, the user agent should not render generic labels such as "[INLINE]" or "[GRAPHIC]".

- Allow the user to toggle the rendering of null text equivalents between nothing and an indicator of a null equivalent (e.g., an icon with the text equivalent "EMPTY TEXT EQUIVALENT").
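The distinction between a missing text equivalent (checkpoint 2.3) and an explicitly empty one (checkpoint 2.4) can be sketched as a small decision function for an image's "alt" value. The function name, the show_empty_indicator preference, and the exact wording of the fallback text are hypothetical; using the file name is only one example of other author-supplied information.

```python
import posixpath
from typing import Optional
from urllib.parse import urlparse


def image_text(alt: Optional[str], src: str, show_empty_indicator: bool = False) -> str:
    """Return the text to render in place of an image.

    alt is None when the author supplied no "alt" attribute at all, and ""
    when the author supplied an explicitly empty one.
    """
    if alt is None:
        # Checkpoint 2.3: no text equivalent, so fall back to other
        # author-supplied information, here the file name from the URI.
        file_name = posixpath.basename(urlparse(src).path) or src
        return f"[image: {file_name}]"
    if alt == "":
        # Checkpoint 2.4: render nothing (no generic "[GRAPHIC]" label),
        # unless the user has asked to be told about empty equivalents.
        return "[EMPTY TEXT EQUIVALENT]" if show_empty_indicator else ""
    return alt


print(image_text(None, "http://example.com/img/logo.gif"))
print(repr(image_text("", "spacer.gif")))
print(image_text("", "spacer.gif", show_empty_indicator=True))
```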

Checkpoints for user interface accessibility:

- 2.5 If more than one equivalent alternative is available for content, allow the user to choose from among the alternatives. This includes the choice of viewing no alternatives. [Priority 1]

(Checkpoint 2.5)

For example, if a multimedia presentation has several captions (or subtitles) available, allow the user to choose from among them. Captions might differ in level of detail, address different reading levels, differ in natural language, etc.

Techniques:

- Refer to the section on access to content.

- Allow users to choose more than one equivalent at a given time. For instance, multilingual audiences may wish to have captions in different natural languages on the screen at the same time. Users may also wish to use both captions and auditory descriptions concurrently.

- Make apparent through the user agent user interface which auditory tracks are meant to be played mutually exclusively.

- In the user interface, construct a list of all available tracks from short descriptions provided by the author (e.g., through the "title" attribute).

- Allow the user to configure different natural language preferences for different types of equivalents (e.g., captions and auditory descriptions). Users with disabilities may need to choose the language they are most familiar with in order to understand a presentation for which equivalent tracks are not all available in all desired languages. In addition, some users may prefer to hear the program audio in its original language while reading captions in another, fulfilling the function of subtitles or improving foreign language comprehension. In classrooms, teachers may wish to configure the language of various multimedia elements to achieve specific educational goals.

- Consider system-level natural language preferences as the user's default language preference. However, do not send HTTP Accept-Language request headers ([RFC2616], section 14.4) based on the operating system preferences. First, there may be a privacy problem, as indicated in RFC 2616, section 15.1.4 "Privacy Issues Connected to Accept Headers". Second, the operating system defines one language, while the Accept-Language request header may include many languages in different priorities. Automatically setting Accept-Language to the operating system language may result in the user receiving messages from servers that have no match for this single language, even though they have other languages acceptable to the user.

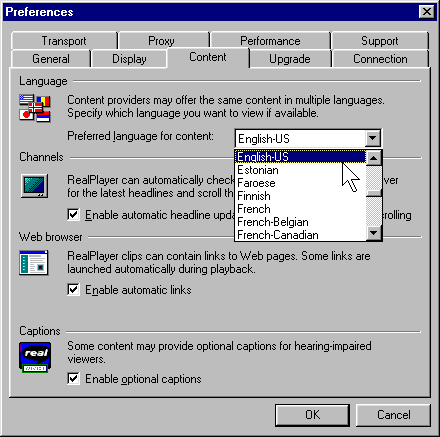

The following image shows

how users select a natural language preference in the Real Player.

This setting, in conjunction with language markup in the

presentation, determine what content is rendered.
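Rather than copying the single operating system language, a user agent can build the Accept-Language header ([RFC2616], section 14.4) from languages the user configures explicitly, in order of preference. The sketch below assigns descending quality values; the step of 0.1 between entries and the floor of 0.1 are arbitrary assumptions, not something RFC 2616 prescribes.

```python
from typing import List


def accept_language(preferences: List[str], step: float = 0.1) -> str:
    """Build an Accept-Language value from user-ordered language tags.

    The first language keeps the implicit quality value 1; each later one
    gets a value lower by `step`, never below 0.1 (q=0 would mean
    "not acceptable").
    """
    parts = []
    for position, tag in enumerate(preferences):
        if position == 0:
            parts.append(tag)
            continue
        q = max(1.0 - position * step, 0.1)
        # RFC 2616 allows at most three digits after the decimal point.
        q_text = f"{q:.3f}".rstrip("0").rstrip(".")
        parts.append(f"{tag};q={q_text}")
    return ", ".join(parts)


print("Accept-Language: " + accept_language(["fr-CA", "fr", "en"]))
```

Because the list comes from an explicit user setting, it can name several acceptable languages, which avoids the single-language mismatch described above.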

- 2.6 Allow the user to specify that text transcripts, collated text transcripts, captions, and auditory descriptions be rendered at the same time as the associated auditory and visual tracks. Respect author-supplied synchronization cues during rendering. [Priority 1]

(Checkpoint 2.6)

Techniques:

- Captions and auditory descriptions may not make sense unless rendered synchronously with the primary content. For instance, if someone with hearing loss is watching a video presentation and reading associated captions, the captions must be synchronized with the audio so that the individual can use any residual hearing. For auditory descriptions, it is crucial that the primary auditory track and the auditory description track be synchronized to avoid having them both play at once, which would reduce the clarity of the presentation.

- User agents that implement SMIL 1.0 ([SMIL]) should implement the "Accessibility Features of SMIL" [SMIL-ACCESS]. In particular, SMIL user agents should allow users to configure whether they want to view captions, and this user interface switch should be bound to the 'system-captions' test attribute. Users should be able to indicate a preference for receiving available auditory descriptions, but SMIL 1.0 does not include a mechanism equivalent to 'system-captions' for auditory descriptions. The next version of SMIL is expected to include a test attribute for auditory descriptions.

  Another SMIL 1.0 test attribute, 'system-overdub-or-caption', allows users to select between subtitles and overdubs in multilingual presentations. User agents should not interpret a value of 'caption' for this test attribute as meaning that the user prefers accessibility captions; that is the purpose of the 'system-captions' test attribute. When subtitles and accessibility captions are both available, deaf users may prefer to view captions, as they generally contain information not in subtitles: information on music, sound effects, who is speaking, etc.

- User agents that play QuickTime movies should allow the user to turn on and off the different tracks embedded in the movie. Authors may use these alternate tracks to provide equivalent alternatives. The Apple QuickTime player currently provides this feature through the menu item "Enable Tracks."

- User agents that play Microsoft Windows Media Object presentations should provide support for Synchronized Accessible Media Interchange (SAMI, a format for creating and displaying captions) and should allow users to configure how captions are viewed. In addition, user agents that play Microsoft Windows Media Object presentations should enable people to turn on and off other equivalent alternatives, including auditory description and alternate visual tracks.

- For other formats, at a minimum, users must be able to turn on and off auditory descriptions and captions.
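Binding a user preference to the SMIL 1.0 'system-captions' test attribute can be sketched with Python's xml.etree.ElementTree: an element whose 'system-captions' value does not match the user's setting is skipped along with its children, and within a 'switch' only the first acceptable child is rendered. This minimal sketch evaluates only 'system-captions' (no other test attributes, no namespaces), and the sample presentation and file names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List

SMIL = """
<smil>
  <body>
    <par>
      <video src="lecture.mpg"/>
      <audio src="lecture.wav"/>
      <textstream src="lecture-captions.rt" system-captions="on"/>
      <switch>
        <img src="slide-captioned.gif" system-captions="on"/>
        <img src="slide.gif"/>
      </switch>
    </par>
  </body>
</smil>
"""


def acceptable(element: ET.Element, setting: str) -> bool:
    # An absent test attribute always evaluates to true.
    wanted = element.get("system-captions")
    return wanted is None or wanted == setting


def collect(element: ET.Element, setting: str, rendered: List[str]) -> None:
    if not acceptable(element, setting):
        return  # skip this element and everything inside it
    src = element.get("src")
    if src is not None:
        rendered.append(src)
    children = list(element)
    if element.tag == "switch":
        # Only the first acceptable child of a switch is rendered.
        children = [child for child in children if acceptable(child, setting)][:1]
    for child in children:
        collect(child, setting, rendered)


def rendered_media(smil_text: str, captions_on: bool) -> List[str]:
    """Return the src of every media object the user's caption setting selects."""
    rendered: List[str] = []
    collect(ET.fromstring(smil_text.strip()), "on" if captions_on else "off", rendered)
    return rendered


print(rendered_media(SMIL, captions_on=True))
print(rendered_media(SMIL, captions_on=False))
```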

- 2.7 For author-identified but unsupported natural languages, allow the user to request notification of language changes in content. [Priority 3]

(Checkpoint 2.7)

Techniques:

- A user agent should treat the natural language of content as part of context information. When the language changes, the user agent should either render the content in the supported natural language or notify the user of the language change (if configured for notification). Rendering could involve speaking in the designated natural language in the case of an audio browser or screen reader. If the natural language is not supported, the language change notification could be spoken in the default language by a screen reader or audio browser.

- Switching natural languages for blocks of content may be more helpful than switching for short phrases. In some language combinations (e.g., Japanese being the primary and English being the secondary or quoted language), short foreign language phrases are often well integrated in the primary language. Dynamic switching for these short phrases may make the content sound unnatural and possibly harder to understand.

- Refer to techniques for generated content, which may be used to insert text to indicate a language change.

- Refer to techniques for synthesized speech.

- Refer to checkpoint 2.7 and checkpoint 5.5.

- For information on language codes, refer to [ISO639].

- If users do not want to see or hear blocks of content in another natural language, allow the user to hide that content (e.g., with style sheets).

- Refer to "Character Model for the World Wide Web" [CHARMOD]. It contains basic definitions and models, specifications to be used by other specifications or directly by implementations, and explanatory material. In particular, this document addresses early uniform normalization, string identity matching, string indexing, and conventions for URIs.

- Implement content negotiation so that users may specify language preferences. Alternatively, allow the user to choose a resource when several are available in different languages.

- Use an appropriate glyph set when rendering visually and an appropriate voice set when rendering as speech.

- Render characters with the appropriate directionality. Refer to the "dir" attribute and the BDO element in HTML 4.01 ([HTML4], sections 8.2 and 8.2.4 respectively). Refer also to the Unicode standard [UNICODE].

- A user agent may not be able to render all characters in a document meaningfully, for instance, because the user agent lacks a suitable font, a character has a value that may not be expressed in the user agent's internal character encoding, etc. In this case, section 5.4 of HTML 4.01 [HTML4] recommends the following for undisplayable characters:

  - Adopt a clearly visible (or audible), but unobtrusive mechanism to alert the user of missing resources.

  - If missing characters are presented using their numeric representation, use the hexadecimal (not decimal) form since this is the form used in character set standards.
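Notification of changes to an unsupported natural language can be sketched with Python's standard html.parser module by tracking the "lang" attribute as elements open and close. The set of supported languages, the message wording, and the simplification that every non-void element has an end tag are assumptions of this sketch; only the primary language subtag (e.g., "fr" in "fr-CA") is compared.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple

SUPPORTED_LANGUAGES = {"en"}  # hypothetical: languages the speech synthesizer supports
VOID_ELEMENTS = {"area", "base", "br", "col", "hr", "img", "input", "link", "meta", "param"}


class LanguageChangeNotifier(HTMLParser):
    """Record a notification whenever content switches to an unsupported language."""

    def __init__(self, default_language: str = "en") -> None:
        super().__init__()
        self.stack: List[str] = [default_language]
        self.notifications: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag in VOID_ELEMENTS:
            return
        # An element without "lang" inherits the language of its parent.
        lang = dict(attrs).get("lang") or self.stack[-1]
        if lang != self.stack[-1]:
            primary = lang.split("-")[0].lower()
            if primary not in SUPPORTED_LANGUAGES:
                self.notifications.append(f"Language changes to {lang}")
        self.stack.append(lang)

    def handle_endtag(self, tag: str) -> None:
        if tag not in VOID_ELEMENTS and len(self.stack) > 1:
            self.stack.pop()


notifier = LanguageChangeNotifier()
notifier.feed('<p>The French word <span lang="fr">bonjour</span> means <em>hello</em>.</p>')
print(notifier.notifications)
```

A screen reader could speak each notification in the default language before the foreign-language passage, as described in the first technique above.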

In addition to the techniques below, refer also to the section on user control of style.

Checkpoints for content accessibility:

- 3.1 Allow the user to turn on and off rendering of background images. [Priority 1]

(Checkpoint 3.1)

Techniques:


- 3.2 Allow the user to turn on and off rendering of background audio. [Priority 1]

(Checkpoint 3.2)

Techniques:

- Allow the user to turn off background audio through the user agent user interface.

- Authors sometimes specify background sounds with the BGSOUND element. Note. This element is not part of HTML 4.01 [HTML4].

- In CSS 2, background sounds may be turned on/off with the 'play-during' property ([CSS2], section 19.6).

- 3.3 Allow the user to turn on and off rendering of video. [Priority 1]

(Checkpoint 3.3)

Techniques:

- Allow the user to turn off video through the user agent user interface. Render a still image in its place.

- Support the 'display' property in CSS ([CSS2], section 9.2.5).

- Allow the user to hide a video presentation from view, even though it continues to play in the background.

- 3.4 Allow the user to turn on and off rendering of audio. [Priority 1]

(Checkpoint 3.4)

Techniques:


- 3.5 Allow the user to turn on and off animated or blinking text. [Priority 1]

(Checkpoint 3.5)

Techniques:

- Allow the user to turn off animated or blinking text through the user agent user interface (e.g., by pressing the Escape key to stop animations). Render static text in place of blinking text.

- Animated or blinking text may be specified with:

  - The BLINK element. Note. The BLINK element is not defined by a W3C specification.

  - The MARQUEE element. Note. The MARQUEE element is not defined by a W3C specification.

  - The 'blink' value of the 'text-decoration' property in CSS ([CSS2], section 16.3.1).

- 3.6 Allow the user to turn on and off animations and blinking images. [Priority 1]

(Checkpoint 3.6)

Techniques:

- Allow the user to turn off animations and blinking images through the user agent user interface (e.g., by pressing the Escape key to stop animations). Render a still image in its place.

- 3.7 Allow the user to turn on and off support for scripts and applets. [Priority 1]

(Checkpoint 3.7)

Note. This is particularly important for scripts that cause the screen to flicker, since people with photosensitive epilepsy can have seizures triggered by flickering or flashing, particularly in the 4 to 59 flashes per second (Hertz) range.

Techniques:

- Peak sensitivity to flickering or flashing occurs at 20 Hertz.

- Refer to the section on script techniques.

- 3.8 For automatic content changes specified by the author (e.g., redirection and content refresh), allow the user to slow the rate of change. [Priority 2]

(Checkpoint 3.8)

Techniques:

-

- Alert the users to pages that refresh

automatically

and allow them to specify a refresh rate.

- Allow the user to slow content refresh

to once per 10 minutes.

- Allow the user to

stop automatic refresh, but indicate that

content needs refreshing and allow the user

to refresh the content by activating a button or link.

Or, prompt the user and ask whether to continue with forwards.

- Refer to the HTTP 1.1 specification [RFC2616] for

information about redirection mechanisms.

- Some HTML authors create a refresh effect by

using a META element with

http-equiv="refresh" and the refresh rate specified

in seconds by the "content" attribute.
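As an illustration only, the following Python sketch shows how a user agent might detect a META refresh and apply a user-configured minimum interval. It assumes the widely deployed "content" syntax of a delay in seconds, optionally followed by "; URL=...", which HTML 4.01 does not formally define. The names RefreshFinder, parse_refresh, and effective_delay, and the 600-second default (the "once every 10 minutes" example above), are hypothetical.

```python
import re
from html.parser import HTMLParser

# Assumed syntax: "<seconds>" or "<seconds>; URL=<uri>" (a comma is also accepted).
_REFRESH_RE = re.compile(r"\s*(\d+)\s*(?:[;,]\s*(?:url\s*=\s*)?(.*?))?\s*$", re.IGNORECASE)


def parse_refresh(content):
    """Return (delay_seconds, url_or_None), or None if content is not understood."""
    m = _REFRESH_RE.match(content)
    if not m:
        return None
    url = (m.group(2) or "").strip("'\"") or None
    return int(m.group(1)), url


class RefreshFinder(HTMLParser):
    """Collects META http-equiv="refresh" directives found in an HTML document."""

    def __init__(self):
        super().__init__()
        self.refreshes = []

    def handle_starttag(self, tag, attrs):
        # html.parser reports tag and attribute names in lower case.
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("http-equiv") or "").lower() != "refresh":
            return
        parsed = parse_refresh(attrs.get("content") or "")
        if parsed is not None:
            self.refreshes.append(parsed)


def effective_delay(author_delay, min_interval=600):
    """Seconds to wait before refreshing, never less than the user's minimum.

    min_interval=None stands for "automatic refresh turned off": the user agent
    should then indicate that content needs refreshing instead of reloading.
    """
    if min_interval is None:
        return None
    return max(author_delay, min_interval)
```

With these defaults, a page declaring content="5; URL=next.html" would be forwarded after 600 seconds rather than 5, unless the user has turned automatic refresh off.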

- 3.9 Allow the user to turn on and off rendering of images. [Priority 3]

(Checkpoint 3.9)

Techniques:

-

- Provide a simple command that allows users to turn on/off the

rendering of images on a page. When images are turned off,

render any associated equivalents.

- Refer to techniques for checkpoint 3.1.

In addition to the techniques below, refer also to the section on

user control of style.

Checkpoints for fonts and colors:

- 4.1 Allow the user to configure the size of text. [Priority 1]

(Checkpoint 4.1)

-

For example, allow the user to specify a font size directly through the user agent user interface or in a user style sheet.

Or, allow the user to zoom or magnify content.

Techniques:

-

- Inherit text size information from user preferences

specified for the operating system.

- Use operating system magnification features.

- Support the

'font-size' property in CSS ([CSS2], section 15.2.4).

- Allow the user to override author-specified font sizes.

- When scaling text, maintain size relationships among

text of different sizes.
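One way to keep size relationships intact is to multiply every font size by a single common factor, as in the Python sketch below. The function name, the use of points, and the optional minimum size are assumptions for illustration. When a minimum is set, the sketch raises the common factor rather than clamping only the smallest sizes, since clamping would flatten the difference between, for example, footnotes and body text.

```python
def scale_font_sizes(sizes_pt, user_factor=1.0, minimum_pt=None):
    """Scale all font sizes by one common factor so that their ratios are preserved.

    sizes_pt: font sizes (in points) used on the page.
    user_factor: the user's requested magnification.
    minimum_pt: optional smallest size the user can read; if set, the common
    factor is increased until the smallest text reaches it.
    """
    if not sizes_pt:
        return []
    factor = user_factor
    if minimum_pt is not None:
        factor = max(factor, minimum_pt / min(sizes_pt))
    return [size * factor for size in sizes_pt]
```

For example, scaling sizes of 8, 12, and 24 points with a minimum of 16 points yields 16, 24, and 48 points, so headings remain twice the size of body text.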

- 4.2 Allow the user to configure font family. [Priority 1]

(Checkpoint 4.2)

Techniques:

-

- Inherit font family information from user preferences

specified for the operating system.

- Support the

'font-family' property in CSS ([CSS2], section 15.2.2).

- Allow the user to override author-specified font families.

- 4.3 Allow the user to configure foreground color. [Priority 1]

(Checkpoint 4.3)

Techniques:

-

- Inherit foreground color information from user preferences

specified for the operating system.

- Support the

'color'

and

'border-color'

properties in CSS 2 ([CSS2], sections 14.1 and 8.5.2, respectively).

- Allow the user to specify minimal contrast between foreground

and background colors, adjusting colors dynamically to meet those

requirements.

- Allow the user to override author-specified foreground colors.
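A minimal contrast requirement might be implemented as in the following Python sketch. This draft does not define a contrast measure, so the sketch substitutes the relative luminance and contrast ratio formulas later published in WCAG 2.0; the default minimum of 4.5:1, the number of steps, and the function names are assumptions. When the author's colors fall short, the foreground is blended toward black or white (whichever contrasts more with the background) until the minimum is met.

```python
def _linear(channel):
    """Convert an 8-bit sRGB channel value to linear light."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg, bg):
    """Ratio between 1 (no contrast) and 21 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def enforce_min_contrast(fg, bg, minimum=4.5, steps=20):
    """Return a foreground color meeting `minimum` against bg, changing fg in small steps."""
    if contrast_ratio(fg, bg) >= minimum:
        return fg
    black, white = (0, 0, 0), (255, 255, 255)
    target = black if contrast_ratio(black, bg) >= contrast_ratio(white, bg) else white
    for i in range(1, steps + 1):
        t = i / steps
        candidate = tuple(round(f + t * (g - f)) for f, g in zip(fg, target))
        if contrast_ratio(candidate, bg) >= minimum:
            return candidate
    return target  # best available; the minimum may be unreachable for this background
```

A user agent would apply this after the cascade, each time a foreground/background pair is rendered.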

- 4.4 Allow the user to configure background color. [Priority 1]

(Checkpoint 4.4)

Techniques:

-

- Inherit background color information from user preferences

specified for the operating system.

- Support the

'background-color'

property (and other background properties) in CSS 2 ([CSS2], section 14.2.1).

- Allow the user to override author-specified background colors.

Checkpoints for multimedia and audio presentations:

- 4.5 Allow the user to slow the presentation rate of audio, video, and animations. [Priority 1]

(Checkpoint 4.5)

Techniques:

-

- Allowing the user to slow the presentation of video, animations, and audio will benefit individuals with specific learning disabilities, cognitive deficits, or those with normal cognition but newly acquired sensory limitations (such as a person who is newly blind and learning to use a screen reader). Slower presentation also helps individuals who are just beginning to learn a natural language.

- When changing the rate of audio, avoid pitch distortion.

- Some formats do not allow changes in playback rate.

- 4.6 Allow the user to start, stop, pause, advance, and rewind audio, video, and animations. [Priority 1]

(Checkpoint 4.6)

Techniques:

-

- Allow the user to advance or rewind the presentation

in increments. This is particularly valuable to users

with physical disabilities who may not have fine control

over advance and rewind functionalities. Allow users to

configure the size of the increments.

- If buttons are used to control advance and rewind, make the advance/rewind distance proportional to how long the user holds the button down. After a certain delay, accelerate the advance/rewind.

- There are well-known techniques for changing audio

speed without introducing distortion.

- Home Page Reader [HPR] lets users insert bookmarks

in presentations.
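The accelerating advance/rewind behavior described above can be sketched in Python as follows. All parameter names and default values (a 5-second step, acceleration after 1 second, a fourfold rate) are hypothetical; in keeping with the technique above, they should be user-configurable.

```python
def seek_distance(hold_seconds, step=5.0, accel_delay=1.0, accel_factor=4.0):
    """Seconds of media to skip for a button held down for hold_seconds.

    Before accel_delay, the distance grows by `step` per second held; after it,
    that rate is multiplied by accel_factor. A quick press still moves one step.
    """
    hold = max(0.0, hold_seconds)
    normal = min(hold, accel_delay)
    accelerated = max(0.0, hold - accel_delay)
    distance = step * normal + step * accel_factor * accelerated
    return max(step, distance)


def apply_seek(position, duration, hold_seconds, forward=True, **config):
    """New playback position, clamped to the start and end of the presentation."""
    distance = seek_distance(hold_seconds, **config)
    target = position + distance if forward else position - distance
    return min(max(target, 0.0), duration)
```

With these defaults, holding the button for 2 seconds skips 25 seconds of media, while a single press skips 5.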

- 4.7 Allow the user to configure the position of text transcripts, collated text transcripts, and captions on graphical displays. [Priority 1]

(Checkpoint 4.7)

Techniques:

-

- Support the CSS 2

'position' property ([CSS2], section 9.3.1).

- Allow the user to choose whether captions appear at the bottom or top

of the video area or in other positions. Currently authors may place

captions overlying the video or in a separate box. Captions may block

the user's view of other information in the video or on other parts

of the screen, making it necessary to move the captions in order to

view all content at once. In addition, some users may find captions

easier to read if they can place them in a location best suited to

their reading style.

- Allow users to configure a general preference for caption position and to fine-tune specific cases.

For example, the user may want the captions to be in front of

and below the rest of the presentation.

- Allow the user to drag and drop the

captions to a place on the screen like any other viewport.

To ensure device-independence, allow the user to enter the screen

coordinates of one corner of the caption viewport.

- It may be easiest to allow the user to position all parts

of a presentation rather than trying to identify captions specifically.

- Do not require users to edit the source code of the

presentation to achieve the desired effect.
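The following Python sketch combines a general caption preference with explicit coordinates typed in by the user, keeping the caption viewport on screen. The Rect type, the function name, and the choice to place captions just outside the top or bottom edge of the video (so that they do not cover it) are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    x: int
    y: int
    width: int
    height: int


def place_captions(video, screen, caption_height, preference="bottom", corner=None):
    """Return the rectangle of the caption viewport.

    preference: "bottom" or "top", the user's general preference.
    corner: optional (x, y) of the top-left corner entered by the user; it
    overrides the preference and works without a pointing device.
    """
    width = min(video.width, screen.width)
    if corner is not None:
        x, y = corner
    elif preference == "top":
        x, y = video.x, video.y - caption_height      # just above the video
    else:
        x, y = video.x, video.y + video.height        # just below the video
    # Keep the whole caption viewport on the screen.
    x = min(max(x, screen.x), screen.x + screen.width - width)
    y = min(max(y, screen.y), screen.y + screen.height - caption_height)
    return Rect(x, y, width, caption_height)
```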

- 4.8 Allow the user to configure the audio volume. [Priority 2]

(Checkpoint 4.8)

Techniques:

-

- Support the CSS 2

'volume' property ([CSS2], section 19.2).

- Allow the user to configure the volume through the operating system's volume settings.

Checkpoints for synthesized speech:

Refer also to techniques for

synthesized speech.

- 4.9 Allow the user to configure synthesized speech playback rate. [Priority 1]

(Checkpoint 4.9)

Techniques:

-

- 4.10 Allow the user to configure synthesized speech volume. [Priority 1]

(Checkpoint 4.10)

Techniques:

-

- 4.11 Allow the user to configure synthesized speech pitch, gender, and other articulation characteristics. [Priority 2]

(Checkpoint 4.11)

Techniques:

-

Checkpoints for user interface accessibility:

- 4.12 Allow the user to select from available author and user style sheets or to ignore them. [Priority 1]

(Checkpoint 4.12)

- Note. By definition

the browser's default style sheet is always present,

but may be overridden by author or user styles.

Techniques:

-
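A user agent that lets users ignore author or user style sheets still has to cascade whatever remains. The Python sketch below applies the origin and importance ordering of CSS 2 ([CSS2], section 6.4.1), in which user "!important" rules override author "!important" rules, and the default style sheet always applies. It deliberately ignores selector specificity, and the data layout and function name are hypothetical.

```python
# Origin/importance ranks from CSS 2, section 6.4.1, lowest to highest.
PRECEDENCE = [
    ("user-agent", False),
    ("user", False),
    ("author", False),
    ("user-agent", True),
    ("author", True),
    ("user", True),
]


def cascade(declarations, use_author=True, use_user=True):
    """Return {property: winning value}.

    declarations: (origin, property, value, important) tuples in the order they
    were specified. use_author / use_user reflect the user's choice to apply or
    ignore those style sheets. Selector specificity is not modeled.
    """
    enabled = {"user-agent": True, "author": use_author, "user": use_user}
    winners, best = {}, {}
    for origin, prop, value, important in declarations:
        if not enabled[origin]:
            continue
        rank = PRECEDENCE.index((origin, important))
        # ">=" lets a later declaration of equal weight replace an earlier one.
        if rank >= best.get(prop, -1):
            best[prop] = rank
            winners[prop] = value
    return winners
```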

- 4.13 Allow the user to configure how the selection is highlighted (e.g., foreground and background color). [Priority 1]

(Checkpoint 4.13)

Techniques:

-

- 4.14 Allow the user to configure how the content focus is highlighted (e.g., foreground and background color). [Priority 1]

(Checkpoint 4.14)

Techniques:

-

- Support the CSS 2 ':focus' pseudo-class and the 'outline' properties for dynamic outlines and focus ([CSS2], sections 5.11.3 and 18.4.1, respectively).

For example, the following rule will cause links with focus to

appear with a blue background and yellow text.

A:focus { background: blue; color: yellow }

The following rule will cause TEXTAREA elements

with focus to appear with a particular focus outline:

TEXTAREA:focus { outline: thick black solid }

- Inherit focus highlighting preferences from the user's operating system settings.

- Test the user agent to ensure that

individuals who have low vision and use screen

magnification software are able to follow highlighted item(s).

- 4.15 Allow the user to configure how the focus changes. [Priority 2]

(Checkpoint 4.15)

-

For instance, allow the user to require

that user interface focus

not move automatically to a newly

opened viewport.

Techniques:

-

- Allow the user to configure how

current focus changes

when a new viewport opens. For instance, the user

might choose between these two options:

- Do not change the focus when a window opens,

but notify the user (e.g., with a beep, flash, and text

message on the status bar). Allow the user to navigate

directly to the new window when they choose to.

- Change the focus when a window opens and use a subtle

alert (e.g., a beep, flash, and text message on the status

bar) to indicate that the focus has changed.

- If a new viewport or prompt appears but focus does not move to it, notify assistive technologies so that they may (discreetly) inform the user, allow querying, etc.
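The two focus options listed above could be modeled as a user preference, as in this Python sketch. The enumeration, the function, and the notify callback (standing in for the beep, flash, status bar message, and any notification sent to assistive technologies) are hypothetical.

```python
from enum import Enum


class FocusPolicy(Enum):
    KEEP_FOCUS_AND_NOTIFY = "keep"  # do not change focus; tell the user a window opened
    MOVE_FOCUS_AND_ALERT = "move"   # move focus; tell the user that focus changed


def on_viewport_opened(policy, current, new_viewport, notify):
    """Return the viewport that should have focus after new_viewport opens."""
    if policy is FocusPolicy.MOVE_FOCUS_AND_ALERT:
        notify(f"Focus moved to new window: {new_viewport}")
        return new_viewport
    notify(f"New window opened: {new_viewport} (focus unchanged)")
    return current
```

In either case the user is informed, so the choice only affects where focus ends up.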

- 4.16 Allow the user to configure viewports, prompts, and windows opened on user agent initiation. [Priority 2]

(Checkpoint 4.16)

-

For instance, allow the user to turn off viewport creation.

Refer also to checkpoint 5.7.

Techniques:

-

- For HTML [HTML4], allow the user to control the process of

opening a document in a new "target" frame or a viewport created by

author-supplied scripts. For example, for

target="_blank", open the window according

to the user's preference.

- For SMIL [SMIL], allow the user to control

viewports created with the "new" value of the "show" attribute.

- Allow users to turn off support for user agent initiated

viewports entirely.

- Prompt users before opening a viewport. For instance,

for user agents that support CSS 2 [CSS2], the following rule

will generate a message to the user at the beginning of

link text for links that are meant to open new windows when

followed:

A[target="_blank"]:before { content: "Open new window " }

- Allow users to configure the size or position of the viewport and to close the viewport (e.g., with the "back" functionality).
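The following Python sketch shows how a user preference might govern links that would create a viewport. The preference values, function names, and the ask_user callback (which would display the prompt) are hypothetical. Following HTML 4.01 (section 16.3), "_blank" and a target naming a frame that does not exist both create a new window, while "_self", "_parent", and "_top" reuse existing viewports.

```python
from enum import Enum


class NewWindowPreference(Enum):
    ALLOW = "allow"          # open new viewports as the author requested
    SAME_VIEWPORT = "same"   # never create one; load in the current viewport
    ASK = "ask"              # prompt the user each time


def opens_new_viewport(target, known_frames=()):
    """True if following a link with this HTML 4 target would create a viewport."""
    if not target:
        return False
    if target == "_blank":
        return True
    if target.startswith("_"):
        return False  # reserved names reuse an existing viewport
    return target not in known_frames  # an unknown frame name opens a new window


def should_create_viewport(target, preference, ask_user, known_frames=()):
    """Apply the user's preference; False means load in an existing viewport."""
    if not opens_new_viewport(target, known_frames):
        return False
    if preference is NewWindowPreference.ALLOW:
        return True
    if preference is NewWindowPreference.SAME_VIEWPORT:
        return False
    return bool(ask_user("This link opens a new window. Open it?"))
```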

Checkpoints for content accessibility:

- 5.1 Provide programmatic read access to HTML and XML content by conforming to the W3C Document Object Model (DOM) Level 2 Core and HTML modules and exporting the interfaces they define. [Priority 1]

(Checkpoint 5.1)

-

Note. These modules are defined in

DOM Level 2 [DOM2], chapters 1 and 2. Please refer to

that specification for information about which versions

of HTML and XML are supported and for the definition

of a "read-only" DOM. For content other than

HTML and XML, refer to checkpoint 5.3. This checkpoint

is an important special case of checkpoint 2.1.

Techniques:

-

- Information of particular importance to accessibility

that must be available through the document object model includes:

- Content, including equivalent alternatives.

- The document structure (for navigation, creation

of alternative views).

- Style sheet information (for user control of styles).

- Script and event handlers (for device-independent

control of behavior).

- Assistive technologies also require information about browser

selection and

focus mechanisms, which may not be

available through the W3C DOM.

- The W3C DOM is designed to be used on a server as well as a client,

and so does not address some user interface-specific information

(e.g., screen coordinates).

- Refer to the appendix on

loading assistive technologies for DOM access.

- For information about rapid access to Internet Explorer's [IE]

DOM through COM, refer to [BHO].

- Refer to the Java Weblets implementation of the DOM [JAVAWEBLET].
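To illustrate the kind of read access involved, the following Python sketch uses the standard library's xml.dom.minidom, which implements much of the DOM Core interface (getElementsByTagName, getAttribute, hasAttribute, childNodes) but not the DOM Level 2 HTML module, on a small well-formed XHTML-style fragment. It retrieves two items from the list above: text equivalents and document structure. The function names are hypothetical; a real assistive technology would reach the user agent's DOM through an exported interface rather than by parsing the document itself.

```python
from xml.dom.minidom import parseString

HEADINGS = {f"h{i}" for i in range(1, 7)}


def text_content(node):
    """Concatenate all text below node."""
    if node.nodeType == node.TEXT_NODE:
        return node.data
    return "".join(text_content(child) for child in node.childNodes)


def text_equivalents(doc):
    """Map each IMG's src to its "alt" text, or None when no equivalent exists."""
    result = {}
    for img in doc.getElementsByTagName("img"):
        alt = img.getAttribute("alt") if img.hasAttribute("alt") else None
        result[img.getAttribute("src")] = alt
    return result


def heading_outline(doc):
    """Return (level, text) for each h1-h6 in document order."""
    outline = []

    def walk(node):
        for child in node.childNodes:
            if child.nodeType == child.ELEMENT_NODE:
                if child.tagName.lower() in HEADINGS:
                    outline.append((int(child.tagName[1]), text_content(child).strip()))
                walk(child)

    walk(doc.documentElement)
    return outline
```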

- 5.2 If the user can modify HTML and XML content through the user interface, provide the same functionality programmatically by conforming to the W3C Document Object Model (DOM) Level 2 Core and HTML modules and exporting the interfaces they define. [Priority 1]

(Checkpoint 5.2)

-

For example, if the user interface allows users to

complete HTML forms, this must also be possible

through the DOM APIs.

Note. These modules are defined in

DOM Level 2 [DOM2], chapters 1 and 2.

Please refer to DOM

Level 2 [DOM2] for information about which versions

of HTML and XML are supported. For content other than

HTML and XML, refer to checkpoint 5.3. This checkpoint

is an important special case of checkpoint 2.1.

Techniques:

-
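Write access can be illustrated in the same way. In this Python sketch, a hypothetical fill_text_field function completes a form control through DOM calls, as a user would through the interface. Because xml.dom.minidom implements only Core interfaces, the sketch sets the "value" attribute with setAttribute; an implementation of the DOM Level 2 HTML module would instead expose the "value" property of HTMLInputElement.

```python
from xml.dom.minidom import parseString


def fill_text_field(doc, name, value):
    """Set the value of the INPUT named `name`; return True if it was found."""
    for control in doc.getElementsByTagName("input"):
        if control.getAttribute("name") == name:
            # Core-level stand-in for HTMLInputElement.value.
            control.setAttribute("value", value)
            return True
    return False
```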

- 5.3 For markup languages other than HTML and XML, provide programmatic access to content using standard APIs (e.g., platform-independent APIs and standard APIs for the operating system). [Priority 1]

(Checkpoint 5.3)

-

Note. This checkpoint addresses

content not covered by

checkpoint 5.1 and checkpoint 5.2. This checkpoint

is an important special case of checkpoint 2.1.

Techniques:

-

- 5.4 Provide programmatic access to Cascading Style Sheets (CSS) by conforming to the W3C Document Object Model (DOM) Level 2 CSS module and exporting the interfaces it defines. [Priority 3]

(Checkpoint 5.4)

-

Note. This module is defined in

DOM Level 2 [DOM2], chapter 5. Please refer to

that specification for information about which versions

of CSS are supported. This checkpoint

is an important special case of checkpoint 2.1.

Techniques:

-

Checkpoints for user interface accessibility:

- 5.5 Provide programmatic read and write access to user agent user interface controls using standard APIs (e.g., platform-independent APIs such as the W3C DOM, standard APIs for the operating system, and conventions for programming languages, plug-ins, virtual machine environments, etc.) [Priority 1]

(Checkpoint 5.5)

-

For example, ensure that assistive technologies have access to information about the

user agent's current

input configuration so that

they can trigger functionalities through keyboard events, mouse

events, etc.

Techniques:

-

- Some operating system and programming language APIs support accessibility by providing a bridge

between the standard user interface supported by the operating system

and alternative user interfaces developed by assistive technologies. User agents that implement these APIs are

generally more compatible with assistive technologies and provide

accessibility at no extra cost. Some public

APIs that promote accessibility include:

- Microsoft Active Accessibility ([MSAA])

in Windows 95/98/NT versions.

- Sun Microsystems Java Accessibility API ([JAVAAPI]) in Java

code. If the user agent supports Java applets and provides a Java

Virtual Machine to run them, the user agent should support the proper

loading and operation of a Java native assistive technology. This

assistive technology can provide access to the applet as defined by

Java accessibility standards.

- Use standard user interface

controls. Third-party assistive technology developers

are more likely to be able to access standard controls than custom

controls. If you must use

custom controls, review them for

accessibility and compatibility with third-party assistive

technology. Ensure that they provide

accessibility information through an API as is done for the

standard controls.

- Make use of operating system level features.

See the appendix of

accessibility features for some common operating systems.

- Inherit operating system settings related to

accessibility (e.g., for fonts, colors,

natural language

preferences, input configurations, etc.).

-

Write output to and take input from standard system APIs rather than directly from hardware controls.

This will enable the I/O to be redirected from or to

assistive technology devices - for example, screen readers and Braille

displays often redirect output (or copy it) to a serial port, while

many devices provide character input, or mimic mouse

functionality. The use of generic APIs makes this feasible in a way

that allows for interoperability of the assistive technology with a

range of applications.

- For information about rapid access to Internet Explorer's [IE]

DOM through COM, refer to [BHO].

- 5.6 Implement selection, content focus, and user interface focus mechanisms. [Priority 1]

(Checkpoint 5.6)

- Refer also to checkpoint 7.1 and checkpoint 5.5.

Note. This checkpoint is an important special case of

checkpoint 5.5.

Techniques:

-

- 5.7 Provide programmatic notification of changes to content and user interface controls (including selection, content focus, and user interface focus). [Priority 1]

(Checkpoint 5.7)

Techniques:

-

- Refer to "mutation events" in DOM Level 2

[DOM2]. This module of DOM 2 allows assistive technologies

to be informed of changes to the document tree.

- Allow assistive technologies to register for only the events they need, rather than requiring them to receive all events.

- Refer also to information about monitoring HTML events through the document object model in Internet Explorer [IE].

- Refer also to checkpoint 5.5.
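The following Python sketch illustrates selective registration: an assistive technology subscribes only to the change notifications it needs, and the user agent calls only those listeners. The class and the "focus-changed" event name are hypothetical; "DOMNodeInserted" is one of the DOM Level 2 mutation event types.

```python
from collections import defaultdict


class ChangeNotifier:
    """Lets assistive technologies subscribe to just the change events they need."""

    def __init__(self):
        self._listeners = defaultdict(list)  # event type -> callbacks

    def register(self, event_type, callback):
        self._listeners[event_type].append(callback)

    def unregister(self, event_type, callback):
        self._listeners[event_type].remove(callback)

    def dispatch(self, event_type, **details):
        # Only listeners registered for this event type are called; a copy of
        # the list is iterated so callbacks may unregister themselves safely.
        for callback in list(self._listeners.get(event_type, ())):
            callback(event_type, details)
```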

- 5.8 Ensure that programmatic exchanges proceed in a timely manner. [Priority 2]

(Checkpoint 5.8)

-

For example, the programmatic exchange of information required by

other checkpoints in this document must be efficient enough to

prevent information loss, a risk when changes to content or user

interface occur more quickly than

the communication of those changes. The techniques for this checkpoint

explain how developers can reduce communication delays, e.g.,

to ensure that assistive technologies have timely access to

the document object model

and other information needed for

accessibility.

Techniques:

-

- 5.9 Follow operating system conventions and accessibility settings. In particular, follow conventions for user interface design, default keyboard configuration, product installation, and documentation. [Priority 2]

(Checkpoint 5.9)

-

Refer also to checkpoint 10.2.

Techniques:

-

- Refer to techniques for checkpoint 1.2.

- Refer to techniques for checkpoint 5.5.

- Refer to techniques for checkpoint 10.2.

- Follow operating system and application

environment (e.g., Java) conventions for loading assistive

technologies. Refer to

the appendix on loading

assistive technologies for DOM access for information

about how an assistive technology

developer can load its software into a Java Virtual Machine.

- Evaluate the standard interface controls on the target

platform against any built-in operating system accessibility

functions and be sure the user agent operates

properly with all these functions.

For example, be attentive to the following features:

- Microsoft Windows supports an accessibility function called

"High Contrast". Standard window classes and controls automatically

support this setting. However, applications created with custom

classes or controls must understand how to work with the "GetSysColor"

API to ensure compatibility with High Contrast.

- Apple Macintosh supports an accessibility function called "Sticky Keys".

Sticky Keys operate with keys the operating system recognizes

as modifier keys, and therefore a custom control should not

attempt to define a new modifier key.

- Follow accessibility guidelines for specific platforms:

-

"Macintosh Human Interface Guidelines" [APPLE-HI]

-

"IBM Guidelines for Writing Accessible Applications Using 100% Pure

Java"

[JAVA-ACCESS].

-

"An ICE Rendezvous Mechanism for X Window System Clients"

[ICE-RAP].

-

"Information for Developers About Microsoft Active Accessibility"

[MSAA].

-

"The Inter-Client communication conventions manual"

[ICCCM].

-

"Lotus Notes accessibility guidelines"

[NOTES-ACCESS].

-

"Java accessibility guidelines and checklist"

[JAVA-CHECKLIST].

-

"The Java Tutorial. Trail: Creating a GUI with JFC/Swing"

[JAVA-TUT].

-

"The Microsoft Windows Guidelines for Accessible Software Design"

[MS-SOFTWARE].

- Follow general guidelines for producing accessible software:

Checkpoints for content accessibility:

- 6.1 Implement the accessibility features of supported specifications (markup languages, style sheet languages, metadata languages, graphics formats, etc.). [Priority 1]

(Checkpoint 6.1)

Techniques:

-

- Features that are known to promote

accessibility should be made obvious to users and easy to find

in the user interface and in documentation.

- The accessibility features of Cascading Style Sheets

([CSS1], [CSS2]) are described

in "Accessibility Features of CSS" [CSS-ACCESS].

Note that CSS 2 includes properties for

configuring synthesized speech styles.

- The accessibility features of SMIL 1.0

[SMIL] are described in "Accessibility Features of SMIL"

[SMIL-ACCESS].

- The following is a list of accessibility features of

HTML 4.01 [HTML4] in addition

to those described in techniques for

checkpoint 2.1:

- The

CAPTION element (section 11.2.2) for rich table captions.

- Table elements

THEAD,

TBODY, and TFOOT (section 11.2.3),

COLGROUP and

COL (section 11.2.4) that

group table rows and columns into meaningful sections.

- Attributes

"scope",

"headers",

and "axis" (section 11.2.6)

that non-visual browsers may use to render a table in a linear

fashion, based on the semantically significant labels.

- The

"tabindex" attribute (section 17.11.1) for assigning the order of keyboard navigation within a

document.

- The

"accesskey" attribute (section 17.11.2) for assigning keyboard commands to

active components such as links and form

controls.

- 6.2 Conform to W3C Recommendations when they are appropriate for a task. [Priority 2]

(Checkpoint 6.2)

- For instance, for markup, implement

HTML 4.01 [HTML4] or

XML 1.0 [XML]. For style sheets, implement

CSS ([CSS1], [CSS2]). For mathematics, implement

MathML [MATHML]. For synchronized multimedia,

implement SMIL 1.0 [SMIL]. For access to the structure of

HTML or XML documents, implement the DOM [DOM2].

Refer also to guideline 5.

- Note. For reasons

of backward compatibility, user agents should continue to support

deprecated features of specifications. The current guidelines

refer to some deprecated language features that do not necessarily

promote accessibility but are widely deployed. Information about

deprecated language features is generally part of

the language's specification.

Techniques:

-

- The requirement of this checkpoint

is to implement at least one W3C Recommendation that

is available and appropriate for a particular task.

For example, user agents would satisfy this checkpoint

by implementing the Portable

Network Graphics 1.0 specification [PNG] for raster images.

In addition, user agents may implement other image formats

such as JPEG, GIF, etc.

- If more than one version or level of

a W3C Recommendation is appropriate for a particular task, user

agents are encouraged to implement the latest version.

- Specifications that become W3C Recommendations after a user agent's

development cycles permit input are not considered "available" in time

for that version of the user agent.

- Refer to the list of

W3C technical reports. Each specification

defines what conformance means for that specification.

- W3C encourages the public to review and comment on specifications

at all times during their development, from Working

Draft to Candidate Recommendation (for implementation

experience) to Proposed Recommendation. However, readers

should remain aware that a W3C specification does not

represent consensus in the Working Group,

Web Community, and W3C Membership until it becomes

a Recommendation.

- Use the W3C validation services:

-

Checkpoints for user interface accessibility:

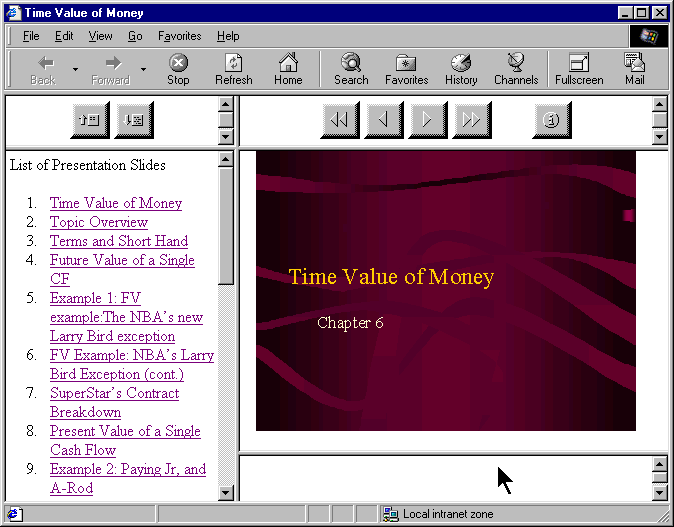

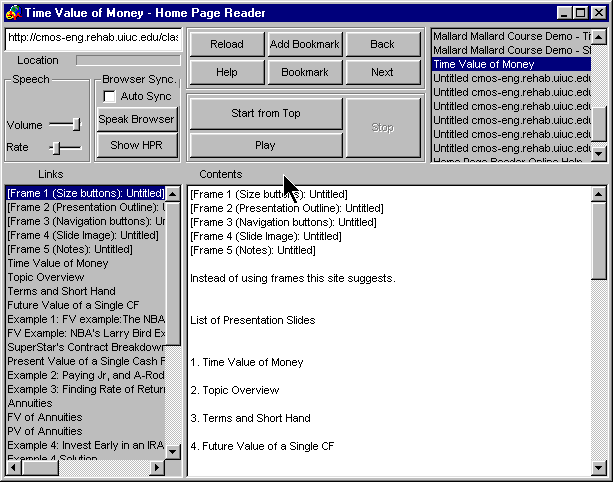

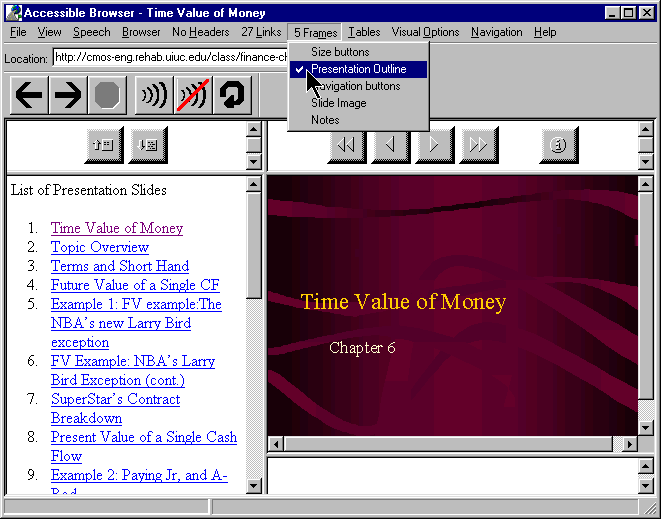

- 7.1 Allow the user to navigate viewports (including frames). [Priority 1]

(Checkpoint 7.1)

-

Note.

For example, when all frames of a frameset are displayed

side-by-side, allow the user to navigate among them

with the keyboard. Or, when frames are accessed or viewed one at a time

(e.g., by a text browser or speech synthesizer), provide

a list of links to other frames.

Navigating into a viewport makes it the

current viewport.

Techniques:

-

- Refer to the frame techniques.

Some operating systems provide a means to navigate among all open

windows using multiple input devices (e.g., keyboard and mouse). This

technique would suffice for switching among user agent viewports that

are separate windows. However, user agents may also provide a

mechanism to shift the

user interface focus

among user agent windows, independent of

the standard operating system mechanism.

- 7.2 For user agents that offer a browsing history mechanism, when the user returns to a previous viewport, restore the point of regard in the viewport. [Priority 1]

(Checkpoint 7.2)

- For example, when users navigate

"back" and "forth" among viewports, they should find the

viewport position where they last left it.

Techniques:

-

- If the user agent allows the user to browse

multimedia or audio presentations, when the user leaves one presentation

for another, pause the presentation.

When the user returns to a previous presentation,

allow the user to restart the presentation where it was paused

(i.e., return the point of regard

to the same place in space and time). Note.

This may be done for a presentation that is available "completely"

but not for a "live" stream or any part of a

presentation that continues to run in the background.

- When the user returns

to a page after following a link, restore content focus to that link.

- Refer to the HTTP 1.1 specification for

information about history mechanisms ([RFC2616], section 13.13).
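A history mechanism that restores the point of regard must save state when the user leaves a viewport, not only the address. The Python sketch below stores a scroll offset, the link that had content focus, and a paused media time for each history entry; the class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PointOfRegard:
    scroll_y: int = 0                   # vertical scroll offset, in pixels
    focused_link: Optional[str] = None  # link that had content focus when the user left
    media_time: Optional[float] = None  # pause position (seconds) of a presentation


class History:
    """Back/forward history that remembers the point of regard of every entry."""

    def __init__(self, first_url):
        self._entries = [[first_url, PointOfRegard()]]
        self._index = 0

    def navigate(self, url, leaving):
        """Follow a link: save where the user was, then discard forward entries."""
        self._entries[self._index][1] = leaving
        del self._entries[self._index + 1:]
        self._entries.append([url, PointOfRegard()])
        self._index += 1

    def _move(self, delta, leaving):
        new_index = self._index + delta
        if not 0 <= new_index < len(self._entries):
            return None
        self._entries[self._index][1] = leaving
        self._index = new_index
        url, state = self._entries[new_index]
        return url, state  # the caller restores scroll, focus, and media time

    def back(self, leaving):
        return self._move(-1, leaving)

    def forward(self, leaving):
        return self._move(1, leaving)
```

Because the saved state includes the link that was followed, returning to a page can also restore content focus to that link.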

- 7.3 Allow the user to navigate all active elements. [Priority 1]

(Checkpoint 7.3)

-

Navigation may include non-active

elements in addition to active elements.

Note. This checkpoint is an important special case of

checkpoint 7.6.

Techniques:

-

- Allow the user to sequentially navigate all active elements

using a single keystroke.

Many user agents today allow users to navigate sequentially

by repeating a key combination -- for example,

using the Tab key for forward navigation and Shift-Tab for

reverse navigation. Because the Tab key is typically on one

side of the keyboard while arrow keys are located on the other,

users should be allowed to configure the user agent so that

sequential navigation is possible with keys that are physically

closer to the arrow keys. Refer also to checkpoint 10.4.

- Provide other sequential navigation mechanisms

for particular element types or semantic units.

For example, "Find the next table" or "Find the previous form".

For more information about sequential navigation of form

controls

and form submission, refer to techniques for

[#info-form-submit].

- Maintain a logical

element navigation order. For instance, users may use the

keyboard to navigate among elements or element groups

using the arrow keys within a group of elements. One example

of a group of elements is a set of radio buttons. Users should

be able to navigate to the group of buttons, then be able to

select each button in the group. Similarly, allow users to

navigate from table to table, but also among the cells within

a given table (up, down, left, right, etc.).

- The default sequential navigation order should respect

the conventions of the

natural language

of the document.

Thus, for most left-to-right languages, the usual navigation

order is top-to-bottom and left-to-right.

For right-to-left languages, the order would be

top-to-bottom and right-to-left.

- Respect author-supplied information about navigation

order (e.g., the "tabindex" attribute in HTML 4 [HTML4], section 17.11.1). Allow

users to override the author-supplied navigation order (e.g., by

offering an alphabetized view of links or other orderings).

- Give users the option of navigating to and activating

a link, or just moving the

content focus

to the link. First-time users of a page may want

access to link text before deciding whether to follow the link

(activate). More experienced users of a page might prefer to follow

the link directly, without the intervening content focus step.

- In Java, a component is part of

the sequential navigation order when

added to a panel and its isFocusTraversable()

method returns true. A component can be removed from the navigation order

by extending the component, overriding this method, and

returning false.

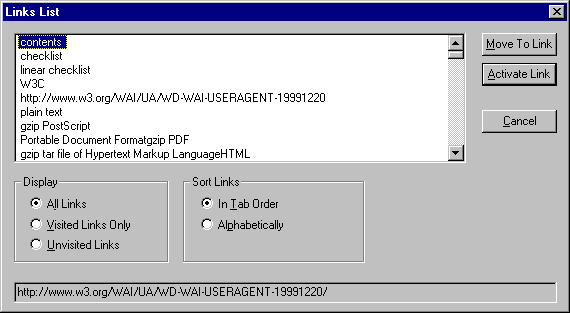

The following view from Jaws for Windows [JFW] allows users to

navigate to links in a document and activate them independently.

Users may also configure the user agent to navigate visited links,

unvisited links, or both. Users may also change the sequential

navigation order, sorting links alphabetically or leaving them

in the logical tabbing order.

- Excessive use of sequential navigation can reduce the usability of

software for both disabled and non-disabled users.

- Some useful types of direct navigation include:

navigation based on position (e.g., all links are numbered

by the user agent),

navigation based on element content (e.g., the

first letter of text content), direct navigation to a table cell

by its row/column position, and searching (e.g.,

based on form control text, associated labels, or

form control names).

- Document available direct navigation mechanisms.
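The default sequential navigation order that HTML 4.01 (section 17.11.1) defines for the "tabindex" attribute can be computed as in the following Python sketch. Elements with a positive tabindex come first, in increasing order, with ties in document order. Elements with no tabindex or a value of 0 follow, in document order. Disabled elements are skipped. The collector class and the labels it records are hypothetical, and the sketch ignores elements added by scripts after parsing.

```python
from html.parser import HTMLParser

# Elements that accept "tabindex" in HTML 4.01.
TABINDEX_ELEMENTS = {"a", "area", "button", "input", "object", "select", "textarea"}


class FocusableCollector(HTMLParser):
    def __init__(self):
        super().__init__()
        self.items = []  # (tabindex, document_position, label)

    def handle_starttag(self, tag, attrs):
        if tag not in TABINDEX_ELEMENTS:
            return
        attrs = dict(attrs)
        if "disabled" in attrs:
            return  # disabled elements do not participate in the tabbing order
        if tag == "a" and "href" not in attrs:
            return  # an anchor without href is not an active element
        try:
            index = int(attrs.get("tabindex") or 0)
        except ValueError:
            index = 0
        label = attrs.get("name") or attrs.get("href") or tag
        self.items.append((index, len(self.items), label))


def navigation_order(items):
    positive = sorted((i for i in items if i[0] > 0), key=lambda i: (i[0], i[1]))
    rest = [i for i in items if i[0] <= 0]  # already in document order
    return [label for _, _, label in positive + rest]
```

A user override, such as an alphabetized view of links, would simply sort the same collected items by a different key.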

- 7.4 Allow the user to choose to navigate only active elements. [Priority 2]

(Checkpoint 7.4)

Techniques:

-

- 7.5 Allow the user to search for rendered text content, including rendered text equivalents. [Priority 2]

(Checkpoint 7.5)

-

Note.

Use operating system conventions for marking

the result of a search (e.g.,

selection or content focus).

Techniques:

-

- Allow users to search for (human-readable) element content and

attribute values.

- Allow users to search forward and backward from the point

of regard, the beginning of the document, or the end of the document.

- Allow users to search the document source view.

- For forms, allow users to find

controls that must be changed

by the user before submitting the form.

Allow users to search on labels as well as content of some controls.

- Allow the user to search among just

text equivalents of other content.

- For multimedia presentations:

- Allow users to search and examine

time-dependent media elements and links in a time-independent manner.

For example, present a static list of time-dependent links.

- Allow users to find all media elements active at

a particular time in the presentation.

- Allow users to view a list of all media elements or

links of the presentations sorted by start or end time or alphabetically.

- For frames, allow users to search for content in all frames,

without having to be in a particular frame.

- It may be confusing to allow users to search for text

content that is not rendered (and thus that they have not

viewed); it will be difficult to move the selection if text

is found. If the user agent allows this type of search, notify the

user of this particular search mode.
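Searching forward or backward from the point of regard, with wraparound to the beginning or end of the document, can be sketched in Python as follows. The function name and parameters are hypothetical, and the text is assumed to be the rendered text of the document flattened into one string. Case-insensitive matching here uses lower(), which assumes lower-casing does not change string length (true for ASCII text).

```python
def find_text(text, query, start, forward=True, wrap=True, case_sensitive=False):
    """Return the index of the next match of query relative to position start, or -1.

    Forward searches find matches beginning at or after start. Backward searches
    find the last match lying entirely before start. With wrap=True, the search
    continues from the other end of the document.
    """
    if not query:
        return -1
    if not case_sensitive:
        text, query = text.lower(), query.lower()
    if forward:
        pos = text.find(query, start)
        if pos == -1 and wrap:
            pos = text.find(query)    # continue from the beginning of the document
    else:
        pos = text.rfind(query, 0, start)
        if pos == -1 and wrap:
            pos = text.rfind(query)   # continue from the end of the document
    return pos
```

Searching from the beginning or end of the document is the same call with start set to 0 or len(text).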

- 7.6 Allow the user to navigate according to structure. [Priority 2]

(Checkpoint 7.6)

- For example, allow the user to navigate familiar

elements of a document: paragraphs, tables and table

cells, headers, lists,

etc. Note.

Use operating system conventions to indicate navigation

progress (e.g.,

selection or content focus).

Techniques:

-

- Use the DOM [DOM2] as the basis of structured navigation.

However, for well-known markup languages such as HTML, structured

navigation should take advantage of the structure of the source

tree and what is rendered.

- Allow navigation based on commonly understood document

models, even if they do not adhere strictly to a Document Type Definition

(DTD).

For instance, in HTML, although headers (H1-H6)

are not containers, they may be treated as such for the purpose

of navigation. Note that they should be properly nested.

- Allow the user to limit navigation to the cells

of a table (notably left and right within a row

and up and down within a column).

Navigation techniques include keyboard navigation from

cell to cell (e.g., using the arrow keys) and page up/down

scrolling.

Refer to the section on table navigation.

- Allow depth-first as well as breadth-first navigation.

- Provide context-sensitive navigation. For instance, when the

user navigates to a list or table, provide locally useful navigation

mechanisms (e.g., within a table, cell-by-cell navigation) using

similar input commands.

- From a given element, allow navigation to the

next or previous sibling, up to the parent, and to the end of an element.

- Allow users to navigate synchronized multimedia presentations

in time. Refer also to checkpoint 4.6.

- Allow the user to navigate characters,

words, sentences, paragraphs, screenfuls,

and other pieces of text content that depend on

natural language.

This benefits users of speech-based user agents and has

been implemented by several screen readers, including

Winvision [WINVISION], Window-Eyes [WINDOWEYES], and Jaws for Windows [JFW].

- Allow users to skip author-supplied navigation mechanisms

such as navigation bars. For instance, navigation bars at

the top of each page at a Web site may force users with screen readers or some

physical disabilities to wade through many links before reaching

the important information on the page.

User agents may facilitate browsing for these users by allowing

them to skip recognized

navigation bars (e.g., through a configuration

option). Some techniques for this include:

- Providing a command to jump to the first non-link content.

- In HTML, the MAP element may be used to mark up a navigation

bar (even when there is no associated image). Thus, users

might ask that MAP elements not be rendered in order to

hide links inside the MAP element. Note. Starting

in HTML 4.01, the MAP element allows block content, not just

AREA elements.

- The following is a summary of ideas provided by

the National Information Standards Organization [NISO]:

A talking book's "Navigation Control Center" (NCC)

resembles a traditional table of contents, but it is more. It

contains links to all headings at all levels in the book,

links to all pages, and links to any items that the reader has

chosen not to have read. For example, the reader may have turned off the

automatic reading of footnotes. To allow the user to

retrieve that information quickly,

the reference to the footnote is placed in the NCC and the reader

can go to the reference, understand the context for the footnote, and then

read the footnote.

Once the reader is at a desired location and wishes to begin

reading, the navigation process changes. Of course, the reader may

elect to read sequentially, but often some navigation is required

(e.g., frequently people navigate forward or backward one word or

character at a time). Moving one sentence or paragraph at a time is also needed. This type of local navigation is different from the global navigation used to get to the location of what you want to read. It is frequently desirable to move from one block element to the next. For example, moving from a paragraph to the next block element, which may be a list, blockquote, or sidebar, is the normally expected mechanism for local navigation.
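As an illustration of DOM-based structured navigation, the following is a minimal sketch in Python using the standard `xml.dom.minidom` module, which implements the W3C DOM Level 1 Core interfaces. It assumes well-formed XHTML input. The `StructuredNavigator` class and its method names are hypothetical, and two choices are interpretations made only for this example: "the end of an element" is taken to be its last element descendant, and "the first non-link content" is taken to be the first element in BODY that directly contains non-blank text and has no A ancestor.

```python
from xml.dom import Node
from xml.dom.minidom import parseString


class StructuredNavigator:
    """Hypothetical helper that moves a "current element" through a DOM tree."""

    def __init__(self, document):
        self.document = document
        self.current = document.documentElement

    @staticmethod
    def _is_element(node):
        return node is not None and node.nodeType == Node.ELEMENT_NODE

    def _element_children(self, node):
        return [child for child in node.childNodes if self._is_element(child)]

    def next_sibling(self):
        # Skip text and comment nodes; stay put when there is no next element.
        node = self.current.nextSibling
        while node is not None and not self._is_element(node):
            node = node.nextSibling
        if node is not None:
            self.current = node
        return self.current

    def previous_sibling(self):
        node = self.current.previousSibling
        while node is not None and not self._is_element(node):
            node = node.previousSibling
        if node is not None:
            self.current = node
        return self.current

    def parent(self):
        # The Document node is not an element, so the root element is the top.
        if self._is_element(self.current.parentNode):
            self.current = self.current.parentNode
        return self.current

    def end_of_element(self):
        # Interpreted here as the last element descendant of the current element.
        node = self.current
        children = self._element_children(node)
        while children:
            node = children[-1]
            children = self._element_children(node)
        self.current = node
        return self.current

    def skip_to_first_non_link_text(self):
        # Depth-first walk of BODY in document order: stop at the first element
        # that directly contains non-blank text and has no A ancestor.
        def walk(node, inside_link):
            for child in node.childNodes:
                if child.nodeType == Node.TEXT_NODE:
                    if child.data.strip() and not inside_link:
                        return node
                elif self._is_element(child):
                    is_link = child.tagName.lower() == "a"
                    found = walk(child, inside_link or is_link)
                    if found is not None:
                        return found
            return None

        bodies = self.document.getElementsByTagName("body")
        root = bodies[0] if bodies else self.document.documentElement
        target = walk(root, False)
        if target is not None:
            self.current = target
        return self.current


page = parseString(
    "<html><body>"
    "<ul><li><a href='/'>Home</a></li><li><a href='/news'>News</a></li></ul>"
    "<h1>Report</h1><p>First paragraph.</p><p>Second paragraph.</p>"
    "</body></html>"
)
nav = StructuredNavigator(page)
print(nav.skip_to_first_non_link_text().tagName)  # h1
print(nav.next_sibling().tagName)  # p
print(nav.parent().tagName)  # body
```

A real user agent would walk its own document tree (taking into account what is rendered) rather than re-parsing markup, and would bind these methods to keyboard commands.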

- 7.7 Allow the user to configure structured navigation. [Priority 3]

(Checkpoint 7.7)

- For example, allow the user to navigate only paragraphs,

or only headers and paragraphs, etc.

Techniques:


- Allow the user to navigate by element type.

- Allow the user to navigate HTML elements that share a value of the "class" attribute.

- Allow the user to expand or shrink portions of the

structured view (configure detail level) for faster access to

important parts of content.
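Configurable structured navigation can be thought of as filtering the document-order list of elements before the user moves through it. The sketch below, again using Python's `xml.dom.minidom` and assuming well-formed XHTML, is illustrative only; the function `navigation_targets` and its parameters are hypothetical. The `max_heading_level` parameter shows one way to expand or shrink the structured view.

```python
from xml.dom import Node
from xml.dom.minidom import parseString

HEADING_TAGS = ("h1", "h2", "h3", "h4", "h5", "h6")


def iter_elements(node):
    """Yield the element descendants of node in document (depth-first) order."""
    for child in node.childNodes:
        if child.nodeType == Node.ELEMENT_NODE:
            yield child
            yield from iter_elements(child)


def navigation_targets(document, element_types=None, class_name=None,
                       max_heading_level=6):
    """Return, in document order, the elements the user chose to navigate.

    element_types: set of lowercase tag names, or None for every type.
    class_name: keep only elements whose "class" attribute lists this name.
    max_heading_level: detail level; hide headings deeper than this.
    """
    targets = []
    for element in iter_elements(document.documentElement):
        tag = element.tagName.lower()
        if element_types is not None and tag not in element_types:
            continue
        # getAttribute returns "" when the attribute is absent.
        if class_name is not None and class_name not in element.getAttribute("class").split():
            continue
        if tag in HEADING_TAGS and int(tag[1]) > max_heading_level:
            continue
        targets.append(element)
    return targets


page = parseString(
    "<html><body><h1>Intro</h1><p class='note warning'>Read this.</p>"
    "<h2>Details</h2><p>Body text.</p><h3>Fine print</h3></body></html>"
)
# Only headers and paragraphs, collapsed to two heading levels.
print([e.tagName for e in navigation_targets(
    page, element_types={"h1", "h2", "h3", "p"}, max_heading_level=2)])  # ['h1', 'p', 'h2', 'p']
# Only elements in the "note" class.
print([e.tagName for e in navigation_targets(page, class_name="note")])  # ['p']
```

The navigation commands from checkpoint 7.6 would then move through the returned list instead of through every element.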

Checkpoints for content accessibility:

- 8.1 Make available to the user the author-specified purpose of each table and the relationships among the table cells and headers. [Priority 1]

(Checkpoint 8.1)

- For example, provide information about table headers,

how headers relate to cells, table summary

information, cell position information, table dimensions, etc.

Refer also to checkpoint 5.3.

Note. This checkpoint is an important special case of

checkpoint 2.1.

Techniques:


- Refer to the section on table techniques.

- Allow the user to navigate to a table cell and query the

cell for metadata (e.g., by activating a menu or keystroke).

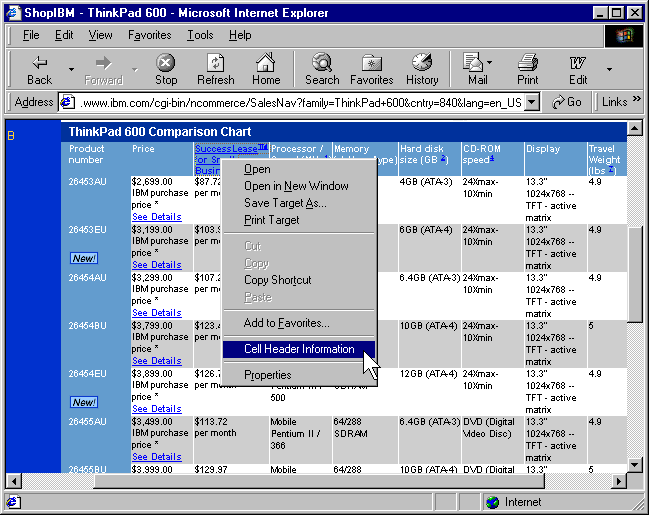

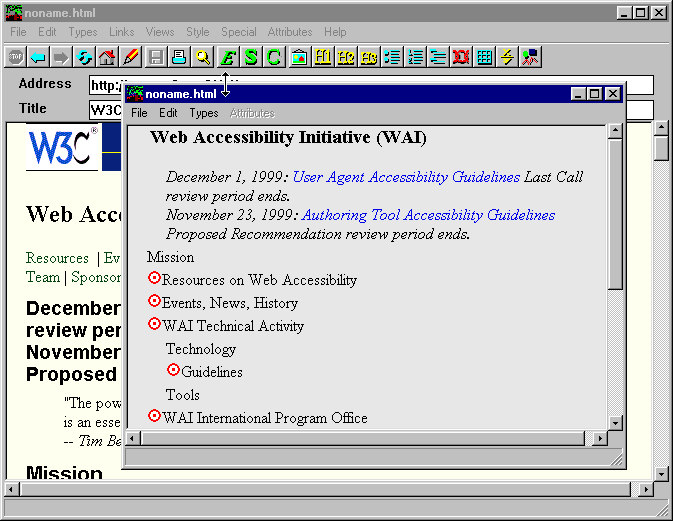

The following image shows how Internet Explorer [IE] provides

cell header information through the context ("right-click") menu:
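A cell query can combine the author's HTML `headers` attribute with a fallback heuristic. The following minimal Python sketch assumes a well-formed XHTML table with no `rowspan`, `colspan`, `scope`, `axis`, or nested tables; the function names are hypothetical, and the fallback (header cells above in the same column, then to the left in the same row) is a simplification rather than a complete header-lookup algorithm.

```python
from xml.dom import Node
from xml.dom.minidom import parseString


def cell_text(node):
    """Concatenate all text beneath node."""
    if node.nodeType == Node.TEXT_NODE:
        return node.data
    return "".join(cell_text(child) for child in node.childNodes)


def table_grid(table):
    """Rows of TH/TD cells (assumes no rowspan, colspan, or nested tables)."""
    grid = []
    for tr in table.getElementsByTagName("tr"):
        grid.append([c for c in tr.childNodes
                     if c.nodeType == Node.ELEMENT_NODE
                     and c.tagName.lower() in ("td", "th")])
    return grid


def describe_cell(table, row, col):
    """Collect the metadata a user might query for the cell at (row, col)."""
    grid = table_grid(table)
    cell = grid[row][col]
    header_ids = cell.getAttribute("headers").split()
    if header_ids:
        # Author-specified relationships take precedence.
        by_id = {c.getAttribute("id"): c
                 for r in grid for c in r if c.getAttribute("id")}
        headers = [by_id[i] for i in header_ids if i in by_id]
    else:
        # Fallback heuristic: TH cells above in the same column,
        # then TH cells to the left in the same row.
        headers = [r[col] for r in grid[:row]
                   if col < len(r) and r[col].tagName.lower() == "th"]
        headers += [c for c in grid[row][:col] if c.tagName.lower() == "th"]
    return {
        "summary": table.getAttribute("summary"),
        "position": (row + 1, col + 1),
        "dimensions": (len(grid), max((len(r) for r in grid), default=0)),
        "headers": [cell_text(h).strip() for h in headers],
        "content": cell_text(cell).strip(),
    }


table = parseString(
    "<table summary='Quarterly sales by region'>"
    "<tr><th></th><th>Q1</th><th>Q2</th></tr>"
    "<tr><th>North</th><td>10</td><td>12</td></tr>"
    "<tr><th>South</th><td>8</td><td>9</td></tr>"
    "</table>"
).documentElement
print(describe_cell(table, 2, 2))
```

For the cell containing "9", the fallback reports the headers "Q2" and "South", together with the table summary, the cell position (3, 3), and the table dimensions (3, 3). A user agent could present this dictionary through a menu or a keystroke, as described above.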

- 8.2 Indicate to the user whether a link has been visited. [Priority 2]

(Checkpoint 8.2)

- Note. This checkpoint is an important special case of checkpoint 8.4.

Techniques:


- 8.3 Indicate to the user whether a link has been marked up to indicate that following it will involve a fee. [Priority 2]

(Checkpoint 8.3)

- Note. This checkpoint is an important special case of checkpoint 8.4.

The W3C specification "Common Markup for micropayment per-fee-links" [MICROPAYMENT] describes how authors may mark up micropayment information in an interoperable manner.

Techniques:


- Use standard, accessible interface controls to present information

about fees and to prompt the user to confirm payment.

- For a link that has content focus, allow the user to query the link for fee information

(e.g., by activating a menu or keystroke).

- Refer to the section on link techniques.

- 8.4 To help the user decide whether to follow a link, make available link information supplied by the author and computed by the user agent. [Priority 3]

(Checkpoint 8.4)

- Information supplied by the author includes link content, link title, whether the link is internal, whether it involves a fee, and hints on the content type, size, or natural language of the linked resource. Information computed by the user agent includes whether the user has already visited the link. Note. User agents are not required to retrieve the resource designated by a link as part of computing information about the link.

Techniques:


- For a link that has content focus, allow

the user to query the link for information

(e.g., by activating a menu or keystroke).

- Refer to the section on link techniques.

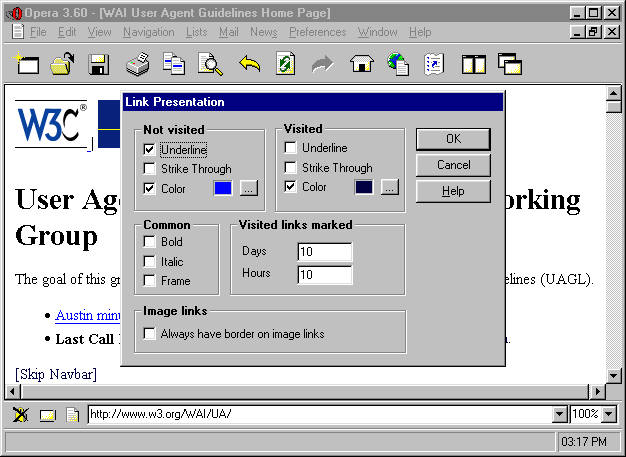

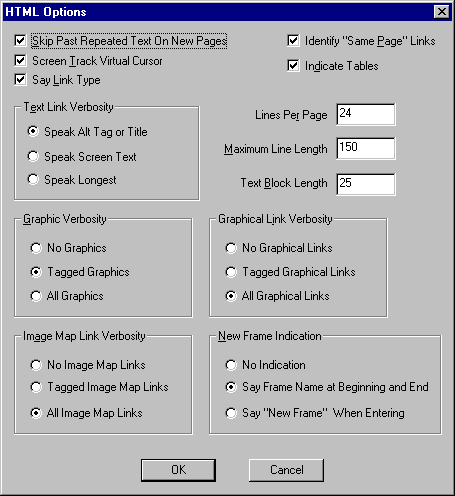

The following image shows how Opera [OPERA] allows the

user to configure link rendering, including the

identification of visited links.
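Most of the link information listed under checkpoint 8.4 can be gathered from the A element's attributes and the user's history without retrieving the linked resource. In the following Python sketch, "internal" is interpreted as "designates the current document" (the link differs from the current URL only in its fragment), the history is assumed to be a set of absolute URLs without fragments, and fee information is omitted because it depends on the micropayment markup described in [MICROPAYMENT]. The function `link_info` is hypothetical.

```python
from urllib.parse import urldefrag, urljoin
from xml.dom import Node
from xml.dom.minidom import parseString


def text_content(node):
    """Concatenate all text beneath node."""
    if node.nodeType == Node.TEXT_NODE:
        return node.data
    return "".join(text_content(child) for child in node.childNodes)


def link_info(anchor, document_url, visited_urls):
    """Summarize author-supplied and computed information for an A element.

    The linked resource is never retrieved.
    """
    target = urljoin(document_url, anchor.getAttribute("href"))
    target_document, _ = urldefrag(target)
    current_document, _ = urldefrag(document_url)
    return {
        # Supplied by the author.
        "content": " ".join(text_content(anchor).split()),
        "title": anchor.getAttribute("title"),
        "content_type_hint": anchor.getAttribute("type"),
        "language_hint": anchor.getAttribute("hreflang"),
        # Computed by the user agent.
        "target": target,
        "internal": target_document == current_document,
        "visited": target_document in visited_urls,
    }


page = parseString(
    "<p><a href='#results' title='Jump to the results'>Results</a> "
    "<a href='report.pdf' type='application/pdf' hreflang='fr'>Rapport</a></p>"
)
history = {"http://example.org/report.pdf"}
for a in page.getElementsByTagName("a"):
    print(link_info(a, "http://example.org/index.html", history))
```

In this example the first link is reported as internal and unvisited, and the second as external, visited, a PDF document, and in French.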

Checkpoints for user interface accessibility:

- 8.5 Provide a mechanism for highlighting and identifying (through a standard interface where available) the current viewport, selection, and content focus. [Priority 1]

(Checkpoint 8.5)

- Note. This includes highlighting and identifying frames.

Note. This checkpoint is an important special case of checkpoint 1.1. Refer also to checkpoint 8.4.

Techniques:


- If colors are used to highlight the current

viewport, selection, or content focus,

allow the user to configure these colors.

- Provide a setting that causes a window that is the

current viewport to pop to the foreground.

- Provide a setting that causes a window that is the

current viewport to be maximized automatically.

For example, maximize the parent window of the browser

when launched, and maximize each child

window automatically when it receives

focus. Maximizing does not necessarily mean occupying the whole

screen or parent window; it means expanding the current window

so that users have to scroll horizontally or vertically as little

as possible.

- If the current viewport is a frame or the user does not want windows to pop to the foreground, use colors, reverse video, or other graphical cues to indicate the current viewport.

- For speech or Braille output, use the frame or

window title to identify the current viewport. Announce

changes in the current viewport.

- Use operating system conventions for specifying selection and content focus (e.g., schemes in Windows).

- Support the ':hover', ':active', and ':focus' pseudo-classes of CSS 2 ([CSS2], section 5.11.3).

This allows users to modify content focus presentation

with user style sheets.

- Refer to the section on frame

techniques.

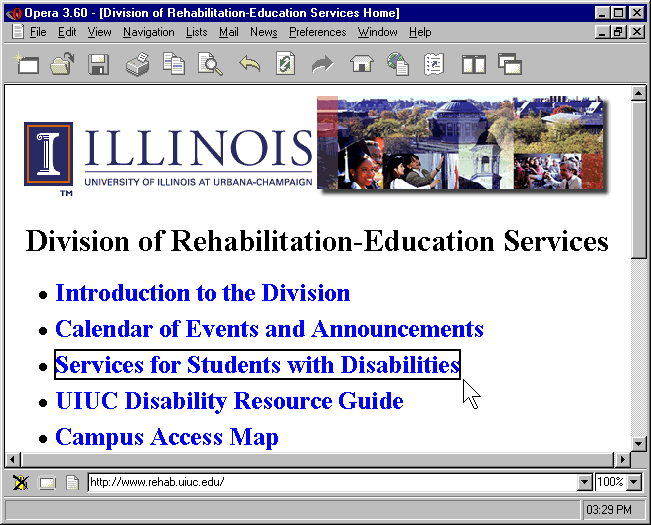

The following image shows how Opera [OPERA] uses a solid

line border to indicate content focus:

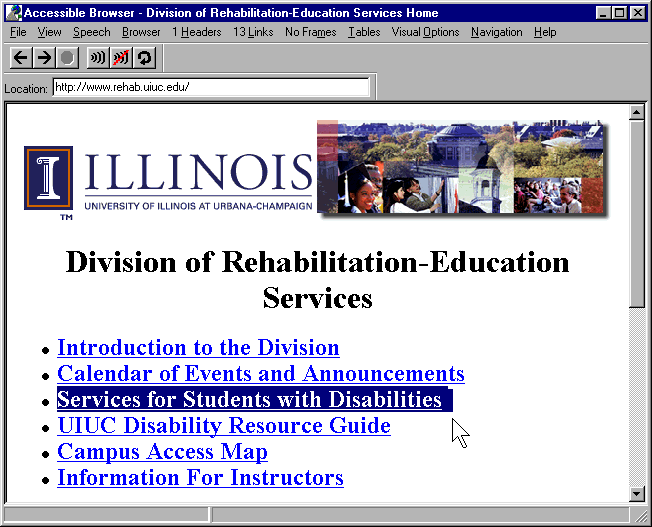

The following image shows how the Accessible Web Browser

[[AWB] uses the system highlight colors to indicate content focus:
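For speech or Braille output, identifying the current viewport by its title and announcing changes might be sketched as follows. The `ViewportAnnouncer` class, its `speak` callback, and the fallback order (title, then frame name, then position) are assumptions made for illustration; a real user agent would receive viewport changes from its own focus events.

```python
from typing import Callable, Optional


class ViewportAnnouncer:
    """Hypothetical: announce changes of the current viewport to speech or Braille.

    `speak` is an assumed callback that hands text to the output device.
    """

    def __init__(self, speak: Callable[[str], None]):
        self.speak = speak
        self.current_id: Optional[str] = None

    @staticmethod
    def label(kind: str, title: str, name: str, position: int) -> str:
        # Prefer the frame or window title, then the frame name,
        # then a positional description as a last resort.
        if title.strip():
            return f"{kind}: {title.strip()}"
        if name.strip():
            return f"{kind}: {name.strip()}"
        return f"{kind} {position}"

    def viewport_changed(self, viewport_id: str, kind: str, title: str = "",
                         name: str = "", position: int = 1) -> None:
        # Announce only real changes, not repeated focus events on the same viewport.
        if viewport_id != self.current_id:
            self.current_id = viewport_id
            self.speak(self.label(kind, title, name, position))


spoken = []
announcer = ViewportAnnouncer(spoken.append)
announcer.viewport_changed("f1", "frame", title="Table of contents")
announcer.viewport_changed("f1", "frame", title="Table of contents")
announcer.viewport_changed("f2", "frame", name="main")
print(spoken)  # ['frame: Table of contents', 'frame: main']
```

Keying the announcement on a viewport identifier rather than on its label means that two frames with the same title are still announced when the user moves between them.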

- 8.6 Make available to the user an "outline" view of content, built from structural elements (e.g., frames, headers, lists, forms, tables, etc.). [Priority 2]

(Checkpoint 8.6)

- For example, for each frame in a frameset, provide a table