Since September 1997, I have been a research officer in the Department of Mathematical Sciences at Bath University (UK). My research interests are 2D and 2.5D computer graphics, image compositing and 2D animation.

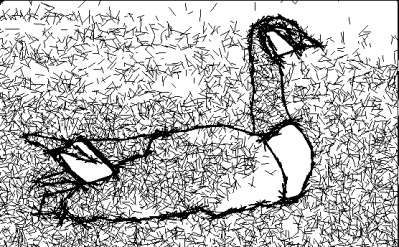

As part of the EU-TMR project PAVR (Platform for Animation and Virtual Reality), in which 11 European research sites are building a common computer graphics environment, Prof Phil Willis, Dr Fred Labrosse and I have designed and implemented an image analysis package with which a user can easily extract features from any picture (e.g. people in a photograph). While previous methods require a lot of user intervention to define feature contours accurately, ours needs only a very rough outline of the object to be extracted. This outline is then automatically adjusted to fit the actual contour of the object using edge extraction methods.

Region contours, together with information on the texture of those regions, can be viewed as a new "content-oriented" representation of the original image, which makes the image simpler to modify than the usual raw pixel description does. Possible applications of this method are photo retouching or cleaning, and special effects for cinema and television.

One particularity of the extraction process is that it is sub-pixel: the information extracted from the original image is independent of its size or resolution. It is instead encoded in 2D vector form (polygons and closed curves on a continuous plane). The main advantage is that an image can be resynthesised at any resolution. In particular, it makes it possible to display close-up views of the original image without any pixel artefacts.

Original Photograph

Close-up view of a modified feature

My work in this project is to develop the reconstruction part of the process: resynthesising a new image from the extracted (and possibly modified) contour data.

As some applications require resolutions of up to 32000 scan lines (especially when the pictures are antialiased using supersampling), rendering a single image using standard algorithms can take a very long time and use a huge amount of memory. To solve this problem, I have designed a renderer called IRCS (Image Rendering and Compositing Software), which uses colour coherency and layering information from the vector description to reduce computation time and memory usage. In the best cases, the complexity of the rendering algorithm is linear in the image resolution (number of scan lines), while standard rendering algorithms have quadratic complexity.
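The difference between linear and quadratic growth can be illustrated with a small sketch. This is not the IRCS algorithm: it is a toy model, assuming layers that are axis-aligned rectangles of flat colour in unit-square coordinates, and the names `scanline_runs` and `total_runs` are made up for this example. Each scan line is described as a few runs of constant colour taken directly from the vector description, so the amount of data grows with the number of scan lines rather than with the number of pixels.

```python
def scanline_runs(layers, y, background="background"):
    """Describe one scan line at height y as runs of constant colour.

    layers: rectangles (x0, y0, x1, y1, colour) in unit-square coordinates,
    listed back to front. Returns [(x_start, x_end, colour), ...] on [0, 1).
    """
    active = [r for r in layers if r[1] <= y < r[3]]
    # The visible colour can only change where an active layer starts or ends.
    xs = {0.0, 1.0}
    for x0, _, x1, _, _ in active:
        xs.add(min(max(x0, 0.0), 1.0))
        xs.add(min(max(x1, 0.0), 1.0))
    xs = sorted(xs)
    runs = []
    for left, right in zip(xs, xs[1:]):
        mid = (left + right) / 2
        colour = background
        for x0, _, x1, _, c in active:      # back to front: the last hit is on top
            if x0 <= mid < x1:
                colour = c
        if runs and runs[-1][2] == colour:  # merge neighbours of equal colour
            runs[-1] = (runs[-1][0], right, colour)
        else:
            runs.append((left, right, colour))
    return runs


def total_runs(layers, n_lines):
    """Count the runs needed for a square image with n_lines scan lines."""
    return sum(len(scanline_runs(layers, (j + 0.5) / n_lines))
               for j in range(n_lines))


# Two overlapping flat-coloured layers (hypothetical test data).
layers = [(0.1, 0.1, 0.6, 0.6, "red"), (0.4, 0.4, 0.9, 0.9, "blue")]
for n in (100, 200, 400):
    print(n, "scan lines:", total_runs(layers, n), "runs vs", n * n, "pixels")
```

Doubling the number of scan lines doubles the number of runs in this model, while the pixel count of a square image quadruples.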

The IRCS results were published at the Eurographics 99 conference, and the complete extraction/rendering system will be published at Eurographics 2000. While further projects on image analysis and reconstruction have started at Bath, my work has moved in three new directions:

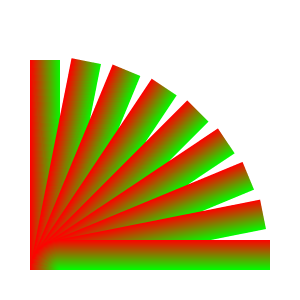

Project Quasi-3D aims to reuse the principles that IRCS applies to accelerate 2.5D rendering in an "almost" 3D renderer. While a 2.5D system is limited to displaying flat objects that lie on parallel planes facing the viewer, Quasi-3D moves one step closer to true 3D rendering by removing the limitations on the position and orientation of the layers: they can be located anywhere and rotated around any axis in 3D space. The renderer will correctly handle layer intersections and still compute images quickly using the same principles as IRCS. Early results have shown that this system can render images in almost the same way as Image-Based Rendering renderers do, avoiding the use of costly methods such as Z-buffer rendering.

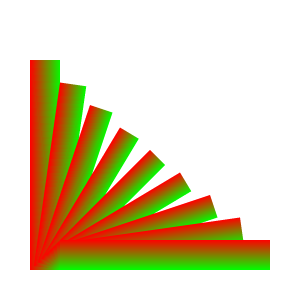

| 2.5D Rendering | Quasi-3D Rendering |
|---|---|

In applications such as digital special effects, the goal is usually to create images as close to real photographs as possible. My rendering system can also be used for non-photorealistic rendering (NPR), in order to produce images in different graphic styles: painting, technical illustration, cartoon drawing, etc. Using the information extracted from any photograph, we can produce non-photorealistic renderings such as watercolour, pointillism or comic-strip style:

"Pen-and-Ink" rendering

IRCS is currently used as the renderer for a 2D key-frame animation project (where the user specifies a set of key scenes and the computer produces a complete animation by "inbetweening", i.e. interpolating between key-frames).

The data structure used to represent the key scenes is a CSG tree in which each leaf contains a graphics primitive and a texture, and each node contains a boolean operator and a transformation matrix. The simplest way to generate in-between frames is to linearly interpolate the coefficients of the transformation matrices, but the resulting animations are often unconvincing: objects are deformed and movements do not look natural. I have designed a more elaborate interpolation method: rather than simply interpolating matrix values, translation, rotation and scaling values are first extracted from the key matrices using modified singular value decomposition techniques. These values are then linearly interpolated to produce the intermediate transformation matrices. The animations produced by this method are much more realistic:

| Linear interpolation | SVD+ interpolation |
|---|---|
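The contrast between the two interpolation schemes can be sketched in a few lines. The code below is not the "SVD+" method itself, whose details are not given here. As a stand-in it uses the closed-form polar decomposition of a 2D affine matrix (rotation times symmetric stretch, which is what an SVD yields as `U V^T` and `V Σ V^T`), and it assumes key matrices with positive determinant (no reflection). All function names are hypothetical.

```python
import math


def decompose(m):
    """Split a 2D affine matrix ((a, b, tx), (c, d, ty)) into
    (translation, rotation angle, symmetric stretch matrix)."""
    (a, b, tx), (c, d, ty) = m
    theta = math.atan2(c - b, a + d)          # rotation of the polar factor
    cs, sn = math.cos(theta), math.sin(theta)
    # stretch = R(-theta) * linear part; symmetric by the choice of theta
    stretch = ((cs * a + sn * c, cs * b + sn * d),
               (-sn * a + cs * c, -sn * b + cs * d))
    return (tx, ty), theta, stretch


def compose(translation, theta, stretch):
    """Rebuild the affine matrix R(theta) * stretch, plus translation."""
    cs, sn = math.cos(theta), math.sin(theta)
    (p, q), (r, s) = stretch
    return ((cs * p - sn * r, cs * q - sn * s, translation[0]),
            (sn * p + cs * r, sn * q + cs * s, translation[1]))


def lerp(u, v, t):
    return (1 - t) * u + t * v


def interpolate_matrix(m0, m1, t):
    """Naive in-betweening: interpolate every coefficient."""
    return tuple(tuple(lerp(u, v, t) for u, v in zip(r0, r1))
                 for r0, r1 in zip(m0, m1))


def interpolate_decomposed(m0, m1, t):
    """In-betweening on translation, rotation and stretch separately."""
    (t0, a0, s0), (t1, a1, s1) = decompose(m0), decompose(m1)
    # Turn the shortest way round the circle.
    delta = (a1 - a0 + math.pi) % (2 * math.pi) - math.pi
    translation = (lerp(t0[0], t1[0], t), lerp(t0[1], t1[1], t))
    return compose(translation, a0 + t * delta, interpolate_matrix(s0, s1, t))


def det(m):
    """Area scale factor of the linear part of an affine matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


key0 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0))      # identity
key1 = ((0.0, -1.0, 10.0), (1.0, 0.0, 0.0))    # quarter turn, moved by 10
print("area scale, naive:     ", det(interpolate_matrix(key0, key1, 0.5)))
print("area scale, decomposed:", det(interpolate_decomposed(key0, key1, 0.5)))
```

Halfway between these two keys, coefficient-wise interpolation shrinks the object to half its area, whereas interpolating the extracted rotation and stretch keeps the area unchanged. This is the kind of deformation the paragraph above describes.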

In order to display textures other than triangulations or images, we have recently introduced procedural textures, where the texture data are created by calling an external programme that generates the texture image.

As we have not yet written texture generation software, we decided to test procedural textures by calling showstk (the front-end programme for IRCS) itself as the external programme. Calling showstk within showstk is quite simple and straightforward when the 'inner' showstk renders a different stack. The final image contains another image rendered by another invocation of showstk (which could have been performed beforehand, saving the result in a picture file, and using it as a picture texture later).

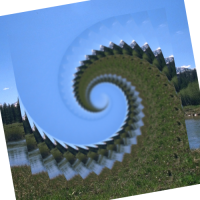

But of course, it becomes interesting when the inner showstk is told to render the same stack as the calling showstk. Recursion galore! The pictures below were produced using this trick. Of course care must be taken to avoid infinite recursion: the size of the procedural textures must be smaller than that of the final image, and the recursion must stop at some point, for example when the texture becomes small enough (typically, less than one pixel wide or tall) so it can be replaced by a transparent rectangle.

|

|

| Recursion I | Spiral |

|---|---|

|

|

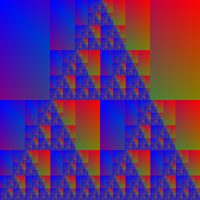

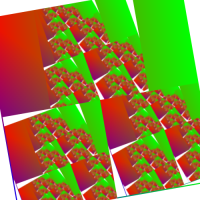

| Sierpinski's Gasket | Twisted Gasket |

The top left picture was generated from a stack containing a textured rectangle (to which a slight perspective transform has been applied), which itself contains another rectangle with the procedural texture. This kind of recursive image appears in "real life" when someone points a camera at the screen the camera is connected to, or in late 70s TV programmes (remember Queen's Bohemian Rhapsody video?). "Spiral" is based on the same principle, except that a rotation is used instead of a perspective transformation.

Sierpinski's Gasket is a well-known fractal pattern, which is obtained by a threefold recursion of the original image (here, one copy in the middle of the top half and two in the bottom half). The 'twisted gasket' uses the same principle but introduces a small rotation of the pattern at each step (as with the spiral).
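The threefold recursion and its stopping rule can be sketched geometrically. This is only an illustration of the layout, not of showstk: the function `gasket` and its parameters are hypothetical, sizes are in pixels with y growing downwards, and each copy is assumed to be exactly half the size of its parent.

```python
def gasket(x, y, size, min_size=1.0):
    """Return the (x, y, size) squares left at the deepest recursion level.

    (x, y) is the top-left corner of the current square, in pixels.
    """
    if size < min_size:
        return []                      # smaller than a pixel: draw nothing
    half = size / 2
    if half < min_size:
        return [(x, y, size)]          # copies would be sub-pixel: stop here
    return (gasket(x + size / 4, y, half, min_size)         # middle of top half
            + gasket(x, y + half, half, min_size)           # bottom left
            + gasket(x + half, y + half, half, min_size))   # bottom right


squares = gasket(0.0, 0.0, 8.0)
print(len(squares), "squares of size", squares[0][2])
```

Because every copy is strictly smaller than its parent and the recursion stops once a copy would fall below one pixel, the depth is bounded by the logarithm of the image size. In this model an 8-pixel image recurses three levels deep.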

The projects described above are funded by EU-TMR grant "Platform for Animation and Virtual Reality", and by EPSRC projects Faraday Shrink-to-fit and Quasi-3D.

Last updated 29 June 2000 (MF).