See also: IRC log

See also: Minutes of day 1/2

[resuming discussion from yesterday's meeting]

adam: there is some confusion on whether 5.1 audio should be 6 tracks, or 6 parallel channels of a single track. I tend to prefer the second approach, but suggest moving this to the mailing list.

cullen: it would be good to come up with a decision.

hta: 2 alternatives: 1) a track is whatever has one sensible encoding and 2) a track is whatever cannot be subdivided

adam: cannot be subdivided in JavaScript.

hta: in the case of 5.1, there are encodings that will take 5.1 as one entity that JavaScript will have trouble decoding.

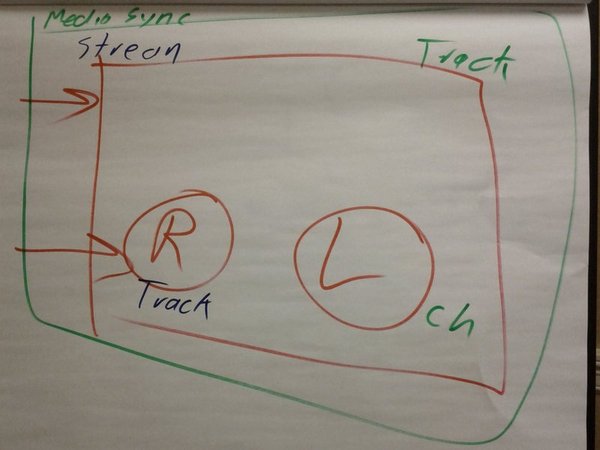

[cullen draws a diagram with stereo channels on the whiteboard (Fig 1)]

Fig 1 - Stream, track, and channel

anant: question is does JavaScript need access to the channels?

cullen: it has to be addressable e.g. for one video to go to one monitor and the other to the second one.

adam: why do they need to be addressable?

... they're two different things

cullen: no, they're not.

... [taking example of audio with multiple channels]

... I was assuming they need to be addressable.

anant: only problem is that track means something else in other contexts.

burn: if we only need to come up with a different name for track, not a big deal. It makes more sense to focus on the definition of the term than on the term itself.

anant: I think JavaScript needs to have access to different channels e.g. to decode things itself.

hta: provided it should be able to do that

[more discussion on audio tracks]

cullen: you need one more name, English won't be encoded with Russian.

[Fig 1 completed]

burn: 3 levels now. Is there a possibility that we'll end up with another, and another?

adam: I don't think we need to go any deeper than channel.

cullen: some possibility to group tracks in pseudo-tracks in some contexts

burn: if we agree on the top level, and the bottom level.

hta: for the moment, we have stream, track and channel.
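The three levels can be pictured as nested data. The sketch below is a hypothetical TypeScript model, not any spec's API: `Stream`, `Track`, `Channel`, `surround51Track`, and `findChannel` are illustrative names, and it takes the "one 5.1 track with six channels" option adam preferred, with channels addressable by index.

```typescript
// Hypothetical model of the stream > track > channel levels discussed
// in the meeting. None of these names come from a specification.
interface Channel {
  index: number; // position within the track; channels are addressed by index
  label: string; // e.g. "L", "R", "C", "LFE"
}

interface Track {
  id: string;
  kind: "audio" | "video";
  channels: Channel[];
}

interface Stream {
  id: string;
  tracks: Track[];
}

// Build one 5.1 audio track holding six channels, i.e. the
// "6 parallel channels of a single track" option.
function surround51Track(id: string): Track {
  const labels = ["L", "R", "C", "LFE", "Ls", "Rs"];
  return {
    id,
    kind: "audio",
    channels: labels.map((label, index) => ({ index, label })),
  };
}

// Address a single channel, e.g. to route it to one particular speaker.
function findChannel(stream: Stream, trackId: string, index: number): Channel | undefined {
  const track = stream.tracks.find((t) => t.id === trackId);
  return track ? track.channels[index] : undefined;
}

const example: Stream = { id: "s1", tracks: [surround51Track("a1")] };
console.log(example.tracks.length); // 1
console.log(example.tracks[0].channels.length); // 6
console.log(findChannel(example, "a1", 3)?.label); // LFE
```

Choosing six channels in one track keeps a 5.1 encoding as a single unit, which matches hta's point that some encodings treat 5.1 as one entity.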

adam: question is whether channel has to be a JavaScript object.

hta: to say that the right channel should go to the left speaker, that's useful.

cullen: if you have a Polycom setup, you can end up with 4 different channels that may be encoded together.

stefan: we have tracks mainly for legacy with the media element.

cullen: the grouping is going to go to the other side through SDP, I think.

tim: that square box is a single SSRC. MediaStream is a single CNAME.

cullen: ignoring these points for the time being.

stefan: I think synchronization should be on an endpoint basis

hta: you can't do that.

stefan: that's my view, we'll discuss that later on.

cullen: we're all confused so need clarification.

anant: for video, if you have the context of channels, what would that be useful for?

cullen: stereo video.

adam: the channels are dependent on each other.

anant: I don't understand why channels have to be exposed in the context of tracks.

[discussion on relationship with ISO base file format]

<Travis_MSFT> Can a "track" be a text caption too?

francois: wondering about mapping to media element. MediaStreamTrack (or channel) will have to be mapped to the track in an audio/video element for rendering in the end. Needs to be clarified.

hta: seems we need some audio expertise. Perhaps discuss during joint session with Audio WG.

adam: moving on. We removed the parent-child relationship within a stream. Good opportunity to make MediaStreamTrackList immutable.

... I don't have a strong opinion, but it simplifies things.

anant: if you clone a MediaStream and disable a track on the cloned object, it will disable the track, not remove it.

... The right way to fix this is not to make MediaStreamTrackList immutable.

... If someone decides to disable the parent track, the child should receive an event that the track is disabled, the same as if the track was received from a remote peer.

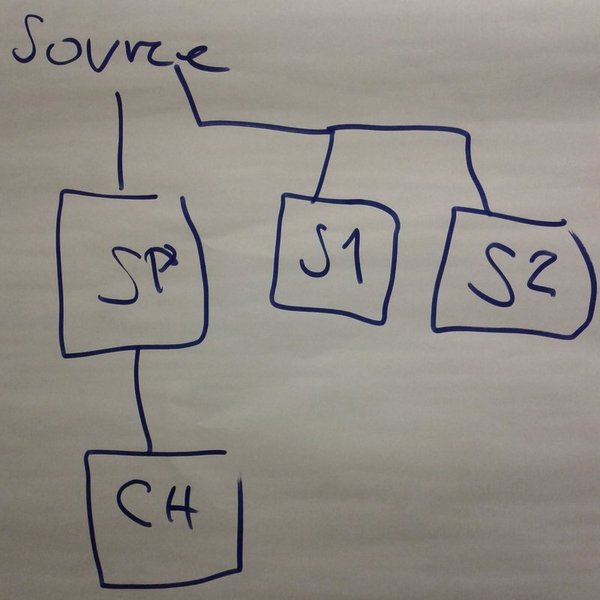

[adam draws Fig 2 on the paperboard]

Fig 2 - Cloned streams with and without parent-child relationship

[exchanges on parent-child relationship, enable-disable events]

tim: the worst situation is starting up the call, adding a video stream, then an audio stream, then something else.

cullen: then unplug a camera.

hta: it's disabling tracks. addStream is the one thing you cannot do with enabling/disabling.

anant: we need some kind of grouping for synchronization.

adam: there's a difference between mute and disabled. If I disable on my side, the track is muted on your side.

... Should the track be removed from your side?

... I might re-enable it later on.

anant: should we have the concept of who owns the MediaStream?

... I think the WHATWG thought about it, leading to the concept of LocalMediaStream.

... You are in control of local MediaStreams, not of remote MediaStreams.

hta: it makes a lot of sense to disable things received from a remote peer.

... When you're changing from disabled to enabled, you're telling the other side that you'll do something with the data if you receive it.

anant: It's possible for a track to be enabled without receiving any data. That makes sense.

anant: no real difference between mute and disabled.

cullen: I was assuming you were sending data in the case of mute, and not in the case of disabled.

anant: that's just a waste of bandwidth.

<juberti> there are good reasons to send zeroes.

anant: any sensible scenario?

cullen: no idea.

<juberti> some gateways will disconnect if data is not provided.

richt: perhaps if re-negotiation is not possible.

ileana: there are cases when you press mute and send music.

anant: does that level of semantics need to be exposed to JS?

... does it have to be a change of state in the object?

... Use cases?

<juberti> Call waiting is the main use case for "hold".

adam: we need to have some sense of "mute" for streams you don't have control over. Not sure about the best word.

anant: I would assume that you can remove tracks from a stream.

<juberti> Local muting (sending zeroes) has obvious use cases.
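cullen's distinction (a muted track still sends data, a disabled one sends nothing) and juberti's point about gateways can be captured in a few lines. This is a hypothetical sketch: the `SendState` values and `outgoingFrame` are illustrative names, and an audio frame is assumed to be a `Float32Array` of samples.

```typescript
// Hypothetical per-track send states; not names from any draft.
type SendState = "live" | "muted" | "disabled";

// Decide what goes on the wire for one captured audio frame.
function outgoingFrame(state: SendState, captured: Float32Array): Float32Array | null {
  if (state === "live") {
    return captured;
  }
  if (state === "muted") {
    // Keep the packet flow going with silence (a zero-filled frame), so
    // gateways that disconnect when no data arrives stay up.
    return new Float32Array(captured.length);
  }
  // "disabled": send nothing at all and save the bandwidth.
  return null;
}

const frame = new Float32Array([0.5, -0.25, 0.125]);
console.log(outgoingFrame("muted", frame)); // an all-zero frame of the same length
console.log(outgoingFrame("disabled", frame)); // null
```

The trade-off anant raised is visible here: the muted branch costs as much bandwidth as the live one.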

[discussion on adding/removing tracks in SDP]

adam: for removing tracks, disconnecting a camera is a good use case.

... Symmetry for adding tracks sounds important.

francois: the same discussion is happening in the HTML WG, following Web and TV IG concerns about adding/removing and enabling/disabling tracks in the video element. It seems important to end up with the same interface in both contexts.

stefan: in the end, the first bullet point is removed here ('immutable MediaStream objects')

adam: and we'll make updates for the remaining points.

... moving on to getUserMedia

... the only difference between local and non-local streams is that you can call stop on a LocalMediaStream.

anant: why do you need to have a stop method?

tim: if I cloned a track in different streams, you have to stop each track individually. With stop, that's taken care of.

hta: now you're introducing a dependency that I thought we had agreed to drop.

adam: I think "stop" on a local MediaStream shouldn't affect other MediaStreams.

... you need "stop" to say you're not going to use the object anymore.

anant: I don't understand why it's not possible with remote media streams.

hta: I guess for the time being, the problem is that "stop" is not clearly defined.

adam: moving on to recording.

... MediaStream.record(). You get the data via an async callback.

cullen: what format does the recorded data follow?

adam: that's an interesting question.

... when that got specified, I think there was this romantic idea about a simple unique format.

anant: getRecordedData means stop the recording and give me the data?

adam: not that much.

cullen: it's awful.

hta: yes, I suggested to delete it while we find something working.

burn: what does it have to do with WebRTC, sending data from one peer to another?

anant: we need to define getUserMedia, so that's one use case we need to tackle.

cullen: we should start from scratch and design that thing. Need to specify format, resolution, etc.

... Why don't we start by sending a good list of requirements to this working group?

hta: At a minimum, a recorder that returns blobs has to specify what the format of the blob is.

anant: Additionally, we could use hints here, so that the caller can suggest what it wants.

hta: given the reality of memory, we need chunks, so that running a recorder for two hours doesn't mean it will try to buffer everything in memory.
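hta's memory point amounts to delivering recorded data incrementally rather than as one blob at the end. A minimal sketch, assuming encoded bytes arrive from some encoder as `Uint8Array` pieces; `ChunkedRecorder`, `write`, and `stop` are hypothetical names, not the draft's `record()` API.

```typescript
// Hypothetical recorder that hands encoded data to a callback in
// fixed-size chunks, so a two-hour recording never sits in memory whole.
class ChunkedRecorder {
  private pending: number[] = [];
  private readonly chunkSize: number;
  private readonly onChunk: (chunk: Uint8Array) => void;

  constructor(chunkSize: number, onChunk: (chunk: Uint8Array) => void) {
    this.chunkSize = chunkSize;
    this.onChunk = onChunk;
  }

  // Feed encoder output; emits a chunk every time chunkSize bytes accumulate.
  write(bytes: Uint8Array): void {
    for (let i = 0; i < bytes.length; i++) {
      this.pending.push(bytes[i]);
      if (this.pending.length === this.chunkSize) {
        this.onChunk(new Uint8Array(this.pending));
        this.pending = [];
      }
    }
  }

  // Flush the final partial chunk when recording ends.
  stop(): void {
    if (this.pending.length > 0) {
      this.onChunk(new Uint8Array(this.pending));
      this.pending = [];
    }
  }
}

const chunks: Uint8Array[] = [];
const recorder = new ChunkedRecorder(4, (c) => chunks.push(c));
recorder.write(new Uint8Array([1, 2, 3]));
recorder.write(new Uint8Array([4, 5, 6, 7, 8, 9]));
recorder.stop();
console.log(chunks.map((c) => c.length)); // [ 4, 4, 1 ]
```

Chunk size is counted in bytes here; a real recorder would more likely cut at frame or container boundaries, which this sketch ignores.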

anant: I don't get the use case for getting the recorded data back. It should go to a file.

cullen: somebody else might want to access the data to do other things such as barcode recognition.

anant: not the recording API, that's the MediaStream API.

hta: you might want to move backward, e.g. in the hockey game to move back to an action.

... My suggestion is to nuke it from the draft for the time being. Once we have MediaStream, we can figure out how to record.

richt: you can call record multiple times. Does that reset?

adam: you get the delta from last time

tim: possibly with some overlap depending on the codec

burn: maybe check with Audio WG, because they are also doing processing with audio streams. Recording is just one example of what you can do with a media (audio) stream.

richt: we're going to record to formats we can playback in our video implementation today, and I guess other browsers will probably do the same, so no common format.

[summary of the discussion: scrap the part on recording for the time being, trying to gather requirements for this, to be addressed later on]

adam: on to "cloning".

... With the MediaStream constructor, you can clone and create new MediaStream objects without prompting the user.

... If you have one MediaStream object, you can only control it in one way. Muting mutes tracks everywhere.

... With cloning, you can mute tracks individually. Also used for self-view ("hair check screen").

... Pretty nice thing.

... Cloning is creating a new MediaStream from existing tracks.

hta: if you have one MediaStream and another one that you want to send over the same PeerConnection, you can create a MediaStream that includes both sets of tracks.

adam: composition: same approach. Used to pick tracks from different MediaStreams.

... e.g. to record local and remote audio tracks in a conversation.

... Discussion about this: the obvious question is synchronization when you combine tracks from different MediaStreams. Combining a local voice track with a remote voice track, how do you do that?
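Composition as adam describes it, a new stream assembled from tracks picked out of existing streams, can be modeled without any browser API. In this hypothetical sketch `TrackRef`, `StreamModel`, `compose`, and `mixesOrigins` are illustrative names; the new stream references the original track objects rather than copying them, and the `origin` field is there only to make the synchronization question visible.

```typescript
// Hypothetical track and stream shapes for illustrating composition.
interface TrackRef {
  id: string;
  kind: "audio" | "video";
  origin: "local" | "remote";
}

interface StreamModel {
  id: string;
  tracks: TrackRef[];
}

// Build a new stream from the tracks of several source streams that
// satisfy `pick`. Tracks are shared by reference, not copied.
function compose(id: string, sources: StreamModel[], pick: (t: TrackRef) => boolean): StreamModel {
  const tracks: TrackRef[] = [];
  for (const source of sources) {
    for (const track of source.tracks) {
      if (pick(track)) tracks.push(track);
    }
  }
  return { id, tracks };
}

// Nothing here makes tracks from different sources play in sync;
// a mix of origins is exactly the case the meeting flagged.
function mixesOrigins(stream: StreamModel): boolean {
  return new Set(stream.tracks.map((t) => t.origin)).size > 1;
}

const local: StreamModel = {
  id: "local",
  tracks: [
    { id: "mic", kind: "audio", origin: "local" },
    { id: "cam", kind: "video", origin: "local" },
  ],
};
const remote: StreamModel = {
  id: "remote",
  tracks: [
    { id: "peer-voice", kind: "audio", origin: "remote" },
    { id: "peer-video", kind: "video", origin: "remote" },
  ],
};

// e.g. record both sides of the conversation's audio
const conversation = compose("conversation-audio", [local, remote], (t) => t.kind === "audio");
console.log(conversation.tracks.map((t) => t.id)); // [ 'mic', 'peer-voice' ]
console.log(mixesOrigins(conversation)); // true
```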

cullen: if we have grouping for sync, can't we reuse it? If same group, synced; if not, no sync.

... We're synchronizing at global times, so it's no big deal if they are local and remote streams.

hta: works if different clocks?

cullen: that's what RTP does. Works as well as in RTP.

... I think it's taken care of by whatever mechanism we have for synchronization.
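cullen's "that's what RTP does" refers to RTCP sender reports (RFC 3550), which pair an RTP timestamp with the sender's wallclock so that streams with different media clocks can be placed on one timeline. A minimal sketch of that mapping, simplified to NTP time as plain seconds; `SenderReport` and `toWallclock` are illustrative names and the report values are made up for the example.

```typescript
// Simplified RTCP sender report: the RTP timestamp that corresponded to a
// given sender wallclock instant (RFC 3550). NTP time is kept here as
// plain seconds rather than the 64-bit NTP format.
interface SenderReport {
  ntpSeconds: number;
  rtpTimestamp: number; // 32-bit unsigned
}

// Map an RTP timestamp onto the sender's wallclock.
function toWallclock(rtpTs: number, sr: SenderReport, clockRate: number): number {
  // Signed 32-bit difference, so timestamps that wrapped past 2^32 still work.
  const delta = (rtpTs - sr.rtpTimestamp) | 0;
  return sr.ntpSeconds + delta / clockRate;
}

// Audio uses a 48 kHz RTP clock, video a 90 kHz one, each with its own offset.
const audioSr: SenderReport = { ntpSeconds: 1000, rtpTimestamp: 480000 };
const videoSr: SenderReport = { ntpSeconds: 1000.1, rtpTimestamp: 9000 };

const audioTime = toWallclock(489600, audioSr, 48000); // 1000 + 9600 / 48000
const videoTime = toWallclock(18000, videoSr, 90000); // 1000.1 + 9000 / 90000
console.log(Math.abs(audioTime - videoTime) < 1e-6); // true: play them together
```

This lines up tracks from one sender; combining a local and a remote track additionally relies on the two wallclocks agreeing, which is what hta's "works if different clocks?" was probing.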

... another thing on cloning and authorization. If authorization is based on identity, we might need to re-authorize on the clone if the destination is not the same.

... May not bring changes to the API.

hta: that is one of the reasons why authorization on destination is problematic.

... If it's irritating, people will work around it.

tim: If I want to authorize sending to Bob, I don't want JS to be able to clone.

hta: what you care about is that it doesn't send it to Mary.

anant: the data can go anywhere, there's nothing preventing that.

... getUserMedia allows the domain to access the camera/microphone; it's not tied to a particular destination.

hta: I think ekr's security draft goes to some details on what we can do and limitations. Identity providers can mint names that look close to the names the user might expect to see.

adam: we need to solve authorization without cloning anyway, because of addStream.

hta: if you want to do that, you have to link your implementation of PeerConnection with the list of authorized destinations.

[more exchanges on destinations]

DanD: depending on where you're attaching to, you can go on different levels of authorizations. If I have a level of trust, then I can say I'm ok with giving full permissions.

anant: problem is where you do permissions. PeerConnection level?

cullen: implementation may check that crypto identity matches the one on authorized lists of identities that can access the camera.

Travis_MSFT: we still need authorization for getUserMedia.

richt: this authorization stuff on PeerConnection is quite similar to submitting a form where you don't know where the submitted form is going.

... Not convinced about this destination authorization.

adambe: (missed this)

anant: how do you correlate different media streams?

hta: with only local media it's easy, but a mixer has media from multiple sources and they will be unsynced

... within a single media stream, everything is by definition synced, but across media streams there is no guarantee of sync

fluffy: makes sense. could get cname from them, but doesn't necessarily tell you how they sync

Slides: WebRTC PeerConnection

fluffy: Split work into interface types

... current spec isn't very extensible

... wrt SDP

... propose wrapping SDP in a JSON object

... need to differentiate offer from answer

... so add this to the SDP JSON representation

fluffy: each offer/answer pair contributes bits to the caller session id and the callee session id

... an answer always has an implicit offer

... offers can result in more than one answer

... each media addition results in a new offer/answer pair

... which replaces the previous one

... increment the sequence id to note replacement without setting up an entirely new call
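The offer/answer bookkeeping fluffy describes (SDP wrapped in JSON, a message type, caller and callee session IDs, and a sequence number bumped for replacements) might look like the following. The field names are assumptions made for illustration, not quoted from the ROAP draft, and the SDP strings are placeholders rather than complete SDP bodies.

```typescript
// Hypothetical JSON wrapper around SDP; field names are illustrative.
type MessageType = "OFFER" | "ANSWER" | "OK";

interface SignalingMessage {
  type: MessageType;
  callerSessionId: string;
  calleeSessionId?: string; // unknown until the first answer arrives
  seq: number; // bumped for each new offer/answer exchange in a session
  sdp?: string; // the SDP itself, carried as an opaque string
  moreComing?: boolean; // set on a tentative answer
}

// A re-offer (e.g. after adding media) keeps the session IDs and
// increments seq, so it replaces the previous exchange instead of
// starting a new call.
function reOffer(prev: SignalingMessage, sdp: string): SignalingMessage {
  return {
    type: "OFFER",
    callerSessionId: prev.callerSessionId,
    calleeSessionId: prev.calleeSessionId,
    seq: prev.seq + 1,
    sdp,
  };
}

// Receiver side: same session and a higher seq means "replace".
function replacesCurrent(current: SignalingMessage, incoming: SignalingMessage): boolean {
  return (
    incoming.callerSessionId === current.callerSessionId &&
    incoming.calleeSessionId === current.calleeSessionId &&
    incoming.seq > current.seq
  );
}

// State after the first exchange completed (placeholder SDP).
const first: SignalingMessage = {
  type: "OFFER",
  callerSessionId: "caller-1",
  calleeSessionId: "callee-7",
  seq: 1,
  sdp: "v=0\r\n",
};
const second = reOffer(first, "v=0\r\n"); // placeholder SDP describing the added media
console.log(JSON.stringify(second));
console.log(replacesCurrent(first, second)); // true
```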

Anant: If sdp doesn't result in new call, does it require user consent?

Fluffy: Yes, depends upon the class of change, e.g. a new video camera?

... require an OK to the offer/answer

... can only have one offer outstanding at a time

... need to know when you can re-offer (timeout)

Stefan: Also need to know when offer accepted for rendering RTP streams

Anant: Why sequence ids?

Fluffy: need to suppress duplicates

Anant: Isn't websockets a reliable protocol?

Harald: Not really

... because http can close connection at any time
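Harald's point is that a message can be re-sent after the HTTP connection carrying it drops, so the receiver may see it twice. A minimal sketch of how a sequence number lets the receiver drop the repeat; `DuplicateFilter` is a hypothetical name, and keying on session, message type and seq together is an assumption (an offer and its answer would otherwise share a seq and collide).

```typescript
// Hypothetical receiver-side filter: remembers the highest seq seen for
// each (session, message type) pair and rejects anything not newer.
class DuplicateFilter {
  private lastSeq = new Map<string, number>();

  accept(sessionId: string, type: string, seq: number): boolean {
    const key = `${sessionId}/${type}`;
    const last = this.lastSeq.get(key);
    if (last !== undefined && seq <= last) {
      return false; // duplicate or stale retransmission
    }
    this.lastSeq.set(key, seq);
    return true;
  }
}

const dedupe = new DuplicateFilter();
console.log(dedupe.accept("s1", "OFFER", 1)); // true
console.log(dedupe.accept("s1", "OFFER", 1)); // false: re-sent after a dropped connection
console.log(dedupe.accept("s1", "ANSWER", 1)); // true: different message type
console.log(dedupe.accept("s1", "OFFER", 2)); // true: a newer offer
```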

Stefan: If A makes new offer, when would B side be able to make new offer?

Fluffy: Depends on order of delivery

... so OK required

Suresh: Are these formats in scope for peer discussion?

Fluffy: Yes, APIs will define how

to process these messages. Work performed by browser

vendors.

... ICE is slow

... it is a strategy for finding optimal paths (uses

pinholes)

... as such can't send packets too fast

... start processing as early as possible. But

need to wait for user authorization on IP address

... amount of time deciding to accept call should mask ICE

delay

... so need a tentative answer followed by final answer which

was confirmed by user

... this is implemented by adding another flag to sdp/json

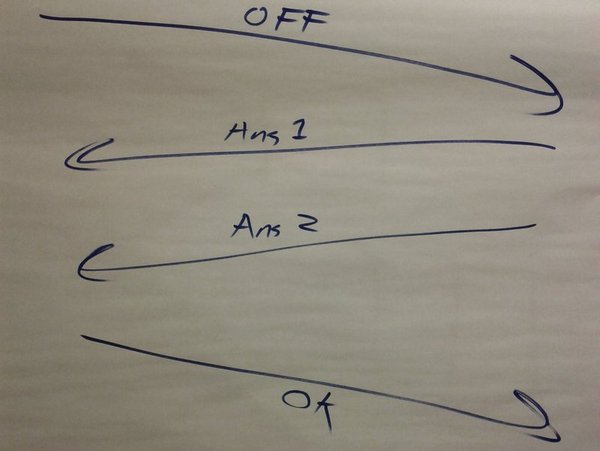

object. "morecoming" (see Fig 3)

Fig 3 - "More coming" temp answer flow in ROAP
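The tentative-then-final answer flow in Fig 3 can be sketched on the offerer's side as below. It is a hypothetical model: `OfferTracker`, `Answer`, and the three outcomes are illustrative names, and the flag is written `moreComing` here although the minutes spell it "morecoming".

```typescript
// Hypothetical answer shape; only the fields needed for this sketch.
interface Answer {
  seq: number;
  sdp: string;
  moreComing?: boolean; // true on the tentative answer
}

type AnswerOutcome = "ignored" | "tentative" | "final";

// Offerer-side tracking of the answers to one outstanding offer.
class OfferTracker {
  readonly seq: number;
  tentativeSdp: string | null = null;
  finalSdp: string | null = null;

  constructor(seq: number) {
    this.seq = seq;
  }

  onAnswer(answer: Answer): AnswerOutcome {
    // Answers to a different offer, or anything after the final answer, are ignored.
    if (answer.seq !== this.seq || this.finalSdp !== null) {
      return "ignored";
    }
    if (answer.moreComing) {
      // Enough to start ICE while the callee is still deciding,
      // but media is not rendered yet.
      this.tentativeSdp = answer.sdp;
      return "tentative";
    }
    // The user accepted: this answer is authoritative.
    this.finalSdp = answer.sdp;
    return "final";
  }
}

const tracker = new OfferTracker(3);
console.log(tracker.onAnswer({ seq: 3, sdp: "tentative sdp", moreComing: true })); // tentative
console.log(tracker.onAnswer({ seq: 3, sdp: "final sdp" })); // final
console.log(tracker.onAnswer({ seq: 3, sdp: "late duplicate" })); // ignored
```

This addresses Stefan's concern directly: rendering waits for the final answer, while ICE can run during the time the user takes to decide.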

DanD: Why not implement as a state machine?

Anant: Why can't OK contain SDP?

DanD: How much time does it buy you?

Fluffy: Time is a function of user input

... and also of whether the IP address is protected information

DanD: Maybe browser should delay response

Fluffy: This flag is optional

... this should be part of the hint structure

Harald: User interaction must be between offer/answer regardless

RichT: Maybe we could use the 180 in place

Fluffy: Might confuse forking issue and early media

Harald: If you get back a callee session id that is different, how do you know whether that should result in the same session or a replacement?

Fluffy: This is the same question as whether to allow forking

Fluffy: Don't like passing STUN and TURN credentials

... would rather get an official API from IETF

Eric: Proposed format is not strongly typed

... makes extensibility difficult

Harald: For example whether to provide IP address quickly

... Action: add hint structure to PeerConnection constructor

Fluffy: TURN servers require credentials

... so need to pass them around in JS

Anant: username/password@domain

Richt: Should this be mandatory parameter, or can browser substitute?

Anant: Browser should provide default

Fluffy: Common to force all media traffic through a single TURN server

... option for browser to be configured for TURN server

... ICE will choose best

... Also need application to provide preferred TURN servers

Harald: Google does an HTTP transaction before setting up TURN service

... need to be able to stop serving dead users

... so need a provisioning step

... so this scheme is consistent

... must pass password at start of PeerConnection

Anant: If JS object, then it's in developer control

Fluffy: Label usage is broken

Harald: Thought was that each label was a new RTP session?

... Have need for sending information peer-to-peer for nominating tracks as being in focus

Stefan: Metadata must also be passed as well as label

... for example the meaning of the microphone channel

... need to interop with legacy equipment

DanB: How many things do we want to label?

Fluffy: I want identifiers on the channels

Tim: Should the list of channels in a given track be mutable?

Harald: Track is defined by its properties

DanB: Haven't decided on the definition of a track

Fluffy: ROAP deals with glare

... SIP works on waits

... but can take too much time

... better solution based on random timeouts where larger wins
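One way to read "larger wins": each side puts a random value in its offer, and when two offers cross, both sides compare the values and the side with the smaller one withdraws its offer and answers the other. A minimal sketch under that assumption; `randomTieBreaker` and `resolveGlare` are hypothetical names, and the 32-bit range is an arbitrary choice.

```typescript
type GlareOutcome = "mine-wins" | "theirs-wins" | "retry";

// Random value carried in each offer, in the range [0, 2^32).
function randomTieBreaker(): number {
  return Math.floor(Math.random() * 0x100000000);
}

// Both sides run the same comparison on the two crossing offers, so they
// reach opposite but consistent conclusions without an extra round trip.
function resolveGlare(mine: number, theirs: number): GlareOutcome {
  if (mine > theirs) return "mine-wins"; // keep my offer, expect their answer
  if (mine < theirs) return "theirs-wins"; // drop my offer, answer theirs
  return "retry"; // equal values: both pick new ones and re-offer
}

const a = randomTieBreaker();
const b = randomTieBreaker();
console.log(resolveGlare(a, b), resolveGlare(b, a));
```

Unlike waiting out a timer, which fluffy notes is how SIP handles it, this settles glare as soon as the crossing offers arrive.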

Fluffy: need DTMF for legacy systems

Anant: Should live in a separate track in same stream

Harald: Use case for receiving DTMF?

Fluffy: Yes

Harald: What about analog DTMF?

Fluffy: That is translated by the gateway
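If DTMF travels as its own track, as anant suggests, the usual RTP representation is the telephone-event payload of RFC 4733, where each key maps to a small event code: 0-9 for the digits, 10 for *, 11 for #, and 12-15 for A-D. A small sketch of that mapping; `dtmfEventCode` and `dtmfEventCodes` are illustrative helpers, not a proposed API.

```typescript
// RFC 4733 telephone-event codes: the position in this string is the code.
const DTMF_KEYS = "0123456789*#ABCD";

function dtmfEventCode(key: string): number {
  const code = DTMF_KEYS.indexOf(key.toUpperCase());
  if (key.length !== 1 || code < 0) {
    throw new Error(`not a DTMF key: ${key}`);
  }
  return code;
}

// Convert a dialed string, e.g. a conference PIN followed by #.
function dtmfEventCodes(dialed: string): number[] {
  return dialed.split("").map((key) => dtmfEventCode(key));
}

console.log(dtmfEventCodes("1234#")); // [ 1, 2, 3, 4, 11 ]
```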

Milan: What about SIP tones?

Fluffy: Seems reasonable if easy

RichT: Could this fit into proposal where inject additional media into stream?

Fluffy: Injecting crappy sound is cool, but not a general solution for DTMF

Anant: Way to handle that is to define alternate track types

Francois: How do we integrate ROAP into current API?

Harald: Easy. Just replace sendSDP with sendROAP

Have Al & Chris from Audio WG with us

Al: Two proposals: roc's work and the Web Audio API

Chris: Web Audio API deals with arbitrary media graphs

… we may be interested in basic convolution, equalization, analysis etc. other effects

Chris: Robert's work provided other cases including remote peer to peer

… Chris proposed some code examples of how to integrate with RTCWeb

… spec at https://dvcs.w3.org/hg/audio/raw-file/tip/webaudio/specification.html

… examples at https://dvcs.w3.org/hg/audio/raw-file/tip/webaudio/webrtc-integration.html

Chris: Audio API is implemented but connection with RTCWeb not done yet but confident we could implement this

Anant: What does createMediaStreamSource do ?

Chris: It creates a media stream that can be added to the peer (more to the answer that I missed)

... stream can be one or more channels but defaults to two

Harald: If you have a bunch of microphones in room - are these 3 channels in a stream or ...?

Chris: Can process each stream through a separate filter.

anant: Does it have any idea this is 4 channels?

Chris: probably need something more like createMediaTrack

anant: We see it as we have streams, that have a set of tracks, that have one or more channels

Chris: The connections between the AudioNodes are sort of like a WebRTC track

... have direct ways to get the channels / tracks and combine them into a single stream

... Makes more sense to do these operations on tracks than on streams

anant: Do you have code showing operating on channels instead of tracks

Chris: See https://dvcs.w3.org/hg/audio/raw-file/tip/webaudio/specification.html#AudioChannelSplitter-section

... The splitter and merger can be used to extract the channels from a track or recombine them

anant: Can the channels be represented by JS objects

Chris: no - (liberally interpreted by scribe) more the processing is represented by objects and the connections between are more what RTCWeb thinks of as tracks / channels. The channels are accessed by index number

... can combine with other media, mix, and send to far side

... Dynamo (sp?) did apps that send information over WebSockets that allow people to create music locally using this

anant: how do you deal with codecs / representation?

Chris: linear PCM with floating point using typed arrays
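The splitter/merger pair Chris mentions works on channels addressed by index. As a plain-TypeScript illustration of the same idea on raw float PCM, the sketch below splits interleaved samples (L R L R ...) into one `Float32Array` per channel and merges them back. It is not the Web Audio API, which has its own splitter and merger nodes; `splitChannels` and `mergeChannels` are hypothetical helpers, and interleaved input is an assumption.

```typescript
// Split interleaved PCM (frame by frame: ch0 ch1 ch0 ch1 ...) into one
// Float32Array per channel, addressed by channel index.
function splitChannels(interleaved: Float32Array, channelCount: number): Float32Array[] {
  const frames = Math.floor(interleaved.length / channelCount);
  const channels: Float32Array[] = [];
  for (let c = 0; c < channelCount; c++) {
    const channel = new Float32Array(frames);
    for (let i = 0; i < frames; i++) channel[i] = interleaved[i * channelCount + c];
    channels.push(channel);
  }
  return channels;
}

// Recombine per-channel arrays (all the same length) into interleaved PCM.
function mergeChannels(channels: Float32Array[]): Float32Array {
  const count = channels.length;
  const frames = count > 0 ? channels[0].length : 0;
  const out = new Float32Array(frames * count);
  for (let c = 0; c < count; c++) {
    for (let i = 0; i < frames; i++) out[i * count + c] = channels[c][i];
  }
  return out;
}

const stereo = new Float32Array([0.5, -0.5, 0.25, -0.25]); // L R L R
const [left, right] = splitChannels(stereo, 2);
console.log(Array.from(left), Array.from(right)); // [ 0.5, 0.25 ] [ -0.5, -0.25 ]
console.log(Array.from(mergeChannels([left, right]))); // [ 0.5, -0.5, 0.25, -0.25 ]
```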

... want clean integration with graphics and WebRTC

... most expensive operation is convolution to simulate the sound of a concert hall

… this is implemented multi-threaded

… multiple implementations of different FFTs and such

anant: Processing in JS?

Chris: much of the processing done in native code

… Can create node with custom processing in JS

Cullen: have you looked at echo cancellation?

Chris: not yet but have code from the GIPS stuff

... can use audio tag or video tag and connect with this

Stefan: Have 4 requirements. F13 to F15 and F17

Chris: F13 can do with placement node

… F14 classes RealTimeAudioNode - probably better to make a new AudioLevel node that just does this simple function. This is easy and we plan to do it.

… F15 can use the AudioGain node

… F17 yes, can be dealt with by the mixer

… that happens automatically when you connect multiple things into an input
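Stefan's F14, F15, and F17 map onto three very small signal operations: measuring a level, applying a gain, and summing several inputs into one. Written out over `Float32Array` blocks, they might look like the sketch below; the function names are illustrative, and the native nodes Chris describes are implemented in C++, not like this.

```typescript
// Gain (F15): scale every sample by a constant factor.
function applyGain(input: Float32Array, gain: number): Float32Array {
  const out = new Float32Array(input.length);
  for (let i = 0; i < input.length; i++) out[i] = input[i] * gain;
  return out;
}

// Mixing (F17): sum inputs sample by sample, which is what happens when
// several sources are connected to the same input.
function mix(inputs: Float32Array[]): Float32Array {
  let length = 0;
  for (const input of inputs) length = Math.max(length, input.length);
  const out = new Float32Array(length);
  for (const input of inputs) {
    for (let i = 0; i < input.length; i++) out[i] += input[i];
  }
  return out;
}

// Level (F14): root-mean-square of one block of samples.
function rmsLevel(block: Float32Array): number {
  if (block.length === 0) return 0;
  let sum = 0;
  for (let i = 0; i < block.length; i++) sum += block[i] * block[i];
  return Math.sqrt(sum / block.length);
}

const voice = new Float32Array([0.5, -0.5, 0.5, -0.5]);
const music = new Float32Array([0.25, 0.25]);
console.log(rmsLevel(voice)); // 0.5
console.log(Array.from(mix([applyGain(voice, 0.5), music]))); // [ 0.5, 0, 0.25, -0.25 ]
```

The mixer here sums without clipping or normalizing, so loud inputs can push the result outside [-1, 1]; a real mixer would need to deal with that.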

fluffy: Question about mixing spoken audio to bit 3 of top speakers

Chris: could do this

Harald: Can you create events in JS from detecting things in audio

Chris: could build something to add an event to a level filter or something

... no gate, but have compression that is similar

Doug: How does this compare to ROC proposal with respect to library ?

Chris: roc's proposal has all audio processing in JS

Chris: there is a JS node that allows you to create processing effects in just JS, you can associate an event this way by writing some code to detect a level and trigger an event
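Chris's suggestion, a JS node that watches the level and fires an event, could look roughly like this. It is a hypothetical sketch: the block-by-block `process` call stands in for whatever callback a JS processing node provides, the event names are made up, and using two thresholds (hysteresis) is an added assumption to keep the event from flapping around a single threshold.

```typescript
type LevelEvent = "level-above" | "level-below";

// Hypothetical level detector fed one block of samples at a time.
class LevelTrigger {
  private active = false;
  private readonly onThreshold: number;
  private readonly offThreshold: number;
  private readonly emit: (event: LevelEvent) => void;

  constructor(onThreshold: number, offThreshold: number, emit: (event: LevelEvent) => void) {
    this.onThreshold = onThreshold;
    this.offThreshold = offThreshold;
    this.emit = emit;
  }

  process(block: Float32Array): void {
    let sum = 0;
    for (let i = 0; i < block.length; i++) sum += block[i] * block[i];
    const rms = block.length > 0 ? Math.sqrt(sum / block.length) : 0;
    if (!this.active && rms >= this.onThreshold) {
      this.active = true;
      this.emit("level-above");
    } else if (this.active && rms < this.offThreshold) {
      this.active = false;
      this.emit("level-below");
    }
  }
}

const events: LevelEvent[] = [];
const trigger = new LevelTrigger(0.1, 0.05, (e) => events.push(e));
trigger.process(new Float32Array([0.5, -0.5])); // loud: crosses 0.1
trigger.process(new Float32Array([0.07, -0.07])); // between thresholds: no event
trigger.process(new Float32Array([0, 0])); // silent: drops below 0.05
console.log(events); // [ 'level-above', 'level-below' ]
```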

Chris: simple common audio, EQ, dynamics etc. are very common

… library provides nodes for these

… for specialized processing, have a custom JS node

Harald: concern with JS is worry about latency

Chris: agree and very concerned about latency in games

… for something like guitar to MIDI to audio, need very low latency

Doug: with voice, don't want that lag effect where you have talk over

Chris: JS would add to the latency

… for some things, such as triggering on signal level, latency would not matter as much

… could have latency in the 100 to 150 ms range

fluffy: what sort of latency would we typically see on some EQ

Chris: working on 3.3 ms frames of latency

anant: which API is likely to be accepted?

Doug: plan to publish both as first public drafts (a very mature one and a less mature one)

… It is shipping in nightly builds of WebKit

… If Doug had to bet: since we have a mature spec, something that works well, specs, and an implementation, Doug would guess there's lots of interest in the Web Audio API

… sounds like existing spec can easily be made to meet needs of RTCWeb

Tim: roc code runs on worker thread so latency is far less than 100 ms in most cases

Eric: In resource constrained environment, better to have processing done in native where it can take advantage of hardware resources of the host

Doug: the difference at an overly simplified level is do you do mostly JS with some extensions for native speedup or do you do mostly native with an escape hatch to do things in JS

Chris: processing in a web worker still has dangers, such as garbage collection stalls

… garbage collection stalls can be in excess of 100 ms. Depends on the JS engine

Harald: Are Web Workers stable and widely implemented?

MikeSmith: in all major browsers and has not changed in a long time

… does not mean Hixie won't make changes.

Stefan: should we bring over some requirements to the WG

Doug: Send us the minutes

anant: Need to make sure the representations of the audio can be the same and we can make this work together (media streams in one need to match up with the other)

Chris: have similar problem linking to audio tag

Doug: Good to have a doc on how to bridge gaps between the two and make it easy for someone authoring to these two things

Chris: hopefully have concrete implementation where we can link this together with PeerConnection over upcoming months

... have working code in WebKit. GNOME GTK port has working code. Can share code with Mozilla to help move the code in

… Bindings of IDL and such will be some tedious work but a tractable problem

Chris: the DSP code is C++ code

… on Mac can use Accelerate framework

… Convolution use intel mpl, have ffpt gmpl, have FFmpeg FFT, call Apple's.

Harald: Have fair picture of where we are. Thank you. When getting specs settled down, can use this. Hope things finished on right schedule

Doug: having a concrete proposal from roc will help resolve that

… pace will accelerate dramatically

… will keep coordinating

Adam (Ericsson): Prototype implementation has been out for a while

Adam: We have a MediaStream implementation, getUserMedia with a dialog, PeerConnection which currently opens a new port for every new media (no multiplexing)

... We could do a demo, perhaps

anant: any particular challenges so far?

Adam: with the GStreamer backend, hooked up directly with the video source in WebKit. This means we cannot branch out from this pipeline to go in other directions

fluffy: has anyone done ICE? caps negotiation; DTLS, SRTP? How far along on these?

adam: using libnice for ICE, based on GObject, part of the Collabora project

... no encryption or renegotiation

... prioritized codecs that we need to test with: using Motion-JPEG, H.264, Theora

anant: are you looking to implement your prototype from a browser perspective?

adam: yes

stefan: also looking to interoperate with internal SIP systems

Harald: public initial release for Chrome in November

... main missing pieces were a few patches waiting to land in WebKit, and some audio hacks to connect a media stream from the PeerConnection into an audio element (a little cheating here and there)

<fluffy> Public estimate of release is in November. Main bits missing were some patches in webkit. Still have audio hack simply putting object from PeerConnection to speakers. Have ICE, have encryption with libjingle. Negotiating crypto keys. Have implementation of DTLS-SRTP but not yet hooked up

Harald: we do have ICE, we do have encryption, using libjingle, and can negotiate crypto keys. Have an implementation of DTLS-SRTP but have not hooked it up into our WebRTC implementation yet

... we are using VP8 for video codec, and using tons of audio codecs

... not hooking up Opus until we know what the IPR implications are

fluffy: SDP?

Harald: using the old version of somewhat SDP proposed earlier

<fluffy> using VP8, waiting for IPR clarification before adding OPUS

Harald: chrome on desktop only, not working on android yet

prefixing?

Harald: yes, -webkit prefix

... patches are landing in the central WebKit repository, as well as Chrome

... interesting trying to keep versioning straight amongst all these repos

<juberti> i think the bulk of the webrtc code is in the embedder section of webkit, so not directly included in what apple would use

On to tim, for mozilla

Tim's slides: WebRTC implementation status in Firefox

tim: slides are similar to those presented in Quebec

... waiting for reviews before we land new libcubeb backend

... roc has an implementation of an audio API that plays silence, which is progress since last update

... made more progress on the actual code

... list of open bugs on slide, using Google WebRTC code drop. Work on integration into that codebase

... no longer doing an add-on, doing a branch of Firefox instead

... camera support working on Linux and Mac, build system issue preventing Windows for now

... DTLS, SRTP are all getting started, moving echo cancellation to hardware etc. No patches but hopefully soon

... Q1 2012 for a functional test build

???: thought about mobile?

tim: we have, number of challenges for mobile. no great audio API on mobile, not low latency. need help from google before we can build something people can actually use

Harald: we (google) can't have a low latency API without low latency support from android either

tim: we will probably hook up with a sound API initially (a demo to show that it's possible)

opera: different timeline. We are pushing getUserMedia separately from PeerConnection. A labs build that implements getUserMedia is out there; timeline to implement PeerConnection is not in the next 6 months

DanD: New agenda topic, seeking feedback on an open issue

... boils down to notifications.

DanD: You want to be called on your browser but your browser is not up and running.

... Want to understand how big of a need this is.

... Need a platform-independent, commonly supported push notification framework.

... How does such a push notification reach a browser that isn't running, and how does it start the browser and the correct web application?

... If this is solved somewhere else in W3C, we need to have a note that references it.

... May need something in the API to indicate if you want to send such a notification when you try to start a session.

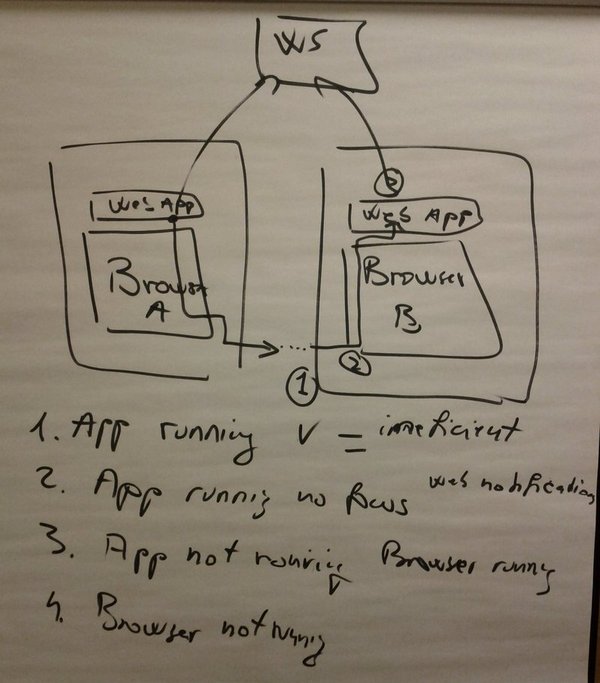

[DanD draws diagram on the white board (Fig 4).]

Fig 4 - Incoming notifications

anant: Essentially related to the open question from yesterday: do we allow incoming calls even when the user is not on a particular website, but is online?

... Can we require the web app to be running in some form or another?

DanD: Want it to work even if it's not running.

anant: Actually, I would advise against that.

... I want to be in a state where I don't want to receive calls.

DanD: But you set up your browser to receive notifications.

anant: But that's the same as always being signed into gmail. It's an explicit user action.

fluffy: When you have a cellphone and you turn it on, it will always accept incoming calls.

... I'm not suggesting the model of the past is the model of the future, but I think we're moving more and more towards devices that are always on.

anant: It's easy to build automated stuff on the web, so you're going to get in trouble with spam.

... Just a note of caution.

francois: I don't get the difference between having a setting in the browser and running a web app.

DanD: This is why I was drawing this diagram.

... If none of these things are running and I send a notification, I want to be able to launch the browser and have contextual information to say that a user of this browser wants to be able to talk to you on app A.

francois: So you're outside of the context of the browser.

DanD: The browser may be in the background, but it might be not running altogether.

... If I'm truly going to implement an incoming call use case, there's no point if I can't answer that call.

RichT: There are four scenarios

... 1) The web app is running in the browser and focused

... 2) The web app is running but not focused

... 3) The browser is open but not the web app

... 4) The browser is not running.
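RichT's four cases, combined with the delivery mechanisms suggested in the discussion (an in-app event, a Web Notification, an OS-level notification that opens the app), can be summarized as a decision function. This is a hypothetical sketch: `ClientState`, `Delivery`, and `chooseDelivery` are illustrative names, and case 4 is deliberately left labeled as the open problem DanD described.

```typescript
interface ClientState {
  browserRunning: boolean;
  appLoaded: boolean; // the web app is open, possibly in the background
  appFocused: boolean;
}

type Delivery =
  | "ring-in-app" // 1) app running and focused
  | "web-notification" // 2) app running but not focused
  | "os-notification-then-open-app" // 3) browser open, app not loaded
  | "unsolved-launch-browser"; // 4) browser not running (open issue)

function chooseDelivery(s: ClientState): Delivery {
  if (!s.browserRunning) return "unsolved-launch-browser";
  if (!s.appLoaded) return "os-notification-then-open-app";
  return s.appFocused ? "ring-in-app" : "web-notification";
}

console.log(chooseDelivery({ browserRunning: true, appLoaded: true, appFocused: false })); // web-notification
```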

anant: You always have to have something, a small daemon listening for notifications.

fluffy: I want to talk about the first one first.

... I think if you have 10 apps all running at the same time, and they all have a TCP connection back to the webserver and they're on a mobile connection

... you have to do a liveness check fairly frequently

... so your battery life is abysmal.

... If you do that in an app on the Apple App Store, they will ban your app and make you use their single global connection.

DanD: I captured that by saying that case 1 is solved, but inefficient.

RichT: We have Web Notifications coming out of a different working group.

... if an app is not focused there should be an event that an incoming call is received, which can trigger a web notification.

anant: That part is missing from the API right now, and we should add that.

... We need an API to register a callback when an incoming call occurs

francois: It could be an event.

anant: Should it receive a PeerConnection object in that callback?

... It depends on whether we want to start doing ICE and reveal the IP address before answering.
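anant's question about when ICE should start can be illustrated with a hypothetical event-style API in which the callback receives a lightweight call object instead of a PeerConnection, so that nothing (in particular no IP address) is revealed until the user answers. This is a sketch under that assumption only: `IncomingCallSource`, `IncomingCall`, and `iceStartedFor` are invented names, and the array merely stands in for creating a real PeerConnection and starting ICE.

```typescript
// Hypothetical sketch: none of these types are part of the WebRTC API.
type CallState = "pending" | "accepted" | "rejected";

interface IncomingCall {
  readonly caller: string;
  state(): CallState;
  accept(): void; // only now would ICE start (and the IP address be exposed)
  reject(): void;
}

type IncomingCallListener = (call: IncomingCall) => void;

class IncomingCallSource {
  private listeners: IncomingCallListener[] = [];
  // Stand-in for "a PeerConnection was created and ICE started for this caller".
  readonly iceStartedFor: string[] = [];

  addListener(listener: IncomingCallListener): void {
    this.listeners.push(listener);
  }

  // Called by whatever signaling channel delivers the incoming offer.
  dispatch(caller: string): IncomingCall {
    let current: CallState = "pending";
    const call: IncomingCall = {
      caller,
      state: () => current,
      accept: () => {
        if (current !== "pending") return; // ignore accept after reject/accept
        current = "accepted";
        this.iceStartedFor.push(caller); // ICE begins only after the user answers
      },
      reject: () => {
        if (current === "pending") current = "rejected";
      },
    };
    for (const listener of this.listeners) listener(call);
    return call;
  }
}
```

Handing the callback a PeerConnection directly, the other option raised, would be simpler but would let ICE begin before the callee has consented.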

RichT: We could trigger an OS level notification, but require the user to click on it to take them to the webapp.

anant: Could we apply the same thing to case 3?

... I was thinking that when the OS notification is generated, you click on it, and the web app is opened in a new tab.

hta: Is that in the spec?

anant: I'm just brainstorming ideas.

hta: I was wondering whether the notification API was part of the callback API you were describing or if it was new things that you think we should put into it.

anant: I think the notification API is open-ended.

hta: That kind of open-endedness is a null specification. It doesn't tell me whether it's going to work or not.

DanD: The moment you rely on something that is OS dependent, how do you know that it works interoperably?

... What is the level of support of this web notification?

RichT: It's still a draft, but it's part of the web, and should work on all devices.

francois: In the 3rd case, the app is not running?

RichT: It's headless, so there's no UI.

DanD: There is still the situation where you don't have any headless thing running.

francois: If nothing is running, how do you know where to dispatch the incoming notification?

DanD: It has to be dispatched to the default runtime to kick start the process of running the web app.

francois: Which app?

DanD: That has to be part of the notification.

hta: An incoming notification to my computer has no business knowing which browser I'm running.

DanD: I agree with you. It should invoke the default web browser.

anant: What if that browser doesn't support WebRTC?

fluffy: Then you need to uninstall it :).

DanD: But I said in the notification I need to know what application to launch to get back into focus.

... otherwise I don't know what JS to load.

hta: Basically you need a URL.

anant: But to generate that notification you're relying on non-web technology.

francois: It means you're listening to notifications that could come from anywhere.

... Maybe it could be restricted to known domain names.

... Otherwise you could flood the user.
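hta's point that the notification must carry a URL, combined with francois's suggestion of restricting senders to known domains, can be sketched as a filter. It accepts a pushed notification only if its URL parses and its origin is on a list the user has already allowed. The `CallNotification` shape and the `acceptNotification` function are hypothetical; only the standard `URL` class is assumed.

```typescript
// Hypothetical payload for a pushed "incoming call" notification.
interface CallNotification {
  appUrl: string; // URL of the web app that should handle the call
  caller: string;
}

// Returns the parsed URL to open, or null if the notification should be dropped.
function acceptNotification(
  n: CallNotification,
  allowedOrigins: ReadonlySet<string>,
): URL | null {
  let url: URL;
  try {
    url = new URL(n.appUrl);
  } catch {
    return null; // malformed URL: nothing to launch
  }
  // Only origins the user has previously allowed may wake the device.
  return allowedOrigins.has(url.origin) ? url : null;
}
```

Filtering on the full origin (scheme, host, and port) rather than on a bare domain name is a design choice of this sketch, not something the group agreed on.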

Stefan: I think you could register your web application with web intents to be notified.

... Something is left running even if you close the browser.

... To get from the server side to the browser we have Server-Sent Events and WebSockets, so that part is solved.

... Some part of the device must be awake and listening for pushed events.

hta: Some devices like Chromebooks don't have the concept of browsers not running.

fluffy: If you work in a web framework that's generic, you want to be able to optimize it for lower power situations.

Stefan: I think web intents is similar to this notification idea. You actually rely on the OS to wake up your platform, but don't specify how it's done.

DanD: The only reason I wanted to have this discussion was not to solve it,

... but to understand the reasoning: if it's a technology we have to support, what the options are, and what the dependencies are for future work.

Vidhya: Is one of the conclusions we're coming to that we need an application ID associated with the notification?

francois: No.

Stefan: We haven't gone into that level of detail.

DanD: We don't need an application ID; we need a way for the end parties to register with a web server.

... There is some sort of a presence notion.

... How do you convey, "I know where you were registered last time, how do I send you an alert?"

... If I go to Facebook, I know who my friends are, but I don't care what application they use, as long as they're registered with a service that will do the handshake.

... It's not that web app A will send the notification, but there will be a mediation framework that optimizes the notifications like fluffy said and packages them to send the results

... to determine if you need the user or the application or you need both.

Vidhya: I feel uneasy because that ties you to a specific server. This should be open.

DanD: I'll give you an exact use case. I called my mom from my browser.

... She was on a physical phone, she hung up, and then she wants to call me back.

... Where is she going to call?

hta: It's not at all clear that she should be able to.

fluffy: We have this odd tradeoff between one connection per thing and one connection for everything you'd ever want to connect to.

... There are different levels you can aggregate on, from one connection per site to only one connection total.

... They all make me very uncomfortable.

Vidhya: Once I got my iPad I noticed certain things that I couldn't do without an iPad or without being connected to Apple, and this was supposed to be web technology.

RichT: I don't think it's tied to a particular server.

... Browser B has established a connection to a provider, and the provider knows where the browser is.

... The message is received with a particular context, and the context decides what to do.

... I think it's just going to be a process of that out-of-band channel.

DanD: It has one big assumption: that the browser is up and running.

RichT: If the browser isn't running, but we're still running a context, we're fine.

... A JS context would provide the same functionality.

DanD: I'd like to learn more about this headless thing.

... From what I'm hearing, you're saying it covers all the scenarios.

... It goes back to fluffy's point that these things may not be efficient.

francois: What is not efficient may be the way that you push the notification.

... Which is why I was talking about Server-Sent Events, which is an ongoing draft.

... It's precisely meant for mobile devices.
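For reference, Server-Sent Events deliver pushes as a plain-text event stream over one long-lived HTTP response. The sketch below parses that wire format in simplified form: `data:` lines are joined with newlines, `event:` sets the type (default `message`), `id:` sets the last event ID, a blank line dispatches the event, and lines starting with `:` are comments, which servers commonly send as keep-alives. It ignores `retry:` and is not a conforming implementation. The `incoming-call` event name used when testing it is hypothetical.

```typescript
interface SseEvent {
  type: string;
  data: string;
  lastEventId: string;
}

// Simplified parser for the text/event-stream format (no "retry" handling).
function parseEventStream(stream: string): SseEvent[] {
  const events: SseEvent[] = [];
  let data = "";
  let type = "";
  let lastEventId = "";
  for (const line of stream.split(/\r\n|\r|\n/)) {
    if (line === "") {
      // Blank line: dispatch the buffered event if any data was received.
      if (data !== "") {
        events.push({ type: type || "message", data: data.slice(0, -1), lastEventId });
      }
      data = "";
      type = "";
      continue;
    }
    if (line.startsWith(":")) continue; // comment line, e.g. a keep-alive
    const colon = line.indexOf(":");
    const field = colon === -1 ? line : line.slice(0, colon);
    let value = colon === -1 ? "" : line.slice(colon + 1);
    if (value.startsWith(" ")) value = value.slice(1); // one leading space is dropped
    if (field === "data") data += value + "\n";
    else if (field === "event") type = value;
    else if (field === "id" && !value.includes("\0")) lastEventId = value;
    // Other fields are ignored in this sketch.
  }
  // An unterminated final event (no trailing blank line) is discarded.
  return events;
}
```

Because all pushes share one connection, the liveness cost fluffy raised is paid once per stream rather than once per event.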

DanD: The conclusion is we have a need, we may have solutions, and we need to capture how we can deal with these scenarios.

... I'm trying to understand if there's anything we need to put in the API to indicate if I want to send notifications.

anant: I was saying we need a callback for receiving them.

francois: Is that up to the app?

DanD: I'm done.

Stefan: I think we need to describe the use case.

DanD: I'd like to see some links to some of the works on IRC.

anant: Web notifications is still in editor's draft, so they're even farther behind than we are.

<francois> Server-Sent Events

<francois> Web Notifications (exists both as public working draft and editor's draft)

RichT: Should notifications be explicitly asked for, or something that is always done?

... At the moment it's going to be an extra thing that developers do voluntarily, but perhaps it has to be implicit.

DanD: From what I understand there's nothing that has to be built into the browser to receive notifications.

anant: Web notifications needs to be implemented in the UA to be useful.

DanD: So the work that is planned for web notifications is the hook that is needed.

anant: I think they are a fairly good match.

hta: I think that the next thing that needs to happen is that someone needs to look at these mechanisms and try to build what we need.

... If that covers the use cases, then we're done.

... If not, we have to take note of that.

Stefan: Are you volunteering DanD?

DanD: I don't have a working demo.

hta: I don't think you need a working demo of WebRTC, just to be able to get web notifications going.

Stefan: Or just look at the specs and see if they do what we need.

DanD: I can definitely look into that.

RichT: If web notifications were to work, you'd click this thing and get back into the tab, and the tab would then show the normal accept UI.

... Should we have a way to do this in one step?

hta: That depends on how much richness you're going to have in the notification.

anant: You may need to be able to refuse, pick cameras, check your hair, etc.

fluffy: When you're trying to filter spam, the consent message itself can deliver the spam.

anant: You definitely have to think about spam.

fluffy: I don't know the answer, but it's a great point.

RichT: It's not perfect, but it does allow the focus to go back naturally for the webapp.

anant: For the good calls, but we want to separate them from the bad calls.

hta: We've gotten through some fairly hefty discussions.

... We have a few action items that are reflected in the minutes, and some that are not,

... including an action item to come up with a specific proposal for what a track is.

anant: One of the editors.

hta: We did not get around to saying how tracks and MediaStreams map onto RTP sessions and all that.

Stefan: That's for the IETF.

hta: As long as they know what things we think they should be mapping on to, they might have a better chance of hitting them.

... In two weeks it's Taipei, and we'll revisit some of these issues at the IETF meeting.

... What other things have we forgotten?

fluffy: Just putting my IETF chair hat on, what things are useful that came out of this meeting?

... We're okay with this ROAP model?

... We seem to be okay with DTLS-SRTP; we're still discussing permission models.

... We talked about codecs.

... The long-term permission model:

... IETF is working on the assumption that we need the long-term permission model, so that doesn't change.

anant: There's also the issue of identity.

hta: We have raised the concept that buying into a specific identity scheme is probably premature.

anant: Agree.

hta: We seem to think that interfaces that allow us to tie into identity schemes are a good thing when we need them.

fluffy: If that's possible. Did that make it into the minutes?

derf: Yes.

anant: I think the right answer is a generic framework into which you can hook any identity service; it was just motivated by BrowserID.

fluffy: And ekr's draft gives two examples.
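anant's "generic framework" could take the shape of a small plug-in interface: each identity provider registers under its domain and verifies assertions issued in its name, while a call with no assertion still works, just unidentified. This is a hypothetical sketch, not the design in ekr's draft; `IdentityProvider`, `IdentityRegistry`, and the assertion fields are invented for illustration.

```typescript
// Hypothetical plug-in interface; not taken from ekr's draft or any spec.
interface IdentityAssertion {
  provider: string; // domain of the identity provider that issued it
  identity: string; // e.g. "alice@example.org"
  token: string;    // opaque, provider-specific proof
}

interface IdentityProvider {
  readonly domain: string;
  verify(assertion: IdentityAssertion): boolean;
}

class IdentityRegistry {
  private providers = new Map<string, IdentityProvider>();

  // Any identity service can be hooked in by registering under its domain.
  register(provider: IdentityProvider): void {
    this.providers.set(provider.domain, provider);
  }

  // Returns the verified identity, or null if there is none.
  // A missing assertion is allowed: the call simply stays unidentified.
  verifiedIdentity(assertion?: IdentityAssertion): string | null {
    if (assertion === undefined) return null;
    const provider = this.providers.get(assertion.provider);
    return provider !== undefined && provider.verify(assertion) ? assertion.identity : null;
  }
}
```

Assertions from unregistered providers are treated like missing ones here; a real design would need to decide whether that should be an error instead.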

RichT: I was thinking that this could be done with DNS.

fluffy: So you call fluffy@cisco.com?

... I have some browser code for you...

anant: ikran. It's just SIP.

... Facebook may not have their user names in the form of username@facebook.com.

... If we can build a more generic thing, then we can get buy-in from more services.

... It's a balance between how many people we can get to use this service in the short term vs. having something interoperable, where the e-mail address format is much better.

fluffy: I don't think it's the form of the identifier, but it's clear identity systems are rapidly evolving.

... ekr has not claimed that we can have this pluggable identity model,

... but he thinks it's possible and wants to go do the work, and he's already shown it works for two.

anant: But that is very much an IETF issue, so definitely worth discussing in Taipei,

... though there's some overlap with W3C.

fluffy: IETF may say we're very happy to do the comsec security issues, but we don't want to touch how do you display a prompt to ask for a password, etc.?

hta: I thought oauth totally destroyed that distinction.

fluffy: I think it took the power of Lisa to make oauth happen at IETF.

burn: It also needs to blend well with what web developers do.

... If they have to track too many identities, it's a mess.

anant: If I want to write chatroulette.com, I don't want any identity,

... and that should work, but if you want identity, you should be able to get some assurance.

burn: But it should still be able to play well with existing methods of identity on the web.

DanD: But if users say they want the identity of the other party verified, they'll go with whatever solution is most trustworthy.

anant: It depends on how much trust the user has.

... The places where it may not be possible to use existing identity schemes are when both parties don't trust the signaling service.

... Which is where we may not be able to use the existing identity systems.

fluffy: That model is common (see OpenID, etc.).

... I think it's also interesting that in oauth most of the things you're trying to solve can be solved with SAML, but the web hates SAML.

DanD: Most of the problems can be solved with long and short-term permissions.

DanD: you might actually use oauth as a method of permission granting.

Stefan: Any other final business?

hta: Thank you all for coming, and well met at our next meeting.

DanD: When is the next meeting?

hta: After Taipei.

... Is it reasonable to aim for a phone meeting in December?

fluffy: That sounds reasonable. I will be in Australia.

... I'm going to be up very, very late, aren't I?

hta: Somehow I doubt a doodle will end up with a convenient time for Australia.

burn: Shall we take a poll to see how many people are sympathetic?

hta: Okay, so next meeting sometime in the December timeframe.

... At that time we might have more input from the IETF and new public WG drafts.

... We'll continue to make forward progress.

burn: They might not be public drafts, but that's more for convenience and simplicity.

francois: Now that you've published your first public working draft, you'll want to publish public drafts regularly.

DanD: Will the meeting notes be published?

francois: I'll clean them up and send them to the mailing list.

... I took pictures of the diagrams.

anant: I noticed the Audio WG was using hg.

... I'd like to switch to that, if it's not a big deal.

burn: We could put the draft on github.

fluffy: Are you okay with github, Adam?

AdamB: Yeah, yeah. I'm basically in favor of the systems in reverse order of age.

RichT: You'll publish details on the list?

fluffy: This is just where we merge things together before putting them in CVS, but we'll send details.

burn: Public drafts still need to use CVS, right?

francois: Yes, but that's not on your plate. That's internal W3C business, because you don't have access to that CVS area.

hta: The editors will have to sort that out.

[meeting adjourned]