In the past, W3C browser-centric technologies have not adequately addressed the dynamic treatment of media, whether static images, audio, or video. The HTML and DOM specifications have not exposed any properties of the media itself, only of the elements that reference it, such as `<img>` and `<object>`.

A proposed API, the Media Object Metadata specification (still in Editor's Draft), would allow users to access that metadata client-side, and even server-side. It would give them the ability to read, and possibly write, the metadata about a media file, on a temporary or permanent basis.

Metadata is simply information about a particular piece of media, such as video. But there is nothing simple about the implications of where that metadata is stored, and how it is disseminated.

Both HTML 5 and SVG (Scalable Vector Graphics) 1.2 include `<video>` elements, though they use different models. But neither model exposes the full richness of a video's metadata; the Media Object Metadata specification would define the interface for doing so. It would allow author and end-user access to both intrinsic and extrinsic metadata, and would fill a looming gap in standardized methods.

Because metadata can come in many forms, from binary to XML, open and closed, structured and loose, the methods and properties that expose that metadata must be as flexible as possible. At this stage, individual metadata formats would not be defined, and implementors would have to decide which to support. Hopefully, this will change as dominant metadata formats emerge, and it would be best if those formats were open and standardized.

Metadata that describes specific properties of the media itself is intrinsic. Examples of intrinsic metadata are file size, duration, and format. Changing this information may corrupt the file; thus it should be treated as immutable, unless modified in a manner appropriate to the format itself with an appropriate editing application. For example, the duration of a video may be shortened by a video-editing program, but merely changing the duration property would not accomplish the same task, or change the file size; it would only cause the media player to misinterpret the content, and may even present a security risk due to buffer overrun.

Some types of intrinsic metadata are relevant only within the presentation context. The bitrate of a progressive video is a transitory effect based on the available bandwidth and whether the file is being accessed locally or remotely. In these cases, altering this metadata is simply meaningless.

Metadata that imparts information about the media, rather than describing properties of the media itself, is extrinsic. Examples of extrinsic metadata are geo-locational data, comments, and author information. Changing this information may result in loss of non-critical information, but in the best case would enhance the information. This is probably the most exciting and accessible kind of metadata for end-users; "tagging" is a form of metadata.
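The distinction between the two kinds of metadata can be sketched as a small data model. This is only an illustration: the interface and property names below (`IntrinsicMetadata`, `ExtrinsicMetadata`, `MediaMetadata`, `addTag`) are assumptions and do not come from the draft specification. The intrinsic record is read-only, while the extrinsic record can be extended by authors or end-users.

```typescript
// Hypothetical shapes; none of these names come from the draft specification.
interface IntrinsicMetadata {
  readonly fileSizeBytes: number;
  readonly durationSeconds: number;
  readonly format: string;
}

interface ExtrinsicMetadata {
  tags: string[];
  comments: string[];
  author?: string;
  location?: { latitude: number; longitude: number };
}

class MediaMetadata {
  readonly intrinsic: Readonly<IntrinsicMetadata>;
  readonly extrinsic: ExtrinsicMetadata;

  constructor(intrinsic: IntrinsicMetadata, extrinsic: ExtrinsicMetadata) {
    // Freeze a copy of the intrinsic record so a stray runtime write cannot alter it.
    this.intrinsic = Object.freeze({ ...intrinsic });
    this.extrinsic = extrinsic;
  }

  // Extrinsic metadata is writable: adding a tag enriches the description
  // without touching the media data itself.
  addTag(tag: string): void {
    if (this.extrinsic.tags.indexOf(tag) === -1) {
      this.extrinsic.tags.push(tag);
    }
  }
}

const clip = new MediaMetadata(
  { fileSizeBytes: 1048576, durationSeconds: 90, format: "video/ogg" },
  { tags: ["demo"], comments: [] }
);
clip.addTag("tutorial");
// clip.intrinsic.durationSeconds = 30;  // rejected by the compiler: read-only property
```

Keeping the two records separate makes the mutability rule visible in the type system, which is one possible way an implementation could discourage the kind of unsafe edits described above.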

A common type of extrinsic metadata is annotation, which is contextual information applied to the media.

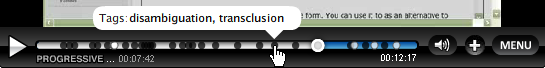

One specific form of annotation is the temporal cue. Temporal cues pertain to a particular point on the timeline of timed media such as video; they may carry specific information regarding that point (such as scene or chapter headings), or be generic identifiers with no content (such as timestamps). Figure 1 shows an example of an interactive timeline visualization that displays an exceptionally large amount of time-related data. In addition to the current state (playing, with the option to pause), it has a marker (and a textual timestamp) showing the current position on the timeline relative to the total video length, the percentage of the content stream that has been downloaded so far (the blue bar), and two different types of temporal markers (light and dark circles). Absent, but commonplace, is an indicator of the estimated time until the file has finished downloading based on the current bitrate.


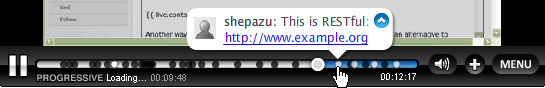

Figure 2 shows a traditional metadata "tag" on a particular timestamp. Hovering the pointer over the dark circle reveals a tooltip with all the tags associated with that point. These tags may or may not be associated with a structured ontology or folksonomy. They are typically textual, and often have limited context; they may apply to that timepoint in the video, or may be meant to apply to the video as a whole. Most often, these tags are used for associating concepts to the media in question, and are useful for categorizing, indexing, or searching for that content. Time-specific tags may even be used to search within a video, providing still more functionality and value to that piece of media.
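Searching within a video by time-specific tags could look roughly like the following. The `TemporalCue` shape and the `findCuesByTag` function are hypothetical names chosen for this sketch; no metadata format or API is implied.

```typescript
// Hypothetical model of a temporal cue; the field names are assumptions.
interface TemporalCue {
  id: string;
  timeSeconds: number;
  tags: string[];   // empty for a bare timestamp marker
  label?: string;   // for example, a chapter heading
}

// Return the cues carrying a given tag (case-insensitive),
// ordered by their position on the timeline.
function findCuesByTag(cues: TemporalCue[], tag: string): TemporalCue[] {
  const wanted = tag.toLowerCase();
  return cues
    .filter((cue) => cue.tags.some((t) => t.toLowerCase() === wanted))
    .sort((a, b) => a.timeSeconds - b.timeSeconds);
}

const sampleCues: TemporalCue[] = [
  { id: "c3", timeSeconds: 125, tags: ["interview", "Paris"] },
  { id: "c1", timeSeconds: 0, tags: [], label: "Opening" },
  { id: "c2", timeSeconds: 42.5, tags: ["paris", "skyline"] },
];

// Matches c2 (42.5 s) and c3 (125 s), in timeline order.
const parisCues = findCuesByTag(sampleCues, "paris");
```

A player could then offer each matching cue as a seek target, which is the "search within a video" use described above.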


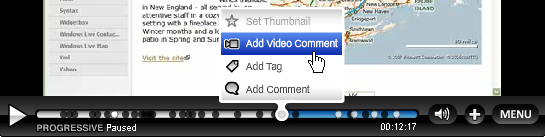

Figure 3 shows a similar tooltip, but the content is a complex comment with a hypertext link. Normally, such information is not used for categorizing the video, but merely serves as an extraneous remark or opinion, or even as a threaded dialog between end-users.


Figure 4 shows an example of an interface used for annotating a particular point in the timeline. In this instance, it offers tags, comments, and video comments; however, it could associate any timestamped or synchronized reference, including timed text (closed captioning or karaoke lyrics), raster images, audio commentary, music, or any other supported media. Audio or video annotations could be included by link reference or by direct embedding, depending upon the format and the use case.


Any of these marked timepoints could, in theory, be the target of a link, assuming the structure of the metadata and the User Agent support such functionality. This would be useful, since a video's duration may be changed, which would break any timeline-based links, while metadata-based links would remain robust. A metadata-based link could also apply to multiple videos that are marked up in a predictable manner, such as instructional material with consistent steps or chapters, obviating the need to customize a linking structure. Embedded metadata could easily be exposed outside the video file itself, such as a linked list of keypoints in a surrounding HTML page.
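The robustness of metadata-based links can be illustrated with a minimal sketch. The `Keypoint` shape, the `resolveKeypoint` function, and the identifiers `intro` and `step-2` are all hypothetical. The sketch assumes that an editing tool rewrites the embedded keypoints when the video is trimmed.

```typescript
// Hypothetical keypoint record embedded in a video's metadata.
interface Keypoint {
  id: string;
  timeSeconds: number;
  title: string;
}

// Resolve a link that names a keypoint rather than a raw time offset.
function resolveKeypoint(keypoints: Keypoint[], keypointId: string): number | undefined {
  for (const kp of keypoints) {
    if (kp.id === keypointId) {
      return kp.timeSeconds;
    }
  }
  return undefined; // the video does not carry this keypoint
}

const originalCut: Keypoint[] = [
  { id: "intro", timeSeconds: 0, title: "Introduction" },
  { id: "step-2", timeSeconds: 95, title: "Step 2" },
];

// Assumption: 20 seconds were trimmed from the introduction,
// and the editing tool updated the embedded keypoints accordingly.
const trimmedCut: Keypoint[] = [
  { id: "intro", timeSeconds: 0, title: "Introduction" },
  { id: "step-2", timeSeconds: 75, title: "Step 2" },
];

const beforeEdit = resolveKeypoint(originalCut, "step-2"); // 95
const afterEdit = resolveKeypoint(trimmedCut, "step-2");   // 75
```

A link to the fixed offset of 95 seconds would land 20 seconds too late in the trimmed cut, whereas the link to `step-2` still resolves to the start of that step. The same identifier would also work across any set of videos marked up with consistent keypoint names.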

Currently, this metadata is exposed only through the predefined interfaces and controls offered by the format, and only insofar as the format's API allows. This inherently limits what a creative content author can do with this information, and the manner in which this information can be accessed by the end-user. The Media Object Metadata specification would allow for much more diverse and rich interfaces for that data, aligning it more closely with the architecture of the Web.

Another form of annotation is locational markers, similar to MPEG-7 Shape Descriptors. Locational markers serve to pinpoint or outline particular areas within the frame of the video, and may or may not apply to particular points in time; for many videos, such as video-blogs, the subject rarely moves, and a simple outline could remain in place through the entire duration of the video. Like time-based markers, they can bear any kind of related information, such as tags, comments, or links.

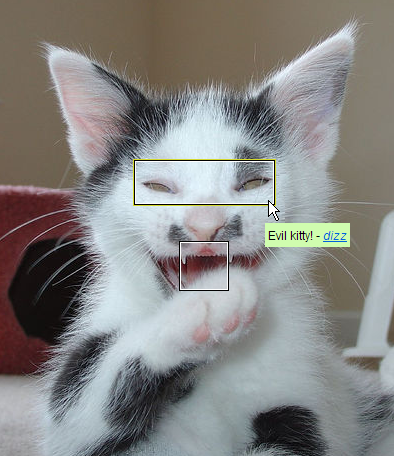

Figure 5 shows a locational marker in a popular photo-sharing service, revealed as a comment in a hovering tooltip. This could easily be supported in a video format and User Agent as well, created by either manual or automatic means, or a combination of both; an edge-detection algorithm could be applied to find objects, and those outlines could be selectively annotated by end-users. The shape itself could be in the SVG format, allowing rich interactivity.

Figure 5: Location-specific annotations
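A locational marker that may or may not be bound to a time range could be modeled as follows. This sketch simplifies the outline to a rectangular bounding box expressed in fractions of the frame; a fuller implementation might store an SVG shape instead. The `LocationalMarker` shape and the `markersAt` function are assumed names for illustration only.

```typescript
// Hypothetical locational marker. Coordinates are fractions of the frame
// (0 to 1), an assumption made so the marker survives rescaling.
interface LocationalMarker {
  id: string;
  x: number;
  y: number;
  width: number;
  height: number;
  startSeconds?: number; // omitted: applies from the start of the video
  endSeconds?: number;   // omitted: applies until the end of the video
  comment: string;
}

// Find the markers under a pointer position at a given playback time.
function markersAt(
  markers: LocationalMarker[],
  px: number,
  py: number,
  timeSeconds: number
): LocationalMarker[] {
  return markers.filter((m) => {
    const inTime =
      (m.startSeconds === undefined || timeSeconds >= m.startSeconds) &&
      (m.endSeconds === undefined || timeSeconds <= m.endSeconds);
    const inBox =
      px >= m.x && px <= m.x + m.width &&
      py >= m.y && py <= m.y + m.height;
    return inTime && inBox;
  });
}

const frameMarkers: LocationalMarker[] = [
  // A video-blog subject who stays in place for the whole video.
  { id: "speaker", x: 0.3, y: 0.2, width: 0.4, height: 0.6, comment: "The presenter" },
  // An overlay that is visible only between 10 and 20 seconds.
  { id: "logo", x: 0.8, y: 0.05, width: 0.15, height: 0.1,
    startSeconds: 10, endSeconds: 20, comment: "Sponsor logo" },
];

const underPointer = markersAt(frameMarkers, 0.5, 0.5, 5); // the "speaker" marker only
```

The optional time fields cover both cases described above: a static outline that persists for the entire duration, and a marker tied to particular points in time.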

An interesting application of temporal and locational metadata would be shapes outlining particular individuals within a video, tied in with FOAF (Friend-of-a-Friend) information, which could describe the relationships of the people in a rich way, and definitively associate those individuals with other media resources (such as other movies or photos) in a loosely-coupled, distributed way.

Both content creators and end-users can avail themselves of any of these annotation options, but only if the metadata is embedded in the media is it portable. Both video-editing applications and presentation forums (such as Web sites or video-viewing software) would need to interoperate and expose compatible APIs for these metadata to be useful along the entire lifecycle of the video.

The manner in which metadata is consumed also opens new possibilities. End-users may choose to filter the metadata by its provenance, such as the author of the comment or timestamp, or the site or application that inserted it. When this metadata resides in the file, that possibility persists whether the file is on some popular Web site or on the user's hard drive.
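Filtering by provenance might be sketched like this. The `Annotation` and `ProvenanceFilter` shapes, the `filterByProvenance` function, and the sample authors and sites are all hypothetical; the sketch only assumes that each annotation records who wrote it and which site or application inserted it.

```typescript
// Hypothetical annotation record carrying its provenance.
interface Annotation {
  text: string;
  author: string; // who wrote the comment or tag
  source: string; // the site or application that inserted it
}

// Each criterion is optional; an omitted criterion matches everything.
interface ProvenanceFilter {
  authors?: string[];
  sources?: string[];
}

function filterByProvenance(
  annotations: Annotation[],
  filter: ProvenanceFilter
): Annotation[] {
  return annotations.filter(
    (a) =>
      (filter.authors === undefined || filter.authors.indexOf(a.author) !== -1) &&
      (filter.sources === undefined || filter.sources.indexOf(a.source) !== -1)
  );
}

const embedded: Annotation[] = [
  { text: "Great shot of the harbor", author: "alice", source: "videosite.example" },
  { text: "Chapter 2 starts here", author: "bob", source: "editor-app" },
  { text: "First!", author: "carol", source: "videosite.example" },
];

// Keep only what trusted authors wrote, regardless of where it was inserted.
const trusted = filterByProvenance(embedded, { authors: ["alice", "bob"] });
```

Because the annotations travel with the file in this model, the same filter would apply whether the file is viewed on a Web site or from local storage.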

The value of media such as video stands to be greatly enhanced by the same principles that have made the Web as a whole successful: interaction, cooperation, portability, and linking. But most video content loses that additional contextual value when it is moved away from the site where the metadata resides. Ideally, metadata should be embedded, not locked away in proprietary walled gardens.

It is only because authors and end-users together have taken an interest in tagging content that it is indexable and searchable. This is why it's desirable for the metadata to persist with the media itself, not within the confines of a single, transitory Web Application. Similarly, the metadata format itself must be an open standard, or the information generated by a distributed effort is locked away in the resource, in a manner usually more legal than technical.

In order for video to be a first-class citizen of the Web, it must bear not only the privileges that come with that status, but also the responsibilities. It must adhere to the principles that fostered the Web, which include openness. An API, and a common set of agreed principles, must expose those possibilities to the greater community.