Position paper for W3C Workshop on Frameworks for Semantics in Web Services

Semantic Multimedia Adaptation Services for MPEG-21 Digital Item Adaptation

Dietmar Jannach, Klaus Leopold, Christian Timmerer‡, and Hermann Hellwagner

‡ …

Chairman of Ad-Hoc Group on MPEG-21 Digital Item Adaptation

Department of Information Technology, Department of Business Informatics and Application Systems

Klagenfurt University, 9020-Austria

{firstname.lastname@uni-klu.ac.at}

Overview

Background and research area

Over the past few years, our research groups have been actively involved in the development of the ISO/IEC MPEG-21 standard, in particular in Part 7, entitled Digital Item Adaptation (DIA) [1][2]. Our recent work in that area ([3],[4]) aims to develop a standardized framework for Semantic Multimedia Adaptation Services.

In the context of Universal Multimedia Access (UMA), next-generation multimedia servers will be capable of intelligently transforming multimedia content according to the users’ context before sending it over the network. We see the main challenge in this area in the fact that the field is evolving rapidly: new video encoding and compression techniques are being developed, metadata annotations (e.g., MPEG-7, Dublin Core) allow for content-based adaptation, and the heterogeneity of networks and end-user devices is continuously increasing.

As a result of this development, we cannot expect a single software tool to become available in the near future that is capable of performing all possible and required transformations for all kinds of coding formats (e.g., JPEG, JPEG2000, MPEG-1/-2/-4, MPEG-4 AVC/SVC, H.26x, etc.) and for all the different types of user preferences, network conditions, or (end) device capabilities.

In [3] and [4] we therefore propose a framework for multimedia adaptation that solves such complex adaptation tasks by executing an appropriate sequence of much simpler, individual transformation steps on the original content. The main goal of the framework is to enable simple integration of external software components that provide specialized multimedia transformation functionality. Given the specific preferences (e.g., terminal capabilities) of a user, the multimedia server computes and executes appropriate adaptation sequences on the given media. For the construction of these adaptation chains, we propose to use formal, semantic descriptions of the effects of applying an individual function to the content. Based on these precise semantics, the descriptions are then exploited by a knowledge-based reasoner (planner) that composes suitable adaptation chains.

In particular, we propose the use of OWL-S as the representation language for describing adaptation tool semantics in terms of inputs, outputs, preconditions, and effects. Additionally, we use the existing MPEG-7 Multimedia Description Schemes (MDS) and MPEG-21 DIA standards as the shared domain ontology, as these standards already define the set of terms and symbols that can be used in describing the semantics of service execution. The feasibility of technically integrating OWL-S descriptions, the standard Web Service grounding, and the existing MPEG standards has been evaluated in two MPEG Core Experiments (CEs) and documented in an informative Annex of [5].
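To illustrate what an IOPE description combined with a shared vocabulary amounts to, the following Python sketch models a service description as plain data and checks that every predicate it uses is drawn from the shared domain ontology. It is not OWL-S syntax: the class names, the `unknown_terms` helper, and the small vocabulary set (standing in for terms defined by MPEG-7 MDS and MPEG-21 DIA) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

# Hypothetical excerpt of the shared domain ontology; the real MPEG-7 MDS and
# MPEG-21 DIA schemas define many more terms.
SHARED_VOCABULARY: Set[str] = {
    "jpegImage", "width", "height", "color", "horizontal", "vertical",
}


@dataclass(frozen=True)
class Atom:
    """A predicate applied to parameters, e.g. width(newWidth)."""
    predicate: str
    args: Tuple[str, ...]


@dataclass
class ServiceDescription:
    """Plain-data stand-in for an IOPE description (not OWL-S syntax)."""
    name: str
    inputs: List[str]
    outputs: List[str]
    preconditions: List[Atom] = field(default_factory=list)
    effects: List[Atom] = field(default_factory=list)

    def unknown_terms(self, vocabulary: Set[str] = SHARED_VOCABULARY) -> Set[str]:
        """Predicates used in preconditions/effects but absent from the vocabulary."""
        used = {atom.predicate for atom in self.preconditions + self.effects}
        return used - vocabulary


spatial_scale = ServiceDescription(
    name="spatialScale",
    inputs=["imageIn", "oldWidth", "oldHeight", "newWidth", "newHeight"],
    outputs=["imageOut"],
    preconditions=[Atom("jpegImage", ("imageIn",)), Atom("width", ("oldWidth",)),
                   Atom("height", ("oldHeight",))],
    effects=[Atom("jpegImage", ("imageOut",)), Atom("width", ("newWidth",)),
             Atom("height", ("newHeight",)), Atom("horizontal", ("newWidth",)),
             Atom("vertical", ("newHeight",))],
)
print(spatial_scale.unknown_terms())  # set()
```

A description that used a term outside the shared vocabulary, such as a hypothetical `frameRate` predicate, would be reported by `unknown_terms`; checks of this kind are one way a shared ontology can keep independently written tool descriptions interoperable.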

Our expectations/interest in the workshop

We view our work as a real-world use case for the application of automatic service composition based on semantic descriptions. The technical feasibility and the low-level integration into the related MPEG standards were demonstrated in a prototype implementation of the framework.

Nonetheless, acceptance of the approach within MPEG will be hampered as long as OWL-S and SWRL, and in particular the languages for describing execution semantics, are not standardized.

Our aims in participating in the workshop are therefore to:

― get up-to-date insight into current developments, both with respect to the technical infrastructure and with respect to recent trends in the area that may further influence the ongoing standardization efforts in the MPEG-21 community, and

― optionally report on the experiences we gained in our application domain, i.e., what is actually possible, where we needed workarounds, what the limitations of the current approaches are, and so forth.

Technical details

We built a framework that is capable of computing and executing multi-step adaptation sequences based on semantic descriptions of the available transformation operations [3]. We use OWL-S for representing inputs, outputs, preconditions, and effects (IOPE) and a Prolog-based planning engine that interprets these descriptions and produces adequate adaptation plans. For interoperability reasons, we used the existing MPEG standards as the shared domain ontology; these standards define, for instance, the terms that can be used in the IOPE descriptions.

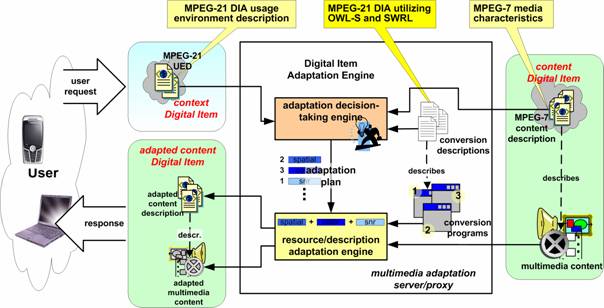

Our framework consists of two major parts, as depicted in Figure 1: the adaptation decision-taking engine and the resource/description adaptation engine. The adaptation decision-taking engine is responsible for finding a suitable sequence of transformation steps (the adaptation plan) that can be applied to the multimedia content. The adaptation plan is then forwarded to the resource/description adaptation engine, which performs the actual transformation steps on the multimedia resource. In parallel, the accompanying (MPEG-7) content descriptions are transformed accordingly.
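The split between the two engines can be sketched in Python as follows, under the assumption that an adaptation plan is simply an ordered list of operation names with arguments. Each registered operation returns both the transformed resource and the updated description, mirroring the parallel transformation of the content descriptions. The dict-based resource and description, `REGISTRY`, and `execute_plan` are hypothetical illustrations, not the framework's actual interfaces.

```python
from typing import Any, Callable, Dict, List, Tuple

Resource = Dict[str, Any]          # stand-in for the media resource itself
Description = Dict[str, Any]       # stand-in for its MPEG-7 description
Step = Tuple[str, Dict[str, Any]]  # (operation name, keyword arguments)
Operation = Callable[..., Tuple[Resource, Description]]


def spatial_scale(res: Resource, desc: Description, width: int, height: int):
    # Transform the resource and update the matching description fields together.
    return dict(res, size=(width, height)), dict(desc, width=width, height=height)


def greyscale(res: Resource, desc: Description):
    return dict(res, mode="L"), dict(desc, color=False)


# Hypothetical registry of integrated adaptation tools.
REGISTRY: Dict[str, Operation] = {"spatialScale": spatial_scale, "greyscale": greyscale}


def execute_plan(plan: List[Step], registry: Dict[str, Operation],
                 res: Resource, desc: Description) -> Tuple[Resource, Description]:
    """Run each planned step in order; raises KeyError for an unregistered tool."""
    for name, kwargs in plan:
        res, desc = registry[name](res, desc, **kwargs)
    return res, desc


# A plan as the decision-taking engine might hand it over.
plan = [("spatialScale", {"width": 320, "height": 240}), ("greyscale", {})]
res, desc = execute_plan(plan, REGISTRY,
                         {"size": (640, 480), "mode": "RGB"},
                         {"width": 640, "height": 480, "color": True})
print(res)   # {'size': (320, 240), 'mode': 'L'}
print(desc)  # {'width': 320, 'height': 240, 'color': False}
```

Keeping the executor ignorant of what each operation does is what allows new tools to be plugged in by registering them, without changing the execution loop.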

Finding a suitable transformation sequence is modeled as a typical state-space planning problem, in which actions are applied to an initial state in order to reach a goal state. In our domain, the start state corresponds to the original multimedia content, which is specified by means of MPEG-7 MDS metadata descriptions. The goal state is an adapted version of the multimedia content that satisfies the users’ context (i.e., preferences, device capabilities, and/or network conditions), which is expressed using MPEG-21 DIA descriptions. Actions are the conversion programs that can be applied to the multimedia content; they are described in terms of inputs, outputs, preconditions, and effects (IOPE) using OWL-S together with SWRL.
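The planning formulation can be illustrated with a breadth-first forward search over states represented as sets of facts, using STRIPS-style actions with precondition, add, and delete sets. This Python sketch is not the authors' Prolog-based planner and makes simplifying assumptions: actions are pre-grounded with concrete values rather than parameterized, and the explicit delete sets are an addition of this sketch, since the IOPE descriptions in this paper list only effects.

```python
from collections import deque
from typing import FrozenSet, List, NamedTuple, Optional, Tuple

Fact = Tuple[str, object]   # e.g. ("width", 640)
State = FrozenSet[Fact]


class Action(NamedTuple):
    name: str
    pre: FrozenSet[Fact]     # facts that must hold before the action
    add: FrozenSet[Fact]     # facts that hold afterwards
    delete: FrozenSet[Fact]  # facts that no longer hold afterwards


def plan(start: State, goal: FrozenSet[Fact], actions: List[Action]) -> Optional[List[str]]:
    """Breadth-first forward search; returns a shortest action sequence or None."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:              # every goal fact holds
            return steps
        for action in actions:
            if action.pre <= state:    # action is applicable
                successor = (state - action.delete) | action.add
                if successor not in visited:
                    visited.add(successor)
                    frontier.append((successor, steps + [action.name]))
    return None


start = frozenset({("width", 640), ("height", 480), ("color", True)})
goal = frozenset({("width", 320), ("height", 240), ("color", False)})
actions = [
    Action("spatialScale(640,480,320,240)",
           pre=frozenset({("width", 640), ("height", 480)}),
           add=frozenset({("width", 320), ("height", 240)}),
           delete=frozenset({("width", 640), ("height", 480)})),
    Action("greyscale",
           pre=frozenset({("color", True)}),
           add=frozenset({("color", False)}),
           delete=frozenset({("color", True)})),
]
result = plan(start, goal, actions)
print(result)  # ['spatialScale(640,480,320,240)', 'greyscale']
```

Breadth-first search returns a plan with the fewest steps but explores the state space exhaustively; a practical planner, such as the Prolog-based engine used in the framework, would typically work with parameterized actions instead of enumerating every grounded variant.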

Figure 1: Knowledge-based multimedia adaptation framework [6].

The following simplified example shows the description of the start state and the goal state of a multimedia adaptation problem in which an image has to be spatially scaled and its color has to be removed. Note that, for the sake of readability, we use an informal notation rather than the internal OWL-S representation.

The start state, i.e., the MPEG-7 description of the existing multimedia content, can be described as follows:

jpegImage(http://path/to/image.yuv), width(640), height(480), color(true).

The goal state, i.e., the MPEG-21 DIA description of the user context, can be described as follows:

jpegImage(file://path/to/image.yuv), horizontal(320), vertical(240), color(false).
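One way to make these two informal descriptions concrete is to encode them as sets of (predicate, value) facts; a state then satisfies the goal when every goal fact is contained in it. The encoding and the helper names `unmet_goals` and `is_goal` below are assumptions made for illustration.

```python
# Informal start and goal states from the example, encoded as sets of
# (predicate, value) facts; the encoding is an illustrative assumption.
start_state = {
    ("jpegImage", "http://path/to/image.yuv"),
    ("width", 640), ("height", 480), ("color", True),
}
goal_state = {
    ("jpegImage", "file://path/to/image.yuv"),
    ("horizontal", 320), ("vertical", 240), ("color", False),
}


def unmet_goals(state, goal):
    """Goal facts that do not (yet) hold in the given state."""
    return goal - state


def is_goal(state, goal):
    """A state satisfies the goal when no goal fact is missing."""
    return not unmet_goals(state, goal)


for fact in sorted(unmet_goals(start_state, goal_state), key=str):
    print(fact)
```

For this example all four goal facts are reported as unmet: the start state is phrased with `width`/`height`, whereas the goal is phrased with `horizontal`/`vertical`, and the location and color facts differ as well.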

The following example shows the description of the spatial scaling operation for images based on the IOPE approach. We omit the similar grey-scaling description for the sake of brevity.

Operation: spatialScale

Input: imageIn, oldWidth, oldHeight, newWidth, newHeight

Output: imageOut

Preconditions: jpegImage(imageIn), width(oldWidth), height(oldHeight)

Effects: jpegImage(imageOut), width(newWidth), height(newHeight), horizontal(newWidth), vertical(newHeight)
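The following Python sketch shows how such a parameterized description might be instantiated with the concrete values of the example and applied to a state. As in the informal notation, each fact carries a single value, and image identifiers such as `outImage1` are bound to `imageIn`/`imageOut`. Because the description does not list facts that become false, the sketch assumes that each predicate is single-valued, so an effect replaces any existing fact with the same predicate; `ground` and `apply_operation` are hypothetical helper names.

```python
from typing import Dict, List, Set, Tuple

Fact = Tuple[str, object]

# spatialScale transcribed from the informal IOPE notation above
# (each atom is a predicate plus the name of the parameter it refers to).
SPATIAL_SCALE: Dict[str, List[Tuple[str, str]]] = {
    "preconditions": [("jpegImage", "imageIn"), ("width", "oldWidth"),
                      ("height", "oldHeight")],
    "effects": [("jpegImage", "imageOut"), ("width", "newWidth"),
                ("height", "newHeight"), ("horizontal", "newWidth"),
                ("vertical", "newHeight")],
}


def ground(atoms: List[Tuple[str, str]], binding: Dict[str, object]) -> Set[Fact]:
    """Replace each parameter name by the value bound to it."""
    return {(predicate, binding[param]) for predicate, param in atoms}


def apply_operation(state: Set[Fact], description, binding) -> Set[Fact]:
    """Check the grounded preconditions, then add the grounded effects.

    Assumption: predicates are single-valued, so an effect replaces any existing
    fact with the same predicate (the description has no explicit delete list).
    """
    pre = ground(description["preconditions"], binding)
    if not pre <= state:
        raise ValueError(f"preconditions not satisfied: {pre - state}")
    effects = ground(description["effects"], binding)
    changed = {predicate for predicate, _ in effects}
    return {fact for fact in state if fact[0] not in changed} | effects


state = {("jpegImage", "outImage1"), ("width", 640), ("height", 480), ("color", True)}
binding = {"imageIn": "outImage1", "imageOut": "outImage2",
           "oldWidth": 640, "oldHeight": 480, "newWidth": 320, "newHeight": 240}
new_state = apply_operation(state, SPATIAL_SCALE, binding)
print(sorted(new_state))
```

The resulting state contains jpegImage(outImage2), width(320), height(240), horizontal(320), and vertical(240), while the color(true) fact is left untouched for a subsequent grey-scaling step.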

The adaptation framework features s

1. read(http://path/to/image.yuv, outImage1)

2. spatialScale(outImage1, 640, 480, 320, 240, outImage2)

3. greyscale(outImage2, outImage3)

4. write(outImage3, file://path/to/output/image.yuv)
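Steps 2 and 3 of this plan can be illustrated at the pixel level with the Python sketch below, which assumes an already decoded image held in memory as rows of RGB tuples. Nearest-neighbour resampling and the ITU-R BT.601 luma weights are choices made for this sketch; the paper does not specify which algorithms the integrated tools use. The read and write steps are replaced by a synthetic image, because decoding and encoding real image files would require an external library.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]  # rows of RGB pixels (decoded image held in memory)


def spatial_scale(img: Image, old_w: int, old_h: int, new_w: int, new_h: int) -> Image:
    """Nearest-neighbour resampling from old_w x old_h to new_w x new_h."""
    if len(img) != old_h or any(len(row) != old_w for row in img):
        raise ValueError("image size does not match oldWidth/oldHeight")
    return [[img[y * old_h // new_h][x * old_w // new_w] for x in range(new_w)]
            for y in range(new_h)]


def greyscale(img: Image) -> Image:
    """Replace each pixel by its BT.601 luma value, kept as an RGB triple."""
    grey_rows = []
    for row in img:
        grey_row = []
        for r, g, b in row:
            luma = round(0.299 * r + 0.587 * g + 0.114 * b)
            grey_row.append((luma, luma, luma))
        grey_rows.append(grey_row)
    return grey_rows


# Step 1 (read) is replaced by a synthetic 640x480 colour gradient.
out_image1 = [[(x % 256, y % 256, 128) for x in range(640)] for y in range(480)]
out_image2 = spatial_scale(out_image1, 640, 480, 320, 240)  # step 2
out_image3 = greyscale(out_image2)                           # step 3
# Step 4 (write) is omitted; out_image3 is the adapted image.
print(len(out_image3[0]), len(out_image3))  # 320 240
```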

By using the IOPE approach for modeling the functionality of adaptation services, our engine remains flexible: since the core planner operates on arbitrary symbols, new adaptation services can easily be added without changing the implementation. Moreover, IOPE-style descriptions have been shown to be expressive enough for a wide range of problem domains. Another interesting aspect of our problem domain is that the level of detail of the functional descriptions of the available adaptation services can vary. In the example given, each action is an atomic, single-step picture transformation. This fine-grained specification is reasonable in cases where, e.g., open-source transformation software can be used in the adaptation engine. In this scenario, the adaptation chain and the execution plan are composed of API calls to a local media processing library. On the other hand, as newer s

Concluding remarks

The first edition of MPEG-21 DIA, published by ISO/IEC in October 2004, addresses many interoperability issues raised by UMA, i.e., enabling transparent access to (distributed) advanced multimedia content by shielding the user from terminal and network installation, configuration, management, and implementation issues. In other words, it allows users to access multimedia content anywhere, anytime, and with any kind of device. The first amendment to DIA, entitled Conversions and Permissions and scheduled for final approval in July/October 2005, targets, among other things, the description of devices in terms of their supported conversion operations. In this position paper we have indicated how OWL-S/SWRL can be used for this purpose by utilizing the existing MPEG standards as the shared domain ontology.

Discussions on this topic are also taking place on the mailing list

mpeg21-uma@merl.com, which can be subscribed to by sending an email to christian.timmerer@itec.uni-klu.ac.at.

References

3. Jannach, D., Leopold, K., Timmerer, C., and Hellwagner, H.: Toward Semantic Web Services for Multimedia Adaptation. In: X. Zhou, S. Su, M. Papazoglou, M. Orlowska, K. Jeffery (Eds.): Web Information Systems - WISE 2004.

4. Jannach, D., Leopold, K., Hellwagner, H., and Timmerer, C.: A Knowledge Based Approach for Multi-step Media Adaptation. In: Instituto Superior Técnico,

5. Timmerer, C., DeMartini, T., and Barlas, C. (eds.): “Information technology — Multimedia framework (MPEG-21) — Part 7: Digital Item Adaptation, AMENDMENT 1: DIA Conversions and Permissions”, ISO/IEC 21000-7 AMD/1, Final Proposed Draft Amendment 1 (FPDAM/1), January 2005.

6. Jannach, D., Timmerer, C., Leopold, K., and Hellwagner, H.: “A Knowledge-based Framework for Multimedia Adaptation”, to appear in the International Journal of Applied Intelligence, Springer Science+Business Media B.V., 2005.

Other MPEG documents are available at http://www.chiariglione.org/mpeg/ under ‘Hot news’ or ‘Working Documents’. An introduction to MPEG-21, especially to DIA, is also available at http://mpeg-21.itec.uni-klu.ac.at/.