Or a network of Web services

III.2. Input Modalities

Need Grammars: SRGS and InkML

SRGS

<one-of>
  <item>Michael</item>
  <item>Yuriko</item>
  <item>Mary</item>
  <item>Duke</item>
  <item><ruleref uri="#otherNames"/></item>
</one-of>

<one-of>
  <item>1</item>
  <item>2</item>
  <item>3</item>
</one-of>

<one-of>
  <item weight="10">small</item>
  <item weight="2">medium</item>
  <item>large</item>
</one-of>

<one-of>
  <item weight="3.1415">pie</item>
  <item weight="1.414">root beer</item>
  <item weight=".25">cola</item>
</one-of>

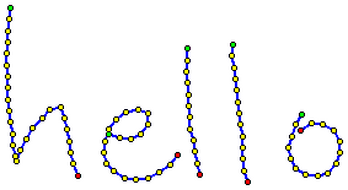
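To make the `weight` attribute concrete, here is a minimal Python sketch that reads the third `<one-of>` above and turns the weights into relative shares. Normalising the weights to sum to 1 is an assumption made for illustration; how a recogniser actually applies weights is platform-specific. The function name `alternative_probabilities` is made up for this sketch.

```python
import xml.etree.ElementTree as ET

# The weighted <one-of> from the slide, as a standalone fragment.
ONE_OF = """
<one-of>
  <item weight="10">small</item>
  <item weight="2">medium</item>
  <item>large</item>
</one-of>
"""

def alternative_probabilities(one_of_xml):
    """Map each alternative's text to its weight divided by the total weight."""
    root = ET.fromstring(one_of_xml)
    # In SRGS an item without a weight behaves like weight 1.0.
    weights = {
        item.text.strip(): float(item.get("weight", "1"))
        for item in root.findall("item")
    }
    total = sum(weights.values())
    return {text: weight / total for text, weight in weights.items()}

probs = alternative_probabilities(ONE_OF)
for text, share in probs.items():
    print(f"{text}: {share:.3f}")
```

With these weights, "small" is favoured five to one over "medium" and ten to one over "large".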

Defining handwritten gestures and grammar: InkML

<ink>

<trace>

10 0 9 14 8 28 7 42 6 56 6 70 8 84 8 98 8 112 9 126 10 140

13 154 14 168 17 182 18 188 23 174 30 160 38 147 49 135

58 124 72 121 77 135 80 149 82 163 84 177 87 191 93 205

</trace>

<trace>

130 155 144 159 158 160 170 154 179 143 179 129 166 125

152 128 140 136 131 149 126 163 124 177 128 190 137 200

150 208 163 210 178 208 192 201 205 192 214 180

</trace>

<trace>

227 50 226 64 225 78 227 92 228 106 228 120 229 134

230 148 234 162 235 176 238 190 241 204

</trace>

<trace>

282 45 281 59 284 73 285 87 287 101 288 115 290 129

291 143 294 157 294 171 294 185 296 199 300 213

</trace>

<trace>

366 130 359 143 354 157 349 171 352 185 359 197

371 204 385 205 398 202 408 191 413 177 413 163

405 150 392 143 378 141 365 150

</trace>

</ink>

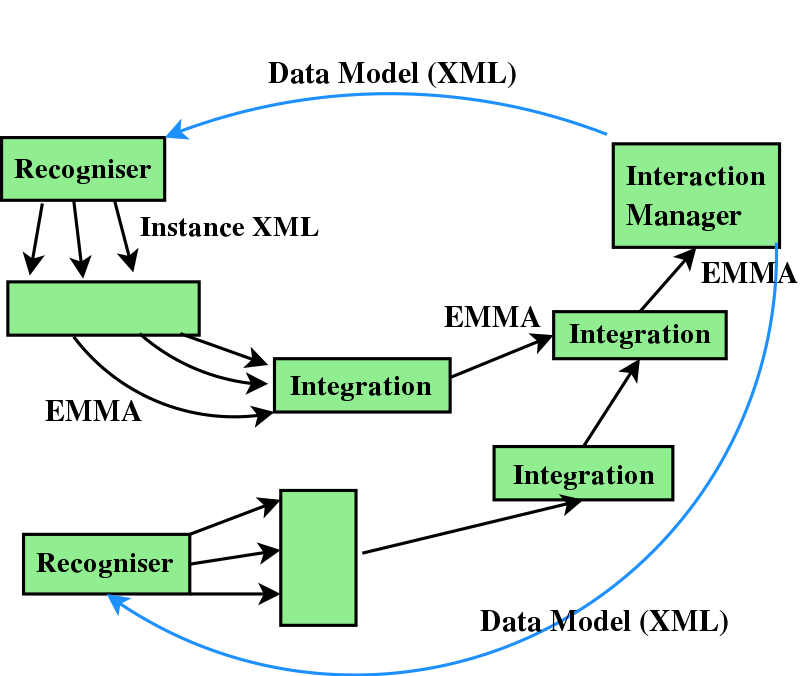
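A minimal Python sketch of how a consumer might read one `<trace>`: it assumes the layout shown on the slide, where the numbers are simply alternating x and y values separated by whitespace. The helper names `parse_trace` and `bounding_box` are made up for this sketch.

```python
def parse_trace(text):
    """Turn whitespace-separated 'x y x y ...' numbers into (x, y) tuples."""
    values = [int(v) for v in text.split()]
    if len(values) % 2:
        raise ValueError("trace has an unpaired coordinate")
    return list(zip(values[0::2], values[1::2]))

def bounding_box(points):
    """Return (min_x, min_y, max_x, max_y) for a list of points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)

# Content of the third <trace> above.
TRACE = """
227 50 226 64 225 78 227 92 228 106 228 120 229 134
230 148 234 162 235 176 238 190 241 204
"""

points = parse_trace(TRACE)
box = bounding_box(points)
print(len(points), "points, bounding box", box)
```

A tall, narrow bounding box like this one is the kind of feature a gesture recogniser could use to tell a vertical stroke from a loop.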

III.3. Annotating and Combining Input

EMMA: annotating input

Goals:

- define a markup language that carries user input information from the recognisers to the interaction manager

- let intermediaries add metadata to the recogniser output

EMMA: example

<emma:emma emma:version="1.0"

xmlns:emma="http://www.w3.org/2003/04/emma#">

<emma:one-of emma:id="r1"

emma:start="2003-03-26T0:00:00.15"

emma:end="2003-03-26T0:00:00.2">

<emma:interpretation emma:id="int1" emma:confidence="0.75" >

<origin>Boston</origin>

<destination>Denver</destination>

<date>

<emma:absolute-timestamp

emma:start="2003-03-26T0:00:00.15"

emma:end="2003-03-26T0:00:00.2"/>

03112003

</date>

</emma:interpretation>

<emma:interpretation emma:id="int2" emma:confidence="0.68" >

<origin>Austin</origin>

<destination>Denver</destination>

<date>03112003</date>

</emma:interpretation>

</emma:one-of>

</emma:emma>
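A minimal Python sketch of what an interaction manager might do with such a document: pick the interpretation with the highest `emma:confidence` and read its application slots. The document below is a shortened copy of the example above; the helper names `q` and `best_interpretation` are made up for this sketch.

```python
import xml.etree.ElementTree as ET

EMMA_NS = "http://www.w3.org/2003/04/emma#"

EMMA_DOC = f"""
<emma:emma emma:version="1.0" xmlns:emma="{EMMA_NS}">
  <emma:one-of emma:id="r1">
    <emma:interpretation emma:id="int1" emma:confidence="0.75">
      <origin>Boston</origin>
      <destination>Denver</destination>
    </emma:interpretation>
    <emma:interpretation emma:id="int2" emma:confidence="0.68">
      <origin>Austin</origin>
      <destination>Denver</destination>
    </emma:interpretation>
  </emma:one-of>
</emma:emma>
"""

def q(name):
    """Qualified name in the EMMA namespace, in ElementTree's {uri}name form."""
    return f"{{{EMMA_NS}}}{name}"

def best_interpretation(emma_xml):
    """Return (id, slots) of the interpretation with the highest confidence."""
    root = ET.fromstring(emma_xml)
    candidates = root.iter(q("interpretation"))
    best = max(candidates, key=lambda el: float(el.get(q("confidence"), "0")))
    # The application-specific children (origin, destination) have no namespace.
    slots = {child.tag: (child.text or "").strip() for child in best}
    return best.get(q("id")), slots

best_id, slots = best_interpretation(EMMA_DOC)
print(best_id, slots)
```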

III.4. Compositing

Making one emma file out of two

- programming language (e.g. Java+DOM)

- XSLT

<xsl:if test="@emma:confidence > 0.4">
  <xsl:copy-of select="."/>
</xsl:if>

- Declarative: SMIL

<par>
  <par>
    <ref id="input1" mode="ink" grammar="select.ink" begin="activateEvent"/>
    <ref id="timeout1" dur="2s" begin="input1.activateEvent"/>
  </par>
  <excl end="timeout2.end">
    <priorityClass peers="pause">
      <ref id="timeout2" end="timeout1.end"/>
      <ref id="speech1" mode="speech" grammar="print.grm" begin="activateEvent"/>
    </priorityClass>
  </excl>
</par>
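The "programming language" option can be sketched in a few lines. This Python version (standing in for Java+DOM) merges the interpretations of several EMMA documents into one `emma:group`, keeping only those above a confidence threshold. The input documents, their `<command>` content, the helper names and the 0.4 threshold (which assumes confidences on a 0 to 1 scale) are all made up for illustration.

```python
import xml.etree.ElementTree as ET

EMMA_NS = "http://www.w3.org/2003/04/emma#"
ET.register_namespace("emma", EMMA_NS)  # serialise with the emma: prefix

def q(name):
    """Qualified name in the EMMA namespace, in ElementTree's {uri}name form."""
    return f"{{{EMMA_NS}}}{name}"

def emma_doc(interp_id, confidence, command):
    """Build a tiny one-interpretation EMMA document (hypothetical content)."""
    return (
        f'<emma:emma xmlns:emma="{EMMA_NS}">'
        f'<emma:interpretation emma:id="{interp_id}" emma:confidence="{confidence}">'
        f"<command>{command}</command>"
        f"</emma:interpretation></emma:emma>"
    )

def composite(docs, threshold=0.4):
    """Copy every sufficiently confident interpretation into one emma:group."""
    merged = ET.Element(q("emma"))
    group = ET.SubElement(merged, q("group"))
    for doc in docs:
        for interp in ET.fromstring(doc).iter(q("interpretation")):
            if float(interp.get(q("confidence"), "0")) > threshold:
                group.append(interp)
    return merged

merged = composite([
    emma_doc("ink1", 0.9, "select"),
    emma_doc("speech1", 0.3, "print"),   # below the threshold, dropped
    emma_doc("speech2", 0.6, "print"),
])
print(ET.tostring(merged, encoding="unicode"))
```

The imperative version is easy to extend with timing rules, which is where the declarative SMIL approach becomes attractive.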

III.5. The Dynamic Properties Framework

The System and Environment (S+E) specification defines an API for accessing system properties, e.g.:

- battery level

- signal strength

- latitude/longitude from GPS

- ambient noise

- user preferences

DPF: example

<html>

<head>

<title>GPS location example</title>

<script type="text/javascript">

<![CDATA[

SystemEnvironment.location.format="zip code";

SystemEnvironment.location.updateFrequency="20s";

]]>

</script>

<script defer="defer" type="text/javascript"

ev:event="se:locationUpdate">

<![CDATA[

var field = document.getElementById("location");

var zipcode = SystemEnvironment.location;

field.childNodes[0].nodeValue = zipcode;

]]>

</script>

</head>

<body>

<h1>Track your location as you walk</h1>

<p>Your current zip code is: <span id="location">(please

wait)</span></p>

</body>

</html>
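The pattern behind this example is a property that notifies its listeners when it changes. A minimal Python sketch of that pattern follows; it is an analogy, not the DPF API. The class `DynamicProperty`, the `page` dictionary standing in for the document and the zip codes are all made up for illustration.

```python
class DynamicProperty:
    """A value that notifies registered listeners whenever it changes."""

    def __init__(self, name, value=None):
        self.name = name
        self.value = value
        self._listeners = []

    def add_listener(self, callback):
        self._listeners.append(callback)

    def update(self, new_value):
        # Only notify when the value really changed, like an update event.
        if new_value == self.value:
            return
        self.value = new_value
        for callback in self._listeners:
            callback(self)


# Stand-in for the page: the text of the element with id="location".
page = {"location": "(please wait)"}

def show_location(prop):
    page["location"] = prop.value

location = DynamicProperty("location")
location.add_listener(show_location)

location.update("02139")   # made-up zip code; triggers the listener
location.update("02139")   # unchanged value; the listener is not called again
print(page["location"])
```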

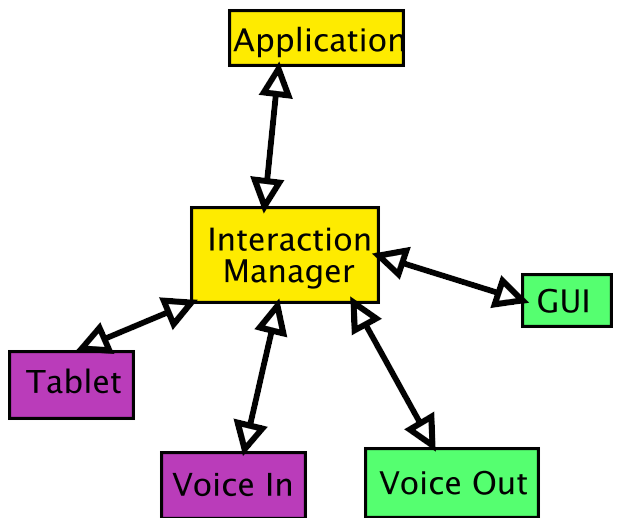

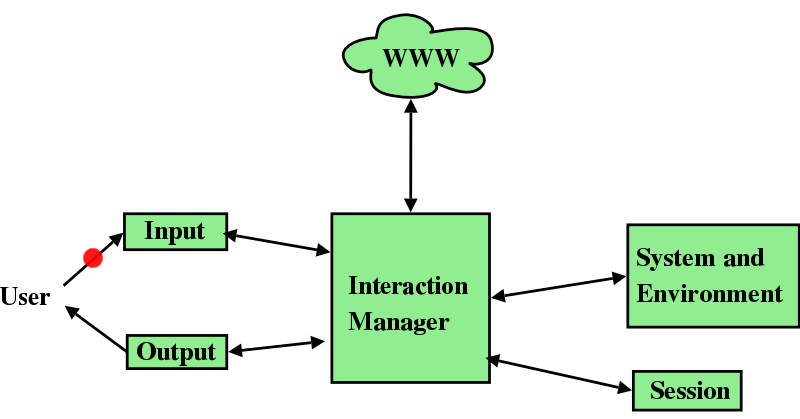

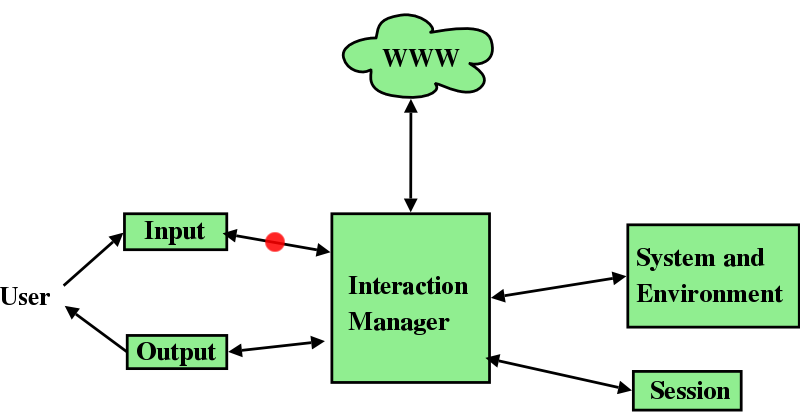

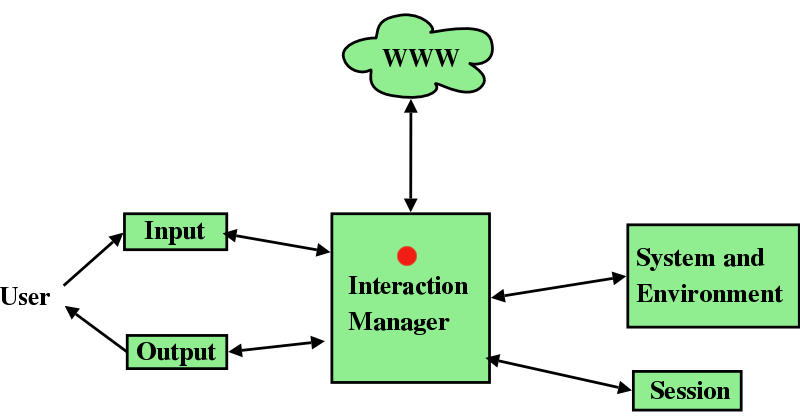

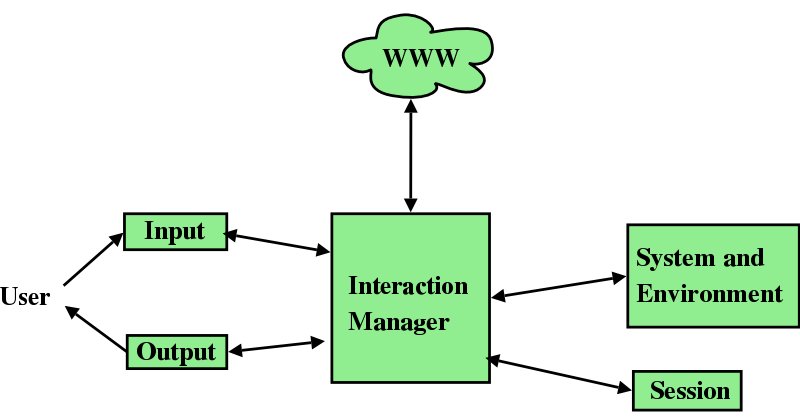

III.6. Interaction Manager

The manager...

- receives the page or application from the Web

- knows what modalities are available or not

- gets information from the S+E and Session components

...

Interaction Manager (2)

...and shapes the interaction accordingly:

- with visual media: shows the page on the screen

- with audio media: presents an application as a dialogue (à la VoiceXML)

sometimes with a little help from the application author...

Part IV: writing multimodal web content

Existing web pages and applications will still work but won't provide:

- modality dependent text

- modality dependent interaction

So extensions will be useful.

- You can already do it in HTML+JavaScript+MID+DPF (see above)

- Declarative markup (better) is appearing: XHTML+Voice, SALT, CSS-MMI

SALT

Speech Application Language Tags

<?xml version="1.0"?>

<vxml version="2.0" xmlns="http://www.w3.org/2001/vxml">

<form>

<field name="stock">

<grammar src="./g_stock.grxml"/>

<help> Please just say stock name. </help>

Please say the stock name.

</field>

<field name="op">

<grammar src="./g_op.grxml"/>

<help> Please just say buy or sell. </help>

Do you want to buy or sell?

</field>

<field name="quantity">

<grammar src="./g_quant.grxml"/>

<help> Please just say number of shares. </help>

How many shares?

</field>

<field name="price">

<grammar src="./g_price.grxml"/>

<help> Please just say price. </help>

What's the price?

</field>

</form>

</vxml>
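A form like this is driven by a simple loop: visit the first field that has no value yet, play its prompt, and store the answer if the recogniser returns one. The Python sketch below is a much simplified version of that loop, using the field names and prompts from the form above. The `recognise` callback and the scripted answers are made up for illustration; help handling and grammars are left out.

```python
# Field names and prompts taken from the form above.
FIELDS = [
    ("stock", "Please say the stock name."),
    ("op", "Do you want to buy or sell?"),
    ("quantity", "How many shares?"),
    ("price", "What's the price?"),
]

def run_form(fields, recognise):
    """Visit unfilled fields in document order until every field has a value."""
    filled = {}
    while len(filled) < len(fields):
        name, prompt = next((n, p) for n, p in fields if n not in filled)
        answer = recognise(prompt)
        if answer is not None:  # None models a failed recognition; retry the field
            filled[name] = answer
    return filled

# Scripted stand-in for a speech recogniser (hypothetical answers).
scripted = iter(["ACME", None, "buy", "100", "12.50"])
result = run_form(FIELDS, lambda prompt: next(scripted))
print(result)
```

The second answer is a failed recognition, so the "op" field is prompted twice before the dialogue moves on.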

XHTML+Voice

<?xml version="1.0"?>

<html

xmlns="http://www.w3.org/1999/xhtml"

xmlns:vxml="http://www.w3.org/2001/vxml"

xmlns:ev="http://www.w3.org/2001/xml-events"

xmlns:xv="http://www.voicexml.org/2002/xhtml+voice"

>

<head>

<title>XHTML+Voice Example</title>

<!-- voice handler -->

<vxml:form id="sayHello">

<vxml:block><vxml:prompt xv:src="#hello"/>

</vxml:block>

</vxml:form>

</head>

<body>

<h1>XHTML+Voice Example</h1>

<p id="hello" ev:event="click" ev:handler="#sayHello">

Hello World!

</p>

</body>

</html>

Focus on CSS-MMI

Extensions to CSS for multimodal interaction

Designing an application's interaction can be viewed as styling it

CSS-MMI: a simple HTML file

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<title>Daily Horoscope</title>

</head>

<body>

<form action="http://example.com/horoscope">

Your star sign?

<input id="sign" type="text" name="sign" />

</form>

</body>

</html>

CSS-MMI: example stylesheet

#sign:focus {

prompt: "What is your star sign?";

grammar: Aries | Taurus | Gemini | Cancer;

reprompt: 1.5s;

}

or per-modality:

@media speech {

prompt: "Do you confirm?";

grammar: yes | yeah {yes} | sure {yes} | no | nah {no}

}
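The `grammar` values above read as a list of alternatives separated by `|`, where a trailing `{...}` appears to give the value returned for that alternative. A minimal Python sketch under that assumption follows; the notation is inferred from the slide, not taken from a specification, and `parse_alternatives` is a made-up name.

```python
import re

# One alternative: a phrase, optionally followed by "{value}".
ALTERNATIVE = re.compile(r"(.+?)\s*(?:\{\s*(.+?)\s*\})?")

def parse_alternatives(grammar):
    """Map each spoken alternative to its value; '{x}' overrides the phrase."""
    mapping = {}
    for alt in grammar.split("|"):
        match = ALTERNATIVE.fullmatch(alt.strip())
        if match is None:
            raise ValueError(f"cannot parse alternative: {alt!r}")
        phrase, value = match.group(1), match.group(2)
        mapping[phrase] = value if value is not None else phrase
    return mapping

confirm = parse_alternatives("yes | yeah {yes} | sure {yes} | no | nah {no}")
signs = parse_alternatives("Aries | Taurus | Gemini | Cancer")
print(confirm)
print(signs)
```

Under this reading, "yeah" and "sure" both submit the value "yes", so the application only ever sees two outcomes.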

Conclusion

- The framework is big!

- But pieces work individually, and can be used elsewhere

- Open standards will make it possible to bring many different disciplines together

Join The Future!