Deborah Dahl, W3C Invited Expert

This is a description of how the Multimodal Architecture and EMMA can be used to support a Smart Hotel Room use case with natural language user interaction.

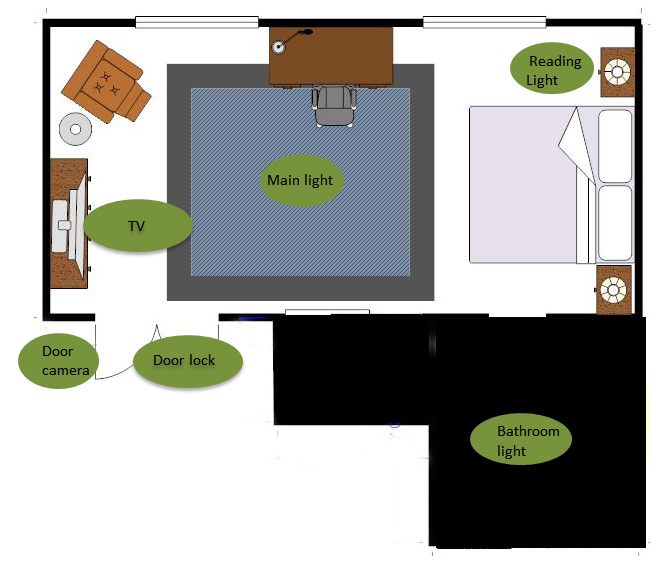

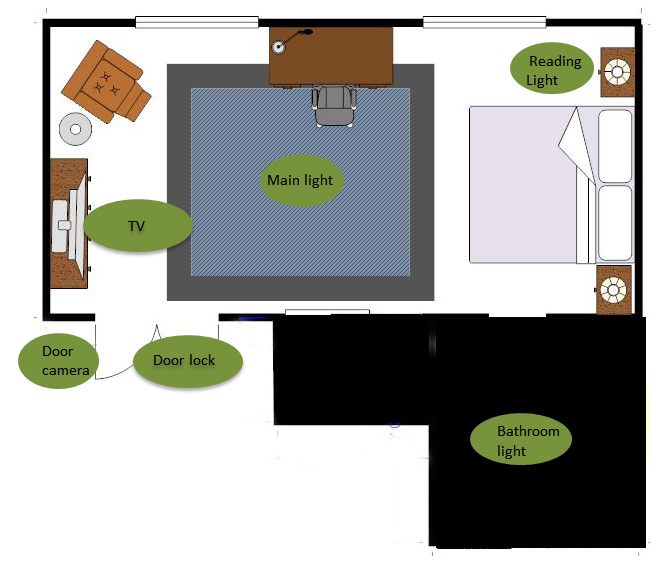

A user arrives at his/her hotel room, which is equipped with a number of connected things, as shown in Figure 1. These include the reading light, the main light, the TV, the door camera, the door lock, and the bathroom light. The user can control these things through a speech interface, using natural language commands. The user interface could be an ambient device such as Amazon Echo, or it could run on a mobile device, either as a speech-enabled web application or as a native application. Alternatively, the user could have a non-speech graphical web or native application on his/her mobile device. For simplicity, we assume that the user interface is part of an ambient system built into the room. This lets us set aside the complexities of device discovery for the purposes of this discussion.

The connected things in the room are Modality Components (MCs) in the MMI Architecture [1]. They are controlled by an Interaction Manager (IM), as defined in the MMI Architecture, based on SCXML [2]. They communicate using MMI Architecture Life Cycle events (listed in the Appendix).
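Every Life Cycle event shares the same envelope: an `mmi:mmi` root element wrapping one event element. That event element carries Source, Target, Context and RequestID attributes. The sketch below builds such an envelope with Python's standard `xml.etree.ElementTree`. It assumes the namespace URI `http://www.w3.org/2008/04/mmi-arch` and the `version="1.0"` attribute used in the MMI Architecture specification's examples. The helper `make_event` and all identifier values are hypothetical.

```python
import xml.etree.ElementTree as ET

# Assumption: namespace URI as used in the MMI Architecture specification's examples.
MMI_NS = "http://www.w3.org/2008/04/mmi-arch"
ET.register_namespace("mmi", MMI_NS)


def make_event(name, source, target, context, request_id, **extra):
    """Build a Life Cycle event (e.g. "StartRequest") inside an <mmi:mmi> root.

    Keyword arguments in `extra` become additional mmi: attributes,
    for example Status="success" on a response.
    """
    root = ET.Element(f"{{{MMI_NS}}}mmi", {"version": "1.0"})
    attrs = {
        f"{{{MMI_NS}}}Source": source,
        f"{{{MMI_NS}}}Target": target,
        f"{{{MMI_NS}}}Context": context,
        f"{{{MMI_NS}}}RequestID": request_id,
    }
    for key, value in extra.items():
        attrs[f"{{{MMI_NS}}}{key}"] = value
    ET.SubElement(root, f"{{{MMI_NS}}}{name}", attrs)
    return ET.tostring(root, encoding="unicode")


# Hypothetical IM-to-light StartRequest, as in step 4 of the walk-through.
print(make_event("StartRequest", "IM", "mainLightMC", "room-ctx-1", "req-4"))
```

Keeping the envelope in one helper means every MC, whether it wraps a light, a door lock or a TTS engine, sees the same message shape.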

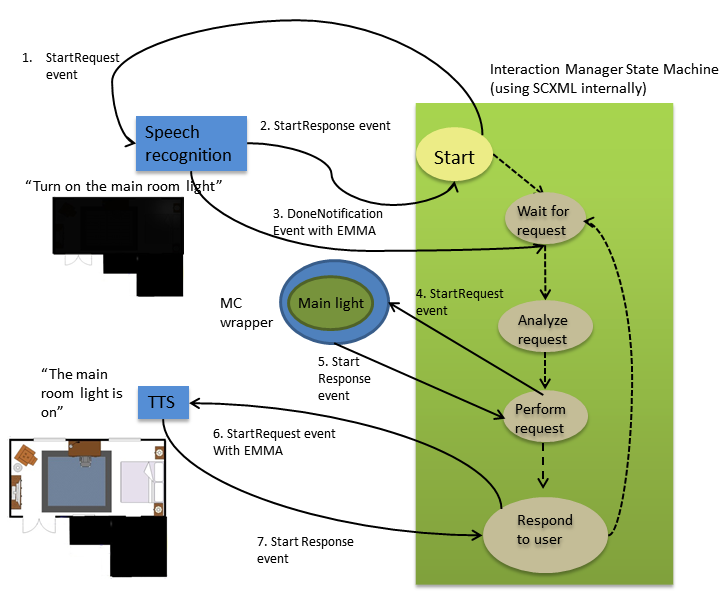

As the user enters the room, the system starts up, and the Interaction Manager State Machine shown in Figure 2 begins in the "Start" state.

First, the IM sends a StartRequest event (step 1 in Figure 2) to the speech recognizer, which immediately responds with a StartResponse event (2). This signals the IM to transition to the application-specific "wait for request" state, where it simply waits for the user to do something. Because the room is dark, the user says "turn on the main room light". The speech recognizer processes this request and returns its interpretation in a DoneNotification event (3). That event contains the EMMA [3] representation of the user's request and is shown in detail in Figure 3. The DoneNotification causes the IM to transition to the "analyze request" state. In that state the IM decides internally what to do, and it then transitions to the "perform request" state.
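What happens in the "analyze request" state is application-specific. The minimal sketch below assumes the EMMA interpretation has already been reduced to an intent plus a flat dictionary of entities. The names `changeState`, `on_off`, `placeName` and `thingType` come from the EMMA in Figure 3. `DEVICE_REGISTRY`, `analyze_request` and the MC names are hypothetical.

```python
# Hypothetical registry: (place, thing type) -> Modality Component that controls it.
DEVICE_REGISTRY = {
    ("main room", "light"): "mainLightMC",
    ("bathroom", "light"): "bathroomLightMC",
    ("door", "lock"): "doorLockMC",
}


def analyze_request(intent, entities):
    """Return (target MC, command) for an interpreted request, or None.

    `entities` is a flat dict such as
    {"on_off": "on", "placeName": "main room", "thingType": "light"}.
    """
    if intent != "changeState":
        return None  # other intents would be handled by other branches
    key = (entities.get("placeName"), entities.get("thingType"))
    target = DEVICE_REGISTRY.get(key)
    if target is None:
        return None  # unknown device: the IM might ask the user to clarify
    return target, entities.get("on_off")


print(analyze_request("changeState",
                      {"on_off": "on", "placeName": "main room", "thingType": "light"}))
```

For the request in this example, the function selects `mainLightMC` with the command `on`. That is the MC the IM then addresses in the "perform request" state.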

To act on the user's request, the IM sends a StartRequest event (4) to the Modality Component (MC) that controls the main room light through the light's native API. The MC does not expose the native API; all communication between the IM and the MC takes place through the Life Cycle events. The MC responds with a StartResponse event (5), indicating that it is processing the request. The MC could also send a DoneNotification event once the light is on. However, turning on a light is practically instantaneous from the user's perspective, so the developer may choose to omit the DoneNotification and return any feedback in the StartResponse event instead. Any problems or errors that occur while turning on the light can be reported to the IM in the "Status" and "StatusInfo" fields of the StartResponse event.
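The sketch below shows how the IM might inspect a StartResponse. It makes several assumptions, following the MMI Architecture specification's examples. The namespace URI is `http://www.w3.org/2008/04/mmi-arch`. Status is an attribute whose value is `success` or `failure`. StatusInfo is a child element holding free-form detail. The function name `check_start_response` is hypothetical.

```python
import xml.etree.ElementTree as ET

# Assumption: namespace URI as used in the MMI Architecture specification's examples.
MMI_NS = "http://www.w3.org/2008/04/mmi-arch"


def check_start_response(xml_text):
    """Return (ok, status_info) for a StartResponse wrapped in <mmi:mmi>."""
    root = ET.fromstring(xml_text)
    event = root.find(f"{{{MMI_NS}}}StartResponse")
    if event is None:
        raise ValueError("not a StartResponse")
    status = event.get(f"{{{MMI_NS}}}Status")
    # StatusInfo is optional; findtext returns "" when it is absent.
    info = event.findtext(f"{{{MMI_NS}}}StatusInfo", default="")
    return status == "success", info.strip()
```

On a `failure`, the IM could skip the "Respond to user" confirmation and speak an error message built from the StatusInfo text instead.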

Once the light is on, the IM transitions to the "Respond to user" state. In this state the IM tells the user that the light is on, with a spoken response using text to speech or recorded audio. Whether to confirm verbally is a user interface design choice. In many situations the user can see the light, so it is obvious that the light has been turned on and no confirmation is needed. The user might not always be able to see the light, however, and in that case verbal feedback would be useful. Alternatively, if a display is available, graphical confirmation could be used instead of speech.
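The feedback decision described above can be written as a small policy function. The sketch below is one possible policy, expressed as a hypothetical helper. It skips confirmation when the user can see the device, prefers a display when one exists, and falls back to speech otherwise. Other orderings are equally valid design choices.

```python
def choose_feedback(user_can_see_device, has_display):
    """Pick a confirmation style for a completed request (one possible policy)."""
    if user_can_see_device:
        return None  # the result is visible, so no confirmation is needed
    if has_display:
        return "graphical"
    return "speech"  # e.g. a StartRequest to the TTS component
```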

For the purposes of this example, we include a "Respond to user" state. Here the IM sends an EMMA message in a StartRequest event (6) to a text to speech (TTS) system to say "the main room light is on", and the TTS system responds with a StartResponse event (7). The IM then transitions back to the "wait for request" state and awaits another user input.
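The Interaction Manager in Figure 2 is an SCXML state machine. As a rough illustration only, the walk-through above can also be written as a Python transition table, with the numbered events shown as comments. The internal event name `analysisDone` and the MC names are hypothetical. The target of the "perform request" step is hard-wired to the light for this example; a real IM would take it from the analysis.

```python
# (current state, incoming event) -> next state
TRANSITIONS = {
    ("Start", "StartResponse"): "wait for request",               # (2) from the recognizer
    ("wait for request", "DoneNotification"): "analyze request",  # (3) user input in EMMA
    ("analyze request", "analysisDone"): "perform request",       # internal to the IM
    ("perform request", "StartResponse"): "Respond to user",      # (5) from the light MC
    ("Respond to user", "StartResponse"): "wait for request",     # (7) from the TTS MC
}

# Event the IM sends when it enters a state: (event name, target MC).
ON_ENTRY = {
    "perform request": ("StartRequest", "mainLightMC"),  # (4)
    "Respond to user": ("StartRequest", "ttsMC"),        # (6)
}


class InteractionManager:
    def __init__(self):
        self.state = "Start"
        # (1) on start-up the IM asks the speech recognizer to start.
        self.outbox = [("StartRequest", "speechRecognizerMC")]

    def handle(self, event):
        """Apply one incoming event, then run the entry action of the new state."""
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"unexpected {event} in state {self.state}")
        self.state = TRANSITIONS[key]
        action = ON_ENTRY.get(self.state)
        if action is not None:
            self.outbox.append(action)


im = InteractionManager()
for ev in ["StartResponse", "DoneNotification", "analysisDone",
           "StartResponse", "StartResponse"]:
    im.handle(ev)
print(im.state, im.outbox)
```

After the five incoming events, the IM is back in "wait for request". It has sent three StartRequests, to the recognizer, the light MC and the TTS MC, matching steps 1, 4 and 6.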

An actual "DoneNotification" event (including EMMA representing the user input) can be seen in Figure 3.

```xml
<mmi:mmi xmlns:mmi="mmi">
  <mmi:DoneNotification mmi:Context="nlClient0314" mmi:RequestID="requestID1999" mmi:Source="ctNLServer"
      mmi:Status="success" mmi:Target="ctNLClient">
    <mmi:Data>
      <emma:emma xmlns:emma="http://www.w3.org/2003/04/emma"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" version="2.0"
          xsi:schemaLocation="http://www.w3.org/2003/04/emma http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd">
        <emma:interpretation emma:confidence="0.791" emma:process="wit.ai"
            emma:tokens="turn on the main room light" id="interp6">
          <emma:derived-from composite="false" resource="#initial2"/>
          <nlResult>
            <_text>turn on the main room light</_text>
            <msg_id>952a756a-dcec-41f8-92a9-38d5efc2d4a0</msg_id>
            <outcomes>
              <e>
                <_text>turn on the main room light</_text>
                <confidence>0.791</confidence>
                <entities>
                  <on_off>
                    <e>
                      <value>on</value>
                    </e>
                  </on_off>
                  <placeName>
                    <e>
                      <type>value</type>
                      <value>main room</value>
                    </e>
                  </placeName>
                  <thingType>
                    <e>
                      <type>value</type>
                      <value>light</value>
                    </e>
                  </thingType>
                </entities>
                <intent>changeState</intent>
              </e>
            </outcomes>
          </nlResult>
        </emma:interpretation>
        <emma:derivation>
          <emma:interpretation emma:confidence="0.9779622554779053" emma:device-type="microphone"
              emma:end="1456172378220" emma:expressed-through="language" emma:function="lightControl"
              emma:lang="en-US" emma:medium="acoustic" emma:mode="voice" emma:process="Web Speech API"
              emma:start="1456172402185" emma:tokens="turn on the main room light" emma:verbal="true" id="initial2">
            <emma:literal>turn on the main room light</emma:literal>
          </emma:interpretation>
        </emma:derivation>
      </emma:emma>
    </mmi:Data>
  </mmi:DoneNotification>
</mmi:mmi>
```
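To act on this message, the IM has to pull the intent and entities out of the application-specific `nlResult` payload. The minimal sketch below uses Python's standard `xml.etree.ElementTree` and runs against a trimmed copy of Figure 3. The function names are hypothetical. The message binds the `mmi` prefix to the URI `"mmi"` rather than to the namespace given in the MMI specification, so the sketch finds the event by its local name.

```python
import xml.etree.ElementTree as ET

EMMA_NS = "http://www.w3.org/2003/04/emma"

# Trimmed copy of Figure 3: schema location, msg_id, _text and the
# emma:derivation element are left out for brevity.
DONE = """<mmi:mmi xmlns:mmi="mmi">
<mmi:DoneNotification mmi:Context="nlClient0314" mmi:RequestID="requestID1999"
    mmi:Source="ctNLServer" mmi:Status="success" mmi:Target="ctNLClient">
<mmi:Data>
<emma:emma xmlns:emma="http://www.w3.org/2003/04/emma" version="2.0">
<emma:interpretation emma:confidence="0.791" emma:process="wit.ai"
    emma:tokens="turn on the main room light" id="interp6">
<nlResult><outcomes><e>
<confidence>0.791</confidence>
<entities>
<on_off><e><value>on</value></e></on_off>
<placeName><e><type>value</type><value>main room</value></e></placeName>
<thingType><e><type>value</type><value>light</value></e></thingType>
</entities>
<intent>changeState</intent>
</e></outcomes></nlResult>
</emma:interpretation>
</emma:emma>
</mmi:Data>
</mmi:DoneNotification>
</mmi:mmi>"""


def local(name):
    """Drop the '{namespace-uri}' prefix that ElementTree adds to qualified names."""
    return name.rsplit("}", 1)[-1]


def read_done_notification(xml_text):
    """Extract status, intent and entities from a DoneNotification carrying EMMA."""
    root = ET.fromstring(xml_text)
    # Match by local name, whatever URI the mmi prefix is bound to.
    event = next(el for el in root.iter() if local(el.tag) == "DoneNotification")
    attrs = {local(k): v for k, v in event.attrib.items()}
    interp = event.find(f".//{{{EMMA_NS}}}interpretation")
    outcome = interp.find("nlResult/outcomes/e")  # nlResult and children are unqualified
    entities = {local(ent.tag): ent.findtext("e/value")
                for ent in outcome.find("entities")}
    return {
        "status": attrs.get("Status"),
        "request_id": attrs.get("RequestID"),
        "tokens": interp.get(f"{{{EMMA_NS}}}tokens"),
        "confidence": float(interp.get(f"{{{EMMA_NS}}}confidence")),
        "intent": outcome.findtext("intent"),
        "entities": entities,
    }


print(read_done_notification(DONE))
```

Only the `emma:` annotations are standardized; the layout inside `nlResult` is whatever the NL service returns. A different NL provider would therefore need a different extraction step.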

[1] J. Barnett, et al. (2012, November 20). Multimodal Architecture and Interfaces. Available: http://www.w3.org/TR/mmi-arch/

[2] J. Barnett, et al. (2012, November 20). State Chart XML (SCXML): State Machine Notation for Control Abstraction. Available: http://www.w3.org/TR/scxml/

[3] M. Johnston, et al. (2015, December 16). EMMA: Extensible MultiModal Annotation markup language Version 2.0. Available: http://www.w3.org/TR/emma20/

Appendix: The Full Set of MMI Life Cycle Events

| Name | Function |
|---|---|
| **Paired messages initiated by an MC** | |
| NewContextRequest | Request to the IM to initiate a new context of interaction. |
| NewContextResponse | Response from the IM with a new context ID. |
| **Paired messages initiated by an IM** | |
| PrepareRequest | Request to the MC to prepare to run, possibly including a URL pointing to markup that will be required when the StartRequest is issued. This allows the MC to fetch and compile markup, if necessary. |
| PrepareResponse | Response from the MC to a PrepareRequest. The MC is not required to take any action other than acknowledging that it has received the PrepareRequest, although it is desirable for it to send back error information if there are problems preparing. |
| StartRequest | Request to the MC to start processing. |
| StartResponse | Response from the MC to the IM acknowledging the StartRequest. The MC is required to send this event in response to a StartRequest. |
| CancelRequest | Sent to stop processing in the MC. The MC must stop processing and return a CancelResponse. |
| CancelResponse | Response from the MC to the IM acknowledging the CancelRequest. |
| PauseRequest | Request to the MC to pause processing. |
| PauseResponse | Returned by the MC once it has paused or, if it is unable to pause, once it determines that it will be unable to pause. |
| ResumeRequest | Request to resume processing that was paused by a previous PauseRequest. It can only be sent to a currently paused context. |
| ResumeResponse | Response from the MC to the IM acknowledging the ResumeRequest. |
| ClearContextRequest | May be sent to an MC to indicate that the specified context is no longer active and that any resources associated with it may be freed. MCs are not required to take any particular action in response, but are required to return a ClearContextResponse. |
| ClearContextResponse | Response from the MC acknowledging the ClearContextRequest. Once the IM has sent a ClearContextRequest to an MC, no more events can be sent for that context. |
| **Paired messages that can be sent in either direction** | |
| StatusRequest | Together with the corresponding StatusResponse, provides keep-alive functionality. It can be sent by either the IM or an MC. |
| StatusResponse | Response to the StatusRequest message. If the request specifies a context that is unknown to the MC, the MC's behavior is undefined. |
| **Unpaired messages** | |
| DoneNotification | Sent from an MC to the IM to indicate completion of a task; contains any data from the MC action, such as an EMMA message representing user input. |
| ExtensionNotification | Sent in either direction to convey application-specific data. When sent from an MC, it typically conveys user inputs in EMMA format or reports intermediate statuses. When sent from the IM, it typically sets modality-specific parameters such as speech recognition grammars, timeouts, or confidence thresholds. This is the point of extensibility for the Life Cycle events: if no other Life Cycle event is suitable for a message between the IM and the MCs, this message is used. |
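The pairing rules in the table can be captured as data, which makes it easy to check message direction at runtime. The sketch below encodes who initiates each exchange according to the table. Responses always travel in the opposite direction from their requests, except for the StatusRequest/StatusResponse pair. The names `PAIRS`, `UNPAIRED` and `may_send` are hypothetical.

```python
# Paired requests: request -> (matching response, who initiates the exchange).
PAIRS = {
    "NewContextRequest": ("NewContextResponse", "MC"),
    "PrepareRequest": ("PrepareResponse", "IM"),
    "StartRequest": ("StartResponse", "IM"),
    "CancelRequest": ("CancelResponse", "IM"),
    "PauseRequest": ("PauseResponse", "IM"),
    "ResumeRequest": ("ResumeResponse", "IM"),
    "ClearContextRequest": ("ClearContextResponse", "IM"),
    "StatusRequest": ("StatusResponse", "either"),
}

# Unpaired notifications: event -> who may send it.
UNPAIRED = {"DoneNotification": "MC", "ExtensionNotification": "either"}


def may_send(sender, event):
    """Return True if `sender` ("IM" or "MC") may send `event` per the table."""
    for request, (response, initiator) in PAIRS.items():
        if event == request:
            # Requests come from the initiating side.
            return initiator in (sender, "either")
        if event == response:
            # Responses come from the other side.
            return initiator == "either" or initiator != sender
    allowed = UNPAIRED.get(event)
    return allowed is not None and allowed in (sender, "either")
```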