Phil Archer and Jonathan Rees - DRAFT - 5 February 2009

Previous version: 9 May 2008

This document surveys the problem of specifying a uniform method for obtaining information pertaining to a resource without necessarily having to parse a representation of the resource. It is an attempt to rationalise several discussions that have taken place in a variety of e-mail fora. More background and links to e-mail threads are available on the wiki page.

The borders of "information pertaining to a resource" are left intentionally fuzzy. "Pertaining to a resource" could mean document metadata, information about how a document is accessed or should be accessed, or even a description of a resource that is not a document. The "information" could be just a single link to another document that contains more information, or it could be something more involved.

The following ideas motivate this effort:

This discussion relates to TAG issue 57, HttpRedirections-57.

The first section, which forms the bulk of this report, presents several use cases. The following section presents solutions that have been put forth. Finally some critique of the idea and of the various solutions is given.

Here a gateway server wants to use metadata ("pertinent information") from an origin server to decide whether content is to be passed through unmodified or must be transformed first. The generic use case for the Protocol for Web Description Resources [POWDER] is as follows:

Then either

or

The POWDER use case document applies this abstract concept to more real-world applications. For example, the profile may indicate that the user's device is a mobile phone and that 'appropriate' therefore means mobileOK [OKBASIC, OKPRO]. That is, only content that is likely to provide a functional user experience on a mobile device would be displayed without adaptation. This would avoid the expense, latency and frustration, for example, of downloading a 4MB file to a mobile phone only to find that the device couldn't process it.

Other use cases revolve around accessibility, child protection, trust and licensing. In each case, some form of processing takes place to ensure that only content that is suitable for the delivery context is delivered.

POWDER offers an optimisation route to this scenario as it separates the description from the described resource and allows a single description to be applied to multiple resources, typically 'everything on a Web site.' Step 2 in the use case above would be more efficient if the link to the metadata (the Description Resource) were available through an HTTP header, thus obviating the need to parse the content before deciding whether it can/should be displayed directly or adapted in some way. The same would apply to any service that wished to aggregate content that met particular criteria, discoverable through POWDER Description Resources. The service would be seeking and authenticating Description Resources as a means of discovering relevant content, preferably without having to parse the content itself.

Setting up a pointer from a resource to a related description at the HTTP level has another practical advantage in that for some content providers it is significantly easier to achieve. In a large publishing company, responsibility for content production and content description will often be allotted to different individuals or, in some cases, different departments in different countries. Authorisation to edit the page template for a Web site will usually be in the hands of yet another individual or department. Description is seen as an editorial role, rather than a content production role. Presentation is a job for the marketing department. Therefore, including descriptions directly within a document, or document-like resource, may not be technically possible for the person whose job, from a policy point of view, it is to provide them.

A company-wide policy of including a common pointer from all content to the location of descriptive data is easier to implement if that company has a choice of whether this is done at page level, document template level or network level.

These assertions derive from experience with PICS, which has led the POWDER WG to think of HTTP Link and HTML <link> as equivalent (rightly or wrongly). In PICS, you would set a specific HTTP Header or use an http-equiv meta tag in the HTML. As an example, the ICRA label tester [ICRA], which makes use of Perl's LWP module [LWP], makes no distinction between a PICS label delivered as HTTP or HTML. Neither does it distinguish between a Link delivered as HTTP or HTML when looking for links to the RDF-based labels used by ICRA and Segala now.

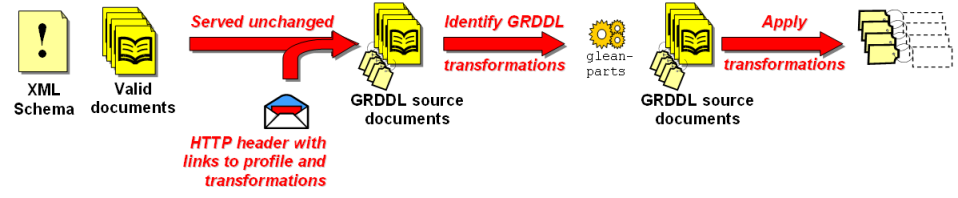

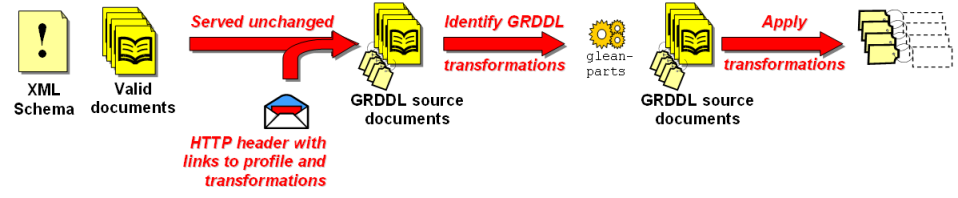

This text is taken almost verbatim from the GRDDL Use cases document [GRDDL].

Oceanic is part of a consortium of airlines that have a group arrangement for the shared supply and use of aircraft spares. The availability and nature of parts at any location are described by AirPartML, an internationally-agreed XML dialect constrained by a series of detailed XML Schema. Each member of the consortium publishes the availability of their spares on the web using AirPartML. These descriptions can subsequently be searched and retrieved by other consortium members when seeking parts for maintenance. The protocol for use of the descriptions requires invalid documents to be rejected. Oceanic wishes to also publish RDF descriptions of their parts and would prefer to reuse the AirPartML documents which are produced by systems that have undergone exhaustive testing for correctness. There is no provision in the existing schemas for extension elements and changing the schemas to accommodate RDF would require an extended international standardisation effort, likely to take many years. This means they cannot alter their XML documents to use GRDDL.

A network level means of associating XML instances with a GRDDL transform would allow Oceanic Consortium to serve RDF via GRDDL without altering their XML documents.

Atom defines two types of collections of resources: Entry Collections and Media Collections. In both cases, new members are added to the collection by POSTing a representation of the resource to the URI of the collection. The server responds to the POST with an HTTP Location header that gives the URI of the newly created resource.

A feature of ATOM is that resources may subsequently be edited and the URI at which this is possible may differ from that given by the server as its Location. It follows that the edit URI needs to be declared in the HTTP Response to the POST by a means other than the Location header. The use of HTTP Link: (see below) is suggested in the relevant documentation [ATOM-PACE]. This section has had to be withdrawn for several reasons, among them the lack of certainty over the status of HTTP Link.

Then either:

or

François Daoust adds: I say it's a clumsy use case, because we wrote the Content Transformation Guidelines to ensure that the "b" path (2b and 3b) should not appear in practice. But "should not" is still may... Anyway, the "a" path (2a and 3a) may be a valid use case, although probably not an existing one (how many web sites would have a mobile version and simply don't know how to identify a mobile user-agent?)

Anyway, the TAG, in: http://www.w3.org/2001/tag/doc/alternatives-discovery.html#id2261787 … suggests using "linking mechanisms provided by the representation being served". But images, audio, video, … are also subject to transformation, and either don't include such "linking mechanisms" or don't do so uniformly (without a separate parser per content type).

Obviously, the mobileOK example typically fits here! It would serve as a flag that tells the content transformation proxy not to transform resources (and again, that includes images…), that are labeled as such.

Another similar use case:

Again, the Link element could be used, but that doesn't work with images and the like.

Example: a site with images may be optimized for mobile-browsing, and images are adapted to most screen widths. But it may still not know everything about all devices, and leaves the possibility to recode the images if the device doesn't support a given format. To do that, it flags the images with a POWDER file describing that recoding of the images is allowed if the device does not support the format.

It is desirable to be able to find documentation for a URI given just the URI. The documentation assists humans in understanding uses of the URI (e.g. in RDF or OWL) and in considering an existing URI for use in some application. People may explore this kind of documentation using a semantic web browser such as Tabulator. There may also be applications that are able to make use of formally stated assertions (RDF, OWL) that help to define, declare [BOOTH], or otherwise document the URI, either in the general case or in the situation where they have some idea of what to expect from this information (which properties are supposed to be asserted for particular kinds of things, such as a person's name).

The accepted method for finding such information on the semantic web is the "follow your nose" algorithm, which (in simple form) says

A 303 response carries no implication that the redirect will lead to the documentation that an application wants - in fact, 303 was not designed with "follow your nose" in mind at all. For example, an application looking for a URI declaration has no assurance that the document found by following the 303 will be one, and an application looking for RDF has no assurance that the 303 document has an RDF representation. For these purposes it is desirable to relate the resource to the documentation not via the nonspecific Location: header but via a more specific relationship such as "is described by" (specified by a URI of course). The response would still be a 303 with a Location: header, but more specific information could be conveyed via an additional HTTP header or some other mechanism.
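The following Python sketch illustrates how a client might prefer a specific "is described by" relationship over the nonspecific Location: header. It assumes that the additional header is a Link header of the kind discussed later in this document; the relation name describedby, the function name find_documentation and the example.org URIs are hypothetical. The sketch only recognises rel when it is the first parameter of a link and expects header names in the exact case shown.

```python
import re

# Hypothetical relation name: the text only says the relationship should be
# something like "is described by", identified by a URI.
DESCRIBED_BY = "describedby"


def find_documentation(status, headers):
    """Return (uri, how) for a resource's documentation, or (None, None).

    `headers` is a plain dict of response headers (an assumption of this
    sketch; real HTTP header names are case-insensitive).
    """
    link = headers.get("Link", "")
    # Look for <target>; rel="..." pairs and prefer a specific relationship.
    for target, rel in re.findall(r'<([^>]*)>\s*;\s*rel="?([^";,]+)"?', link):
        if DESCRIBED_BY in rel.split():
            return target, "link"
    # Fall back to the nonspecific 303 redirect.
    if status == 303 and "Location" in headers:
        return headers["Location"], "redirect"
    return None, None


response_headers = {
    "Location": "http://example.org/doc/alice",
    "Link": '<http://example.org/doc/alice.rdf>; rel="describedby"',
}
print(find_documentation(303, response_headers))
```

A client that finds no such link still has the Location: header to follow, so nothing is lost relative to plain "follow your nose".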

Metadata (i.e. "pertinent information" for information resources) has many purposes on the semantic web. Metadata such as author, title, creation date, and license is valuable in a semantic web context. However, it is also useful to be able to accurately describe an information resource - in particular, to characterize it by specifying its class(es) and properties. When using a URI on the semantic web it is important to know what it denotes, and this may not be possible just by examining its representations. (For example, consider an "RDF hall of shame" web site containing examples of incorrect RDF. Representations of the example resources from this site are by definition not good sources of information about the resources.) A link to metadata outside the HTTP response would be one way to convey such information.

303 responses are incompatible with 200 responses, so semantic-web-related documentation is not available for URIs that denote information resources and for which servers (and clients) would like to obtain representations via 200 responses. While in principle nothing rules out the use of # and 303 for information resources, they do not provide a graceful migration path for providing metadata for existing resources, because in the # case the URI must change and in the 303 case responses (and client behavior) would have to change.

It is argued that a uniform method for access to metadata could have the effect of lowering the barrier to entry to the semantic web for existing documents and could be a boost to the semantic web by bringing large numbers of entities onto the semantic web with relatively little pain.

Use case: Someone browses to an interesting document (HTML, PDF, PPT, DOC, PNG, etc). A browser plugin and/or document authoring tool plugin provides a "citation" feature that fetches information for the document, e.g. bibliographic information and durable location (if available). The information is communicated in RDF and the tool needn't know the details of all formats. The information is placed in a triple store and/or something like an EndNote or BibTeX database.

Use case: A URI occurs in some interesting RDF and someone wants to know what it denotes. Browsing to the URI takes one to a blog. How does one know that it is a blog (its class) and which blog it is (other than the one whose URI is ...)? What other statements can be made about the blog - author(s), license, permanence policy?

The following develops the bibliographic metadata case in a bit more detail.

Acme Publishing is an established publisher of academic journals serving thousands of hits on its corpus of PDF files daily. It has learned about RDF and in order to promote its journals wishes to provide bibliographic information for its articles in RDF, to assist automated agents that are RDF-aware.

Although the PDF files have a place to put metadata, this is deemed an unsuitable location as (1) many of its millions of PDF articles are quite old and regenerating them is so risky as to be infeasible, and (2) Acme judges that it is unreasonable to expect that client software will know how to parse a PDF to get at the metadata.

Acme's first approach is to create a CGI script that takes the article's URI as input and returns the bibliographic RDF for that article. This gets few adopters and the publisher realizes that monolithic action will not be very effective. At a trade conference they realize that other publishers are having the same thought, and there is discussion of how they can standardize so that agents can be generic across various publishers - indeed over the whole web.

Minimal modifications to its web server, such as CGI scripts, special response headers, or new HTTP request methods are within budget. Asking existing customers to change the URLs they're already using, or to change the way they use HTTP, is not acceptable.

User (T. C. Mits) wants personal information such as public key or buddies list to be known by many sites (ecommerce, social networking). User does not want to have to enter information separately at each site. User wants a single 'key' (login) that will lead all participating sites, even newly encountered ones, to the information.

JAR anticipates that some will object that the information should have been put in the representation (i.e. found via GET) in a standard way - after all the same entity controls both the representations and the "side protocol" (link, etc.). This is the approach taken by OpenID 1.0, which failed to get the uptake desired, in part because this didn't work often enough. The whole point here is that sometimes, given the way a site is deployed, the department that wants to provide the personal info in a sensible format has no influence over the department or vendor that's arranging the GET responses.

Mark Nottingham's RFC draft seeks to clear up confusion over the status of HTTP Link (included in [RFC2068], but removed from [RFC2616]). It notes that ATOM defines a linking mechanism that is similar, but not identical, to HTML's link element and specifically does not map an XLink header to HTTP Link. It suggests that relationship types be declared as a URI with IANA as the single registry for relative URIs (e.g. next, prev, stylesheet etc.).

Formally, it proposes:

The Link header field is semantically equivalent to the <LINK> element in HTML, as well as the atom:link element in Atom [RFC4287].

Link           = "Link" ":" #("<" URI-Reference ">"
                 *( ";" link-param ) )

link-param     = ( ( "rel" "=" relationship )
               | ( "rev" "=" relationship )
               | ( "title" "=" quoted-string )
               | ( link-extension ) )

link-extension = token [ "=" ( token | quoted-string ) ]

relationship   = URI-Reference |
                 <"> URI-Reference *( SP URI-Reference) <"> )

The title parameter MAY be used to label the destination of a link such that it can be used as identification within a human-readable menu.

Examples of usage include:

Link: <http://www.cern.ch/TheBook/chapter2>; rel="Previous"

Link: <mailto:timbl@w3.org>; rev="Made"; title="Tim Berners-Lee"

…

Relationship values are URIs that identify the type of link. If the relationship is a relative URI, its base URI MUST be considered to be "http://www.iana.org/assignments/link-relations.html#", and the value MUST be present in the link relation registry.
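As an illustration of the grammar and the relationship rule quoted above, the following Python sketch splits a Link header value into targets and parameters and expands relative relationship values against the IANA base. It is a simplified reading, not a conforming parser: the names parse_link_header and expand_relationship are hypothetical, the sketch assumes that "<", ">" and double quotes do not occur inside quoted strings, and it treats "base URI" as simple concatenation with the quoted base (strict URI resolution would discard the trailing "#"). It does not check the link relation registry.

```python
import re
from urllib.parse import urlsplit

IANA_BASE = "http://www.iana.org/assignments/link-relations.html#"

# "<" URI-Reference ">" followed by zero or more ";" link-param
_LINK = re.compile(
    r'<([^>]*)>((?:\s*;\s*[^\s;,=]+(?:\s*=\s*(?:"[^"]*"|[^\s;,"]+))?)*)'
)
# A single link-param: name, optionally "=" and a quoted-string or token
_PARAM = re.compile(r';\s*([^\s;,=]+)(?:\s*=\s*(?:"([^"]*)"|([^\s;,"]+)))?')


def parse_link_header(value):
    """Return a list of (target, params) pairs for one Link header value."""
    links = []
    for target, raw_params in _LINK.findall(value):
        params = {}
        for name, quoted, token in _PARAM.findall(raw_params):
            params[name.lower()] = quoted or token
        # rel and rev may hold several space-separated relationships.
        for key in ("rel", "rev"):
            if key in params:
                params[key] = params[key].split()
        links.append((target, params))
    return links


def expand_relationship(rel):
    """Expand a relative relationship against the IANA base (assumed concatenation)."""
    return rel if urlsplit(rel).scheme else IANA_BASE + rel


for header in (
    '<http://www.cern.ch/TheBook/chapter2>; rel="Previous"',
    '<mailto:timbl@w3.org>; rev="Made"; title="Tim Berners-Lee"',
):
    print(parse_link_header(header))
print(expand_relationship("Previous"))
```

Returning relationships as lists keeps the single-value and space-separated forms of the grammar uniform for callers.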

Note that Link: responses can be put in HEAD and POST as well as GET responses, and that they work just as well for 303 responses as for 200 responses.

The draft has continued to be discussed on the HTTP mailing list (see Specific headers for Specific Tasks below). As part of this, Julian Reschke raised an issue concerning current implementation of HTTP Link. Following on from that, a test page was set up that uses HTTP Link (and only HTTP Link) to associate a stylesheet. Firefox 2 and Opera 9 both apply the stylesheet (Internet Explorer 7 does not).

Tabulator supports Link: with rel="meta".

Mozilla has another use for HTTP Link - to allow its browser to pre-fetch resources in its idle time and so speed up page-rendering.

Brian Smith (active in the ATOM community) has suggested that parsing HTTP Link Headers is hard and that a more efficient solution would be to create new application-specific headers. In essence, make the relationship type part of the header. So, rather than

Link: <http://foo.org>; rel=edit

one would use:

Edit-Links: <http://foo.org>

Brian Smith says:

This could be done by changing the registration rules for HTTP headers so that header fields with a "-Links" suffix must have the above syntax, with the definitions of the "media", "type", and "title" parameters fixed to be the same as in HTML 4 (or 5) and Atom 1.0. Each link header would have to define the processing rules for when multiple links are provided, and applications must be prepared to handle multiple links of the same type, even when they are not expected (that is why I chose "-Links" instead of "-Link").

The core advantage of this method appears to be the ease of parsing - you only take notice of headers you know you're interested in.
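The parsing advantage can be sketched in Python as follows. This is only an illustration of the idea, assuming headers arrive as a list of (name, value) pairs; the function name application_links and the Other-Links header are hypothetical, and link parameters such as "media", "type" and "title" are ignored.

```python
import re


def application_links(headers, wanted):
    """Collect link targets from the "-Links" headers named in `wanted`.

    `headers` is a list of (name, value) pairs so that repeated headers are
    preserved; `wanted` is a set of lower-case header names.
    """
    found = {}
    for name, value in headers:
        key = name.lower()
        if key in wanted and key.endswith("-links"):
            # A header may carry several links; keep every <...> target.
            found.setdefault(key, []).extend(re.findall(r"<([^>]*)>", value))
    return found


response_headers = [
    ("Content-Type", "application/atom+xml"),
    ("Edit-Links", "<http://foo.org>"),
    ("Other-Links", "<http://bar.org>"),  # hypothetical header, ignored below
]
print(application_links(response_headers, {"edit-links"}))
```

Targets are always returned as a list, reflecting the requirement that applications be prepared for multiple links of the same type.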

WebDAV (RFC 4918) defines a PROPFIND HTTP method for obtaining metadata. The request details and response are both encoded in XML using elements from the DAV namespace. The RFC gives several examples.
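As a rough illustration, the following Python sketch builds the XML body of a PROPFIND request that asks for named properties. The function name propfind_body is hypothetical; the getcontenttype and getlastmodified properties are taken from RFC 4918, and the choice of which properties to request is an assumption made for the example.

```python
import xml.etree.ElementTree as ET

DAV = "DAV:"  # the WebDAV XML namespace


def propfind_body(property_names):
    """Build a PROPFIND request body asking for the given DAV properties."""
    ET.register_namespace("D", DAV)
    root = ET.Element(f"{{{DAV}}}propfind")
    prop = ET.SubElement(root, f"{{{DAV}}}prop")
    for name in property_names:
        ET.SubElement(prop, f"{{{DAV}}}{name}")
    return ET.tostring(root, encoding="utf-8", xml_declaration=True)


body = propfind_body(["getcontenttype", "getlastmodified"])
print(body.decode("utf-8"))
```

Such a body would be sent with the PROPFIND method, typically together with a Depth header; Python's http.client accepts arbitrary method names in HTTPConnection.request, so no special client library is strictly needed.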

Patrick Stickler has developed The URI Query Agent Model which proposes a new HTTP method of 'MGET' that returns a concise bounded description of the resource available at the given URI. The full paper suggests support for adding to and deleting from those descriptions with MPUT and MDELETE.

URIQA is fully developed and implemented in the Nokia URIQA semantic web service. Patrick Stickler includes a good summary of several of the arguments surrounding this issue - such as why not use conneg, why not use HTML's link element and so on.
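To make the shape of such a request concrete, the following Python sketch assembles the bytes of an MGET request for a given URI. It is an illustration only: the function name build_mget_request and the example.org URI are hypothetical, and the Accept header is an assumption of this sketch rather than something the URIQA model is stated here to require.

```python
from urllib.parse import urlsplit


def build_mget_request(uri):
    """Return the raw bytes of an HTTP/1.1 MGET request for `uri`."""
    parts = urlsplit(uri)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    lines = [
        f"MGET {path} HTTP/1.1",
        f"Host: {parts.netloc}",
        "Accept: application/rdf+xml",  # assumed; asks for an RDF description
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


print(build_mget_request("http://example.org/people/alice").decode("ascii"))
```

The request targets the same URI as an ordinary GET would; only the method differs, which is why intermediaries and servers must recognise the new method for the approach to work.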

The URIQA approach has some similarities with PICS which defines an HTTP header of 'Protocol-request' which is sent with a GET request when seeking a PICS label describing a resource.

Instead of going out to a server for URI1 to obtain the name URI2 of a second resource that carries metadata for URI1, we could adopt a client-side convention for obtaining URI2 systematically from URI1. There is a faint resemblance here to favicon.ico and robots.txt, although those are site-specific secondary URIs instead of resource-specific secondary URIs. Here are two rules that have been suggested in particular contexts. It might be possible to pursue this idea to generalize beyond either of these cases.

Alan Ruttenberg has suggested:

For a given URI http://a.b/c/d/e, construct a new URI http://purl.org/about/a.b/c/d/e

Configure the purl.org server so that http://purl.org/provide-about/a.b/c/d/e redirects to something akin to a structured wiki page or a REST service. (Let us assume for the moment that whoever currently provides the LSID WSDL that contains this information currently is the provider of this service.)

The Archival Resource Key has been developed by the California Digital Library. To obtain a metadata link for an ARK one simply appends "?" to the URI.
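Both rules are simple string transformations on the client side, as the following Python sketch suggests. The function names about_uri and ark_metadata_uri are hypothetical, as is the ARK used in the example; the sketch follows the http://purl.org/about/ form of the first rule and makes no attempt to handle URIs without a "://" separator beyond rejecting them.

```python
def about_uri(uri, prefix="http://purl.org/about/"):
    """Rewrite http://a.b/c/d/e to http://purl.org/about/a.b/c/d/e."""
    scheme, separator, rest = uri.partition("://")
    if not separator:
        raise ValueError(f"expected an absolute URI with '://': {uri!r}")
    return prefix + rest


def ark_metadata_uri(ark_uri):
    """Form the metadata link for an ARK by appending '?' to the URI."""
    return ark_uri + "?"


print(about_uri("http://a.b/c/d/e"))
print(ark_metadata_uri("http://example.org/ark:/12345/abc"))  # hypothetical ARK
```

Neither function contacts a server: the secondary URI is derived entirely from the primary one, which is the defining feature of this family of proposals.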

One way to uniformly transmit metadata is to designate one or more particular representation types (media types) to be the one(s) that are supposed to carry the resource's metadata. We could decide, for instance, that among a resource's many representations, if there is to be metadata, the metadata should reside in the RDF/XML representation (another alternative would be HTML, using <link> elements perhaps). If no RDF/XML representation exists, it should be created for the purpose of carrying the metadata.

Unfortunately this approach is likely to clash with the idea that if there are multiple representations then they should all carry the same "abstract information". The software responsible for providing the metadata is unlikely to be competent at translating arbitrary media types (e.g. a compressed "tar" file containing Erlang code with French comments) into HTML or RDF/XML.
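From the client's point of view, the content negotiation approach amounts to an ordinary GET with a particular Accept header. The following Python sketch prepares such a request without sending it; the function name metadata_request and the example.org URI are hypothetical, and application/rdf+xml is used on the assumption that RDF/XML is the designated metadata representation.

```python
import urllib.request


def metadata_request(uri, media_type="application/rdf+xml"):
    """Prepare a GET request that asks for the designated metadata type."""
    return urllib.request.Request(uri, headers={"Accept": media_type})


request = metadata_request("http://example.org/report.pdf")
print(request.get_method(), request.full_url, request.get_header("Accept"))
```

Passing the prepared request to urllib.request.urlopen would perform the retrieval; a client would then need to check the Content-Type of the response, since a server is free to return some other representation.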

Using a multipart media type has also been suggested. Metadata could go in one part, and the true content in another. Among the relevant documents turned up by a web search for "multipart metadata" is a 1999 IETF Internet-draft proposing an "ancillary" value for the multipart Content-disposition header, addressing exactly the present need. We have no information on the potential viability of this approach.

Put the information off site, in external metadata repositories, brokers, forwarding services, etc. In the library world metadata is never the business of the information provider (publisher, printer, etc) - you can't rely on them to care, to do the right thing, or even to have the necessary information. (Mackenzie Smith)

Use a search engine (Google, or a hypothetical Semantic Google) to find the information you're looking for. (Roy Fielding)

The many contributors to the discussion on www-tag and elsewhere.