Multimedia Annotation Interoperability Framework

Editor: Vassilis Tzouvaras (National Technical University of Athens)

Editor: Jeff Z. Pan (University of Aberdeen)

Publication Date: TBD

Request For Comments: The Multimedia Semantics Incubator Group (MMSEM-XG) seeks public feedback on this draft. Please send your comments to public-xg-mmsem@w3.org (public archive). If possible, please offer specific changes to the text which will address your concern.

Comments Due By: TBD

Document Class Code: ED

This Version: (not published)

Abstract

Multimedia systems typically contain digital documents of mixed media types, which are indexed on the basis of strongly divergent metadata standards. This severely hampers the interoperation of such systems. Therefore, machine understanding of metadata coming from different applications is a basic requirement for the interoperation of distributed multimedia systems. This document presents how interoperability among metadata, vocabularies/ontologies and services can be enhanced using Semantic Web technologies. In addition, it provides guidelines for semantic interoperability, illustrated by use cases. Finally, it presents an overview of the most commonly used metadata standards and tools, and outlines the general research direction for semantic interoperability using Semantic Web technologies.

Document Roadmap

Target Audience

This document targets everybody with an interest in semantic interoperability, ranging from non-professional end-users who manually annotate their personal digital photos to professionals working with digital pictures in image and video banks, audiovisual archives, museums, libraries, the media production and broadcast industry, etc.

Objectives

- To illustrate the benefits of using semantic technologies to enhance semantic interoperability in multimedia applications.

- To provide guidelines for applying semantic technologies in this area, illustrated by use cases and possible solutions.

- To present an overview of currently used vocabularies/ontologies, metadata standards and services.

- To propose research directions that seek to enhance semantic interoperability.

Table of Contents

1. Introduction to Semantic Interoperability in Multimedia Applications

1.1. Motivation

1.2. Semantic Interoperability Issues

1.2.1. Tagging Use Case

No standard for the representation of taggings exists. Different tagging platforms use different formats to represent taggings. The lack of a standard defining how to model taggings prevents interoperability between tagging platforms. Consequently, reusing taggings across platforms is complicated, and tags need to be maintained redundantly on each platform.
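To make the problem concrete, the following minimal sketch assumes two hypothetical platforms, A and B, that export the same kind of tagging in different shapes, and normalizes both into one common record (user, resource, tag, platform). All field names and formats are invented for illustration; they are not taken from any real tagging API or from a proposed standard.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tagging:
    """Hypothetical platform-neutral tagging record: who tagged which resource with which label."""
    user: str
    resource: str  # URI of the tagged item
    tag: str
    platform: str

def from_platform_a(record: dict) -> Tagging:
    # Hypothetical format A: one tag per record, {"owner": ..., "url": ..., "label": ...}
    return Tagging(record["owner"], record["url"], record["label"].strip().lower(), "A")

def from_platform_b(record: dict) -> list[Tagging]:
    # Hypothetical format B: one record per item, tags given as a space-separated string
    return [Tagging(record["user_id"], record["item"], t.lower(), "B")
            for t in record["tags"].split()]

a = from_platform_a({"owner": "miller", "url": "http://example.org/photo/1", "label": " Rome "})
b = from_platform_b({"user_id": "miller", "item": "http://example.org/photo/1", "tags": "rome Italy"})

# Once normalized, taggings from both platforms can be compared and merged.
shared = {(a.user, a.resource, a.tag)} & {(t.user, t.resource, t.tag) for t in b}
print(shared)  # {('miller', 'http://example.org/photo/1', 'rome')}
```

Without a shared model, every platform pair needs its own converter like the two functions above.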

1.2.2. Algorithm Use Case

There is no standard method for describing media analysis algorithms independently of their implementation. Because of this, it is difficult for users and applications to identify, manage and apply algorithms for media analysis. There are interoperability issues on two levels: firstly, at the low level, identifying and combining algorithms in terms of the data formats and implementations used; secondly, at the high level, describing the outcome or effect of executing the algorithm. This raises challenges such as articulating and quantifying the ‘visual’ result of applying algorithms.

2. Use Cases and Possible Solutions

In this section, we present our use cases from the interoperability perspective, with links pointing to the full use case pages. Each use case also provides an example to illustrate the problem and the solution. The use cases are divided into three levels, the...

2.1. Use Case: Photo

2.1.1. Introduction

Currently, we are facing a market in which about 20 billion digital photos are taken per year in Europe alone [1]. At the same time, we can observe that many digital photos are never viewed or used again. Thousands of printed photos rest in the darkness and isolation of shoe boxes. Even though there is a great number of Web albums, online digital photo services and Web 2.0 applications that address the management of digital photos on the Web, it is still difficult to find and share photos. Most management systems and sites allow users to upload photos and to annotate and tag them. Even though a large number of images are uploaded, described and tagged on Flickr, it hosts only a very limited share of the photos taken worldwide. Also, search is limited to a set of non-standardized tags and manual descriptions, with all the problems that arise from this (see also the tagging use case).

At the same time, more than a decade of research in multimedia analysis has produced many results for extracting valuable features from multimedia content. For photos, for example, these include color histograms, edge detection, brightness, texture and so on. With MPEG-7, a very large standard has been developed that allows these features to be described in a metadata standard and content and metadata to be exchanged with other applications. However, both the size of the standard and its many optional attributes have led to a situation in which MPEG-7 is used only in very specific applications and has not become a worldwide accepted standard for adding (some) metadata to a media item (see also the MPEG-7 interoperability use case). Especially in the area of personal media, as in the tagging scenario, a small but comprehensive, shareable and exchangeable description scheme for personal media is missing.

2.1.2. Interoperability problems

2.1.2.1. Non shareable high-level and low-level descriptions

Let us consider the Miller family, who travel to Italy. Equipped with a nice camera and a GPS receiver, the family takes photos of the family members and of the sightseeing spots they visit. At the end of the trip, the pictures are loaded into a photo annotation tool P1, which both extracts some features and suggests annotations (tags) or allows personal descriptions. The family then uploads everything to a Web album folder that is accessible to the family members. However, the Web album takes only the photos as input, so all the information from the personal photo management system is lost after the upload.

2.1.2.2. Missing interoperability

Suppose instead that the Web upload did allow images to be uploaded together with their metadata, and that site A could use and exploit these metadata for its search. When aunt Mary visits the Web album, she looks at the photos and downloads a few onto her laptop to integrate them into her own photo management software: she would like to incorporate some of the pictures of her nieces and nephews into her photo management system P. The system imports the photos, but the precious metadata that the Miller family has already acquired are more or less lost, because her photo management system uses another vocabulary.
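Without a shared vocabulary, moving metadata from P1 to P requires an explicit mapping between the two tools' terms. The sketch below is a minimal illustration under the assumption that both tools store metadata as flat key/value pairs; the key names are hypothetical. It returns the unmapped entries separately so that nothing is silently dropped.

```python
# Hypothetical mapping from the vocabulary of tool P1 to aunt Mary's tool P.
P1_TO_P = {
    "caption": "title",
    "people": "persons",
    "gps_lat": "latitude",
    "gps_lon": "longitude",
}

def translate(metadata: dict, mapping: dict) -> tuple[dict, dict]:
    """Rename keys via the mapping; return (translated, unmapped) so no metadata is lost silently."""
    translated, unmapped = {}, {}
    for key, value in metadata.items():
        if key in mapping:
            translated[mapping[key]] = value
        else:
            unmapped[key] = value
    return translated, unmapped

photo_meta = {"caption": "Colosseum", "people": ["Anna", "Tom"], "gps_lat": 41.89, "mood": "sunny"}
done, left = translate(photo_meta, P1_TO_P)
print(done)  # {'title': 'Colosseum', 'persons': ['Anna', 'Tom'], 'latitude': 41.89}
print(left)  # {'mood': 'sunny'}
```

With n tools, pairwise mappings like this grow roughly with n², whereas mapping each tool to one shared vocabulary needs only n mappings.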

2.1.3. The solution needed

A standardization of tags is very helpful for semantic search on photos on the Web. However, today the lower-level features are also lost. Even though semantic search works well at the search level, previously extracted and annotated lower-level features might be interesting as well for the later use and exploitation of a set of photos. For example, a Web site might want to offer a grouping of photos by color distribution. Then either the site needs to extract a color histogram itself, or the photo already carries this information in its standardized header. A face detection tool might have found the bounding boxes of the faces in the photo and also provide a face count; the Web site could then offer a search for photos with two or more persons on them. And so on. Even though low-level features do not seem relevant at first sight, for detailed search, visualization and later processing the previously extracted metadata should be stored and available with the photo.
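The two services mentioned above become cheap once the features travel with the photo. This minimal sketch assumes that pixels are available as (r, g, b) tuples in the range 0 to 255 and that face detection results are stored as hypothetical (x, y, width, height) bounding boxes; the coarse quantized histogram is one simple choice, not the MPEG-7 color descriptors.

```python
from collections import Counter

def coarse_color_histogram(pixels, bins_per_channel=2):
    """Quantize each (r, g, b) pixel (0-255) into bins and return normalized bin frequencies."""
    step = 256 // bins_per_channel
    counts = Counter((r // step, g // step, b // step) for r, g, b in pixels)
    total = len(pixels)
    return {bin_: n / total for bin_, n in counts.items()}

# Hypothetical per-photo metadata, as it might travel with each photo after upload.
photos = [
    {"id": "p1", "faces": [(10, 10, 50, 50), (80, 12, 40, 40)]},  # two face bounding boxes
    {"id": "p2", "faces": [(30, 30, 60, 60)]},
    {"id": "p3", "faces": []},
]

def with_at_least(photos, n_faces):
    """Search for photos showing at least n_faces persons, using stored face detections."""
    return [p["id"] for p in photos if len(p["faces"]) >= n_faces]

print(with_at_least(photos, 2))  # ['p1']
hist = coarse_color_histogram([(250, 10, 10), (240, 20, 5), (5, 5, 250), (0, 0, 0)])
print(hist[(1, 0, 0)])  # 0.5 (two of the four pixels fall in the "reddish" bin)
```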

What is needed is a machine-readable description that comes with each photo and allows a site to offer valuable search and selection functionality on the uploaded photos. Even though approaches for photo annotation have been proposed, they still do not address the wide range of metadata and annotations that could and should be stored with an image in a standardized fashion. PhotoRDF [2] "describes a project for describing & retrieving (digitized) photos with (RDF) metadata. It describes the RDF schemas, a data-entry program for quickly entering metadata for large numbers of photos, a way to serve the photos and the metadata over HTTP, and some suggestions for search methods to retrieve photos based on their descriptions." This is a good start, but the standard is split into three different schemas: Dublin Core [3], a Technical Schema, which comprises more or less entries about author, camera and short description, and a Content Schema, which provides a set of 10 keywords. With PhotoRDF, the type and number of attributes is limited; it does not even cover the full EXIF [4] scheme and is also limited with regard to the content description of a photo. The Extensible Metadata Platform (XMP) [5] and the IPTC-NAA standard [6] have been introduced to define how metadata (not only) of a photo can be stored with the media element itself. However, these standards come with their own sets of attributes to describe the photo, or allow individual metadata templates to be defined. This is the killer for any sharing and semantic Web search! What is still missing is an agreed, standardized vocabulary stating which information about a photo is important and relevant to a large set of next-generation digital photo services. The Image Annotation on the Semantic Web document [7] provides a very good overview of existing standards such as those mentioned above. At the same time, it shows how diverse the world of annotation is.

The use case for photo annotation chooses the RDF/XML syntax of RDF in order to gain interoperability. It refers to a large set of different standards and approaches that can be used for image annotation, but there is no unified view on image annotation and the metadata relevant for photos. The attempt here is to integrate existing standards. However, if these are too many, too comprehensive, and even have overlapping attributes, the result might not be adopted as the common photo annotation scheme on the Web. For the low-level features, for example, there is only a link to MPEG-7, but there is no way to actually include an entire XML-based MPEG-7 description of a photo in the raw content. For the description of the content, the use case refers to three domain-specific ontologies: personal history event, location and landscape.

The conclusion is clear: there is no single standardized representation and vocabulary for adding metadata to photos. Even though the different Semantic Web applications and developments should be embraced, a photo annotation standard that is a patchwork of too many different specifications is not helpful. What is urgently needed for content management, search, retrieval, sharing and innovative semantic (Web 2.0) applications is a vocabulary that is perhaps limited and simple but at the same time comprehensive, in a machine-readable, exchangeable, but not overcomplicated representation.

The open question is who would and could define such an integrated, standardized vocabulary.

2.1.4. Issues

An issue for the publication of image metadata might be privacy. Besides a standardized vocabulary for high-level and low-level feature descriptions of photos, there is a need to identify which of these parameters may be made public for semantic search on the Web.

2.2. Use Case: Music

2.2.1. Introduction

In recent years, typical music consumption behaviour has changed dramatically. Personal music collections have grown, favoured by technological improvements in networks, storage, device portability and Internet services. The amount and availability of songs has de-emphasized their value: users often own many digital music files that they have listened to only once or never. It seems reasonable to think that by providing listeners with efficient ways to create a personalized order in their collections, and by providing ways to explore hidden "treasures" inside them, the value of their collections will increase drastically.

Also, although the digital revolution has brought many advantages, we can point out some negative effects. Users own huge music collections that need proper storage and labelling. Searching inside digital collections calls for new methods of accessing and retrieving data. But sometimes there is no metadata (or only the file name) describing the content of the audio, and that is not enough for effective use and navigation of the music collection.

Thus, users can get lost searching through the digital pile of their music collections. Moreover, the Web is increasingly becoming the primary source of music titles in digital form. With millions of tracks available from thousands of websites, finding the right songs and being informed of new music releases is becoming a problematic task. Thus, web page filtering has become necessary for most web users.

Besides, on the digital music distribution front, there is a need to find ways of improving music retrieval effectiveness. Artist, title and genre keywords might not be the only criteria to help music consumers find music they like. This is currently achieved mainly using cultural or editorial metadata ("artist A is somehow related to artist B") or by exploiting existing purchasing behaviour data ("since you bought this artist, you might also want to buy this one"). A largely unexplored (and potentially interesting) complement is the use of semantic descriptors automatically extracted from the music audio files. These descriptors can be applied, for example, to recommend new music or to generate personalized playlists.

2.2.2. Motivating Examples

2.2.2.1. A complete description of a Music title

In [Pac05] (TODO: add reference), Pachet proposes a classification of music knowledge. This classification allows meaningful descriptions of music to be created and exploited to build music-related systems. The three categories that Pachet defines are editorial (EM), cultural (CM) and acoustic metadata (AM).

Editorial metadata includes simple creation and production information (e.g. the song C'mon Billy, written by P.J. Harvey in 1995, was produced by John Parish and Flood, and appears as track number 4 on the album "To Bring You My Love"). In addition, EM includes artist biographies, album reviews, genre information, relationships among artists, etc. As can be seen, editorial information is not necessarily objective. It is often the case that different experts cannot agree on assigning a specific genre to a song or to an artist. Even more difficult is reaching a common consensus on a taxonomy of musical genres.

Cultural metadata is defined as the information that is implicitly present in huge amounts of data. This data is gathered from weblogs, forums, music radio programs, or even from web search engines' results. This information has a clear subjective component as it is based on personal opinions.

The last category of music information is acoustic metadata. In this context, acoustic metadata describes the content analysis of an audio file. It is intended to be objective information. Most current music content processing systems operating on complex audio signals are mainly based on computing low-level signal features. These features are good at characterising the acoustic properties of the signal, returning a description that can be associated with texture or, at best, with the rhythmical attributes of the signal. Alternatively, a more general approach proposes that music content can be successfully characterized according to several "musical facets" (i.e. rhythm, harmony, melody, timbre, structure) by incorporating higher-level semantic descriptors into a given feature set. Semantic descriptors are predicates that can be computed directly from the audio signal by combining signal processing, machine learning techniques and musical knowledge.

Semantic Web languages allow all this metadata to be described, as well as integrated from different music repositories.

The next example shows an RDF description of an artist and a song by that artist:
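As an illustration of the low-level signal features mentioned above, the following sketch computes two standard frame-level features, root-mean-square energy and zero-crossing rate, on a synthetic sine tone. The choice of these two features and the 8 kHz sample rate are our assumptions for the example; the text does not name specific features.

```python
import math

def rms(signal):
    """Root-mean-square energy of a frame of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))

def zero_crossing_rate(signal):
    """Fraction of consecutive sample pairs whose sign changes; a common low-level timbre cue."""
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(signal) - 1)

sr = 8000  # assumed sample rate in Hz
tone = [math.sin(2 * math.pi * 440 * n / sr) for n in range(sr // 10)]  # 100 ms of a 440 Hz tone
print(round(rms(tone), 3))                 # close to 1/sqrt(2), i.e. about 0.707
print(round(zero_crossing_rate(tone), 3))  # roughly 2 * 440 / 8000 = 0.11
```

Such values say little about the music by themselves; semantic descriptors are built on top of many features like these.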

<rdf:Description rdf:about="http://www.garageband.com/artist/randycoleman">
  <rdf:type rdf:resource="&music;Artist"/>
  <music:name>Randy Coleman</music:name>
  <music:decade>1990</music:decade>
  <music:decade>2000</music:decade>
  <music:genre>Pop</music:genre>
  <music:city>Los Angeles</music:city>
  <music:nationality>US</music:nationality>
  <geo:location>
    <geo:Point>
      <geo:lat>34.052</geo:lat>
      <geo:long>-118.243</geo:long>
    </geo:Point>
  </geo:location>
  <music:influencedBy rdf:resource="http://www.coldplay.com"/>
  <music:influencedBy rdf:resource="http://www.jeffbuckley.com"/>
  <music:influencedBy rdf:resource="http://www.radiohead.com"/>
</rdf:Description>

<rdf:Description rdf:about="http://www.garageband.com/song?|pe1|S8LTM0LdsaSkaFeyYG0">
  <rdf:type rdf:resource="&music;Track"/>
  <music:title>Last Salutation</music:title>
  <music:playedBy rdf:resource="http://www.garageband.com/artist/randycoleman"/>
  <music:duration>T00:04:27</music:duration>
  <music:key>D</music:key>
  <music:keyMode>Major</music:keyMode>
  <music:tonalness>0.84</music:tonalness>
  <music:tempo>72</music:tempo>
</rdf:Description>

2.2.2.2. Personalized playlist

Mary has a large music collection. She is in the mood for something laid-back and lets the computer create a playlist based on her current mood. Starting from an existing seed audio track, the steps toward suggesting a "following track" could include:

- Identification of the track being played,

- Metadata collection about the identified track,

- Creation of a list of potentially interesting next tracks, based on metadata distance metrics from the track currently in use and on the availability of local tracks; possibly also using recommender systems (TODO), and

- Filtering the obtained list according to other parameters (e.g. the best-matching track was played too recently, user profile preferences).
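The last two steps can be sketched as a ranking followed by a filter. The sketch below assumes that track metadata such as tempo, tonalness and key mode (compare the music:tempo, music:tonalness and music:keyMode properties in the RDF example) is already available locally. The distance function and its weights are hypothetical illustrations, not a metric proposed by this document.

```python
from dataclasses import dataclass

@dataclass
class Track:
    uri: str
    tempo: float      # beats per minute
    tonalness: float  # 0..1
    key_mode: str     # "Major" or "Minor"

def distance(a: Track, b: Track) -> float:
    """Hypothetical metadata distance: scaled tempo gap + tonalness gap + penalty for a mode change."""
    return (abs(a.tempo - b.tempo) / 100
            + abs(a.tonalness - b.tonalness)
            + (0.5 if a.key_mode != b.key_mode else 0.0))

def next_candidates(seed, library, recently_played, k=3):
    """Rank local tracks by distance to the seed, then drop recently played ones."""
    ranked = sorted((t for t in library if t.uri != seed.uri), key=lambda t: distance(seed, t))
    return [t.uri for t in ranked if t.uri not in recently_played][:k]

seed = Track("last-salutation", 72, 0.84, "Major")  # hypothetical identifiers
library = [
    Track("a", 74, 0.80, "Major"),
    Track("b", 140, 0.30, "Minor"),
    Track("c", 70, 0.85, "Major"),
    Track("d", 90, 0.70, "Major"),
]
print(next_candidates(seed, library, recently_played={"c"}))  # ['a', 'd', 'b']
```

Track "c" is the closest match but is skipped because it was played recently. Repeated presses of the skip button would be a signal that these weights do not match the user's notion of similarity.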

Once the system has obtained a "list" of possible next matches, the device is ready to propose an automatically selected next track to Mary. Moreover, the device could allow Mary to press a "don't like it" button (relevance feedback), which forces the device to propose another track. If Mary presses the skip button more than, say, three times, this might signify that the metric used to calculate the distances among tracks is somehow wrong: it does not match the idea of 'similarity' Mary had in mind.

2.2.2.3. Upcoming concerts

John has been listening to the band "Clap Your Hands Say Yeah" for a while. He discovered the band while listening to one of his favorite podcasts about alternative music. He has to travel to New York next week and is looking for upcoming concerts there that he would enjoy, so he asks his personalized Semantic Web desktop service for some recommendations.

The system tracks the user's listening habits, so it detects that one song by "The Killers" (scraped from their website) sounds similar to the last song John listened to from "Clap Your Hands Say Yeah". Moreover, both bands have similar styles, and some podcasts contain songs from both bands in the same session. Interestingly, the system knows that The Killers are playing close to New York next weekend, so it recommends that John attend that gig.

TODO REFINE. Add FOAF (geolocation)? geonames, Google Maps, etc.

2.2.3. Integrating Various Vocabularies Using RDF

TODO: add ID3, Ogg Vorbis, APEv2, MPEG-7 (for acoustic metadata)

TODO interoperability among music metadata proposals. ID3 to RDF converter. MPEG-7 (any OWL version)

See Vocabularies - Audio Content Section, and Vocabularies - Audio Ontologies Section

2.2.4. Music Metadata on the Semantic Web

TODO

2.3. Use Case: Searching and Presenting News in the Semantic Web

2.3.1. Introduction

More and more news is produced and consumed each day. News items are still mainly textual stories, but they are increasingly often illustrated with graphics, images and videos. News can be further processed by professionals (newspapers), accessed directly by web users through news agencies, or aggregated automatically on the web, generally by search engine portals and not without copyright problems.

To ease the exchange of news, the International Press Telecommunications Council (IPTC) is currently developing the NewsML2 Architecture, whose goal is to provide a single generic model for exchanging all kinds of newsworthy information, thus providing a framework for a future family of IPTC news exchange standards [8]. This family includes NewsML, SportsML, EventsML, ProgramGuideML and a future WeatherML. All are XML-based languages used for describing not only the news content (traditional metadata) but also its management, its packaging, and aspects of the exchange itself (transportation, routing).

However, despite this general framework, some interoperability problems occur. News is about the world, so its metadata might use specific controlled vocabularies. For example, the IPTC itself is developing the IPTC News Codes [9], which currently contain 28 sets of controlled terms. These terms will be the values of the metadata in the NewsML2 Architecture. But news descriptions often refer to other thesauri and controlled vocabularies, which might come from industry, and all of these are represented using different formats. From the media point of view, the pictures taken by journalists come with their EXIF metadata [4]. Some videos might be described using the EBU format [10] or even with MPEG-7 [11].

The problem, then, is how to search and present news content in such a heterogeneous environment.

2.3.2. Motivating Examples

We will need to work on a concrete example. We are currently investigating the financial domain. The idea is to show how the same information can be represented differently using various vocabularies, and to exhibit the resulting interoperability problems.

Interesting ideas:

- Representing financial information using XBRL (eXtensible Business Reporting Language); see also Tim Bray's blog

- Representing the same information using NewsML

There are quite a few news feed services in the financial domain, the two most popular being the Dow Jones Newswire (http://www.djnewswires.com/) and Bloomberg. Dow Jones provides an XML-based representation; Chris will work on getting some sample feed data.

One goal is to provide a platform that allows more semantics in the automated ranking of the creditworthiness of companies. Here, financial news plays an important role, for example by providing "qualitative" information on companies, branches, trends, countries, regions, etc.

2.3.2.1. Query Examples

Cross-modal search on a given topic: I would like to find news related to Iranian nuclear politics: pictures, videos, graphics and news stories.

Integrating heterogeneous resources: I am the CEO of a company that provides video sharing platforms on the Web. I would like to get all news related to my competitors. There exists a thesaurus of all personalities, and a thesaurus of all companies with their CEOs, business activities, etc.

2.3.2.2. Descriptions in the Broadcast Industry

Broadcasting companies also use metadata to describe the content of their videos (and images). The overall objective is still to make existing multimedia material available to journalists writing on a certain topic and, the other way round, to point to news articles that might be related to a video sequence (or an image).

Our starting point is material we received from three broadcasting institutions specialized in news. We do not name them here and we abstract over their documents, since we still have to ask for permission to display this information to the general public. We present below the (large) commonalities we found in the various metadata documents. The main interoperability problems are the use of controlled vocabularies for describing the content of the news (such as the IPTC News Codes) and the overall structuring of the news (which NewsML G2 is standardizing).

The metadata associated with the news videos we have looked at basically distinguishes between information about the global programme (the news programme broadcast on a certain day at a certain time) and information about the parts covering various topics (economics, sports, international, entertainment, etc.). Within those sections there are again different contributions, which we can consider as the "leaves" of the organisational structure: the video on a specific event, topic, person, etc.

The general structure is as follows:

- General information:
  - ID of the news programme
  - Title of the news programme (including day and time of broadcast)
  - Name of the producing company
  - Detailed information about the broadcast
  - Duration of the programme
  - Genre (like "news")
  - Various information for internal use
  - etc.
- Topic information (a segment of the news programme):
  - ID of the news segment
  - Title of the news segment
  - Title of the programme to which the segment belongs
  - Indication of the topic ("sports", "finance" etc.)
  - Name of the producing company
  - Detailed information about the broadcast
  - Duration
  - Genre
  - A descriptive abstract/summary of the topic of the video segment, in natural language
  - Keywords
- Detailed description of the shots in the news segment: a list of natural language expressions describing what can be seen in the different video shots. These NL expressions consist mostly of short phrases and more rarely of full sentences.
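The common structure above can be captured in a small data model, which is a first step toward the "data categories" level of interoperability. The sketch below is a hypothetical illustration: class and field names, the sample programme and the broadcaster name are invented, and only a subset of the listed fields is modelled.

```python
from dataclasses import dataclass, field

@dataclass
class Shot:
    description: str  # short natural-language phrase describing what is visible

@dataclass
class Segment:
    segment_id: str
    title: str
    topic: str        # e.g. "sports", "finance"
    duration_s: int
    keywords: list[str] = field(default_factory=list)
    shots: list[Shot] = field(default_factory=list)

@dataclass
class Programme:
    programme_id: str
    title: str        # includes day and time of broadcast
    producer: str
    duration_s: int
    genre: str = "news"
    segments: list[Segment] = field(default_factory=list)

    def segments_on(self, topic: str) -> list[str]:
        """IDs of the segments whose topic indication matches the given topic."""
        return [s.segment_id for s in self.segments if s.topic == topic]

    def durations_consistent(self) -> bool:
        """Sanity check: the segments together should not last longer than the programme."""
        return sum(s.duration_s for s in self.segments) <= self.duration_s

prog = Programme("prog-1", "Evening News, 20:00", "Example Broadcaster", 900, segments=[
    Segment("seg-1", "Markets close higher", "finance", 95, ["stock market"],
            [Shot("traders on the floor"), Shot("index chart")]),
    Segment("seg-2", "Cup final", "sports", 120),
])
print(prog.segments_on("finance"))  # ['seg-1']
print(prog.durations_consistent())  # True
```

Note that the topic and keyword values are still plain strings here, which is exactly the limitation discussed next.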

Interoperability can be achieved at different levels:

- At the level of data categories, which reflect all the information points mentioned above.

- At the level of the semantic description of the content of the video (image). The descriptions of the video sequences' content are in natural language and sometimes use keywords, which generally belong to controlled vocabularies.

To ensure interoperability here, one has to map the natural language expressions and the keywords to a structured semantic representation. As a first step, we can think of using a combination of the "Structured Text Annotation" and "Semantic Description" schemes of MPEG-7. This is not enough, though, since the values associated with the slots of the structured textual description scheme ("who", "why", "what_action" etc.) and of the Semantic Description Scheme ("event", "location", "object" etc.) are not normalized per se and are not related to semantic resources (such as instances of an ontology). So if a newspaper article reports on events occurring in Paris, Texas, and some metadata of a news video describe scenes of Paris, France, it is difficult to tell the two apart at the level of simple string values in the XML context of MPEG-7.
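Relating string values to ontology instances removes this ambiguity. The following sketch shows the idea with a deliberately naive technique, counting overlaps between the words around a label and a few context terms per candidate; real entity disambiguation is considerably more involved. The candidate URIs under example.org and their context terms are hypothetical placeholders for identifiers from an ontology or gazetteer.

```python
# Hypothetical place URIs and context terms; a real system would use an ontology or gazetteer.
PLACES = {
    "Paris": [
        {"uri": "http://example.org/place/Paris_France", "context": {"france", "seine", "louvre"}},
        {"uri": "http://example.org/place/Paris_Texas", "context": {"texas", "usa", "lamar"}},
    ],
}

def resolve(label, surrounding_words):
    """Map a place label to a URI by counting context-term overlaps; None if there is no evidence."""
    words = {w.lower().strip(".,") for w in surrounding_words}
    candidates = PLACES.get(label, [])
    if not candidates:
        return None
    best = max(candidates, key=lambda c: len(c["context"] & words))
    return best["uri"] if best["context"] & words else None

video = resolve("Paris", "Scenes of Paris, France, along the Seine".split())
article = resolve("Paris", "Storm damage reported in Paris, Texas".split())
print(video)             # http://example.org/place/Paris_France
print(video == article)  # False
```

Once both descriptions point to URIs rather than the string "Paris", comparing them is a simple identity check.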

2.3.2.3. Descriptions According to NewsML

TO DO:

- Chris: example of news feeds in the Financial domain

- Raphael: equivalent description in NewsML

2.3.3. Integrating Various Vocabularies Using RDF

Adding an RDF layer on top of these standards.

2.3.3.1. NewsML G2 in the Semantic Web

2.3.3.2. EXIF in the Semantic Web

One of today's most commonly used image formats and metadata standards is the Exchangeable Image File Format (EXIF) [4]. This file format provides a standard specification for storing metadata about images. Metadata elements pertaining to the image are stored in the image file header and are marked with unique tags, which serve as element identifiers.

As noted in this document, one potential way to integrate EXIF metadata with additional news/multimedia metadata formats is to add an RDF layer on top of the metadata standards. Recently there have been efforts to encode EXIF metadata in Semantic Web standards, which we briefly describe below. The two resulting ontologies are semantically very similar, so we do not compare them further here. Essentially, both are straightforward encodings of the EXIF metadata tags for images (see [4]). There are some syntactic differences, but again they are quite similar; they differ primarily in the naming conventions used.

2.3.3.3. Kanzaki EXIF RDF Schema

The Kanzaki EXIF RDF Schema [12] provides an encoding of the basic EXIF metadata tags in RDFS. Essentially, these are the tags defined in Section 4.6 of [4]. Relevant domains and ranges are defined as well. [12] additionally provides an EXIF conversion service, EXIF-to-RDF (found at [12]), which extracts EXIF metadata from images and automatically maps it to the RDF encoding. In particular, the service takes the URL of an EXIF image and extracts the embedded EXIF metadata. The service then converts this metadata to the RDF schema defined in [12] and returns the result to the user.
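The mapping step of such a conversion can be sketched in a few lines. This is not the EXIF-to-RDF service itself: it starts from already extracted tag/value pairs and writes N-Triples. We assume the namespace http://www.w3.org/2003/12/exif/ns# and assume that property names are the EXIF tag names with a lowercased first letter (e.g. Model becomes model); please check both against the schema [12] before relying on them. Values are emitted as untyped literals for simplicity, and the image URI and camera values are hypothetical.

```python
EXIF_NS = "http://www.w3.org/2003/12/exif/ns#"  # assumed namespace of the Kanzaki schema

def exif_property(tag_name: str) -> str:
    # Assumed naming convention: EXIF tag name with a lowercased first letter.
    return EXIF_NS + tag_name[0].lower() + tag_name[1:]

def escape_literal(value) -> str:
    """Escape backslashes, quotes and line breaks as required in N-Triples string literals."""
    s = str(value)
    return s.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n").replace("\r", "\\r")

def exif_to_ntriples(image_uri: str, tags: dict) -> str:
    """One triple per extracted EXIF tag, sorted by tag name for stable output."""
    lines = [f'<{image_uri}> <{exif_property(k)}> "{escape_literal(v)}" .'
             for k, v in sorted(tags.items())]
    return "\n".join(lines)

tags = {"Make": "ExampleCam", "Model": "X100", "FNumber": 2.8}  # hypothetical extracted values
print(exif_to_ntriples("http://example.org/photos/rome1.jpg", tags))
```

Once in RDF, these triples can be merged with news or photo metadata from other vocabularies in the same graph.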

2.3.3.4. Norm Walsh EXIF RDF Schema

The Norm Walsh EXIF RDF Schema [13] provides another encoding of the basic EXIF metadata tags in RDFS. Again, these are the tags defined in Section 4.6 of [4]. [13] additionally provides JPEGRDF, a Java application that provides an API to read and manipulate EXIF metadata stored in JPEG images. Currently, JPEGRDF can extract, query and augment the EXIF/RDF data stored in the file headers. In particular, the API can be used to convert existing EXIF metadata in file headers to the schema defined in [13]. The resulting RDF can then be stored in the image file header, etc. (Note that the API's functionality greatly extends what is briefly presented here.)

2.3.4. News Syndication on the Semantic Web

From the discussions presented above, it is clear that a Semantic Web based approach (OWL and RDF) provides a solution to integrating the various news metadata formats. Adopting such an approach provides additional benefits as well, mainly due to the formal foundations of such representation languages; in particular advanced news syndication can be provided.

Over the past years, news syndication systems have attracted increasing attention and usage on the Web. As technologies have emerged and matured, there has been a transition to more expressive syndication approaches; that is, subscribers (and publishers) are provided with more expressive means for describing their interests (resp. published content), enabling more accurate news dissemination. Over the years, syndication has moved from keyword-based approaches to attribute-value pairs and, more recently, to XML. Given the lack of expressivity of XML (and XML Schema), there has been interest in using RDF and OWL for syndication purposes.

Using a more expressive approach with formal semantics, many benefits can be achieved. These include a rich semantics-based mechanism for expressing subscriptions and published news items, allowing increased selectivity and finer control over filtering, and automated reasoning for discovering subscription matches not found by traditional syntactic syndication approaches. Therefore, by adopting an approach such as the one presented here, advanced news dissemination services can potentially be provided as well.
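The difference between syntactic and reasoning-based matching can be shown with the simplest kind of inference, the transitivity of subclass relations (as in rdfs:subClassOf). In this sketch the category names are hypothetical, the hierarchy is assumed to be acyclic with a single parent per class, and a plain dictionary stands in for an RDFS/OWL ontology and reasoner.

```python
# Hypothetical news-category hierarchy (child -> parent), standing in for rdfs:subClassOf.
SUBCLASS_OF = {
    "StockMarketNews": "FinancialNews",
    "MergerNews": "FinancialNews",
    "FinancialNews": "News",
    "SportsNews": "News",
}

def superclasses(cls):
    """cls together with all its ancestors (transitive closure; assumes no cycles)."""
    result = {cls}
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        result.add(cls)
    return result

def syntactic_match(subscription, item_type):
    """Keyword-style matching: the labels must be identical."""
    return subscription == item_type

def semantic_match(subscription, item_type):
    """Match if the item's type is the subscribed class or one of its subclasses."""
    return subscription in superclasses(item_type)

item = "StockMarketNews"
print(syntactic_match("FinancialNews", item))  # False
print(semantic_match("FinancialNews", item))   # True
```

A subscriber to financial news thus receives stock market items even though no published item is labelled "FinancialNews".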

2.4. Use Case: Algorithm Representation

2.4.1. Problem

The problem is that algorithms for image analysis are difficult to manage, understand and apply, particularly for non-expert users. For instance, a researcher needs to reduce the noise and improve the contrast in a radiology image prior to analysis and interpretation but is unfamiliar with the specific algorithms that could apply in this instance. In addition, many applications require the processes applied to media to be concisely recorded for re-use, re-evaluation or integration with other analysis data. Quantifying and integrating knowledge, particularly visual outcomes, about algorithms for media is a challenging problem.

2.4.2. Solution

Our proposed solution is to use an algorithm ontology to record and describe available algorithms for application to image analysis. This ontology can then be used to interactively build sequences of algorithms to achieve particular outcomes. In addition, the record of processes applied to the source image can be used to define the history and provenance of data.

The algorithm ontology should consist of information such as:

- name

- informal natural language description

- formal description

- input format

- output format

- example media prior to application

- example media after application

- goal of the algorithm

- ...
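The following is a minimal sketch, assuming Python 3.9+, of how one entry of such an algorithm ontology could be captured. The entry is then flattened into RDF-style (subject, property, object) triples. The namespace `http://example.org/alg#` and all property names (`hasInput`, `hasOutput`, `hasGoal`, etc.) are hypothetical placeholders, not terms from an existing ontology.

```python
from dataclasses import dataclass, field

ALG = "http://example.org/alg#"  # hypothetical namespace for the algorithm ontology

@dataclass
class AlgorithmDescription:
    """One entry of the (hypothetical) algorithm ontology, mirroring the list above."""
    name: str
    informal_description: str
    formal_description: str
    input_format: str
    output_format: str
    goal: str
    examples_before: list[str] = field(default_factory=list)  # media URIs
    examples_after: list[str] = field(default_factory=list)

    def to_triples(self) -> list[tuple[str, str, str]]:
        """Flatten the record into (subject, property, object) triples."""
        s = ALG + self.name
        triples = [
            (s, ALG + "informalDescription", self.informal_description),
            (s, ALG + "formalDescription", self.formal_description),
            (s, ALG + "hasInput", self.input_format),
            (s, ALG + "hasOutput", self.output_format),
            (s, ALG + "hasGoal", self.goal),
        ]
        triples += [(s, ALG + "exampleBefore", uri) for uri in self.examples_before]
        triples += [(s, ALG + "exampleAfter", uri) for uri in self.examples_after]
        return triples

gaussian = AlgorithmDescription(
    name="GaussianFilter",
    informal_description="Smooths an image by convolving it with a Gaussian kernel",
    formal_description="I' = G_sigma * I (2D convolution)",
    input_format="GreyscaleImage",
    output_format="GreyscaleImage",
    goal="NoiseReduction",
    examples_before=["http://example.org/media/xray-noisy.png"],
    examples_after=["http://example.org/media/xray-smoothed.png"],
)
for triple in gaussian.to_triples():
    print(triple)
```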

To achieve this solution we need:

- a sufficiently detailed and well-constructed algorithm ontology;

- a core multimedia ontology;

- domain ontologies and

- the underlying interchange framework supplied by semantic web technologies such as XML and RDF.

The benefits of this approach are:

- modularity through the use of independent ontologies to ensure usability and flexibility;

- …

2.4.3. State of the Art and Challenges

Currently there exists a taxonomy/thesaurus of image analysis algorithms on which we are working [14, 15], but it is insufficient to support the required functionality. We are collaborating on expanding this taxonomy and converting it into an OWL ontology.

The challenges are:

- to articulate and quantify the ‘visual’ result of applying algorithms;

- to associate practical example media with the algorithms specified;

- to integrate and harmonise the ontologies;

- to reason with and apply the knowledge in the algorithm ontology (e.g. using input and output formats to align processes).
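The last challenge (using input and output formats to align processes) can be illustrated with a small Python sketch. It runs a breadth-first search over a hypothetical catalogue of algorithms. The search looks for the shortest chain in which each algorithm's output format is the next one's input format. The algorithm and format names are assumptions for illustration.

```python
from collections import deque
from typing import Optional

# Hypothetical catalogue: algorithm name -> (input format, output format).
ALGORITHMS = {
    "WienerFilter": ("GreyscaleImage", "GreyscaleImage"),
    "RegionGrowing": ("GreyscaleImage", "LabelImage"),
    "RegionSorter": ("LabelImage", "RegionArray"),
    "DescriptorMatcher": ("RegionArray", "ClinicalDescriptor"),
}

def find_chain(source: str, target: str) -> Optional[list[str]]:
    """Breadth-first search for the shortest sequence of algorithms whose
    output format feeds the next algorithm's input format."""
    queue = deque([(source, [])])
    seen = {source}
    while queue:
        fmt, chain = queue.popleft()
        if fmt == target:
            return chain
        for name, (inp, out) in ALGORITHMS.items():
            if inp == fmt and out not in seen:
                seen.add(out)
                queue.append((out, chain + [name]))
    return None  # no chain of compatible formats exists

print(find_chain("GreyscaleImage", "ClinicalDescriptor"))
# ['RegionGrowing', 'RegionSorter', 'DescriptorMatcher']
```

Format matching alone never selects the format-preserving `WienerFilter`. Deciding whether such a step is needed requires richer knowledge, such as preconditions and effects.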

2.4.4. Possible Applications

The formal representation of the semantics of algorithms enables recording of provenance, provides reasoning capabilities, facilitates application and supports interoperability of data. This is important in fields such as:

- Smart assistance to support quality control and defect detection of complex, composite, manufactured objects;

- Biometrics (face recognition, human behaviour, etc.)

- The composition of web services to automatically analyse media based on user goals and preferences;

- The formal definition of protocols and procedures in fields that depend heavily on media analysis, such as scientific or medical research.

These applications use media analysis and need to integrate information from a range of sources. Often, recording the provenance of conclusions and being able to reproduce and defend results are critical.

For example, in aeronautical engineering, aeroplanes are built from components manufactured in many different locations. Quality control and defect detection require data from many disparate sources. An inspector should be able to assess the integrity of a component by acquiring local data (images and others), combining it with information from one or more databases and possibly consulting an expert.

2.4.5. Example

Problem:

- Suggest possible clinical descriptors (e.g. pneumothorax) given a chest x-ray.

Hypothesis of solution:

1) Get a digital chest x-ray of patient P (image A).

2) Apply to image A a digital filter that improves the signal-to-noise ratio (image B).

3) Apply to image B a region detection algorithm, which segments image B into a partition of homogeneous regions (image C).

4) Apply to image C an algorithm that sorts the regions according to a given criterion based on their geometrical and densitometric properties (from largest to smallest, from darkest to lightest, etc.) (array D).

5) Apply to array D an algorithm that searches a database of clinical descriptors and detects the one that best fits the similarity criterion (result E).

However, we should consider the following aspects:

- Step 2: Which digital filter should be applied to image A? Different kinds of filters can be considered (Fourier, Wiener, smoothing, etc.), each with different input-output formats and giving slightly different results.

- Step 3: Which segmentation algorithm should be used? Different algorithms can be considered (clustering, histogram-based, homogeneity criterion, etc.).

- Step 4: How should the geometrical and densitometric properties of the regions be defined? There are several possibilities, depending on the mathematical models chosen for describing closed curves (regions) and the grey-level distribution inside each region (histogram, Gaussian-like, etc.).

- Step 5: How should similarity between patterns be defined? Multiple approaches can be applied (vector distance, probability, etc.).

Each step could be influenced by the previous ones.
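The five steps of the hypothesis can be sketched as a toy Python pipeline on a tiny greyscale image stored as nested lists. Each function makes one arbitrary choice among the alternatives above:

- a 3x3 mean filter;
- threshold plus 4-connected component labelling;
- (area, mean intensity) region features;
- Euclidean nearest-descriptor matching.

The image and the reference descriptor vectors are made up for illustration and carry no clinical meaning.

```python
import math

Image = list[list[float]]  # greyscale image, values in [0, 1]

def mean_filter(img: Image) -> Image:
    """Step 2: 3x3 mean filter; border pixels average the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(window) / len(window)
    return out

def segment(img: Image, threshold: float) -> list[list[int]]:
    """Step 3: label 4-connected regions darker than the threshold (0 = background)."""
    h, w = len(img), len(img[0])
    labels = [[0] * w for _ in range(h)]
    next_label = 1
    for y in range(h):
        for x in range(w):
            if img[y][x] >= threshold or labels[y][x]:
                continue
            labels[y][x] = next_label
            stack = [(y, x)]
            while stack:  # iterative flood fill
                cy, cx = stack.pop()
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and img[ny][nx] < threshold and not labels[ny][nx]):
                        labels[ny][nx] = next_label
                        stack.append((ny, nx))
            next_label += 1
    return labels

def region_features(img: Image, labels: list[list[int]]) -> list[tuple[int, float]]:
    """Step 4: (area, mean intensity) per region, sorted from largest to smallest."""
    stats: dict[int, tuple[int, float]] = {}
    for y, row in enumerate(labels):
        for x, label in enumerate(row):
            if label:
                area, total = stats.get(label, (0, 0.0))
                stats[label] = (area + 1, total + img[y][x])
    features = [(area, total / area) for area, total in stats.values()]
    return sorted(features, key=lambda f: f[0], reverse=True)

def best_descriptor(features: list[tuple[int, float]],
                    database: dict[str, tuple[float, float]]) -> str:
    """Step 5: descriptor closest (Euclidean distance) to the largest region."""
    largest = features[0]
    return min(database, key=lambda name: math.dist(largest, database[name]))

# Made-up reference vectors (area, mean intensity); not clinical data.
DATABASE = {"pneumothorax": (6.0, 0.4), "no finding": (0.0, 0.9)}

image_a = [
    [0.9, 0.9, 0.9, 0.9, 0.9, 0.9],
    [0.9, 0.1, 0.1, 0.9, 0.9, 0.9],
    [0.9, 0.1, 0.1, 0.9, 0.2, 0.9],
    [0.9, 0.9, 0.9, 0.9, 0.9, 0.9],
]
image_b = mean_filter(image_a)
image_c = segment(image_b, threshold=0.6)
array_d = region_features(image_b, image_c)
result_e = best_descriptor(array_d, DATABASE)
print(array_d, result_e)
```

In this toy input, the mean filter averages away the single dark pixel at row 2, column 4, so only one region survives segmentation. This illustrates how the choice made at one step influences the next.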

Finally, there are two types or levels of interoperability to be considered:

- 1) low-level interoperability, concerning data formats and algorithms, how they are passed between or selected at the different steps, and consequently the possible related ontologies (algorithm ontology, media ontology, …);

- 2) high-level interoperability, concerning the semantics underlying the domain problem, that is, how similar problems (segment this image; improve image quality) can be addressed or even solved using codified 'experience' extracted from well-known case studies.

In our present use case proposal we focused our attention mainly on the latter.

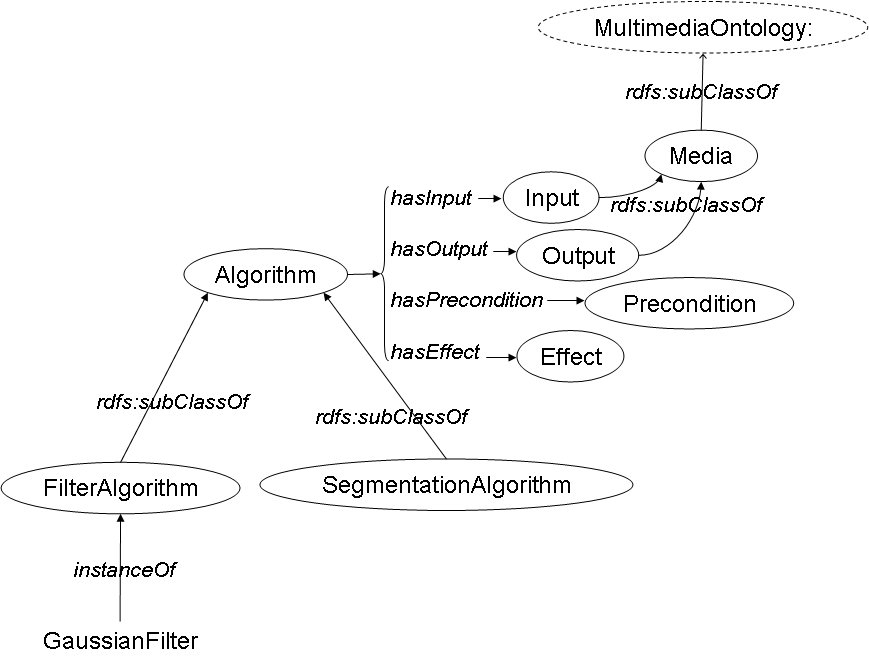

Figure 1: Segment of Algorithm Ontology

Goal: to segment the chest x-ray image (step 3)

A segmentation algorithm is selected. To be most effective, this segmentation algorithm requires a particular signal-to-noise ratio. This requirement is defined as the precondition (Algorithm.hasPrecondition) of the segmentation algorithm (instanceOf.SegmentationAlgorithm). To satisfy it, a filter algorithm is found (Gaussian.instanceOf.FilterAlgorithm) that has the effect (Algorithm.hasEffect) of improving the signal-to-noise ratio for images of the same type as the chest x-ray image (Algorithm.hasInput). By comparing the value of the segmentation algorithm's precondition with the effect of the filter algorithm, we can decide which algorithms are best suited to achieve our goal.
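The precondition/effect comparison can be sketched in Python. The sketch rests on a loud assumption: both the precondition and the effect are quantified as signal-to-noise ratios in decibels, and a filter's gain simply adds to the image's current SNR. How to describe and compare these values formally is exactly the open question noted below. The class names echo `Algorithm.hasInput`, `Algorithm.hasEffect` and `Algorithm.hasPrecondition`, but all numbers and names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class FilterAlgorithm:
    name: str
    has_input: str       # image type accepted (cf. Algorithm.hasInput)
    snr_gain_db: float   # hypothetical quantified effect (cf. Algorithm.hasEffect)

@dataclass
class SegmentationAlgorithm:
    name: str
    min_snr_db: float    # hypothetical quantified precondition (cf. Algorithm.hasPrecondition)

def needs_filter(image_snr_db: float, seg: SegmentationAlgorithm) -> bool:
    """True if the image does not yet satisfy the segmenter's precondition."""
    return image_snr_db < seg.min_snr_db

def suitable_filters(image_type: str, image_snr_db: float,
                     seg: SegmentationAlgorithm,
                     filters: list[FilterAlgorithm]) -> list[FilterAlgorithm]:
    """Filters that accept this image type and whose (assumed additive) effect
    meets the precondition, ordered from least to most aggressive."""
    ok = [f for f in filters
          if f.has_input == image_type
          and image_snr_db + f.snr_gain_db >= seg.min_snr_db]
    return sorted(ok, key=lambda f: f.snr_gain_db)

filters = [
    FilterAlgorithm("Gaussian", "ChestXRay", 6.0),
    FilterAlgorithm("Wiener", "ChestXRay", 9.0),
    FilterAlgorithm("Median", "Photograph", 8.0),
]
segmenter = SegmentationAlgorithm("RegionGrowing", min_snr_db=20.0)
if needs_filter(15.0, segmenter):
    print([f.name for f in suitable_filters("ChestXRay", 15.0, segmenter, filters)])
# prints ['Gaussian', 'Wiener']
```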

The open research questions that we are currently investigating relate to the formal description of the values of effect and precondition and how these can be compared and related. The interoperability of the media descriptions and the ability to describe visual features in a sufficiently abstract manner are key requirements.

2.5. Use Case: Collaborative Tagging

2.5.1. Introduction

Tags are perhaps the simplest form of annotation: simple user-provided keywords that are assigned to resources in order to support subsequent retrieval. In itself, this idea is neither new nor revolutionary: keyword-based retrieval has been around for a long time. In contrast to the formal semantics provided by the Semantic Web standards, tags have no semantic relations whatsoever, not even a hierarchy; tags are just flat collections of keywords.

There are however new dimensions that have boosted the popularity of this approach and given a new perspective on an old theme: low-cost applicability and collaborative tagging.

Tagging lowers the barrier to metadata annotation, since it requires minimal effort from annotators. There are no special tools or complex interfaces that the user needs to become familiar with, and no deep understanding of logic or formal semantics is required, just some standard technical expertise. Tagging seems to work in a way that is intuitive to most people, as demonstrated by its widespread adoption as well as by studies conducted in the field [20]. Thus, it helps bridge the 'semantic gap' between content creators and content consumers by offering 'alternative points of access' [20] to document collections.

The main idea behind collaborative tagging is simple: collaborative tagging platforms (also called distributed classification systems, DCSs [19]) provide the technical means, usually via some sort of web-based interface, to support users in tagging resources. The important aspect is that they aggregate the collections of tags used by each individual (his or her tag vocabulary, called a personomy [24]) into what has been termed a folksonomy: the collection of all personomies [22,23].
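The relationship between personomy and folksonomy can be made concrete in a small Python sketch. It uses a common formalisation in which a folksonomy is a set of (user, tag, resource) assignments and a personomy is the subset contributed by one user. This formalisation is assumed here for illustration rather than taken from [22,23,24], and the user and resource names are made up.

```python
from typing import NamedTuple

class TagAssignment(NamedTuple):
    user: str
    tag: str
    resource: str

def personomy(folksonomy: set[TagAssignment], user: str) -> set[TagAssignment]:
    """The part of the folksonomy contributed by one user."""
    return {a for a in folksonomy if a.user == user}

def tag_vocabulary(assignments: set[TagAssignment]) -> set[str]:
    """Distinct tags used in a set of tag assignments."""
    return {a.tag for a in assignments}

folksonomy = {
    TagAssignment("mary", "holidays", "photo1.jpg"),
    TagAssignment("mary", "beach", "photo1.jpg"),
    TagAssignment("sylvia", "holidays", "img42.jpg"),
}
print(sorted(tag_vocabulary(personomy(folksonomy, "mary"))))  # ['beach', 'holidays']

# Under this model, the folksonomy is exactly the union of all personomies.
users = {a.user for a in folksonomy}
assert set().union(*(personomy(folksonomy, u) for u in users)) == folksonomy
```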

Some of the most popular collaborative tagging systems are Delicious (bookmarks), Flickr (images), Last.fm (music), YouTube (video), Connotea (bibliographic information), steve.museum (museum items) and Technorati (blogging). Using these platforms is free, although in some cases users can opt to pay for an upgraded account with more advanced features. The most prominent among them are Delicious and Flickr, for which some quantitative user studies are available [16,17]. These studies document phenomenal growth, which indicates that in real life tagging is a very viable solution for annotating any type of resource.

2.5.2. Motivating Scenario

Let us examine some of the current limitations of tag-based annotation through a motivating example:

Let's suppose that user Mary has an account on platform S1, that specializes in images. Mary has been using S1 for a while, so she has progressively built a large image collection, as well as a rich vocabulary of tags (personomy).

Another user, Sylvia, who is Mary's friend, uses a different platform, S2, to annotate her images. At some point, Mary and Sylvia attended the same event, and each took some pictures with her own camera. As each user has her own reasons for choosing a preferred platform, neither of them wants to switch. They would, however, like to be able to link to each other's annotated pictures where applicable. Since the pictures were taken at the same time and place, some of them can be expected to be annotated in a similar way (with the same tags), even by different annotators. They may therefore (within the limits of word ambiguity) be about the same topic.

Over time, Mary also becomes interested in video and starts shooting some of her own. As her personal video collection begins to grow, she decides to start using another collaborative tagging system, S3, which specializes in video, in order to organise it better. Since she has already built a rich personomy in S1, she would naturally like to reuse it in S3 to the extent possible. Some of her tags may not be appropriate, as they represent one-off ('29-08-06') or photography-specific ('CameraXYZ') use. Others might well be reused across modalities/domains if they represent high-level concepts ('holidays'). So if Mary has both video and photographic material of some event, she would naturally like to reuse her S1 personomy (perhaps partially) on S3 as well.

2.5.3. Issues

The above scenario demonstrates limitations of tag-based systems with respect to personomy reuse:

- A personomy maintained at one platform cannot easily be reused for a tag-based retrieval on another tagging platform

- A personomy maintained at one platform cannot easily be reused to organize further media or resources on another tagging platform

Moreover, since media does not reside only on Internet platforms but is most likely first maintained on a local computer, local organizational structures cannot easily be transferred to a tagging platform either. The converse also holds: a personomy maintained on a tagging platform cannot easily be reused on a desktop computer.

Personomy reuse is currently not easily possible as each platform uses ad-hoc solutions and only provides tag navigation within its own boundaries: there is no standardization that regulates how tags and relations between tags, users, and resources are represented. Due to that lack of standardization there are further technical issues that become visible through the application programming interfaces provided by some tagging platforms:

- Some platforms prohibit tags containing space characters, while others allow such tags

- Different platforms provide different functionality for organizing the tags themselves, e.g. some platforms allow tags to be grouped into tag bundles
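To make the space-character issue concrete, here is a minimal Python sketch of exporting a personomy to a platform that prohibits spaces in tags. Joining words with a hyphen is an assumed convention, not a rule of any particular platform. The export keeps a mapping back to the original tags so that lossy collisions remain visible.

```python
def export_tag(tag: str, allows_spaces: bool, joiner: str = "-") -> str:
    """Adapt one tag to a target platform's whitespace rule."""
    normalized = " ".join(tag.split())  # trim and collapse runs of whitespace
    return normalized if allows_spaces else normalized.replace(" ", joiner)

def export_personomy(tags: list[str], allows_spaces: bool,
                     joiner: str = "-") -> dict[str, list[str]]:
    """Map each exported tag to the original tags it came from, so that
    collisions (e.g. 'new york' vs 'new-york') are not silently lost."""
    mapping: dict[str, list[str]] = {}
    for tag in tags:
        mapping.setdefault(export_tag(tag, allows_spaces, joiner), []).append(tag)
    return mapping

print(export_personomy(["new york", "new-york", "holidays"], allows_spaces=False))
# {'new-york': ['new york', 'new-york'], 'holidays': ['holidays']}
```

The collision in the example shows why ad-hoc conversion between platforms is lossy without a shared representation.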

2.5.4. Possible Solutions

When it comes to interoperability, standards-based solutions have repeatedly proven successful in bridging different systems. This could also be the case here, as a standard for expressing personomies and folksonomies would enable interoperability across platforms. On the other hand, using a standard should not force changes in the way each system handles tags internally; it simply aims to function as a bridge between different systems. The question, then, is: which standard?

We may be able to answer this question if we consider a personomy as a concept scheme: the tags used by an individual express his or her expertise, interests and vocabulary, thus constituting the individual's own concept scheme. A recent W3C specification designed specifically to express the basic structure and content of concept schemes is SKOS Core [21]. The SKOS Core Vocabulary is an application of the Resource Description Framework (RDF) that can be used to express a concept scheme as an RDF graph. Using RDF allows data to be linked to and/or merged with other RDF data by Semantic Web applications.

Expressing personomies and folksonomies using SKOS is a good match for promoting a standard representation for tags, as well as for integrating tag representation with Semantic Web standards. Not only does it enable expression of personomies in a standard format that fits semantically, but it also allows mixing personomies with existing Semantic Web ontologies. There is already a publicly available SKOS-based tagging ontology that can be used to build on [18], as well as some existing efforts to induce an ontology from collaborative tagging platforms [25].
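As a rough illustration, the following Python sketch serializes a personomy as a SKOS concept scheme in Turtle. It uses the SKOS Core namespace and the `skos:ConceptScheme`, `skos:Concept`, `skos:prefLabel` and `skos:inScheme` terms. The base IRI `http://example.org/` and the IRI layout for users and tags are hypothetical choices. Since tags are flat, no relations between concepts are emitted.

```python
from urllib.parse import quote

SKOS = "http://www.w3.org/2004/02/skos/core#"

def turtle_literal(text: str) -> str:
    """Quote a string as a Turtle literal, escaping backslashes, quotes and newlines."""
    escaped = text.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{escaped}"'

def personomy_to_skos(user: str, tags: list[str],
                      base: str = "http://example.org/") -> str:
    """Serialize one user's tags as a SKOS concept scheme (hypothetical IRI layout)."""
    user_iri = base + quote(user, safe="")
    scheme = f"<{user_iri}/personomy>"
    lines = [f"@prefix skos: <{SKOS}> .", "", f"{scheme} a skos:ConceptScheme ."]
    for tag in sorted(set(tags)):  # one concept per distinct tag
        concept = f"<{user_iri}/tag/{quote(tag, safe='')}>"
        lines.append(f"{concept} a skos:Concept ;")
        lines.append(f"    skos:prefLabel {turtle_literal(tag)} ;")
        lines.append(f"    skos:inScheme {scheme} .")
    return "\n".join(lines)

print(personomy_to_skos("mary", ["holidays", "new york", "holidays"]))
```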

Ideally, we would expect existing collaborative tagging platforms to build on a standard representation for tags in order to enable interoperability, and to offer this as a service to their users. In practice, however, even if such a representation were eventually adopted as a standard, we expect that technical and political reasons could hinder its adoption. A different strategy for dealing with this issue would then be to implement a separate service that integrates disparate collaborative tagging platforms based on such an emerging standard for tag representation, in the spirit of Web 2.0 mashups. This service could either be provided by a third party or even be self-hosted by individual users, in the spirit of [26,27].

2.6. Use Case: Semantic Media retrieval - The Analysis perspective

2.6.1. Introduction

Semantic media retrieval from a multimedia analysis perspective is equivalent to the automatic creation of semantic indexes for retrieval purposes. An efficient multimedia retrieval system [28] must:

- (i) be able to handle the semantics of the query;

- (ii) unify multiple modalities in a homogeneous framework;

- (iii) abstract the relationship between low-level media features and high-level semantic concepts, so that users can query in terms of these concepts rather than in terms of examples.

This use case aims to pinpoint problems that arise when creating semantic indexes automatically and to provide corresponding solutions using Semantic Web technologies.

In multimedia document retrieval that uses only low-level features, as in “retrieval by example”, the required low-level features can be computed automatically. On the other hand, this approach cannot answer high-level queries. For this, an abstraction towards a high-level description of multimedia content is required. For example, the MPEG-7 standard, which provides metadata descriptors for structural and low-level aspects of multimedia documents, needs to be properly linked to domain-specific ontologies that model high-level semantics.

Furthermore, since cross-linking between different media types or modalities offers rich scope for inferring a semantic interpretation, interoperability between different single-media schemes is an important issue. This stems from the need to homogenise different single modalities, which:

- (i) may infer particular high-level semantics with different degrees of confidence;

- (ii) may be supported by world models (ontologies) in which different relationships exist, e.g. spatial relationships in an image versus spatio-temporal relationships in a video sequence;

- (iii) may play different roles in a cross-modal setting, i.e. one modality may trigger the other.

For example, to identify that a particular photo in a Web page depicts person X, we first extract information from the text and then cross-validate it with the corresponding information extracted from the image.

Both of the above concerns, single-modality and cross-modality, require semantic interoperability that supports knowledge representation as well as a multimedia analysis component directed towards automatic semantics extraction from multimedia for semantic indexing and retrieval.

2.6.2. Motivating example

In the following, current pitfalls with respect to the desired semantic interoperability are illustrated by examples. These are not the only pitfalls; further discussion is therefore needed to cover the broad scope of semantic multimedia retrieval.

Example 1 - Lack of semantics in low-level descriptors

Linking of low-level features to high-level semantics can be obtained by following two main trends: (i) using machine learning techniques to infer the required mapping and (ii) using ontology-driven approaches to both guide the semantic analysis and infer high-level concepts using reasoning.

In both trends, it is appropriate, at least at a certain granularity, to produce concept detectors through a training phase based on feature sets. Semantic interoperability cannot be achieved merely by exchanging low-level features between different users, since these lack formal semantics. In particular, a set of low-level descriptors (e.g. MPEG-7) is not semantically meaningful, since it has no intuitive interpretation: low-level descriptors are represented as vectors of numerical values. Therefore, they are useful for content-based multimedia retrieval rather than for semantic retrieval.

Furthermore, since a set of low-level descriptors can only be devised by multimedia analysis experts, this set should be transparent to (i.e. hidden from) any other user. For example, although a non-expert user can understand the color and shape of a particular object, he or she is unable to give this object a suitable representation by selecting appropriate low-level descriptors. Clearly, low-level descriptors not only lack semantics, but their direct use is also limited to people with particular expertise in multimedia analysis.

The problem raised by this example, which needs to be solved, is how low-level descriptors can be turned into an exchangeable bag of semantics.
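Purely numerical descriptors only support query-by-example, as a short Python sketch shows. It ranks images by Euclidean distance between hypothetical 4-bin color histograms, which stand in for an MPEG-7 color descriptor. The file names and values are made up. Nothing in the vectors tells a non-expert, or another system, that an image depicts a "beach".

```python
import math

# Hypothetical 4-bin color histograms standing in for a low-level descriptor.
collection = {
    "beach.jpg":  [0.10, 0.20, 0.60, 0.10],
    "forest.jpg": [0.05, 0.70, 0.15, 0.10],
    "sea.jpg":    [0.05, 0.25, 0.65, 0.05],
}

def query_by_example(example: list[float], k: int = 2) -> list[str]:
    """Rank items by Euclidean distance between descriptor vectors."""
    ranked = sorted(collection, key=lambda name: math.dist(example, collection[name]))
    return ranked[:k]

print(query_by_example(collection["beach.jpg"]))  # ['beach.jpg', 'sea.jpg']
```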

Example 2 - Interchange of semantics between media

In semantic multimedia retrieval, cross-modality aspects are dominant. One aspect, again motivated by the analysis part, concerns concepts whose extraction requires the modalities to be processed in a particular order. For example, recognizing the face of a particular person in an image on a Web page is a very difficult task. To improve it, it seems natural to first draw an inference from the textual content and then validate it against the semantics extracted from the image.

For the sake of clarity, a possible scenario is described in the following which is taken from the ‘sports’ domain and more specifically from ‘athletics’.

Let us assume that we need to index the web page shown in Figure 1, which is taken from the site of the International Association of Athletics Federations [29]. The subject of this page is ‘the victory of the athlete Reiko Sosa at the Tokyo marathon’. Let us try to answer the question: what analysis steps are required to enable semantic retrieval for the query “show me images with the athlete Reiko Sosa”?

One might notice that each image in this web page has a caption containing very useful information about the content of the image, in particular the persons appearing in it. Therefore, it is important to identify the image areas and the caption areas. Let us assume that we can detect those areas (how is not relevant here). We then extract semantics from the textual content of the caption, which identifies Person Names = {Naoko Takahashi, Reiko Sosa}, Places = {Tokyo}, Athletics type = {Women’s Marathon} and activity = {runs away from} (see Figure 1, in yellow and blue).

From the images, we can identify the following concepts and relationships. In the image in the upper part of the web page, we can detect the athletes’ faces and, from the spatial relationship between those faces, identify which face (athlete) is leading. Using only the image, however, we cannot conclude who the athletes are. In the image in the lower part of the web page, face detection tells us that a person is present, but we still cannot determine to whom the face belongs.

If we combine the semantics from the textual information in the captions with the semantics from the images, we can give strong support to reasoning mechanisms to reach the conclusion that “we have images with the athlete Reiko Sosa”. Moreover, when several athletes appear, as in the upper image, reasoning over the identified spatial relationship can determine which of the two is Reiko Sosa.
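The text-first, image-second reasoning can be sketched with a deliberately simple, hypothetical fusion rule in Python. The rule assumes the following:

- caption analysis has yielded the persons named and, from a phrase like "X runs away from Y", which of them is ahead;
- image analysis has yielded the detected faces, ordered from leading to trailing.

Generic placeholder names are used instead of reading specific facts off Figure 1. The data structures and the rule itself are illustrative assumptions.

```python
from typing import Optional

def label_faces(caption_persons: list[str], leader: Optional[str],
                faces: list[str]) -> dict[str, str]:
    """Fuse caption semantics with face detections (faces ordered leading
    to trailing). Returns face -> person, or {} if evidence is insufficient."""
    if len(faces) == 1 and len(caption_persons) == 1:
        return {faces[0]: caption_persons[0]}
    if leader is not None and len(faces) == 2 and len(caption_persons) == 2:
        trailing = next(p for p in caption_persons if p != leader)
        return {faces[0]: leader, faces[1]: trailing}
    return {}

def images_with(person: str, page: list[dict]) -> list[str]:
    """Answer 'show me images with <person>' from the fused annotations."""
    hits = []
    for image in page:
        mapping = label_faces(image["caption_persons"], image["leader"], image["faces"])
        if person in mapping.values():
            hits.append(image["id"])
    return hits

page = [  # placeholder analysis results for a two-image page
    {"id": "upper", "caption_persons": ["Person X", "Person Y"],
     "leader": "Person X", "faces": ["face1", "face2"]},
    {"id": "lower", "caption_persons": ["Person Y"], "leader": None,
     "faces": ["face3"]},
]
print(images_with("Person Y", page))  # ['upper', 'lower']
```

Without the caption, neither rule can fire. This mirrors the observation that the image alone cannot tell us who the athletes are.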

To realise a scenario such as the one above, we must solve the problem of interchanging semantics between different modalities.

(check the digital rights) http://www.iit.demokritos.gr/~ipratika/mmsem/webex1.jpg

Figure 1: Example of a web page about athletics

2.6.3. Possible solutions

Example 1 (The solution)

As mentioned in Example 1, semantics extraction can be achieved via concept detectors trained on feature sets. Towards this goal, [30] recently suggested moving from a low-level description to a more semantic description by extending MPEG-7 to facilitate sharing classifier parameters and class models. This would be done by representing the classification process in a standardised form. A classifier description must specify:

- what kind of data it operates on;

- the feature extraction process;

- the transformation that generates feature vectors;

- a model that associates specific feature vector values with an object class.

For this, an upper ontology, called a classifier ontology, could be created. It could be linked to a multimedia core ontology (e.g. the CIDOC CRM ontology), a visual descriptor ontology [31] and a domain ontology. (Here, a link to the Algorithm Representation use case could be made.) In the proposed solution, the visual descriptor ontology consists of a superset of the MPEG-7 descriptors, since the existing MPEG-7 descriptors cannot always support an optimal feature set for a particular class.

The following scenario exemplifies the use of this proposal. Maria is an architect who wishes to retrieve available multimedia material of a particular architectural style, such as ‘Art Nouveau’, ‘Art Deco’ or ‘Modern’, from the bulk of data she has already stored using her multimedia management software. Given her particular interest, she plugs in the ‘Art Nouveau classifier kit’. The kit enables the retrieval of all images or videos that correspond to this style, whether in visual form, non-visual form or a combination of both. An example is a video exploring the House of V. Horta, a major representative of the Art Nouveau style in Brussels, which includes visual instances of the style as well as a narration about the history of Art Nouveau.

Here, the exchangeable bag of semantics is directly linked to an exchangeable bag of trained classifiers.
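An exchangeable classifier description can be sketched in Python as a plain JSON document that one application writes and another loads and applies. The sketch covers the four elements listed above: data type, feature extraction, transformation and model. A nearest-centroid model is swapped in purely for simplicity. The keys, feature names and numbers are hypothetical and do not follow MPEG-7 or the syntax proposed in [30].

```python
import json
import math

# Hypothetical "Art Nouveau classifier kit"; keys, feature names and numbers are
# illustrative assumptions, not MPEG-7 or [30] syntax.
art_nouveau_kit = {
    "operatesOn": "StillImage",
    "featureExtraction": ["edgeCurvature", "dominantHue"],
    "transformation": {"type": "min-max", "min": [0.0, 0.0], "max": [10.0, 255.0]},
    "model": {
        "type": "nearest-centroid",
        "centroids": {"ArtNouveau": [0.8, 0.6], "Modern": [0.2, 0.3]},
    },
}

def classify(description: dict, raw_features: list[float]) -> str:
    """Apply a received classifier description to raw feature values."""
    t = description["transformation"]
    x = [(v - lo) / (hi - lo) for v, lo, hi in zip(raw_features, t["min"], t["max"])]
    centroids = description["model"]["centroids"]
    return min(centroids, key=lambda c: math.dist(x, centroids[c]))

shared = json.dumps(art_nouveau_kit)  # exchange the kit as plain text...
received = json.loads(shared)         # ...and load it in another application
print(classify(received, [7.5, 160.0]))  # ArtNouveau
```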

Example 2 (The solution)

In this example, supporting reasoning mechanisms requires a cross-modality ontological description in addition to the ontological descriptions for each modality. This description should interconnect all possible relations from each modality and provide cross-modality-specific rules. It is not clear whether this can be achieved by an upper multimedia ontology (see [31]) or by a new cross-modality ontology that strives to represent all possible combinations of media. Furthermore, in such a cross-modality ontology, special attention should be paid to representing the priorities among modalities for any multimodal concept (e.g. extract textual semantics first in order to attach semantics to an image). It is worth mentioning that the proposed solution is only a sketch and requires a more in-depth study.

2.7. Use Case: Semantics from Multimedia Authoring

2.7.1. Introduction

Authoring of personalized multimedia content can be considered as a process consisting of selecting, composing, and assembling media elements into coherent multimedia presentations that meet the user’s or user group’s preferences, interests, current situation, and environment. In the approaches we find today, media items and semantically rich metadata information are used for the selection and composition task.

For example, Mary authors a multimedia birthday book for her daughter's 18th birthday with a nice multimedia authoring tool. For this, she selects images, videos and audio from her personal media store, as well as content from the Web that is freely available or that she owns. The selection is based on the different metadata and descriptions that come with the media, such as tags, descriptions, the time stamp, the size, the location of the media item and so on. Mary then arranges the media elements in a spatio-temporal presentation: first a welcome title, then a series of "multimedia chapters" made of sequences and groups of images interleaved with short videos. Music underlies the presentation. Mary arranges and groups, adds comments and titles, resizes media elements, brings some media to the front and sends others to the back. And then, finally, there is this great birthday presentation that shows the years of her daughter's life. She presses a button, creates a Flash presentation, and all the authoring semantics are gone.

2.7.2. Lost multimedia semantics

Today, metadata and semantics are mainly considered at the monomedia level. Single media elements such as images, video and text are annotated and enriched with metadata by different means, ranging from automatic annotation to manual tagging. In a multimedia document, a set of media items typically comes together. The items are arranged into a coherent story with the spatial and temporal layout of a time-continuous presentation, which often also allows user interaction. The authored document is more than "just" the sum of its media elements; it becomes a new document with its own semantics. However, in the way multimedia authoring is pursued today, we disregard and lose the semantics that emerge from multimedia authoring [32].

2.7.2.1. Multimedia authoring semantics do not "survive" the composition

Consequently, much of the valuable semantics about the media elements and the resulting multimedia content that emerges with and in the authoring process is not considered any further. This means that the effort of semantically enriching media content comes to a sudden halt in the created multimedia document, which is very unfortunate. For example, an integrated annotation could usefully describe, for a multimedia presentation:

- its structure;

- the media items and formats used;

- its length;

- its degree of interactivity;

- its table of contents or index;

- a textual summary of its content;

- the targeted user group;

- and so on.

Current authoring tools just use metadata to select media elements and compose them into a multimedia presentation. They do not extract and summarize the semantics that emerge from the authoring and add them to the created document for later search, retrieval and presentation support.
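Several of these annotations could be derived mechanically from information an authoring tool already holds. The following Python sketch summarizes a hypothetical timeline model of Mary's birthday book. Each placed media item has a MIME type, begin time, duration, chapter label and interactivity flag. The output covers length, formats used, the number of interactive items and a table of contents. The data model and field names are assumptions for illustration, not the model of any particular authoring tool.

```python
from dataclasses import dataclass

@dataclass
class PlacedMedia:
    uri: str
    mime_type: str
    begin: float      # seconds from the start of the presentation
    duration: float   # seconds
    chapter: str      # hypothetical chapter label assigned during authoring
    interactive: bool = False

def summarize(items: list[PlacedMedia]) -> dict:
    """Derive presentation-level metadata from the authored composition."""
    toc: list[tuple[str, float]] = []
    for item in sorted(items, key=lambda i: i.begin):  # stable sort keeps authoring order
        if not toc or toc[-1][0] != item.chapter:
            toc.append((item.chapter, item.begin))  # a new chapter starts here
    return {
        "length_s": max(i.begin + i.duration for i in items),
        "formats": sorted({i.mime_type for i in items}),
        "interactive_items": sum(i.interactive for i in items),
        "table_of_contents": toc,
    }

birthday_book = [
    PlacedMedia("title.svg", "image/svg+xml", 0, 5, "Welcome"),
    PlacedMedia("music.mp3", "audio/mpeg", 0, 60, "Welcome"),  # underlying music
    PlacedMedia("baby.jpg", "image/jpeg", 5, 10, "Early years"),
    PlacedMedia("first-steps.mp4", "video/mp4", 15, 20, "Early years", interactive=True),
    PlacedMedia("school.jpg", "image/jpeg", 35, 10, "School days"),
]
print(summarize(birthday_book))
```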

2.7.2.2. Multimedia content can learn from composition and media usage

For example, Mary's media store could "learn" that some of the media items seem to be more relevant than others. Additional comments on parts of the presentation could also become new metadata entries for the media items. Moreover, neither the metadata of the individual media items nor that of the presentation is currently added to the presentation. Adding it would make the presentation easier to share, search and manage afterwards.

2.7.3. Interoperability problems

Currently, multimedia documents do not come with a single annotation scheme. SMIL [33] provides the most advanced modeling of annotation. Using RDF, the head of a SMIL document can carry a description of the presentation, giving the author or authoring tool a place in the structured multimedia document to put the presentation's semantics. In specific domains we find annotation schemes such as LOM [34], which provides the vocabulary for annotating Learning Objects. These are often PowerPoint presentations or PDF documents but might well be multimedia presentations. AKtive Media [35] is an ontology-based multimedia annotation system (for images and text) that provides an interface for adding ontology-based, free-text and relational annotations within multimedia documents. Even though community efforts may contribute to a more or less unified set of tags, this does not by itself ensure interoperability, search, and exchange.
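The following Python sketch uses only `xml.etree.ElementTree` to place an RDF description in a SMIL head. It builds a skeleton SMIL 2.0 document whose `head` contains a `metadata` element holding an `rdf:RDF` description with Dublin Core properties. The choice of Dublin Core terms and the example values are assumptions. A real authoring tool would also emit the layout and the timed body content.

```python
import xml.etree.ElementTree as ET

SMIL = "http://www.w3.org/2001/SMIL20/Language"
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
DC = "http://purl.org/dc/elements/1.1/"
for prefix, uri in (("", SMIL), ("rdf", RDF), ("dc", DC)):
    ET.register_namespace(prefix, uri)  # "" makes SMIL the default namespace

def smil_with_metadata(title: str, creator: str, description: str) -> str:
    """Build a skeleton SMIL document whose head carries an RDF description."""
    smil = ET.Element(f"{{{SMIL}}}smil")
    head = ET.SubElement(smil, f"{{{SMIL}}}head")
    meta = ET.SubElement(head, f"{{{SMIL}}}metadata")
    rdf = ET.SubElement(meta, f"{{{RDF}}}RDF")
    # rdf:about="" refers to the document itself
    desc = ET.SubElement(rdf, f"{{{RDF}}}Description", {f"{{{RDF}}}about": ""})
    for name, value in (("title", title), ("creator", creator),
                        ("description", description)):
        ET.SubElement(desc, f"{{{DC}}}{name}").text = value
    ET.SubElement(smil, f"{{{SMIL}}}body")  # the timed media composition goes here
    return ET.tostring(smil, encoding="unicode")

print(smil_with_metadata("Happy 18th birthday", "Mary",
                         "Birthday book in three chapters with photos, video and music"))
```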

2.7.4. What is needed

A semantic description of a multimedia presentation should reveal the semantics of its content as well as of its composition, so that users can search for, reuse and integrate multimedia presentations on the Web into their own systems. A unified Semantic Web annotation scheme could then describe the thousands of Flash and PowerPoint presentations, as well as SMIL and SVG presentations. For existing presentations, this would give authors a chance to annotate them. For authoring tool creators, it would give the chance to publish a standardized semantic description together with the presentation.

3. Common Framework

In this section, we propose a common framework that seeks to provide both syntactic (via RDF) and semantic interoperability. During FTF2, we identified several layers of interoperability. Our methodology is simple: each use case identifies a common ontology/schema to facilitate interoperability in its own domain, and we then provide a simple framework to integrate and harmonise these common ontologies/schemas from different domains. Furthermore, a simple extension mechanism is provided to accommodate other ontologies/schemas related to the use cases we considered. Last but not least, the framework includes guidelines on which standards to use for specific tasks related to the use cases.

3.1. Syntactic Interoperability: RDF

The Resource Description Framework (RDF) is a W3C Recommendation that provides a standard way to create, exchange and use annotations on the Semantic Web. An RDF statement has the form [subject property object .]. This simple and general syntax makes RDF a good candidate for providing (at least) syntactic interoperability.
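The statement form can be seen in a minimal Python sketch that writes (subject, property, object) triples in the line-based N-Triples syntax. As a simplification, any term starting with `http://` or `https://` is treated as an IRI and everything else as a plain string literal. A full serializer would distinguish term types explicitly. The photo IRI is hypothetical; the Dublin Core property IRIs are real.

```python
def nt_term(term: str) -> str:
    """Write IRIs as <...>; treat anything else as a plain string literal
    (a simplification for this sketch)."""
    if term.startswith(("http://", "https://")):
        return f"<{term}>"
    escaped = term.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{escaped}"'

def to_ntriples(triples: list[tuple[str, str, str]]) -> str:
    """One 'subject property object .' line per statement."""
    return "\n".join(f"{nt_term(s)} {nt_term(p)} {nt_term(o)} ." for s, p, o in triples)

photo = "http://example.org/photos/p1.jpg"  # hypothetical resource
print(to_ntriples([
    (photo, "http://purl.org/dc/elements/1.1/creator", "Mary"),
    (photo, "http://purl.org/dc/elements/1.1/title", "Tokyo marathon"),
]))
```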

3.2. Layers of Interoperability

[Based on discussions in FTF2]

3.3. Common Ontology/Schema

Each use case provides the common ontology/schema for its domain.

3.4. Ontology/Schema Integration, Harmonisation and Extension

[Integrate and harmonise the common ontologies/schemas presented in the previous subsection and, based on this, provide a simple extension mechanism.]

3.5. Guidelines

Each use case provides guidelines on which standard to use for specific tasks related to that use case.

4. Conclusions

5. Other Problems

5.1. Multilinguality

5.2. Multimedia Authoring

6. References

[1] GfK Group for CeWe Color. Usage behavior digital photography, 2006.

[2] Describing and retrieving photos using RDF and HTTP, W3C Note 19 April 2002, http://www.w3.org/TR/photo-rdf

[3] Dublin Core. http://dublincore.org/

[4] EXIF: Exchangeable Image File Format, Japan Electronic Industry Development Association (JEIDA). Specification version 2.2.

[5] Adobe, Extensible Metadata Platform (XMP) http://www.adobe.com/products/xmp/index.html

[6] Information Interchange Model, http://www.iptc.org/IIM/

[7] Image Annotation on the Semantic Web. http://www.w3.org/2001/sw/BestPractices/MM/image_annotation.html#vocabularies

[8] News Architecture (NAR) for G2-standards, http://www.iptc.org/NAR/

[9] The IPTC NewsCodes - Metadata taxonomies for the news industry, http://www.iptc.org/NewsCodes/

[10] EBU: European Broadcasting Union, http://www.ebu.ch/

[11] MPEG-7: Multimedia Content Description Interface, Standard No. ISO/IEC 15938, 2001.

[12] Kanzaki EXIF-RDF Converter, http://www.kanzaki.com/test/exif2rdf

[13] JPEGRDF - Norm Walsh EXIF Converter, http://www.nwalsh.com/java/jpegrdf/

[14] Patrizia Asirelli, Massimo Martinelli, Ovidio Salvetti, An Infrastructure for MultiMedia Metadata Management SWAMM2006, Edinburgh.

[15] Patrizia Asirelli, Massimo Martinelli, Ovidio Salvetti, Call for a Common Multimedia Ontology Framework Requirements Harmonization of Multimedia Ontologies activity 2006.

[16] HitWise Intelligence: Del.icio.us Traffic More Than Doubled Since January, http://weblogs.hitwise.com/leeann-prescott/2006/08/delicious_traffic_more_than_do.html.

[17] Nielsen//NetRatings: User-Generated Content Drives Half of U.S. Top 10 Fastest Growing Web Brands, According to Nielsen//NetRatings, http://www.nielsen-netratings.com/pr/PR_060810.PDF.

[18] Richard Newman, Danny Ayers, Seth Russell: Tag Ontology. http://www.holygoat.co.uk/owl/redwood/0.1/tags/.

[19] Ulises Ali Mejias, 2005: Tag literacy. http://ideant.typepad.com/ideant/2005/04/tag_literacy.html.

[20] J. Trant (2006), "Exploring the potential for social tagging and folksonomy in art museums: proof of concept", New Review of Hypermedia and Multimedia, 2006.

[21] SKOS Core: http://www.w3.org/2004/02/skos/core/.

[22] Mathes, A., Folksonomies - Cooperative Classification and Communication Through Shared Metadata. Computer Mediated Communication - LIS590CMC, Graduate School of Library and Information Science, University of Illinois Urbana-Champaign, http://www.adammathes.com/academic/computer-mediated-communication/folksonomies.html, 2004.

[23] Smith, G. “Atomiq: Folksonomy: social classification.” http://atomiq.org/archives/2004/08/folksonomy_social_classification.html, Aug 3, 2004

[24] Hotho, A., Jaschke, R., Schmitz, C., Stumme, G. (2006): Information Retrieval in Folksonomies: Search and Ranking. 3rd European Semantic Web Conference, Budva, Montenegro, http://www.kde.cs.uni-kassel.de/hotho/pub/2006/seach2006hotho_eswc.pdf, 11-14 June 2006.

[25] Smitz, P. "Inducing Ontology from Flickr Tags", paper for the Collaborative Web Tagging Workshop at WWW2006, Edinburgh, http://www.rawsugar.com/www2006/22.pdf, 22 May 2006.

[26] Koivunen, M. Annotea and Semantic Web Supported Collaboration, in Proc. Of the ESWC2005 Conference, Crete, http://www.annotea.org/eswc2005/01_koivunen_final.pdf, May 2005.

[27] Segawa, O. 2006. Web annotation sharing using P2P. In Proceedings of the 15th International Conference on World Wide Web (Edinburgh, Scotland). WWW '06. ACM Press, New York, NY, 851-852. http://doi.acm.org/10.1145/1135777.1135910, May 23 - 26, 2006.

[28] M. Naphade and T. Huang, “Extracting semantics from audiovisual content: The final frontier in multimedia retrieval”, IEEE Transactions on Neural Networks, vol. 13, No. 4, 2002.

[29] IAAF: International Association of Athletics Federations, http://www.iaaf.org.

[30] M. Asbach and J-R Ohm, “Object detection and classification based on MPEG-7 descriptions – Technical study, use cases and business models”, ISO/IEC JTC1/SC29/WG11/MPEG2006/M13207, April 2006, Montreux, CH.

[31] H. Eleftherohorinou, V. Zervaki, A. Gounaris, V. Papastathis, Y. Kompatsiaris, P. Hobson, “Towards a common multimedia ontology framework (Analysis of the Contributions to Call for a Common multimedia ontology framework requirements)”, http://www.acemedia.org/aceMedia/files/multimedia_ontology/cfr/MM-Ontologies-Reqs-v1.3.pdf.

[32] Ansgar Scherp, Susanne Boll, Holger Cremer: Emergent Semantics in Personalized Multimedia Content, Fourth special Workshop on Multimedia Semantics, Chania, Greece, June 2006 http://www.kettering.edu/~pstanche/wms06homepage/Agenda.html.htm.

[33] W3C: Synchronized Multimedia Integration Language (SMIL 2.0) - [Second Edition]. W3C Recommendation 07 January 2005, http://www.w3.org/TR/2005/REC-SMIL2-20050107/.

[34] IEEE: IEEE 1484.12.1-2002. Draft Standard for Learning Object Metadata http://ltsc.ieee.org/wg12/files/LOM_1484_12_1_v1_Final_Draft.pdf.

[35] AKTive Media. AKTive Media - Ontology based annotation system. http://www.dcs.shef.ac.uk/~ajay/html/cresearch.html.