Authors: OscarCelma, GiovanniTummarello

Contributors: Michiel Hildebrand, CWI

Music Use Case

Introduction

In recent years, typical music consumption behaviour has changed dramatically. Personal music collections have grown, favoured by technological improvements in networks, storage, device portability, and Internet services. The sheer number and availability of songs has de-emphasized their value: users often own many digital music files that they have listened to only once, or never. It seems reasonable to think that giving listeners efficient ways to impose a personalized order on their collections, and to explore the hidden "treasures" inside them, will drastically increase the value of those collections.

The digital revolution has brought many advantages, but it has also had some negative effects. Users own huge music collections that need proper storage and labelling, and searching inside digital collections calls for new methods of accessing and retrieving data. Sometimes, however, there is no metadata about the content of the audio, or only the file name, and that is not enough for effective use and navigation of a music collection.

As a result, users can get lost searching through the digital pile of their own music collections. At the same time, the web is increasingly becoming the primary source of music titles in digital form. With millions of tracks available from thousands of websites, finding the right songs and keeping informed of new music releases is becoming a problematic task. Thus, web page filtering has become necessary for most web users.

Besides, on the digital music distribution front, there is a need to improve the effectiveness of music retrieval. Artist, title, and genre keywords might not be the only criteria that help music consumers find music they like. Today, retrieval relies mainly on cultural or editorial metadata ("artist A is somehow related to artist B") or on existing purchasing behaviour data ("since you bought this artist, you might also want to buy this one"). A largely unexplored (and potentially interesting) complement is to use semantic descriptors automatically extracted from the music audio files. These descriptors can be applied, for example, to recommend new music or to generate personalized playlists.

A complete description of a popular song

In [Pac05], Pachet proposes a classification of the metadata used in music knowledge management. This classification makes it possible to create meaningful descriptions of music, and to exploit these descriptions to build music-related systems. The three categories that Pachet defines are editorial (EM), cultural (CM) and acoustic metadata (AM).

Editorial metadata includes simple creation and production information (e.g. the song C'mon Billy, written by P.J. Harvey in 1995, was produced by John Parish and Flood, and appears as track number 4 on the album "To bring you my love"). EM also includes artist biographies, album reviews, genre information, relationships among artists, etc. As can be seen, editorial information is not necessarily objective. It is often the case that different experts cannot agree on assigning a specific genre to a song or to an artist. Reaching a common consensus on a taxonomy of musical genres is even more difficult.

Cultural metadata is defined as the information that is implicitly present in huge amounts of data. This data is gathered from weblogs, forums, music radio programs, or even from web search engines' results. This information has a clear subjective component as it is based on personal opinions.

The last category of music information is acoustic metadata. In this context, acoustic metadata describes the content analysis of an audio file. It is intended to be objective information. Most current music content processing systems that operate on complex audio signals are based mainly on computing low-level signal features. These features are good at characterising the acoustic properties of the signal, returning a description that can be associated with texture or, at best, with the rhythmical attributes of the signal. Alternatively, a more general approach proposes that music content can be successfully characterized according to several "musical facets" (i.e. rhythm, harmony, melody, timbre, structure) by incorporating higher-level semantic descriptors into a given feature set. Semantic descriptors are predicates that can be computed directly from the audio signal by combining signal processing, machine learning techniques, and musical knowledge.

Semantic Web languages make it possible to describe all this metadata, as well as to integrate it from different music repositories.

The following example shows an RDF description of an artist, and a song by the artist:

    <rdf:Description rdf:about="http://www.garageband.com/artist/randycoleman">
      <rdf:type rdf:resource="&music;Artist"/>
      <music:name>Randy Coleman</music:name>
      <music:decade>1990</music:decade>
      <music:decade>2000</music:decade>
      <music:genre>Pop</music:genre>
      <music:city>Los Angeles</music:city>
      <music:nationality>US</music:nationality>
      <foaf:based_near>
        <geo:Point>
          <geo:lat>34.052</geo:lat>
          <geo:long>-118.243</geo:long>
        </geo:Point>
      </foaf:based_near>
      <music:influencedBy rdf:resource="http://www.coldplay.com"/>
      <music:influencedBy rdf:resource="http://www.jeffbuckley.com"/>
      <music:influencedBy rdf:resource="http://www.radiohead.com"/>
    </rdf:Description>

    <rdf:Description rdf:about="http://www.garageband.com/song?|pe1|S8LTM0LdsaSkaFeyYG0">
      <rdf:type rdf:resource="&music;Track"/>
      <music:title>Last Salutation</music:title>
      <music:playedBy rdf:resource="http://www.garageband.com/artist/randycoleman"/>
      <music:duration>T00:04:27</music:duration>
      <music:key>D</music:key>
      <music:keyMode>Major</music:keyMode>
      <music:tonalness>0.84</music:tonalness>
      <music:tempo>72</music:tempo>
    </rdf:Description>
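An RDF description like the one above can be read as a set of subject-predicate-object statements. The following Python fragment is only a minimal sketch of that idea, assuming a plain list of tuples instead of a real RDF store; the helper names `match` and `tracks_by_artist_name` are hypothetical and not part of any library.

```python
# Hypothetical in-memory "triple store": each statement is a
# (subject, predicate, object) tuple copied from the RDF example.
ARTIST = "http://www.garageband.com/artist/randycoleman"
TRACK = "http://www.garageband.com/song?|pe1|S8LTM0LdsaSkaFeyYG0"

triples = [
    (ARTIST, "rdf:type", "music:Artist"),
    (ARTIST, "music:name", "Randy Coleman"),
    (ARTIST, "music:genre", "Pop"),
    (TRACK, "rdf:type", "music:Track"),
    (TRACK, "music:title", "Last Salutation"),
    (TRACK, "music:playedBy", ARTIST),
    (TRACK, "music:key", "D"),
    (TRACK, "music:keyMode", "Major"),
    (TRACK, "music:tempo", 72),
]


def match(pattern, store):
    """Return all triples matching a pattern; None acts as a wildcard."""
    return [
        triple
        for triple in store
        if all(p is None or p == value for p, value in zip(pattern, triple))
    ]


def tracks_by_artist_name(name, store):
    """Join patterns: find the artist by name, then the titles of their tracks."""
    titles = []
    for artist, _, _ in match((None, "music:name", name), store):
        for track, _, _ in match((None, "music:playedBy", artist), store):
            titles += [obj for _, _, obj in match((track, "music:title", None), store)]
    return titles
```

Calling `tracks_by_artist_name("Randy Coleman", triples)` follows the `music:playedBy` link from the track back to the artist, which is the kind of join a semantic query language would perform over the same data.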

Lyrics as metadata

For a complete description of a song, lyrics must be considered as well. While lyrics could in a sense be regarded as "acoustic metadata", they are in themselves information entities with annotation needs of their own. Lyrics share many similarities with metadata: for example, they usually refer directly to a well-specified song, although exceptions exist, since different artists might sing the same lyrics, sometimes even with different musical bases and styles. Most notably, lyrics often have different authors than the music and the voice that interprets them, and might be composed at a different time. Lyrics are not simple text; they often have a structure similar to that of the song (e.g. a chorus), which justifies the use of a markup language with well-specified semantics.

Unlike the previous types of metadata, however, lyrics are not well suited to be expressed using the W3C Semantic Web initiative languages, e.g. RDF. While RDF has been suggested instead of XML for representing texts in situations where advanced and multilayered markup is wanted [Ref RDFTEI], music lyrics markup needs are usually limited to indicating particular sections of the song (e.g. intro, outro, chorus) and possibly the performing character (e.g. in duets). While there is no widespread standard for machine-encoded lyrics, some have been proposed [LML][4ML], which in general fit the need for formatting and differentiating the main parts.

An RDF encoding of lyrics would be of limited use but still possible, with RDF-based queries relying on the text search operators of the query language (and therefore likely to be limited to "lyrics that contain word X"). More complex queries would be possible if several characters perform in the lyrics and each is denoted by an RDF entity with other metadata attached to it (e.g. the metadata described in the examples above).

It should be noted, however, that an RDF encoding would have the disadvantage of complexity. In general it would require supporting software (for example http://rdftef.sourceforge.net/) to be encoded, as RDF/XML is difficult to write by hand. Also, unlike an XML-based encoding, it could not easily be rendered in a human-readable form by, e.g., a simple XSLT transformation.

With both RDF and XML encodings, interesting processing and queries (e.g. conceptual similarities between texts, moods, etc.) would require advanced textual analysis algorithms well outside the scope of the XML or RDF languages. Interestingly, however, RDF descriptions could be used to encode the results of such advanced processing. Keyword extraction algorithms (usually a combination of statistical analysis, stemming and linguistic processing, e.g. using WordNet) can be successfully employed on lyrics. The resulting representative "terms" can be encoded as metadata on the lyrics or on the related song itself.
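As a rough illustration of encoding extracted terms as metadata, the sketch below uses plain term frequency with a tiny stop-word list. This is a deliberately simplified stand-in for the statistical, stemming and WordNet-based processing mentioned above; the predicate `ex:representativeTerm`, the song identifier and the sample text are all hypothetical.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; a real system would use a fuller one
# and add stemming or other linguistic processing.
STOP_WORDS = {"the", "a", "an", "and", "of", "in", "to", "is", "it", "on", "my", "you"}


def extract_terms(lyrics, top_n=3):
    """Return the top_n most frequent tokens that are not stop words."""
    tokens = re.findall(r"[a-z']+", lyrics.lower())
    counts = Counter(t for t in tokens if t not in STOP_WORDS and len(t) > 2)
    return [term for term, _ in counts.most_common(top_n)]


def terms_as_triples(song_uri, terms, predicate="ex:representativeTerm"):
    """Attach each extracted term to the song as a (subject, predicate, object) triple."""
    return [(song_uri, predicate, term) for term in terms]


# Made-up text standing in for real lyrics.
sample_lyrics = "Rain falls on the river, rain on the road, the river runs home"
sample_terms = extract_terms(sample_lyrics)
sample_triples = terms_as_triples("ex:song1", sample_terms)
```

Once the terms are stored as triples, a query such as "songs whose representative terms include X" no longer depends on full-text search over the lyrics themselves.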

Lower Level Acoustic metadata

"Acoustic metadata" is a broad term which can encompass both features that have an immediate use in higher-level use cases (e.g. those presented in the examples above, such as tempo, key, keyMode, etc.) and features that can only be interpreted through data analysis (e.g. a full or simplified representation of the spectrum, or the average power sliced every 10 ms). As we have seen, semantic technologies are suitable for representing the higher-level acoustic metadata: it is concise and can be used directly in semantic queries using, e.g., SPARQL. Lower-level metadata, however, e.g. the MPEG-7 features produced by extractors like [Ref MPEG7AUDIODB], is very ill suited to be represented in RDF and is better kept in MPEG-7/XML format for serialization and interchange.

Semantic technologies could be of use in describing such "chunks" of low-level metadata, e.g. stating which features they contain and at which quality. While this would duplicate information already encoded in the MPEG-7/XML, it might be of use in semantic queries that select tracks based also on the availability of rich low-level metadata.
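To make the distinction concrete, the sketch below computes one low-level feature (average power per 10 ms frame) and then produces only a short description of the resulting chunk, not the values themselves. It assumes audio already decoded into a list of float samples; the `ex:` property names are hypothetical and the sine wave merely stands in for real audio.

```python
import math


def frame_power(samples, sample_rate, frame_ms=10):
    """Average power (mean of squared samples) for each consecutive full frame."""
    frame_len = max(1, int(sample_rate * frame_ms / 1000))
    powers = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        powers.append(sum(x * x for x in frame) / frame_len)
    return powers


def describe_chunk(track_uri, powers, frame_ms=10):
    """Describe which low-level feature is available and at what resolution."""
    chunk = track_uri + "#average-power"
    return [
        (track_uri, "ex:hasLowLevelFeature", chunk),
        (chunk, "ex:featureType", "ex:AveragePower"),
        (chunk, "ex:frameLengthMs", frame_ms),
        (chunk, "ex:frameCount", len(powers)),
    ]


# Synthetic 440 Hz sine wave, 0.1 s at 8 kHz, standing in for decoded audio.
RATE = 8000
signal = [math.sin(2 * math.pi * 440 * n / RATE) for n in range(800)]
powers = frame_power(signal, RATE)
description = describe_chunk("ex:track1", powers)
```

The per-frame values would stay in a format such as MPEG-7/XML, while the few descriptive triples are what a semantic query would inspect when selecting tracks by availability of low-level data.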

Motivating Examples (Use Cases)

Commuting is a big issue in any modern society. Semantically personalized playlists might provide both relief and actual benefit during time that cannot be devoted to actively productive activities. Filippo commutes every morning for an average of 50±10 minutes. Before leaving, he connects his USB stick/mp3 player to have it "filled" with his morning playlist. The process is completed in 10 seconds; after all, he is downloading just 50 MB. During his commute, Filippo will be offered a smooth flow of news, personal daily items, entertainment, and cultural snippets from audiobooks and classes.

Musical content comes from Filippo's personal music collection or from a content provider (e.g. at low cost thanks to a one-time payment license). Further audio content comes from podcasts, but also from text-to-speech readings of blog posts, emails, calendar items, etc.

Behind the scenes the system works by a combination of semantic queries and ad-hoc algorithms. Semantic queries operate on an RDF database that collects the semantic representation of music metadata (as explained in section 1), as well as annotations on podcasts, news items, audiobooks, and "semantic desktop items", that is, items representing Filippo's personal desktop information, such as emails and calendar entries.

Ad-hoc algorithms operate on low-level metadata to provide smooth transitions between tracks. Algorithms for text analysis provide further links among songs, and between songs and pieces of news, emails, etc.

At a higher level, a global optimization algorithm takes care of the final playlist creation. This is done by balancing the need to have high-priority items played first (e.g. emails from addresses considered important) with the overall goal of providing a smooth and entertaining experience (e.g. interleaving news with music, etc.).
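The balancing act described above can be sketched with a simple greedy heuristic. This is not the global optimization algorithm itself, only an illustration under stated assumptions: every item carries a hypothetical `priority` score between 0 and 1, and a fixed `repeat_penalty` discourages playing two items of the same kind back to back.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    kind: str        # e.g. "email", "news", "music"
    seconds: int
    priority: float  # 0.0 (low) to 1.0 (high); hypothetical score


def build_playlist(items, budget_seconds, repeat_penalty=0.5):
    """Greedily pick the best-scoring item that still fits the time budget."""
    remaining = list(items)
    playlist, used, last_kind = [], 0, None
    while True:
        fitting = [i for i in remaining if used + i.seconds <= budget_seconds]
        if not fitting:
            return playlist

        def score(item):
            # Penalise repeating the kind of the previous item to interleave content.
            return item.priority - (repeat_penalty if item.kind == last_kind else 0.0)

        best = max(fitting, key=score)
        playlist.append(best)
        remaining.remove(best)
        used += best.seconds
        last_kind = best.kind


# Hypothetical morning items for a 10-minute slice of the commute.
morning_items = [
    Item("Mail from the boss", "email", 60, 0.9),
    Item("Mail from a colleague", "email", 45, 0.7),
    Item("Morning headlines", "news", 180, 0.6),
    Item("Song A", "music", 240, 0.5),
    Item("Song B", "music", 200, 0.4),
]
morning_playlist = build_playlist(morning_items, budget_seconds=600)
```

With these made-up numbers the important email comes first, but the second email is deferred until after the headlines, which is the interleaving effect the penalty is meant to produce. A real system would also use the low-level acoustic metadata to smooth transitions between tracks.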

Semantics can help in providing "related information or content" that can be placed adjacent to the actual core content. This can be done with relative freedom, since the user can skip the content at any time simply by pressing the forward button.

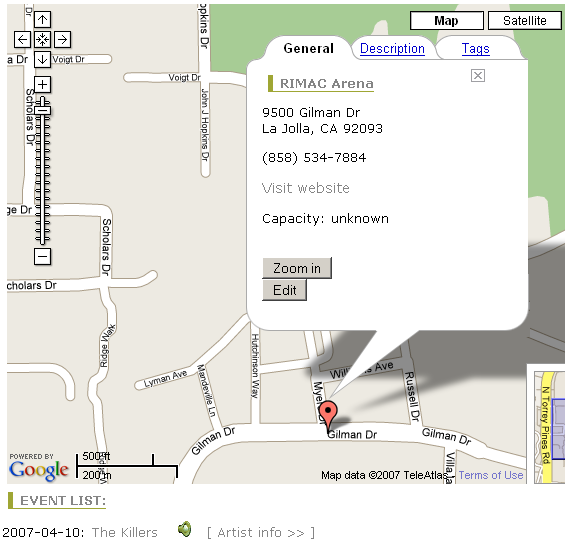

Upcoming concerts

John has been listening to the band "Snow Patrol" for a while. He discovered the band while listening to one of his favorite podcasts about alternative music. He has to travel to San Diego next week and wants to find upcoming concerts there that he would enjoy, so he asks his personalized Semantic Web music service for recommendations of upcoming gigs in the area, and of decent bars to have a beer.

<!-- San Diego geolocation --> <foaf:based_near geo:lat='32.715' geo:long='-117.156' />

The system tracks the user's listening habits, so it detects that one song from the band "The Killers" (scraped from their website) sounds similar to the last song John has listened to from "Snow Patrol". Moreover, both bands have similar styles, and there are some podcasts that contain songs from both bands in the same session. Interestingly enough, the system knows that The Killers are playing close to San Diego next weekend, so it recommends that John attend that gig.
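The geographic part of this recommendation can be sketched as a distance filter around the San Diego coordinates given above. The event list, the venue coordinates, the similarity table and the 50 km radius are all hypothetical; only the haversine great-circle formula is standard.

```python
import math

SAN_DIEGO = (32.715, -117.156)  # geo:lat / geo:long from the example above


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres, assuming a spherical Earth."""
    radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


# Hypothetical data the service might have gathered.
similar_artists = {"Snow Patrol": {"The Killers"}}
events = [
    {"artist": "The Killers", "lat": 32.755, "long": -117.212},      # made-up venue
    {"artist": "The Killers", "lat": 34.052, "long": -118.243},      # Los Angeles
    {"artist": "Some Other Band", "lat": 32.720, "long": -117.160},  # made-up venue
]


def recommend_gigs(listened_artist, origin, radius_km=50.0):
    """Events by similar artists that take place within radius_km of origin."""
    wanted = similar_artists.get(listened_artist, set())
    return [
        event
        for event in events
        if event["artist"] in wanted
        and haversine_km(origin[0], origin[1], event["lat"], event["long"]) <= radius_km
    ]


gigs = recommend_gigs("Snow Patrol", SAN_DIEGO)
```

Only the nearby gig by a similar artist survives both filters; the Los Angeles date is dropped by distance and the unrelated band by similarity.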

Facet browsing of Music Collections

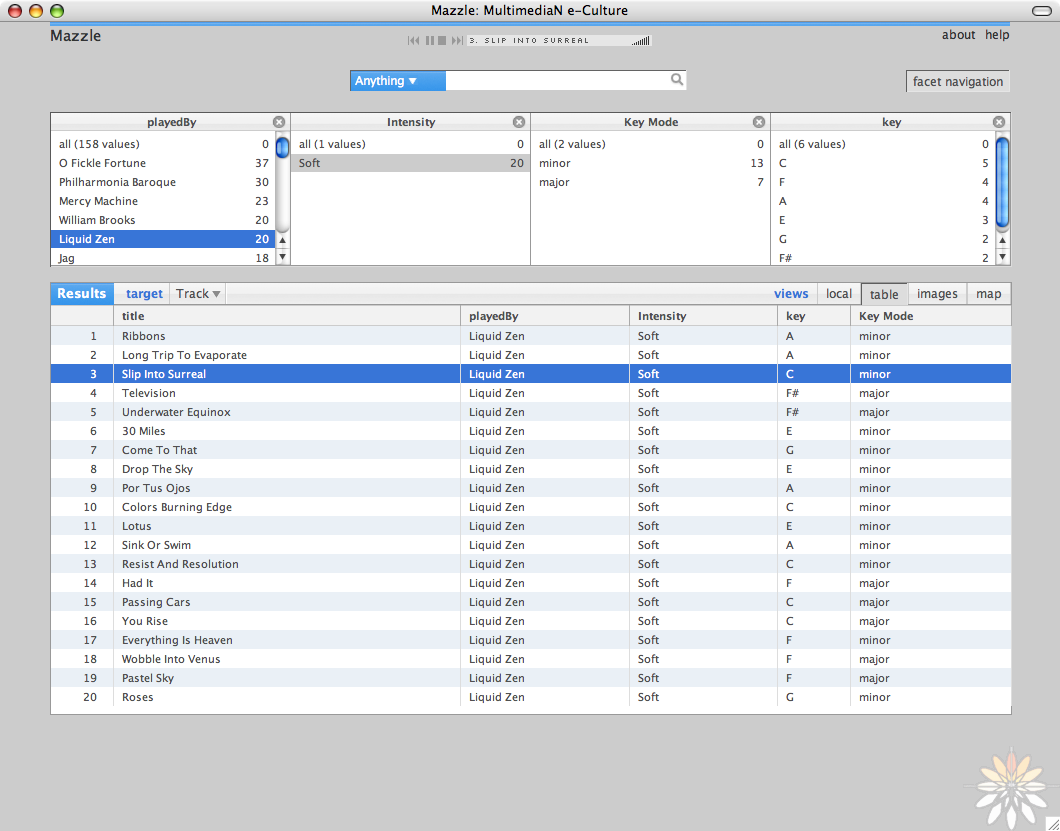

Michael has a brand new (latest-generation, posh) iPod. He is looking for some music using the classic hierarchical navigation (Genre->Artist->Album->Songs). The main problem is that he is not able to find a decent list of songs (from his 100K music collection) to move onto his iPod. Facet browsing, on the other hand, has recently become popular as a user-friendly interface to data repositories.

The /facet system [Hil06] presents a new and intuitive way to navigate large collections of multimedia assets using several facets, or aspects. /facet extends browsing of Semantic Web data in four ways. First, users are able to select and navigate through facets of resources of any type and to make selections based on properties of other, semantically related, types. Second, it addresses a disadvantage of hierarchy-based navigation by adding a keyword search interface that dynamically makes semantically relevant suggestions. Third, the /facet interface allows the inclusion of facet-specific display options that go beyond the hierarchical navigation that characterizes current facet browsing. Fourth, the browser works on any RDF dataset without any additional configuration.

Thus, based on an RDF description of music titles (see section 1), the user can navigate through music facets such as rhythm (beats per minute), tonality (key and mode), and intensity of the piece (moderate, energetic, etc.).
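The core of facet navigation, counting the values available for each facet and narrowing the collection as values are selected, can be sketched in a few lines. This is not how /facet is implemented; the track records, the tempo thresholds and the function names are assumptions made for illustration.

```python
from collections import Counter

# Hypothetical track descriptions, using the facets named above.
tracks = [
    {"title": "Track 1", "key": "D", "mode": "Major", "tempo": 72, "intensity": "moderate"},
    {"title": "Track 2", "key": "A", "mode": "Minor", "tempo": 128, "intensity": "energetic"},
    {"title": "Track 3", "key": "D", "mode": "Major", "tempo": 140, "intensity": "energetic"},
    {"title": "Track 4", "key": "E", "mode": "Minor", "tempo": 85, "intensity": "moderate"},
]


def tempo_bucket(bpm):
    """Group beats per minute into coarse classes (thresholds are arbitrary)."""
    if bpm < 90:
        return "slow"
    if bpm < 130:
        return "medium"
    return "fast"


# Each facet maps a track to the value shown in the browsing interface.
FACETS = {
    "rhythm": lambda t: tempo_bucket(t["tempo"]),
    "tonality": lambda t: f"{t['key']} {t['mode']}",
    "intensity": lambda t: t["intensity"],
}


def select(collection, selection):
    """Keep the tracks whose facet values match every selected value."""
    return [
        t for t in collection
        if all(FACETS[name](t) == value for name, value in selection.items())
    ]


def facet_counts(collection, facet):
    """How many tracks fall under each value of a facet."""
    return Counter(FACETS[facet](t) for t in collection)


energetic = select(tracks, {"intensity": "energetic"})
remaining_tonalities = facet_counts(energetic, "tonality")
```

After each selection the counts are recomputed on the narrowed set, so the user only ever sees facet values that still lead to at least one track.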

A fully functional example can be seen at http://slashfacet.semanticweb.org/music/mazzle

The image at the beginning of this section depicts the system (courtesy of CWI).

Music Metadata on the Semantic Web

Nowadays, in the context of the World Wide Web, the increasing amount of available music makes it very difficult for users to find music they would like to listen to. To overcome this problem, there are some audio search engines that can fit the user's needs (for example: http://search.singingfish.com/, http://audio.search.yahoo.com/, http://www.audiocrawler.com/, http://www.alltheweb.com/?cat=mp3, http://www.searchsounds.net and http://www.altavista.com/audio/).

Some of the existing search engines are nevertheless not fully exploited, because their companies would have to deal with copyright-infringing material. Music search engines have a crucial component: an audio crawler that scans the web and gathers related information about audio files.

Moreover, describing music is not an easy task. As presented in section 1, music metadata covers several categories (editorial, acoustic, and cultural). Yet none of the audio metadata formats used in practice (e.g. ID3, OGG Vorbis, etc.) can fully describe all these facets. In fact, metadata for describing music mostly consists of tags in key-value form, [TAG]=[VALUE], for instance "ARTIST=The Killers".

The following section therefore introduces mappings between current audio vocabularies using Semantic Web technologies. These mappings make it possible to extend the description of a piece of music, as well as to add explicit semantics.

Integrating Various Vocabularies Using RDF

In this section we present a way to integrate several audio vocabularies into a single one, based on RDF. For more details about the audio vocabularies, the reader is referred to the Vocabularies - Audio Content section and the Vocabularies - Audio Ontologies section.

This section focuses on the ID3 and OGG Vorbis metadata initiatives, as they are the most widely used. Both vocabularies, however, cover only editorial data. A first mapping to the Music Ontology is also presented.

ID3 metadata

ID3 is a metadata container most often used in conjunction with the MP3 audio file format. It allows information such as the title, artist, album, track number, or other information about the file to be stored in the file itself (from Wikipedia).

The most important metadata descriptors are:

- Artist name <=> <foaf:name>Artist name</foaf:name>

- Album name <=> <mo:Record><dc:title>Album name</dc:title></mo:Record>

- Song title <=> <mo:Track><dc:title>Song title</dc:title></mo:Track>

- Year

- Track number <=> <mo:Track><mo:trackNum>Track number</mo:trackNum></mo:Track>

- Genre (from a predefined list of more than 100 genres) <=> <mo:Genre>Genre name</mo:Genre>
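As an illustration of how these descriptors might be read and mapped, the sketch below parses the fixed 128-byte ID3v1 trailer (including the ID3v1.1 track-number convention) and emits triples using the terms listed above. The blank-node labels `_:artist` and `_:record`, the use of `dc:creator` to link track and artist, and the sample tag are assumptions; year and genre are parsed but left unmapped, and newer ID3v2 tags are not handled.

```python
import struct

# ID3v1 layout: "TAG", title, artist, album, year, comment, genre (128 bytes).
ID3V1_LAYOUT = "<3x30s30s30s4s30sB"


def _text(raw):
    """Decode a fixed-width field, dropping NUL padding and surrounding spaces."""
    return raw.split(b"\x00", 1)[0].decode("latin-1").strip()


def parse_id3v1(tag):
    """Parse a 128-byte ID3v1 / ID3v1.1 tag; return None if it is not one."""
    if len(tag) != 128 or not tag.startswith(b"TAG"):
        return None
    title, artist, album, year, comment, genre = struct.unpack(ID3V1_LAYOUT, tag)
    fields = {
        "title": _text(title),
        "artist": _text(artist),
        "album": _text(album),
        "year": _text(year),
        "genre_index": genre,
    }
    # ID3v1.1: a zero byte followed by a non-zero byte ends the comment field
    # and carries the track number.
    if comment[28] == 0 and comment[29] != 0:
        fields["track"] = comment[29]
    return fields


def id3_to_triples(track_uri, fields):
    """Map parsed fields to Music Ontology style triples (blank nodes are assumed)."""
    triples = [
        (track_uri, "rdf:type", "mo:Track"),
        (track_uri, "dc:title", fields["title"]),
        (track_uri, "dc:creator", "_:artist"),
        ("_:artist", "foaf:name", fields["artist"]),
        ("_:record", "rdf:type", "mo:Record"),
        ("_:record", "dc:title", fields["album"]),
    ]
    if "track" in fields:
        triples.append((track_uri, "mo:trackNum", fields["track"]))
    return triples


def _pad(text, size):
    return text.encode("latin-1").ljust(size, b"\x00")


# Hand-built sample tag reusing the song from the editorial metadata example.
sample_tag = (
    b"TAG" + _pad("C'mon Billy", 30) + _pad("P.J. Harvey", 30)
    + _pad("To bring you my love", 30) + b"1995"
    + _pad("", 28) + b"\x00" + bytes([4]) + bytes([17])
)
sample_fields = parse_id3v1(sample_tag)
```

In practice the tag would be read from the last 128 bytes of an MP3 file; the in-memory sample keeps the sketch self-contained.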

OGG Vorbis metadata

OGG Vorbis metadata, called comments, supports metadata 'tags' similar to those implemented in ID3. The metadata is stored in a vector of strings, encoded in UTF-8:

- TITLE <=> <mo:Track><dc:title>Track title</dc:title></mo:Track>

- VERSION: The version field may be used to differentiate multiple versions of the same track title

- ALBUM <=> <mo:Record><dc:title>Album name</dc:title></mo:Record>

- TRACKNUMBER <=> <mo:Track><mo:trackNum>Track number</mo:trackNum></mo:Track>

- ARTIST <=> <foaf:name>Artist name</foaf:name>

- PERFORMER <=> <foaf:name>Performer name</foaf:name> (tentative mapping)

- COPYRIGHT: Copyright attribution

- LICENSE: License information, e.g. 'All Rights Reserved', 'Any Use Permitted', or a URL to a license such as a Creative Commons license

- ORGANIZATION: Name of the organization producing the track (i.e. a record label)

- DESCRIPTION: A short text description of the contents

- GENRE <=> <mo:Genre>Genre name</mo:Genre>

- DATE

- LOCATION: Location where the track was recorded
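The comment vector lends itself to a very small mapping routine. The sketch below assumes the comments have already been read from the file as a list of "FIELD=value" strings; it maps only the fields for which a property is given above and reports the rest as unmapped. The blank-node labels and the sample values (apart from "ARTIST=The Killers") are hypothetical.

```python
def parse_vorbis_comments(comments):
    """Turn ['TITLE=...', ...] into {FIELD: [values]}.

    Field names are case-insensitive and a field may appear more than once.
    """
    fields = {}
    for entry in comments:
        name, separator, value = entry.partition("=")
        if separator:
            fields.setdefault(name.upper(), []).append(value)
    return fields


# Field -> (subject node, property), following the list above.
MAPPING = {
    "TITLE": ("track", "dc:title"),
    "TRACKNUMBER": ("track", "mo:trackNum"),
    "ALBUM": ("_:record", "dc:title"),
    "ARTIST": ("_:artist", "foaf:name"),
}


def vorbis_to_triples(track_uri, fields):
    """Return (triples, unmapped field names) for a parsed comment header."""
    triples, unmapped = [(track_uri, "rdf:type", "mo:Track")], []
    for name, values in fields.items():
        if name not in MAPPING:
            unmapped.append(name)
            continue
        node, prop = MAPPING[name]
        subject = track_uri if node == "track" else node
        triples += [(subject, prop, value) for value in values]
    return triples, unmapped


sample_comments = [
    "ARTIST=The Killers",
    "title=Example Song",
    "ALBUM=Example Album",
    "TRACKNUMBER=3",
    "LICENSE=All Rights Reserved",
]
sample_fields = parse_vorbis_comments(sample_comments)
sample_triples, sample_unmapped = vorbis_to_triples("ex:track", sample_fields)
```

Returning the unmapped field names makes the gaps explicit: fields such as LICENSE or COPYRIGHT need a term from another vocabulary before they can be carried into RDF.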

RDFizing songs

In this section we present a way to RDFize tracks based on the Music Ontology.

Example: Search for a song in MusicBrainz and RDFize the results. This first example shows how to query the MusicBrainz music repository and RDFize the results based on the Music Ontology. Try a complete example here. The parameters are the song title (The Fly) and the artist name (U2).

    <mo:Track rdf:about='http://musicbrainz.org/track/dddb2236-823d-4c13-a560-bfe0ffbb19fc'>
      <mo:puid rdf:resource='2285a2f8-858d-0d06-f982-3796d62284d4'/>
      <mo:puid rdf:resource='2b04db54-0416-d154-4e27-074e8dcea57c'/>
      <dc:title>The Fly</dc:title>
      <dc:creator>
        <mo:MusicGroup rdf:about='http://musicbrainz.org/artist/a3cb23fc-acd3-4ce0-8f36-1e5aa6a18432'>
          <foaf:img rdf:resource='http://ec1.images-amazon.com/images/P/B000001FS3.01._SCMZZZZZZZ_.jpg'/>
          <mo:musicmoz rdf:resource='http://musicmoz.org/Bands_and_Artists/U/U2/'/>
          <foaf:name>U2</foaf:name>
          <mo:discogs rdf:resource='http://www.discogs.com/artist/U2'/>
          <foaf:homepage rdf:resource='http://www.u2.com/'/>
          <foaf:member rdf:resource='http://musicbrainz.org/artist/0ce1a4c2-ad1e-40d0-80da-d3396bc6518a'/>
          <foaf:member rdf:resource='http://musicbrainz.org/artist/1f52af22-0207-40ac-9a15-e5052bb670c2'/>
          <foaf:member rdf:resource='http://musicbrainz.org/artist/a94e530f-4e9f-40e6-b44b-ebec06f7900e'/>
          <foaf:member rdf:resource='http://musicbrainz.org/artist/7f347782-eb14-40c3-98e2-17b6e1bfe56c'/>
          <mo:wikipedia rdf:resource='http://en.wikipedia.org/wiki/U2_%28band%29'/>
        </mo:MusicGroup>
      </dc:creator>
    </mo:Track>
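Output of this shape can be generated with a standard XML library once a lookup result is available. The sketch below is not the service behind the example; it assumes a result already fetched into a dictionary with hypothetical keys, and it assumes the usual namespace URIs for the `rdf`, `dc`, `foaf` and `mo` prefixes, which the example above does not declare.

```python
import xml.etree.ElementTree as ET

# Assumed namespace URIs for the prefixes used in the example.
NS = {
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "dc": "http://purl.org/dc/elements/1.1/",
    "foaf": "http://xmlns.com/foaf/0.1/",
    "mo": "http://purl.org/ontology/mo/",
}
for prefix, uri in NS.items():
    ET.register_namespace(prefix, uri)


def q(prefix, local):
    """Build the '{namespace}local' name that ElementTree expects."""
    return f"{{{NS[prefix]}}}{local}"


def rdfize_track(result):
    """Serialise a lookup result (a dict with hypothetical keys) as RDF/XML."""
    root = ET.Element(q("rdf", "RDF"))
    track = ET.SubElement(root, q("mo", "Track"), {q("rdf", "about"): result["track_uri"]})
    ET.SubElement(track, q("dc", "title")).text = result["title"]
    creator = ET.SubElement(track, q("dc", "creator"))
    group = ET.SubElement(creator, q("mo", "MusicGroup"), {q("rdf", "about"): result["artist_uri"]})
    ET.SubElement(group, q("foaf", "name")).text = result["artist"]
    if result.get("homepage"):
        ET.SubElement(group, q("foaf", "homepage"), {q("rdf", "resource"): result["homepage"]})
    return ET.tostring(root, encoding="unicode")


# Values copied from the example above; no network access is involved.
lookup_result = {
    "track_uri": "http://musicbrainz.org/track/dddb2236-823d-4c13-a560-bfe0ffbb19fc",
    "title": "The Fly",
    "artist_uri": "http://musicbrainz.org/artist/a3cb23fc-acd3-4ce0-8f36-1e5aa6a18432",
    "artist": "U2",
    "homepage": "http://www.u2.com/",
}
rdf_xml = rdfize_track(lookup_result)
```

Building the tree with qualified names rather than string concatenation leaves escaping and namespace declarations to the library, so the result can be parsed back by any XML or RDF/XML parser.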

ID3 to RDF Example

In this section we present a way to map ID3 tags to the Music Ontology.

Example: The parameter is a URL that contains an MP3 file. In this case it reads the ID3 tags from the MP3 file. See an output example here (it might take a little while).

    <mo:Track rdf:about='http://musicbrainz.org/track/7201c2ab-e368-4bd3-934f-5d936efffcdc'>
      <dc:creator>
        <mo:MusicGroup rdf:about='http://musicbrainz.org/artist/6b28ecf0-94e6-48bb-aa2a-5ede325b675b'>
          <foaf:name>Blues Traveler</foaf:name>
          <mo:discogs rdf:resource='http://www.discogs.com/artist/Blues+Traveler'/>
          <foaf:homepage rdf:resource='http://www.bluestraveler.com/'/>
          <foaf:member rdf:resource='http://musicbrainz.org/artist/d73c9a5d-5d7d-47ec-b15a-a924a1a271c4'/>
          <mo:wikipedia rdf:resource='http://en.wikipedia.org/wiki/Blues_Traveler'/>
          <foaf:img rdf:resource='http://ec1.images-amazon.com/images/P/B000078JKC.01._SCMZZZZZZZ_.jpg'/>
        </mo:MusicGroup>
      </dc:creator>
      <dc:title>Back in the Day</dc:title>
      <mo:puid rdf:resource='0a57a829-9d3c-eb35-37a8-d0364d1eae3a'/>
      <mo:puid rdf:resource='02039e1b-64bd-6862-2d27-3507726a8268'/>
    </mo:Track>

A possible scenario to exploit RDFized songs

Example: Once the songs have been RDFized, we can ask last.fm for the latest tracks a user has been listening to, and then RDFize them. This example shows the latest tracks a user (RJ) has been listening to. You can try it here.

References

[Pac05] F. Pachet, "Knowledge Management and Musical Metadata", in Encyclopedia of Knowledge Management, Schwartz, D. (Ed.), Idea Group, 2005.

[Hil06] M. Hildebrand, J. R. van Ossenbruggen, L. Hardman, "/facet: A browser for heterogeneous semantic web repositories", CWI, Information Systems [INS], E 0604, ISSN 1386-3681, 2006.