simultaneous modalities

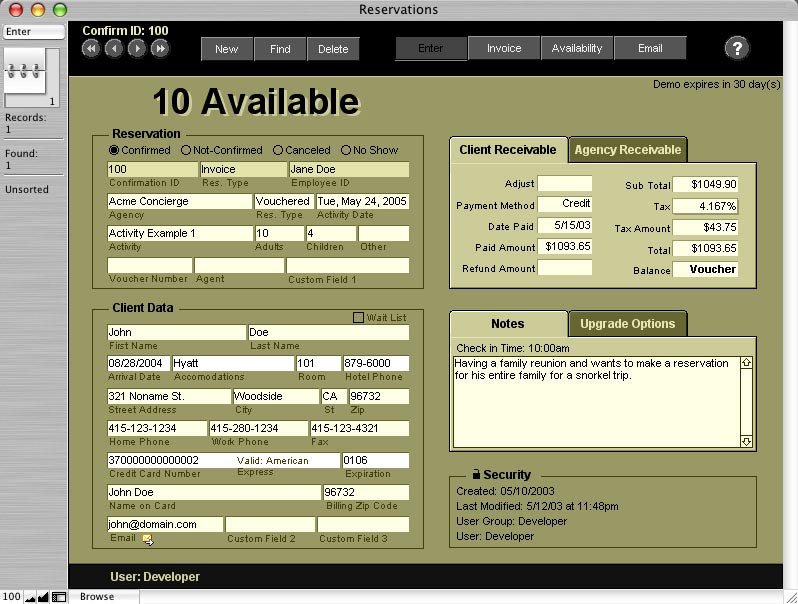

Travel reservations over phone/PDA/WAP

III.2 Who you gonna call?

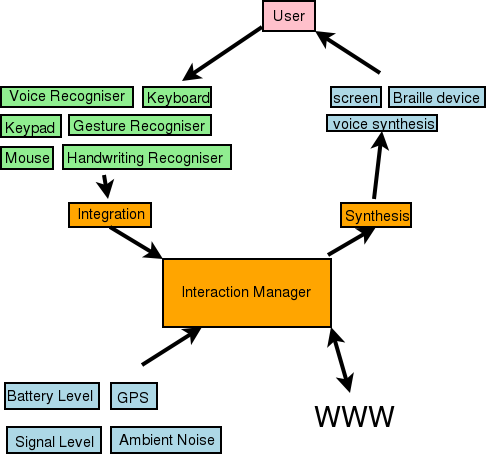

The WG was largely a spin-off of the Voice Browser WG. It grew out of the realisation that voice interaction with computers isn't just for call centres: it can now happen on the device itself. And why limit ourselves to speech? Why not ink, gestures and other input modes, used concurrently or sequentially? Or dynamic switching between modalities as environmental conditions change?

III.3 How do they do that?

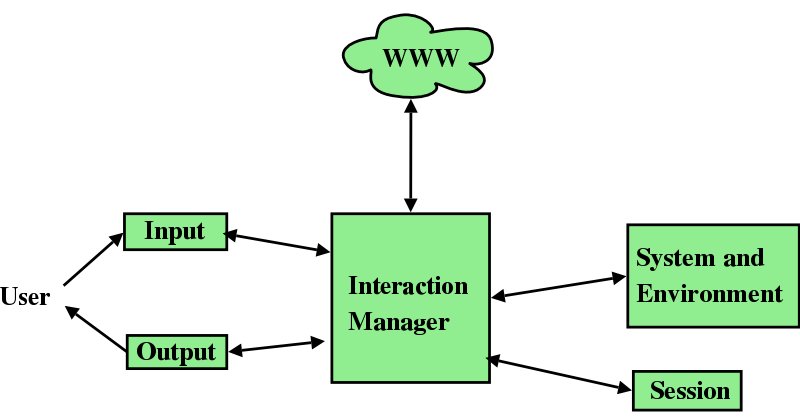

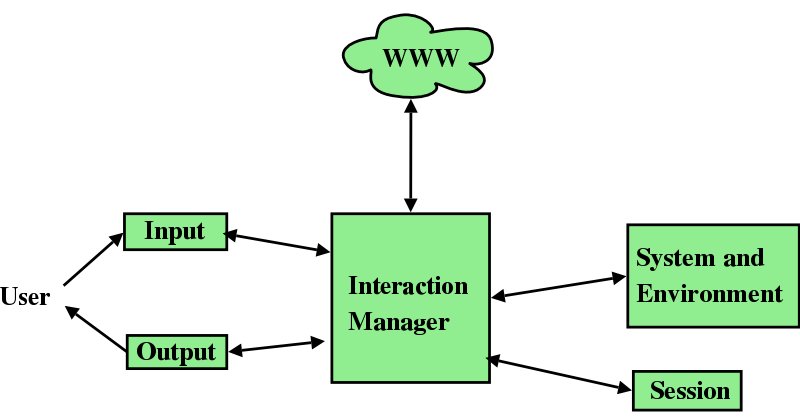

The Framework

What to standardise?

- First, the browser

- Second, the Web

Q: But why standardise things that are happening inside the browser?

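A1: browsers are plurilithic, i.e. a multimodal browser may be assembled from several components, possibly spread across devices, so the interfaces between those components need to be standard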

A2: you can't detach interaction from the application

Deeper into the framework

- The boxes don't necessarily map one-to-one onto devices

- Reuse of existing markup: XHTML, CSS and SVG for output, SRGS for input
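As an illustration of reusing SRGS for input, here is a minimal sketch, assuming a travel-reservation dialogue that only needs to recognise a destination city. It uses Python's standard `xml.etree.ElementTree` to build an XML-form SRGS 1.0 grammar. The helper names `build_city_grammar` and `accepts` are hypothetical, and `accepts` is a toy matcher, not a real SRGS processor: it ignores rule references, repeats, weights and semantic tags.

```python
import xml.etree.ElementTree as ET

SRGS_NS = "http://www.w3.org/2001/06/grammar"
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"  # serialised as xml:lang
ET.register_namespace("", SRGS_NS)  # emit SRGS as the default namespace


def build_city_grammar(cities, lang="en-GB"):
    """Build an XML-form SRGS 1.0 grammar whose public root rule matches one city name."""
    grammar = ET.Element(f"{{{SRGS_NS}}}grammar",
                         {"version": "1.0", "mode": "voice", "root": "city", XML_LANG: lang})
    rule = ET.SubElement(grammar, f"{{{SRGS_NS}}}rule", {"id": "city", "scope": "public"})
    one_of = ET.SubElement(rule, f"{{{SRGS_NS}}}one-of")  # the list of alternatives
    for city in cities:
        ET.SubElement(one_of, f"{{{SRGS_NS}}}item").text = city
    return ET.tostring(grammar, encoding="unicode")


def accepts(grammar_xml, utterance):
    """Toy matcher (hypothetical, NOT an SRGS processor): True if the utterance equals
    one <item> of the root rule's <one-of>, case-insensitively. Ignores rulerefs,
    repeats, weights and tags."""
    ns = {"g": SRGS_NS}
    grammar = ET.fromstring(grammar_xml)
    for rule in grammar.findall("g:rule", ns):
        if rule.get("id") == grammar.get("root"):
            items = rule.findall("g:one-of/g:item", ns)
            return any((i.text or "").strip().lower() == utterance.strip().lower()
                       for i in items)
    return False


grammar_xml = build_city_grammar(["London", "Paris", "Tokyo"])
print(accepts(grammar_xml, "paris"))   # True
print(accepts(grammar_xml, "Berlin"))  # False
```

The point of the reuse argument is that the same grammar document could constrain a speech recogniser or a typed-text field. Only the matcher here is a stand-in.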

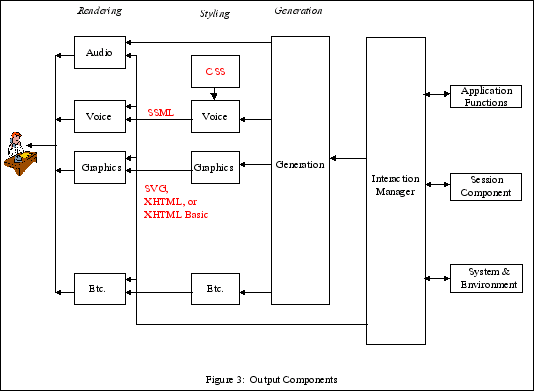

Deeper still: output

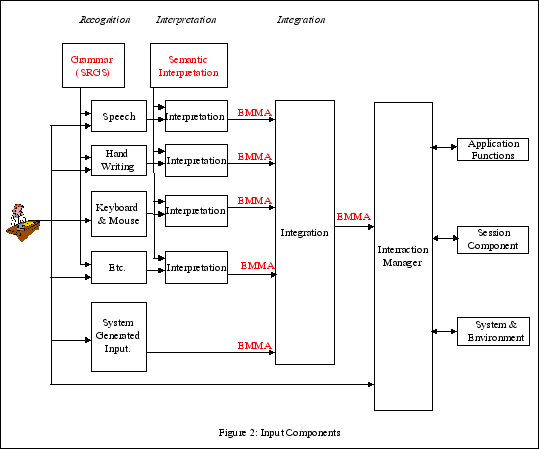

Deeper still: input

Work items

Now that we've constructed a framework, what new pieces do we add?

- "Connecting" things together: the MID

- Input modalities: reuse existing standards for speech and text grammars (SRGS); pen and tablet input needs a new format: InkML

- Output modalities: reuse SVG, HTML, CSS, SSML, etc.

- Transmitting and merging input data: EMMA (Extensible MultiModal Annotation), compositing

- Interaction Manager

- System and Environment (now DPF)

- Sessions
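To make the InkML and EMMA work items more concrete, here is a minimal sketch, assuming a user says "flights to here" while tapping a map with a pen. It reduces the InkML trace to a single point, then wraps both unimodal inputs and their composite in EMMA 1.0 markup. The element and attribute names `emma:interpretation`, `emma:derivation`, `emma:derived-from`, `emma:medium`, `emma:mode`, `emma:confidence` and `emma:tokens` come from EMMA 1.0. The helpers (`trace_centroid`, `interpretation`, `fuse`), the application elements `<destination>`, `<x>` and `<y>`, and the choice to multiply confidences are illustrative assumptions, not part of either specification.

```python
import xml.etree.ElementTree as ET

INKML_NS = "http://www.w3.org/2003/InkML"
EMMA_NS = "http://www.w3.org/2003/04/emma"
ET.register_namespace("emma", EMMA_NS)


def emma(name):
    """Qualified ElementTree name in the EMMA namespace."""
    return f"{{{EMMA_NS}}}{name}"


def trace_centroid(inkml_xml):
    """Mean (x, y) of the first <trace> in an InkML document.

    Assumes plain "X Y" points separated by commas (no difference-encoded
    values), which is enough for a simple pointing gesture.
    """
    trace = ET.fromstring(inkml_xml).find(f"{{{INKML_NS}}}trace")
    points = [[float(v) for v in p.split()[:2]] for p in trace.text.split(",") if p.strip()]
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def interpretation(parent, ident, medium, mode, confidence, **extra):
    """Append an emma:interpretation; extra keyword args become emma:* attributes."""
    attrs = {"id": ident, emma("medium"): medium, emma("mode"): mode,
             emma("confidence"): f"{confidence:.2f}"}
    attrs.update({emma(k): v for k, v in extra.items()})
    return ET.SubElement(parent, emma("interpretation"), attrs)


def fuse(tokens, speech_conf, inkml_xml, ink_conf):
    """Merge a deictic utterance with a pen tap into one EMMA document (hypothetical).

    The product of the two confidences is a naive fusion score, not an EMMA rule.
    """
    x, y = trace_centroid(inkml_xml)
    root = ET.Element(emma("emma"), {"version": "1.0"})

    # The unimodal inputs are kept under emma:derivation ...
    derivation = ET.SubElement(root, emma("derivation"))
    speech = interpretation(derivation, "speech1", "acoustic", "voice", speech_conf,
                            tokens=tokens)
    ET.SubElement(speech, "destination").text = "here"  # still-unresolved deictic
    ink = interpretation(derivation, "ink1", "tactile", "ink", ink_conf)
    ET.SubElement(ink, "x").text = f"{x:g}"
    ET.SubElement(ink, "y").text = f"{y:g}"

    # ... and the composite interpretation points back at both of them.
    combined = interpretation(root, "composite1", "acoustic tactile", "voice ink",
                              speech_conf * ink_conf)
    for source in ("speech1", "ink1"):
        ET.SubElement(combined, emma("derived-from"),
                      {"resource": f"#{source}", "composite": "true"})
    dest = ET.SubElement(combined, "destination")
    ET.SubElement(dest, "x").text = f"{x:g}"
    ET.SubElement(dest, "y").text = f"{y:g}"
    return ET.tostring(root, encoding="unicode")


tap = '<ink xmlns="http://www.w3.org/2003/InkML"><trace>100 200, 102 198, 101 202</trace></ink>'
print(fuse("flights to here", 0.8, tap, 0.9))
```

Multiplying the confidences treats the two inputs as independent. A real interaction manager might also require the tap to fall within some time window of the word "here", for example by comparing `emma:start`/`emma:end` timestamps, before compositing them.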