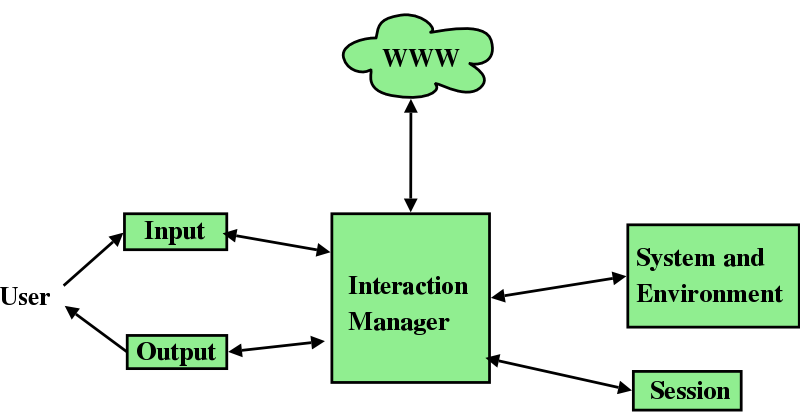

In the 1990s came multi-output (aka multimedia)...

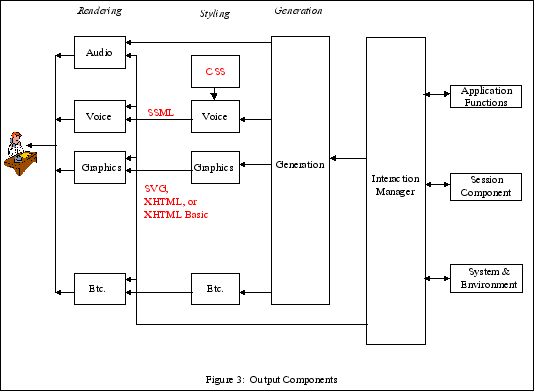

In the 2000s comes multi-input (aka multimodal)

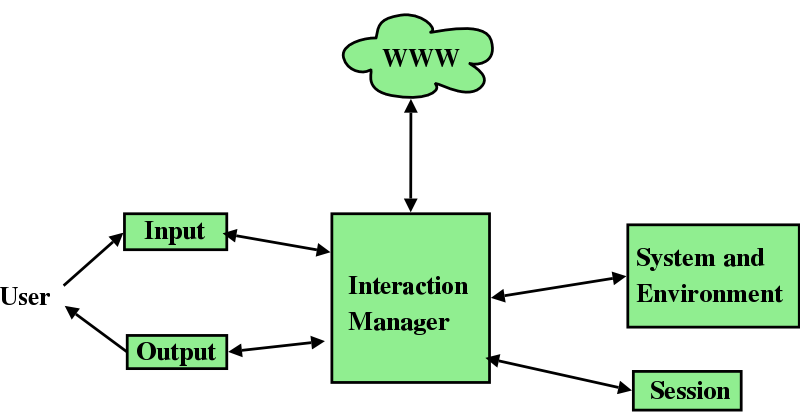

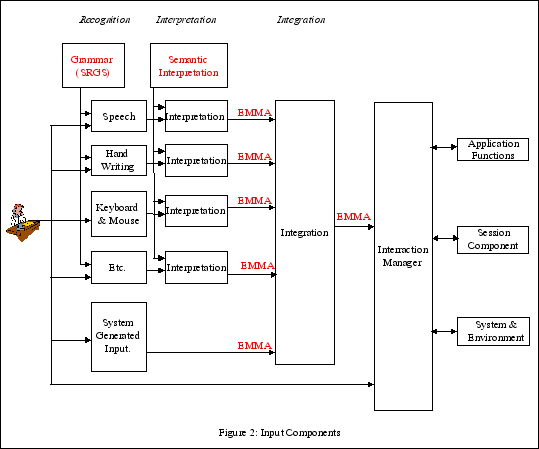

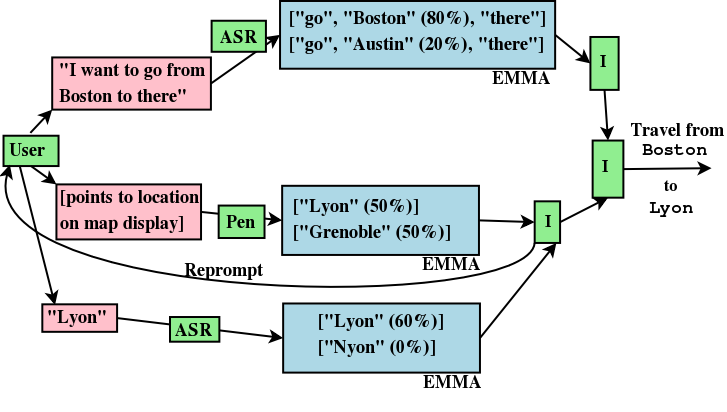

But multimodal is also about

Why W3C? W3C doesn't do browsers!

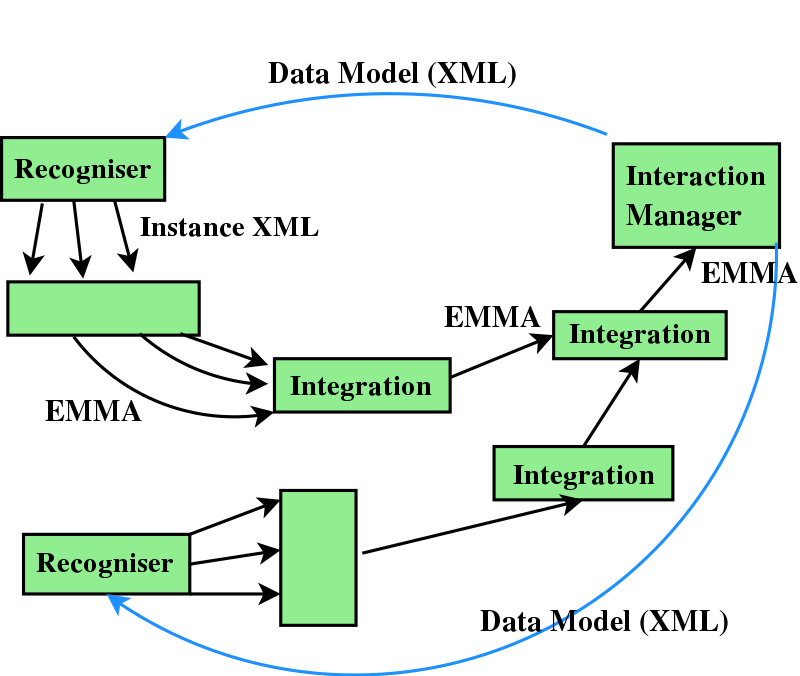

A whole framework

A large working group, with many participants from the VoiceXML/SRGS/SSML group.

Goals

Standards in the MMI area are important, as they are for voice applications, because the software components come from very different areas of expertise.

Open Issue

The Multimodal Interaction Working Group is currently considering the role of RDF in EMMA syntax and processing...

```xml
<emma:emma emma:version="1.0"
    xmlns:emma="http://www.w3.org/2003/04/emma#">
  <emma:one-of emma:id="r1"
      emma:start="2003-03-26T00:00:00.15"
      emma:end="2003-03-26T00:00:00.2">
    <emma:interpretation emma:id="int1" emma:confidence="0.75">
      <origin>Boston</origin>
      <destination>Denver</destination>
      <date>
        <emma:absolute-timestamp
            emma:start="2003-03-26T00:00:00.15"
            emma:end="2003-03-26T00:00:00.2"/>
        03112003
      </date>
    </emma:interpretation>
    <emma:interpretation emma:id="int2" emma:confidence="0.68">
      <origin>Austin</origin>
      <destination>Denver</destination>
      <date>03112003</date>
    </emma:interpretation>
  </emma:one-of>
</emma:emma>
```
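To make the n-best structure concrete, here is a minimal Python sketch (standard library only) that picks the highest-confidence interpretation out of an `emma:one-of`. It assumes the draft namespace `http://www.w3.org/2003/04/emma#` used in the example and treats every child element of an interpretation as an application slot. The function name `best_interpretation` is hypothetical, not part of any EMMA API.

```python
import xml.etree.ElementTree as ET

EMMA_NS = "http://www.w3.org/2003/04/emma#"  # draft namespace used in the example
NS = {"emma": EMMA_NS}

# Abridged copy of the emma:one-of example (the one-of timestamps are omitted)
DOC = """<emma:emma emma:version="1.0" xmlns:emma="http://www.w3.org/2003/04/emma#">
  <emma:one-of emma:id="r1">
    <emma:interpretation emma:id="int1" emma:confidence="0.75">
      <origin>Boston</origin>
      <destination>Denver</destination>
      <date>
        <emma:absolute-timestamp emma:start="2003-03-26T00:00:00.15"
                                 emma:end="2003-03-26T00:00:00.2"/>
        03112003
      </date>
    </emma:interpretation>
    <emma:interpretation emma:id="int2" emma:confidence="0.68">
      <origin>Austin</origin>
      <destination>Denver</destination>
      <date>03112003</date>
    </emma:interpretation>
  </emma:one-of>
</emma:emma>"""


def best_interpretation(xml_text: str) -> tuple[str, float, dict[str, str]]:
    """Return (id, confidence, slots) of the highest-confidence interpretation.

    Raises ValueError if the document contains no emma:one-of interpretations.
    """
    root = ET.fromstring(xml_text)
    candidates = []
    for interp in root.iterfind(".//emma:one-of/emma:interpretation", NS):
        conf = float(interp.get(f"{{{EMMA_NS}}}confidence", "0"))
        # Slots are the application's own child elements; itertext() also picks
        # up the text that follows a nested emma:absolute-timestamp.
        slots = {child.tag: "".join(child.itertext()).strip() for child in interp}
        candidates.append((interp.get(f"{{{EMMA_NS}}}id"), conf, slots))
    return max(candidates, key=lambda c: c[1])


print(best_interpretation(DOC))
```

Ranking by `emma:confidence` alone is the simplest policy; a dialog manager could just as well keep the whole list and ask a clarification question when the top two scores are close.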

```xml
<emma:emma emma:version="1.0"
    xmlns:emma="http://www.w3.org/2003/04/emma#"
    xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">
  <emma:one-of emma:id="r1">
    <emma:interpretation emma:id="int1">
      <origin>Boston</origin>
      <destination>Denver</destination>
      <date>03112003</date>
    </emma:interpretation>
    <emma:interpretation emma:id="int2">
      <origin>Austin</origin>
      <destination>Denver</destination>
      <date>03112003</date>
    </emma:interpretation>
  </emma:one-of>
  <rdf:RDF>
    <!-- time stamp for result -->
    <rdf:Description rdf:about="#r1"
        emma:start="2003-03-26T00:00:00.15"
        emma:end="2003-03-26T00:00:00.2"/>
    <!-- confidence score for first interpretation -->
    <rdf:Description rdf:about="#int1" emma:confidence="0.75"/>
    <!-- confidence score for second interpretation -->
    <rdf:Description rdf:about="#int2" emma:confidence="0.68"/>
    <!-- time stamps for date in first interpretation -->
    <rdf:Description rdf:about="#emma(id('int1')/date)">
      <emma:absolute-timestamp
          emma:start="2003-03-26T00:00:00.15"
          emma:end="2003-03-26T00:00:00.2"/>
    </rdf:Description>
  </rdf:RDF>
</emma:emma>
```
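With the RDF variant, a consumer has to join each `rdf:Description` back to the element it describes. A minimal Python sketch of that join, assuming that `rdf:about="#x"` refers to the element with `emma:id="x"`; the function name `rdf_annotations` is hypothetical. It deliberately skips XPointer-style targets such as `#emma(id('int1')/date)`, which would need a real resolver.

```python
import xml.etree.ElementTree as ET

EMMA = "http://www.w3.org/2003/04/emma#"
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
EMMA_ATTR = f"{{{EMMA}}}"  # ElementTree prefix for emma:* attribute names

DOC = """<emma:emma emma:version="1.0"
    xmlns:emma="http://www.w3.org/2003/04/emma#"
    xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">
  <emma:one-of emma:id="r1">
    <emma:interpretation emma:id="int1"><origin>Boston</origin></emma:interpretation>
    <emma:interpretation emma:id="int2"><origin>Austin</origin></emma:interpretation>
  </emma:one-of>
  <rdf:RDF>
    <rdf:Description rdf:about="#r1"
        emma:start="2003-03-26T00:00:00.15" emma:end="2003-03-26T00:00:00.2"/>
    <rdf:Description rdf:about="#int1" emma:confidence="0.75"/>
    <rdf:Description rdf:about="#int2" emma:confidence="0.68"/>
    <rdf:Description rdf:about="#emma(id('int1')/date)">
      <emma:absolute-timestamp emma:start="2003-03-26T00:00:00.15"
                               emma:end="2003-03-26T00:00:00.2"/>
    </rdf:Description>
  </rdf:RDF>
</emma:emma>"""


def rdf_annotations(xml_text: str) -> dict[str, dict[str, str]]:
    """Map each emma:id to the emma:* attributes rdf:Description attaches to it."""
    root = ET.fromstring(xml_text)
    known_ids = {el.get(EMMA_ATTR + "id") for el in root.iter()} - {None}
    result: dict[str, dict[str, str]] = {}
    for desc in root.iter(f"{{{RDF}}}Description"):
        about = desc.get(f"{{{RDF}}}about", "")
        target = about[1:] if about.startswith("#") else about
        if target not in known_ids:
            # Pointers such as "#emma(id('int1')/date)" need an XPointer
            # resolver, which this sketch does not attempt.
            continue
        props = {name[len(EMMA_ATTR):]: value
                 for name, value in desc.attrib.items()
                 if name.startswith(EMMA_ATTR)}
        result.setdefault(target, {}).update(props)
    return result


print(rdf_annotations(DOC))
```

The extra indirection is the price of the RDF layout: the inline form lets a plain XML parser read `emma:confidence` directly from the interpretation, while the RDF form needs this lookup (or an RDF toolkit) first.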

Links to the structure of expected results, e.g.

```xml
<xsd:element name="origin" type="xsd:string"/>
```

Can be XML Schema, DTD, RELAX NG, XForms, ...

Linked to from the EMMA document:

```xml
<emma:model ref="http://www.example.com/models/travel/flight.xsd"/>
```
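One cheap use of the linked model is to flag slots the model does not declare. The Python sketch below is not XML Schema validation (the standard library has none); it only collects the `name` of each `xsd:element` declaration and compares child tags against it. The schema text stands in for `flight.xsd`, whose real contents are not shown here, and the `<seat>` slot is a made-up example of an undeclared element.

```python
import xml.etree.ElementTree as ET

XSD_NS = "http://www.w3.org/2001/XMLSchema"

# Hypothetical stand-in for flight.xsd (the actual model is not shown)
FLIGHT_XSD = """<xsd:schema xmlns:xsd="http://www.w3.org/2001/XMLSchema">
  <xsd:element name="origin" type="xsd:string"/>
  <xsd:element name="destination" type="xsd:string"/>
  <xsd:element name="date" type="xsd:string"/>
</xsd:schema>"""

# Interpretation with one slot (<seat>) that the model does not declare
INTERP = """<emma:interpretation emma:id="int1"
    xmlns:emma="http://www.w3.org/2003/04/emma#">
  <origin>Boston</origin><destination>Denver</destination><seat>12A</seat>
</emma:interpretation>"""


def declared_elements(xsd_text: str) -> set[str]:
    """Names of all xsd:element declarations; types, nesting and refs are ignored."""
    schema = ET.fromstring(xsd_text)
    return {el.get("name") for el in schema.iter(f"{{{XSD_NS}}}element")
            if el.get("name")}


def undeclared_slots(interp_text: str, xsd_text: str) -> list[str]:
    """Child element names of the interpretation that the model does not declare."""
    allowed = declared_elements(xsd_text)
    return [child.tag for child in ET.fromstring(interp_text)
            if child.tag not in allowed]


print(undeclared_slots(INTERP, FLIGHT_XSD))
```

A real consumer would hand both documents to a schema-aware validator; this check only illustrates what the `emma:model` link makes possible.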


Annotations include references to an external grammar, tokens, a reference to the external process, a reference to the raw signal, and time stamps.

- medium: acoustic, tactile or visual
- mode: speech, dtmf, ink, gui, ...
- function: recording, transcription, dialog, verification
- verbal: true for anything that produces text, false for anything that does not
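These four classification attributes lend themselves to a small validation step when an annotation is read. The Python sketch below is illustrative only: the class name `InputAnnotation` and the choice to reject unknown media and functions are assumptions, and `mode` is left unrestricted because its list on the slide ends in "...".

```python
from dataclasses import dataclass

# Values listed on the slide; "mode" is open-ended there, so it is not checked
MEDIA = {"acoustic", "tactile", "visual"}
FUNCTIONS = {"recording", "transcription", "dialog", "verification"}


@dataclass(frozen=True)
class InputAnnotation:
    medium: str
    mode: str
    function: str
    verbal: bool

    def __post_init__(self) -> None:
        if self.medium not in MEDIA:
            raise ValueError(f"unknown medium: {self.medium!r}")
        if self.function not in FUNCTIONS:
            raise ValueError(f"unknown function: {self.function!r}")

    @classmethod
    def from_attributes(cls, attrs: dict[str, str]) -> "InputAnnotation":
        """Build from emma:* attribute values with the prefix already stripped."""
        verbal = attrs["verbal"]
        if verbal not in ("true", "false"):
            raise ValueError(f"verbal must be 'true' or 'false', got {verbal!r}")
        return cls(attrs["medium"], attrs["mode"], attrs["function"], verbal == "true")


# Values taken from the pen-input annotation example (#interp1)
pen = InputAnnotation.from_attributes(
    {"medium": "tactile", "mode": "ink", "function": "dialog", "verbal": "true"})
print(pen)
```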

```xml
<emma:emma version="1.0" xmlns:emma="http://www.w3.org/2003/04/emma">
  <emma:interpretation id="raw">
    <answer>From Boston to Denver tomorrow</answer>
  </emma:interpretation>
  <emma:interpretation id="better">
    <emma:derived-from resource="#raw" />
    <origin>Boston</origin>
    <destination>Denver</destination>
    <date>tomorrow</date>
  </emma:interpretation>
  <emma:interpretation id="best">
    <emma:derived-from resource="#better" />
    <origin>Boston</origin>
    <destination>Denver</destination>
    <date>20030315</date>
  </emma:interpretation>
</emma:emma>
```
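Each `emma:derived-from` link points one processing stage back, so the full history of a result can be recovered by following the links. A minimal Python sketch over the example above; it keeps that example's namespace (no trailing `#`) and unprefixed `id`/`resource` attributes, and the function name `derivation_chain` is hypothetical.

```python
import xml.etree.ElementTree as ET

EMMA = "http://www.w3.org/2003/04/emma"  # namespace as written in this example

DOC = """<emma:emma version="1.0" xmlns:emma="http://www.w3.org/2003/04/emma">
  <emma:interpretation id="raw">
    <answer>From Boston to Denver tomorrow</answer>
  </emma:interpretation>
  <emma:interpretation id="better">
    <emma:derived-from resource="#raw"/>
    <origin>Boston</origin><destination>Denver</destination><date>tomorrow</date>
  </emma:interpretation>
  <emma:interpretation id="best">
    <emma:derived-from resource="#better"/>
    <origin>Boston</origin><destination>Denver</destination><date>20030315</date>
  </emma:interpretation>
</emma:emma>"""


def derivation_chain(xml_text: str, start_id: str) -> list[str]:
    """Follow emma:derived-from links from start_id back to the original input."""
    root = ET.fromstring(xml_text)
    parent_of: dict[str, str] = {}
    for interp in root.iter(f"{{{EMMA}}}interpretation"):
        link = interp.find(f"{{{EMMA}}}derived-from")
        if link is not None:
            parent_of[interp.get("id")] = link.get("resource", "").lstrip("#")
    chain = [start_id]
    while chain[-1] in parent_of:
        nxt = parent_of[chain[-1]]
        if nxt in chain:  # guard against a cyclic derivation
            raise ValueError(f"cycle at {nxt!r}")
        chain.append(nxt)
    return chain


print(derivation_chain(DOC, "best"))
```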

```xml
emma:tokens="arriving at 'Liverpool Street'"

<rdf:Description rdf:about="#better"
    emma:process="http://example.com/mysemproc1.xml"/>

<emma:interpretation emma:id="int1" emma:no-input="true"/>

<rdf:Description rdf:about="#better" emma:uninterpreted="true"/>

<rdf:Description rdf:about="#int1" emma:lang="fr"/>

<rdf:Description rdf:about="#intp1"
    emma:signal="http://example.com/signals/sg23.bin"/>

<rdf:Description rdf:about="#intp1" emma:signal-codec="audio/3gpp"/>

<rdf:Description rdf:about="#meaning2" emma:confidence="0.4"/>

<rdf:Description rdf:about="#intp1"
    emma:source="http://example.com/microphone/NC-61"/>

<emma:interpretation emma:id="int1"
    emma:start="2003-03-26T00:00:00"
    emma:end="2003-03-26T00:00:00.2">

<rdf:Description rdf:about="#interp1"
    emma:confidence="0.6"
    emma:medium="tactile"
    emma:mode="ink"
    emma:function="dialog"
    emma:verbal="true"/>
```
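The `emma:start` / `emma:end` pair is what lets an integrator line up input from different modes in time. A minimal Python sketch that turns such a pair into a duration; it assumes timestamps shaped like the examples (date, `T`, time, optional fraction, no time zone), so zone offsets or a trailing `Z` are out of scope. The helper names are hypothetical.

```python
from datetime import datetime, timedelta


def parse_emma_time(value: str) -> datetime:
    """Parse timestamps shaped like 2003-03-26T00:00:00.15 (no time zone).

    strptime's %f accepts 1 to 6 fractional digits, so ".15" means 150 ms.
    """
    fmt = "%Y-%m-%dT%H:%M:%S.%f" if "." in value else "%Y-%m-%dT%H:%M:%S"
    return datetime.strptime(value, fmt)


def span(start: str, end: str) -> timedelta:
    """Duration covered by an emma:start / emma:end pair."""
    t0, t1 = parse_emma_time(start), parse_emma_time(end)
    if t1 < t0:
        raise ValueError("emma:end precedes emma:start")
    return t1 - t0


print(span("2003-03-26T00:00:00.15", "2003-03-26T00:00:00.2"))
print(span("2003-03-26T00:00:00", "2003-03-26T00:00:00.2"))
```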

| Medium | Device | Mode | Function: recording | Function: dialog | Function: transcription | Function: verification |
|---|---|---|---|---|---|---|
| acoustic | microphone | speech | audiofile (e.g. voicemail) | spoken command / query / response (verbal = true); singing a note (verbal = false) | dictation | speaker recognition |
| tactile | keypad | dtmf | audiofile / character stream | typed command / query / response (verbal = true); command key "Press 9 for sales" (verbal = false) | text entry (T9-tegic, word completion, or word grammar) | password / pin entry |
| tactile | keyboard | keys | character / key-code stream | typed command / query / response (verbal = true); command key "Press S for sales" (verbal = false) | typing | password / pin entry |
| tactile | pen | ink | trace, sketch | handwritten command / query / response (verbal = true); gesture (e.g. circling building) (verbal = false) | handwritten text entry | signature, handwriter recognition |
| tactile | pen | gui | N/A | tapping on named button (verbal = true); drag and drop, tapping on map (verbal = false) | soft keyboard | password / pin entry |
| tactile | mouse | ink | trace, sketch | handwritten command / query / response (verbal = true); gesture (e.g. circling building) (verbal = false) | handwritten text entry | N/A |
| tactile | mouse | gui | N/A | clicking named button (verbal = true); drag and drop, clicking on map (verbal = false) | soft keyboard | password / pin entry |
| tactile | joystick | ink | trace, sketch | gesture (e.g. circling building) (verbal = false) | N/A | N/A |
| tactile | joystick | gui | N/A | pointing, clicking button / menu (verbal = false) | soft keyboard | password / pin entry |
| visual | page scanner | photograph | image | handwritten command / query / response (verbal = true); drawings and images (verbal = false) | optical character recognition, object/scene recognition (markup, e.g. SVG) | N/A |
| visual | still camera | photograph | image | objects (verbal = false) | visual object/scene recognition | face id, retinal scan |
| visual | video camera | video | movie | sign language (verbal = true); face / hand / arm / body gesture (e.g. pointing, facing) (verbal = false) | audio/visual recognition | face id, gait id, retinal scan |

How does a multimodal application author specify what composition rules to apply?

No work released yet.

```xml
<xsl:if test="@emma:confidence > 0.4">
  <xsl:copy-of select="."/>
</xsl:if>
```
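For readers without an XSLT processor at hand, the same filter can be sketched in Python. This is an equivalent illustration, not W3C-proposed composition machinery; it assumes confidences on the 0 to 1 scale used in the EMMA examples, and the third interpretation (`int3`, confidence 0.3) is made up to show something being dropped.

```python
import xml.etree.ElementTree as ET

EMMA = "http://www.w3.org/2003/04/emma#"

DOC = """<emma:one-of emma:id="r1" xmlns:emma="http://www.w3.org/2003/04/emma#">
  <emma:interpretation emma:id="int1" emma:confidence="0.75"><origin>Boston</origin></emma:interpretation>
  <emma:interpretation emma:id="int2" emma:confidence="0.68"><origin>Austin</origin></emma:interpretation>
  <emma:interpretation emma:id="int3" emma:confidence="0.3"><origin>Boston</origin></emma:interpretation>
</emma:one-of>"""


def filter_by_confidence(xml_text: str, threshold: float) -> list[str]:
    """Ids of interpretations whose emma:confidence is strictly above threshold."""
    root = ET.fromstring(xml_text)
    kept = []
    for interp in root.iter(f"{{{EMMA}}}interpretation"):
        conf = interp.get(f"{{{EMMA}}}confidence")
        # As with the XPath test, a missing attribute never passes
        if conf is not None and float(conf) > threshold:
            kept.append(interp.get(f"{{{EMMA}}}id"))
    return kept


print(filter_by_confidence(DOC, 0.4))
```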

Extensible Multimodal Annotation Language

At W3C, annotations are expressed in RDF

```xml
<rdf:Description rdf:about="#emma(id('int1')/date)">
  <emma:absolute-timestamp
      emma:start="2003-03-26T00:00:00.15"
      emma:end="2003-03-26T00:00:00.2"/>
</rdf:Description>
```

A decision has been made...

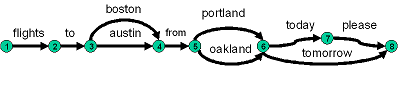

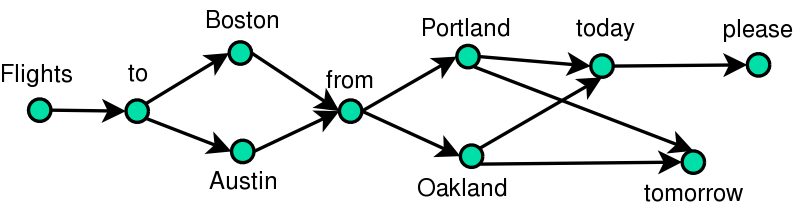

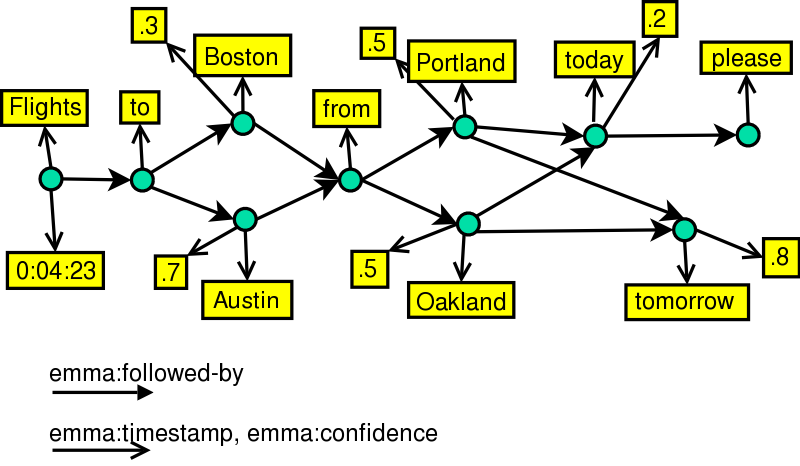

Instead of a linear n-best list:

```xml
<departure>boston</departure><destination>portland</destination><date>today</date>
<departure>austin</departure><destination>portland</destination><date>today</date>
<departure>boston</departure><destination>oakland</destination><date>today</date>
<departure>austin</departure><destination>oakland</destination><date>today</date>
<departure>boston</departure><destination>portland</destination><date>tomorrow</date>
<departure>austin</departure><destination>portland</destination><date>tomorrow</date>
<departure>boston</departure><destination>oakland</destination><date>tomorrow</date>
<departure>austin</departure><destination>oakland</destination><date>tomorrow</date>
```

Represent the actual graph in the EMMA instance.

Graph => RDF!

Difficult

What does that give us? We can write ontologies with emma:followed-by and perhaps other properties.
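A hedged Python sketch of the graph idea: the flight lattice is stored as (subject, predicate, object) triples using an `emma:followed-by` property, and the linear n-best list is recovered by enumerating paths. The node ids `n1` to `n6`, the `start` node and the function name `expand` are hypothetical; no official EMMA lattice vocabulary is assumed.

```python
from collections import defaultdict

# Hypothetical lattice for the flight example: each node holds one slot value,
# and edges are (subject, "emma:followed-by", object) triples.
NODES: dict[str, tuple[str, str]] = {
    "n1": ("departure", "boston"), "n2": ("departure", "austin"),
    "n3": ("destination", "portland"), "n4": ("destination", "oakland"),
    "n5": ("date", "today"), "n6": ("date", "tomorrow"),
}
TRIPLES: list[tuple[str, str, str]] = [
    ("start", "emma:followed-by", "n1"), ("start", "emma:followed-by", "n2"),
    ("n1", "emma:followed-by", "n3"), ("n1", "emma:followed-by", "n4"),
    ("n2", "emma:followed-by", "n3"), ("n2", "emma:followed-by", "n4"),
    ("n3", "emma:followed-by", "n5"), ("n3", "emma:followed-by", "n6"),
    ("n4", "emma:followed-by", "n5"), ("n4", "emma:followed-by", "n6"),
]


def expand(triples, nodes, start="start"):
    """Enumerate every path from start to a node with no successor.

    Each path is one entry of the linear n-best list that the lattice encodes.
    Assumes the graph is acyclic, as a recognition lattice is.
    """
    successors = defaultdict(list)
    for subj, pred, obj in triples:
        if pred == "emma:followed-by":
            successors[subj].append(obj)

    paths = []

    def walk(node, acc):
        if not successors.get(node):
            paths.append(acc)
            return
        for nxt in successors[node]:
            walk(nxt, acc + [nodes[nxt]])

    walk(start, [])
    return paths


for path in expand(TRIPLES, NODES):
    print(path)
```

Six value nodes and ten edges encode the same eight hypotheses that the flat list spells out with 24 slot elements, and the gap widens as more slots become ambiguous.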

RDF/XML? Hmm...

EMMA is yet another example of how XML technologies can be used in many contexts, in this case multimodal interaction and NLP.

Still, there are lots of options: RDF, RDF/XML, XSLT/SMIL, DOM-IDL/WSDL, maybe more...

Multimodal interaction on the Web is a new problem that touches many W3C activities: not just XML, but also new devices (XHTML, CC/PP, SVG/CSS), accessibility, internationalisation, Web services, etc.