Florian Wegscheider, Rainer Simon, Konrad Tolar

Telecommunications Research Centre Vienna (ftw.)

Donaucitystraße 1, A-1220 Vienna

{wegscheider, simon, tolar}@ftw.at

In this position paper, we present our work in the area of device- and modality-independent user interfaces for mobile devices. Within the application-oriented research project MONA ("Mobile multimOdal Next-generation Applications", [1]), we have developed the "MONA Presentation Server", a server-side adaptation engine that allows a single application to serve a wide range of mobile devices. Developers of MONA applications provide a single source-code description of their user interface. The presentation server transforms this description into a graphical or multimodal user interface and adapts it dynamically to devices as diverse as low-end WAP phones, Symbian-based smartphones and PocketPC PDAs.

Our current activities focus on designer-centric single authoring for device independence and multimodality. We present an early version of the MONAEditor, an authoring tool that enables intuitive rapid prototyping of user interfaces for different mobile devices.

Within the MONA project, we have developed an experimental XML language for the device- and modality-independent representation of user interfaces [2]. The language builds on concepts of model-based user interface design and is implemented as a custom UIML ([3], [4]) vocabulary. In addition to a generic set of abstract UI widgets, it includes tags for describing tasks and for grouping widgets, so that the system can decide which elements to render together on small screens. Further information on our vocabulary can be found in [5].
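To make the grouping idea concrete, here is a minimal sketch of what an abstract description might look like and how a server could read off which widgets belong together. The structure follows UIML's generic `<part>` elements with `id` and `class` attributes, but the class names (`Task`, `Group`, `TextInput`, `Trigger`) and the messaging example are hypothetical; the actual MONA vocabulary is described in [5].

```python
import xml.etree.ElementTree as ET

# Hypothetical abstract UI: the class names are illustrative only,
# not the actual MONA vocabulary.
ABSTRACT_UI = """
<uiml>
  <interface>
    <structure>
      <part id="compose" class="Task">
        <part id="header" class="Group">
          <part id="to" class="TextInput"/>
          <part id="subject" class="TextInput"/>
        </part>
        <part id="body" class="TextInput"/>
        <part id="send" class="Trigger"/>
      </part>
    </structure>
  </interface>
</uiml>
"""

def groups_to_keep_together(xml_text):
    """Map each Group part id to the ids of the widgets it directly contains."""
    root = ET.fromstring(xml_text)
    result = {}
    for part in root.iter("part"):  # all <part> elements at any depth
        if part.get("class") == "Group":
            result[part.get("id")] = [child.get("id") for child in part.findall("part")]
    return result

print(groups_to_keep_together(ABSTRACT_UI))  # {'header': ['to', 'subject']}
```

A paginating renderer can then treat each group as an indivisible unit when distributing widgets over screens.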

We have implemented a prototype server-side system that translates the device- and modality-independent representation into a concrete graphical or multimodal user interface. Graphical user interfaces are automatically adapted to each device's display capabilities. We implemented two example MONA applications, a unified messaging client and a quiz game, to demonstrate that our system can generate complex user interfaces that support dynamic real-time interaction among multiple users.
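As a rough illustration of the translation step, the sketch below maps abstract widgets to concrete WML or XHTML fragments through per-target templates. The widget classes, the dictionary representation and the templates are hypothetical simplifications; the real presentation server also performs layout adaptation and multimodal output, which this sketch omits.

```python
from xml.sax.saxutils import escape, quoteattr

# Hypothetical templates: one concrete rendering per (target, widget class).
TEMPLATES = {
    "wml": {
        "TextInput": "{label}: <input name={name}/>",
        "Trigger": "<anchor>{label}<go href={href}/></anchor>",
    },
    "xhtml": {
        "TextInput": '<label>{label} <input type="text" name={name}/></label>',
        "Trigger": '<button type="submit" name={name}>{label}</button>',
    },
}

def render(widget, target):
    """Render one abstract widget (a dict with 'class', 'id', optional 'label')."""
    try:
        template = TEMPLATES[target][widget["class"]]
    except KeyError:
        raise ValueError(f"no mapping for {widget['class']!r} on {target!r}") from None
    return template.format(
        label=escape(widget.get("label", widget["id"])),  # escape text content
        name=quoteattr(widget["id"]),                      # quoted attribute value
        href=quoteattr("#" + widget["id"]),
    )

send = {"class": "Trigger", "id": "send", "label": "Send"}
print(render(send, "wml"))    # <anchor>Send<go href="#send"/></anchor>
print(render(send, "xhtml"))  # <button type="submit" name="send">Send</button>
```

Keeping the mapping in data rather than code makes adding another target markup a matter of adding one more template table.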

An initial requirement of the MONA project was that application authors should at no point during the authoring process be concerned with device-specific issues. They specify their user interfaces on an abstract level and provide hints that allow the presentation server to adapt the content automatically. These hints include priority levels for different widgets and their contents, pagination hints, voice-dialog hints and “flexible” layout rules, which an algorithm interprets at runtime to optimize the GUI layout for each specific device. This “content adaptation” metaphor contrasts with the “content selection” metaphor, in which the author specifies alternative contents for different devices.
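The following is a minimal sketch of how priority levels and grouping hints could drive pagination for a small screen. It is not the MONA layout algorithm, whose details are not given here; it assumes hypothetical per-unit pixel heights, a numeric priority (1 = essential) and a per-device priority cut-off, and it packs units greedily in document order without ever splitting a group.

```python
from dataclasses import dataclass

@dataclass
class Unit:
    """A single widget, or a group of widgets that must stay on one page."""
    ids: list
    height: int    # hypothetical estimated rendered height in pixels
    priority: int  # 1 = essential, larger numbers = less important

def paginate(units, screen_height, max_priority):
    """Greedily pack units into pages of at most screen_height pixels.

    Units with priority > max_priority are dropped for this device.
    A unit taller than the screen gets a page of its own (and would scroll).
    """
    pages, current, used = [], [], 0
    for unit in units:
        if unit.priority > max_priority:
            continue
        if current and used + unit.height > screen_height:
            pages.append(current)  # close the page; the unit starts a new one
            current, used = [], 0
        current.extend(unit.ids)
        used += unit.height
    if current:
        pages.append(current)
    return pages

units = [Unit(["to", "subject"], 40, 1), Unit(["body"], 80, 1),
         Unit(["signature"], 30, 3), Unit(["send"], 20, 1)]
print(paginate(units, 100, 2))  # [['to', 'subject'], ['body', 'send']]
print(paginate(units, 200, 3))  # [['to', 'subject', 'body', 'signature', 'send']]
```

A production layout optimizer would weigh many more factors; the point here is only that the same abstract description yields different pages for different screen sizes.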

Working with our application designers, however, has also revealed limitations of the model-based approach:

We argue that a successful single-authoring solution must be adapted to the needs and demands of designers. An abstract, model-based authoring paradigm that completely shields the author from the specifics of different devices will not be popular with designers: even if the system correctly renders all the content the designer wants on a screen, the designer lacks detailed control over the layout and will therefore be dissatisfied. On the other hand, a single-authoring format should provide sufficient abstraction and automatic adaptation to limit the work required for content changes and to ensure that devices the author did not consider during the design process are still supported. It will therefore be necessary to combine both philosophies by offering content selection as well as content adaptation mechanisms. We see good tool support as the crucial factor for the success of single authoring, for the following two reasons:

Good tool support will move the creation of a multimodal and device independent user interface from the realm of programming to that of design.

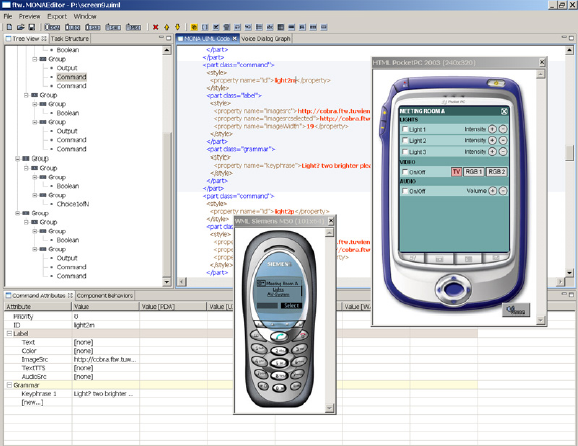

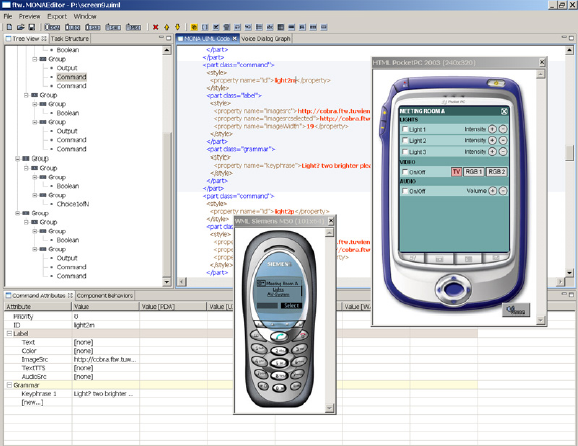

The MONAEditor, in its current state, is a basic rapid prototyping environment for user interfaces based on the markup language we have developed. It is implemented in Java, based on the Eclipse platform [6]. The main work area consists of three views (see Fig. 1).

All views are synchronized, i.e. selecting a user interface component in the tree view will automatically highlight and scroll to the corresponding code section in the source code view and show the corresponding attributes/behaviours in the attribute/behaviour table. Navigating through the source code will also automatically select the corresponding element in the tree and update the attribute/behaviour table accordingly.
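The view synchronization described above is an instance of the observer pattern: all views listen to one shared selection model. The sketch below is written in Python for illustration; it does not reproduce the Eclipse selection APIs or the MONAEditor's actual code, and the class and method names are hypothetical.

```python
class SelectionModel:
    """Holds the currently selected UI component and notifies registered views."""

    def __init__(self):
        self._listeners = []
        self.selected_id = None

    def add_listener(self, listener):
        self._listeners.append(listener)

    def select(self, part_id, source):
        if part_id == self.selected_id:
            return  # ignore redundant notifications, e.g. a view echoing a selection
        self.selected_id = part_id
        for listener in self._listeners:
            if listener is not source:  # the originating view is already up to date
                listener.on_selection(part_id)


class View:
    """Stands in for the tree, source code or attribute/behaviour table view."""

    def __init__(self, name, model):
        self.name = name
        self.model = model
        self.shown = None
        model.add_listener(self)

    def user_selects(self, part_id):
        self.shown = part_id
        self.model.select(part_id, source=self)

    def on_selection(self, part_id):
        # A real view would highlight a tree node, scroll the source or refill the table.
        self.shown = part_id


model = SelectionModel()
tree, source, table = (View(n, model) for n in ("tree", "source", "table"))
tree.user_selects("subject")
print([v.shown for v in (tree, source, table)])  # ['subject', 'subject', 'subject']
```

Because views only talk to the shared model, adding another synchronized view (for example a device emulator) requires no change to the existing ones.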

Constructing a new user interface from scratch is mainly done in the tree view, using a right-click context menu or the component toolbar at the top of the application window. Editing of each component’s attributes and behaviours can be done in the attribute/behaviour table or directly in the source code view.

Different device emulators (including a Symbian UIQ smartphone and two different WML phones) offer a real-time preview of the GUI. Furthermore, a voice-enabled PDA emulator allows a multimodal preview.

A simple visual representation of the voice dialog is also available; a more sophisticated view for creating, viewing and manipulating the voice dialog directly is under development. Direct visual editing, by dragging and dropping user interface components onto the device emulator windows, is not yet supported but is planned.

In the final version we envision, an author first uses the generic editors (tree view, behaviour tables and, if necessary, the source code) to create a rough sketch of the user interface that is generic for all devices. The author then uses the device emulators to make changes that affect only one device. These edits also alter the main UIML code, spawning device-specific sections wherever necessary.
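One way to picture how emulator edits could spawn device-specific sections is as per-device overrides layered over the generic description. In this sketch (a hypothetical data model using plain dictionaries rather than UIML, with made-up device names), an edit made in one emulator is written only to that device's override section, and the effective properties for a device are the generic properties with that device's overrides applied.

```python
def edit_in_emulator(overrides, device, part_id, key, value):
    """Record an emulator edit in the device-specific section, creating it if needed."""
    overrides.setdefault(device, {}).setdefault(part_id, {})[key] = value

def resolve(generic, overrides, device):
    """Effective properties of every part for one device; generic data is not mutated."""
    device_overrides = overrides.get(device, {})
    result = {}
    for part_id, props in generic.items():
        merged = dict(props)                              # copy the generic properties
        merged.update(device_overrides.get(part_id, {}))  # device-specific values win
        result[part_id] = merged
    return result

generic = {"send": {"label": "Send message"}, "body": {"rows": 5}}
overrides = {}
edit_in_emulator(overrides, "wml-phone", "send", "label", "Send")
print(resolve(generic, overrides, "wml-phone")["send"])  # {'label': 'Send'}
print(resolve(generic, overrides, "pocketpc")["send"])   # {'label': 'Send message'}
```

Devices without an override section simply fall back to the generic description, so devices the author never edited remain supported.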

Fig. 1. MONAEditor screenshot

Our widget set is comparable to that of XForms [7]; however, our language does not cover the powerful XForms processing model. In the future, we want to move to XForms, enhanced by our concepts of “content collections” for different modalities, task/pagination hints and layout rules. We do not yet support content and layout selection; for this, we plan to use DISelect mechanisms [14], based on media features as defined in CSS Media Queries [13].
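The planned selection mechanism can be illustrated as a first-match choice among alternatives guarded by media-feature conditions, with a default when none applies, loosely in the spirit of DISelect. This sketch handles only `min-`/`max-` prefixed features such as `min-width` and `max-width` from CSS Media Queries, represents the device as a hypothetical dictionary of feature values in pixels, and does not implement DISelect's actual syntax.

```python
def matches(query, device):
    """Evaluate a conjunction of min-/max- media features, e.g. {'max-width': 176}."""
    for feature, limit in query.items():
        prefix, _, name = feature.partition("-")  # 'max-width' -> ('max', '-', 'width')
        if prefix not in ("min", "max"):
            raise ValueError(f"unsupported feature {feature!r}")
        value = device[name]
        if prefix == "min" and value < limit:
            return False
        if prefix == "max" and value > limit:
            return False
    return True

def select_content(alternatives, default, device):
    """Return the content of the first alternative whose query matches, else the default."""
    for query, content in alternatives:
        if matches(query, device):
            return content
    return default

alternatives = [
    ({"max-width": 176}, "logo-small.png"),
    ({"min-width": 177, "max-width": 320}, "logo-medium.png"),
]
print(select_content(alternatives, "logo-large.png", {"width": 128}))  # logo-small.png
print(select_content(alternatives, "logo-large.png", {"width": 240}))  # logo-medium.png
```

Combined with automatic adaptation, such explicit alternatives would be needed only where the author wants direct control; every other device falls through to the adapted default.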

Currently, user interfaces created with the MONAEditor are saved in our UIML dialect [5] and deployed on the MONA platform. In addition, the MONAEditor will offer the option to export concrete user interfaces. The target formats we are aiming for are Pepper (a proprietary language for multimodal interfaces from our project partner Kirusa [10]), XHTML [9] (partially implemented), X+V [8], XHTML+SALT (partially implemented), WML [12] (mostly implemented), VoiceXML and XForms (partially implemented) and, in the distant future, SVG [11]. In this respect, we clearly want not only to support the “single-markup” authoring approach but also to give authors the choice to use different markup versions if they wish and to edit the device-specific descriptions separately. This is in accordance with the “flexible authoring” paradigm as defined in [15].

Device independence and multimodality are especially important in a mobile context, since devices vary considerably and the user's changing environment calls for more than a single modality. Multimodal applications (speech in particular) could also lower the threshold for elderly users, who are often uncomfortable with small devices and their miniature buttons.

We hope that good tool support will flatten the learning curve for creating multimodal mobile applications, so that designers finally start experimenting with these possibilities. With good tools, designers are not limited in their core work, creativity, and have to deal with the multitude of different mobile terminals only when they want to.

[1] Website of the MONA project. http://mona.ftw.at

[2] Position Paper for the W3C Workshop on Multimodal Interaction: The MONA Project; Rainer Simon, Florian Wegscheider, Georg Niklfeld; W3C Workshop on Multimodal Interaction, 19/20 July 2004, Sophia Antipolis, France. http://www.w3.org/2004/02/mmi-workshop/simon-ftw.html

[3] UIML website: http://www.uiml.org

[4] UIML.org. Draft specification for language version 3.0 of 12 Feb. 2002. http://www.uiml.org/specs/docs/uiml30-revised-02-12-02.pdf

[5] A Generic UIML Vocabulary for Device- and Modality Independent User Interfaces; Rainer Simon, Michael Jank, Florian Wegscheider; Proceedings of the 13th International World Wide Web Conference, May 17-22 2004, New York, NY, USA. ACM Press.

[6] The Eclipse project website. http://www.eclipse.org

[7] XForms 1.0, W3C Recommendation, 14 Oct. 2003. http://www.w3.org/TR/xforms/

[8] XHTML+Voice Profile 1.0. W3C Note 21 Dec. 2001. http://www.w3.org/TR/xhtml+voice/

[9] XHTML™ 1.0 The Extensible HyperText Markup Language (Second Edition), W3C Recommendation 26 January 2000, revised 1 August 2002. http://www.w3.org/TR/2002/REC-xhtml1-20020801/

[10] Kirusa, Inc. website: http://www.kirusa.com/

[11] Scalable Vector Graphics (SVG). XML Graphics for the Web. http://www.w3.org/Graphics/SVG/

[12] Wireless Markup Language (WML) Version 2.0 Specification. Wireless Application Protocol Forum 2001. http://www.wapforum.org/tech/documents/WAP-238-WML-20010911-a.pdf

[13] Media Queries. W3C Candidate Recommendation 8 July 2002. http://www.w3.org/TR/2002/CR-css3-mediaqueries-20020708

[14] Content Selection for Device Independence (DISelect) 1.0. W3C Working Draft 11 June 2004. http://www.w3.org/TR/2004/WD-cselection-20040611/

[15] Authoring Techniques for Device Independence. W3C Working Group Note 18 February 2004. http://www.w3.org/TR/2004/NOTE-di-atdi-20040218/

The MONA project is funded by Kapsch Carrier-Com AG, Mobilkom Austria AG and Siemens Austria AG together with the Austrian competence centre programme Kplus.